Efficient Ray Tracing of Large 3D Scenes for Mobile Distributed Computing Environments

Abstract

1. Introduction

2. Related Works

3. Space-Efficient Representation of 3D Geometry for Mobile Distributed Ray Tracing

3.1. Reducing the Size of the Triangular Mesh

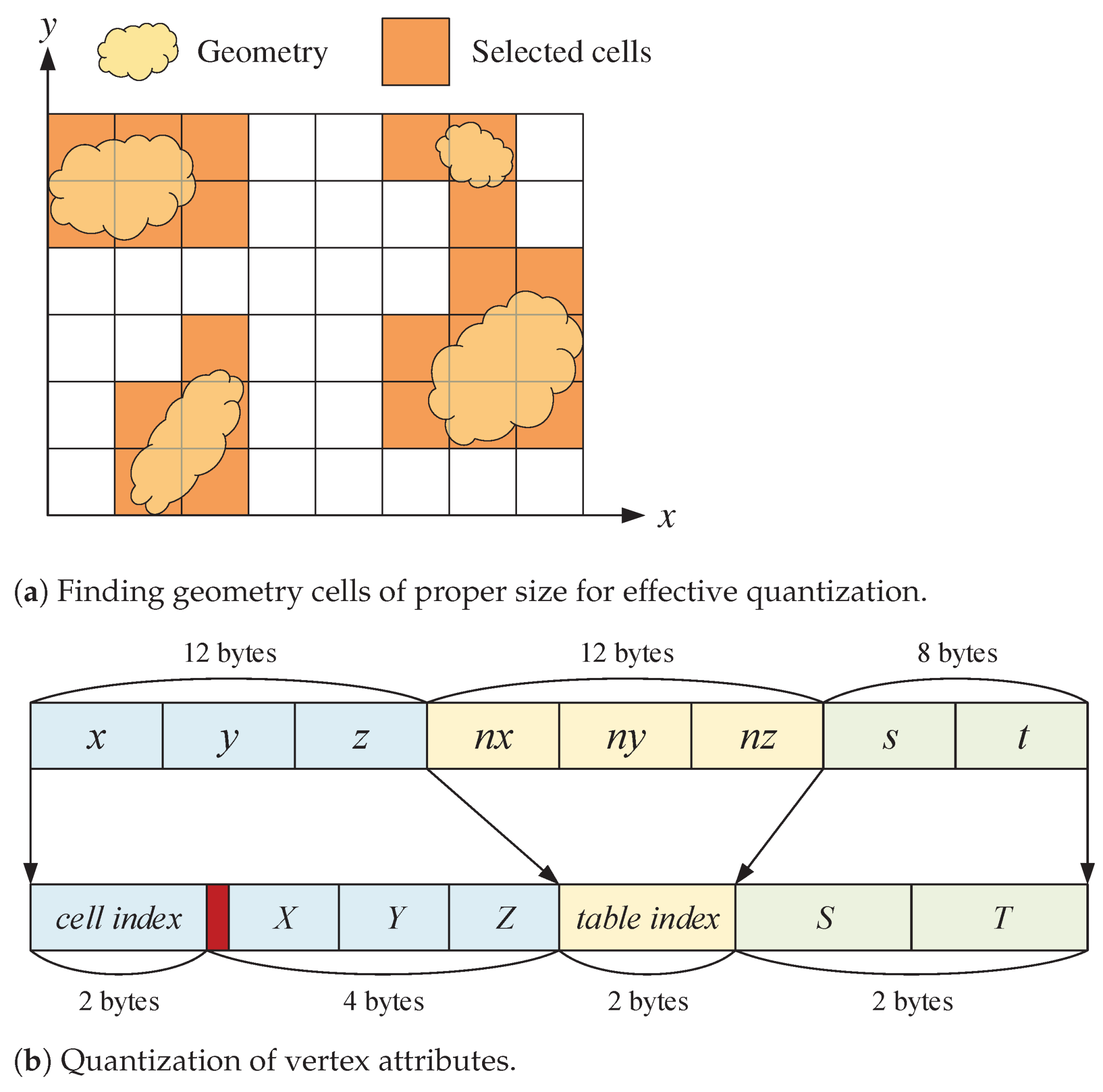

3.1.1. Quantization of Vertex Attributes

3.1.2. Analysis of Triangular-Mesh Compression

3.2. Reducing the Size of the Spatial Acceleration Structure

3.2.1. Enhancement of Memory-Space Efficiency of the kd-Tree

3.2.2. Analysis of kd-Tree Compression

4. Distributed Ray Tracing Framework for Mobile Cluster Computing

4.1. Strategy I: Dynamic Load Balancing

4.2. Strategy II: Static Load Balancing

5. Results

5.1. Spatial and Temporal Performances of Size Reduction Methods

5.2. Performances of Mobile Distributed Ray Tracing

5.3. Augmenting Images Produced by Mobile AR and MR Sensors Using Ray Tracing

6. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Whitted, T. An improved model for shaded display. Commun. ACM 1980, 23, 343–349.

- Walton, D.R.; Thomas, D.; Steed, A.; Sugimoto, A. Synthesis of environment maps for mixed reality. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Nantes, France, 9–13 October 2017; pp. 72–81.

- Alhakamy, A.; Tuceryan, M. AR360: Dynamic illumination for augmented reality with real-time interaction. In Proceedings of the 2019 IEEE 2nd International Conference on Information and Computer Technologies (ICICT), Kahului, HI, USA, 14–17 March 2019; pp. 170–174.

- Zhang, A.; Zhao, Y.; Wang, S. An improved augmented-reality framework for differential rendering beyond the Lambertian-world assumption. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4374–4386.

- NVIDIA. NVIDIA AMPERE GA102 GPU ARCHITECTURE: Second-Generation RTX; Whitepaper; NVIDIA: Santa Clara, CA, USA, 2021.

- Deng, Y.; Ni, Y.; Li, Z.; Mu, S.; Zhang, W. Toward real-time ray tracing: A survey on hardware acceleration and microarchitecture techniques. ACM Comput. Surv. 2018, 50, 58.

- Seo, W.; Kim, Y.; Ihm, I. Effective ray tracing of large 3D scenes through mobile distributed computing. In Proceedings of the SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications, Bangkok, Thailand, 27–30 November 2017; pp. 1–5.

- Arslan, M.; Singh, I.; Singh, S.; Madhyastha, H.; Sundaresan, K.; Krishnamurthy, S. CWC: A distributed computing infrastructure using smartphones. IEEE Trans. Mob. Comput. 2015, 14, 1587–1600.

- Lamberti, F.; Sanna, A. A streaming-based solution for remote visualization of 3D graphics on mobile devices. IEEE Trans. Vis. Comput. Graph. 2007, 13, 247–260.

- Park, S.; Kim, W.; Ihm, I. Mobile collaborative medical display system. Comput. Methods Programs Biomed. 2008, 89, 248–260.

- Shi, S.; Nahrstedt, K.; Campbell, R. A real-time remote rendering system for interactive mobile graphics. ACM Trans. Multimed. Comput. Commun. Appl. 2012, 8, 46.

- Rodríguez, M.B.; Agus, M.; Marton, F.; Gobbetti, E. HuMoRS: Huge models mobile rendering system. In Proceedings of the 19th International ACM Conference on 3D Web Technologies, Vancouver, BC, Canada, 8–10 August 2014; pp. 7–15.

- Shi, S.; Hsu, C.H. A survey of interactive remote rendering systems. ACM Comput. Surv. 2015, 47, 57.

- Wu, C.; Yang, B.; Zhu, W.; Zhang, Y. Toward high mobile GPU performance through collaborative workload offloading. IEEE Trans. Parallel Distrib. Syst. 2018, 29, 435–449.

- Nah, J.H.; Kwon, H.J.; Kim, D.S.; Jeong, C.H.; Park, J.; Han, T.D.; Manocha, D.; Park, W.C. RayCore: A ray-tracing hardware architecture for mobile devices. ACM Trans. Graph. 2014, 33, 162.

- Lee, W.; Hwang, S.J.; Shin, Y.; Yoo, J.; Ryu, S. Fast stereoscopic rendering on mobile ray tracing GPU for virtual reality applications. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–10 January 2017; pp. 355–357.

- Imagination Technologies. Shining a Light on Ray Tracing; Whitepaper; Imagination Technologies: Kings Langley, UK, 2021.

- Wald, I.; Benthin, C.; Slusallek, P. Interactive ray tracing on commodity PC clusters—State of the art and practical applications. In Proceedings of the Euro-Par 2003 Parallel Processing: 9th International Euro-Par Conference, Klagenfurt, Austria, 26–29 August 2003; pp. 499–508.

- Heirich, A.; Arvo, J. A competitive analysis of load balancing strategies for parallel ray tracing. J. Supercomput. 1998, 12, 57–68.

- Hasan, R.A.; Mohammed, M.N. A Krill herd behaviour inspired load balancing of tasks in cloud computing. Stud. Inform. Control 2017, 26, 413–424.

- Wald, I.; Havran, V. On building fast Kd-trees for ray tracing, and on doing that in O(N log N). In Proceedings of the IEEE Symposium on Interactive Ray Tracing, Salt Lake City, UT, USA, 18–20 September 2006; pp. 61–69.

- Wald, I. Realtime Ray Tracing and Interactive Global Illumination. Ph.D. Thesis, Saarland University, Saarbrücken, Germany, 2004.

- Maglo, A.; Lavoué, G.; Dupont, F.; Hudelot, C. 3D mesh compression: Survey, comparisons, and emerging trends. ACM Comput. Surv. 2015, 47, 44.

- Smith, J.; Petrova, G.; Schaefer, S. Encoding normal vectors using optimized spherical coordinates. Comput. Graph. 2012, 36, 360–365.

- Cigolle, Z.H.; Donow, S.; Evangelakos, D.; Mara, M.; McGuire, M.; Meyer, Q. A survey of efficient representations for independent unit vectors. J. Comput. Graph. Tech. 2014, 3, 1–30.

- Isenburg, M.; Snoeyink, J. Compressing texture coordinates with selective linear predictions. In Proceedings of the Computer Graphics International 2003, Tokyo, Japan, 9–11 July 2003; pp. 126–131.

- Váša, L.; Brunnett, G. Efficient encoding of texture coordinates guided by mesh geometry. Comput. Graph. Forum 2014, 33, 25–34.

- Tarini, M. Volume-encoded UV-maps. ACM Trans. Graph. 2016, 35, 107.

- Choi, B.; Chang, B.; Ihm, I. Improving memory space efficiency of kd-tree for real-time ray tracing. Comput. Graph. Forum 2013, 32, 335–344.

| Scene | No. of Triangles | No. of Vertices | Size of Mesh (MB) | Size of kd-Tree (MB) |
|---|---|---|---|---|
| Sponza | 279,163 | 193,372 | 9.1 | 13.1 |
| Soda Hall | 2,167,474 | 4,192,793 | 152.8 | 86.0 |
| San Miguel | 9,963,191 | 9,278,776 | 397.2 | 621.1 |
| Power Plant | 12,748,510 | 10,960,555 | 480.4 | 603.7 |

| Scene | Cell Resolution | # of Geom. Cells | Precision |
|---|---|---|---|
| Sponza | 65,532 | | |
| Soda Hall | 65,319 | | |
| San Miguel | 65,065 | | |
| Power Plant | 65,472 | | |

| Scene | Original (MB) | Quantized (MB) | Compression Ratio |
|---|---|---|---|
| Sponza | 9.1 | 5.4 | 1.69 |
| Soda Hall | 152.8 | 72.8 | 2.10 |
| San Miguel | 397.2 | 220.2 | 1.80 |
| Power Plant | 480.4 | 271.3 | 1.77 |

| Scene | POS | POS/NORM | POS/NORM/TEX |
|---|---|---|---|
| Sponza | 42.80 | 42.59 | 33.39 |
| Soda Hall | 38.57 | 36.29 | N/A |
| San Miguel | 34.94 | 29.17 | 28.69 |
| - no refl/refr | 35.17 | 35.13 | 33.50 |
| Power Plant | 43.23 | 32.63 | N/A |
| - no refl/refr | 43.23 | 41.10 | N/A |

(a)

| Scene | Standard | Choi et al. | Seo et al. |
|---|---|---|---|
| Sponza | 13.1 | 8.3 (1.58) | 6.6 (1.98) |
| Soda Hall | 86.0 | 50.1 (1.72) | 41.8 (2.06) |
| San Miguel | 621.1 | 285.3 (2.18) | 205.3 (3.03) |
| Power Plant | 603.7 | 321.0 (1.88) | 252.7 (2.39) |

(b)

| Scene | Choi et al. | Seo et al. |
|---|---|---|
| Sponza | 211/281/351 | 203/172/289 |
| Soda Hall | 1193/1537/1961 | 1170/1030/1685 |
| San Miguel | 9696/6392/12,892 | 5183/3014/8197 |
| Power Plant | 7066/10,300/12,216 | 6934/6553/10,210 |

(c)

| Scene | Standard | Choi et al. | Seo et al. |
|---|---|---|---|
| Sponza | 2632.4 | 2711.6 | 2776.6 (+2.4%) |
| Soda Hall | 2788.1 | 2801.5 | 2936.9 (+4.8%) |
| San Miguel * | - | 3489.5 | 3661.1 (+4.9%) |
| Power Plant | - | 3091.1 | 3203.0 (+3.6%) |

| Scene | Original (MB) | Ours (MB) | Compression Ratio |
|---|---|---|---|
| Sponza | 22.2 | 12.0 | 1.85 |
| Soda Hall | 238.8 | 114.6 | 2.08 |
| San Miguel | 1018.3 | 425.5 | 2.39 |
| Power Plant | 1084.1 | 524.0 | 2.07 |
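
The combined sizes in the table above are numerically consistent with the sum of the mesh and kd-tree sizes reported in the earlier tables, with the compression ratio taken as original size divided by compressed size. The following Python sketch reproduces that arithmetic; the dictionary and function names are illustrative assumptions, and the interpretation (total = mesh + kd-tree) is inferred from the numbers rather than stated in the surviving text.

```python
# Sizes in MB, copied from the tables in this section.
# mesh: (original, quantized); kdtree: (standard, Seo et al.)
mesh = {
    "Sponza": (9.1, 5.4),
    "Soda Hall": (152.8, 72.8),
    "San Miguel": (397.2, 220.2),
    "Power Plant": (480.4, 271.3),
}
kdtree = {
    "Sponza": (13.1, 6.6),
    "Soda Hall": (86.0, 41.8),
    "San Miguel": (621.1, 205.3),
    "Power Plant": (603.7, 252.7),
}


def combined_ratio(scene):
    """Return (original MB, compressed MB, ratio) for mesh plus kd-tree."""
    original = mesh[scene][0] + kdtree[scene][0]
    compressed = mesh[scene][1] + kdtree[scene][1]
    return original, compressed, original / compressed


if __name__ == "__main__":
    for scene in mesh:
        original, compressed, ratio = combined_ratio(scene)
        print(f"{scene}: {original:.1f} MB -> {compressed:.1f} MB "
              f"(ratio {ratio:.2f})")
```

Under this reading, the overall ratio sits between the mesh-only and kd-tree-only ratios for each scene, weighted by which structure dominates the memory footprint.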

(a) One worker node participated

| Scene | Original | T-Mesh | Kd-Tree | Both |
|---|---|---|---|---|
| Sponza | 2632.4 | 2832.2 (+7.6%) | 2776.6 (+5.5%) | 2905.4 (+10.4%) |
| Soda Hall | 2788.1 | 2893.4 (+3.8%) | 2936.9 (+5.3%) | 3224.5 (+15.7%) |
| San Miguel | – | 3487.6 | 3661.1 | 4257.8 |
| Power Plant | – | 3102.2 | 3203.0 | 3862.6 |

(b) 16 worker nodes participated

| Scene | Original | T-Mesh | Kd-Tree | Both |
|---|---|---|---|---|
| Sponza | 358.1 | 366.8 (+2.4%) | 361.9 (+1.1%) | 380.6 (+6.3%) |
| Soda Hall | 321.5 | 343.1 (+6.7%) | 342.3 (+6.5%) | 347.8 (+8.2%) |
| San Miguel | – | 409.2 | 428.5 | 433.4 |
| Power Plant | – | 368.0 | 378.1 | 393.8 |

(a) One worker node participated

| Scene | 256 × 256 | 128 × 128 | 64 × 64 | 32 × 32 |
|---|---|---|---|---|
| Sponza | 3030.6 | 2905.4 | 3903.6 | 5754.2 |
| Soda Hall | 3107.8 | 3224.5 | 3839.0 | 5639.8 |
| San Miguel | 6208.7 | 6128.5 | 6744.9 | 8162.8 |
| Power Plant | 3939.7 | 3862.6 | 4682.2 | 6845.1 |

(b) 16 worker nodes participated

| Scene | 256 × 256 | 128 × 128 | 64 × 64 | 32 × 32 |
|---|---|---|---|---|
| Sponza | 374.4 | 380.6 | 396.6 | 522.7 |
| Soda Hall | 368.6 | 347.8 | 408.2 | 563.5 |
| San Miguel | 678.1 | 647.5 | 660.6 | 698.7 |
| Power Plant | 427.4 | 393.8 | 459.0 | 689.8 |

(a) Dynamic load balancing

| Scene | 1 | 2 | 4 | 8 | 16 |
|---|---|---|---|---|---|
| Sponza | 2763.2 (1.000) | 1526.9 (0.905) | 892.9 (0.774) | 682.4 (0.506) | 621.7 (0.278) |
| Soda Hall | 3184.5 (1.000) | 1711.2 (0.930) | 937.2 (0.849) | 720.8 (0.552) | 698.7 (0.285) |
| San Miguel | 5129.6 (1.000) | 2883.7 (0.889) | 1658.9 (0.761) | 1293.2 (0.496) | 1077.3 (0.298) |
| Power Plant | 3218.5 (1.000) | 1799.4 (0.894) | 951.3 (0.846) | 759.5 (0.530) | 724.1 (0.278) |

(b) Static load balancing

| Scene | 1 | 2 | 4 | 8 | 16 |
|---|---|---|---|---|---|
| Sponza | 2905.4 (1.000) | 1680.9 (0.864) | 896.4 (0.810) | 546.5 (0.665) | 380.6 (0.477) |
| Soda Hall | 3224.5 (1.000) | 1621.2 (0.994) | 876.9 (0.919) | 553.1 (0.729) | 347.8 (0.579) |
| San Miguel | 6128.5 (1.000) | 3283.8 (0.933) | 1828.7 (0.838) | 1145.3 (0.669) | 647.5 (0.592) |
| Power Plant | 3862.6 (1.000) | 2218.0 (0.871) | 1364.9 (0.707) | 704.6 (0.685) | 393.8 (0.613) |
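
The parenthesized values in the load-balancing tables are not defined in the surviving text, but they are numerically consistent with the usual parallel efficiency, $E(n) = T_1 / (n \cdot T_n)$, where $T_n$ is the measured time with $n$ worker nodes. The Python sketch below recomputes them for the Sponza rows under that assumption; the function and variable names are illustrative, and the timings are in whatever unit the tables use.

```python
def parallel_efficiency(t1, tn, n):
    """Efficiency of n workers: speedup (t1 / tn) divided by n."""
    return t1 / (n * tn)


# Sponza timings copied from the tables above (workers -> time).
sponza_dynamic = {1: 2763.2, 2: 1526.9, 4: 892.9, 8: 682.4, 16: 621.7}
sponza_static = {1: 2905.4, 2: 1680.9, 4: 896.4, 8: 546.5, 16: 380.6}


def efficiencies(timings):
    """Map each worker count to its efficiency relative to one worker."""
    t1 = timings[1]
    return {n: parallel_efficiency(t1, tn, n) for n, tn in timings.items()}


if __name__ == "__main__":
    for name, timings in (("dynamic", sponza_dynamic),
                          ("static", sponza_static)):
        row = ", ".join(f"{n}: {e:.3f}"
                        for n, e in efficiencies(timings).items())
        print(f"Sponza ({name}): {row}")
```

Read this way, the two strategies are comparable up to four workers, while at 16 workers the static scheme retains roughly twice the efficiency of the dynamic one for every scene in the tables.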

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Seo, W.; Park, S.; Ihm, I. Efficient Ray Tracing of Large 3D Scenes for Mobile Distributed Computing Environments. Sensors 2022, 22, 491. https://doi.org/10.3390/s22020491
