LeGO-LOAM-SC: An Improved Simultaneous Localization and Mapping Method Fusing LeGO-LOAM and Scan Context for Underground Coalmine

Abstract

1. Introduction

2. Algorithm Principle and Improvement

2.1. LeGO-LOAM

- (1)

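- Let $S$ be the set of consecutive points in the same row of the depth map as point $p_i$, and calculate the roughness $c$ of point $p_i$:
  $$c = \frac{1}{|S| \cdot r_i} \left| \sum_{j \in S, j \neq i} (r_j - r_i) \right|$$
  where $r_i$ and $r_j$ are the Euclidean distances from points $p_i$ and $p_j$ in $S$ to the LiDAR, respectively.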

- (2)

- Divide the depth map horizontally into several equal sub-images so that features are extracted evenly.

- (3)

- Classify the features according to the set threshold $c_{th}$. Points whose roughness $c$ is greater than $c_{th}$ are classified as edge feature points, and points whose roughness is less than $c_{th}$ are classified as planar feature points. From each row of each sub-image, the $n_{F_e}$ non-ground edge points with the largest $c$ and the $n_{F_p}$ planar points with the smallest $c$ are selected. The planar points may be either ground or segmented points. Over all sub-images these form the edge feature set $F_e$ and the planar feature set $F_p$. Next, from each row of each sub-image, the $n_{\mathbb{F}_e}$ non-ground edge points with the largest $c$ and the $n_{\mathbb{F}_p}$ ground planar points with the smallest $c$ are selected. These form the edge feature set $\mathbb{F}_e$ and the planar feature set $\mathbb{F}_p$. Obviously, $\mathbb{F}_e \subset F_e$ and $\mathbb{F}_p \subset F_p$.
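
Steps (1) to (3) can be sketched in Python with NumPy for a single row of one sub-image: the roughness computation, then feature selection. This is a minimal illustration under assumptions, not the authors' or LeGO-LOAM's code. The neighbourhood of five points on each side of $p_i$ ($|S| = 10$, the value used in LeGO-LOAM) is an assumption. So are the threshold $c_{th} = 0.12$ and the per-row counts in the example. The names `roughness` and `select_features` are hypothetical. Only the first selection stage ($F_e$, $F_p$) is shown; the second stage differs only in restricting planar points to ground points.

```python
import numpy as np


def roughness(ranges: np.ndarray, half_window: int = 5) -> np.ndarray:
    """Roughness c of every point in one depth-map row (hypothetical helper).

    c_i = |sum_{j in S, j != i} (r_j - r_i)| / (|S| * r_i), where S holds the
    `half_window` neighbours on each side of point i, so |S| = 2 * half_window.
    Points too close to the row ends have no full neighbourhood and get NaN.
    """
    n = len(ranges)
    c = np.full(n, np.nan)
    size = 2 * half_window
    for i in range(half_window, n - half_window):
        neighbours = np.concatenate(
            (ranges[i - half_window:i], ranges[i + 1:i + half_window + 1])
        )
        c[i] = abs(np.sum(neighbours - ranges[i])) / (size * ranges[i])
    return c


def select_features(c, is_ground, c_th, n_edge, n_plane):
    """Return (edge_idx, plane_idx) for one row of a sub-image (hypothetical helper).

    Edge points: c > c_th and not ground, largest c first, at most n_edge.
    Plane points: c < c_th (ground or segmented), smallest c first, at most n_plane.
    """
    idx = np.flatnonzero(~np.isnan(c))  # skip border points without a roughness value
    edge_cand = idx[(c[idx] > c_th) & ~is_ground[idx]]
    plane_cand = idx[c[idx] < c_th]
    edge = edge_cand[np.argsort(-c[edge_cand], kind="stable")][:n_edge]
    plane = plane_cand[np.argsort(c[plane_cand], kind="stable")][:n_plane]
    return edge, plane


# Toy row: a wall at 10 m followed by a closer object at 5 m.
ranges = np.array([10.0] * 10 + [5.0] * 10)
c = roughness(ranges)
edge, plane = select_features(c, np.zeros(20, dtype=bool), c_th=0.12, n_edge=2, n_plane=1)
print(edge.tolist(), plane.tolist())  # [10, 11] [5]
```

In this toy row the largest roughness occurs at the first point of the closer object (index 10), followed by index 11, so these two are returned as edge points. Index 5 has the lowest roughness among the points with a full neighbourhood, so it is returned as the planar point.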

2.2. Scan Context

2.3. Improved Algorithm Principle

- (1)

- Read the point cloud data collected by the LiDAR. The point cloud segmentation module divides each frame of point cloud $P_t$ into clusters and labels the points as ground points or segmented points. At the same time, three attributes are obtained for each point: its label, its row and column index in the depth map, and its range value. Points that cannot be clustered are removed.

- (2)

- The feature extraction module computes the roughness $c$ of each point and ranks the points by $c$. From this ranking it extracts the edge feature points ($n_{F_e}$ per row) and the planar feature points ($n_{F_p}$ per row).

- (3)

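- The LiDAR odometry module uses the edge and planar feature points to obtain the pose transformation matrix between two consecutive frames through a two-step L-M optimization. This gives the spatial constraint between the two frames. As in LeGO-LOAM, the planar features first estimate $[t_z, \theta_{roll}, \theta_{pitch}]$, and the edge features then estimate $[t_x, t_y, \theta_{yaw}]$.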

- (4)

- The LiDAR mapping module matches the features in $\{F_e^t, F_p^t\}$ with the surrounding point cloud map $\bar{Q}^{t-1}$ to further refine the pose transformation. Using the final transformation obtained by L-M optimization, it adds spatial constraints between the new node of the point cloud map and the previously selected nodes. It then sends the pose graph to GTSAM for optimization and updates both the estimated sensor pose and the current map.

- (5)

- A Scan Context loop closure detection algorithm is then used to further eliminate drift in the point cloud map. The process is as follows:

- (a)

- Encode the point cloud data. The 3D point cloud is divided at equal intervals into $N_s$ azimuthal sectors and $N_r$ radial rings in the LiDAR coordinate frame, as shown in Figure 2a. If the maximum sensing range of the LiDAR is $L_{max}$, the radial gap between rings is $L_{max}/N_r$ and the central angle of each sector is $2\pi/N_s$. Generally, $N_s$ and $N_r$ are set to 60 and 20, respectively. Let $P_{ij}$ be the set of points that fall in the bin where the $i$th ring and the $j$th sector overlap. The value of that bin is the maximum height of the points $p$ in $P_{ij}$, so the bin encoding function is
  $$\phi(P_{ij}) = \max_{p \in P_{ij}} z(p)$$
  where $z(\cdot)$ returns the z-coordinate of point $p$, and an empty bin is assigned a value of zero. The scan context $I$ is finally expressed as the $N_r \times N_s$ matrix
  $$I = (a_{ij}) \in \mathbb{R}^{N_r \times N_s}, \quad a_{ij} = \phi(P_{ij})$$

- (b)

- Generate a scan context. The scan context $I^q$ of the point cloud to be queried is compared with the scan context $I^c$ of a candidate point cloud. For each column index $j$, the cosine distance between the column vectors $c_j^q$ and $c_j^c$ is calculated. The sum over all columns is then normalized by the total number of columns $N_s$:
  $$d(I^q, I^c) = \frac{1}{N_s} \sum_{j=1}^{N_s} \left(1 - \frac{c_j^q \cdot c_j^c}{\lVert c_j^q \rVert \, \lVert c_j^c \rVert}\right)$$
  where $I^q$ and $I^c$ are the scan contexts of the point cloud to be queried and of the candidate point cloud, respectively. A change in the LiDAR's heading relative to the global frame shifts the column order of the scan context. The distance is therefore computed for every possible column shift, and the minimum is taken. Let $I_n^c$ be the matrix obtained by shifting $I^c$ by $n$ columns. The best-aligning shift $n^*$ and the corresponding distance are
  $$D(I^q, I^c) = \min_{n \in [N_s]} d(I^q, I_n^c), \qquad n^* = \arg\min_{n \in [N_s]} d(I^q, I_n^c)$$
  Each row $r_i$ of the scan context is encoded into a real value by the ring encoding function $\psi$. The ring key is the $N_r$-dimensional vector $K = (\psi(r_1), \ldots, \psi(r_{N_r}))$, whose elements run from the ring nearest to the LiDAR to the farthest ring.

- (c)

- Confirm the index of the loop closure frame. The ring key $K$ is used as the key for building a KD tree. The ring key of the query is used to search the tree for similar ring keys and their corresponding scan indexes. The candidate scan contexts are then compared with the query scan context using the minimum column-shifted distance $D$ from step (b):
  $$c^* = \arg\min_{c_k \in \mathcal{C}} D(I^q, I^{c_k}), \quad \text{s.t. } D < \tau$$
  where $\mathcal{C}$ is the set of candidate indexes retrieved from the KD tree, $\tau$ is a given threshold, and $c^*$ is the index of the frame identified as the loop closure.

- (6)

- Combine the $m$ keyframes near $c^*$ into a local map, transform the current keyframe into the world coordinate system, register it against the local map, and compute the registration score with ICP. If the score is less than the given threshold, the loop closure is accepted and the pose constraint between the loop closure frame and the current frame is obtained. This constraint is added to GTSAM for map optimization and used to update the point cloud map. Finally, the transform fusion module fuses the pose estimates from the LiDAR odometry module and the LiDAR mapping module and outputs the final pose estimate.
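
Steps (5)(a) to (c) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. $N_r = 20$ and $N_s = 60$ follow the text. The following are placeholder assumptions:

- the 80 m sensing range;
- using the row mean as the ring encoding function $\psi$;
- the candidate count of 10 and the threshold $\tau = 0.2$.

A brute-force nearest-neighbour search over ring keys stands in for the KD tree. Columns that are empty in either scan context have no defined cosine, so they are skipped when averaging rather than divided over all $N_s$. All function names are hypothetical, and the ICP verification of step (6) is not included.

```python
import numpy as np

N_RING, N_SECTOR = 20, 60  # Nr and Ns from the text
L_MAX = 80.0               # assumed maximum sensing range (m)


def make_scan_context(points, n_ring=N_RING, n_sector=N_SECTOR, l_max=L_MAX):
    """Nr x Ns matrix; each bin holds the max z of its points, 0 if empty."""
    sc = np.zeros((n_ring, n_sector))
    filled = np.zeros((n_ring, n_sector), dtype=bool)
    r = np.hypot(points[:, 0], points[:, 1])
    theta = np.mod(np.arctan2(points[:, 1], points[:, 0]), 2 * np.pi)
    keep = r < l_max
    rings = np.minimum((r[keep] / (l_max / n_ring)).astype(int), n_ring - 1)
    sectors = np.minimum((theta[keep] / (2 * np.pi / n_sector)).astype(int), n_sector - 1)
    for i, j, z in zip(rings, sectors, points[keep, 2]):
        if not filled[i, j] or z > sc[i, j]:
            sc[i, j], filled[i, j] = z, True
    return sc


def ring_key(sc):
    """Ring key K, one value per ring; psi = row mean is an assumption."""
    return sc.mean(axis=1)


def sc_distance(sc_q, sc_c):
    """Return (D, n*): minimum mean column cosine distance over all column shifts."""
    best_d, best_n = np.inf, 0
    for n in range(sc_c.shape[1]):
        shifted = np.roll(sc_c, n, axis=1)  # I_n^c
        den = np.linalg.norm(sc_q, axis=0) * np.linalg.norm(shifted, axis=0)
        ok = den > 0  # skip columns that are empty in either descriptor
        if not ok.any():
            continue
        d = np.mean(1.0 - np.sum(sc_q * shifted, axis=0)[ok] / den[ok])
        if d < best_d:
            best_d, best_n = d, n
    return best_d, best_n


def detect_loop(sc_q, database, n_candidates=10, tau=0.2):
    """Return (index c*, shift n*, distance D) if D < tau, else None."""
    if not database:
        return None
    keys = np.array([ring_key(sc) for sc in database])
    # Brute-force nearest ring keys (a stand-in for the KD tree search).
    order = np.argsort(np.linalg.norm(keys - ring_key(sc_q), axis=1))[:n_candidates]
    d, n, k = min((*sc_distance(sc_q, database[k]), int(k)) for k in order)
    return (k, n, d) if d < tau else None


def toy_cloud(seed):
    """Hypothetical test cloud: one point at the centre of about half the bins."""
    rng = np.random.default_rng(seed)
    pts = []
    for i in range(N_RING):
        for j in range(N_SECTOR):
            if rng.random() < 0.5:
                r = (i + 0.5) * L_MAX / N_RING
                t = (j + 0.5) * 2 * np.pi / N_SECTOR
                pts.append([r * np.cos(t), r * np.sin(t), rng.uniform(0.5, 3.0)])
    return np.array(pts)


cloud = toy_cloud(0)
a = 5 * 2 * np.pi / N_SECTOR  # yaw the sensor by exactly five sectors
rot = np.array([[np.cos(a), -np.sin(a), 0.0], [np.sin(a), np.cos(a), 0.0], [0.0, 0.0, 1.0]])
query = make_scan_context(cloud @ rot.T)
database = [make_scan_context(toy_cloud(1)), make_scan_context(cloud)]
print(detect_loop(query, database))
```

The toy query is the stored cloud rotated by exactly five sectors. `detect_loop` should therefore select frame 1 with a column shift of 5 and a distance of essentially zero, which illustrates why the column-shift search makes the descriptor robust to heading changes.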

3. The KITTI Dataset Test

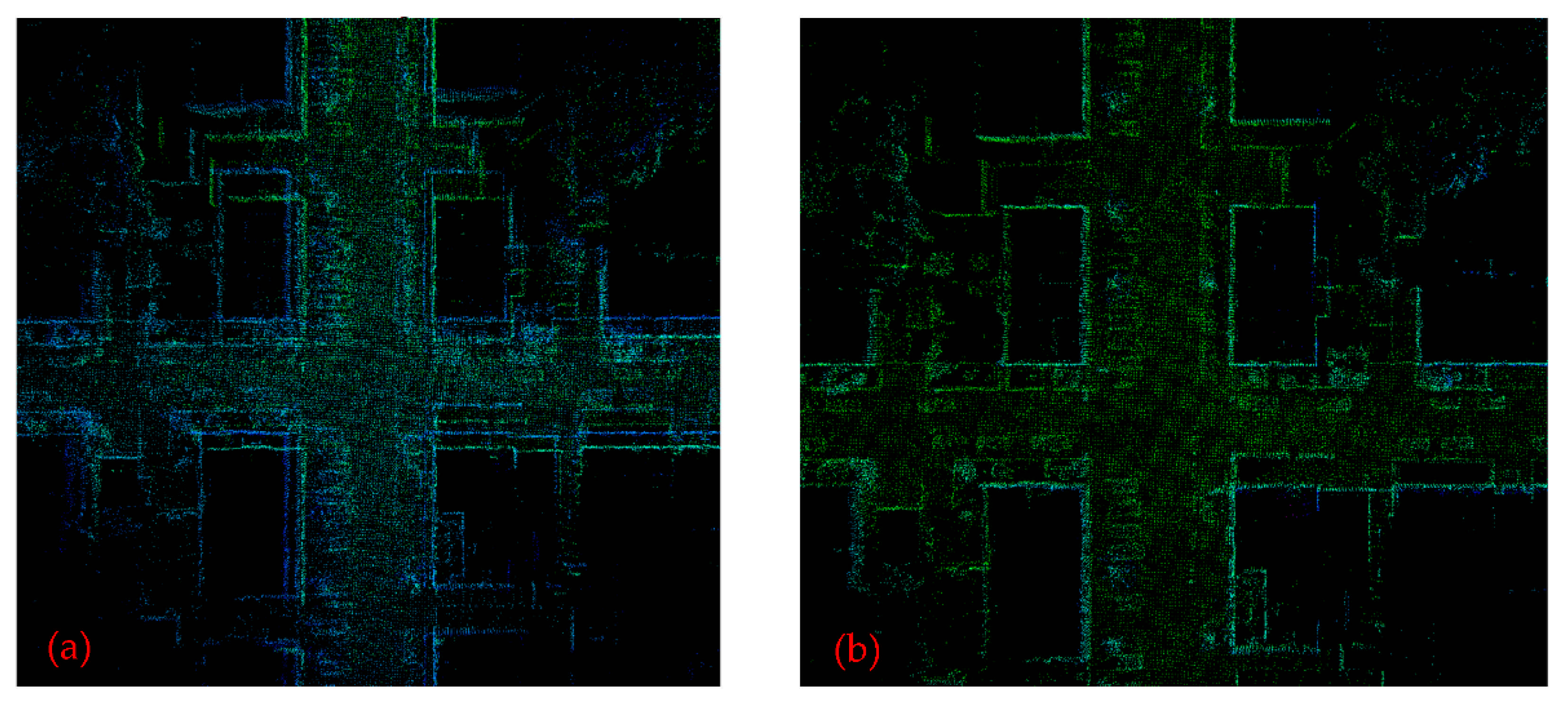

3.1. Mapping Effect

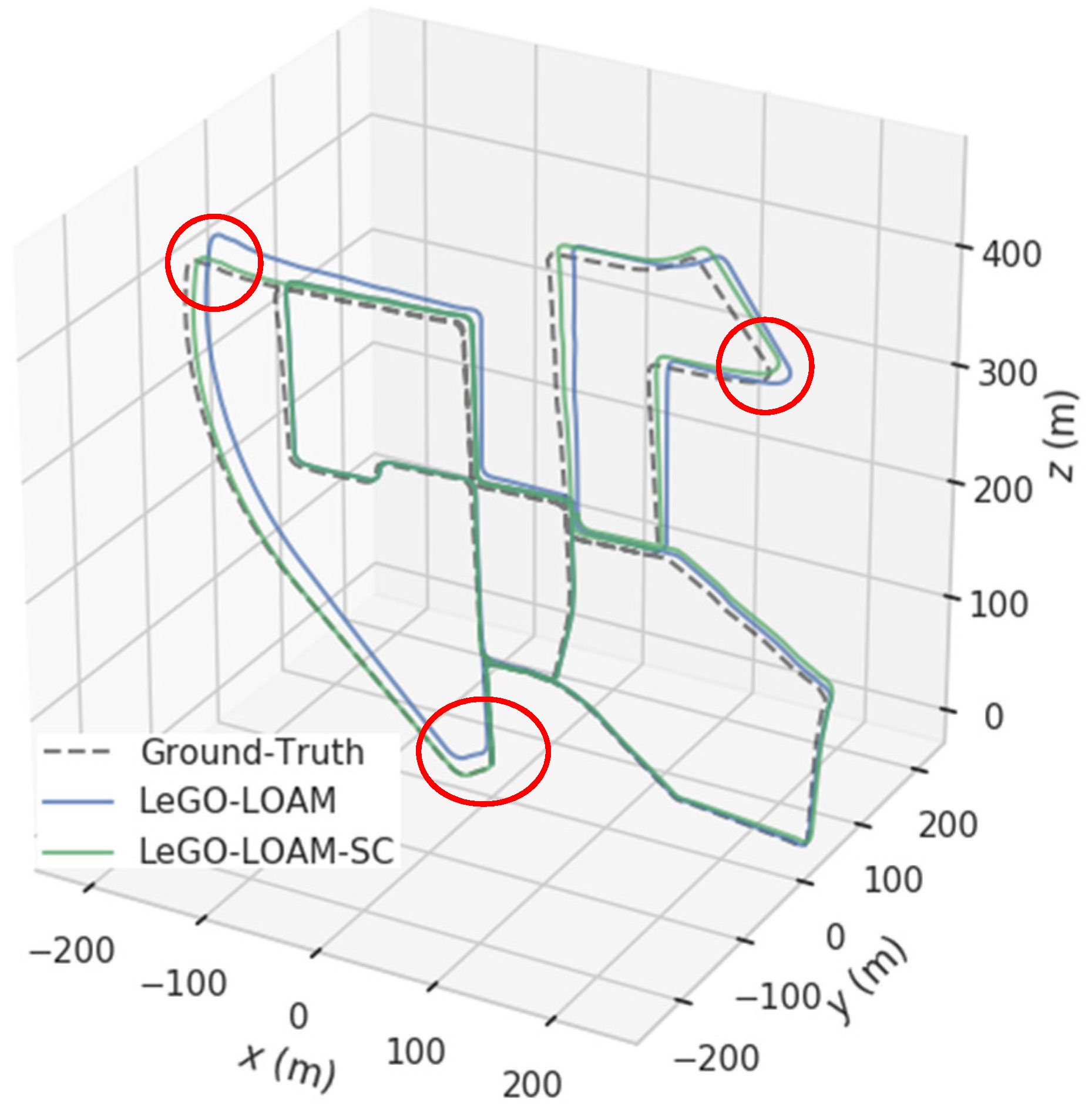

3.2. Track Comparison

3.3. Estimated Trajectory Length Deviation and Time Consumption

3.4. Absolute Trajectory Error and Relative Pose Error

4. Experimental Verification

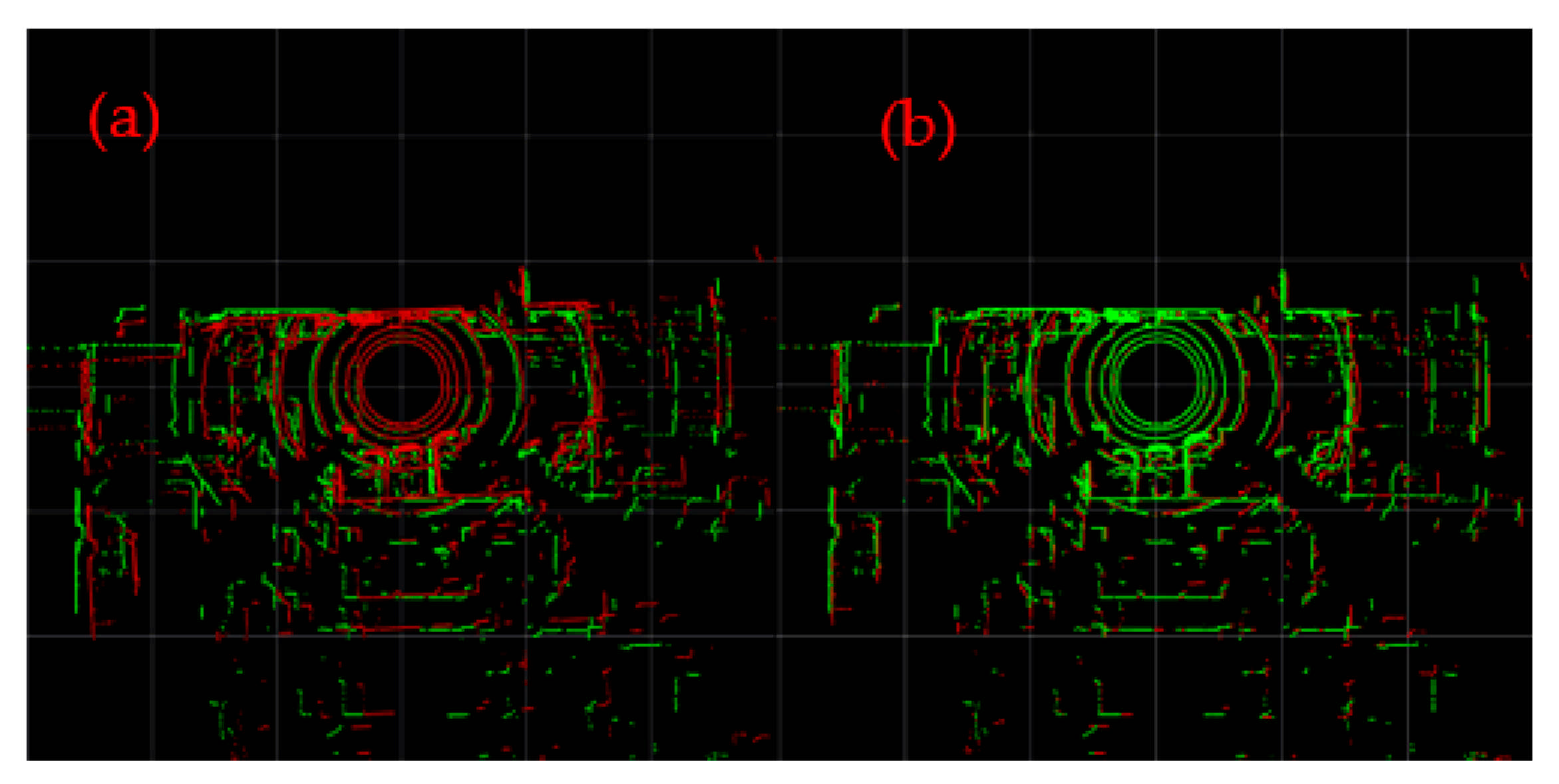

4.1. Mapping Effect

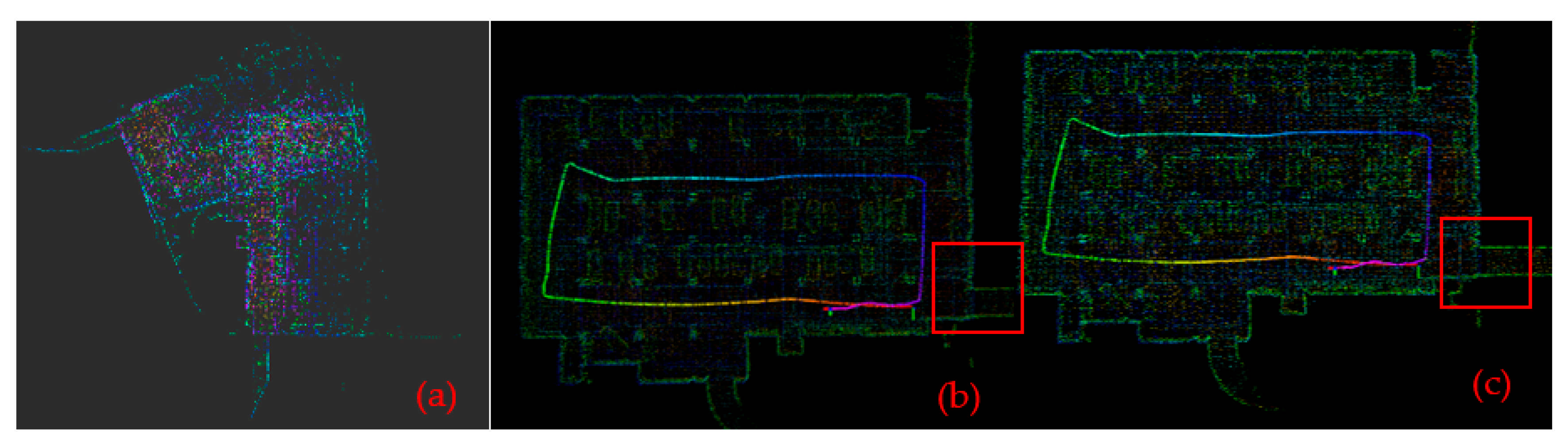

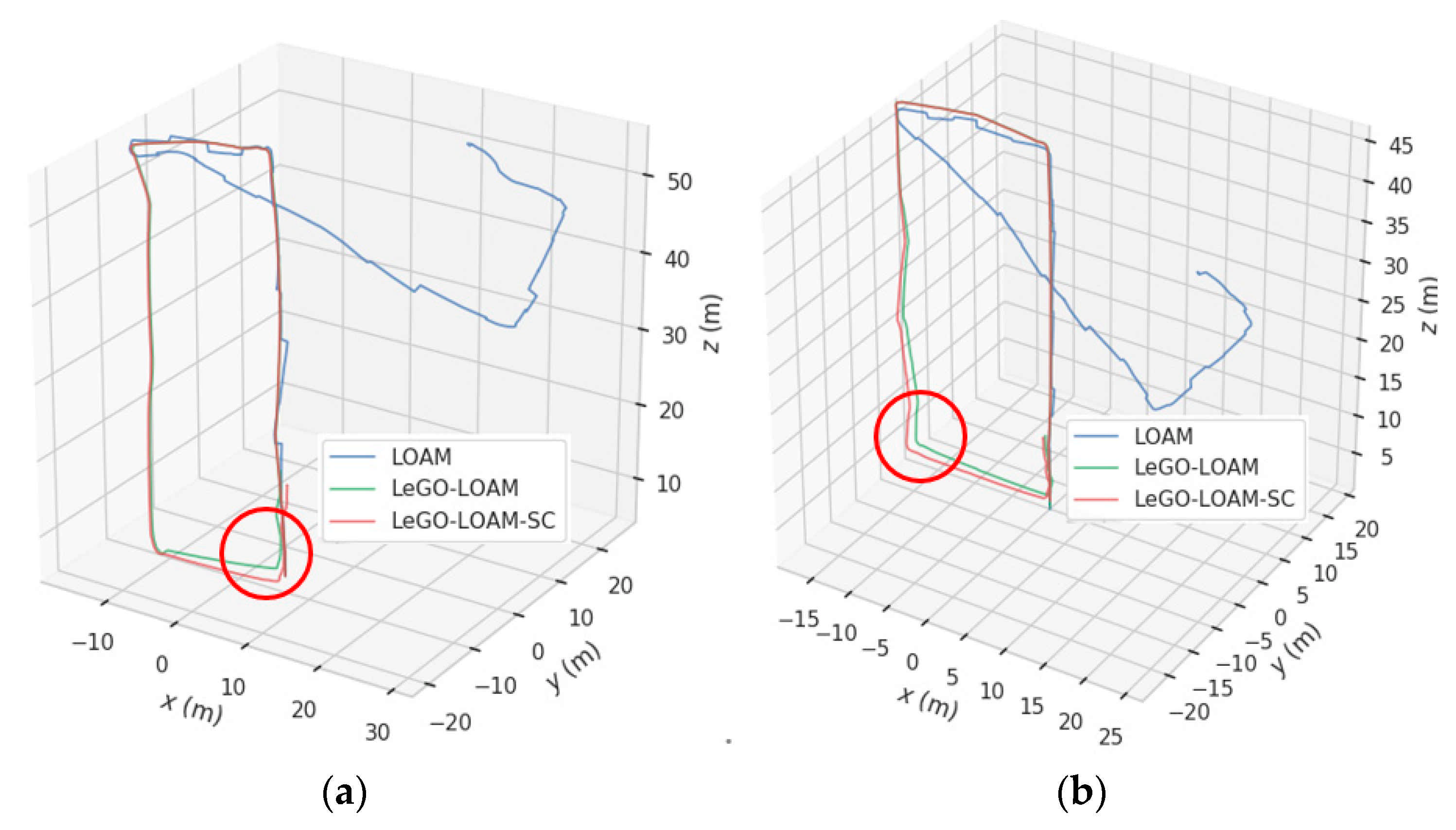

4.2. Trajectory Comparison

4.3. Track Length and Time Consumption

5. Conclusions

- (1)

- An improved SLAM algorithm that fuses LeGO-LOAM with Scan Context, named LeGO-LOAM-SC, is proposed. Results from both the dataset test and the field experiments show that the improved algorithm achieves higher mapping accuracy with lower time consumption and resource occupancy.

- (2)

- The KITTI dataset sequence 00 is used to test the mapping and pose estimation performance of LeGO-LOAM and LeGO-LOAM-SC. The results show that LeGO-LOAM-SC:
  - reduces the drift of the point cloud map;
  - produces an estimated trajectory that agrees more closely with the ground-truth trajectory, with a smoother loop;
  - gives an estimated trajectory length closer to the true length.

  Its time consumption is reduced by 2% and its CPU occupancy by 6%. The maximum error, minimum error and mean square error of the ATE are reduced by 49.4%, 79.1% and 55.7%, respectively, and those of the RPE by 62.9%, 25.0% and 50.3%, respectively.

- (3)

- Field experiments showed that, compared with LeGO-LOAM, the map constructed by LeGO-LOAM-SC is clearer, its loop closure is better, its estimated trajectory is smoother and its overall positioning is more accurate. Translation and rotation accuracy improve by about 5%, and time consumption is reduced by about 4%.
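
The percentages in conclusions (2) and (3) can be reproduced from the tables at the end of the article. This assumes they are relative reductions of the LeGO-LOAM values, which the source does not state explicitly. The short Python check below only transcribes the tabulated numbers; the dictionary keys are illustrative names.

```python
# KITTI sequence 00 values transcribed from the tables in this article.
ACTUAL_LENGTH = 3724.187  # m
LEGO = {"path_length": 3730.692, "cpu": 65, "time": 570.167,
        "ate_max": 11.177, "ate_min": 0.885, "ate_mse": 4.976,
        "rpe_max": 6.173, "rpe_min": 0.004, "rpe_mse": 0.159}
LEGO_SC = {"path_length": 3727.583, "cpu": 61, "time": 558.564,
           "ate_max": 5.652, "ate_min": 0.185, "ate_mse": 2.206,
           "rpe_max": 2.289, "rpe_min": 0.003, "rpe_mse": 0.079}
# Field experiment time consumption (s): (LeGO-LOAM, LeGO-LOAM-SC) per scene.
FIELD_TIME = {"#1": (220.364, 211.864), "#2": (234.950, 225.268)}


def reduction_pct(before: float, after: float) -> float:
    """Relative reduction (before - after) / before, in percent."""
    return 100.0 * (before - after) / before


reductions = {k: reduction_pct(LEGO[k], LEGO_SC[k]) for k in LEGO if k != "path_length"}
deviation = {"LeGO-LOAM": round(LEGO["path_length"] - ACTUAL_LENGTH, 3),
             "LeGO-LOAM-SC": round(LEGO_SC["path_length"] - ACTUAL_LENGTH, 3)}
field_time = {scene: reduction_pct(*t) for scene, t in FIELD_TIME.items()}

for name, pct in reductions.items():
    print(f"{name:8s} reduced by {pct:5.1f} %")
print("length deviation (m):", deviation)
print("field time reduction (%):", {s: round(p, 1) for s, p in field_time.items()})
```

Under this interpretation the computed values match conclusion (2) to the stated precision; the CPU figure comes out at about 6.2%, reported as 6%. The tabulated length deviations of 6.505 m and 3.396 m are also reproduced. The two field scenes give time reductions of about 3.9% and 4.1%, consistent with "about 4%".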

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Algorithm | Path Length (m) | Track Length Deviation (m) (Actual Path Length: 3724.187 m) | CPU Occupancy Rate (%) | Time Consumption (s) |
|---|---|---|---|---|
| LeGO-LOAM | 3730.692 | 6.505 | 65 | 570.167 |
| LeGO-LOAM-SC | 3727.583 | 3.396 | 61 | 558.564 |

| Algorithm | Evaluation Indicator | Maximum Error | Minimum Error | Mean Square Error |
|---|---|---|---|---|
| LeGO-LOAM | ATE | 11.177 m | 0.885 m | 4.976 m |
| LeGO-LOAM | RPE | 6.173 | 0.004 | 0.159 |
| LeGO-LOAM-SC | ATE | 5.652 m | 0.185 m | 2.206 m |
| LeGO-LOAM-SC | RPE | 2.289 | 0.003 | 0.079 |

| Scene / Algorithm | #1 LOAM | #1 LeGO-LOAM | #1 LeGO-LOAM-SC | #2 LOAM | #2 LeGO-LOAM | #2 LeGO-LOAM-SC |
|---|---|---|---|---|---|---|
| Translation X (m) | 22.68 | −0.86 | 0.40 | 20.36 | −1.24 | −0.98 |
| Translation Y (m) | 3.57 | 0.14 | 0.10 | −2.83 | 0.56 | 0.09 |
| Translation Z (m) | 59.84 | 13.86 | 12.23 | 38.32 | 8.92 | 8.59 |
| Total translation (m) | 64.09 | 13.89 | 12.24 | 43.49 | 9.02 | 8.65 |
| Pitch angle (deg) | −69.09 | −2.46 | 0.93 | −75.87 | −6.36 | −5.18 |
| Drift angle (deg) | 8.01 | 0.74 | −6.26 | 13.02 | −6.47 | 2.51 |
| Roll angle (deg) | 2.86 | 6.27 | −0.58 | −26.83 | 4.43 | 1.26 |
| Total rotation (deg) | 70.42 | 6.78 | 6.39 | 81.52 | 10.06 | 5.89 |
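
The "Total translation" entries in the scene #1/#2 table appear to equal the Euclidean norm of the X, Y and Z translations, to within rounding. The sketch below recomputes that norm from the transcribed values. This reading is an inference, not something the source states. The "Total rotation" row does not follow the same rule exactly: for scene #1 LOAM, the three angles give about 69.6° rather than the reported 70.42°. That row is therefore left out of the check.

```python
import math

# (x, y, z, reported total) in metres, transcribed from the table above.
TRANSLATIONS = {
    ("#1", "LOAM"): (22.68, 3.57, 59.84, 64.09),
    ("#1", "LeGO-LOAM"): (-0.86, 0.14, 13.86, 13.89),
    ("#1", "LeGO-LOAM-SC"): (0.40, 0.10, 12.23, 12.24),
    ("#2", "LOAM"): (20.36, -2.83, 38.32, 43.49),
    ("#2", "LeGO-LOAM"): (-1.24, 0.56, 8.92, 9.02),
    ("#2", "LeGO-LOAM-SC"): (-0.98, 0.09, 8.59, 8.65),
}

# Euclidean norm of each translation vector, to compare with the reported total.
norms = {key: math.sqrt(x * x + y * y + z * z) for key, (x, y, z, _) in TRANSLATIONS.items()}

for key, (_, _, _, total) in TRANSLATIONS.items():
    print(f"{key[0]} {key[1]:13s} norm = {norms[key]:6.2f} m, reported = {total:6.2f} m")
```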

| Scene | Algorithm | Path Length (m) | Time Consumption (s) |
|---|---|---|---|
| #1 | LeGO-LOAM | 186.954 | 220.364 |
| #1 | LeGO-LOAM-SC | 179.286 | 211.864 |
| #2 | LeGO-LOAM | 192.258 | 234.950 |
| #2 | LeGO-LOAM-SC | 198.360 | 225.268 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xue, G.; Wei, J.; Li, R.; Cheng, J. LeGO-LOAM-SC: An Improved Simultaneous Localization and Mapping Method Fusing LeGO-LOAM and Scan Context for Underground Coalmine. Sensors 2022, 22, 520. https://doi.org/10.3390/s22020520
