A Lightweight Passive Human Tracking Method Using Wi-Fi

Abstract

1. Introduction

- We are the first to propose using the line-of-sight (LoS) path between a pair of Wi-Fi transceivers, modeled with a Fresnel zone, as an enhanced, feature-rich, infrared-like beam.

- We propose a data stream and subcarrier selection algorithm that limits the loss of useful features while keeping the computational cost low enough to support near real-time tracking.

- We present a comprehensive study of environment-adaptive eigenvalue thresholds for recognizing a target crossing the link in different environments. Building on this, we are the first to propose a Wi-Fi grid tracking method that achieves near real-time, meter-level tracking with a limited number of transceivers.

2. Related Works

2.1. Human Activities Sensing

2.2. Device-Free Tracking

3. Data Acquisition, Processing, and Model Building

3.1. Feature Extraction and Performance Analysis

3.1.1. Extraction of Amplitude and Phase Difference

| Algorithm 1: Feature extraction. |

| Input: |

| data_file |

| Output: |

| amp, phase_diff |

| 1: original_trace←read_bf_file(data_file); |

| 2: squeezed_trace←get_squeezed(original_trace); |

| 3: csi_trace←change_length(squeezed_trace); |

| 4: Get timestamp and calculate interpolation length; |

| 5: for i←1 to size(csi_trace) do |

| 6: csi_entry←csi_trace(i); |

| 7: csi(i)←get_scaled_csi(csi_entry); |

| 8: end for |

| 9: abs_amp←abs(csi); |

| 10: amplitude←interp(abs_amp, len); |

| 11: amp←center_data(amplitude); |

| 12: rx1_ph←angle(rx1_csi); |

| 13: rx2_ph←angle(rx2_csi); |

| 14: diff←unwrap(rx1_ph) - unwrap(rx2_ph); |

| 15: ph_diff←wrapToPi(diff); |

| 16: phase_diff←interp(ph_diff, len); |
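The amplitude and phase-difference steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the CSI samples are assumed to be already parsed into complex numbers for two receive antennas (the hypothetical inputs `rx1_csi` and `rx2_csi`), the interpolation step is omitted, and all helper names are invented for the example.

```python
import cmath
import math


def wrap_to_pi(angle):
    """Map an angle in radians into the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def center(values):
    """Subtract the mean so the sequence fluctuates around zero."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def extract_features(rx1_csi, rx2_csi):
    """Return (centered amplitude of rx1, wrapped phase difference rx1 - rx2).

    Assumption: both inputs are equal-length lists of complex CSI samples.
    Unwrapping only adds multiples of 2*pi to each phase, so wrapping the
    raw difference gives the same value as wrapping the unwrapped difference.
    """
    amp = center([abs(c) for c in rx1_csi])
    phase_diff = [
        wrap_to_pi(cmath.phase(a) - cmath.phase(b))
        for a, b in zip(rx1_csi, rx2_csi)
    ]
    return amp, phase_diff
```

Taking the difference between two antennas of the same receiver is a common way to cancel phase offsets shared by both antennas, which is presumably why the algorithm outputs `phase_diff` rather than a raw phase.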

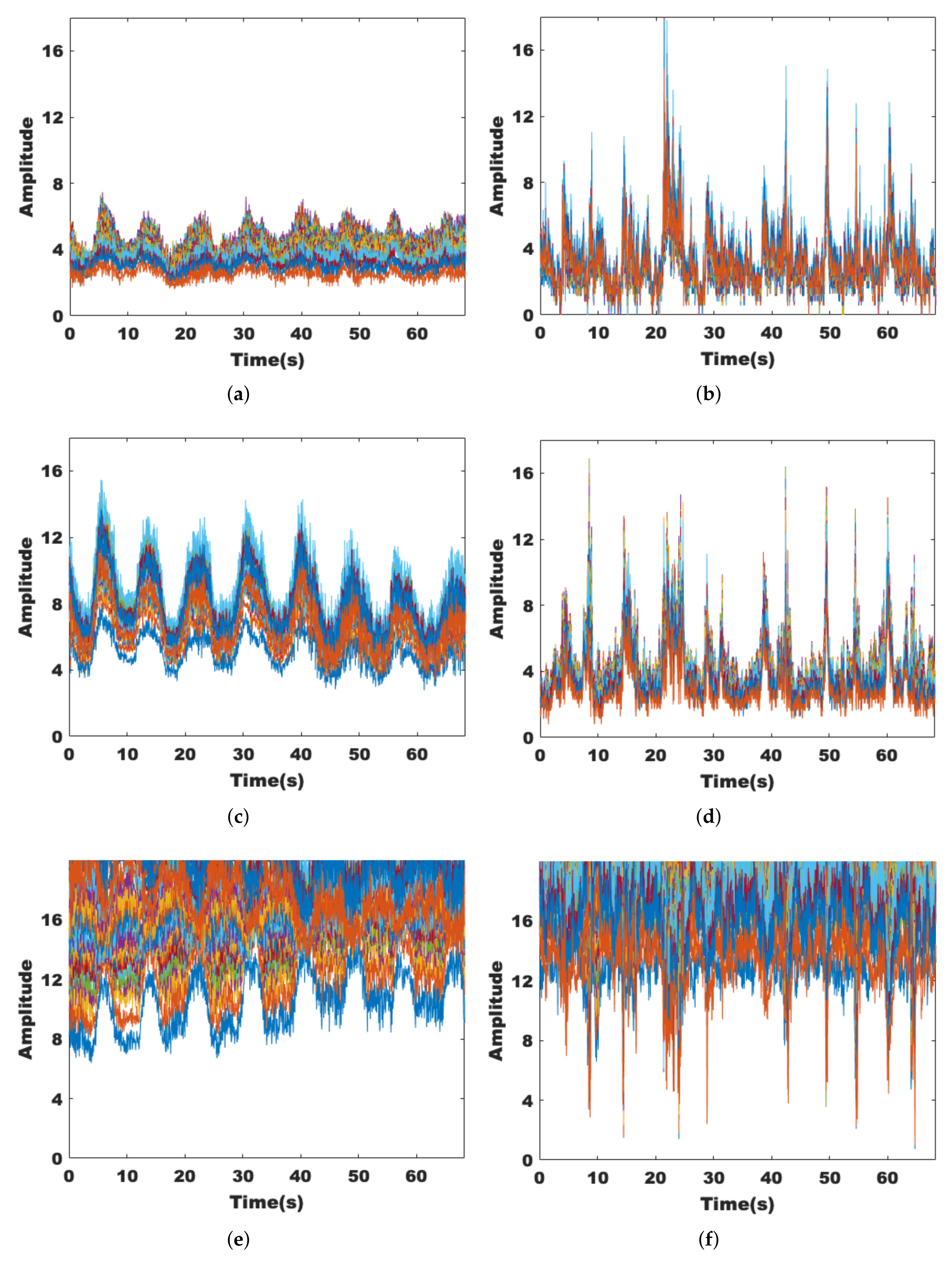

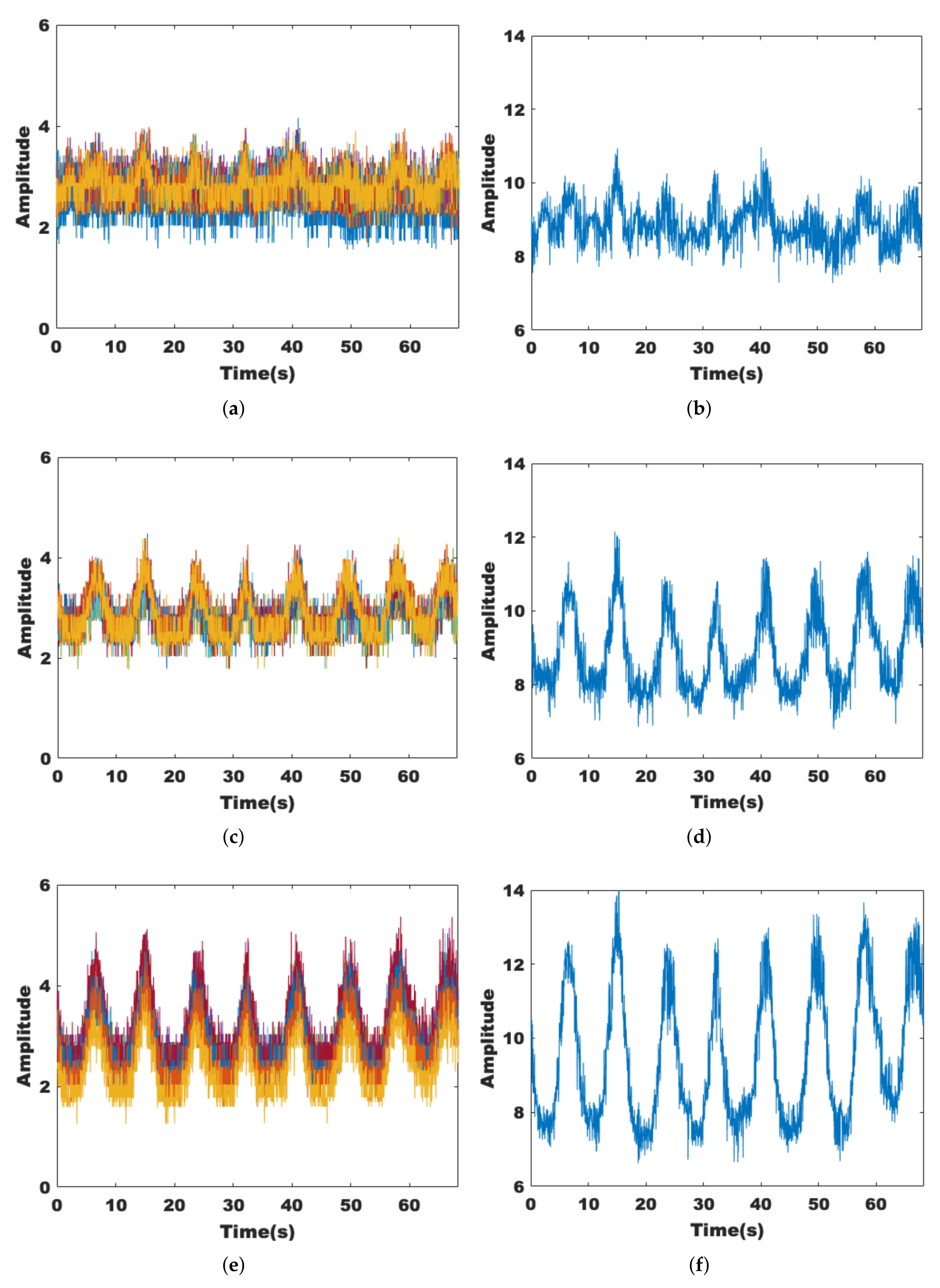

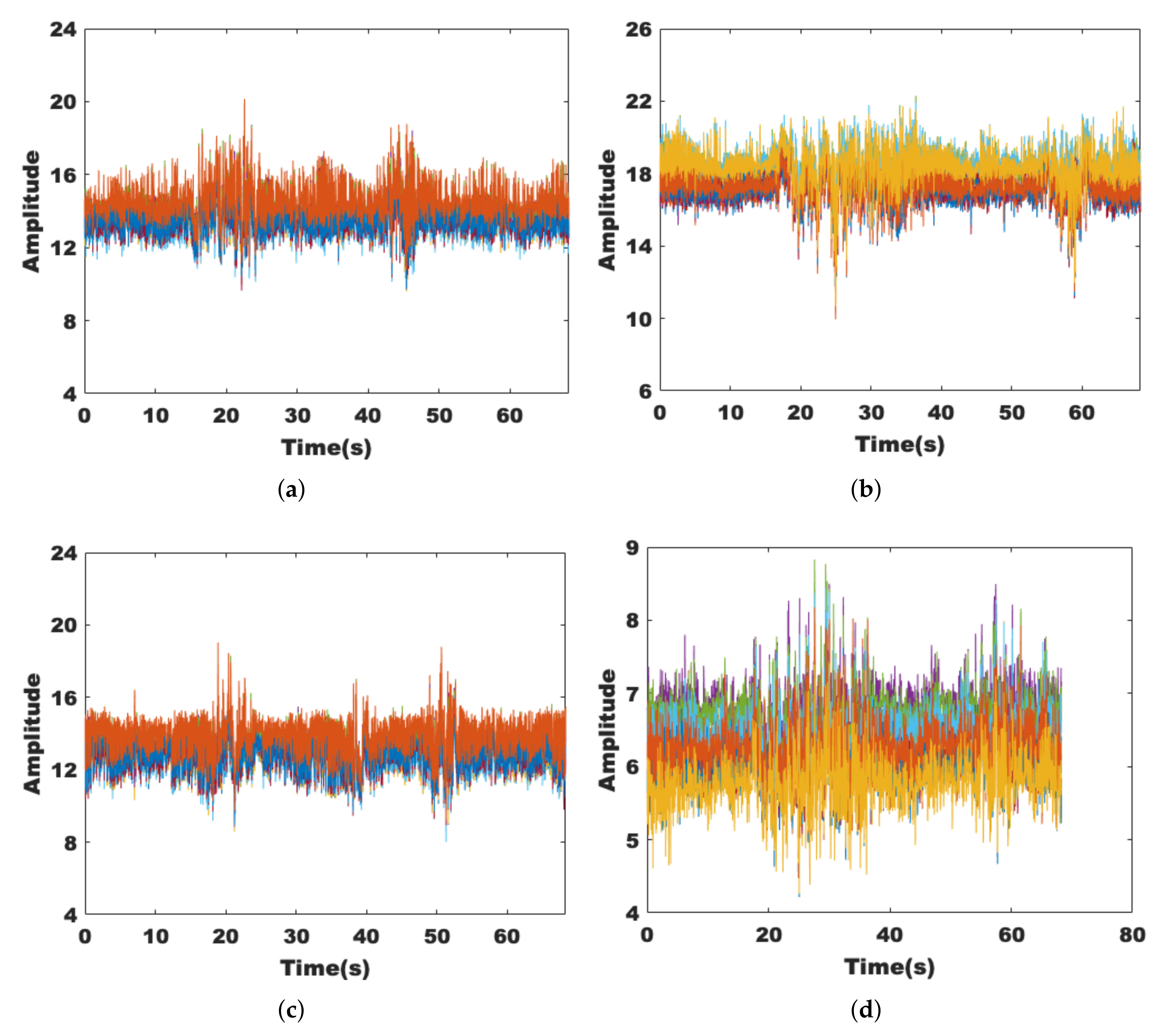

3.1.2. Sensitivity and Robustness Analysis

3.1.3. Dimensionality Reduction of Data Streams and Subcarriers

3.2. Data Pre-Processing

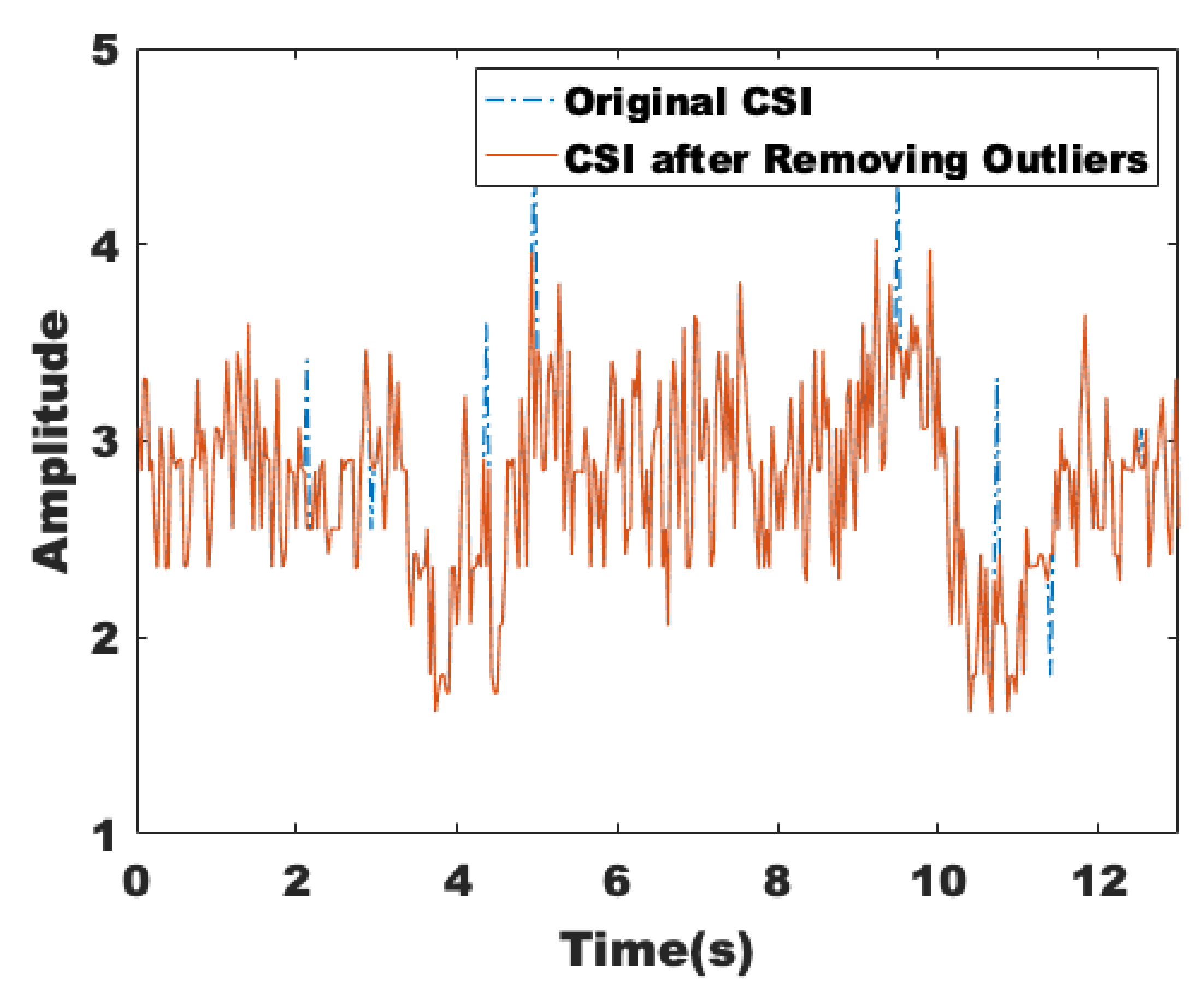

3.2.1. Removing Outliers

3.2.2. Linear Interpolation

3.2.3. Wavelet Denoising

- (1) Wavelet decomposition of the signal. First, we select a wavelet basis function. The sym wavelet is an improvement of the db wavelet that has better symmetry while retaining good regularity, so we choose sym8 as the wavelet basis function. Next, we determine the number of decomposition layers N. Based on experimental observation, N is set to 6, and we perform a 6-layer wavelet decomposition according to the wavelet decomposition tree shown in Figure 9.

- (2) Threshold quantization of high-frequency coefficients. A suitable threshold is determined and used to quantize the high-frequency coefficients of each layer. The main processing methods are hard-threshold and soft-threshold quantization. In this paper, the soft threshold function is chosen because it processes the signal relatively smoothly. The soft threshold function sets a wavelet coefficient to 0 when its absolute value is less than the given threshold; otherwise, it reduces the magnitude of the coefficient by the threshold while keeping its sign:

  $$w_\lambda = \begin{cases} \operatorname{sgn}(w)\,(|w| - \lambda), & |w| \geq \lambda \\ 0, & |w| < \lambda \end{cases}$$

  where $w$ is the wavelet coefficient, $w_\lambda$ is the wavelet coefficient after applying the threshold, and $\lambda$ is the threshold value.

- (3) Wavelet reconstruction of the signal. Based on the low-frequency coefficients of layer N and the high-frequency coefficients of layers 1 to N after the threshold quantization in the second step, wavelet reconstruction is completed to remove the noise and recover the useful signal.
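The soft-threshold quantization used in the second step can be illustrated with a few lines of Python. This is only a sketch of the thresholding rule itself: the function name `soft_threshold` is hypothetical, the coefficients are assumed to arrive as a plain list of floats, and the sym8 decomposition and reconstruction (which would normally come from a wavelet library) are not shown.

```python
def soft_threshold(coeffs, threshold):
    """Apply soft thresholding to a list of wavelet coefficients.

    Coefficients whose magnitude does not exceed `threshold` become 0;
    larger ones are shrunk toward zero by `threshold`, keeping their sign.
    """
    out = []
    for w in coeffs:
        magnitude = abs(w) - threshold
        if magnitude <= 0:
            out.append(0.0)
        else:
            out.append(magnitude if w > 0 else -magnitude)
    return out
```

Unlike hard thresholding, which leaves surviving coefficients untouched and therefore introduces a jump at the threshold, this rule is continuous, which matches the smoothness argument given for choosing it.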

3.3. Environmental Adaptive Mechanism Based on Eigenvalue Density Estimation

| Algorithm 2: Environmental adaptive mechanism. |

| Input: |

| amp_seq |

| Output: |

| sta_profile |

| 1: Initialization: the feature of current environment |

| 2: Get value from the initialized sta_profile: ; |

| 3: flag← mod_select(amp_seq); |

| 4: if flag=true then |

| 5: for i←0 to length(amp_seq)/15 - 1 do |

| 6: amp_window← amp_seq (i×15+1: i×15+15); |

| 7: feature←; |

| 8: if feature < threshold then |

| 9: add feature to fea_set; |

| 10: end if |

| 11: end for |

| 12: ← Epanechnikov(fea_set); |

| 13: ←; |

| 14: sta_profile←; |

| 15: end if |
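Algorithm 2 passes the collected feature set to an Epanechnikov kernel density estimator. The sketch below shows what such an estimate looks like in Python. It is an illustration under assumptions: the function names and the `bandwidth` parameter are hypothetical, and the way the estimated density is turned into `sta_profile` is not recoverable from the listing, so that step is deliberately left out.

```python
def epanechnikov_kernel(u):
    """Epanechnikov kernel: 0.75 * (1 - u^2) on [-1, 1], zero elsewhere."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0


def kde(x, samples, bandwidth):
    """Kernel density estimate at point x from a list of feature samples.

    Assumption: `bandwidth` is a positive smoothing width chosen by the
    caller; the source does not state how it is selected.
    """
    total = sum(epanechnikov_kernel((x - s) / bandwidth) for s in samples)
    return total / (len(samples) * bandwidth)
```

Because the kernel has finite support, only features within one bandwidth of `x` contribute to the estimate, which keeps the evaluation cheap for short feature sets.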

3.4. Module Selection

3.5. Anomaly Detection

| Algorithm 3: Anomaly detection. |

| Input: |

| amp_seq |

| Output: |

| time_stamp |

| 1: i← 0; |

| 2: while i<length(amp_seq)/15 do |

| 3: amp_window ← amp_seq (i× 15+1: i× 15+15); |

| 4: feature ←/ |

| 5: if feature(i) > threshold then |

| 6: j←i+1; |

| 7: while feature(j) > threshold do |

| 8: j++; |

| 9: end while |

| 10: if j-i>2 then |

| 11: save i, j to time_stamp; |

| 12: end if |

| 13: i← j; |

| 14: else |

| 15: i++; |

| 16: end if |

| 17: end while |
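The run-detection loop of Algorithm 3 can be sketched in Python as follows. Several details are assumptions made for the example: the per-window feature is taken to be the variance of the amplitude (the formula in the listing is not legible), the function names are hypothetical, and the inner loop advances `j` and stops at the end of the sequence so that it always terminates.

```python
from statistics import pvariance

WINDOW = 15  # samples per window, as in Algorithm 3


def window_features(amp_seq, window=WINDOW):
    """Variance of each non-overlapping window (assumed feature)."""
    count = len(amp_seq) // window
    return [pvariance(amp_seq[k * window:(k + 1) * window]) for k in range(count)]


def detect_anomalies(features, threshold, min_windows=2):
    """Return (start, end) window indices of runs above `threshold`.

    `end` is exclusive. A run is reported only when it spans more than
    `min_windows` consecutive windows, mirroring the j - i > 2 check.
    """
    events = []
    i = 0
    while i < len(features):
        if features[i] > threshold:
            j = i + 1
            # Extend the run while the following windows stay above threshold.
            while j < len(features) and features[j] > threshold:
                j += 1
            if j - i > min_windows:
                events.append((i, j))
            i = j
        else:
            i += 1
    return events
```

Requiring a minimum run length filters out isolated spikes, so a single noisy window is not mistaken for a target crossing the link.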

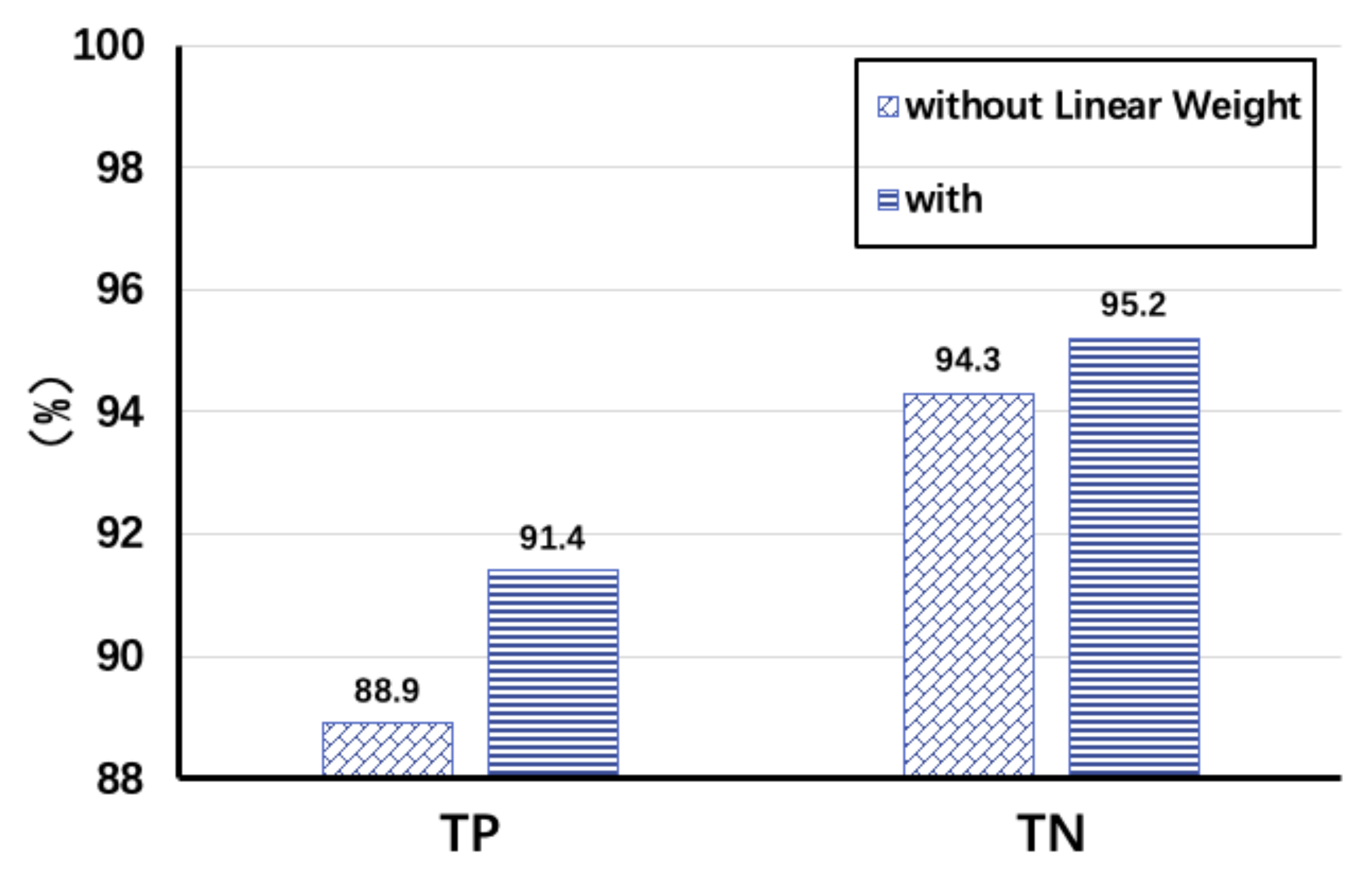

3.6. Verification

4. Rapid Passive Device-Free Tracking

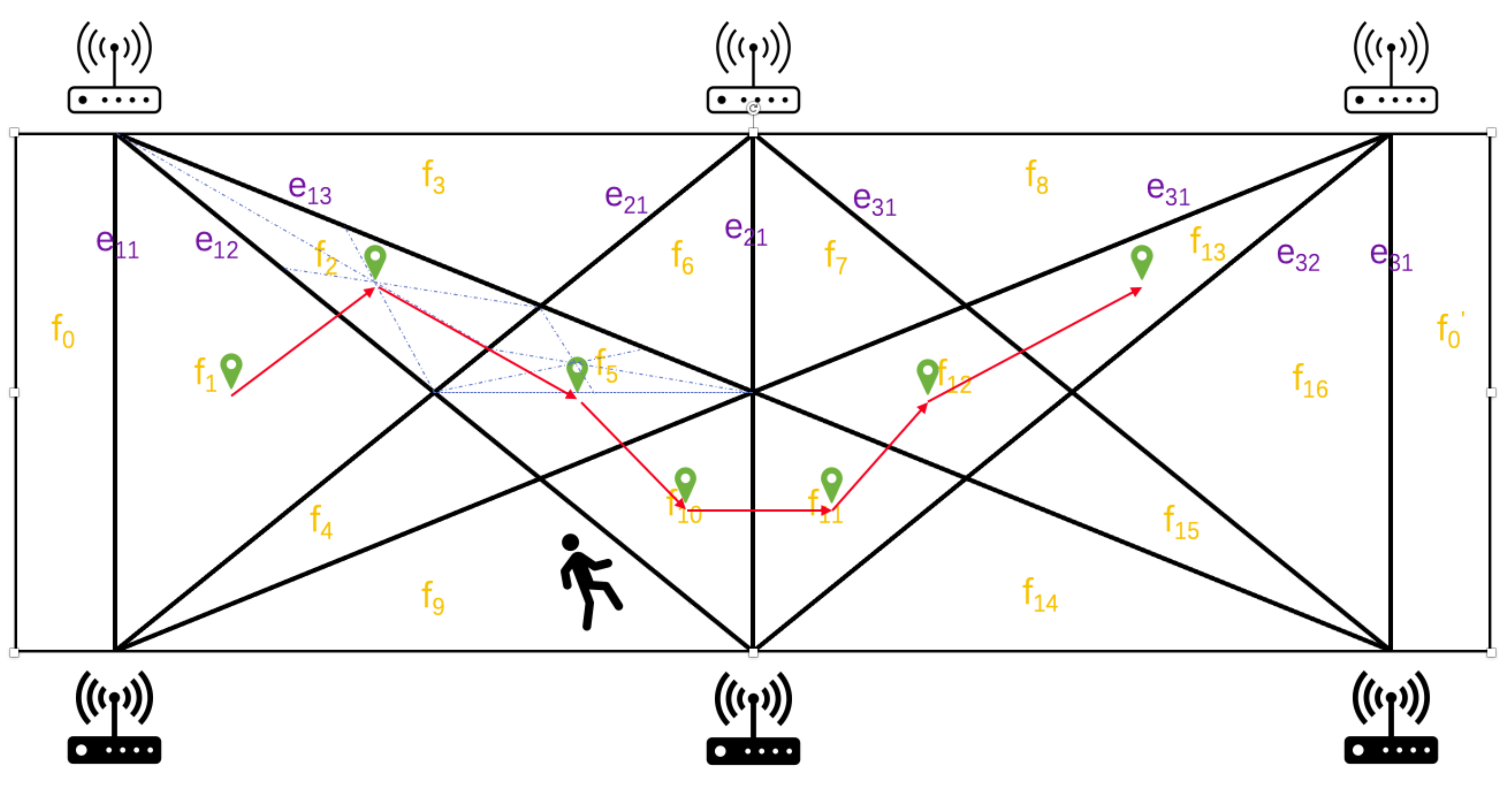

4.1. A Wi-Fi Link Grid for Tracking Targets

- When , it is obvious that .

- Assume when .

- When , the new will connect all the points and form new links, and intersection points are generated that separate the existing areas, yielding no less than new areas. Therefore, . Similarly, we get . Therefore, we get .

4.2. Time Synchronization

4.3. Self-Correction of the Wrong Tracking Results

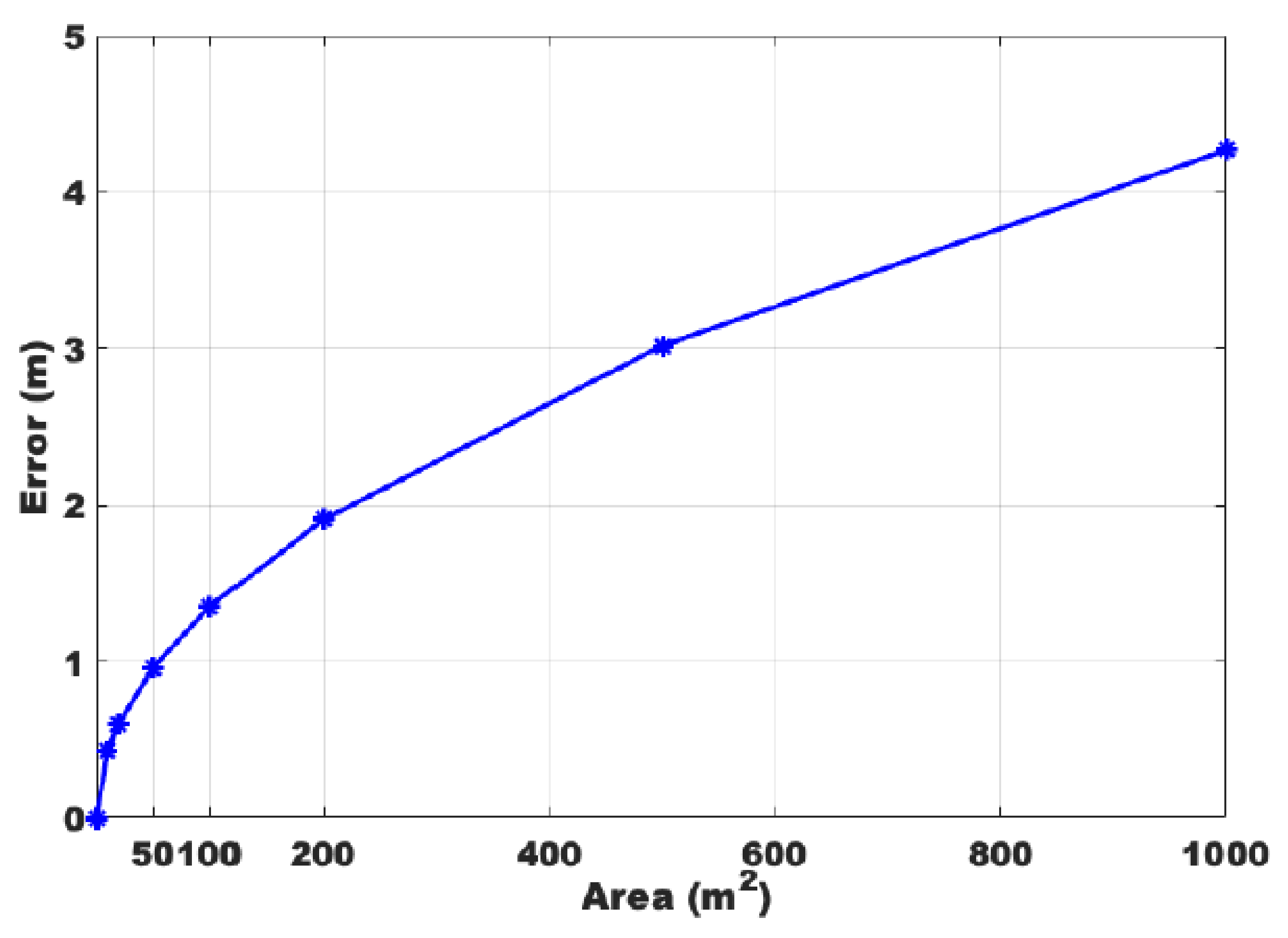

4.4. Analysis on Tracking Error

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yao, P.; Wang, H.; Su, Z. Real-time path planning of unmanned aerial vehicle for target tracking and obstacle avoidance in complex dynamic environment. Aerosp. Sci. Technol. 2015, 47, 269–279.

2. Li, X.; Zhang, D.; Lv, Q.; Xiong, J.; Li, S.; Zhang, Y.; Mei, H. IndoTrack: Device-free indoor human tracking with commodity Wi-Fi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–22.

3. Wang, J.; Jiang, H.; Xiong, J.; Jamieson, K.; Chen, X.; Fang, D.; Xie, B. LiFS: Low human-effort, device-free localization with fine-grained subcarrier information. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 243–256.

4. Gao, Q.; Wang, J.; Ma, X.; Feng, X.; Wang, H. CSI-based device-free wireless localization and activity recognition using radio image features. IEEE Trans. Veh. Technol. 2017, 66, 10346–10356.

5. Khan, U.M.; Kabir, Z.; Hassan, S.A.; Ahmed, S.H. A deep learning framework using passive WiFi sensing for respiration monitoring. In Proceedings of the GLOBECOM 2017—2017 IEEE Global Communications Conference, Singapore, 4–8 December 2017; pp. 1–6.

6. Zayets, A.; Steinbach, E. Robust WiFi-based indoor localization using multipath component analysis. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8.

7. Vasisht, D.; Kumar, S.; Katabi, D. Decimeter-level localization with a single WiFi access point. In Proceedings of the 13th USENIX Symposium on Networked Systems Design and Implementation (NSDI 16), Santa Clara, CA, USA, 16–18 March 2016; pp. 165–178.

8. Li, X.; Li, S.; Zhang, D.; Xiong, J.; Wang, Y.; Mei, H. Dynamic-music: Accurate device-free indoor localization. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 196–207.

9. Chang, L.; Chen, X.; Wang, Y.; Fang, D.; Wang, J.; Xing, T.; Tang, Z. FitLoc: Fine-grained and low-cost device-free localization for multiple targets over various areas. IEEE/ACM Trans. Netw. 2017, 25, 1994–2007.

10. Kaltiokallio, O.J.; Hostettler, R.; Patwari, N. A novel Bayesian filter for RSS-based device-free localization and tracking. IEEE Trans. Mob. Comput. 2019, 20, 780–795.

11. Xiao, J.; Wu, K.; Yi, Y.; Wang, L.; Ni, L.M. Pilot: Passive device-free indoor localization using channel state information. In Proceedings of the 2013 IEEE 33rd ICDCS, Philadelphia, PA, USA, 8–11 July 2013; pp. 236–245.

12. Qian, K.; Wu, C.; Yang, Z.; Yang, C.; Liu, Y. Decimeter level passive tracking with wifi. In Proceedings of the 3rd Workshop on Hot Topics in Wireless, New York, NY, USA, 3–7 October 2016; ACM: New York, NY, USA, 2016; pp. 44–48.

13. Wu, C.; Yang, Z.; Zhou, Z.; Qian, K.; Liu, Y.; Liu, M. PhaseU: Real-time LOS identification with WiFi. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, China, 26 April–1 May 2015; pp. 2038–2046.

14. Fang, S.; Munir, S.; Nirjon, S. Fusing wifi and camera for fast motion tracking and person identification: Demo abstract. In Proceedings of the 18th Conference on Embedded Networked Sensor Systems, Virtual Event, 16–19 November 2020; pp. 617–618.

15. Hijikata, S.; Terabayashi, K.; Umeda, K. A simple indoor self-localization system using infrared LEDs. In Proceedings of the 6th Int’l Conference Networked Sensing Systems (INSS) 2009, Pittsburgh, PA, USA, 17–19 June 2009; pp. 1–7.

16. Youssef, M.; Mah, M.; Agrawala, A. Challenges: Device-free passive localization for wireless environments. In Proceedings of the 13th Annual ACM International Conference on Mobile Computing and Networking, Montreal, QC, Canada, 9–14 September 2007; pp. 222–229.

17. Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 27–38.

18. Wang, Y.; Wu, K.; Ni, L.M. Wifall: Device-free fall detection by wireless networks. IEEE Trans. Mob. Comput. 2016, 16, 581–594.

19. Wang, H.; Zhang, D.; Wang, Y.; Ma, J.; Wang, Y.; Li, S. RT-Fall: A real-time and contactless fall detection system with commodity WiFi devices. IEEE Trans. Mob. Comput. 2016, 16, 511–526.

20. Qian, K.; Wu, C.; Yang, Z.; Liu, Y.; Zhou, Z. PADS: Passive detection of moving targets with dynamic speed using PHY layer information. In Proceedings of the 20th IEEE International Conference on Parallel and Distributed Systems (ICPADS), Hsinchu, Taiwan, 16–19 December 2014; pp. 1–8.

21. Zhou, Z.; Yang, Z.; Wu, C.; Shangguan, L.; Liu, Y. Towards omnidirectional passive human detection. In Proceedings of the IEEE INFOCOM 2013, Turin, Italy, 14–19 April 2013; pp. 3057–3065.

22. Abdelnasser, H.; Youssef, M.; Harras, K.A. Wigest: A ubiquitous wifi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, China, 26 April–1 May 2015; pp. 1472–1480.

23. Sun, L.; Sen, S.; Koutsonikolas, D.; Kim, K.H. Widraw: Enabling hands-free drawing in the air on commodity wifi devices. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 77–89.

24. Wang, G.; Zou, Y.; Zhou, Z.; Wu, K.; Ni, L.M. We can hear you with Wi-Fi! IEEE Trans. Mob. Comput. 2016, 15, 2907–2920.

25. Wang, X.; Yang, C.; Mao, S. PhaseBeat: Exploiting CSI phase data for vital sign monitoring with commodity WiFi devices. In Proceedings of the 2017 IEEE 37th Int’l Conf. Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 1230–1239.

26. Liu, X.; Cao, J.; Tang, S.; Wen, J.; Guo, P. Contactless respiration monitoring via off-the-shelf WiFi devices. IEEE Trans. Mob. Comput. 2015, 15, 2466–2479.

27. Wu, C.; Yang, Z.; Zhou, Z.; Liu, X.; Liu, Y.; Cao, J. Non-invasive detection of moving and stationary human with WiFi. IEEE J. Sel. Areas Commun. 2015, 33, 2329–2342.

28. Wang, H.; Zhang, D.; Ma, J.; Wang, Y.; Wang, Y.; Wu, D.; Gu, T.; Xie, B. Human respiration detection with commodity wifi devices: Do user location and body orientation matter? In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 25–36.

29. Zeng, Y.; Wu, D.; Xiong, J.; Liu, J.; Liu, Z.; Zhang, D. MultiSense: Enabling multi-person respiration sensing with commodity wifi. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–29.

30. Zhai, S.; Tang, Z.; Nurmi, P.; Fang, D.; Chen, X.; Wang, Z. RISE: Robust wireless sensing using probabilistic and statistical assessments. In Proceedings of the 27th Annual International Conference on Mobile Computing and Networking, New Orleans, LA, USA, 25–29 October 2021; pp. 309–322.

31. Li, C.; Cao, Z.; Liu, Y. Deep AI Enabled Ubiquitous Wireless Sensing: A Survey. ACM Comput. Surv. (CSUR) 2021, 54, 1–35.

32. Zhang, R.; Jing, X.; Wu, S.; Jiang, C.; Mu, J.; Yu, F.R. Device-free wireless sensing for human detection: The deep learning perspective. IEEE Internet Things J. 2020, 8, 2517–2539.

33. Zhang, J.; Tang, Z.; Li, M.; Fang, D.; Nurmi, P.; Wang, Z. CrossSense: Towards cross-site and large-scale WiFi sensing. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 305–320.

34. Zheng, Y.; Zhang, Y.; Qian, K.; Zhang, G.; Liu, Y.; Wu, C.; Yang, Z. Zero-effort cross-domain gesture recognition with Wi-Fi. In Proceedings of the 17th Annual International Conference on Mobile Systems, Applications, and Services, Seoul, Korea, 17–21 June 2019; pp. 313–325.

35. Wang, J.; Zhao, Y.; Ma, X.; Gao, Q.; Pan, M.; Wang, H. Cross-scenario device-free activity recognition based on deep adversarial networks. IEEE Trans. Veh. Technol. 2020, 69, 5416–5425.

36. Wang, J.; Fang, D.; Yang, Z.; Jiang, H.; Chen, X.; Xing, T.; Cai, L. E-hipa: An energy-efficient framework for high-precision multi-target-adaptive device-free localization. IEEE Trans. Mob. Comput. 2017, 16, 716–729.

37. Zhang, D.; Liu, Y.; Guo, X.; Ni, L.M. Rass: A real-time, accurate, and scalable system for tracking transceiver-free objects. IEEE Trans. Parallel Distrib. Syst. 2013, 24, 996–1008.

38. Zhang, F.; Zhang, D.; Xiong, J.; Wang, H.; Niu, K.; Jin, B.; Wang, Y. From Fresnel Diffraction Model to Fine-grained Human Respiration Sensing with Commodity Wi-Fi Devices. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2018, 2, 53.

39. Wang, H.; Zhang, D.; Niu, K.; Lv, Q.; Liu, Y.; Wu, D.; Gao, R.; Xie, B. MFDL: A Multicarrier Fresnel Penetration Model based Device-Free Localization System leveraging Commodity Wi-Fi Cards. arXiv 2017, arXiv:1707.07514.

40. Niu, K.; Zhang, F.; Xiong, J.; Li, X.; Yi, E.; Zhang, D. Boosting fine-grained activity sensing by embracing wireless multipath effects. In Proceedings of the 14th International Conference on emerging Networking EXperiments and Technologies, Heraklion, Greece, 4–7 December 2018; pp. 139–151.

41. Xie, Y.; Xiong, J.; Li, M.; Jamieson, K. xD-track: Leveraging multi-dimensional information for passive wi-fi tracking. In Proceedings of the 3rd Workshop on Hot Topics in Wireless, New York, NY, USA, 3–7 October 2016; pp. 39–43.

42. Zhang, F.; Chang, Z.; Niu, K.; Xiong, J.; Jin, B.; Lv, Q.; Zhang, D. Exploring lora for long-range through-wall sensing. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 1–27.

43. Xie, B.; Xiong, J. Combating interference for long range LoRa sensing. In Proceedings of the 18th Conference on Embedded Networked Sensor Systems, Virtual Event, 16–19 November 2020; pp. 69–81.

44. Chen, L.; Xiong, J.; Chen, X.; Lee, S.I.; Zhang, D.; Yan, T.; Fang, D. LungTrack: Towards contactless and zero dead-zone respiration monitoring with commodity RFIDs. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–22.

45. Fang, J.; Wang, L.; Qin, Z.; Hou, Y.; Zhao, W.; Lu, B. Winfrared: An Infrared-Like Rapid Passive Device-Free Tracking with Wi-Fi. In Proceedings of the International Conference on Wireless Algorithms, Systems, and Applications; Springer: Cham, Switzerland, 2021; pp. 65–77.

46. Halperin, D.; Hu, W.; Sheth, A.; Wetherall, D. Tool Release: Gathering 802.11n Traces with Channel State Information. ACM SIGCOMM CCR 2011, 41, 53.

47. Scott, D.W. Multivariate Density Estimation: Theory, Practice, and Visualization; John Wiley & Sons: Hoboken, NJ, USA, 2015.

48. Zheng, X.; Yang, S.; Jin, N.; Wang, L.; Wymore, M.L.; Qiao, D. Diva: Distributed voronoi-based acoustic source localization with wireless sensor networks. In Proceedings of the IEEE INFOCOM 2016, San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

49. Hong, F.; Wang, X.; Yang, Y.; Zong, Y.; Zhang, Y.; Guo, Z. WFID: Passive device-free human identification using WiFi signal. In Proceedings of the 13th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Hiroshima, Japan, 28 November–1 December 2016; pp. 47–56.

50. Zou, H.; Zhou, Y.; Yang, J.; Gu, W.; Xie, L.; Spanos, C. Wifi-based human identification via convex tensor shapelet learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

51. Karanam, C.R.; Korany, B.; Mostofi, Y. Tracking from one side: Multi-person passive tracking with WiFi magnitude measurements. In Proceedings of the 18th International Conference on Information Processing in Sensor Networks, Montreal, QC, Canada, 16–18 April 2019; pp. 181–192.

52. Wu, D.; Zeng, Y.; Gao, R.; Li, S.; Li, Y.; Shah, R.C.; Lu, H.; Zhang, D. WiTraj: Robust Indoor Motion Tracking with WiFi Signals. IEEE Trans. Mob. Comput. 2021.

| Related Work | Accuracy | Experimental Environment | Others |
|---|---|---|---|
| LiFS [3] | 0.5 m, 1.1 m | about 100 m², 11 Tx/Rx | without all Tx/Rx locations |
| IndoTrack [2] | 35 cm | 6 m × 6 m, 1 Tx and 2 Rx | high latency |
| [51] | 55 cm | 7 m × 7 m, 1 Tx and 3 Rx | multi-person |
| [52] | 26 cm, 82 cm | 7 m × 6 m, 1 Tx and 3 Rx | single target |
| WIDE method | 0.95 m | 50 m², 6 Tx/Rx | partial multi-target |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fang, J.; Wang, L.; Qin, Z.; Lu, B.; Zhao, W.; Hou, Y.; Chen, J. A Lightweight Passive Human Tracking Method Using Wi-Fi. Sensors 2022, 22, 541. https://doi.org/10.3390/s22020541