Contactless Measurement of Vital Signs Using Thermal and RGB Cameras: A Study of COVID 19-Related Health Monitoring

Abstract

1. Introduction

- We present a system that estimates the vital signs of multiple subjects, including heart rate (HR) and respiration rate (RR), using thermal and RGB cameras. To the best of our knowledge, this is the first study to include different face masks in contactless RR estimation, and our results indicate that the proposed system is feasible for COVID-19-related applications;

- We propose signal processing algorithms that estimate the HR and RR of multiple subjects under different conditions. Novel approaches include increasing the contrast of the thermal images to improve the signal-to-noise ratio (SNR) of the signal extracted for RR estimation, and a sequence of steps, including independent component analysis (ICA) and empirical mode decomposition (EMD), that improves the accuracy of HR estimation from RGB frames. Robustness is improved by assessing the quality of the physiological signals and by detecting when the head turns away from the camera. With these approaches, the system provides accurate HR and RR estimates under normal indoor illumination and for subjects with different skin tones;

- Our work addresses some of the issues reported in other works, such as the short camera-to-subject distance required and the need to have a large portion of the face exposed to the camera. Our system remains robust at larger distances and can simultaneously estimate the vital signs of two people whose faces may be partially covered by face masks or not pointed directly towards the cameras.
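The contributions above all end in the same final step: turning a one-dimensional trace extracted from a region of interest into a rate in cycles per minute. The following is a minimal sketch of that step only, assuming a simple spectral-peak search; it is not the authors' pipeline and omits the contrast enhancement, ICA, EMD, and signal quality stages. The function name `estimate_rate_bpm`, the frequency bands, the sampling rate, and the synthetic signal are all illustrative assumptions, not values taken from the paper.

```python
import cmath
import math


def estimate_rate_bpm(signal, fs, lo_hz, hi_hz, step_hz=0.01):
    """Return the strongest frequency of `signal` inside [lo_hz, hi_hz],
    expressed in cycles per minute. `fs` is the sampling rate in Hz.
    Hypothetical helper for illustration only."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove the constant offset

    best_freq, best_power = lo_hz, -1.0
    steps = int(round((hi_hz - lo_hz) / step_hz))
    for k in range(steps + 1):
        f = lo_hz + k * step_hz
        # Evaluate the Fourier sum directly at frequency f
        acc = sum(
            x * cmath.exp(-2j * math.pi * f * i / fs)
            for i, x in enumerate(centered)
        )
        power = abs(acc) ** 2
        if power > best_power:
            best_freq, best_power = f, power
    return best_freq * 60.0


# Synthetic "breathing" trace: 0.25 Hz oscillation (15 breaths/min)
# sampled at an assumed 10 Hz for 60 s, with a slow linear drift added.
fs = 10.0
breath = [math.sin(2 * math.pi * 0.25 * i / fs) + 0.002 * i for i in range(600)]

# Assumed respiration band of 0.1 to 0.7 Hz (6 to 42 breaths/min)
rr = estimate_rate_bpm(breath, fs, lo_hz=0.1, hi_hz=0.7)
print(f"Estimated RR: {rr:.1f} breaths/min")
```

The same function could be applied to an RGB-derived pulse trace by choosing a cardiac band instead (for example 0.7 to 3.0 Hz, again an assumption). Restricting the search to a physiologically plausible band is what keeps slow drift and high-frequency noise from being mistaken for the vital sign.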

2. Related Works

3. Materials and Protocols

3.1. Data Acquisition System

3.2. Experimental Protocols

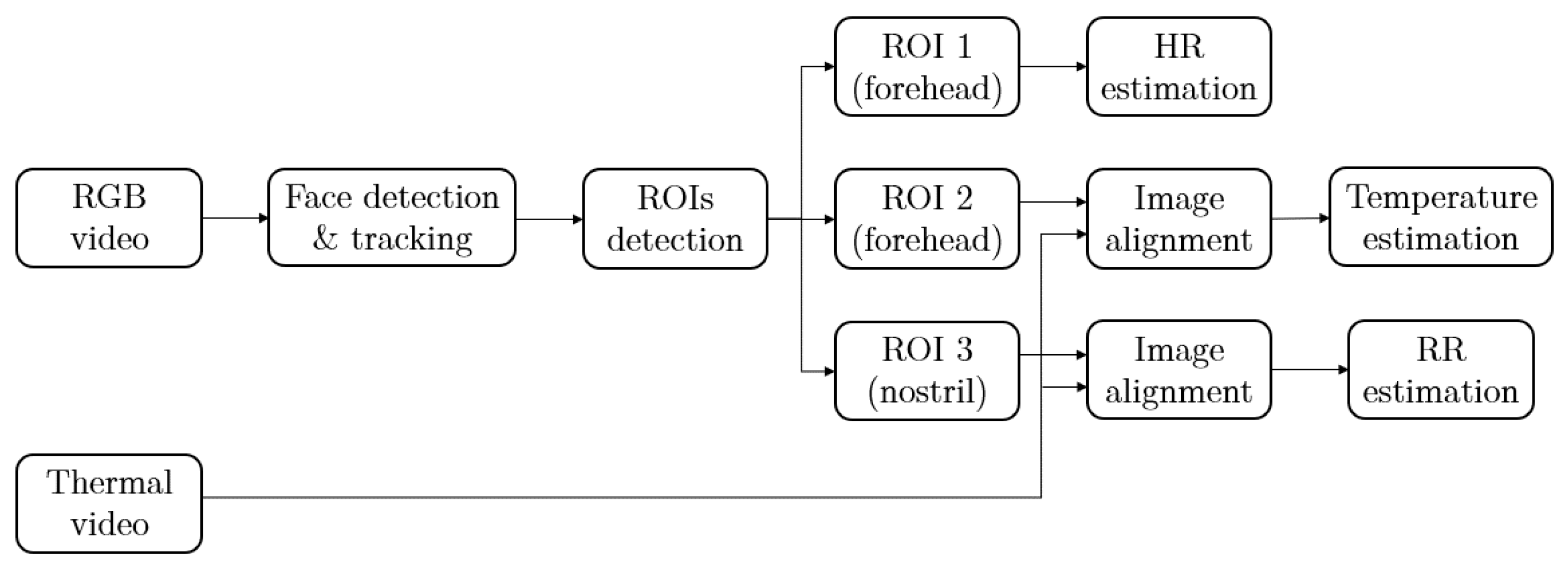

4. Methods

4.1. Face Detection

4.2. Regions of Interest (ROIs)

4.2.1. ROI for BT Estimation

4.2.2. ROI for RR Estimation

4.2.3. ROI for HR Estimation

4.3. Head Movement Detection

4.4. Frame Registration

4.5. Vital Signs Estimation

4.5.1. Body Temperature Measurement

4.5.2. Heart Rate Estimation

4.5.3. Respiration Rate Estimation

4.6. Signal Quality Evaluation

5. Results

5.1. Facial ROIs Detection

5.2. Vital Sign Estimation

5.2.1. Respiration Rate Estimation

5.2.2. Heart Rate Estimation

5.2.3. Two-Subjects RR and HR Estimation

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Mask condition | 75 cm | 120 cm | 200 cm |
|---|---|---|---|
| No mask | 0.41 | 0.28 | 0.80 |
| Mask1 (medical/cloth) | 0.52 | 0.45 | 0.59 |
| Mask2 (N90) | 0.06 | 0.01 | 0.17 |
| Mask3 (N95) | 0.06 | 0.42 | 0.88 |

| Subject | 60 cm, No Mask | 60 cm, Mask | 80 cm, No Mask | 80 cm, Mask | 100 cm, No Mask | 100 cm, Mask | 120 cm, No Mask | 120 cm, Mask |
|---|---|---|---|---|---|---|---|---|
| Subject1 | 1.83 | 0.50 | 2.04 | 1.60 | 2.73 | 0.74 | 2.08 | 0.74 |
| Subject2 | 2.68 | 0.40 | 2.24 | 0.36 | 2.49 | 0.23 | 1.25 | 0.52 |
| Subject3 | 1.84 | 0.46 | 1.72 | 0.56 | 1.69 | 0.57 | 2.01 | 0.18 |
| Subject4 | 1.70 | 2.30 | 2.06 | 1.65 | 2.31 | 1.69 | 2.09 | 1.54 |
| Subject5 | 1.04 | 1.65 | 1.99 | 1.75 | 2.10 | 0.88 | 1.26 | 1.15 |
| Subject6 | 1.94 | 2.22 | 2.58 | 1.40 | 2.45 | 1.04 | 3.10 | 2.16 |
| Subject7 | 1.82 | 0.59 | 2.18 | 0.49 | 0.65 | 0.60 | 1.14 | 0.78 |
| Subject8 | 1.65 | 1.24 | 1.35 | 0.64 | 2.17 | 1.01 | 1.38 | 1.14 |
| Subject9 | 1.18 | 1.67 | 0.99 | 0.96 | 1.12 | 1.07 | 1.24 | 1.42 |
| Subject10 | 1.71 | 2.47 | 2.30 | 1.80 | 2.85 | 2.46 | 1.56 | 1.70 |

| Subject | Skin Tone | 60 cm | | 80 cm | | 100 cm | | 120 cm | |
|---|---|---|---|---|---|---|---|---|---|
| Subject1 | pale | 2.92 | 1.21 | 3.02 | 1.11 | 4.31 | 1.57 | 2.93 | 1.31 |
| Subject2 | pale | 3.58 | 2.59 | 4.08 | 3.26 | 3.82 | 3.05 | 4.83 | 4.00 |
| Subject3 | pale | 2.61 | 2.42 | 4.28 | 2.86 | 3.89 | 3.01 | 4.50 | 2.88 |
| Subject4 | pale | 3.35 | 2.31 | 2.17 | 1.82 | 2.88 | 1.82 | 2.50 | 1.23 |
| Subject5 | pale | 1.73 | 3.21 | 2.62 | 1.44 | 1.81 | 1.76 | 2.03 | 1.65 |
| Subject6 | pale | 1.50 | 1.78 | 1.05 | 1.43 | 1.86 | 2.29 | 1.76 | 3.02 |
| Subject7 | medium | 1.68 | 1.55 | 2.61 | 2.90 | 3.05 | 2.55 | 3.09 | 2.69 |
| Subject8 | medium | 2.53 | 1.53 | 3.07 | 3.05 | 2.35 | 2.59 | 3.04 | 2.89 |
| Subject9 | dark | 2.72 | 1.75 | 3.83 | 1.88 | 3.31 | 2.44 | 2.21 | 1.56 |
| Subject10 | dark | 3.38 | 2.15 | 2.20 | 1.86 | 2.20 | 1.50 | 2.09 | 1.88 |

| Experiment | Subject1 RR | Subject1 HR | Subject2 RR | Subject2 HR |
|---|---|---|---|---|
| Experiment 1 | 0.89 ± 0.47 | 3.60 ± 2.10 | 1.31 ± 0.86 | 1.72 ± 1.40 |
| Experiment 2 | 1.25 ± 0.63 | 2.17 ± 2.20 | 0.80 ± 0.57 | 2.41 ± 1.13 |
| Experiment 3 | 0.49 ± 0.33 | 1.79 ± 1.06 | 1.07 ± 0.58 | 1.35 ± 1.12 |
| Experiment 4 (mask) | 1.57 ± 0.96 | 2.03 ± 1.18 | 0.74 ± 0.52 | 2.39 ± 1.67 |
| Experiment 5 (mask) | 1.60 ± 0.58 | 2.15 ± 2.07 | 0.49 ± 0.32 | 2.31 ± 1.09 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, F.; He, S.; Sadanand, S.; Yusuf, A.; Bolic, M. Contactless Measurement of Vital Signs Using Thermal and RGB Cameras: A Study of COVID 19-Related Health Monitoring. Sensors 2022, 22, 627. https://doi.org/10.3390/s22020627
