The Color Improvement of Underwater Images Based on Light Source and Detector

Abstract

1. Introduction

1.1. The Background of Color Improvement in Marine Surveys

1.2. Related Work

1.2.1. Color Restoration with the Prior Information of Light Attenuation

1.2.2. Color Enhancement without the Information of Light Attenuation

1.3. Our Work

2. Experimental Setup and Details

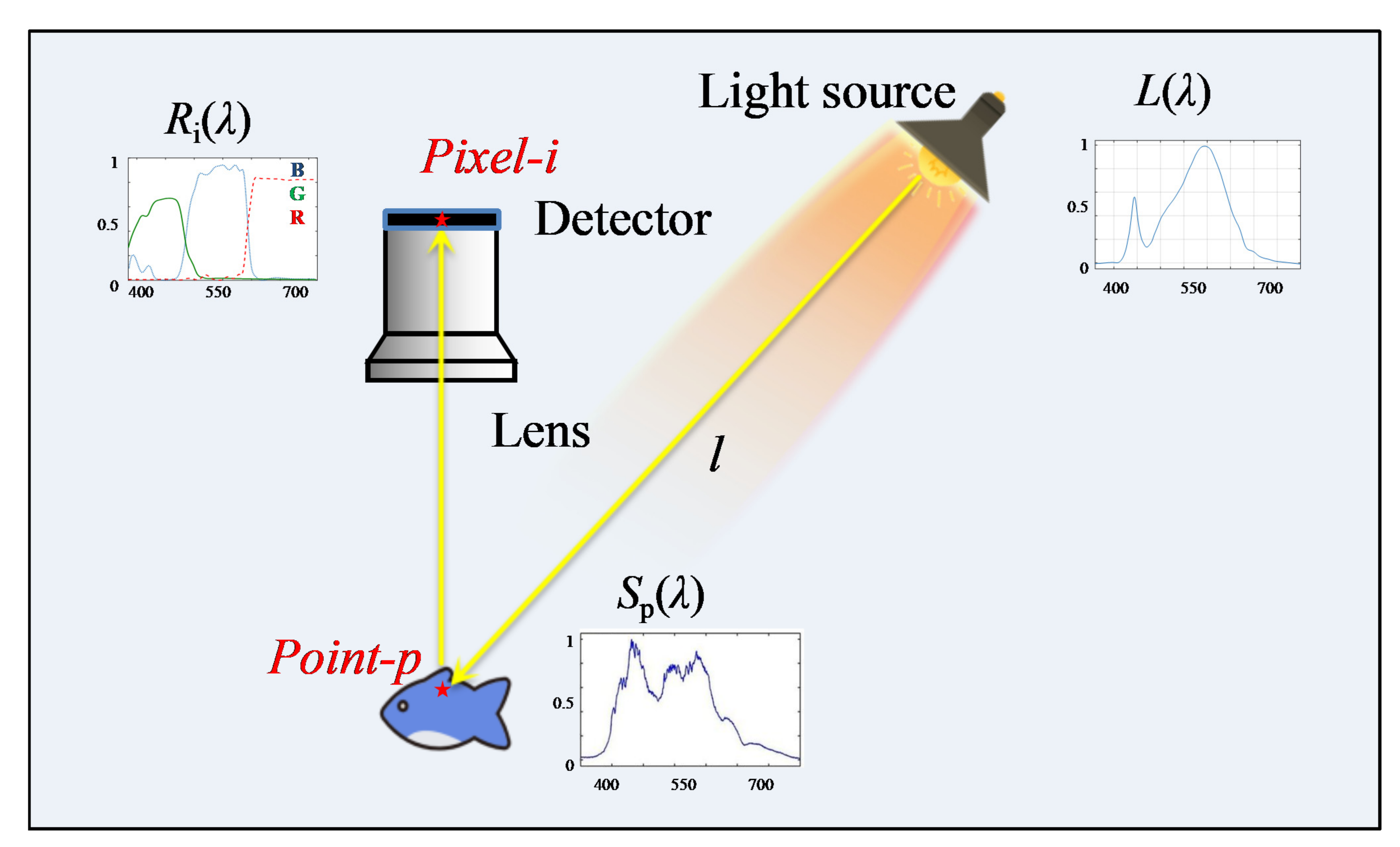

2.1. The Analysis of the Underwater Imaging Process

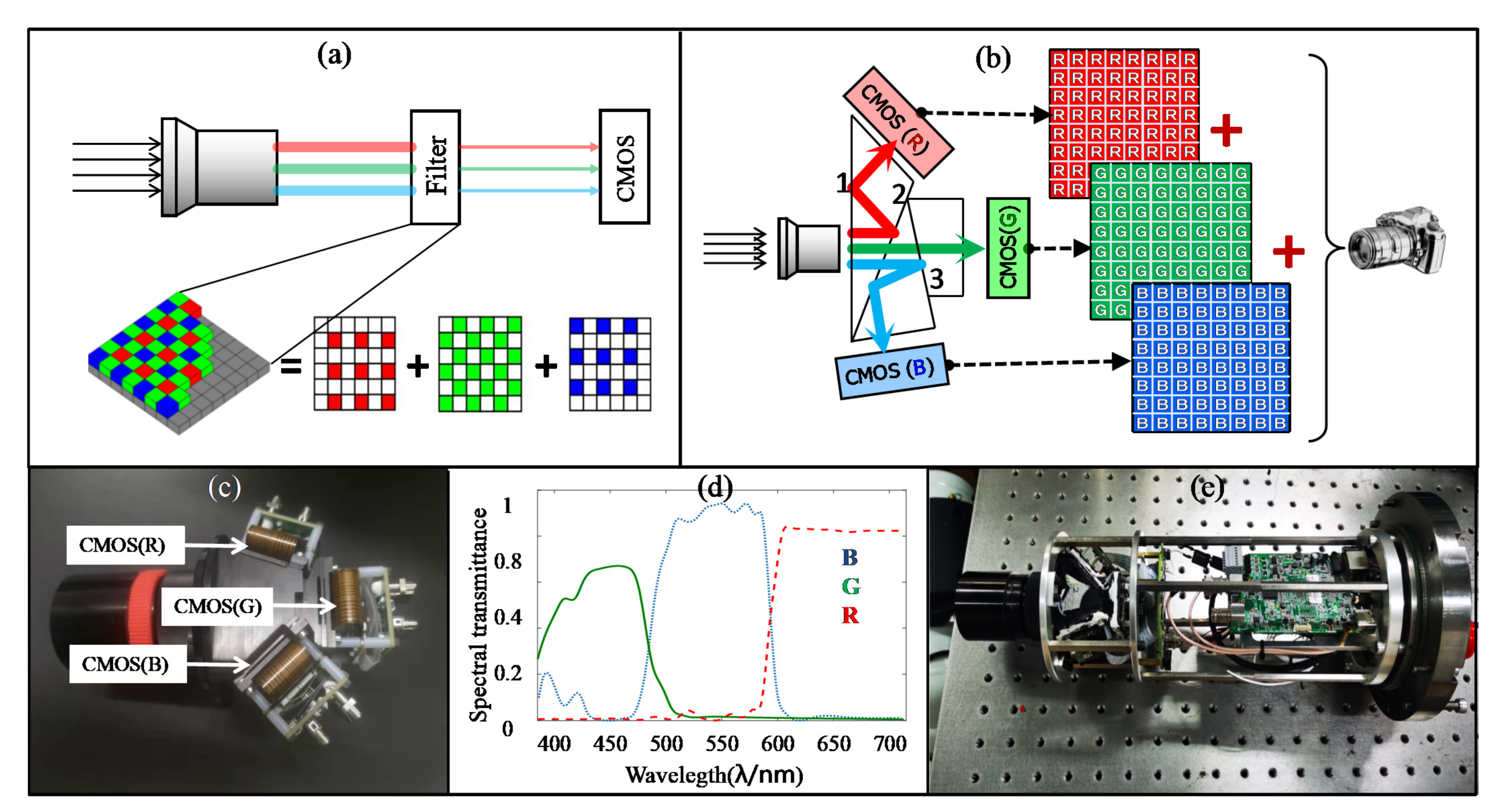

2.2. Experimental Setup and Process

- The camera contains four sub-cameras with 3× optical zoom (18 mm, 27 mm, and 80 mm) and 30× digital zoom;

- The resolution is 3840 × 2160;

- The white balance setting is 5500 K;

- The shutter speed is 1/125 s;

- The exposure compensation is 0;

- The ISO is 640;

- The autofocus (AF) mode is AF-C.

- The resolution is 3840 × 2160;

- The detector size is 1/1.8″;

- The minimum illumination in color mode is 0.002 lux at F1.2;

- The white balance setting is indirect sunlight on sunny days (i.e., 5500 K);

- The digital noise reduction level is set to 50;

- The brightness is set to 50;

- The contrast ratio is set to 50;

- The sharpness is set to 50;

- The saturation is set to 50;

- The shutter speed is 1/25 s;

- The day/night conversion mode is turned off;

- The backlight compensation function is turned off.

- The resolution is 12 megapixels;

- The white balance setting is 5500 K;

- The sharpness setting is moderate;

- The shutter speed is 1/125 s;

- The exposure compensation is 0;

- The ISO is set between 100 and 3200;

- The color profile is set to flat;

- The FOV is set to linear;

- The SuperPhoto function is turned off.

3. Experimental Results

4. Conclusions and Discussion

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Items | Day-Light LED | Warm-Light LED | Cold-Light LED | Incandescent Lamp |
|---|---|---|---|---|
| In air | 12.67 | 14.47 | 15.90 | 15.91 |
| 2 m | 26.20 | 22.67 | 23.87 | 24.27 |
| Mean value in water | 21.28 | 18.54 | 19.95 | 19.74 |
| In air | 12.41 | 17.02 | 17.20 | 21.09 |
| 2 m | 30.45 | 24.68 | 26.13 | 32.29 |
| Mean value in water | 22.27 | 19.04 | 19.62 | 28.47 |
| In air | 17.73 | 22.34 | 23.43 | 26.42 |
| 2 m | 40.17 | 33.51 | 35.40 | 40.40 |
| Mean value in water | 30.80 | 26.58 | 27.98 | 34.65 |
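The "Mean value in water" rows of the light-source table can be compared with a few lines of arithmetic. The sketch below only finds, for each of the three row groups, the light source with the smallest tabulated mean. The table as extracted does not name the metric or label the row groups, so the group numbering and the reading that a smaller value is preferable are assumptions, and the helper name `smallest_per_group` is hypothetical.

```python
# Values copied from the "Mean value in water" rows of the light-source table.
# The three row groups are unlabeled in the extracted table, so they are
# simply numbered 1 to 3 here (an assumption for illustration).
SOURCES = ["Day-Light LED", "Warm-Light LED", "Cold-Light LED", "Incandescent Lamp"]
MEAN_IN_WATER = [
    [21.28, 18.54, 19.95, 19.74],
    [22.27, 19.04, 19.62, 28.47],
    [30.80, 26.58, 27.98, 34.65],
]


def smallest_per_group(names, groups):
    """Return (name, value) of the smallest entry in each row group."""
    result = []
    for row in groups:
        value = min(row)
        result.append((names[row.index(value)], value))
    return result


lowest = smallest_per_group(SOURCES, MEAN_IN_WATER)
for number, (name, value) in enumerate(lowest, start=1):
    print(f"group {number}: {name} ({value:.2f})")
```

With the tabulated numbers, the warm-light LED has the smallest mean value in water in all three groups.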

| Items | Camera No.1 | Camera No.2 | Camera No.3 | 3×CMOS RGB Camera |
|---|---|---|---|---|
| In air | 10.87 | 22.06 | 13.87 | 12.16 |
| 2 m | 23.42 | 26.94 | 23.99 | 22.68 |
| Mean value in water | 18.48 | 24.52 | 18.77 | 17.75 |
| In air | 15.97 | 24.80 | 13.00 | 13.94 |
| 2 m | 27.06 | 31.25 | 26.50 | 24.84 |
| Mean value in water | 24.13 | 28.27 | 17.85 | 17.96 |
| In air | 19.32 | 33.19 | 19.01 | 18.50 |
| 2 m | 35.79 | 41.26 | 35.74 | 32.82 |
| Mean value in water | 30.39 | 37.41 | 25.91 | 25.25 |
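One way to read the camera table is to look at how much each tabulated value rises between the "In air" row and the "2 m" row. The sketch below computes that difference per camera for each of the three row groups. The metric and the group labels are not given in the extracted table, so the group numbering is an assumption, and the function name `rise_air_to_2m` is hypothetical.

```python
# Values copied from the "In air" and "2 m" rows of the camera table.
# Row groups are unlabeled in the extracted table and are numbered 1 to 3 here.
CAMERAS = ["Camera No.1", "Camera No.2", "Camera No.3", "3xCMOS RGB Camera"]
IN_AIR = [
    [10.87, 22.06, 13.87, 12.16],
    [15.97, 24.80, 13.00, 13.94],
    [19.32, 33.19, 19.01, 18.50],
]
AT_2M = [
    [23.42, 26.94, 23.99, 22.68],
    [27.06, 31.25, 26.50, 24.84],
    [35.79, 41.26, 35.74, 32.82],
]


def rise_air_to_2m(in_air, at_2m):
    """Per group and camera, the 2 m value minus the in-air value (2 decimals)."""
    return [
        [round(deep - air, 2) for air, deep in zip(air_row, deep_row)]
        for air_row, deep_row in zip(in_air, at_2m)
    ]


rises = rise_air_to_2m(IN_AIR, AT_2M)
for number, row in enumerate(rises, start=1):
    for camera, rise in zip(CAMERAS, row):
        print(f"group {number}: {camera} rises by {rise:.2f}")
```

In these numbers Camera No.2 shows the smallest rise in every group, although its absolute values are the largest both in air and at 2 m, so the rise alone should not be read as a ranking of the cameras.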

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Quan, X.; Wei, Y.; Li, B.; Liu, K.; Li, C.; Zhang, B.; Yang, J. The Color Improvement of Underwater Images Based on Light Source and Detector. Sensors 2022, 22, 692. https://doi.org/10.3390/s22020692