Iris Recognition Method Based on Parallel Iris Localization Algorithm and Deep Learning Iris Verification

Abstract

1. Introduction

- We used a parallel circular transform to locate the inner boundary of the iris and the fast Daugman integro-differential localization algorithm to locate the outer boundary, yielding effective and fast iris localization.

- We developed a new lightweight deep learning architecture for iris verification. Built on ResNet, it improves both the robustness of the verification network and the accuracy of the verification results.

- We introduced a residual pooling layer into the network to extract richer iris texture features.

2. Related Work

3. Materials and Methods

3.1. Iris Localization Stage

3.1.1. Inner Boundary Localization
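The inner-boundary step is described only as a parallel circular transform. The following is a minimal sketch, assuming it is a circular Hough transform applied to a binary edge map of the pupil region (edge detection itself is not included). NumPy vectorization stands in for the parallel hardware, since the actual parallelization scheme is not given here; `circle_offsets`, `hough_circle`, and `ring` are illustrative names, not the authors' code.

```python
import numpy as np

def circle_offsets(r, n_angles=360):
    """Unique integer (dx, dy) offsets of a digital circle of radius r."""
    t = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    off = np.stack([np.rint(r * np.cos(t)), np.rint(r * np.sin(t))], axis=1)
    return np.unique(off.astype(int), axis=0)

def hough_circle(edges, radii):
    """Return (x, y, r) of the best-supported circle in a binary edge map."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)                     # edge pixel coordinates
    best_score, best = -1.0, None
    for r in radii:
        off = circle_offsets(r)
        # Every (edge pixel, offset) pair votes for one candidate centre;
        # all votes for this radius are computed at once (data-parallel).
        cx = (xs[:, None] - off[None, :, 0]).ravel()
        cy = (ys[:, None] - off[None, :, 1]).ravel()
        ok = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        acc = np.zeros((h, w))
        np.add.at(acc, (cy[ok], cx[ok]), 1.0)      # accumulate votes
        acc /= len(off)                            # fraction of circle covered by edges
        y, x = np.unravel_index(np.argmax(acc), acc.shape)
        if acc[y, x] > best_score:
            best_score, best = acc[y, x], (int(x), int(y), int(r))
    return best

def ring(h, w, cx, cy, r):
    """Synthetic edge map containing a single circle (for testing)."""
    edges = np.zeros((h, w), dtype=bool)
    off = circle_offsets(r)
    edges[cy + off[:, 1], cx + off[:, 0]] = True
    return edges
```

Each edge pixel casts one vote per circle offset, so votes are independent and map naturally onto parallel threads. Normalizing by the circle length keeps larger circles from winning merely because they have more perimeter pixels.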

3.1.2. Outer Boundary Localization
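Daugman's integro-differential operator searches for the circle $(x_0, y_0, r)$ that maximizes $\left| G_\sigma(r) * \frac{\partial}{\partial r} \oint_{r,x_0,y_0} \frac{I(x,y)}{2\pi r}\, ds \right|$, that is, the sharpest Gaussian-smoothed radial change in mean intensity along a circle. The sketch below is a plain brute-force version over a list of candidate centres; the fast variant used in the paper is not reproduced, and restricting candidates to a neighbourhood of the already-located pupil centre is an assumption. Function names are illustrative.

```python
import numpy as np

def circular_mean(img, x0, y0, r, n=64):
    """Mean intensity on circle (x0, y0, r): the contour integral divided by 2*pi*r."""
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    xs = np.clip(np.rint(x0 + r * np.cos(t)).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(y0 + r * np.sin(t)).astype(int), 0, img.shape[0] - 1)
    return img[ys, xs].mean()

def gaussian_kernel(sigma):
    half = max(1, int(3 * sigma))
    x = np.arange(-half, half + 1)
    k = np.exp(-x**2 / (2.0 * sigma**2))
    return k / k.sum()

def integro_differential(img, centers, radii, sigma=1.0):
    """Brute-force search for the (x0, y0, r) maximising Daugman's operator."""
    radii = np.asarray(radii)
    kernel = gaussian_kernel(sigma)
    best_val, best = -np.inf, None
    for x0, y0 in centers:
        profile = np.array([circular_mean(img, x0, y0, r) for r in radii])
        deriv = np.diff(profile)                                 # d/dr of circular mean
        resp = np.abs(np.convolve(deriv, kernel, mode="same"))   # G_sigma * d/dr
        i = int(np.argmax(resp))
        if resp[i] > best_val:
            # deriv[i] is the jump between radii[i] and radii[i + 1]
            best_val, best = resp[i], (int(x0), int(y0), int(radii[i + 1]))
    return best
```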

3.2. Iris Verification Stage

3.2.1. Residual Modules
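The residual modules build on ResNet's identity-shortcut idea, $y = \mathrm{ReLU}(F(x) + x)$ (He et al.). The block configuration (channel counts, batch normalization, strides) is not given in this section, so the following is a generic NumPy sketch of a basic two-convolution residual block, not the authors' exact module; a deep learning framework would be used in practice.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3x3(x, w):
    """'Same' 3x3 convolution (cross-correlation, as in CNN libraries).
    x: (C_in, H, W), w: (C_out, C_in, 3, 3) -> (C_out, H, W)."""
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))               # zero padding keeps H, W
    win = sliding_window_view(xp, (3, 3), axis=(1, 2))     # (C_in, H, W, 3, 3)
    return np.einsum("chwij,ocij->ohw", win, w)

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, w2):
    """Basic residual block y = ReLU(F(x) + x) with F = conv-ReLU-conv.
    Batch normalization is omitted; the identity shortcut needs C_out == C_in."""
    out = relu(conv3x3(x, w1))
    out = conv3x3(out, w2)
    return relu(out + x)                                    # identity shortcut
```

Because the shortcut passes $x$ through unchanged, the block only has to learn the residual $F(x)$; with all-zero weights it reduces to $\mathrm{ReLU}(x)$.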

3.2.2. Residual Pooling Layer

- a. Residual Encoding Module

- b. Aggregation Module
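The residual pooling layer is adapted from the deep residual pooling network of Mao et al. Its exact equations are not reproduced in this section, so the sketch below is a hypothetical minimal version that only illustrates the two-stage structure. The residual encoding module keeps one residual per spatial position (here assumed to be the sigmoid of the difference between the features and a learnable 1×1 convolution of them), and the aggregation module pools those residuals into an orderless vector (here assumed to be global average pooling). Consult Mao et al. for the actual formulation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def residual_encoding(x, w):
    """Hypothetical residual encoding.
    x: (C, H, W) feature map, w: (C, C) weights of a learnable 1x1 convolution."""
    proj = np.einsum("oc,chw->ohw", w, x)   # 1x1 convolution, position by position
    return sigmoid(x - proj)                # residual, spatial layout preserved

def aggregation(r):
    """Orderless aggregation (assumed): global average pooling over H and W."""
    return r.mean(axis=(1, 2))              # (C,)

def residual_pooling(x, w):
    return aggregation(residual_encoding(x, w))
```

Because the aggregation averages over positions, the output does not depend on where a texture pattern occurs, a property suited to iris texture.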

3.3. Datasets

- (1)

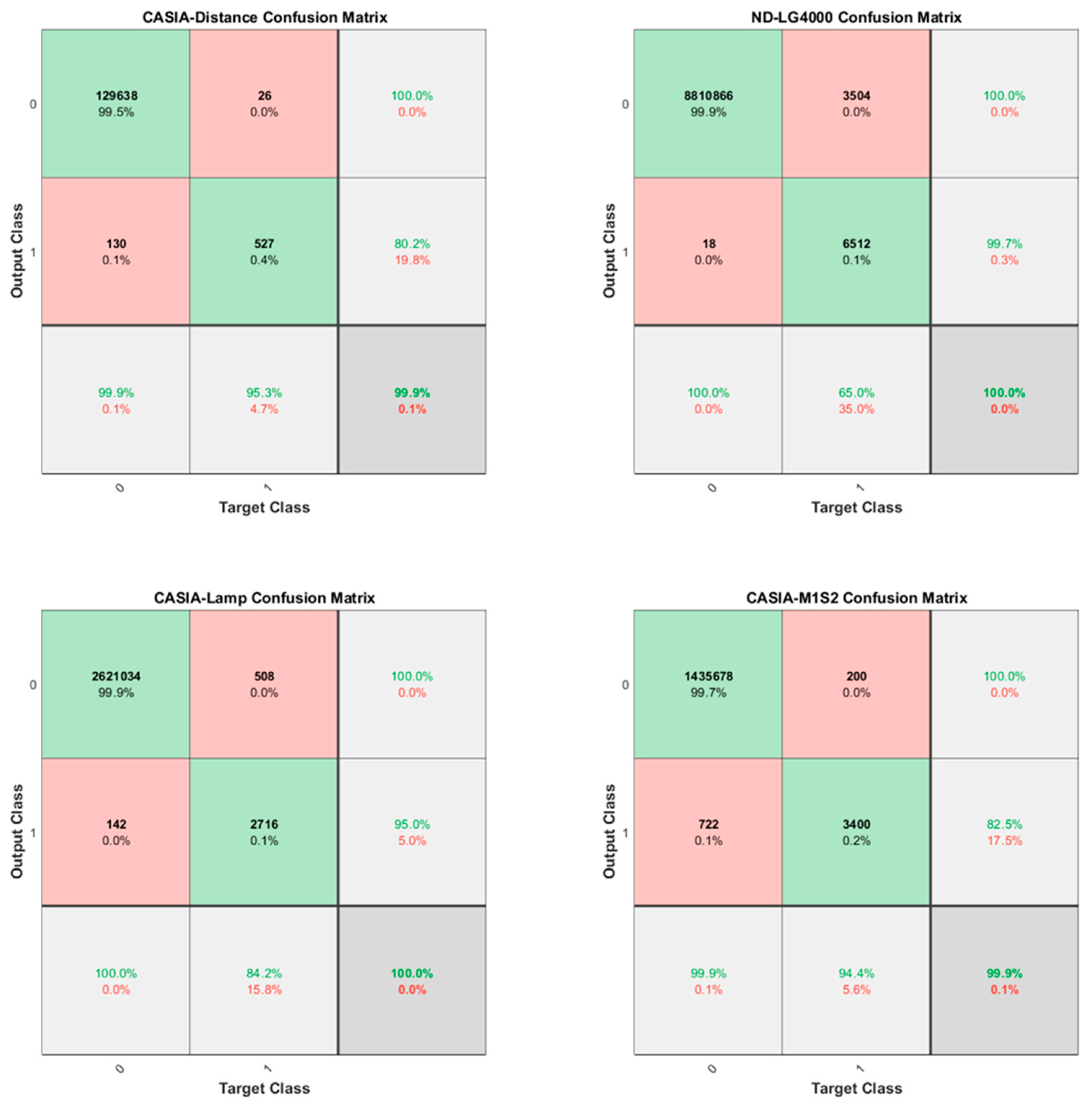

- CASIA.v4-distance: CASIA.v4-distance contains 2567 iris images from 142 subjects. Iris images were collected by the Chinese Academy of Sciences Institute of Automation (CASIA) using a CASIA long-range iris camera. The test set for the performance evaluation consists of 130,321 match scores.

- (2)

- ND-LG4000: ND-LG4000 contains 29,986 iris images acquired from 1352 subjects using an LG 4000 iris biometrics sensor. The test set for the performance evaluation consists of 8,810,866 match scores.

- (3)

- CASIA.v4-lamp: CASIA.v4-lamp contains 16,212 iris images from 819 subjects. This dataset was acquired by turning a lamp on/off near the subject to produce elastic deformation under different lighting conditions. The test set for the performance evaluation consists of 2,624,400 match scores.

- (4)

- CASIA-M1-S2: CASIA-M1-S2 contains 6000 iris images from 400 subjects. Images of this dataset are acquired from mobile devices. The test set for the performance evaluation consists of 1,440,000 match scores.

4. Experimental Results and Discussion

4.1. Experimental Environment

4.2. Discussion and Result Analysis

4.2.1. Investigation of Iris Localization

4.2.2. Evaluation of the Iris Verification Performance

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jain, A.K.; Ross, A.; Prabhakar, S. An introduction to biometric recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

- Noh, K.S. A study on the authentication and security of financial settlement using the finger vein technology in wireless internet environment. Wirel. Person. Commun. 2016, 89, 761–775.

- Fahmi, P.N.A.; Kodirov, E.; Choi, D.J.; Lee, G.-S.; Azli, A.M.F.; Sayeed, S. Implicit authentication based on ear shape biometrics using smartphone camera during a call. In Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics, Seoul, Korea, 14–17 October 2012; pp. 2272–2276.

- Jain, A.K.; Chen, H. Matching of dental X-ray images for human identification. Pattern Recognit. 2004, 37, 1519–1532.

- Gacsadi, I.; Buciu, A. Biometrics systems and technologies: A survey. Int. J. Comput. Commun. Control 2016, 11, 315–330.

- Shalaby, A.; Gad, R.; Hemdan, E.E.-D.; El-Fishawy, N. An efficient multi-factor authentication scheme based CNNs for securing ATMs over cognitive-IoT. PeerJ Comput. Sci. 2021, 7, e381.

- Liu, C.; Wang, J.; Peng, C.; Shyu, J. Evaluating and selecting the biometrics in network security. Secur. Commun. Netw. 2014, 8, 727–739.

- Daugman, J. High confidence visual recognition of persons by a test of statistical independence. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1148–1161.

- Wiecha, P.R.; Arbouet, A.; Girard, C.; Muskens, O.L. Deep learning in nano-photonics: Inverse design and beyond. Photonics Res. 2021, 9, B182–B200.

- Ren, Y.; Zhang, L.; Wang, W.; Wang, X.; Lei, Y.; Xue, Y.; Sun, X.; Zhang, W. Genetic-algorithm-based deep neural networks for highly efficient photonic device design. Photon. Res. 2021, 9, 247–252.

- Gao, L.; Chai, Y.; Zibar, D.; Yu, Z. Deep learning in photonics: Introduction. Photonics Res. 2021, 9, DLP1–DLP3.

- Li, Y.; Di, J.; Ren, L.; Zhao, J. Deep-learning-based prediction of living cells mitosis via quantitative phase microscopy. Chin. Opt. Lett. 2021, 19, 051701.

- Wong, I.H.M.; Zhang, Y.; Chen, Z.H.; Kang, L.; Wong, T.T.W. Slide-free histological imaging by microscopy with ultraviolet surface excitation using speckle illumination. Photonics Res. 2022, 10, 120–125.

- Ashalley, E.; Acheampong, K.; Besteir, L.V.; Yu, P.; Neogi, A.; Govorov, A.O.; Wang, Z.M. Multitask deep-learning-based design of chiral plasmonic metamaterials. Photonics Res. 2020, 8, 1213–1225.

- Ling, C.; Zhang, C.; Wang, M.; Meng, F.; Du, L.; Yuan, X. Fast structured illumination microscopy via deep learning. Photonics Res. 2020, 8, 1350–1359.

- Shah, Z.H.; Müller, M.; Wang, T.-C.; Scheidig, P.M.; Schneider, A.; Schüttpelz, M.; Huser, T.; Schenck, W. Deep-learning based denoising and reconstruction of super-resolution structured illumination microscopy images. Photonics Res. 2021, 9, B168–B181.

- Soonil, K. 1D-Cnn: Speech emotion recognition system using a stacked network with dilated CNN features. Comput. Mater. Contin. 2021, 67, 4039–4059.

- Anvarjon, T.; Mustaqeem; Kwon, S. Deep-net: A lightweight cnn-based speech emotion recognition system using deep frequency features. Sensors 2020, 20, 5212.

- Battistone, F.; Petrosino, A. TGLSTM: A time-based graph deep learning approach to gait recognition. Pattern Recognit. Lett. 2019, 126, 132–138.

- Zeng, F.; Hu, S.; Xiao, K. Research on partial fingerprint recognition algorithm based on deep learning. Neural Comput. Appl. 2019, 31, 4789–4798.

- Tursunov, A.; Choeh, J.Y.; Kwon, S. Age and gender recognition using a convolutional neural network with a specially designed multi-attention module through speech spectrograms. Sensors 2021, 21, 5892.

- Gangwar, A.; Joshi, A. DeepIrisNet: Deep iris representation with applications in iris recognition and cross-sensor iris recognition. In Proceedings of the IEEE International Conference Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2301–2305.

- Zhao, T.; Liu, Y.; Huo, G.; Zhu, X. A deep learning iris recognition method based on capsule network architecture. IEEE Access 2019, 7, 49691–49701.

- Minaee, S.; Abdolrashidiy, A.; Wang, Y. An experimental study of deep convolutional features for iris recognition. In Proceedings of the IEEE Signal Processing in Medicine Biology Symposium (SPMB), Philadelphia, PA, USA, 3 December 2016; pp. 1–6.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access 2018, 6, 18848–18855.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Mao, S.; Rajan, D.; Chia, L.T. Deep residual pooling network for texture recognition. Pattern Recognit. 2021, 112, 107817.

- Zhang, Q.; Li, H.; Sun, Z.; Tan, T. Deep feature fusion for iris and periocular biometrics on mobile devices. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2897–2912.

- Liu, N.; Zhang, M.; Li, H.; Sun, Z.; Tan, T. DeepIris: Learning pairwise filter bank for heterogeneous iris verification. Pattern Recognit. Lett. 2016, 82, 154–161.

- Zhao, Z.; Kumar, A. Towards more accurate iris recognition using deeply learned spatially corresponding features. In Proceedings of the IEEE International Conference Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3829–3838.

- Wang, K.; Kumar, A. Towards more accurate iris recognition using dilated residual features. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3233–3245.

- Noruzi, A.; Mahlouji, M.; Shahidinejad, A. Iris recognition in unconstrained environment on graphic processing units with CUDA. Artif Intell Rev. 2019, 53, 3705–3729.

- CASIA Iris Image Database. 2010. Available online: http://biometrics.idealtest.org/ (accessed on 25 July 2022).

- ND-CrossSensor-Iris-2013 dataset-LG4000. Available online: https://cvrl.nd.edu/projects/data/#nd-crosssensor-iris-2013-data-set (accessed on 25 July 2022).

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

| Database | Tp (s) | Ts (s) | Speedup |
|---|---|---|---|
| CASIA.v4-distance | 20.63 | 0.79 | 26x |
| ND-LG4000 | 41.26 | 1.16 | 36x |
| CASIA.v4-lamp | 23.13 | 0.73 | 32x |
| CASIA-M1-S2 | 13.31 | 0.64 | 21x |
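The Speedup column is consistent with Tp divided by Ts, rounded to the nearest integer. The short check below recomputes it from the table values (the dictionary and function names are illustrative).

```python
# Times copied from the table above, in seconds: (Tp, Ts) per database.
times = {
    "CASIA.v4-distance": (20.63, 0.79),
    "ND-LG4000": (41.26, 1.16),
    "CASIA.v4-lamp": (23.13, 0.73),
    "CASIA-M1-S2": (13.31, 0.64),
}

def speedup(tp, ts):
    """Ratio of the two measured times."""
    return tp / ts

for name, (tp, ts) in times.items():
    print(f"{name}: {speedup(tp, ts):.1f}x")
```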

| Database | Localization Accuracy |
|---|---|
| CASIA.v4-distance | 94% |
| ND-LG4000 | 90% |
| CASIA.v4-lamp | 91% |
| CASIA-M1-S2 | 85% |

| Recognition Approach | CASIA-Distance FRR@FAR = 0.01% | CASIA-Distance EER | ND-LG4000 FRR@FAR = 0.01% | ND-LG4000 EER | CASIA-Lamp FRR@FAR = 0.01% | CASIA-Lamp EER | CASIA-M1-S2 FRR@FAR = 0.01% | CASIA-M1-S2 EER |
|---|---|---|---|---|---|---|---|---|
| ResNet | 13.38% | 2.53% | 21.17% | 2.12% | 26.80% | 4.40% | 18.50% | 2.33% |
| Ours | 11.93% | 1.08% | 8.17% | 1.07% | 13.46% | 1.71% | 11.72% | 1.11% |

| Recognition Approach | CASIA-Distance FRR@FAR = 0.01% | CASIA-Distance EER | ND-LG4000 FRR@FAR = 0.01% | ND-LG4000 EER | CASIA-Lamp FRR@FAR = 0.01% | CASIA-Lamp EER | CASIA-M1-S2 FRR@FAR = 0.01% | CASIA-M1-S2 EER |
|---|---|---|---|---|---|---|---|---|
| ResNet-18 | 19.89% | 3.61% | 25.78% | 2.33% | 21.53% | 2.73% | 18.11% | 1.77% |
| MobileNet-v2 | 14.83% | 1.44% | 20.07% | 1.65% | 19.85% | 2.36% | 13.28% | 1.18% |
| Maxout-CNNs | 20.25% | 6.88% | 12.48% | 1.58% | 15.51% | 2.11% | 19.72% | 2.18% |
| Ours | 11.93% | 1.08% | 8.17% | 1.07% | 13.46% | 1.71% | 11.72% | 1.11% |
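Both comparison tables report the false rejection rate at a false acceptance rate of 0.01% (FRR@FAR = 0.01%) and the equal error rate (EER). The following is a minimal sketch of how these can be computed from genuine (same-iris) and impostor (different-iris) match scores, assuming higher scores mean greater similarity; for distance scores such as Hamming distance the comparisons would be reversed. Function names are illustrative.

```python
import numpy as np

def far_frr(genuine, impostor, thresholds):
    """FAR and FRR at each threshold; a comparison is accepted when score >= threshold."""
    g = np.sort(np.asarray(genuine, dtype=float))
    i = np.sort(np.asarray(impostor, dtype=float))
    t = np.asarray(thresholds, dtype=float)
    frr = np.searchsorted(g, t, side="left") / g.size        # genuine scores below t
    far = 1.0 - np.searchsorted(i, t, side="left") / i.size  # impostor scores at/above t
    return far, frr

def _thresholds(genuine, impostor):
    # Every observed score, plus +inf so that "reject everything" is a candidate.
    return np.append(np.unique(np.concatenate([genuine, impostor])), np.inf)

def eer(genuine, impostor):
    far, frr = far_frr(genuine, impostor, _thresholds(genuine, impostor))
    k = int(np.argmin(np.abs(far - frr)))    # threshold where the two curves cross
    return (far[k] + frr[k]) / 2.0

def frr_at_far(genuine, impostor, target_far=1e-4):  # 1e-4 corresponds to 0.01%
    far, frr = far_frr(genuine, impostor, _thresholds(genuine, impostor))
    return frr[far <= target_far].min()      # best FRR whose FAR stays within target
```

At FAR = 0.01% only one impostor comparison in 10,000 may be accepted, so a stable estimate needs far more than 10,000 impostor scores.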

| Recognition Approach | #Params | FLOPs |
|---|---|---|
| ResNet-18 | 14.53 MB | 2.24 G |
| MobileNet-v2 | 11.78 MB | 111.91 M |
| Maxout-CNNs | 10.12 MB | 1.27 G |
| Ours | 8.79 MB | 274.48 M |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, Y.; Zhang, X.; Zeng, A.; Huang, H. Iris Recognition Method Based on Parallel Iris Localization Algorithm and Deep Learning Iris Verification. Sensors 2022, 22, 7723. https://doi.org/10.3390/s22207723
