Pruning the Communication Bandwidth between Reinforcement Learning Agents through Causal Inference: An Innovative Approach to Designing a Smart Grid Power System

Abstract

1. Introduction

- (1) To apply a causal model to optimize communication selection in the smart grid problem and in collaborative agent tasks.

- (2) To formulate a graphical model that connects the control problem with causal inference and decides whether communication is necessary.

- (3) To show empirically that the proposed CICM model effectively reduces communication bandwidth in smart grid networks and collaborative agent tasks while improving task completion.

- (1) This is the first study to apply a causal model to optimize communication selection for collaborative agent tasks in the smart grid.

- (2) A new graphical model is developed that connects the control problem with causal inference and decides whether communication is necessary.

- (3) Numerical evidence is provided that the proposed CICM model effectively reduces communication bandwidth.

2. Related Works: Smart Grids, Communications in Cooperative Agents, Causal Inference

2.1. Smart Grid

2.2. Communications in Cooperative Agents

2.3. Causal Inference

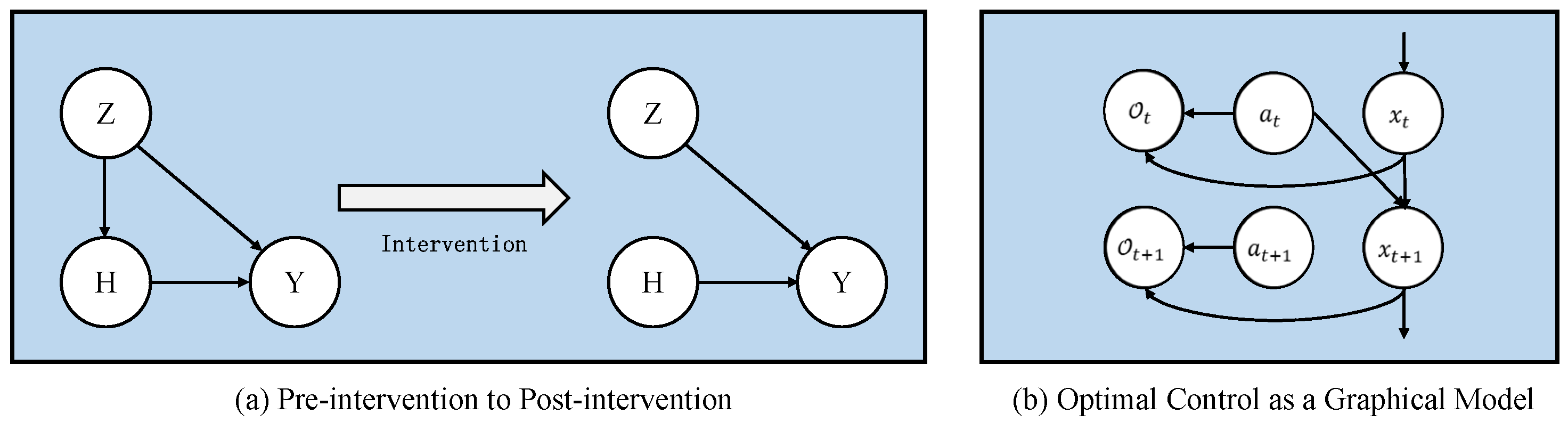

3. A Graphical Model: Preliminaries

3.1. Causal Models

3.2. Reinforcement Learning as a Graphical Model

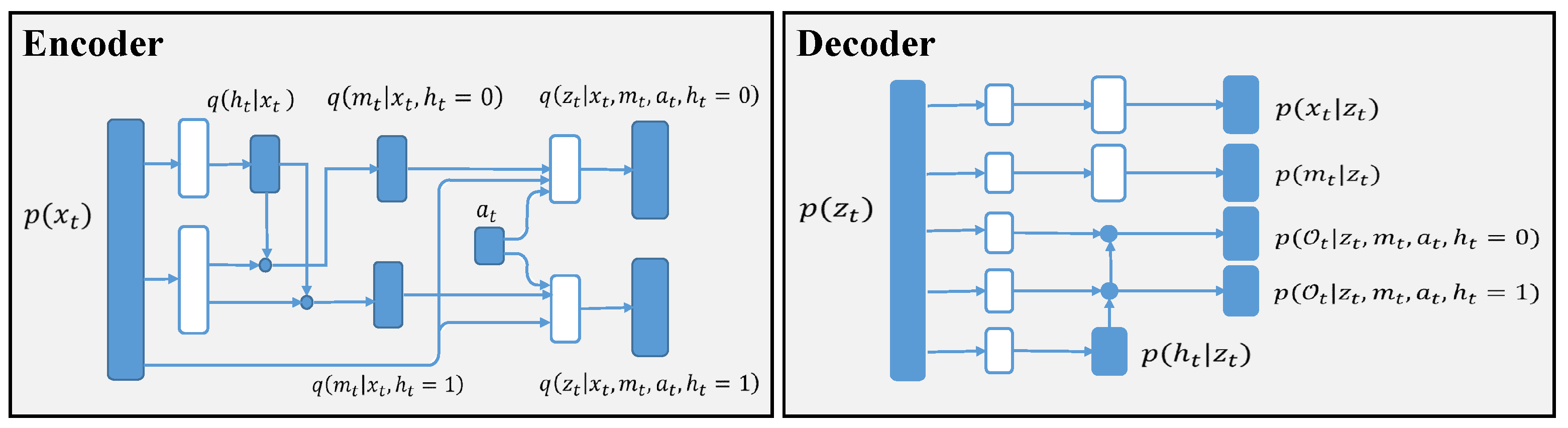

4. Method: The Causal Inference Communication Model (CICM)

4.1. Connecting Causal Model and Reinforcement Learning

4.2. Estimating Individual Treatment Effect

5. Experiments, Datasets and the Environment

5.1. Datasets and Environment

- Power Grid Problem

- Habitat

- StarCraft II Environment

5.2. Reward Structure and Evaluation Indicators

5.3. Network Architecture and Baseline Algorithm

6. Analysis of Experiment Results

6.1. Complexity Analysis

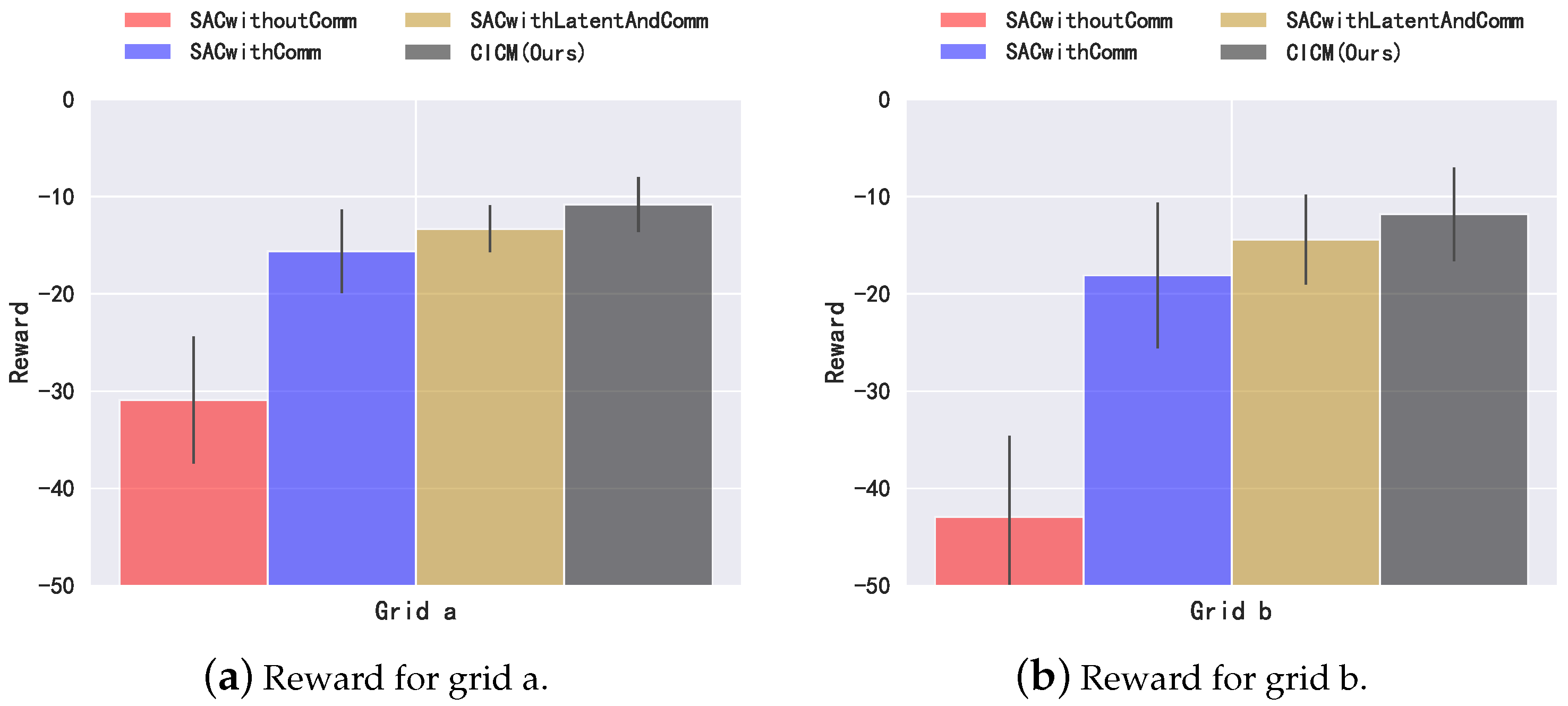

6.2. Power Grid Environment

6.3. StarCraft Experiment

6.4. Habitat Experiment

7. Discussion

7.1. Research Limitations

- We test only in a simulated DC power grid environment, which differs from a real production environment. A large gap remains between simulation and reality, and deploying algorithms trained in simulation to a real environment requires further work.

- Our algorithm considers only the counterfactual of causal inference in agents’ communication. It does not introduce cooperative mechanisms from multi-agent reinforcement learning into policy learning, nor does it consider credit assignment among multiple agents. Introducing these mechanisms in future work may further improve performance.

- Our algorithm prioritizes theoretical completeness and therefore combines latent variable models with the reinforcement learning objective function and causal inference. As a result, the model is relatively complex and its training is not very stable; follow-up research should address this instability.

7.2. Threat to Validity

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Derivation of the Latent Variable Model

Appendix B. Implementation and Experimental Details

| Parameter | Value |
|---|---|
| Critic learning rate | 0.005 |
| Actor learning rate | 0.005 |
| Batch size | 32 |
| Discount factor | 0.99 |
| VAE network learning rate | 0.0001 |
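
As a rough illustration, the hyperparameters in the table above could be collected in a single configuration object. This is a minimal sketch, not the authors' code: the class name `TrainingConfig` and all field names are hypothetical, and only the numeric values come from the table.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainingConfig:
    """Values copied from the hyperparameter table; names are illustrative."""

    critic_lr: float = 0.005   # critic learning rate
    actor_lr: float = 0.005    # actor learning rate
    batch_size: int = 32       # samples per update
    discount: float = 0.99     # discount factor
    vae_lr: float = 0.0001     # learning rate of the variational autoencoder network (last table row)


config = TrainingConfig()
print(asdict(config))
```

Freezing the dataclass keeps the values from being changed accidentally during a run, which makes it easier to report the exact settings used.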

- NoCom (i.e., “No communication”): the navigation agent acts without relying on the oracle agent; this serves as the model without communication.

- U-Comm (i.e., “Unstructured communication”): the agent delivers real-valued vector messages. The oracle’s information is encoded directly by a linear layer to form the message, which is sent to the navigation agent.

- S-Comm (i.e., “Structured communication”): the agent has a vocabulary consisting of K words, implemented as a learnable embedding. The message consists of probabilities over these words, obtained by passing the information through linear and softmax layers. On the receiving end, the agent decodes the incoming message probabilities by linearly combining its word embeddings, using the probabilities as weights.

- S-Comm&CIC (i.e., “Structured communication with Causal Inference model”): our causal inference network combined with S-Comm.

- U-Comm&CIC (i.e., “Unstructured communication with Causal Inference model”): our causal inference network combined with U-Comm.

- SACwithoutComm: the SAC model without communication.

- SACwithComm: the SAC algorithm with communication only.

- SACwithLatentAndComm: the SAC algorithm extended with communication and a VAE-based latent variable model.

- CICM (our method): combines causal inference with the VAE model.
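
The structured communication (S-Comm) baseline described above can be sketched in a few lines. The following is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the sizes `K`, `IN_DIM` and `EMB_DIM`, the absence of a bias term, and the random weights (standing in for learned parameters) are all hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, vector):
    """Matrix-vector product: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def encode_message(sender_weights, info):
    """Sender side: a linear layer followed by softmax yields K word probabilities."""
    return softmax(linear(sender_weights, info))


def decode_message(embeddings, probs):
    """Receiver side: probability-weighted sum of the K word embeddings."""
    dim = len(embeddings[0])
    return [sum(p * emb[d] for p, emb in zip(probs, embeddings)) for d in range(dim)]


# Hypothetical sizes: 4 words, 3 input features, 2-dimensional embeddings.
rng = random.Random(0)
K, IN_DIM, EMB_DIM = 4, 3, 2
sender_weights = [[rng.uniform(-1, 1) for _ in range(IN_DIM)] for _ in range(K)]
embeddings = [[rng.uniform(-1, 1) for _ in range(EMB_DIM)] for _ in range(K)]

probs = encode_message(sender_weights, [0.5, -1.0, 2.0])
decoded = decode_message(embeddings, probs)
print(probs, decoded)
```

Because the receiver takes a weighted average rather than picking a single word, the whole path stays differentiable, which is presumably why the message is kept as probabilities instead of a discrete symbol.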


| Distributor 1 | Distributor 2: Halve | Distributor 2: Same | Distributor 2: Double |
|---|---|---|---|
| Halve | Halve | Halve | Same |
| Same | Halve | Same | Double |
| Double | Same | Double | Double |

| Environment | Grid a | Grid b |
|---|---|---|
| Communication probability | 37.4% | 32.9% |

| Communication penalty | −0.05 | −0.15 | −0.20 | −0.5 | −1.0 | −1.5 | −2.0 |
|---|---|---|---|---|---|---|---|
| Communication probability | 48.8% | 45.0% | 46.3% | 38.0% | 32.7% | 29.1% | 27.4% |
| Test result | 1.83 | 1.87 | 1.89 | 1.93 | 1.85 | 1.79 | 1.75 |

| Method | PROGRESS (%), 1-ON | PROGRESS (%), 2-ON | PROGRESS (%), 3-ON | PPL (%), 1-ON | PPL (%), 2-ON | PPL (%), 3-ON |
|---|---|---|---|---|---|---|
| NoCom | 56 | 39 | 26 | 35 | 26 | 16 |
| U-Comm | 87 | 77 | 63 | 60 | 51 | 39 |
| S-Comm | 85 | 80 | 70 | 67 | 59 | 50 |
| U-Comm&CIC | 84 | 76 | 64 | 57 | 49 | 40 |
| S-Comm&CIC | 86 | 78 | 70 | 67 | 57 | 51 |

| Communication penalty | −0.001 | −0.01 | −0.1 |
|---|---|---|---|
| Communication probability | 38.3% | 34.8% | 29.5% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Liu, Y.; Li, W.; Gong, C. Pruning the Communication Bandwidth between Reinforcement Learning Agents through Causal Inference: An Innovative Approach to Designing a Smart Grid Power System. Sensors 2022, 22, 7785. https://doi.org/10.3390/s22207785
