Design and Calibration of Plane Mirror Setups for Mobile Robots with a 2D-Lidar

Abstract

1. Introduction

1.1. Lidar Sensors on Vehicles

1.2. Previous Work on Lidar Sensors with Reshaped FOV

1.3. Aim

2. Materials and Methods

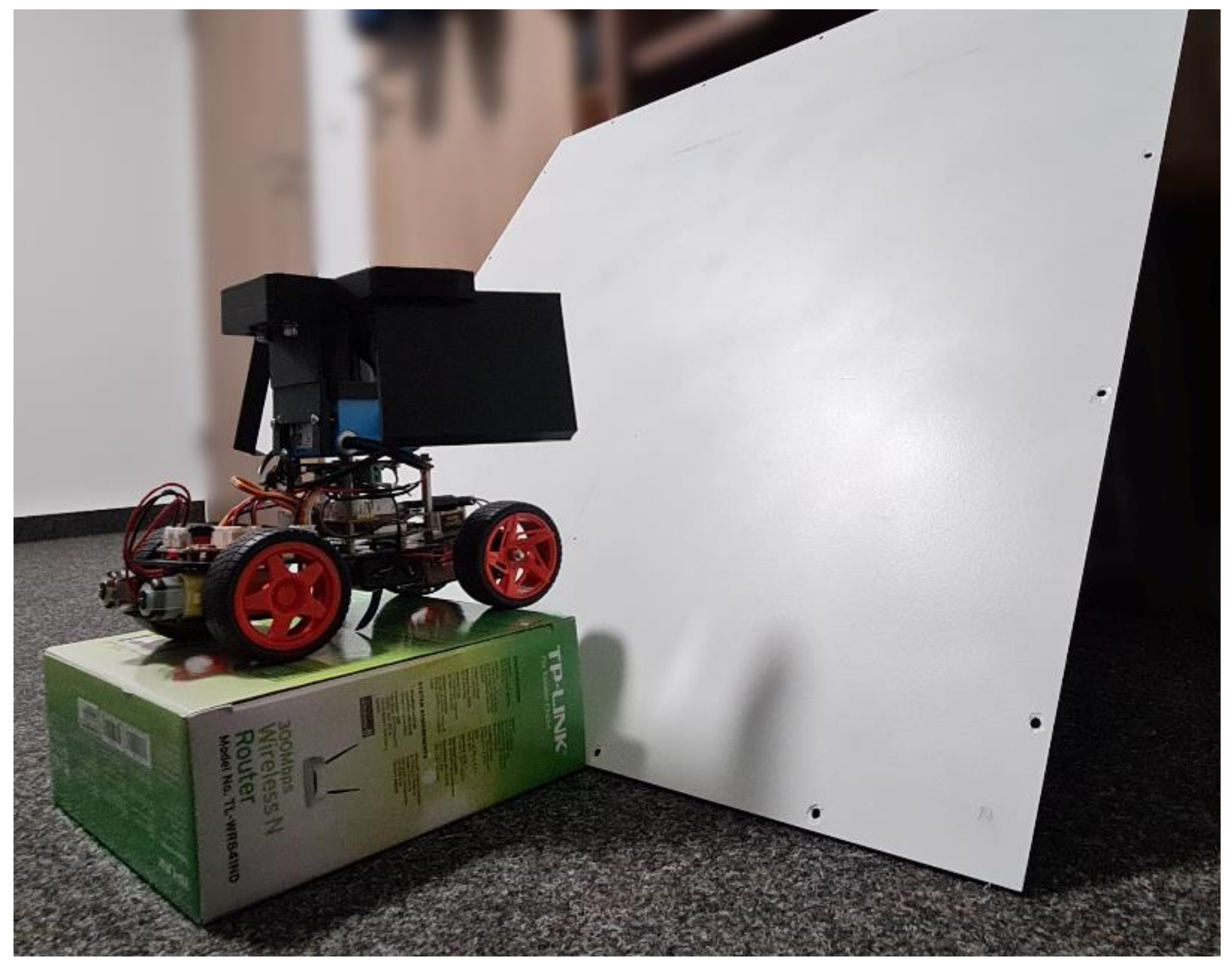

2.1. Prototype Vehicle

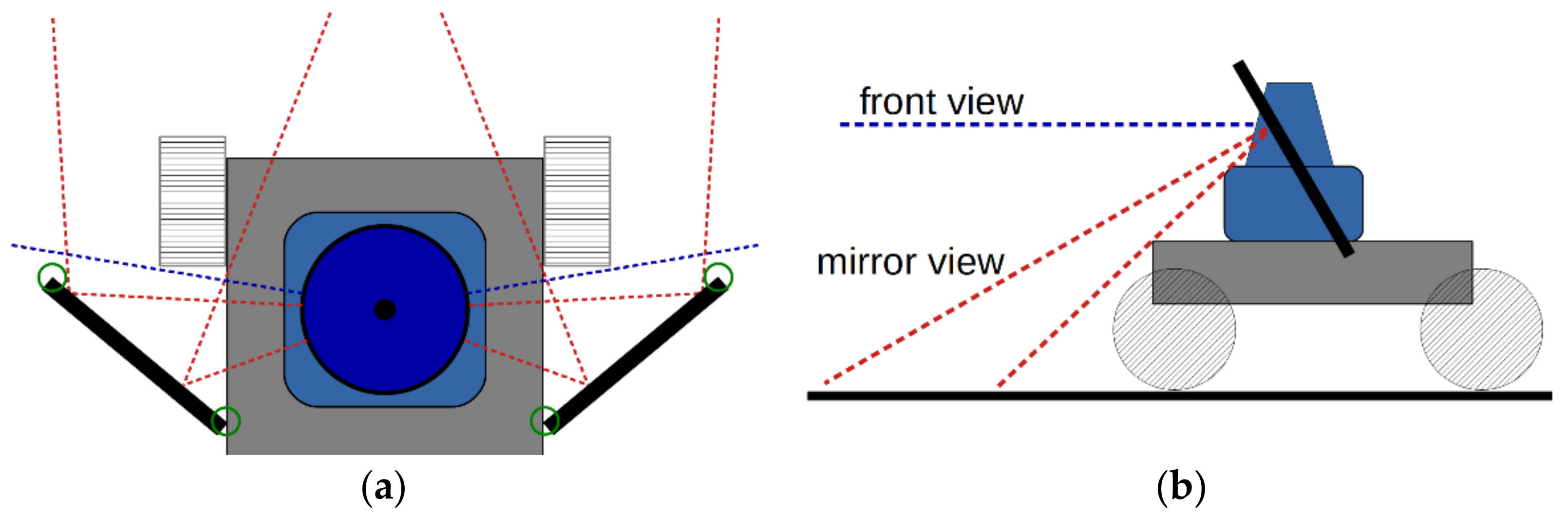

2.2. Geometric Position and Orientation of Mirrors

2.3. Point Cloud Transformation Algorithm

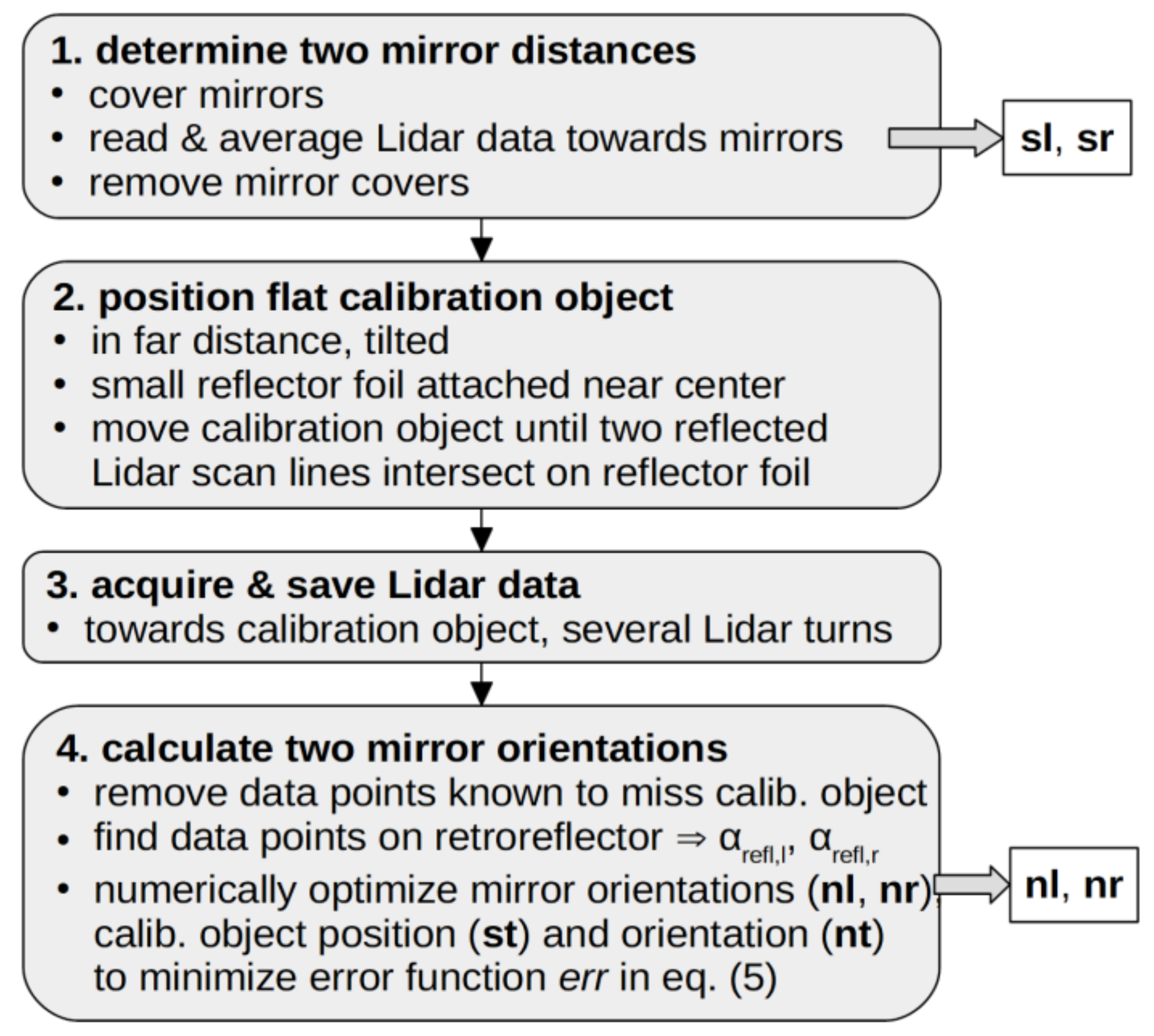

2.4. Calibration Setup and Procedure

- Determine the distance between each mirror and the Lidar along a fixed direction;

- Position the flat calibration object in front of the Lidar;

- Acquire and save Lidar distance data on this flat calibration object;

- Calculate the position and orientation of both mirrors that fit the data best.
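Step 4 of this procedure is a nonlinear least-squares fit. The sketch below shows the idea for a single mirror under strong simplifications, all of which are assumptions and not the article's exact method:

- The mirror is a plane with normal (n_x, n_y, −1) through (d, 0, 0), and d is known from step 1.
- The target plane is treated as known rather than fitted.
- The data are synthetic, produced by a hypothetical forward model `simulate_ranges`.
- The optimizer is SciPy's Nelder-Mead minimizer `scipy.optimize.fmin`, which is an assumed choice.

The cost is the mean squared distance of the transformed points to the target plane.

```python
import numpy as np
from scipy.optimize import fmin


def reflect(points, n, s):
    """Reflect points (N x 3) across the plane through s with normal n."""
    k = (points - s) @ n / (n @ n)
    return points - 2.0 * k[:, None] * n


def plane_distances(points, n, s):
    """Signed distances of points (N x 3) to the plane through s with normal n."""
    return (points - s) @ n / np.linalg.norm(n)


def beam_directions(angles):
    """Unit beam directions in the assumed scan plane z = 0."""
    return np.stack([np.cos(angles), np.sin(angles), np.zeros_like(angles)], axis=1)


def simulate_ranges(angles, d, n_mirror, n_target, s_target):
    """Hypothetical forward model: path length Lidar -> mirror -> target."""
    s_mirror = np.array([d, 0.0, 0.0])
    u = beam_directions(angles)
    t = (s_mirror @ n_mirror) / (u @ n_mirror)                # Lidar to mirror
    hit = t[:, None] * u
    n_hat = n_mirror / np.linalg.norm(n_mirror)
    u_ref = u - 2.0 * (u @ n_hat)[:, None] * n_hat            # reflected beam
    tau = ((s_target - hit) @ n_target) / (u_ref @ n_target)  # mirror to target
    return t + tau


def mean_sq_distance(params, angles, ranges, d, n_target, s_target):
    """Cost: mean squared distance of the reflected points to the target plane."""
    n_mirror = np.array([params[0], params[1], -1.0])
    virtual = ranges[:, None] * beam_directions(angles)       # straight-line points
    points = reflect(virtual, n_mirror, np.array([d, 0.0, 0.0]))
    return np.mean(plane_distances(points, n_target, s_target) ** 2)


# Synthetic example; all numbers are made up for illustration.
angles = np.deg2rad(np.linspace(-20.0, 20.0, 41))
d = 83.0                                  # mirror distance from step 1, in mm
n_true = np.array([1.0, -0.5, -1.0])      # mirror normal to be recovered
n_target = np.array([0.0, -1.0, -1.0])    # known target plane (simplification)
s_target = np.array([83.0, 150.0, 0.0])
ranges = simulate_ranges(angles, d, n_true, n_target, s_target)

best = fmin(mean_sq_distance, x0=[0.9, -0.4],
            args=(angles, ranges, d, n_target, s_target),
            xtol=1e-10, ftol=1e-14, maxiter=5000, maxfun=5000, disp=False)
rms = np.sqrt(mean_sq_distance(best, angles, ranges, d, n_target, s_target))
print(best, rms)
```

The synthetic ranges come from the same geometry, so the residual RMS distance at the optimum should be close to zero. With real data, the remaining RMS distance is the quality criterion used in Section 3.3. A full calibration would extend `params` with the second mirror and the target plane parameters.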

- Normal vector of the right mirror surface: $\mathbf{n}_r = (n_{rx}, n_{ry}, -1)$;

- Normal vector of the left mirror surface: $\mathbf{n}_l = (n_{lx}, n_{ly}, -1)$;

- Normal vector of the flat calibration target: $\mathbf{n}_t = (n_{tx}, -1, n_{tz})$;

- Support vector of the flat calibration target: $\mathbf{s}_t = (s_{tx}, s_{ty}, s_{tz})$; this vector was also taken to be the position vector of the retroreflector foil on the target.

- Support vector of the right mirror surface: $\mathbf{s}_r = (d_r, 0, 0)$;

- Support vector of the left mirror surface: $\mathbf{s}_l = (-d_l, 0, 0)$.
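The point cloud transformation of Section 2.3 can be sketched with these vectors. For a beam that hits a plane mirror, the Lidar reports the total folded path length. Placing the point at that range along the original beam direction and mirroring it at the mirror plane therefore gives the true 3D point. This uses the standard reflection identity p' = p − 2((p − s)·n / |n|²) n, where n and s are the mirror's normal and support vectors listed above.

The sketch also relies on several assumptions that are not taken from the article:

- The scan lies in the plane z = 0.
- Beam angles are measured from the +x axis.
- Fixed angular sectors (the hypothetical parameters `right_sector` and `left_sector`) decide which beams hit which mirror.

```python
import numpy as np


def reflect_across_plane(points, n, s):
    """Mirror points (N x 3) at the plane through support vector s with normal n."""
    n = np.asarray(n, dtype=float)
    s = np.asarray(s, dtype=float)
    k = (points - s) @ n / (n @ n)  # signed offset from the plane, in units of n
    return points - 2.0 * k[:, None] * n


def transform_scan(angles, ranges, n_r, d_r, n_l, d_l, right_sector, left_sector):
    """Hypothetical point cloud transformation for one 2D-Lidar scan.

    angles: beam angles in rad in the assumed scan plane z = 0, measured from +x
    ranges: measured distances in mm (total folded path for mirrored beams)
    right_sector, left_sector: assumed (min, max) angle intervals hitting each mirror
    """
    angles = np.asarray(angles, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    u = np.stack([np.cos(angles), np.sin(angles), np.zeros_like(angles)], axis=1)
    points = ranges[:, None] * u  # straight-line ("unfolded") points
    on_right = (angles >= right_sector[0]) & (angles <= right_sector[1])
    on_left = (angles >= left_sector[0]) & (angles <= left_sector[1])
    # Beams that hit a mirror are folded back by reflecting at that mirror plane;
    # direct-view beams are left unchanged.
    points[on_right] = reflect_across_plane(points[on_right], n_r, [d_r, 0.0, 0.0])
    points[on_left] = reflect_across_plane(points[on_left], n_l, [-d_l, 0.0, 0.0])
    return points


# Toy example: two 45-degree mirrors 80 mm to the right and left of the Lidar.
angles = np.deg2rad([0.0, 90.0, 180.0])
ranges = np.array([100.0, 400.0, 100.0])
pts = transform_scan(angles, ranges,
                     n_r=[1.0, 0.0, -1.0], d_r=80.0,
                     n_l=[-1.0, 0.0, -1.0], d_l=80.0,
                     right_sector=np.deg2rad([-10.0, 10.0]),
                     left_sector=np.deg2rad([170.0, 190.0]))
print(np.round(pts, 6))
```

In the toy example, the direct-view beam at 90° stays at (0, 400, 0). Each 100 mm beam that reaches a mirror after 80 mm ends 20 mm along +z from its hit point, at (±80, 0, 20). Whether mirrored beams end up above or below the scan plane depends on the sign conventions, which are assumed here.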

3. Results

3.1. Prototype Vehicle Performance

- Direct view (no mirror): mean distance 403.2 mm, standard deviation 1.9 mm;

- View reflected by mirror: mean distance 400.4 mm, standard deviation 2.1 mm.

- Direct view (no mirror), less object roughness: mean distance 404.5 mm, standard deviation 4.5 mm.

3.2. Geometric Design Results

- Each mirror covers the near ground on the opposite side;

- Each mirror covers the near ground on the same side;

- Angular FOV in the forward direction (parameter α) as large as possible;

- Total width of the vehicle (parameter L1) as small as possible;

- Width of the mirrors as small as possible.

3.3. Calibration Results and Verification

- Setup A: target tilted ~45° around x-axis, ~0° around z-axis, distance ~300 mm;

- Setup B: target tilted ~30° around x-axis, ~0° around z-axis, distance ~300 mm;

- Setup C: target tilted ~45° around x-axis, ~20° around z-axis, distance ~300 mm;

- Setup D/E/F: same orientation as Setup A/B/C, larger distance ~400 mm;

- Setup G/H/I: same orientation as Setup A/B/C, larger distance ~600 mm.

- The RMS absolute distance between the acquired points and the calibration target plane; see Equation (6). All Lidar rotation angles that produced data on the calibration target, as well as the data from several turns of the spinning Lidar, were used to calculate this criterion.

- The arithmetic mean intensity value of the acquired points on the calibration target, in received signal strength indicator (RSSI) values as given by the Lidar sensor.

- The number of successfully acquired data points, given as a percentage of the number of measured data points. (The Lidar sensor can mark return data as invalid.)

- The standard deviation of the object distances measured in one fixed direction directly in front of the vehicle.
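The four criteria above could be computed from one calibration run roughly as follows. This sketch makes two assumptions. First, it takes Equation (6) to be the usual RMS of point-to-plane distances, d_i = (p_i − s_t)·n_t / |n_t|. Second, it uses the sample standard deviation (ddof=1) for the front distance. The helper name `calibration_criteria` is hypothetical.

```python
import numpy as np


def calibration_criteria(points, n_t, s_t, intensities, valid, front_ranges):
    """Quality criteria for one calibration result (hypothetical helper).

    points: transformed points on the target, shape (N, 3), in mm
    n_t, s_t: normal and support vector of the target plane
    intensities: RSSI values of the points on the target
    valid: one boolean flag per measured data point
    front_ranges: repeated distances in one fixed direction in front of the vehicle
    """
    n_t = np.asarray(n_t, dtype=float)
    s_t = np.asarray(s_t, dtype=float)
    # Signed point-to-plane distances, then their root mean square.
    dist = (np.asarray(points, dtype=float) - s_t) @ n_t / np.linalg.norm(n_t)
    return {
        "rms_distance": float(np.sqrt(np.mean(dist ** 2))),
        "mean_intensity": float(np.mean(intensities)),
        "valid_percent": 100.0 * float(np.mean(valid)),
        # Sample standard deviation (ddof=1) is an assumption.
        "front_std": float(np.std(front_ranges, ddof=1)),
    }


# Toy data: target plane y = 300 mm, written in the (n_tx, -1, n_tz) form.
points = [[0.0, 299.0, 0.0], [0.0, 301.0, 0.0], [5.0, 299.0, 10.0], [-5.0, 301.0, -10.0]]
criteria = calibration_criteria(points, n_t=[0.0, -1.0, 0.0], s_t=[0.0, 300.0, 0.0],
                                intensities=[140, 150, 140, 150],
                                valid=[True, True, True, False],
                                front_ranges=[400.0, 402.0, 404.0])
print(criteria)
```

All four toy points lie 1 mm in front of or behind the plane, so the RMS distance is 1 mm. The mean intensity is 145, 75 % of the points are valid, and the front distances 400, 402 and 404 mm have a sample standard deviation of 2 mm.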

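- Distance of the right mirror: $d_r = (83.3 \pm 1.9)\,\mathrm{mm}$;

- Distance of the left mirror: $d_l = (82.5 \pm 1.9)\,\mathrm{mm}$.

- Left mirror: $\beta_l = 23.74^\circ \pm 0.29^\circ$, $\delta_l = 33.62^\circ \pm 0.57^\circ$;

- Right mirror: $\beta_r = -24.76^\circ \pm 0.18^\circ$, $\delta_r = 32.64^\circ \pm 0.55^\circ$.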

4. Discussion and Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Geometry Parameter | α | β | γ | L1 | L2 | L3 | L4 |
|---|---|---|---|---|---|---|---|
| CAD value used in prototype vehicle | 110.2° | 25.1° | 73.5° | 214.4 mm | 81.1 mm | 419.8 mm | 100 mm |

| FOV Position Value on Ground in Front of Vehicle | Theoretical CAD Value | Measurement Result |
|---|---|---|
| min. distance L2, left side of vehicle | 81.1 mm | 53 mm |
| min. distance L2, right side of vehicle | 81.1 mm | 62 mm |
| max. distance L3, left side of vehicle | 419.8 mm | 368 mm |
| max. distance L3, right side of vehicle | 419.8 mm | 425 mm |

| Design Goal | α | β | γ | L1 | L2 | L3 | L4 |
|---|---|---|---|---|---|---|---|
| 1 | 110.2° | 25.1° | 73.5° | 214.4 mm | 81.1 mm | 419.8 mm | 100 mm |
| 2 | 145.4° | 45.0° | 73.0° | 220.8 mm | 96.7 mm | 337.1 mm | 100 mm |
| 3 | 180.0° | 46.2° | 67.0° | 223.3 mm | 59.6 mm | 237.0 mm | 34.8 mm |
| 4 | 135.6° | 29.8° | 73.0° | 159.3 mm | 85.0 mm | 239.0 mm | 100 mm |
| 5 | 144.6° | 38.0° | 70.0° | 167.9 mm | 182.8 mm | 290.4 mm | 34.8 mm |

| Calibration Setup | RMS Distance | Mean Intensity (RSSI) | Distance St. Dev. Front | Right Mirror βr | Right Mirror δr | Left Mirror βl | Left Mirror δl |
|---|---|---|---|---|---|---|---|
| CAD (theory) | - | - | - | −25.07° | 34.96° | 25.07° | 34.96° |
| A | 1.05 mm | 142.46 | 1.25 mm | −26.73° | 15.25° | 24.99° | 15.82° |
| | 1.22 mm | 142.29 | 1.19 mm | −25.34° | 25.29° | 23.76° | 26.18° |
| B | 0.55 mm | 143.54 | 0.97 mm | −26.83° | 9.82° | 24.97° | 10.25° |
| | 0.40 mm | 140.50 | 0.79 mm | −26.95° | 6.07° | 25.12° | 6.32° |
| C | 1.18 mm | 156.25 | 1.17 mm | −24.57° | 33.37° | 23.36° | 34.48° |
| | 1.30 mm | 155.25 | 1.19 mm | −24.67° | 32.39° | 23.47° | 33.49° |
| D | 1.17 mm | 159.73 | 0.98 mm | −25.37° | 29.51° | 23.83° | 30.55° |
| | 1.18 mm | 158.44 | 0.89 mm | −25.07° | 30.58° | 23.66° | 31.61° |
| E | 1.07 mm | 158.68 | 0.70 mm | −25.52° | 28.86° | 23.73° | 30.00° |
| | 1.03 mm | 158.25 | 0.76 mm | −25.14° | 31.19° | 23.48° | 32.33° |
| F | 1.53 mm | 175.41 | 0.74 mm | −23.45° | 37.37° | 23.63° | 37.25° |
| | 1.35 mm | 174.06 | 0.83 mm | −23.61° | 37.03° | 23.56° | 36.97° |
| G | 1.65 mm | 178.87 | 1.18 mm | −24.44° | 28.91° | 23.10° | 30.08° |
| | 1.05 mm | 180.81 | 1.11 mm | −24.63° | 22.29° | 24.32° | 22.84° |
| H | 1.11 mm | 168.35 | 1.06 mm | −24.60° | 33.30° | 24.01° | 34.21° |
| | 1.03 mm | 168.86 | 1.06 mm | −24.79° | 32.44° | 24.10° | 33.35° |
| I | 1.23 mm | 170.79 | 0.90 mm | −25.00° | 32.18° | 23.72° | 33.22° |
| | 1.30 mm | 170.69 | 0.69 mm | −24.93° | 32.14° | 23.75° | 33.16° |

| Calibration Setup | Verification Setup | RMS Distance | Distance St. Dev. Front | Plane Object Angle 90° + βtarget | Plane Object Angle δtarget |
|---|---|---|---|---|---|
| C | D (~45°, ~0°) | 1.27 mm | 0.98 mm | 39.60° | −2.15° |
| H | I (~45°, ~20°) | 1.57 mm | 0.69 mm | 36.56° | 21.67° |
| H | A (~45°, ~0°) | 1.55 mm | 1.25 mm | 39.42° | 1.38° |
| I | H (~30°, ~0°) | 1.10 mm | 1.06 mm | 25.97° | −0.60° |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kibii, J.E.; Dreher, A.; Wormser, P.L.; Gimpel, H. Design and Calibration of Plane Mirror Setups for Mobile Robots with a 2D-Lidar. Sensors 2022, 22, 7830. https://doi.org/10.3390/s22207830