A Novel Adaptive Deskewing Algorithm for Document Images

Abstract

1. Introduction

2. Related Works

3. Method

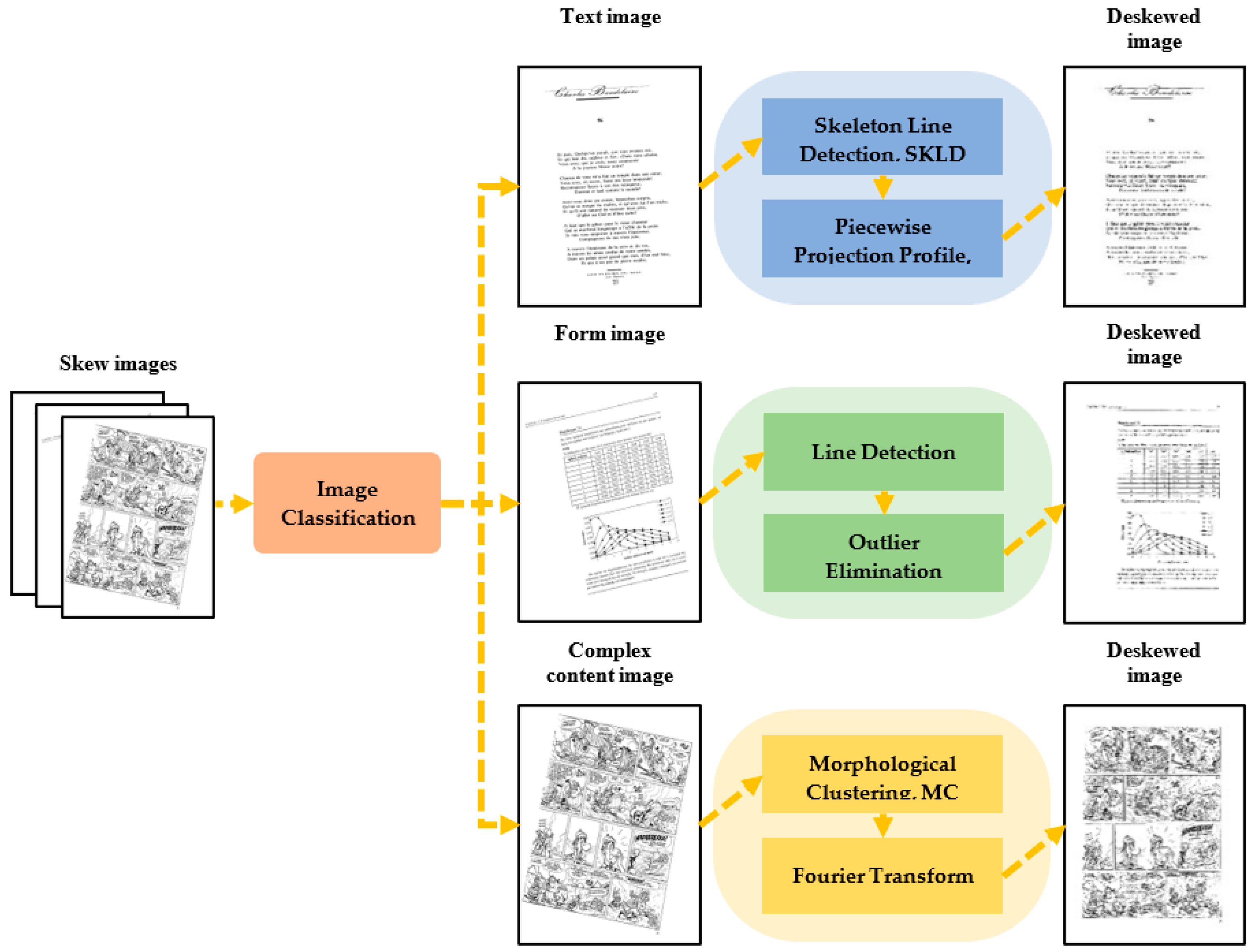

3.1. Algorithm Overview

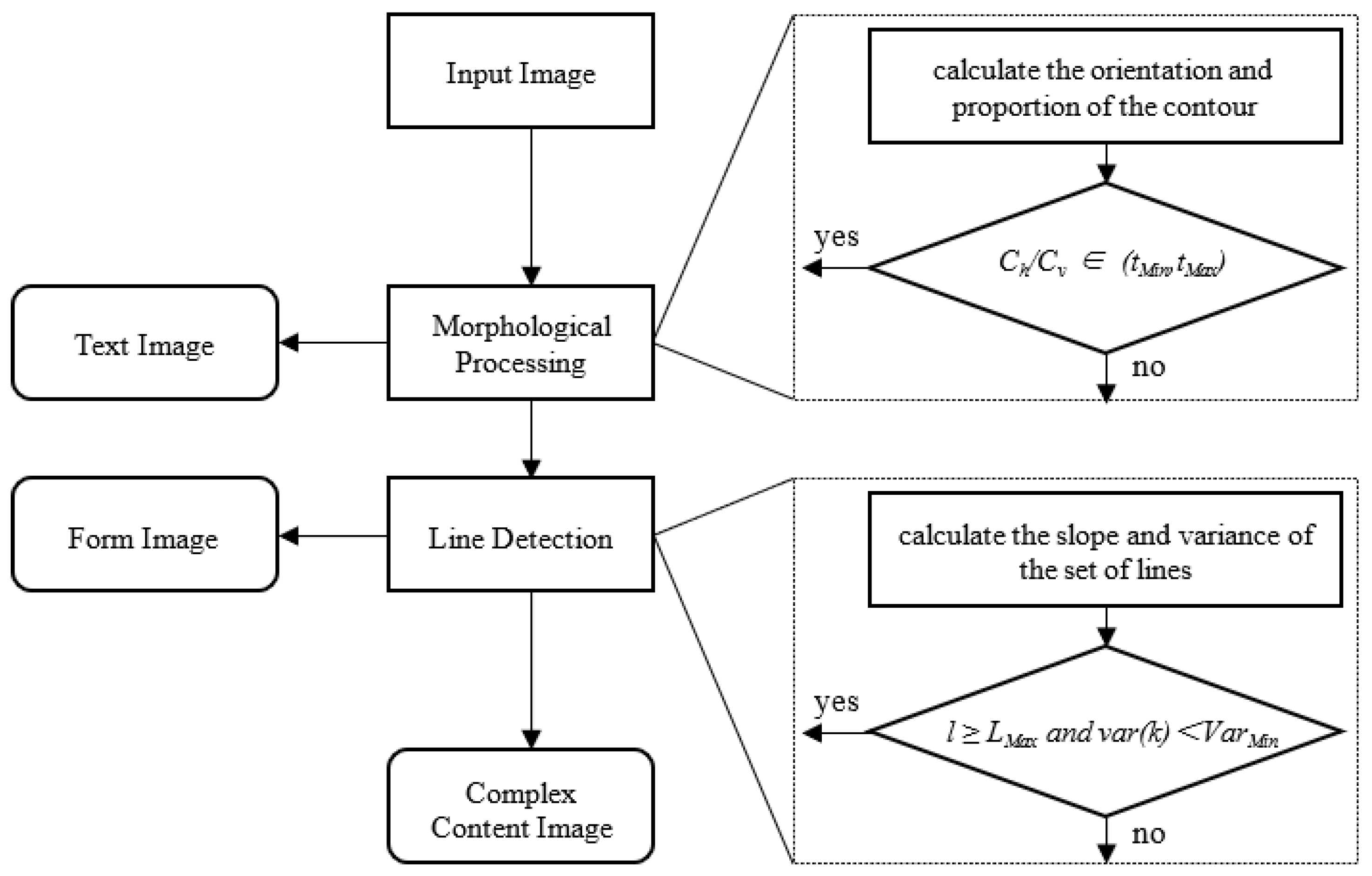

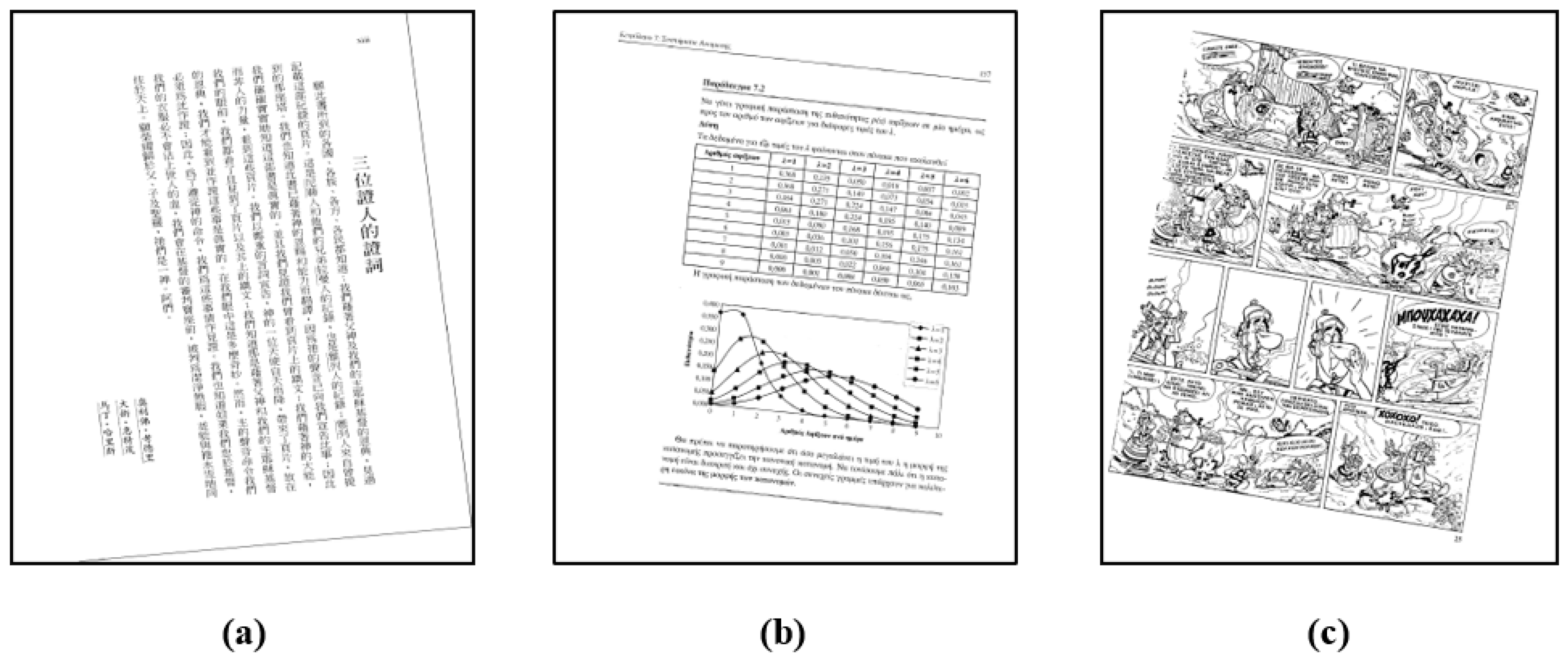

3.2. Image Classification

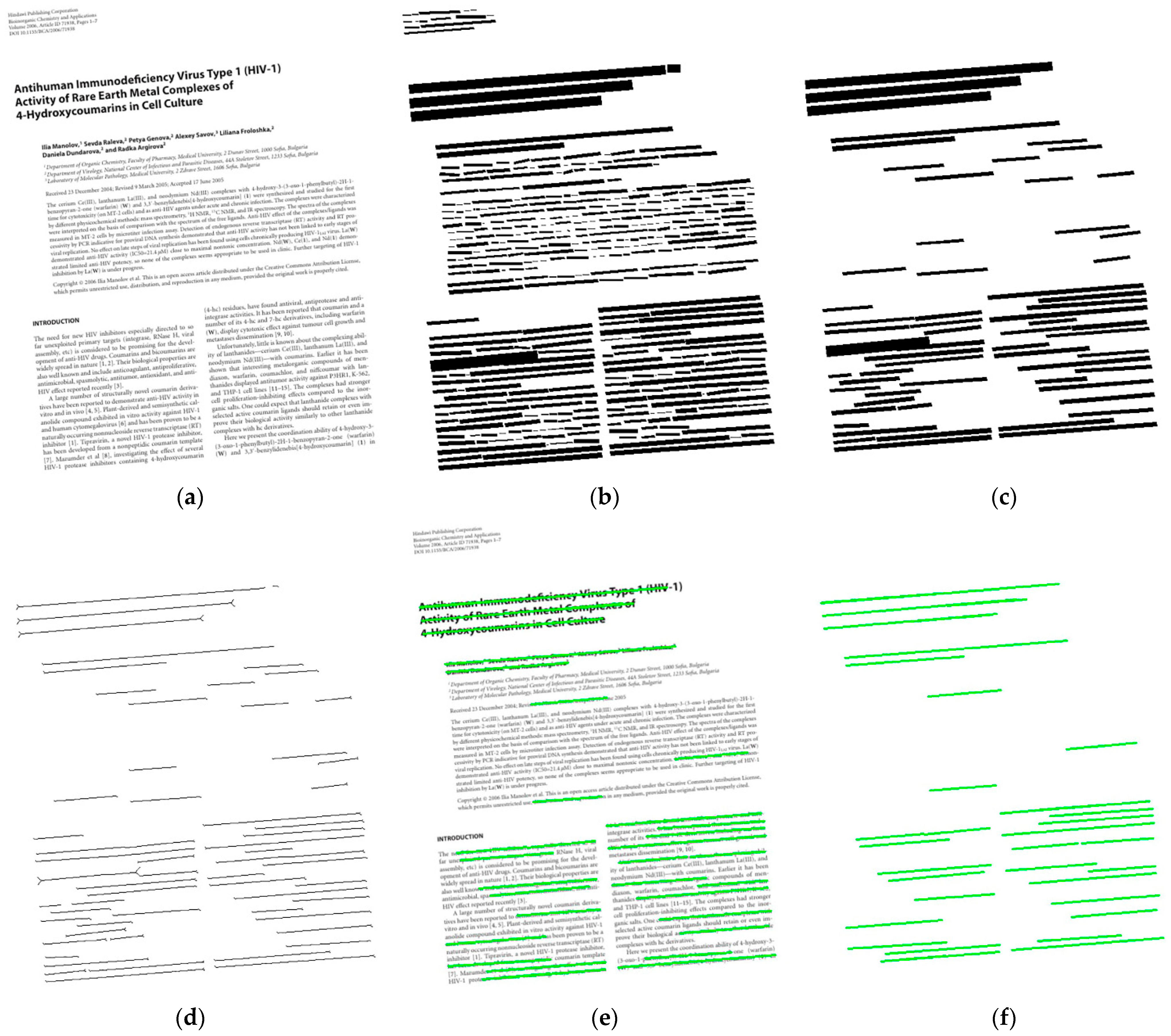

3.3. Text Image Correction

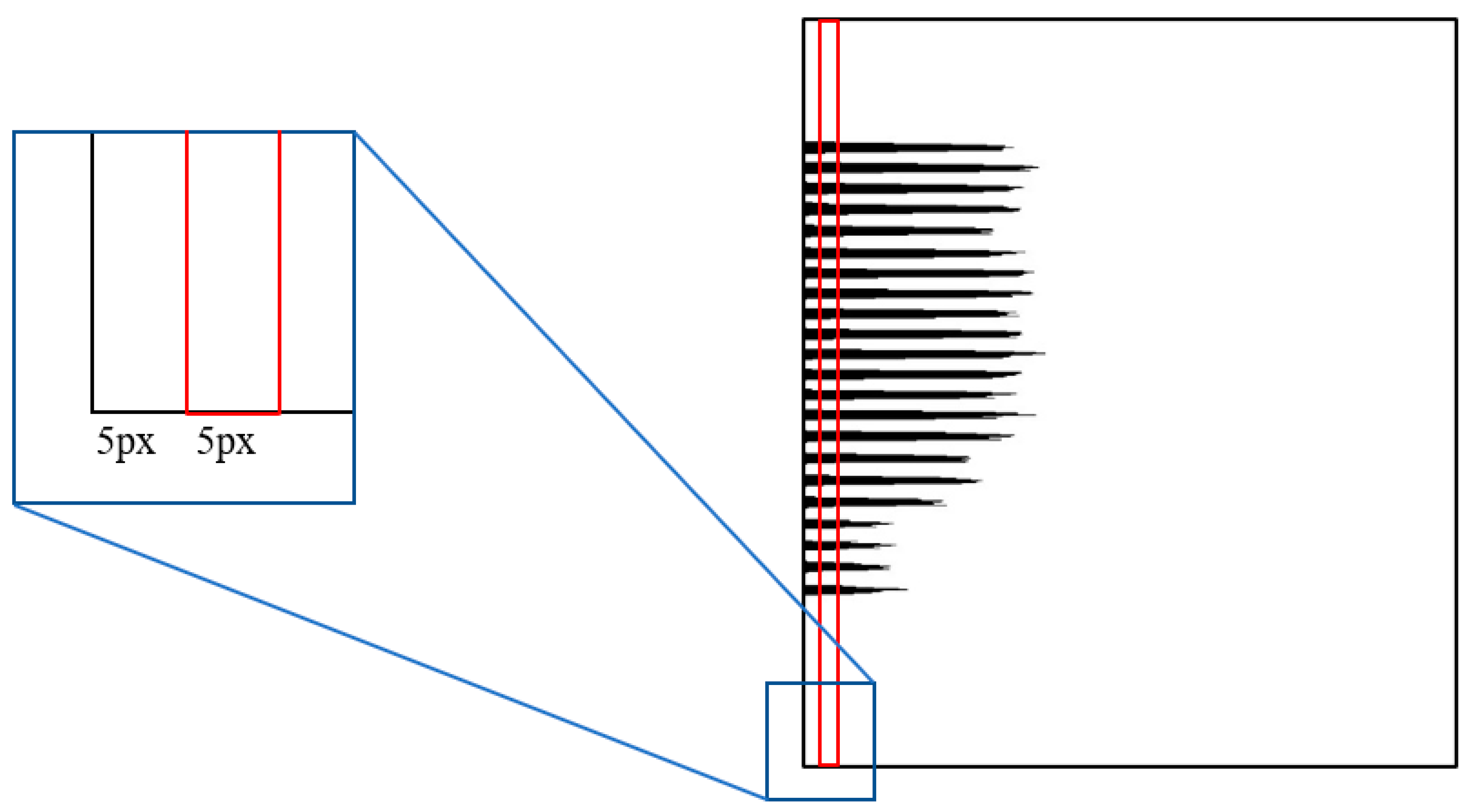

1. Scale the image proportionally (preserving its aspect ratio), as shown in Equation (3). Then separate the foreground from the background of the image with an adaptive binarization algorithm.

2. For the first segment projection, let [θstart, θend] be the rotation range, and denote by L1 the interval (step) between successive rotation angles. In this paper, we set L1 = 0.1°, θstart = −0.5°, and θend = 0.5°. The projection direction is chosen according to the text writing direction. If the text is written horizontally, the document image is projected horizontally to obtain the horizontal projection profile. Otherwise, it is projected vertically to obtain the vertical projection profile.

3. Calculate the valley value of the projection profile at each rotation angle and find the angle θ corresponding to the minimum valley value. θ is the skew angle of the image at an accuracy of L1. For example, the valley value (Val) of the horizontal projection is calculated as shown in Equation (4).

4. If more than one rotation angle θ attains the smallest Val, the new range starts at the smallest of these angles, denoted θmin, and ends at the largest, denoted θmax. The rotation range of the second segment projection, [θmin, θmax], is given by Equation (5).

5. Set the rotation step L2 = L1/10 (i.e., 0.01°) and repeat step 3 over the rotation range obtained in step 4. The angle θ obtained in this way is the skew angle of the image. If several angles θ attain the minimum, their mean is taken as the final skew angle.
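Step 1 can be sketched as follows. This section does not reproduce Equation (3) or name the adaptive binarization algorithm, so the sketch rests on two labeled assumptions. Proportional scaling applies one hypothetical factor `scale` to both axes, with nearest-neighbour sampling. Binarization uses a local-mean threshold: a pixel counts as foreground if it is darker than the mean of its `block`×`block` neighbourhood minus `c`, and the mean is computed with an integral image. The names `resize_proportional` and `adaptive_binarize`, and the defaults `block=15` and `c=10`, are illustrative only and are not the authors' code.

```python
from typing import List

Gray = List[List[int]]  # grey levels 0 (black) .. 255 (white), row-major


def resize_proportional(gray: Gray, scale: float) -> Gray:
    """Nearest-neighbour resize with the SAME factor on both axes (aspect kept).

    ASSUMPTION: a stand-in for Equation (3), which is not reproduced here.
    """
    h, w = len(gray), len(gray[0])
    nh, nw = max(1, round(h * scale)), max(1, round(w * scale))
    return [[gray[min(h - 1, int(y / scale))][min(w - 1, int(x / scale))]
             for x in range(nw)] for y in range(nh)]


def adaptive_binarize(gray: Gray, block: int = 15, c: int = 10) -> List[List[int]]:
    """Local-mean thresholding: 1 (foreground) if darker than local mean - c.

    ASSUMPTION: one common adaptive method; the paper's choice may differ.
    """
    h, w = len(gray), len(gray[0])
    # Integral image with a zero border: ii[y][x] = sum of gray[0:y][0:x].
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    r = block // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - r), min(h, y + r + 1)  # window clipped at the borders
        for x in range(w):
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            total = ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
            mean = total / ((y1 - y0) * (x1 - x0))
            out[y][x] = 1 if gray[y][x] < mean - c else 0
    return out
```

Using the same factor on both axes keeps text lines at their true angle. A non-uniform resize would change the skew that the later projection steps measure.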

Algorithm 1: Piecewise projection

Input: the document image, pre-corrected by line detection correction.

1. Resize the image.
2. For θ from θstart to θend with stride L1: project the image along the prior text writing direction.
3. Calculate the valley value (Val) of the projection profile for each projection and find the smallest one.
4. Calculate the new projection angle interval [θmin, θmax] based on the minimum valley value.
5. For θ from θmin to θmax with stride L2: project the image along the prior text writing direction.
6. Calculate the valley value (Val) of the projection profile for each projection and find the smallest one.
7. Estimate the skew angle θ based on the minimum valley value.
8. Deskew the image.
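The following is a minimal sketch of Algorithm 1 under stated assumptions.

- Equation (4) is not reproduced here, so `valley_value` is a hypothetical stand-in. It measures the ink mass in profile rows that fall below the profile mean. This is zero when text lines project into clean peaks separated by empty gaps.
- Equation (5) is also unavailable. When the coarse scan has a single minimum, the sketch assumes a fine range of θ ± L1.
- Instead of rotating the raster, each foreground pixel (x, y) is mapped to the rotated row coordinate r = y·cos θ − x·sin θ. Binning r gives the horizontal projection profile of the page rotated back by θ, without interpolation.

Foreground coordinates can be taken from a binary image as `[(x, y) for y, row in enumerate(bw) for x, v in enumerate(row) if v]`. Angles follow the convention of lines y = x·tan θ + c in the supplied coordinates. All function names are illustrative.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) of a foreground pixel


def projection_profile(points: Sequence[Point], theta_deg: float) -> List[int]:
    """Horizontal projection profile of the page rotated by -theta (1-px bins)."""
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    # r is the row index of (x, y) once the page is rotated back by theta.
    counts = Counter(math.floor(y * cos_t - x * sin_t) for x, y in points)
    return [counts.get(r, 0) for r in range(min(counts), max(counts) + 1)]


def valley_value(profile: Sequence[int]) -> int:
    """HYPOTHETICAL stand-in for Equation (4): ink mass in below-average rows."""
    mean = sum(profile) / len(profile)
    return sum(v for v in profile if v < mean)


def best_angles(points: Sequence[Point], start: float, end: float,
                step: float) -> List[float]:
    """Scan start..end with the given stride; return every angle with minimal Val."""
    n = round((end - start) / step)
    angles = [start + i * step for i in range(n + 1)]
    vals = [valley_value(projection_profile(points, a)) for a in angles]
    v_min = min(vals)
    return [a for a, v in zip(angles, vals) if v == v_min]


def piecewise_projection(points: Sequence[Point], theta_start: float = -0.5,
                         theta_end: float = 0.5, l1: float = 0.1) -> float:
    """Coarse-to-fine skew estimate in degrees (steps 2-5 / Algorithm 1)."""
    coarse = best_angles(points, theta_start, theta_end, l1)  # first segment, stride L1
    if len(coarse) > 1:
        lo, hi = min(coarse), max(coarse)        # [theta_min, theta_max] as in step 4
    else:
        lo, hi = coarse[0] - l1, coarse[0] + l1  # ASSUMPTION: Eq. (5) not available
    fine = best_angles(points, lo, hi, l1 / 10)  # second segment, stride L2 = L1/10
    return sum(fine) / len(fine)                 # mean of tied angles (step 5)
```

As an example, take foreground points sampled along ten parallel synthetic lines y = x·tan(0.3°) + 2k + 0.5, with x = 0, …, 1000. The coarse scan isolates 0.3°. The fine scan then returns several tied angles around it, whose mean is close to 0.3°. Long lines often tie over a small plateau of angles, which is why the averaging rule in step 5 matters.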

3.4. Form Image Correction

3.5. Complex Content Image Correction

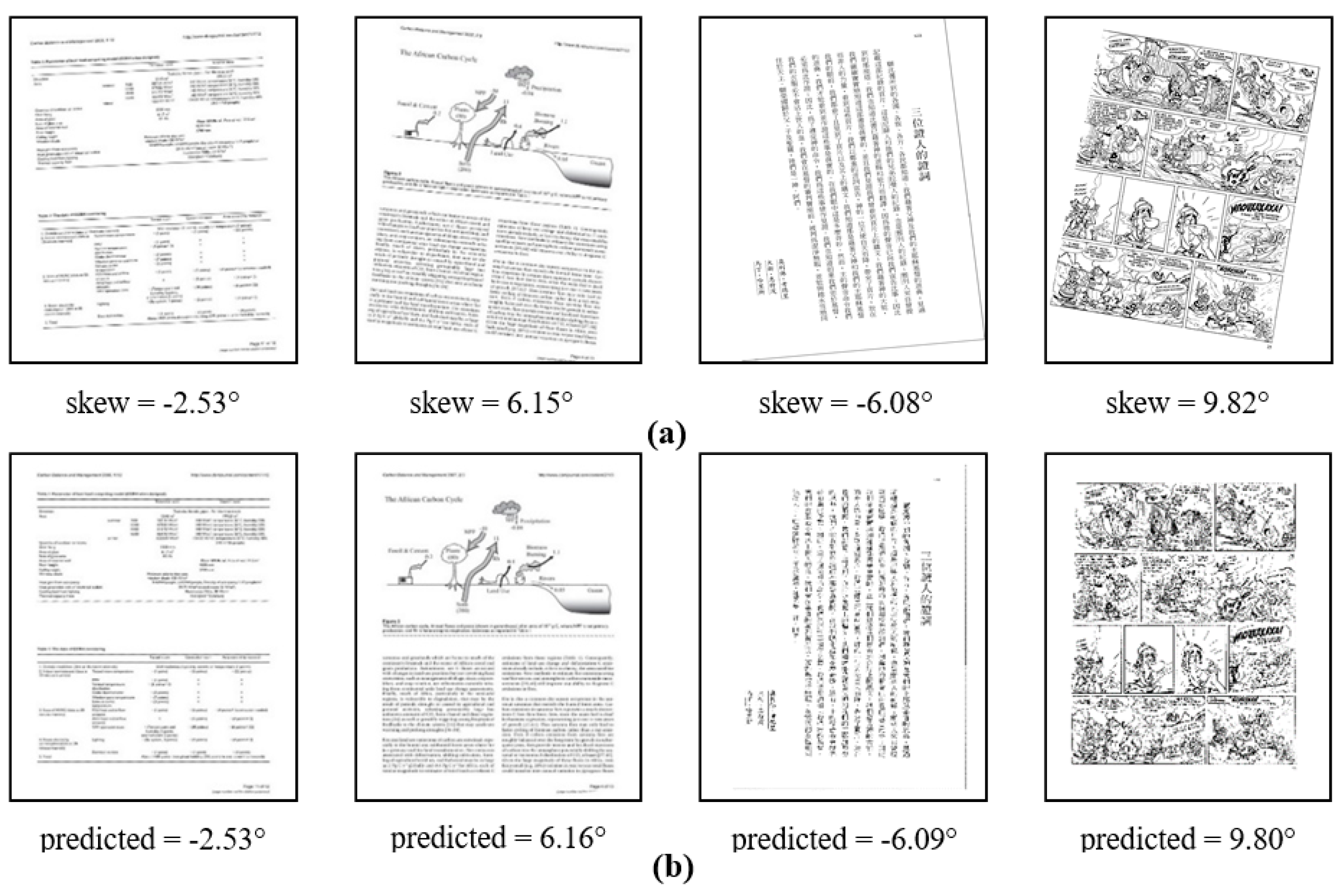

4. Experiments

4.1. Datasets

4.2. Evaluation Criteria
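The result tables report AED, TOP80, and CE, the criteria of the ICDAR 2013 DISEC contest [19]. This section does not restate their exact rules, so the commonly used definitions are assumed here:

- AED is the mean absolute error between estimated and ground-truth skew angles.
- TOP80 is the mean absolute error over the best 80% of images.
- CE is the percentage of images whose error is at most 0.1°.

The sketch below is illustrative. The name `skew_metrics` and the rounding down of the 80% cut are choices made here, not taken from the paper.

```python
import math
from typing import Sequence, Tuple


def skew_metrics(pred: Sequence[float], truth: Sequence[float],
                 ce_tol: float = 0.1) -> Tuple[float, float, float]:
    """Return (AED, TOP80, CE) for predicted vs ground-truth skew angles in degrees."""
    errors = sorted(abs(p - t) for p, t in zip(pred, truth))
    n = len(errors)
    aed = sum(errors) / n                               # mean absolute error
    k = max(1, math.floor(0.8 * n))                     # ASSUMPTION: best 80%, rounded down
    top80 = sum(errors[:k]) / k                         # mean error over the best 80%
    ce = 100.0 * sum(e <= ce_tol for e in errors) / n   # % of images within ce_tol degrees
    return aed, top80, ce
```

Sorting the errors once serves both TOP80, which needs the smallest 80%, and CE, which counts the errors under the tolerance.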

4.3. Experimental Result

- M1: Baseline with Line Detection.

- M2: Baseline with Line Detection and Skeleton Extraction.

- M3: Baseline with Line Detection, Skeleton Extraction, and Piecewise Projection.

- M4: Baseline with Line Detection, Skeleton Extraction, and Morphological Fourier Transform.

- Ours: the proposed method.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Rice, S.V.; Jenkins, F.R.; Nartker, T.A. The Fourth Annual Test of OCR Accuracy. Available online: https://www.stephenvrice.com/images/AT-1995.pdf (accessed on 10 October 2022).

2. Hemantha, K.G.; Shivakumara, P. Skew Detection Technique for Binary Document Images based on Hough Transform. Int. J. Inf. Technol. 2007, 1, 2401–2407.

3. Singh, C.; Bhatia, N.; Kaur, A. Hough transform based fast skew detection and accurate skew correction methods. Pattern Recognit. 2008, 41, 3528–3546.

4. Le, D.S.; Thoma, G.R.; Wechsler, H. Automated page orientation and skew angle detection for binary document images. Pattern Recognit. 1994, 27, 1325–1344.

5. Boukharouba, A. A new algorithm for skew correction and baseline detection based on the randomized Hough Transform. J. King Saud Univ.-Comput. Inf. Sci. 2017, 29, 29–38.

6. Deans, S.R. The Radon Transform and Some of Its Applications; Courier Corporation: Honolulu, HI, USA, 2007.

7. Aradhya, V.; Kumar, G.H.; Shivakumara, P. An accurate and efficient skew estimation technique for South Indian documents: A new boundary growing and nearest neighbor clustering based approach. Int. J. Robot. Autom. 2007, 22, 272–280.

8. Al-Khatatneh, A.; Pitchay, S.A.; Al-Qudah, M. A Review of Skew Detection Techniques for Document. In Proceedings of the 17th UKSIM-AMSS International Conference on Modelling and Simulation, Washington, DC, USA, 25–27 March 2015; pp. 316–321.

9. Sun, S.J. Skew detection using wavelet decomposition and projection profile analysis. Pattern Recognit. Lett. 2007, 28, 555–562.

10. Bekir, Y. Projection profile analysis for skew angle estimation of woven fabric images. J. Text. Inst. Part 3 Technol. New Century 2014, 105, 654–660.

11. Papandreou, A.; Gatos, B. A Novel Skew Detection Technique Based on Vertical Projections. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; pp. 384–388.

12. Belhaj, S.; Kahla, H.B.; Dridi, M.; Moakher, M. Blind image deconvolution via Hankel based method for computing the GCD of polynomials. Math. Comput. Simul. 2018, 144, 138–152.

13. Nussbaumer, H.J. The fast Fourier transform. In Fast Fourier Transform and Convolution Algorithms; Springer: Berlin/Heidelberg, Germany, 1981; pp. 80–111.

14. Boiangiu, C.-A.; Dinu, O.-A.; Popescu, C.; Constantin, N.; Petrescu, C. Voting-Based Document Image Skew Detection. Appl. Sci. 2020, 10, 2236.

15. Shafii, M. Optical Character Recognition of Printed Persian/Arabic Documents; University of Windsor (Canada): Windsor, ON, Canada, 2014.

16. Mascaro, A.A.; Cavalcanti, R.D.C.; Mello, R.A.B. Fast and robust skew estimation of scanned documents through background area information. Pattern Recognit. Lett. 2010, 31, 1403–1411.

17. Chou, C.H.; Chu, S.Y.; Chang, F. Estimation of skew angles for scanned documents based on piecewise covering by parallelograms. Pattern Recognit. 2007, 40, 443–455.

18. Wood, J. Minimum Bounding Rectangle; Springer: New York, NY, USA, 2017.

19. Papandreou, A.; Gatos, B.; Louloudis, G.; Stamatopoulos, N. ICDAR 2013 document image skew estimation contest (DISEC 2013). In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1444–1448.

20. Fabrizio, J. A precise skew estimation algorithm for document images using KNN clustering and fourier transform. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 2585–2588.

21. Cai, C.; Meng, H.; Qiao, R. Adaptive cropping and deskewing of scanned documents based on high accuracy estimation of skew angle and cropping value. Vis. Comput. 2021, 37, 1917–1930.

22. Koo, H.I.; Cho, N.I. Skew estimation of natural images based on a salient line detector. J. Electron. Imaging 2013, 22, 3020.

23. Matas, J.; Galambos, C.; Kittler, J. Robust Detection of Lines Using the Progressive Probabilistic Hough Transform. Comput. Vis. Image Underst. 2000, 78, 119–137.

24. Ahmad, R.; Naz, S.; Razzak, I. Efficient skew detection and correction in scanned document images through clustering of probabilistic hough transforms. Pattern Recognit. Lett. 2021, 152, 93–99.

25. Stahlberg, F.; Vogel, S. Document Skew Detection Based on Hough Space Derivatives. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 366–370.

26. Gari, A.; Khaissidi, G.; Mrabti, M.; Chenouni, D.; El Yacoubi, M. Skew detection and correction based on Hough transform and Harris corners. In Proceedings of the International Conference on Wireless Technologies, Embedded and Intelligent Systems, Fez, Morocco, 19–20 April 2017; pp. 1–4.

27. Boudraa, O.; Hidouci, W.K.; Michelucci, D. An improved skew angle detection and correction technique for historical scanned documents using morphological skeleton and progressive probabilistic Hough transform. In Proceedings of the 2017 5th International Conference on Electrical Engineering-Boumerdes (ICEE-B), Boumerdes, Algeria, 29–31 October 2017; pp. 1–6.

28. Ju, Z.; Wang, P. Skew angle detection algorithm of text image based on geometric constraints. Comput. Appl. Res. 2013, 30, 950–952.

29. Wagdy, M.; Faye, I.; Rohaya, D. Document image skew detection and correction method based on extreme points. In Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014; pp. 1–5.

30. Papandreou, A.; Gatos, B.; Perantonis, S.J.; Gerardis, I. Efficient skew detection of printed document images based on novel combination of enhanced profiles. Int. J. Doc. Anal. Recognit. (IJDAR) 2014, 17, 433–454.

31. Dai, J.; Guo, L.; Wang, Z.; Liu, S. An Orientation-correction Detection Method for Scene Text Based on SPP-CNN. In Proceedings of the 2019 IEEE 4th International Conference on Cloud Computing and Big Data Analysis (ICCCBDA), Chengdu, China, 12–15 April 2019; pp. 671–675.

32. Wang, W.; Shen, J.; Ling, H. A Deep Network Solution for Attention and Aesthetics Aware Photo Cropping. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1531–1544.

33. Gioi, R.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A line segment detector. Image Process. Line 2012, 2, 35–55.

34. Zhong, X.; Tang, J.; Yepes, A.J. PubLayNet: Largest Dataset Ever for Document Layout Analysis. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1015–1022.

35. Simon, G.; Tabbone, S. Generic Document Image Dewarping by Probabilistic Discretization of Vanishing Points. In Proceedings of the ICPR 2020, 25th International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 2344–2351.

36. Zhai, M.; Workman, S.; Jacobs, N. Detecting Vanishing Points using Global Image Context in a Non-Manhattan World. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition CVPR, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5657–5665.

37. Li, X.; Zhang, B.; Liao, J.; Sander, P.V. Document rectification and illumination correction using a patch-based CNN. ACM Trans. Graph. 2019, 38, 1–11.

| Skewed Image | Precision (%) |
|---|---|
| Text images | 96.97 |
| Form images | 100 |
| Complex content images | 96.97 |

| Skewed Image | Method | AED (°) | TOP80 (°) | CE (%) |
|---|---|---|---|---|
| Text images | Text image correction | 0.065 | 0.042 | 83.0 |
| | Form image correction | 0.137 | 0.077 | 55.3 |
| | Complex content correction | 0.570 | 0.322 | 39.8 |
| Form images | Text image correction | 0.631 | 0.107 | 48.2 |
| | Form image correction | 0.084 | 0.066 | 77.0 |
| | Complex content correction | 0.949 | 0.781 | 31.2 |
| Complex content images | Text image correction | 1.414 | 1.050 | 16.1 |
| | Form image correction | 1.127 | 0.815 | 27.4 |
| | Complex content correction | 0.055 | 0.045 | 71.7 |

| Method | AED (°) | TOP80 (°) | CE (%) |
|---|---|---|---|
| Peak value | 0.128 | 0.081 | 60.4 |
| Valley value | 0.065 | 0.042 | 83.0 |

| Method | AED (°) | TOP80 (°) | CE (%) |
|---|---|---|---|
| FT | 0.109 | 0.062 | 64.2 |
| HT | 0.095 | 0.053 | 72.2 |
| PP | 0.072 | 0.046 | 78.8 |
| NNC | 0.079 | 0.054 | 73.1 |
| Our method | 0.025 | 0.014 | 97.6 |

| Method | AED | TOP80 | CE | S | Overall Rank |
|---|---|---|---|---|---|
| FT | 5 | 5 | 5 | 15 | 5 |
| HT | 4 | 3 | 4 | 11 | 4 |
| PP | 2 | 2 | 2 | 6 | 2 |
| NNC | 3 | 4 | 3 | 10 | 3 |
| Our method | 1 | 1 | 1 | 3 | 1 |
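The rank tables appear to combine the three criteria as follows:

- Each method is ranked per criterion, where lower AED and TOP80 are better and higher CE is better.
- S is the sum of the three ranks.
- The overall rank orders the methods by S.

The sketch below assumes standard competition ranking, where tied values share the better rank. Given the AED, TOP80, and CE values reported for FT, HT, PP, NNC, and the proposed method, it yields the same per-criterion ranks, S values, and overall ranks as the rank table for those methods. It does not model the parenthesized ranks given to missing ("/") entries in the DISEC comparison. The function names are illustrative.

```python
from typing import Dict, List, Tuple


def rank(values: List[float], higher_is_better: bool = False) -> List[int]:
    """Competition ranking: 1 = best, tied values share the better rank."""
    keys = [-v if higher_is_better else v for v in values]
    return [1 + sum(other < k for other in keys) for k in keys]


def rank_table(results: Dict[str, Tuple[float, float, float]]
               ) -> Dict[str, Tuple[int, int, int, int, int]]:
    """Map method -> (AED rank, TOP80 rank, CE rank, S, overall rank)."""
    names = list(results)
    aed = rank([results[n][0] for n in names])                          # lower is better
    top80 = rank([results[n][1] for n in names])                        # lower is better
    ce = rank([results[n][2] for n in names], higher_is_better=True)    # higher is better
    s = [a + t + c for a, t, c in zip(aed, top80, ce)]                  # sum of ranks
    overall = rank(s)                                                   # smaller S ranks first
    return {n: (aed[i], top80[i], ce[i], s[i], overall[i]) for i, n in enumerate(names)}
```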

| Method | AED (°) | TOP80 (°) | CE (%) |
|---|---|---|---|
| CCM [21] | 0.083 | / | 68.00 |
| OBM [27] | 0.078 | 0.051 | / |
| FSM [25] | 0.115 | 0.049 | 73.74 |
| RAM [24] | 0.370 | 0.079 | 55.41 |
| LRDE-EPITA-a ¹ | 0.072 | 0.046 | 77.48 |
| Ajou-SNU ¹ | 0.085 | 0.051 | 71.23 |
| LRDE-EPITA-b ¹ | 0.097 | 0.053 | 68.32 |
| Our method | 0.077 | 0.045 | 80.10 |

| Method | AED | TOP80 | CE | S | Overall Rank |
|---|---|---|---|---|---|
| CCM [21] | 4 | (4) | 6 | 14 | 6 |
| OBM [27] | 3 | 4 | (3) | 10 | 3 |
| FSM [25] | 7 | 3 | 3 | 13 | 4 |
| RAM [24] | 8 | 8 | 8 | 24 | 8 |
| LRDE-EPITA-a ¹ | 1 | 2 | 2 | 5 | 2 |
| Ajou-SNU ¹ | 5 | 4 | 4 | 13 | 4 |
| LRDE-EPITA-b ¹ | 6 | 5 | 5 | 16 | 7 |
| Our method | 2 | 1 | 1 | 4 | 1 |

| Modules | M1 | M2 | M3 | M4 | Ours |
|---|---|---|---|---|---|
| Line Detection | √ | √ | √ | √ | √ |
| Skeleton Extraction | × | √ | √ | √ | √ |
| Piecewise Projection | × | × | √ | × | √ |
| Morphological Fourier Transform | × | × | × | √ | √ |
| CE | 72.2% | 80.1% | 88.4% | 83.9% | 97.6% |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bao, W.; Yang, C.; Wen, S.; Zeng, M.; Guo, J.; Zhong, J.; Xu, X. A Novel Adaptive Deskewing Algorithm for Document Images. Sensors 2022, 22, 7944. https://doi.org/10.3390/s22207944
