Multispectral Benchmark Dataset and Baseline for Forklift Collision Avoidance

Abstract

:1. Introduction

1.1. Related Works

1.1.1. Multispectral Pedestrian Detection Datasets

1.1.2. Multispectral Pedestrian Detection Algorithms

1.1.3. Pedestrian Detection Algorithms for Intralogistics

2. Materials and Methods

2.1. Proposed Multispectral Pedestrian Dataset

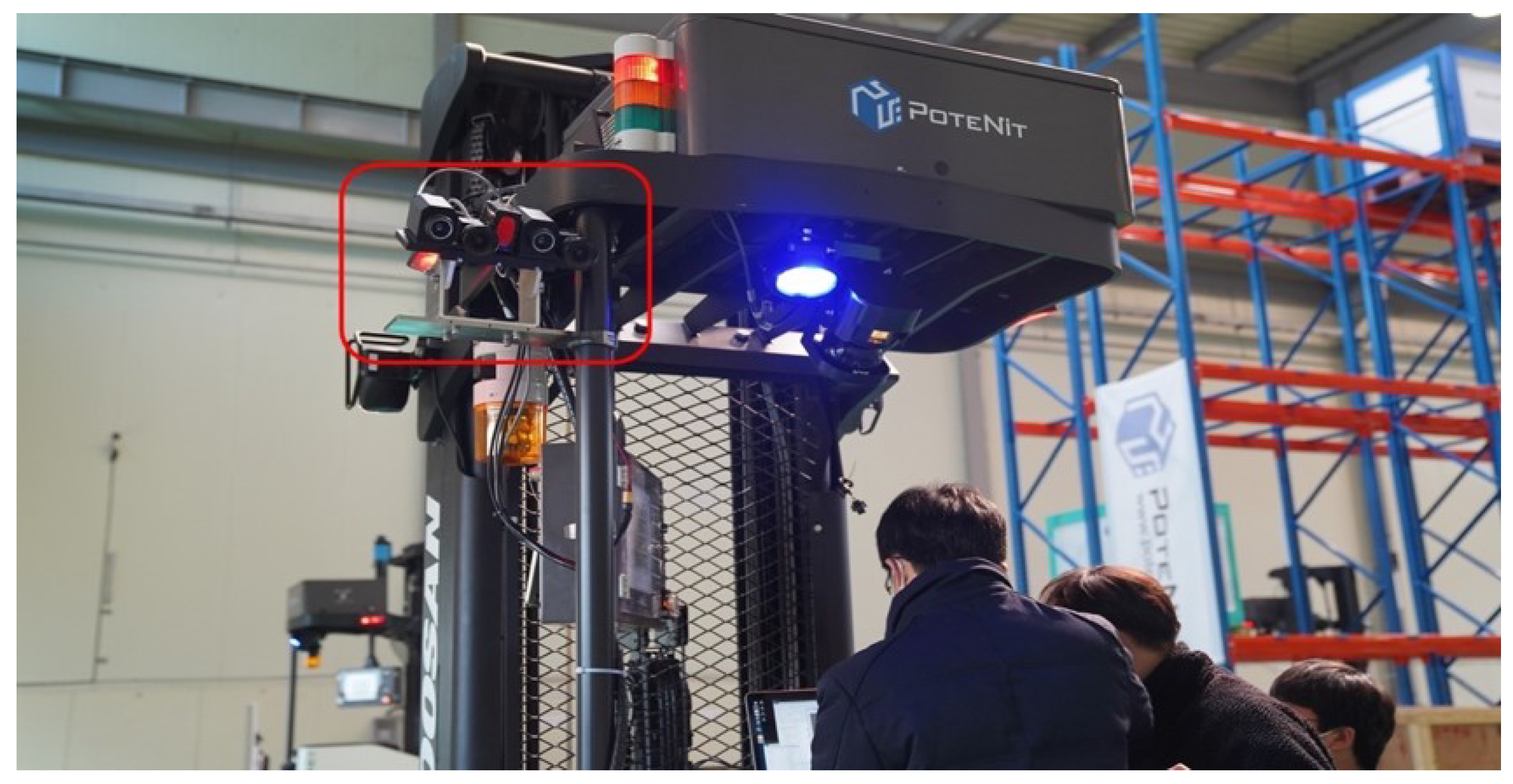

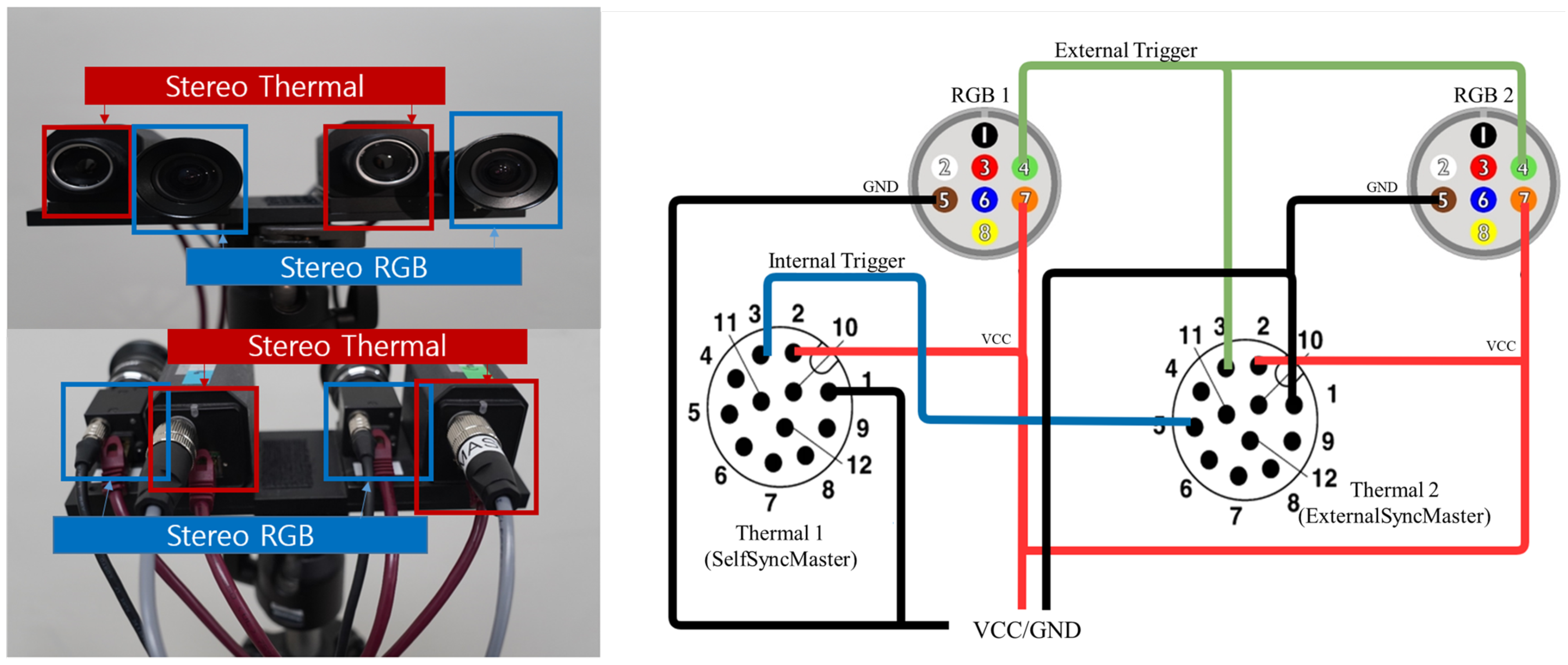

2.1.1. Multi-Modal Camera Configuration

- 2 × PointGrey Flea3 color camera (FL3-GE-13S2C 1288 × 964, 1.3 MP, Sony ICX445) GigE 84.9(H) × 68.9(V) with Spacecom HF3.5M-2 Lens 3.5 mm

- 2 × FLIR A35 thermal camera (320 × 256, 7.5 13 m GigE 63(H) × 50(V) with 7.5 mm.

- j5create USB3.0 Gigabit Ethernet Adapter (JUE130).

- j5create USB3.0 HUB (JUH377).

2.1.2. Multi-Modal Sensor Synchronization

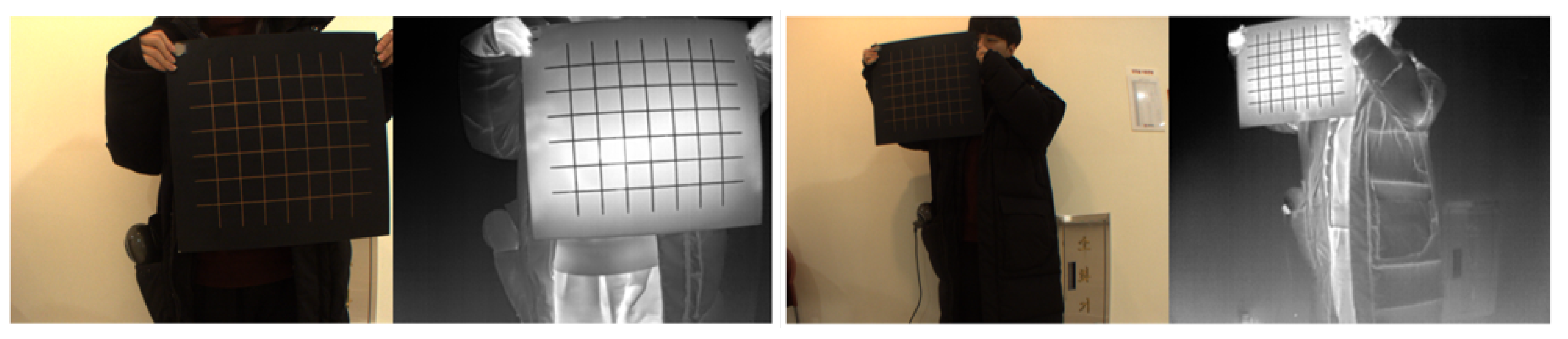

2.1.3. Multi-Modal Sensor Calibration

2.1.4. Multi-Modal Sensor Alignment

2.1.5. Annotation

- Only images captured by a single RGB camera are annotated, as the rest of the images can be interpreted using the same annotation after being geometrically aligned using the direct linear transformation (DLT), which includes rotation and translation.

- To obtain more accurate centroid distance annotations, we modified the SGM hyperparameters and then employed a hole-filling method to reduce error. After manually removing outliers, we used the average depth value surrounding the centroid pixel of each pedestrian.

- For a more specific annotation class, we defined three class labels, each of which represents information about what the instance contains, as follows: (1) person; (2) people; (3) background.

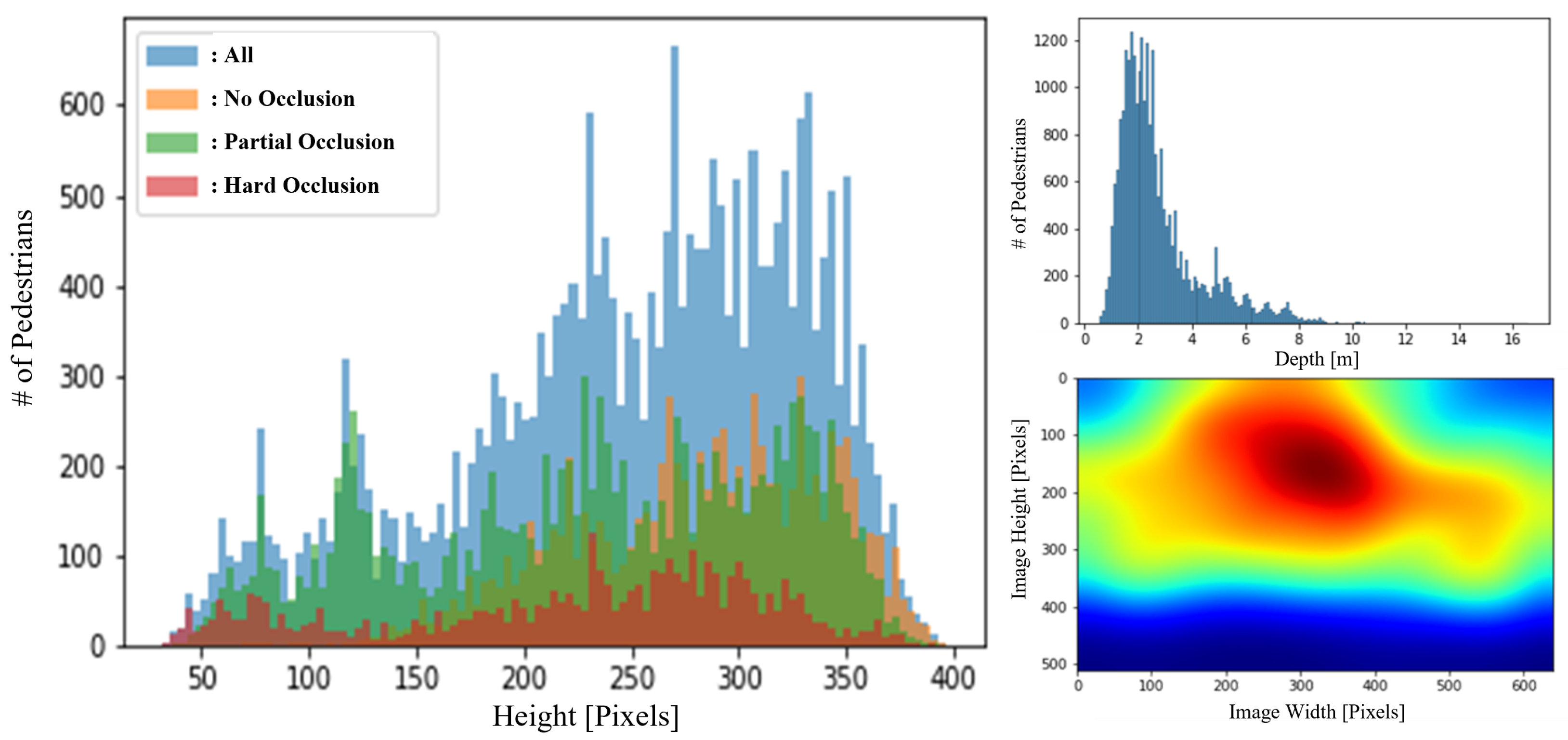

- Occlusion information is also included for each annotated instance based on the degree of occlusion, which is defined, as follows: (1) no-occlusion (0%); (2) partially-occluded (0–50%); (3) strongly-occluded (50–100%), respectively. This information is crucial for real-world applications, as all circumstances must be taken into account to prevent a collision.

2.1.6. Considerations Made during Dataset Collection Process

2.2. Proposed 2.5D Multispectral Pedestrian Detection Algorithm

3. Results

3.1. Proposed Multispectral Pedestrian Dataset

Distribution and Characteristics of Proposed Dataset

3.2. Results of Proposed 2.5D Multispectral Pedestrian Detection Algorithm

3.2.1. Experiment Setups

3.2.2. Evaluation Metrics

3.2.3. Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, J. Internal Logistics Process Improvement through AGV Integration at TMMI; Scholarly Open Access Repository at the University of Southern Indiana: Evansville, IN, USA, 2022. [Google Scholar]

- Kim, J.; Kim, H.; Kim, T.; Kim, N.; Choi, Y. MLPD: Multi-Label Pedestrian Detector in Multispectral Domain. IEEE Robot. Autom. Lett. 2021, 6, 7846–7853. [Google Scholar] [CrossRef]

- Zhang, L.; Zhu, X.; Chen, X.; Yang, X.; Lei, Z.; Liu, Z. Weakly aligned cross-modal learning for multispectral pedestrian detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5127–5137. [Google Scholar]

- Zhou, K.; Chen, L.; Cao, X. Improving multispectral pedestrian detection by addressing modality imbalance problems. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 787–803. [Google Scholar]

- Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 3496–3504. [Google Scholar]

- Dos Reis, W.P.N.; Morandin Junior, O. Sensors applied to automated guided vehicle position control: A systematic literature review. Int. J. Adv. Manuf. Technol. 2021, 113, 21–34. [Google Scholar] [CrossRef]

- Reis, W.P.N.D.; Couto, G.E.; Junior, O.M. Automated guided vehicles position control: A systematic literature review. J. Intell. Manuf. 2022, 33, 1–63. [Google Scholar] [CrossRef]

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1037–1045. [Google Scholar]

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian detection at day/night time with visible and FIR cameras: A comparison. Sensors 2016, 16, 820. [Google Scholar] [CrossRef] [PubMed]

- FLIR ADAS Dataset. Available online: https://www.flir.in/oem/adas/adas-dataset-form/ (accessed on 6 September 2022).

- Martin-Martin, R.; Patel, M.; Rezatofighi, H.; Shenoi, A.; Gwak, J.; Frankel, E.; Sadeghian, A.; Savarese, S. Jrdb: A dataset and benchmark of egocentric robot visual perception of humans in built environments. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 10335–10342. [Google Scholar] [CrossRef] [PubMed]

- Liu, J.; Fan, X.; Huang, Z.; Wu, G.; Liu, R.; Zhong, W.; Luo, Z. Target-aware Dual Adversarial Learning and a Multi-scenario Multi-Modality Benchmark to Fuse Infrared and Visible for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Montreal, BC, Canada, 11–17 October 2022; pp. 5802–5811. [Google Scholar]

- Krotosky, S.J.; Trivedi, M.M. On color-, infrared-, and multimodal-stereo approaches to pedestrian detection. IEEE Trans. Intell. Transp. Syst. 2007, 8, 619–629. [Google Scholar] [CrossRef] [Green Version]

- Liu, J.; Zhang, S.; Wang, S.; Metaxas, D.N. Multispectral deep neural networks for pedestrian detection. arXiv 2016, arXiv:1611.02644. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, BC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Cao, Z.; Yang, H.; Zhao, J.; Guo, S.; Li, L. Attention fusion for one-stage multispectral pedestrian detection. Sensors 2021, 21, 4184. [Google Scholar] [CrossRef] [PubMed]

- Roszyk, K.; Nowicki, M.R.; Skrzypczyński, P. Adopting the YOLOv4 architecture for low-latency multispectral pedestrian detection in autonomous driving. Sensors 2022, 22, 1082. [Google Scholar] [CrossRef] [PubMed]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Liu, G.; Zhang, R.; Wang, Y.; Man, R. Road Scene Recognition of Forklift AGV Equipment Based on Deep Learning. Processes 2021, 9, 1955. [Google Scholar] [CrossRef]

- Linder, T.; Pfeiffer, K.Y.; Vaskevicius, N.; Schirmer, R.; Arras, K.O. Accurate detection and 3D localization of humans using a novel YOLO-based RGB-D fusion approach and synthetic training data. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1000–1006. [Google Scholar]

- Kim, N.; Choi, Y.; Hwang, S.; Park, K.; Yoon, J.S.; Kweon, I.S. Geometrical calibration of multispectral calibration. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyang, Korea, 28–30 October 2015; pp. 384–385. [Google Scholar]

- Hirschmuller, H. Accurate and efficient stereo processing by semi-global matching and mutual information. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 25–26 June 2005; Volume 2, pp. 807–814. [Google Scholar]

- Computer Vision Toolbox. Available online: https://www.mathworks.com/products/computer-vision.html (accessed on 6 September 2022).

- Starkeforklift. Available online: https://www.starkeforklift.com/2018/08/22/frequently-asked-forklift-safety-questions/ (accessed on 6 September 2022).

- Waytronic Security Technology. Available online: http://www.wt-safe.com/Article/howmuchisthefo.html (accessed on 6 September 2022).

- CertifyMe. Available online: https://www.certifyme.net/osha-blog/forklift-speed-and-navigation/ (accessed on 6 September 2022).

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Zheng, Y.; Izzat, I.H.; Ziaee, S. GFD-SSD: Gated fusion double SSD for multispectral pedestrian detection. arXiv 2019, arXiv:1903.06999. [Google Scholar]

- Olmeda, D.; Premebida, C.; Nunes, U.; Armingol, J.M.; de la Escalera, A. Pedestrian detection in far infrared images. Integr. Comput.-Aided Eng. 2013, 20, 347–360. [Google Scholar] [CrossRef]

- Jeong, M.; Ko, B.C.; Nam, J.Y. Early detection of sudden pedestrian crossing for safe driving during summer nights. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1368–1380. [Google Scholar] [CrossRef]

- Liu, Q.; He, Z.; Li, X.; Zheng, Y. PTB-TIR: A thermal infrared pedestrian tracking benchmark. IEEE Trans. Multimed. 2019, 22, 666–675. [Google Scholar] [CrossRef] [Green Version]

- Bertoni, L.; Kreiss, S.; Alahi, A. Monoloco: Monocular 3d pedestrian localization and uncertainty estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 6861–6871. [Google Scholar]

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761. [Google Scholar] [CrossRef] [PubMed]

- Griffin, B.A.; Corso, J.J. Depth from camera motion and object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1397–1406. [Google Scholar]

| RGB 1,2 | Thermal 1 | Thermal 2 | |

|---|---|---|---|

| Width | 1288 | 320 | 320 |

| Height | 964 | 256 | 256 |

| Sycn. Mode | Mode 14 | Self Master | External Master |

| VCC | 4 | 1 | 1 |

| Ground | 5 | 2 | 1 |

| Sync. In | 4(GPIO 3) | x | 5 |

| Sync. Out | x | 3 | 3 |

| Image Format | Raw8 | Mono8 | Mono8 |

| Shutter Speed | 15 | x | x |

| Training | Testing | Properties | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Peds | Images | Peds | Images | Frames | RGB | Ther. | Depth | Occ. Labels | Moving Cam | Vid. Seqs | Aligned | Domain | |

| KAIST [8] | 41.5 k | 50.2 k | 44.7 k | 45.1 k | 95.3 k | Mono | Mono | - | 🗸 | 🗸 | 🗸 | 🗸 | Streets |

| CVC-14 [9] | 4.8 k | 3.5 k | 4.3 k | 1.4 k | 5.0 k | Mono | Mono | - | - | 🗸 | 🗸 | - | Streets |

| LSI [29] | 10.2 k | 6.2 k | 5.9 k | 9.1 k | 15.2 k | - | Mono | - | 🗸 | 🗸 | 🗸 | - | Streets |

| KMU [30] | - | 7.9 k | - | 5.0 k | 12.9 k | - | Mono | - | - | 🗸 | 🗸 | - | Streets |

| PTB-TIR [31] | - | - | - | - | 30.1k | - | Mono | - | 🗸 | 🗸 | 🗸 | - | - |

| LLVIP [5] | 33.6 k | 12.0 k | 7.9 k | 3.5 k | 15.5 k | Mono | Mono | - | - | - | 🗸 | 🗸 | Surveillance |

| FLIR-ADAS [10] | 22.3 k | 8.8 k | 5.7 k | 1.3 k | 10.1 k | Mono | Mono | - | - | 🗸 | 🗸 | - | Streets |

| Ours | 12.6 k | 7.3 k | 20.6 k | 12.2 k | 19.5 k | Stereo | Stereo | 🗸 | 🗸 | 🗸 | 🗸 | 🗸 | Intralogistics |

| Model | Modality | Detection Result [%] | |||||

|---|---|---|---|---|---|---|---|

| AP | AP Warn. | AP Hazard. | MR | MR Warn. | MR Hazard. | ||

| SSD 2D | RGB | 61.6 | 61.5 | 45.2 | 4.10 | 4.31 | 6.39 |

| SSD 2D | Ther. | 59.5 | 59.2 | 41.2 | 4.41 | 4.69 | 7.82 |

| SSD 2D | Multi. | 65.2 | 65.2 | 40.5 | 3.53 | 3.59 | 8.81 |

| SSD 2.5D | RGB | 60.4 | 60.4 | 43.3 | 3.79 | 3.93 | 5.52 |

| SSD 2.5D | Ther. | 58.1 | 58.1 | 41.4 | 4.76 | 5.0 | 7.71 |

| SSD 2.5D (Ours) | Multi | 65.7 | 65.7 | 45.7 | 3.24 | 3.33 | 7.83 |

| Model | Modality | Percent Error [%] | ||||||

|---|---|---|---|---|---|---|---|---|

| Total | 1 m | 2 m | 3 m | 4 m | 5 m | >5 m | ||

| NCR (w/o Act.) | GT | 38.25 | 3.97 | 25.88 | 29.48 | 31.46 | 34.30 | 36.27 |

| NCR (w Act.) | GT | 17.06 | 1.23 | 7.63 | 11.17 | 12.27 | 14.18 | 15.56 |

| SSD 2.5D | RGB | 6.16 | 0.36 | 4.27 | 5.84 | 5.98 | 6.08 | 6.1 |

| SSD 2.5D | Ther. | 6.27 | 0.36 | 4.2 | 5.94 | 6.1 | 6.22 | 6.24 |

| SSD 2.5D (Ours) | Multi | 4.09 | 0.22 | 2.86 | 3.71 | 3.84 | 3.96 | 4.02 |

| Model | Modality | Average Localization Precision | ||||||

|---|---|---|---|---|---|---|---|---|

| Total | 1 m | 2 m | 3 m | 4 m | 5 m | >5 m | ||

| NCR (w/o Act.) | GT | 0.1587 | 0.0222 | 0.1406 | 0.2106 | 0.2001 | 0.1832 | 0.1712 |

| NCR (w Act.) | GT | 0.4348 | 0.0044 | 0.5103 | 0.5166 | 0.5103 | 0.4851 | 0.4616 |

| SSD 2.5D | RGB | 0.7958 | 0.5268 | 0.7151 | 0.7534 | 0.7709 | 0.7828 | 0.7930 |

| SSD 2.5D | Ther. | 0.7594 | 0.4675 | 0.6793 | 0.7196 | 0.7359 | 0.7453 | 0.7546 |

| SSD 2.5D (Ours) | Multi | 0.8571 | 0.5446 | 0.7984 | 0.8383 | 0.8483 | 0.8552 | 0.8593 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, H.; Kim, T.; Jo, W.; Kim, J.; Shin, J.; Han, D.; Hwang, Y.; Choi, Y. Multispectral Benchmark Dataset and Baseline for Forklift Collision Avoidance. Sensors 2022, 22, 7953. https://doi.org/10.3390/s22207953

Kim H, Kim T, Jo W, Kim J, Shin J, Han D, Hwang Y, Choi Y. Multispectral Benchmark Dataset and Baseline for Forklift Collision Avoidance. Sensors. 2022; 22(20):7953. https://doi.org/10.3390/s22207953

Chicago/Turabian StyleKim, Hyeongjun, Taejoo Kim, Won Jo, Jiwon Kim, Jeongmin Shin, Daechan Han, Yujin Hwang, and Yukyung Choi. 2022. "Multispectral Benchmark Dataset and Baseline for Forklift Collision Avoidance" Sensors 22, no. 20: 7953. https://doi.org/10.3390/s22207953

APA StyleKim, H., Kim, T., Jo, W., Kim, J., Shin, J., Han, D., Hwang, Y., & Choi, Y. (2022). Multispectral Benchmark Dataset and Baseline for Forklift Collision Avoidance. Sensors, 22(20), 7953. https://doi.org/10.3390/s22207953