Research on Waste Plastics Classification Method Based on Multi-Scale Feature Fusion

Abstract

1. Introduction

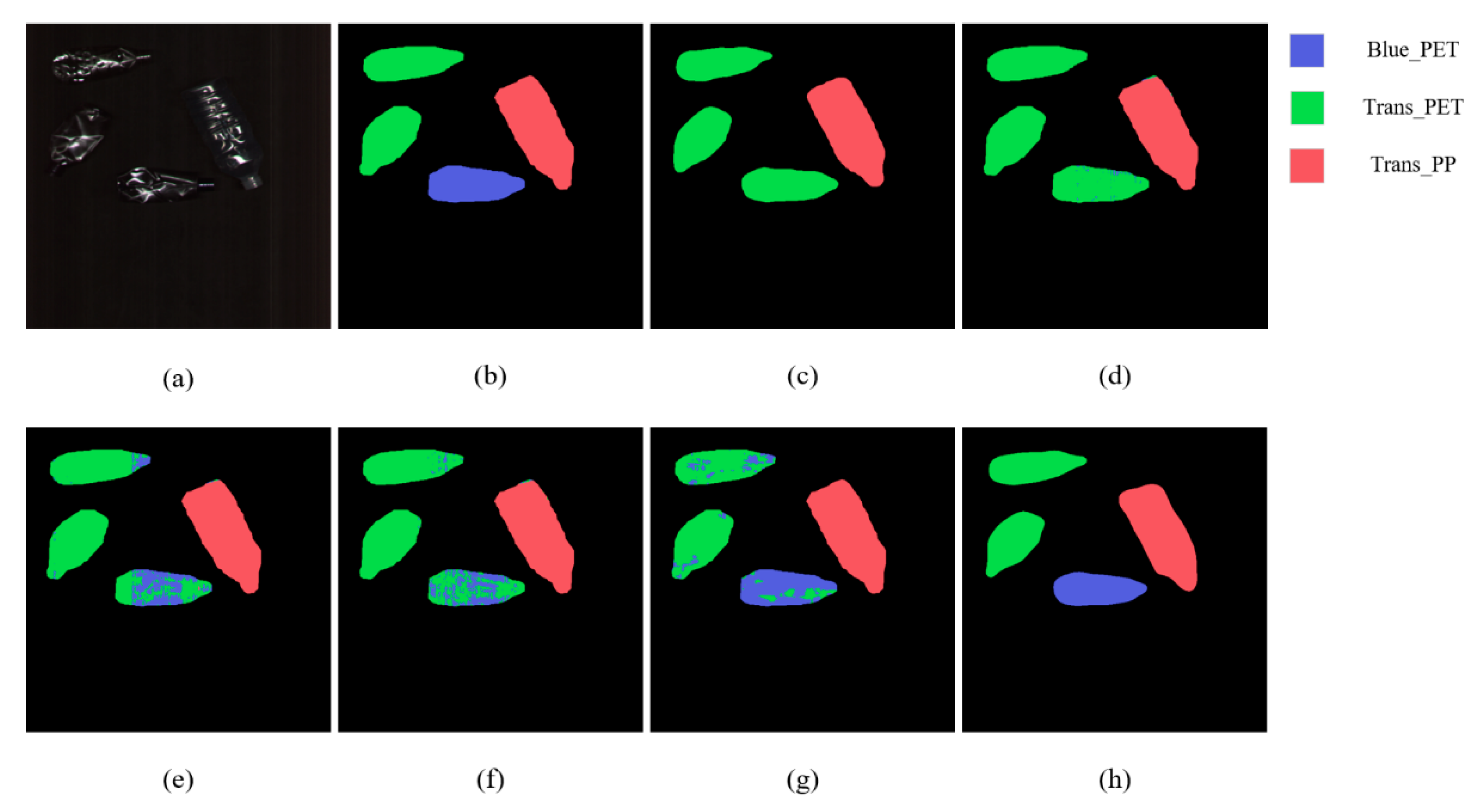

2. Materials and Methods

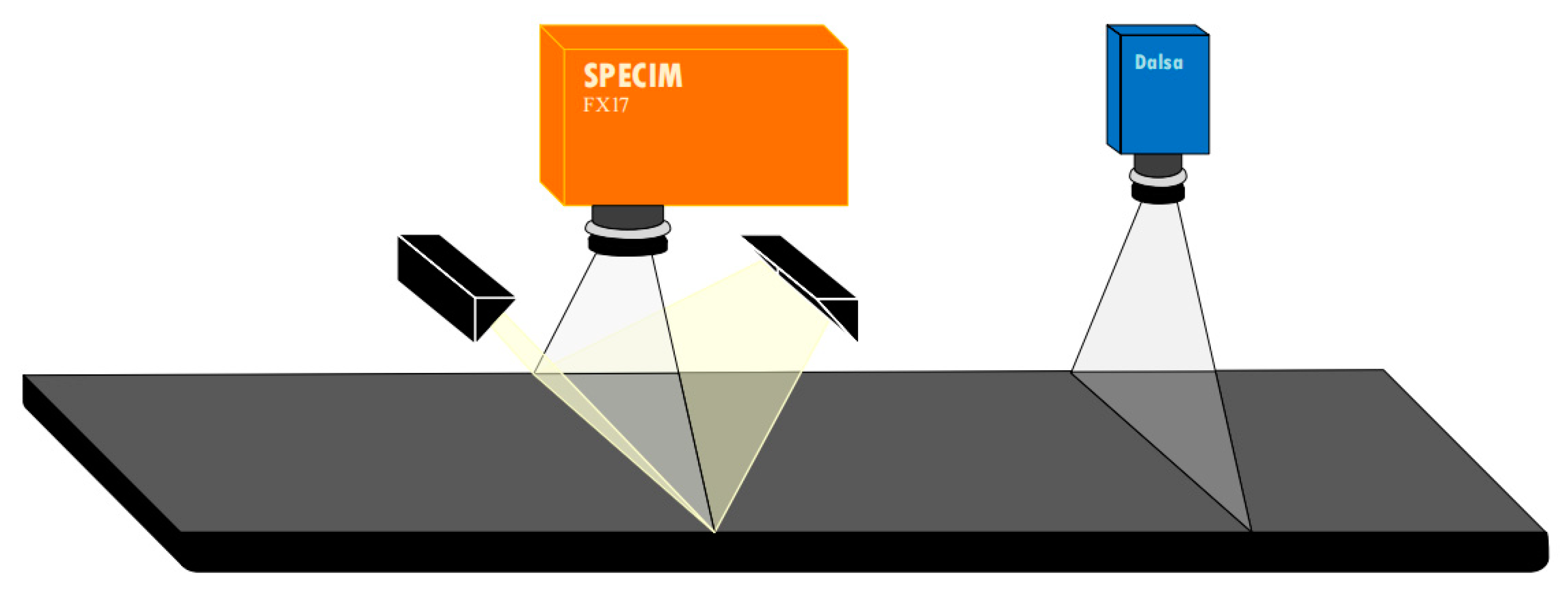

2.1. Sample Preparation and Data Collection

2.2. Data Processing

2.3. Network Structure of RHFF-SOLOv1

2.3.1. RGB Imagery Feature Extraction Branch

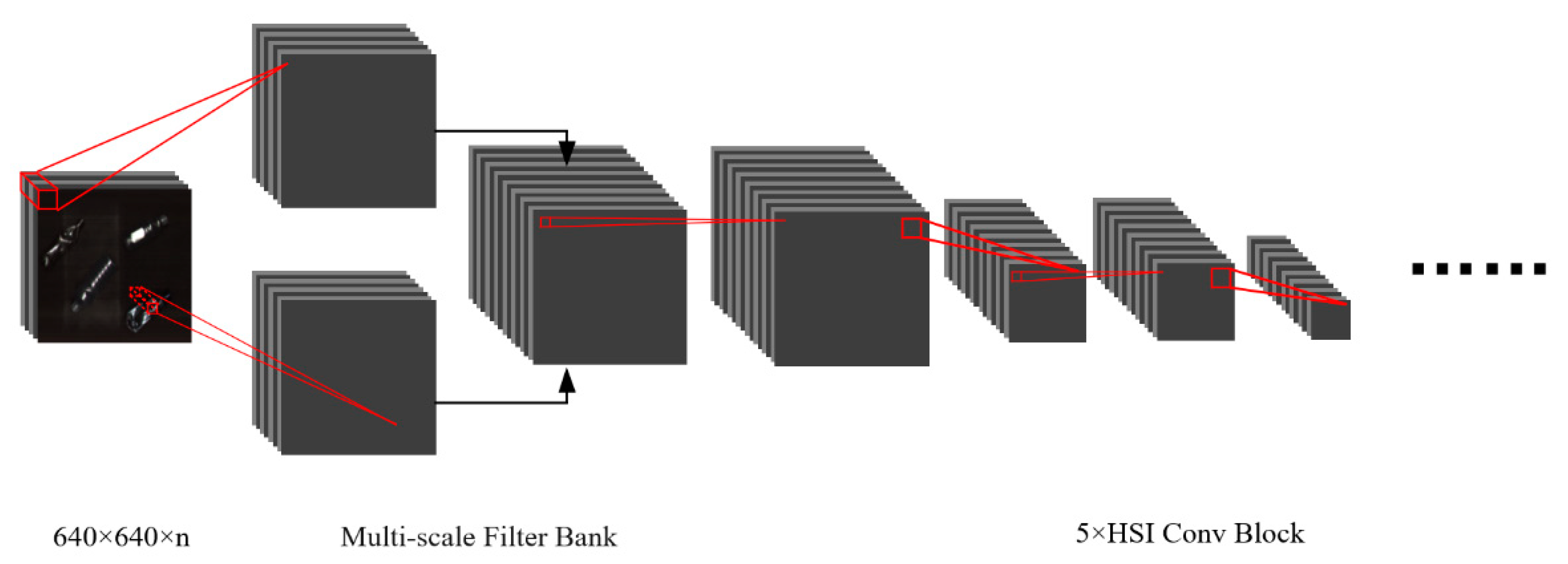

2.3.2. Hyperspectral Imagery Feature Extraction Branch

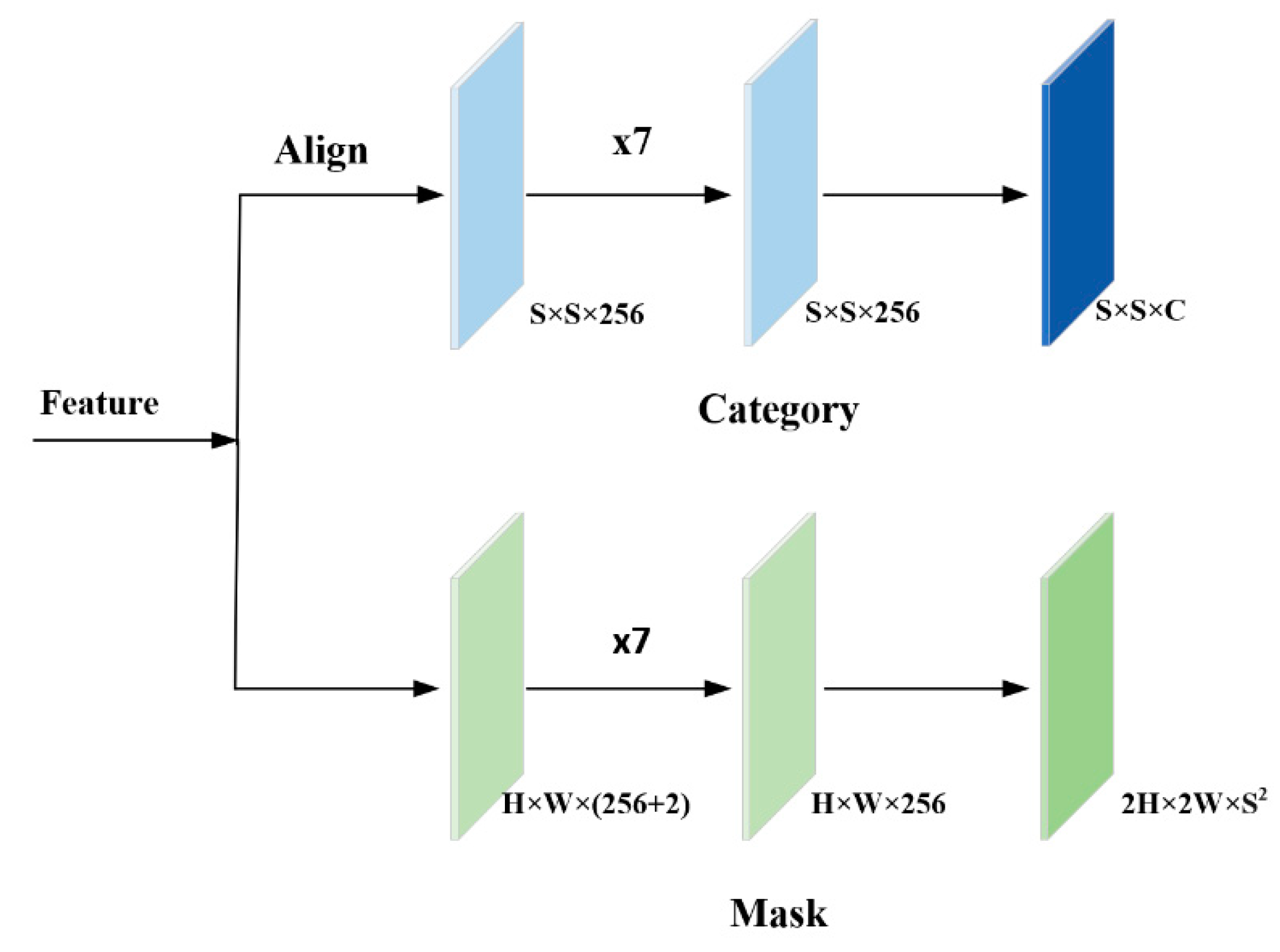

2.3.3. Multi-Scale Feature Fusion and Detection Head

3. Results

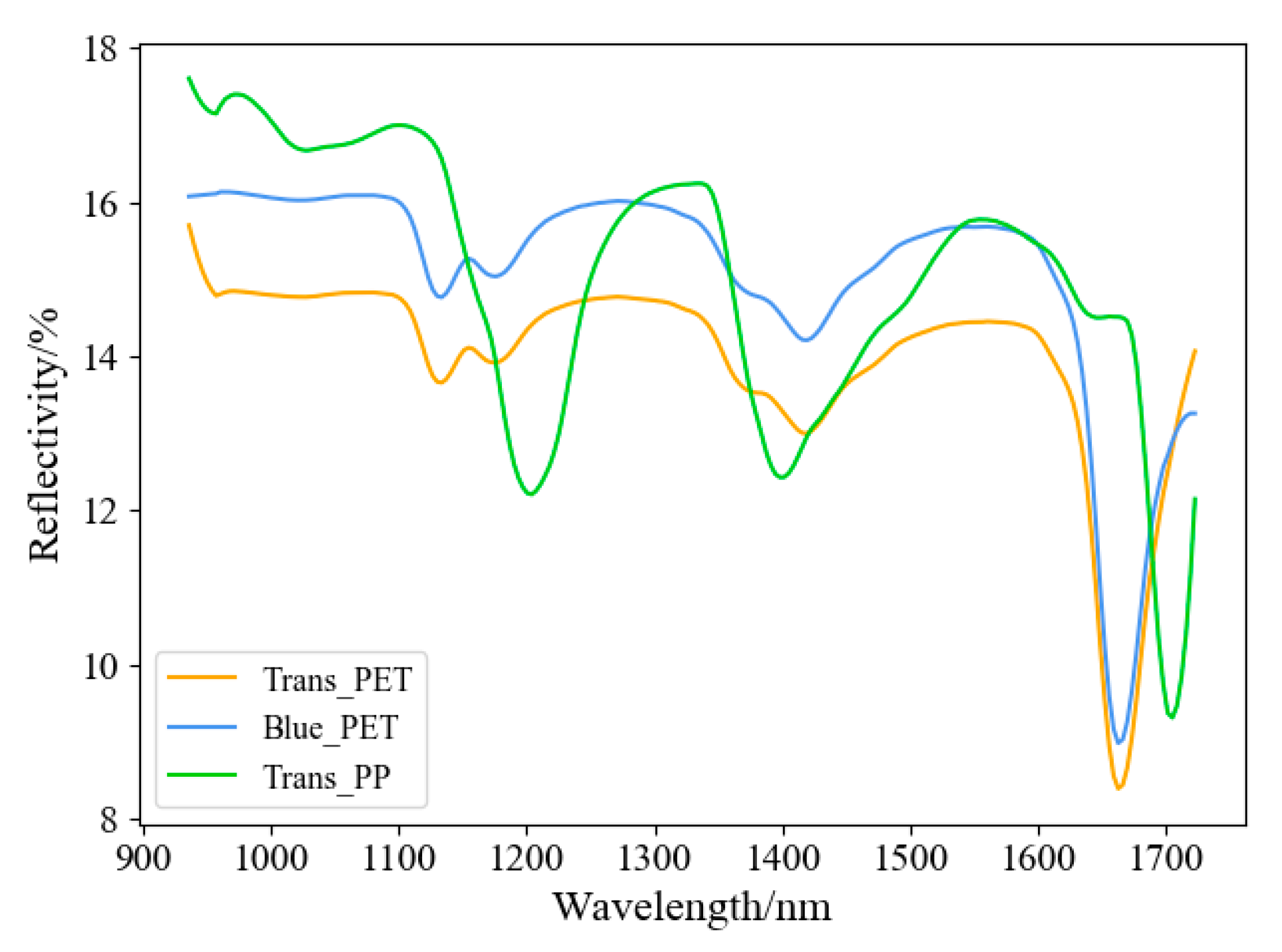

3.1. Hyperspectral Band Selection

3.2. Evaluation Indicators

3.3. Comparison between Different Intervals

3.4. Comparison between RHFF-SOLOv1 and Other Methods

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Laville, S.; Taylor, M. A Million Bottles a Minute: World’s Plastic Binge ‘as Dangerous as Climate Change’; The Guardian: Manchester, UK, 2017.

- Younos, T. Bottled water: Global impacts and potential. Potable Water 2014, 30, 213–227.

- He, X.; He, Z.; Zhang, S.; Zhao, X. A novel vision-based PET bottle recycling facility. Meas. Sci. Technol. 2016, 28, 025601.

- Wang, Z.; Peng, B.; Huang, Y.; Sun, G. Classification for plastic bottles recycling based on image recognition. Waste Manag. 2019, 88, 170–181.

- Scavino, E.; Wahab, D.A.; Hussain, A.; Basri, H.; Mustafa, M.M. Application of automated image analysis to the identification and extraction of recyclable plastic bottles. J. Zhejiang Univ.-Sci. A 2009, 10, 794–799.

- Özkan, K.; Ergin, S.; Işık, Ş.; Işıklı, I. A new classification scheme of plastic wastes based upon recycling labels. Waste Manag. 2015, 35, 29–35.

- Maliks, R.; Kadikis, R. Multispectral data classification with deep CNN for plastic bottle sorting. In Proceedings of the 2021 6th International Conference on Mechanical Engineering and Robotics Research (ICMERR), Krakow, Poland, 11–13 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 58–65.

- Tang, Y.; Zhou, H.; Wang, H.; Zhang, Y. Fruit detection and positioning technology for a Camellia oleifera C. Abel orchard based on improved YOLOv4-tiny model and binocular stereo vision. Expert Syst. Appl. 2022, 211, 118573.

- Tang, Y.; Zhu, M.; Chen, Z.; Wu, C.; Chen, B.; Li, C.; Li, L. Seismic performance evaluation of recycled aggregate concrete-filled steel tubular columns with field strain detected via a novel mark-free vision method. Structures 2022, 37, 426–441.

- Jaikumar, P.; Vandaele, R.; Ojha, V. Transfer learning for instance segmentation of waste bottles using Mask R-CNN algorithm. In Intelligent Systems Design and Applications, Proceedings of the International Conference on Intelligent Systems Design and Applications, Online, 13–15 December 2021; Springer: Cham, Switzerland, 2020; pp. 140–149.

- Fadlil, A.; Umar, R.; Nugroho, A.S. Comparison of machine learning approach for waste bottle classification. Emerg. Sci. J. 2022, 6, 1075–1085.

- Carvalho, M.T.; Ferreira, C.; Portela, A.; Santos, J.T. Application of fluidization to separate packaging waste plastics. Waste Manag. 2009, 29, 1138–1143.

- Masoumi, H.; Safavi, S.M.; Khani, Z. Identification and classification of plastic resins using near infrared reflectance spectroscopy. Int. J. Mech. Ind. Eng. 2012, 6, 213–220.

- Stiebel, T.; Bosling, M.; Steffens, A.; Pretz, T.; Merhof, D. An inspection system for multi-label polymer classification. In Proceedings of the 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA), Turin, Italy, 4–7 September 2018; IEEE: Piscataway, NJ, USA, 2018; Volume 1, pp. 623–630.

- Scheirs, J. Polymer Recycling; Wiley: Hoboken, NJ, USA, 1998.

- Wang, X.; Kong, T.; Shen, C.; Li, L. SOLO: Segmenting Objects by Locations; Springer: Cham, Switzerland, 2019.

- Ghiasi, G.; Cui, Y.; Srinivas, A.; Qian, R.; Lin, T.-Y.; Cubuk, E.D.; Le, Q.V.; Zoph, B. Simple copy-paste is a strong data augmentation method for instance segmentation. In Computer Vision and Pattern Recognition; IEEE: Piscataway, NJ, USA, 2021.

- Lee, H.; Kwon, H. Contextual deep CNN based hyperspectral classification. In Geoscience & Remote Sensing Symposium; IEEE: Piscataway, NJ, USA, 2016.

- Ying, L.; Haokui, Z.; Qiang, S. Spectral–spatial classification of hyperspectral imagery with 3D convolutional neural network. Remote Sens. 2017, 9, 67.

- Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral-spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858.

- Roy, S.K.; Krishna, G.; Dubey, S.R.; Chaudhuri, B.B. HybridSN: Exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2020, 17, 277–281.

| Classifiers | Interval 1 | Interval 2 | Interval 3 | Interval 4 | Interval 5 | Interval 6 |
|---|---|---|---|---|---|---|
| SVM | 71.9 | 70.0 | 59.5 | 70.8 | 70.6 | 75.7 |
| 1D-CNN | 88.4 | 81.8 | 74.7 | 79.2 | 84.8 | 86.4 |
| RF | 76.3 | 75.6 | 68.5 | 73.6 | 75.4 | 77.3 |

| Class / Metric | Interval 1 | Interval 6 |
|---|---|---|
| Blue_PET | 97.60 | 97.60 |
| Trans_PET | 94.80 | 94.12 |
| Trans_PP | 96.00 | 95.43 |
| OA | 95.55 | 95.01 |
| AA | 95.69 | 95.06 |
| Kappa × 100 | 93.28 | 92.47 |

| Class / Metric | SOLOv1 | RHFF-SOLOv1 |
|---|---|---|
| Blue_PET | 87.80 | 97.60 |
| Trans_PET | 87.88 | 94.80 |
| Trans_PP | 92.16 | 96.00 |
| OA | 86.66 | 95.55 |
| AA | 87.51 | 95.69 |
| Kappa × 100 | 80.10 | 93.28 |

| Class / Metric | ContextualNet | 3D-CNN | SSRN | HybridSN | RHFF-SOLOv1 |
|---|---|---|---|---|---|
| Blue_PET | 79.02 | 89.89 | 93.26 | 92.11 | 97.60 |
| Trans_PET | 86.43 | 94.49 | 95.67 | 98.11 | 94.80 |
| Trans_PP | 97.47 | 99.48 | 98.99 | 96.48 | 96.00 |
| OA | 86.52 | 94.07 | 95.69 | 95.42 | 95.55 |
| AA | 87.68 | 94.44 | 96.06 | 95.69 | 95.69 |
| Kappa × 100 | 75.59 | 91.01 | 93.47 | 93.06 | 93.28 |
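
The OA, AA, and Kappa × 100 rows in the tables above are not defined in this extract. As a rough illustration only, the sketch below computes them from a confusion matrix using the standard definitions of overall accuracy, average per-class accuracy, and Cohen's kappa. It assumes that per-class accuracy means recall (correct predictions divided by true samples of that class), which may differ from the authors' exact convention. The function name `classification_metrics` and the example matrix are hypothetical and do not come from the paper's data.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) as fractions for a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    Assumes every class has at least one true sample.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))

    # Overall accuracy (OA): fraction of all samples classified correctly.
    oa = correct / total

    # Average accuracy (AA): unweighted mean of the per-class recalls.
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(n_classes)]
    aa = sum(per_class) / n_classes

    # Cohen's kappa: observed agreement corrected for chance agreement p_e.
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes))
                for j in range(n_classes)]
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


# Made-up confusion matrix for three classes (illustrative values only).
example = [
    [48, 1, 1],
    [2, 45, 3],
    [0, 2, 48],
]
oa, aa, kappa = classification_metrics(example)
print(round(oa * 100, 2), round(aa * 100, 2), round(kappa * 100, 2))
# prints: 94.0 94.0 91.0
```

Multiplying each value by 100 gives numbers on the same scale as the table rows. OA and AA coincide in this example only because the made-up classes are balanced; with unequal class sizes they generally differ.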

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cai, Z.; Yang, J.; Fang, H.; Ji, T.; Hu, Y.; Wang, X. Research on Waste Plastics Classification Method Based on Multi-Scale Feature Fusion. Sensors 2022, 22, 7974. https://doi.org/10.3390/s22207974
