sEMG-Based Hand Posture Recognition and Visual Feedback Training for the Forearm Amputee

Abstract

1. Introduction

1.1. Related Work

1.1.1. sEMG-Based Gesture Recognition

1.1.2. Rehabilitation Training for the Amputees

2. Materials and Methods

2.1. Participants

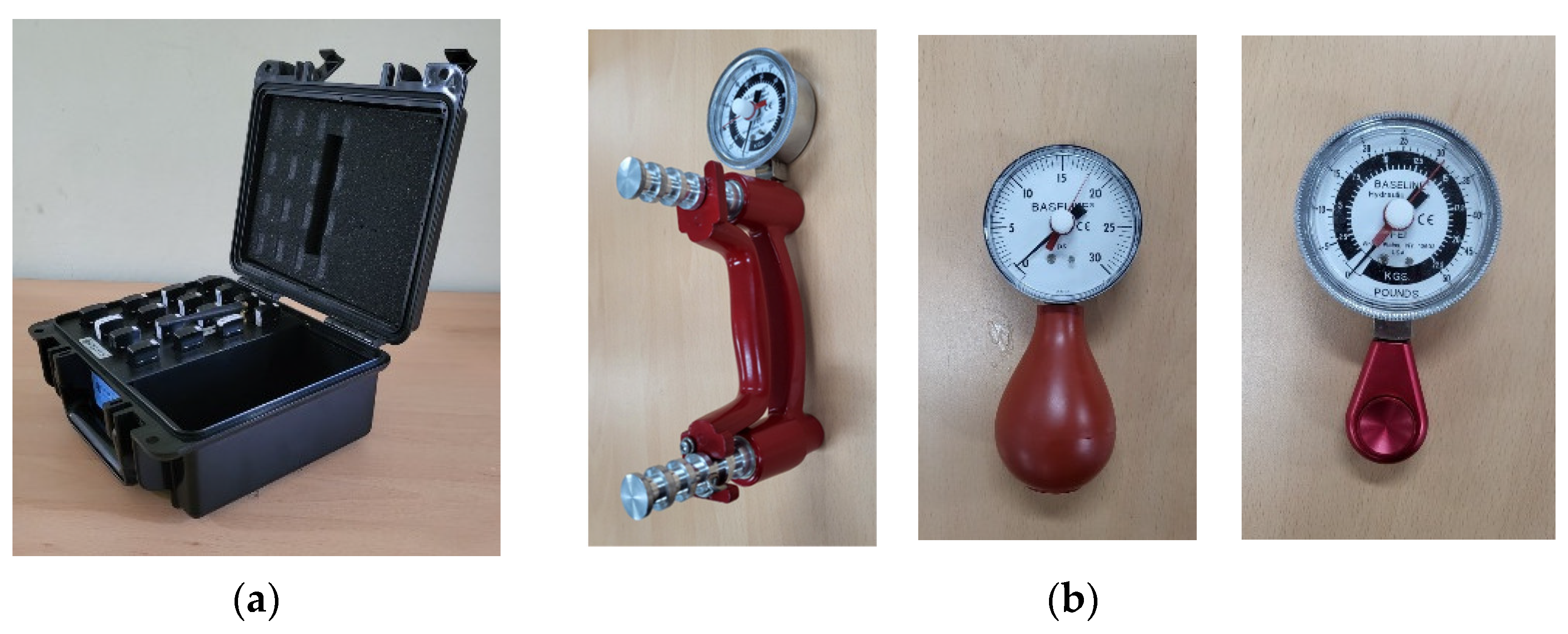

2.2. Equipment

2.3. Experimental Protocol

2.4. Feature Vectors and Classifier

2.5. Performance Evaluation

3. Results

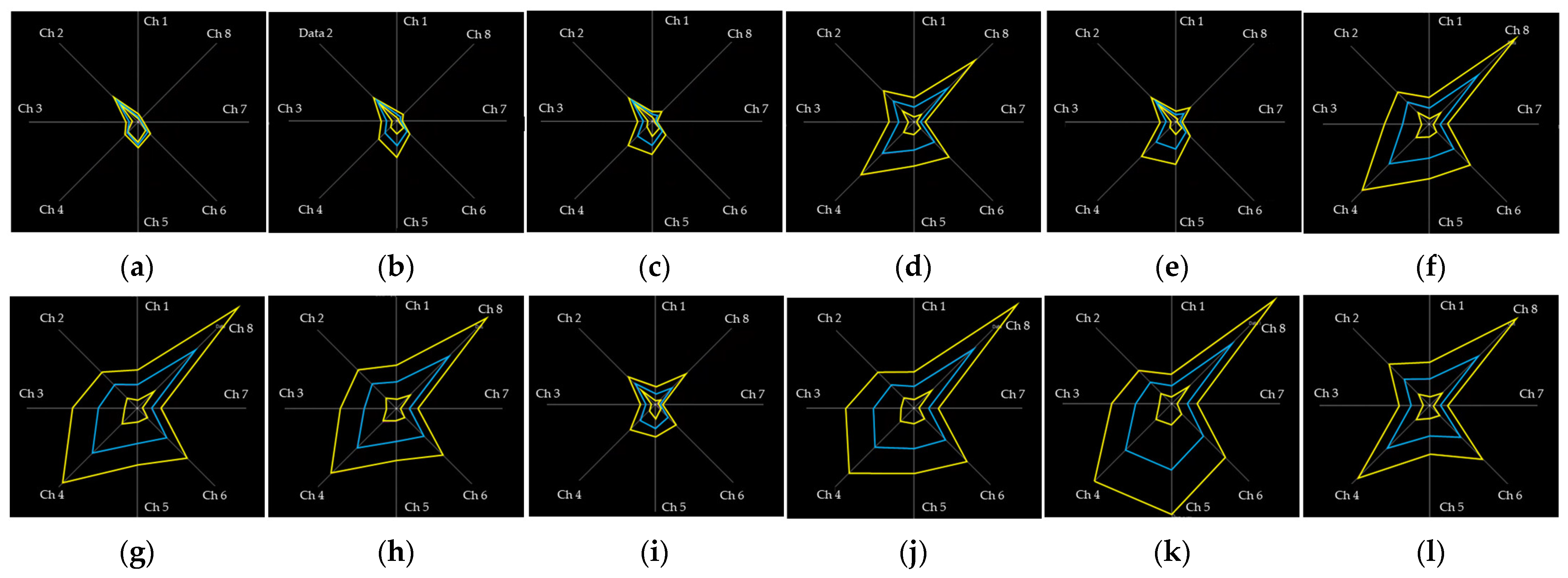

3.1. t-SNE and SC with Visual Feedback Training

3.2. Classification Accuracy

3.3. Confusion Matrix

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References



| Classification Accuracy (%): Mean (Standard Deviation) | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TRN1 | TRN2 | TRN3 | TRN4 | TRN5 | TRN6 | TRN7 | TRN8 | TRN9 | |||||||||||

| Healthy Adults | Day 1 | 70.6 | (7.7) | 77.2 | (6.9) | 80.1 | (6.3) | 82.0 | (6.2) | 83.7 | (5.7) | 85.4 | (5.5) | 85.9 | (6.1) | 86.8 | (6.3) | 87.7 | (6.5) |

| Day 2 | 75.0 | (6.8) | 81.7 | (6.8) | 84.8 | (6.8) | 86.4 | (6.4) | 87.6 | (6.0) | 88.4 | (5.9) | 89.1 | (5.7) | 89.9 | (5.4) | 90.3 | (4.7) | |

| Forearm Amputee | Day 1 | 28.1 | (4.3) | 30.4 | (4.6) | 31.4 | (3.0) | 30.8 | (2.1) | 31.8 | (2.3) | 31.3 | (2.7) | 30.7 | (3.7) | 31.2 | (6.0) | 32.8 | (5.7) |

| Day 2 | 34.5 | (4.9) | 36.9 | (5.0) | 40.3 | (4.2) | 40.3 | (2.6) | 42.0 | (2.1) | 43.6 | (3.6) | 44.5 | (4.8) | 44.5 | (9.1) | 48.3 | (9.5) | |

| Day 3 | 45.3 | (3.8) | 48.6 | (4.7) | 50.0 | (3.9) | 49.2 | (5.4) | 49.4 | (4.6) | 51.8 | (4.8) | 54.1 | (2.2) | 56.4 | (3.9) | 59.7 | (10.6) | |

| Day 4 | 67.0 | (3.1) | 70.0 | (2.3) | 68.3 | (3.0) | 71.9 | (3.4) | 72.1 | (2.6) | 74.0 | (3.2) | 75.5 | (4.2) | 78.4 | (5.4) | 80.7 | (11.9) | |

| Day 5 | 58.5 | (5.0) | 61.3 | (5.5) | 61.7 | (4.8) | 62.2 | (6.1) | 63.9 | (2.9) | 65.2 | (4.1) | 70.3 | (3.2) | 72.0 | (5.4) | 76.5 | (11.1) | |

| Classification Accuracy (%): Mean (Standard Deviation) | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| TRN1 | TRN2 | TRN3 | TRN4 | TRN5 | TRN6 | TRN7 | TRN8 | TRN9 | |||||||||||

| Healthy Adults | Day 1 | 75.2 | (7.1) | 81.5 | (5.8) | 84.4 | (4.8) | 86.1 | (4.8) | 87.5 | (4.6) | 88.9 | (4.4) | 89.7 | (4.4) | 90.9 | (4.3) | 91.2 | (4.3) |

| Day 2 | 82.5 | (6.7) | 87.4 | (5.7) | 89.7 | (5.1) | 91.2 | (4.8) | 92.1 | (4.6) | 92.9 | (4.3) | 93.5 | (4.3) | 94.3 | (4.0) | 95.1 | (3.4) | |

| Forearm Amputee | Day 1 | 29.4 | (4.2) | 31.2 | (4.5) | 32.2 | (3.6) | 32.2 | (2.6) | 32.1 | (2.4) | 32.5 | (2.4) | 31.2 | (3.5) | 32.0 | (7.2) | 30.9 | (8.9) |

| Day 2 | 36.3 | (4.2) | 41.0 | (4.5) | 43.0 | (3.5) | 45.2 | (2.7) | 45.5 | (1.8) | 46.9 | (4.7) | 47.7 | (5.0) | 47.3 | (7.3) | 49.5 | (9.0) | |

| Day 3 | 46.3 | (3.9) | 50.8 | (4.9) | 52.7 | (3.9) | 53.2 | (4.1) | 55.8 | (2.6) | 56.5 | (3.9) | 58.8 | (3.9) | 60.2 | (3.1) | 64.3 | (9.8) | |

| Day 4 | 71.0 | (4.4) | 72.5 | (3.0) | 72.7 | (2.7) | 74.1 | (3.9) | 75.3 | (3.1) | 75.9 | (3.4) | 78.2 | (4.0) | 81.1 | (5.7) | 85.5 | (9.8) | |

| Day 5 | 64.8 | (4.0) | 68.2 | (3.5) | 69.3 | (4.2) | 70.6 | (5.8) | 72.6 | (6.0) | 74.9 | (5.3) | 77.9 | (3.1) | 80.1 | (5.7) | 84.2 | (6.7) | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.; Yang, S.; Koo, B.; Lee, S.; Park, S.; Kim, S.; Cho, K.H.; Kim, Y. sEMG-Based Hand Posture Recognition and Visual Feedback Training for the Forearm Amputee. Sensors 2022, 22, 7984. https://doi.org/10.3390/s22207984
