A Novel Pallet Detection Method for Automated Guided Vehicles Based on Point Cloud Data

Abstract

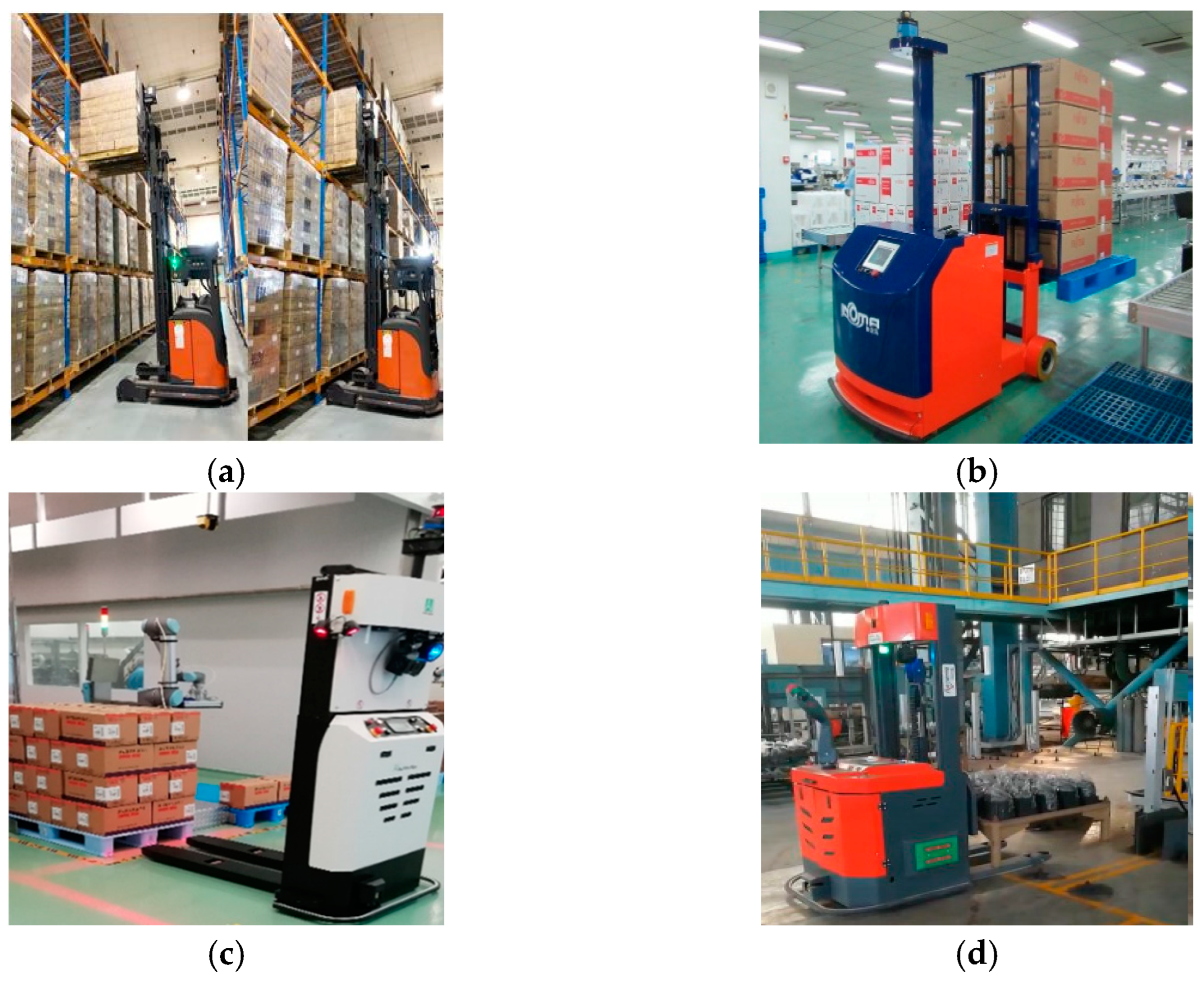

1. Introduction

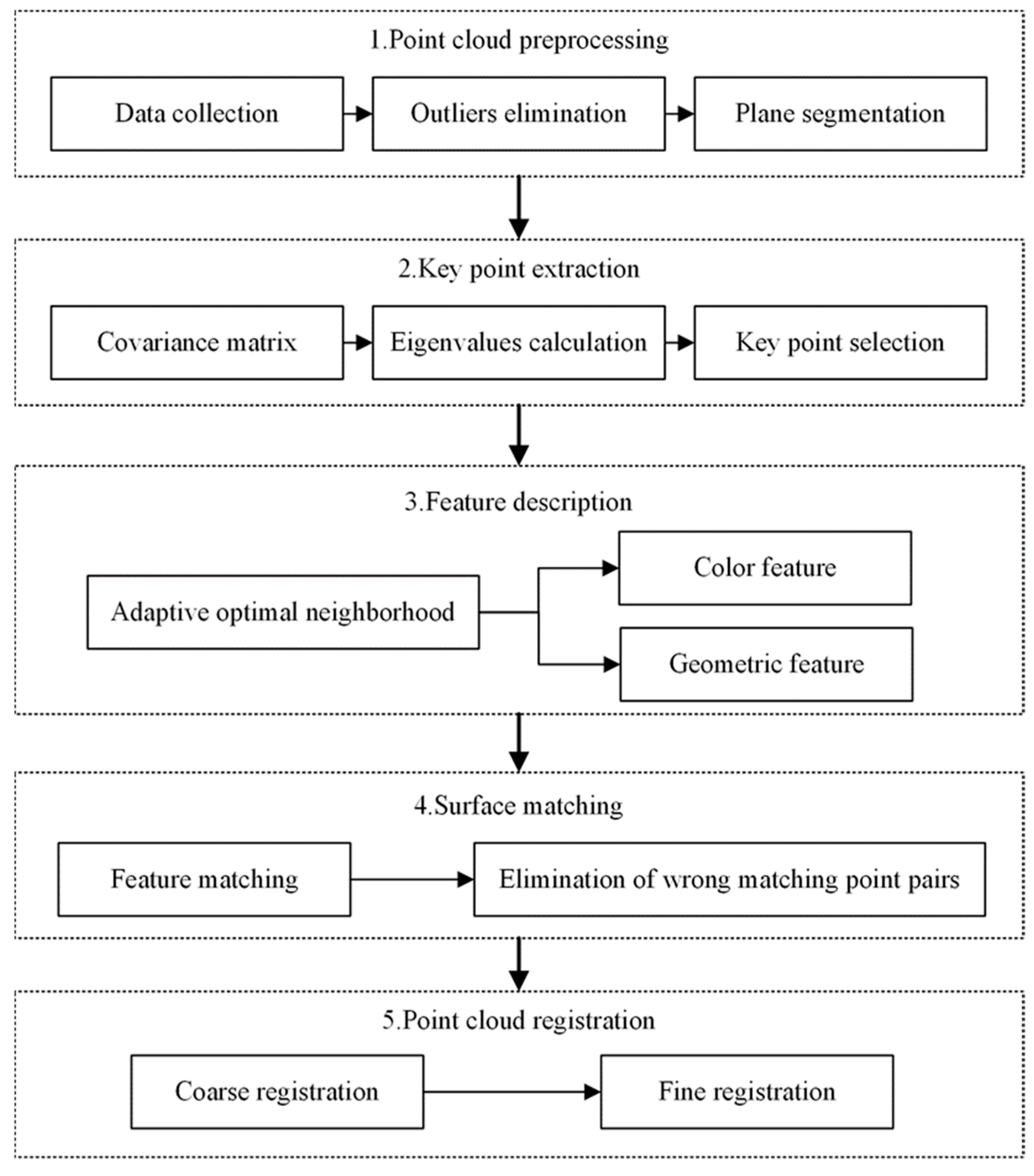

2. The Proposed Method

2.1. Overview of the Proposed Method

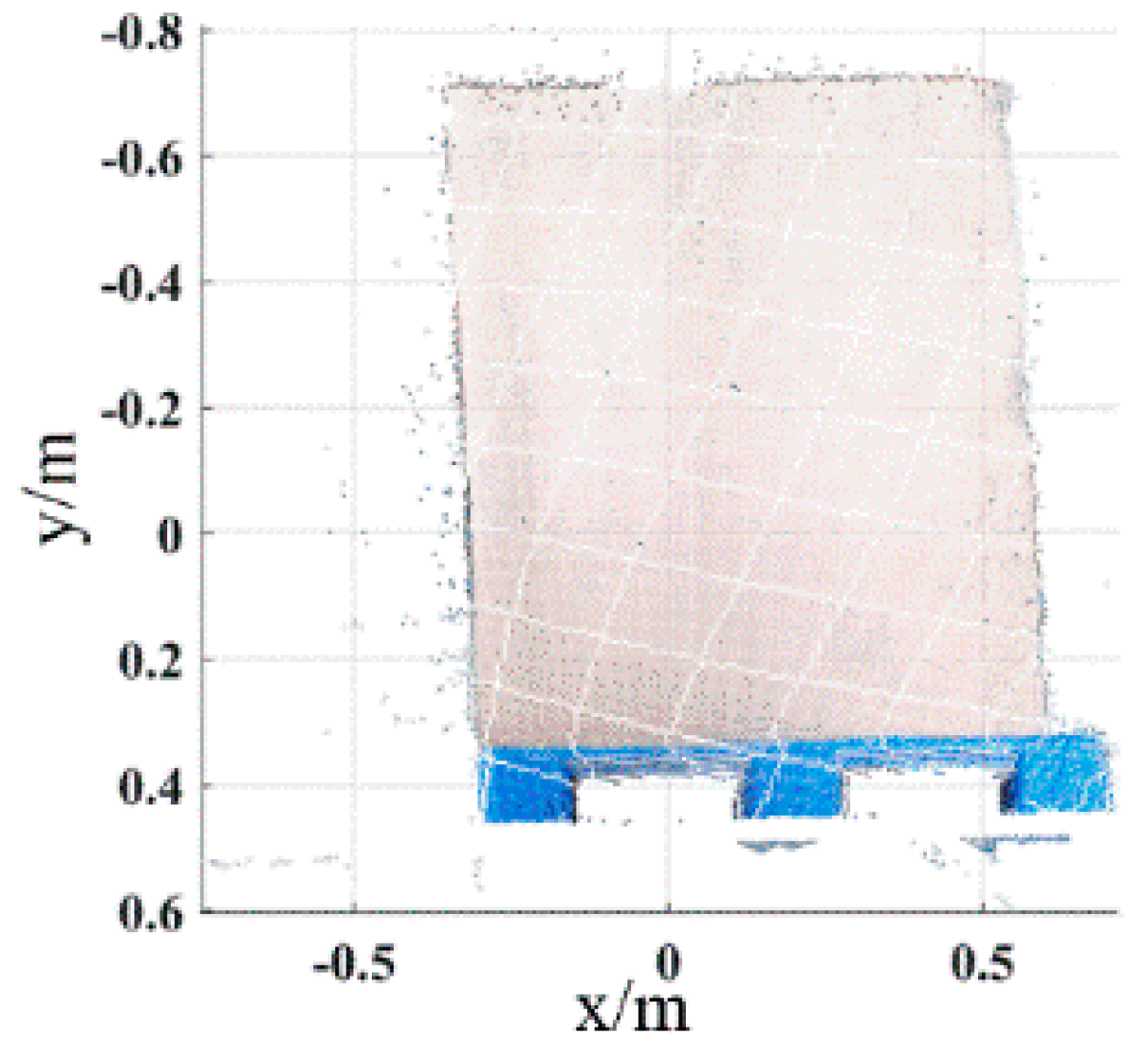

2.2. Point Cloud Preprocessing

2.2.1. Outliers Elimination

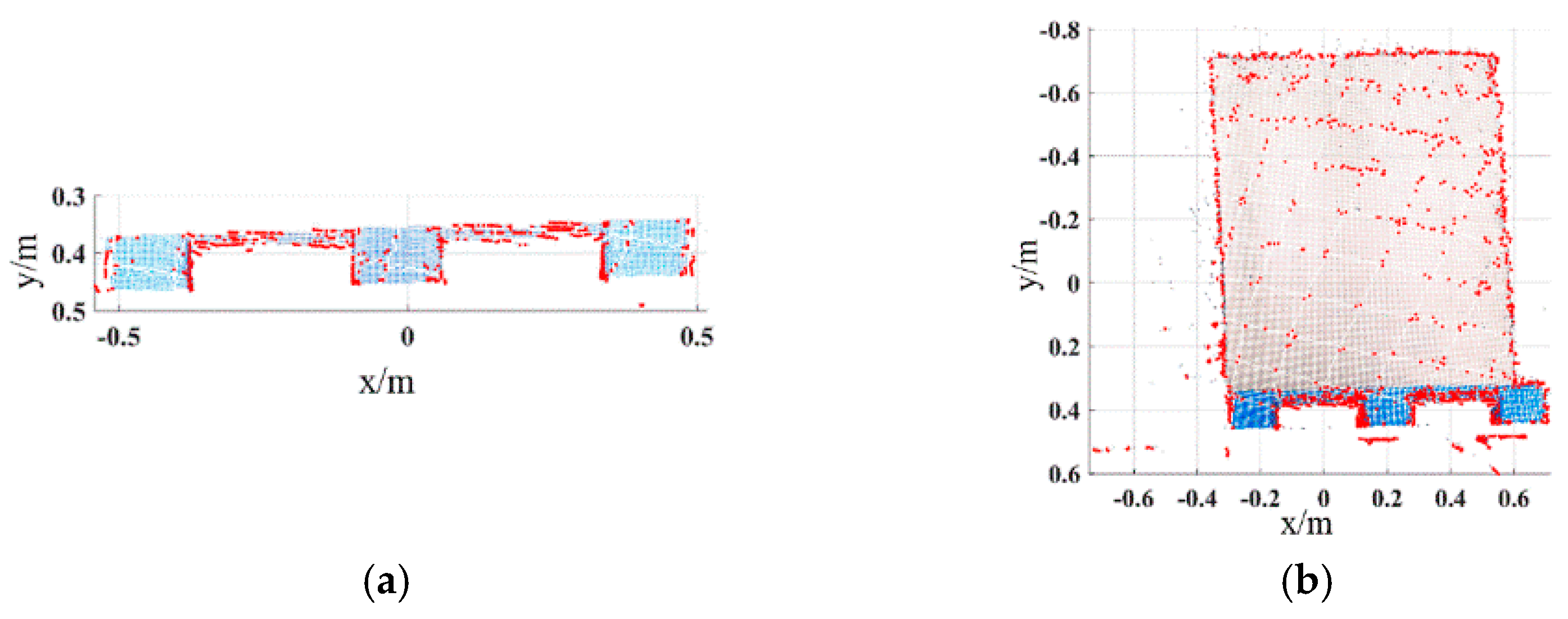

2.2.2. Plane Segmentation

2.3. Key Point Extraction

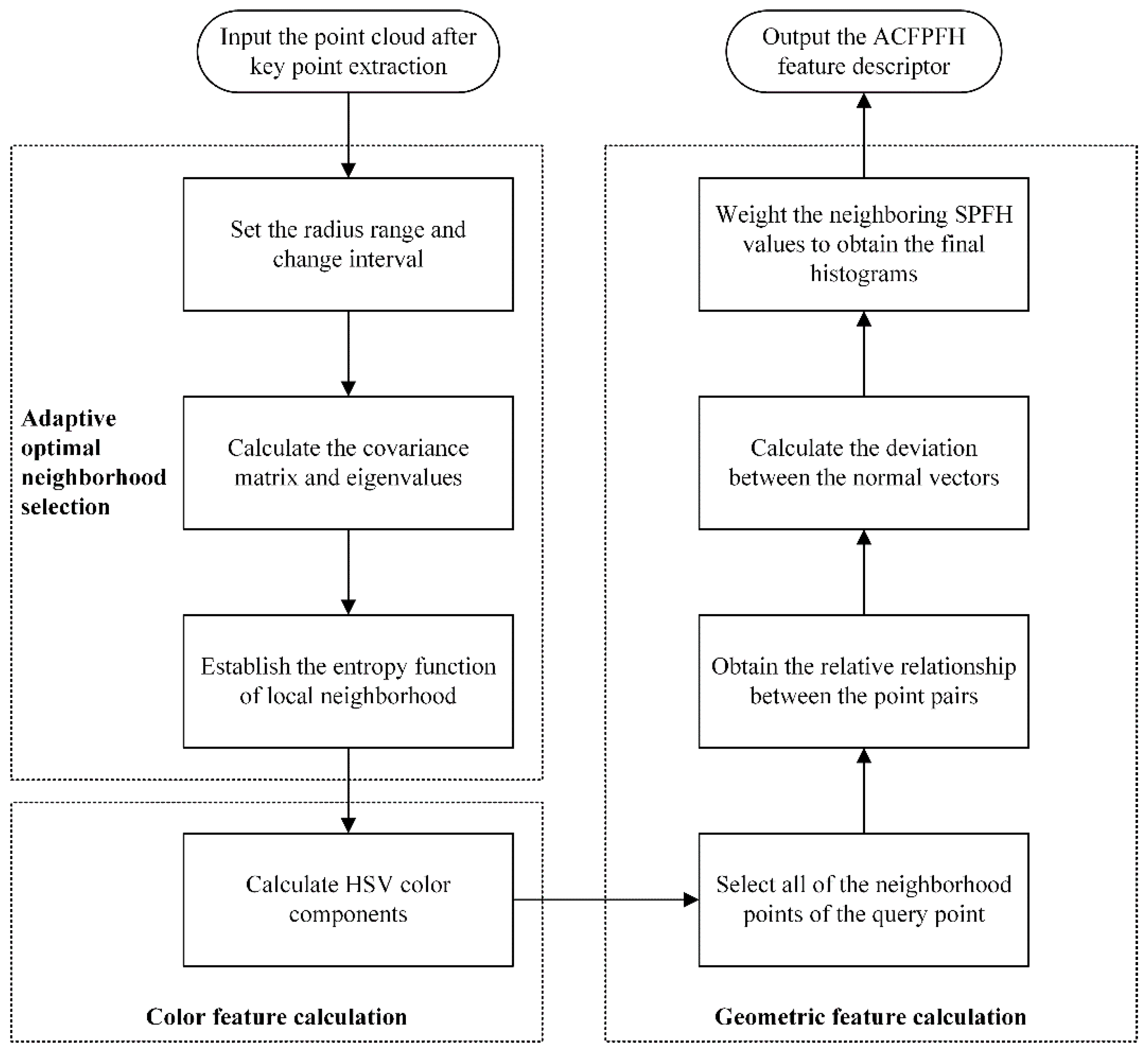

2.4. Feature Description

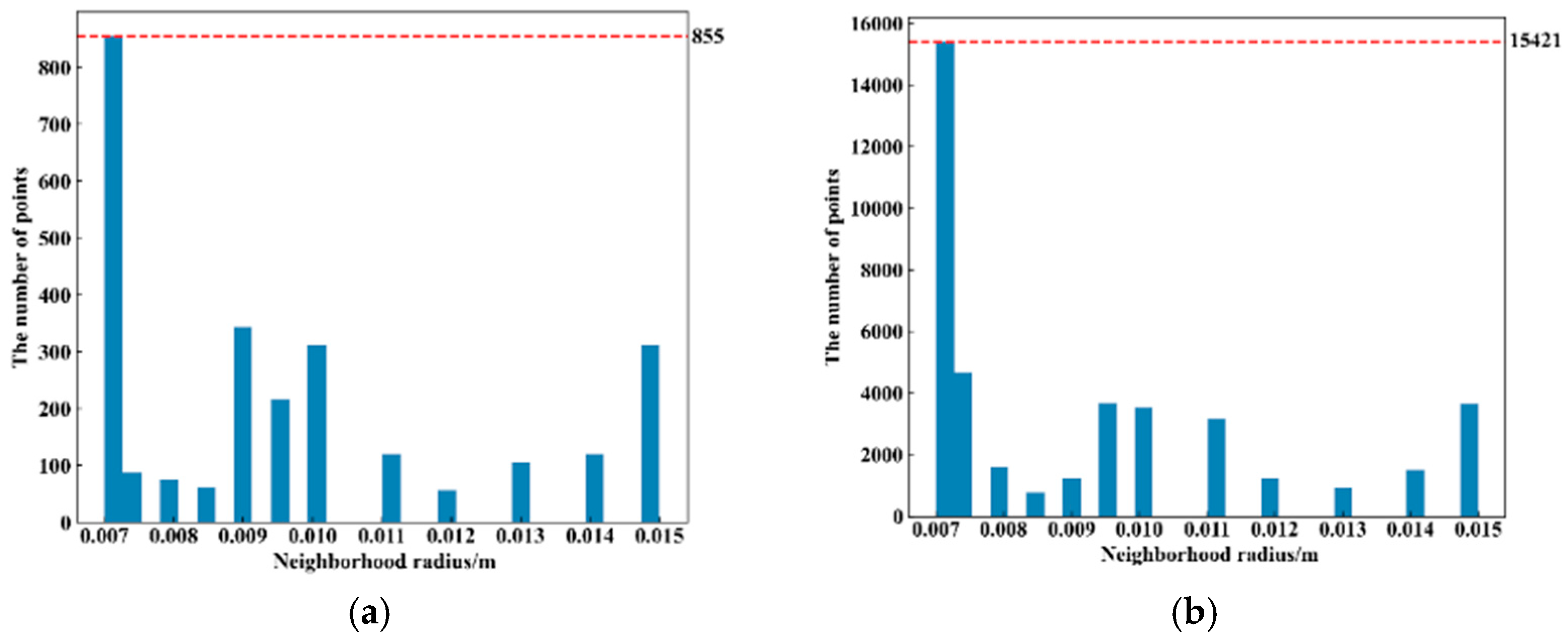

2.4.1. Adaptive Optimal Neighborhood Selection

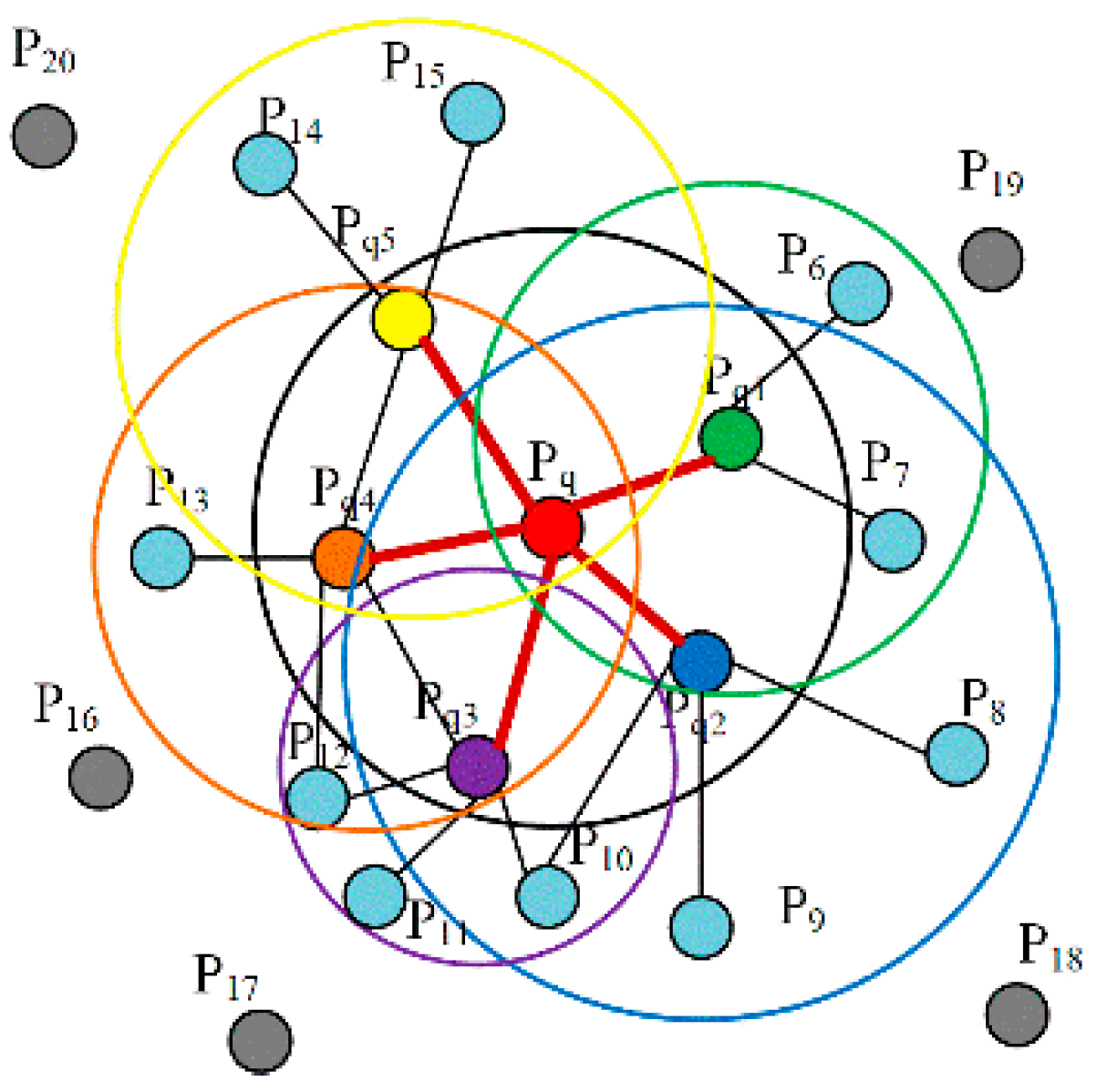

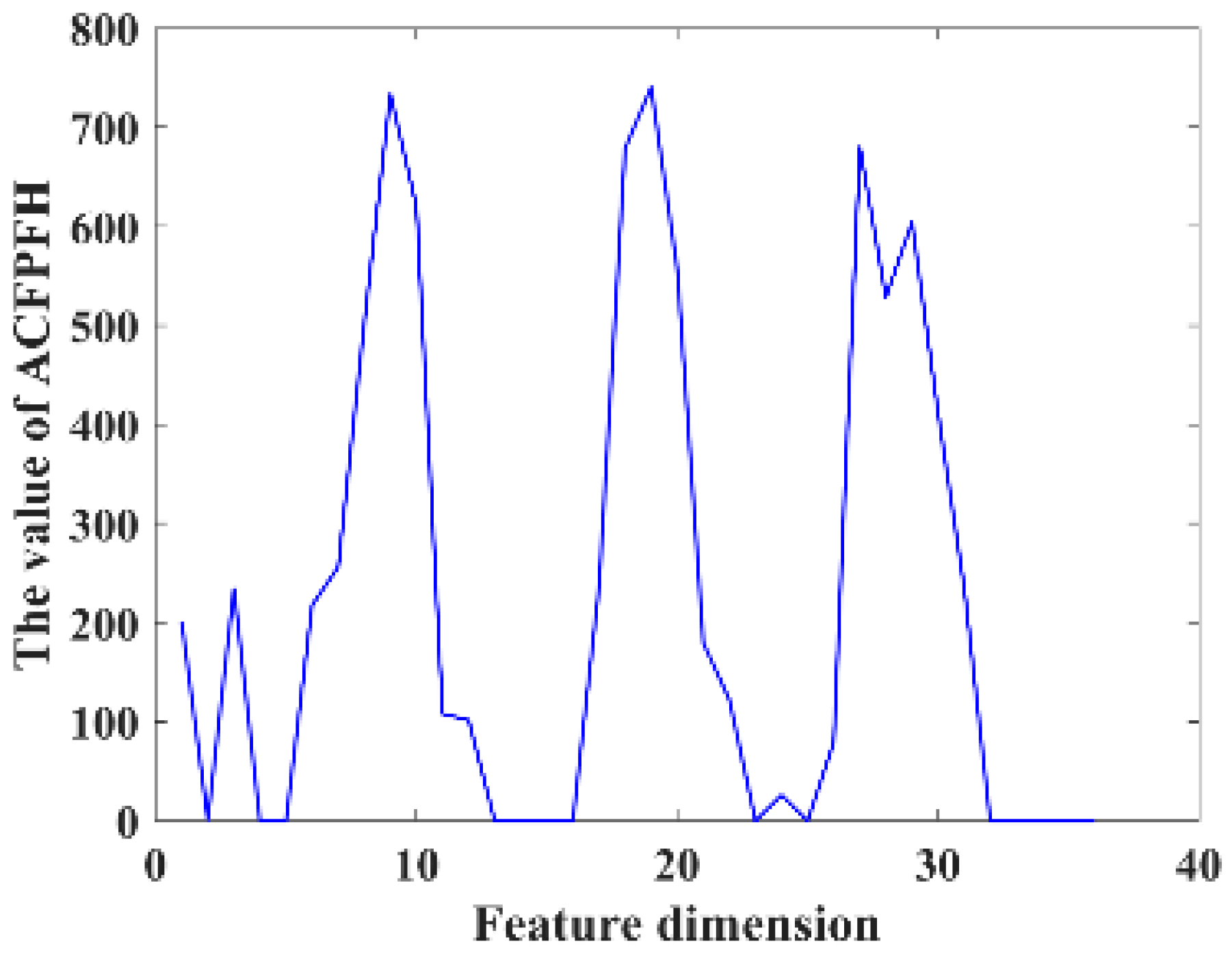

2.4.2. ACFPFH Feature Description

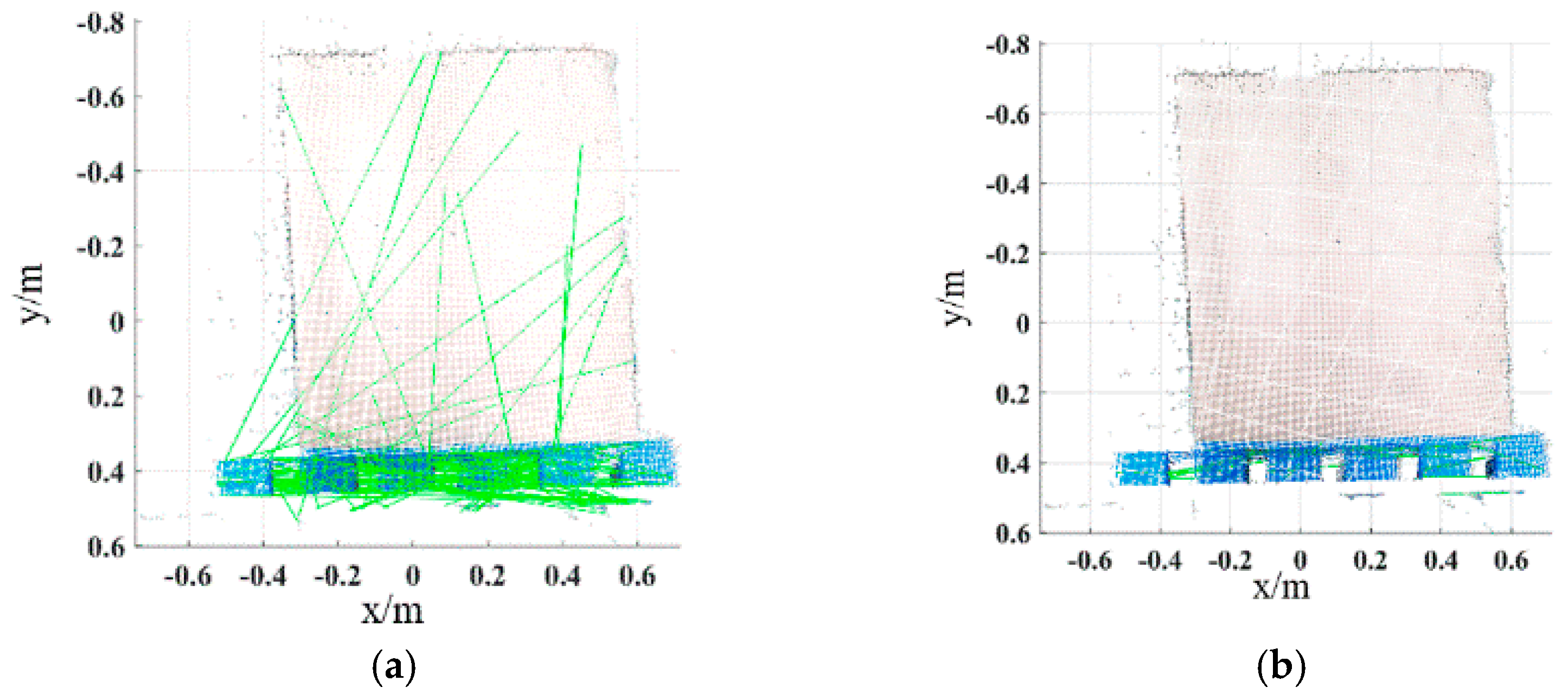

2.5. Surface Matching

2.6. Point Cloud Registration

2.6.1. Coarse Registration

2.6.2. Fine Registration

3. Case Studies

3.1. Evaluation Index

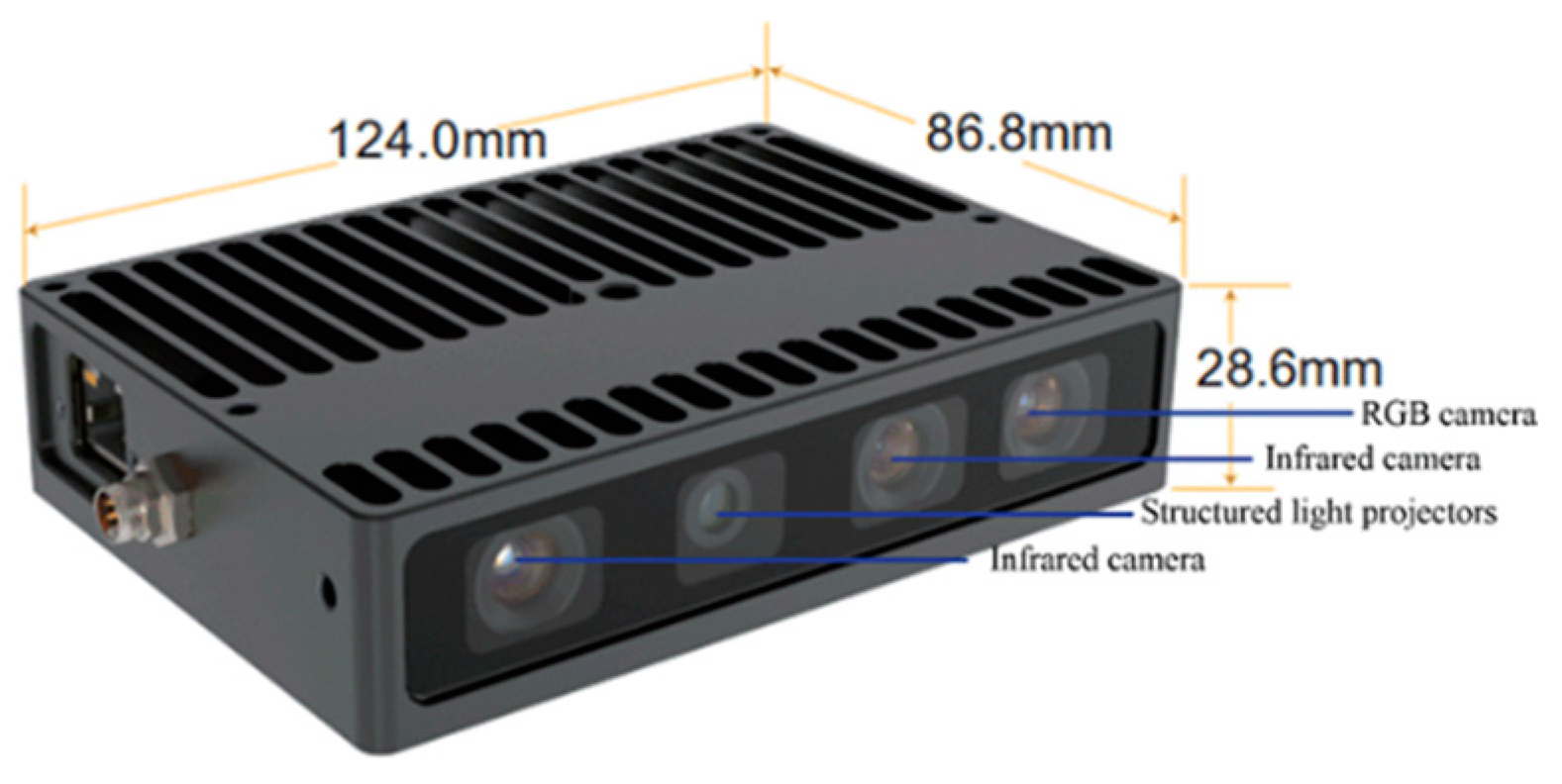

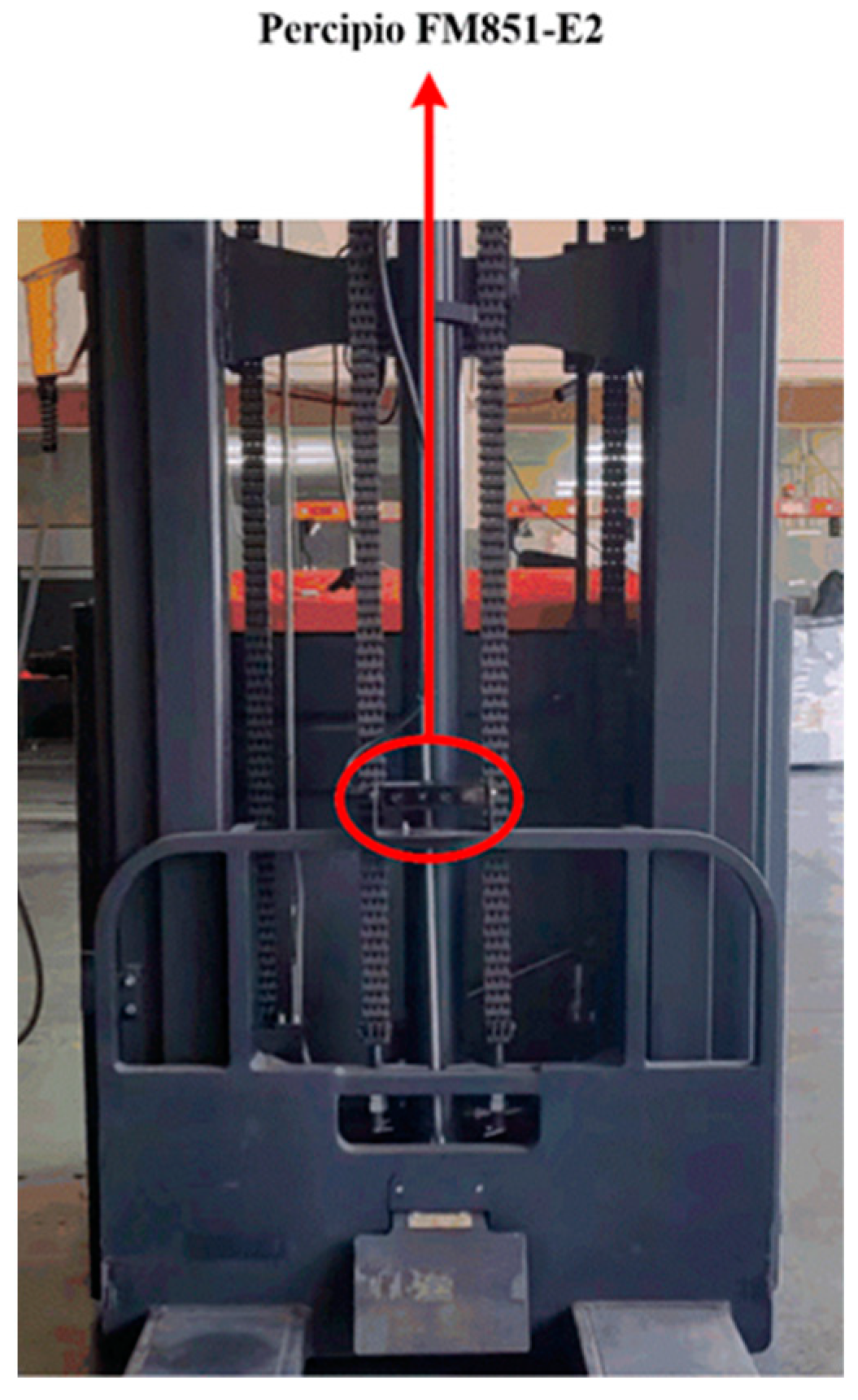

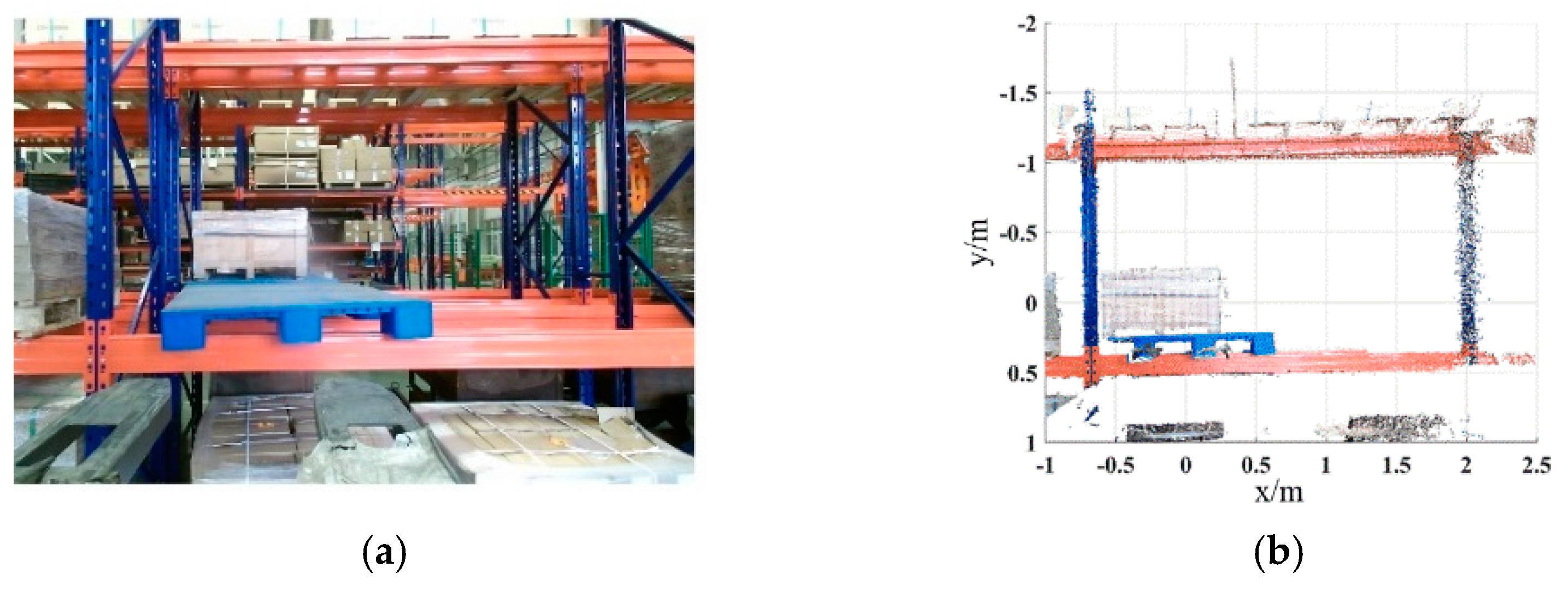

3.2. Experiment Preparation

3.3. Case Study I

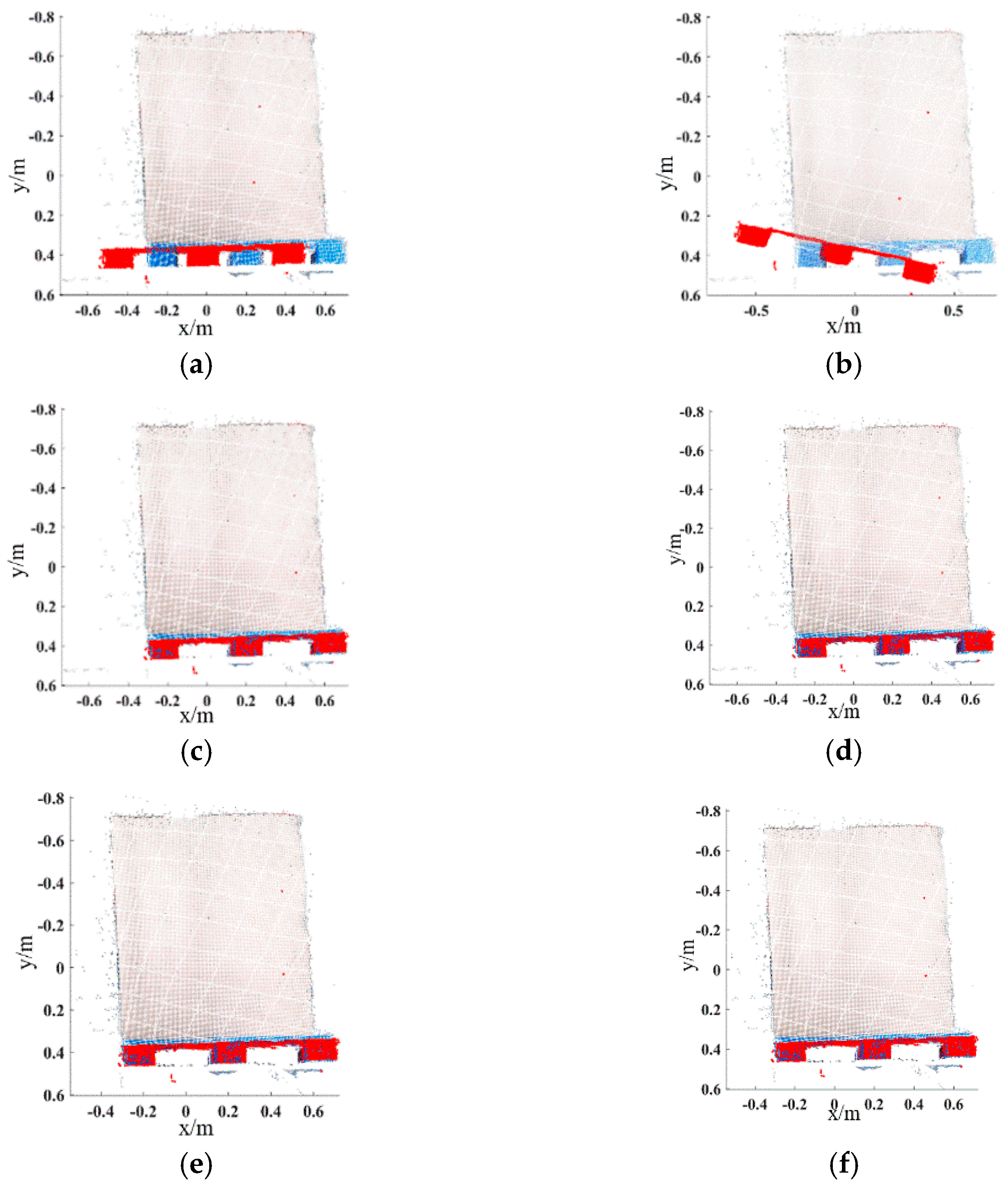

3.3.1. Implementation Process

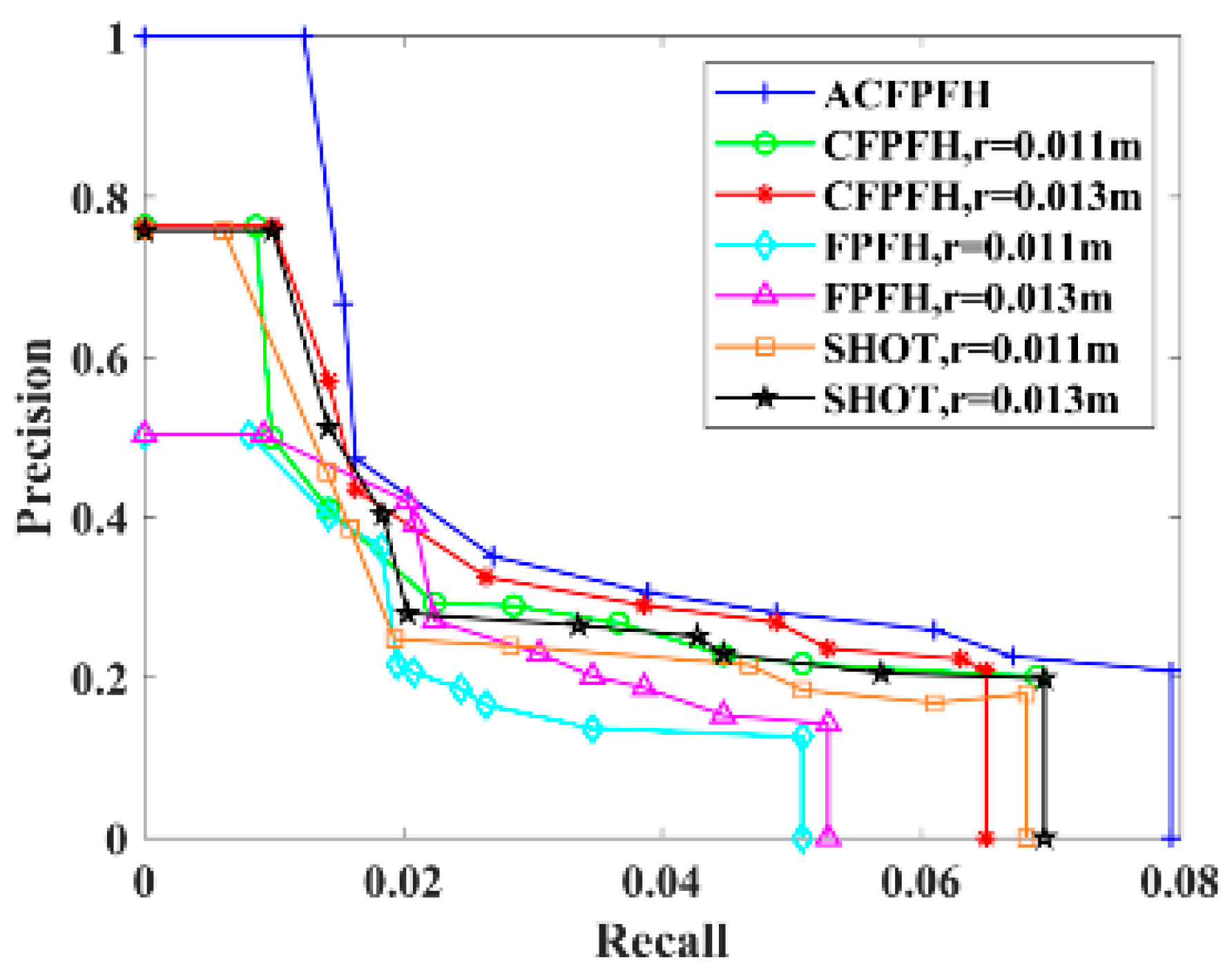

3.3.2. Performance Evaluation

3.4. Case Study II

4. Conclusions

- A novel pallet detection method for automated guided vehicles (AGVs) based on point cloud data is proposed. It enables AGVs to handle pallets automatically and effectively, thereby promoting the transformation and upgrading of the manufacturing industry.

- A new Adaptive Color Fast Point Feature Histogram (ACFPFH) feature descriptor is built to describe pallet features. It addresses shortcomings of existing feature description such as low efficiency, long computation time, poor robustness, and arbitrary parameter selection.

- A new surface matching method, the Bidirectional Nearest Neighbor Distance Ratio-Approximate Congruent Triangle Neighborhood (BNNDR-ACTN), is proposed. It transforms the point-to-point matching problem into a neighborhood matching problem, which captures more feature information and improves detection accuracy.
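
The bidirectional nearest neighbor distance ratio idea named in the last point can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the ratio threshold of 0.8, and the use of Euclidean distance between descriptor vectors are all assumptions made for illustration, and the ACTN neighborhood check is not covered.

```python
import math


def nndr_matches(src, dst, ratio=0.8):
    """Map each src index to its nearest dst index if it passes the ratio test.

    src, dst: lists of descriptor vectors (tuples of floats).
    ratio: assumed threshold on (nearest distance / second-nearest distance).
    """
    matches = {}
    for i, desc in enumerate(src):
        dists = sorted((math.dist(desc, other), j) for j, other in enumerate(dst))
        if len(dists) < 2:
            continue  # the ratio test needs two candidates
        (d1, j1), (d2, _) = dists[0], dists[1]
        # Keep the match only when the best candidate is clearly better
        # than the runner-up.
        if d2 > 0 and d1 / d2 < ratio:
            matches[i] = j1
    return matches


def bidirectional_nndr(src, dst, ratio=0.8):
    """Keep only pairs that pass the ratio test in both directions."""
    forward = nndr_matches(src, dst, ratio)
    backward = nndr_matches(dst, src, ratio)
    return sorted((i, j) for i, j in forward.items() if backward.get(j) == i)


if __name__ == "__main__":
    # Toy 2-D "descriptors"; real descriptors would have 33 or 36 dimensions.
    source = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0), (0.55, 0.0)]
    target = [(0.1, 0.0), (5.0, 5.1), (1.0, 0.1)]
    print(bidirectional_nndr(source, target))
```

In the toy data, the fourth source descriptor lies almost midway between two target descriptors, so it fails the ratio test and is dropped, while the three unambiguous pairs survive the mutual check.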

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Eigenvalue Relation | Dimensionality Feature | Eigenvalue Relation |
|---|---|---|
| | Linearity feature | |
| | Planarity feature | |
| | Scattering feature | |
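
The eigenvalue expressions of the table above did not survive in this copy. As a hedged illustration only, the sketch below uses one commonly used eigenvalue-based formulation of linearity, planarity, and scattering, together with an entropy criterion for choosing a neighborhood radius. The formulas, the function names, and the candidate radii are assumptions and may differ from the definitions used in the paper.

```python
import math


def dimensionality_features(l1, l2, l3):
    """Return (linearity, planarity, scattering) from sorted eigenvalues.

    l1 >= l2 >= l3 >= 0 are assumed to be the eigenvalues of the covariance
    matrix of a point's neighborhood. The three features sum to 1.
    """
    if not (l1 >= l2 >= l3 >= 0) or l1 == 0:
        raise ValueError("expected l1 >= l2 >= l3 >= 0 with l1 > 0")
    linearity = (l1 - l2) / l1
    planarity = (l2 - l3) / l1
    scattering = l3 / l1
    return linearity, planarity, scattering


def dimensionality_entropy(features):
    """Shannon entropy of the three features; lower means one feature dominates."""
    return -sum(f * math.log(f) for f in features if f > 0)


def pick_radius(eigenvalues_by_radius):
    """Pick the candidate radius whose neighborhood has the lowest entropy.

    eigenvalues_by_radius: {radius_in_m: (l1, l2, l3)} (hypothetical input).
    """
    return min(
        eigenvalues_by_radius,
        key=lambda r: dimensionality_entropy(
            dimensionality_features(*eigenvalues_by_radius[r])
        ),
    )


if __name__ == "__main__":
    candidates = {
        0.011: (1.0, 0.9, 0.8),    # nearly isotropic neighborhood
        0.013: (1.0, 0.95, 0.01),  # clearly planar neighborhood
    }
    print(pick_radius(candidates))
```

With these made-up eigenvalues the 0.013 m neighborhood is selected, because its features are dominated by planarity and its entropy is therefore lower.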

| Name | Feature Dimension | Neighborhood Radius/m | Recall | Precision | Accuracy Comparison of ACFPFH with Other Feature Descriptors (%) |
|---|---|---|---|---|---|
| SHOT | 352 | 0.011 | 0.0193 | 0.2481 | 29.40 |
| SHOT | 352 | 0.013 | 0.0203 | 0.2798 | 20.38 |
| FPFH | 33 | 0.011 | 0.0183 | 0.2140 | 39.10 |
| FPFH | 33 | 0.013 | 0.0224 | 0.2712 | 22.82 |
| CFPFH | 36 | 0.011 | 0.0219 | 0.2928 | 16.68 |
| CFPFH | 36 | 0.013 | 0.0264 | 0.3256 | 7.34 |
| ACFPFH | 36 | Adaptive | 0.0269 | 0.3514 | / |
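
The last column of the table above is not defined in this section. The numbers are consistent with a relative precision gain normalized by the ACFPFH precision, which the following sketch reproduces. The formula is inferred from the table values rather than stated by the authors, and the names used are illustrative.

```python
# Precision values copied from the table above.
ACFPFH_PRECISION = 0.3514
BASELINE_PRECISION = {
    ("SHOT", 0.011): 0.2481,
    ("SHOT", 0.013): 0.2798,
    ("FPFH", 0.011): 0.2140,
    ("FPFH", 0.013): 0.2712,
    ("CFPFH", 0.011): 0.2928,
    ("CFPFH", 0.013): 0.3256,
}


def precision_gain_percent(ours, other):
    """Relative gain of `ours` over `other`, normalized by `ours` (assumed)."""
    return (ours - other) / ours * 100.0


precision_gains = {
    key: round(precision_gain_percent(ACFPFH_PRECISION, value), 2)
    for key, value in BASELINE_PRECISION.items()
}

if __name__ == "__main__":
    for (name, radius), gain in precision_gains.items():
        print(f"{name:5s} r={radius:.3f} m  gain={gain:.2f}%")
```

Under this assumed definition, the computed values match all six entries of the comparison column to two decimal places.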

| Name | Feature Dimension | Neighborhood Radius/m | Feature Extraction Time/s | Time Comparison of ACFPFH with Other Feature Descriptors (%) |
|---|---|---|---|---|
| SHOT | 352 | 0.011 | 0.151 | 14.57 |
| SHOT | 352 | 0.013 | 0.185 | 30.27 |
| FPFH | 33 | 0.011 | 0.145 | 11.03 |
| FPFH | 33 | 0.013 | 0.174 | 25.86 |
| CFPFH | 36 | 0.011 | 0.159 | 18.87 |
| CFPFH | 36 | 0.013 | 0.193 | 33.16 |
| ACFPFH | 36 | Adaptive | 0.129 | / |
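
For the timing table above, the comparison column appears to be the time saved by ACFPFH relative to each baseline's own extraction time. Here the denominator is the baseline value, not the ACFPFH value. This reading is inferred from the numbers, not stated in this section, and the names in the sketch are illustrative.

```python
# Feature extraction times in seconds, copied from the table above.
ACFPFH_TIME_S = 0.129
BASELINE_TIME_S = {
    ("SHOT", 0.011): 0.151,
    ("SHOT", 0.013): 0.185,
    ("FPFH", 0.011): 0.145,
    ("FPFH", 0.013): 0.174,
    ("CFPFH", 0.011): 0.159,
    ("CFPFH", 0.013): 0.193,
}


def time_saving_percent(ours, other):
    """Share of the baseline time that is saved (assumed definition)."""
    return (other - ours) / other * 100.0


time_savings = {
    key: round(time_saving_percent(ACFPFH_TIME_S, value), 2)
    for key, value in BASELINE_TIME_S.items()
}

if __name__ == "__main__":
    for (name, radius), saving in time_savings.items():
        print(f"{name:5s} r={radius:.3f} m  saving={saving:.2f}%")
```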

| Method | Number of Iterations | RMSE | Runtime/s |
|---|---|---|---|
| Traditional ICP | 113 | 0.040344 | 27.256 |
| SHOT + ICP | 82 | 0.024791 | 0.986 |
| FPFH + ICP | 24 | 0.026589 | 0.948 |
| CFPFH + ICP | 44 | 0.021559 | 1.039 |
| ACFPFH | 26 | 0.009251 | 0.853 |
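
The RMSE column of the table above is not defined in this section. A common way to score a registration result is the root mean square distance between corresponding point pairs after alignment. The sketch below assumes that definition and assumes the correspondences are already known; the function name and the sample points are hypothetical.

```python
import math


def registration_rmse(aligned_source, target):
    """Root mean square distance between corresponding 3-D points.

    aligned_source: source points after applying the estimated transform.
    target: matching target points, in the same order.
    """
    if not aligned_source or len(aligned_source) != len(target):
        raise ValueError("point lists must be non-empty and of equal length")
    squared = sum(math.dist(p, q) ** 2 for p, q in zip(aligned_source, target))
    return math.sqrt(squared / len(aligned_source))


if __name__ == "__main__":
    # Made-up points for illustration, not data from the experiments.
    src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    dst = [(0.0, 0.0, 0.03), (1.0, 0.04, 0.0)]
    print(f"{registration_rmse(src, dst):.6f}")
```

A lower value means the aligned source points lie closer to their target counterparts, which is how the rows of the table would be compared under this assumed definition.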

| Method | Number of Iterations | RMSE | Runtime/s |
|---|---|---|---|
| Traditional ICP | 68 | 0.041553 | 29.523 |
| SHOT + ICP | 49 | 0.025987 | 1.174 |
| FPFH + ICP | 32 | 0.026751 | 1.118 |
| CFPFH + ICP | 36 | 0.018954 | 1.326 |
| ACFPFH | 23 | 0.009032 | 0.989 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shao, Y.; Fan, Z.; Zhu, B.; Zhou, M.; Chen, Z.; Lu, J. A Novel Pallet Detection Method for Automated Guided Vehicles Based on Point Cloud Data. Sensors 2022, 22, 8019. https://doi.org/10.3390/s22208019
