Active Decision Support System for Observation Scheduling Based on Image Analysis at the BOROWIEC SLR Station

Abstract

1. Introduction

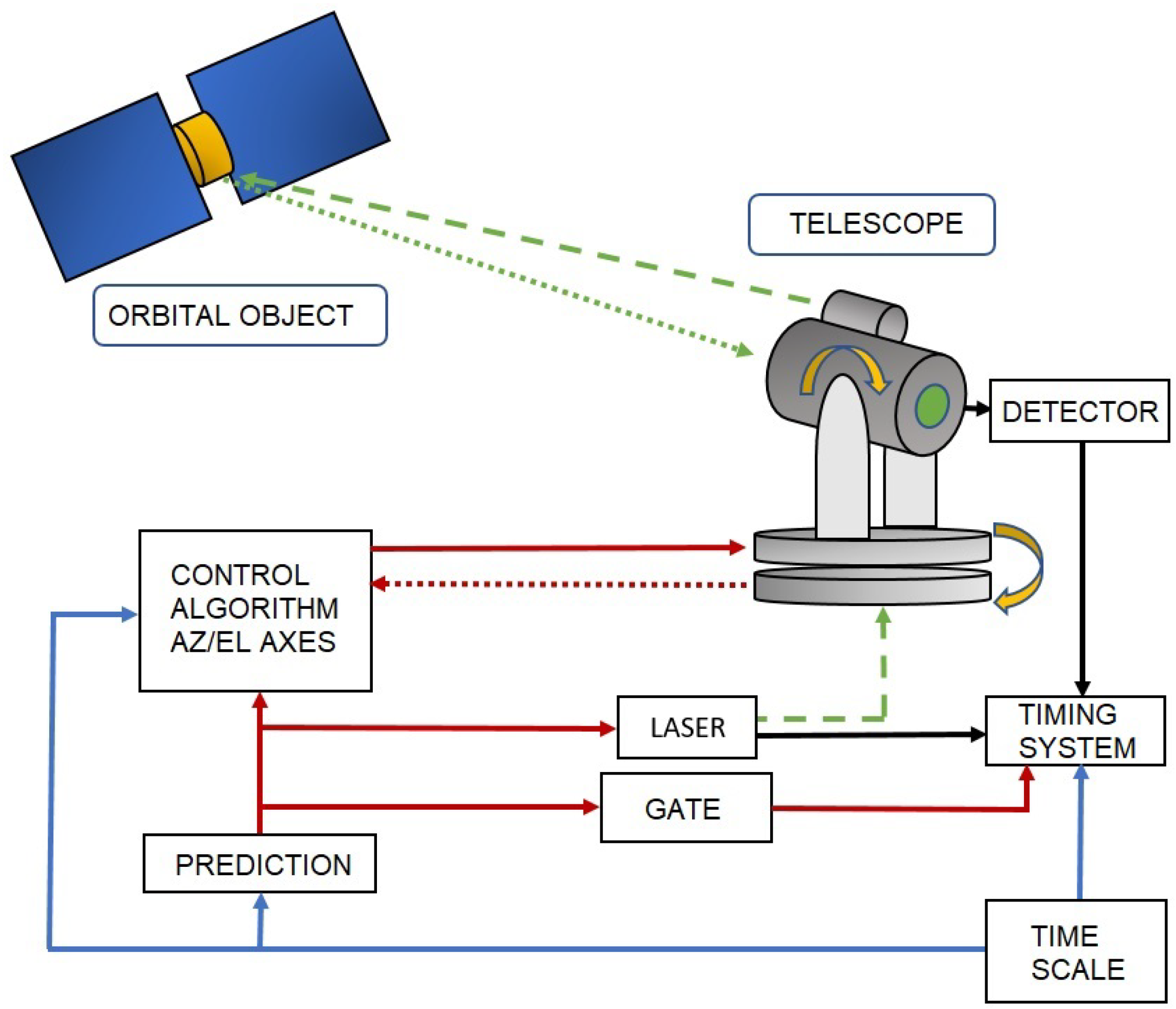

2. Satellite Laser Ranging Process

2.1. Types and Characteristics of Tracked Orbital Objects

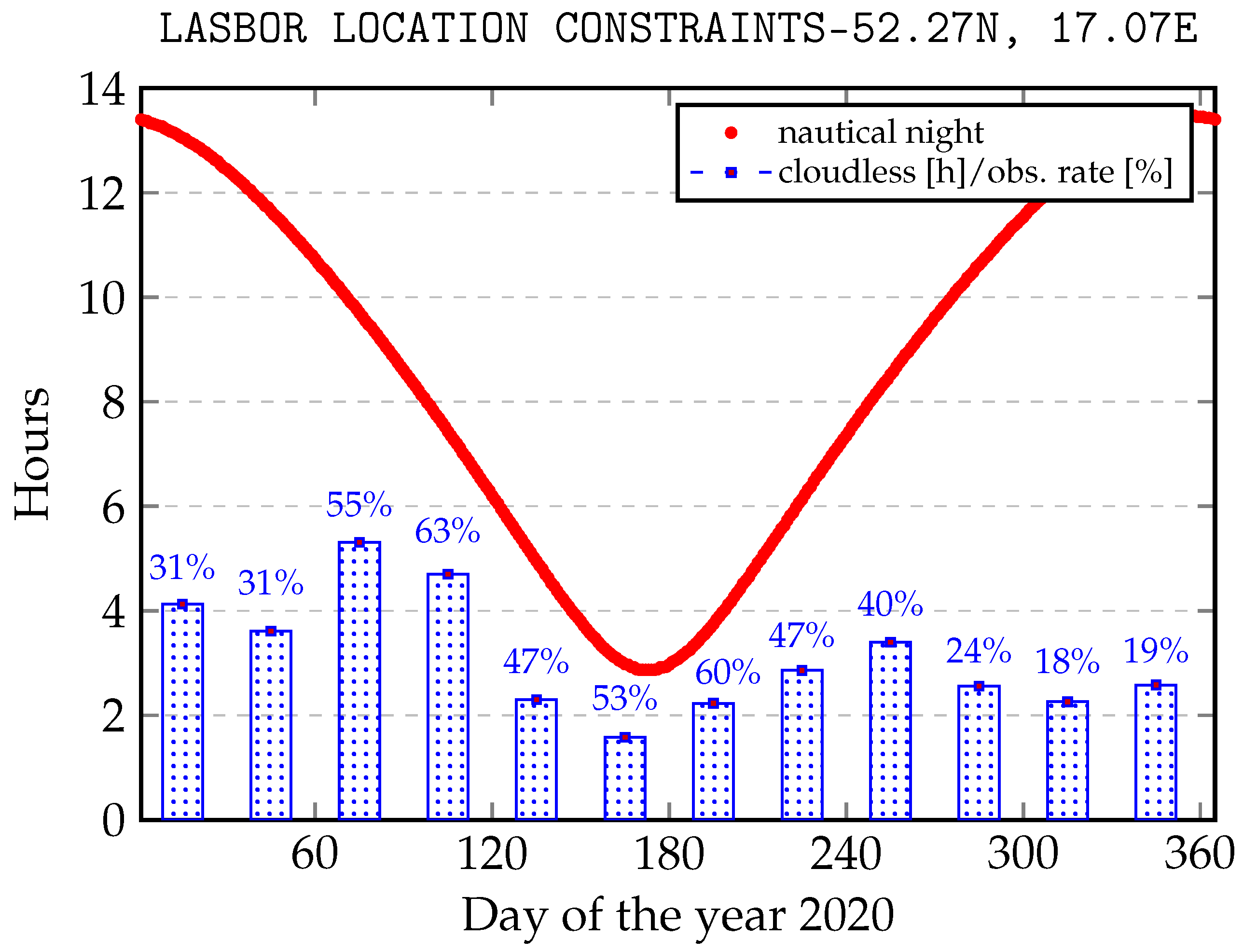

2.2. Weather Conditions and Operational Time

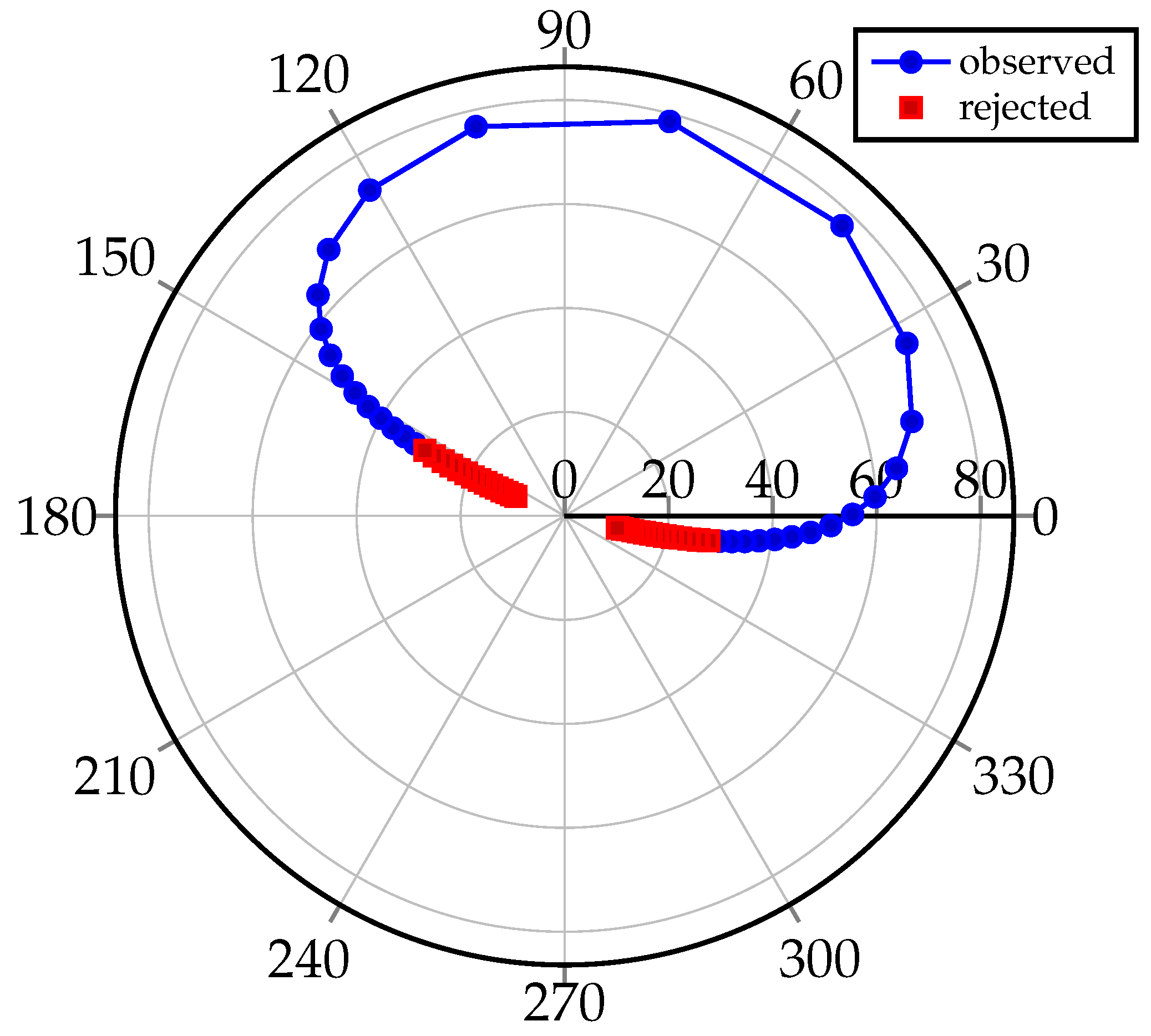

2.3. Observation Load

3. Active Decision Support System for Observation Scheduling and Observation Safety

3.1. Data Structures

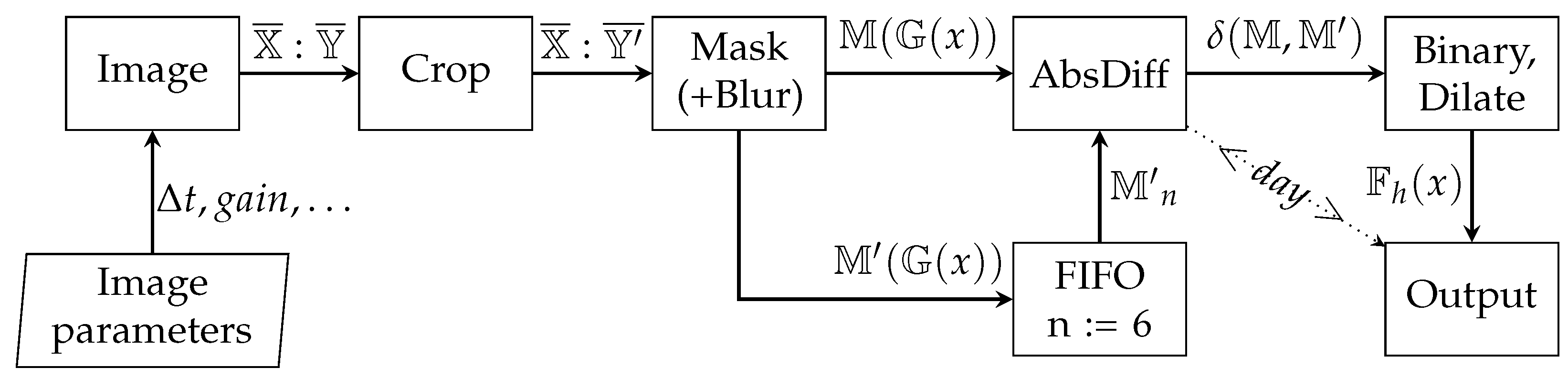

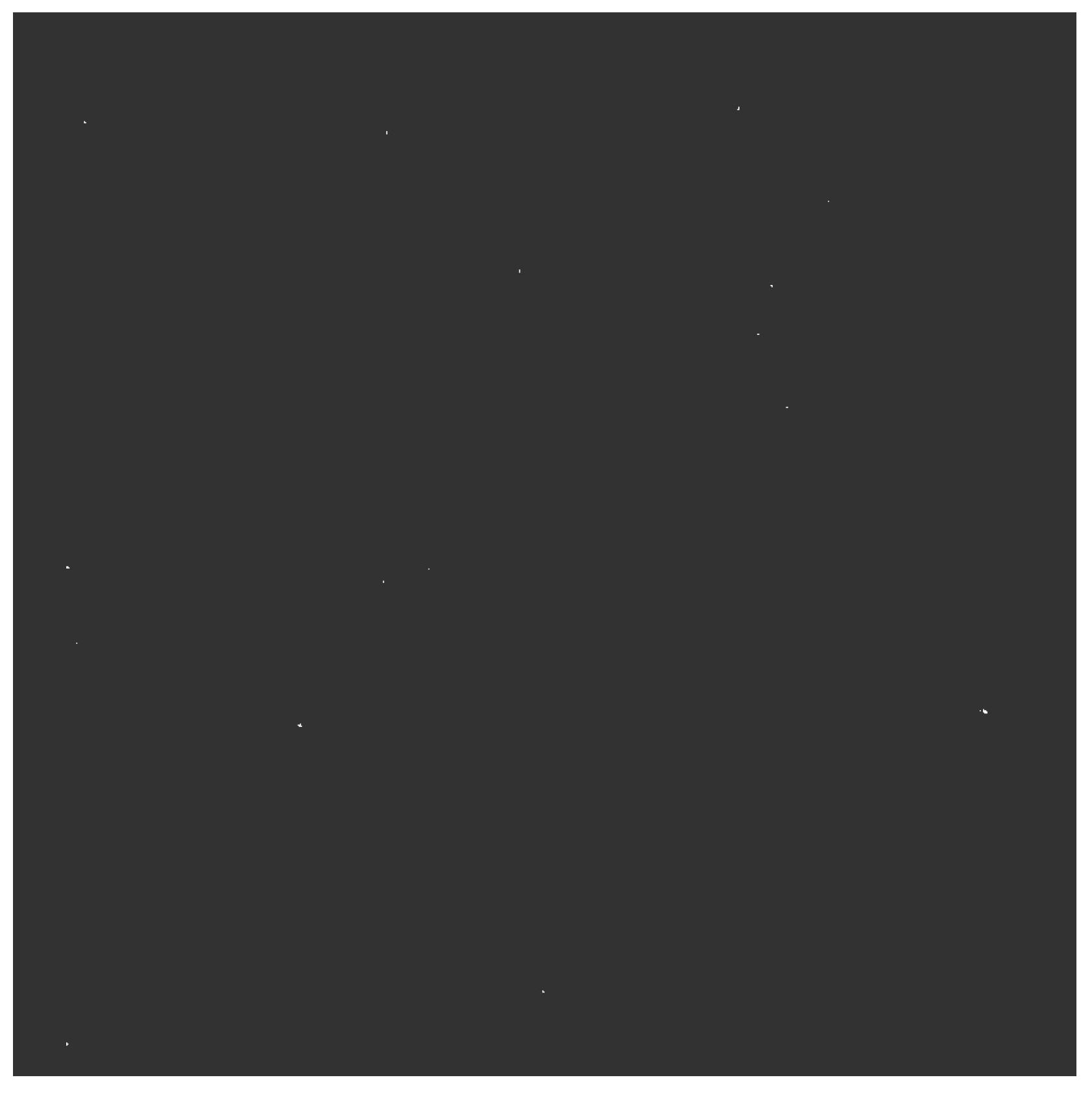

3.2. Image Processing

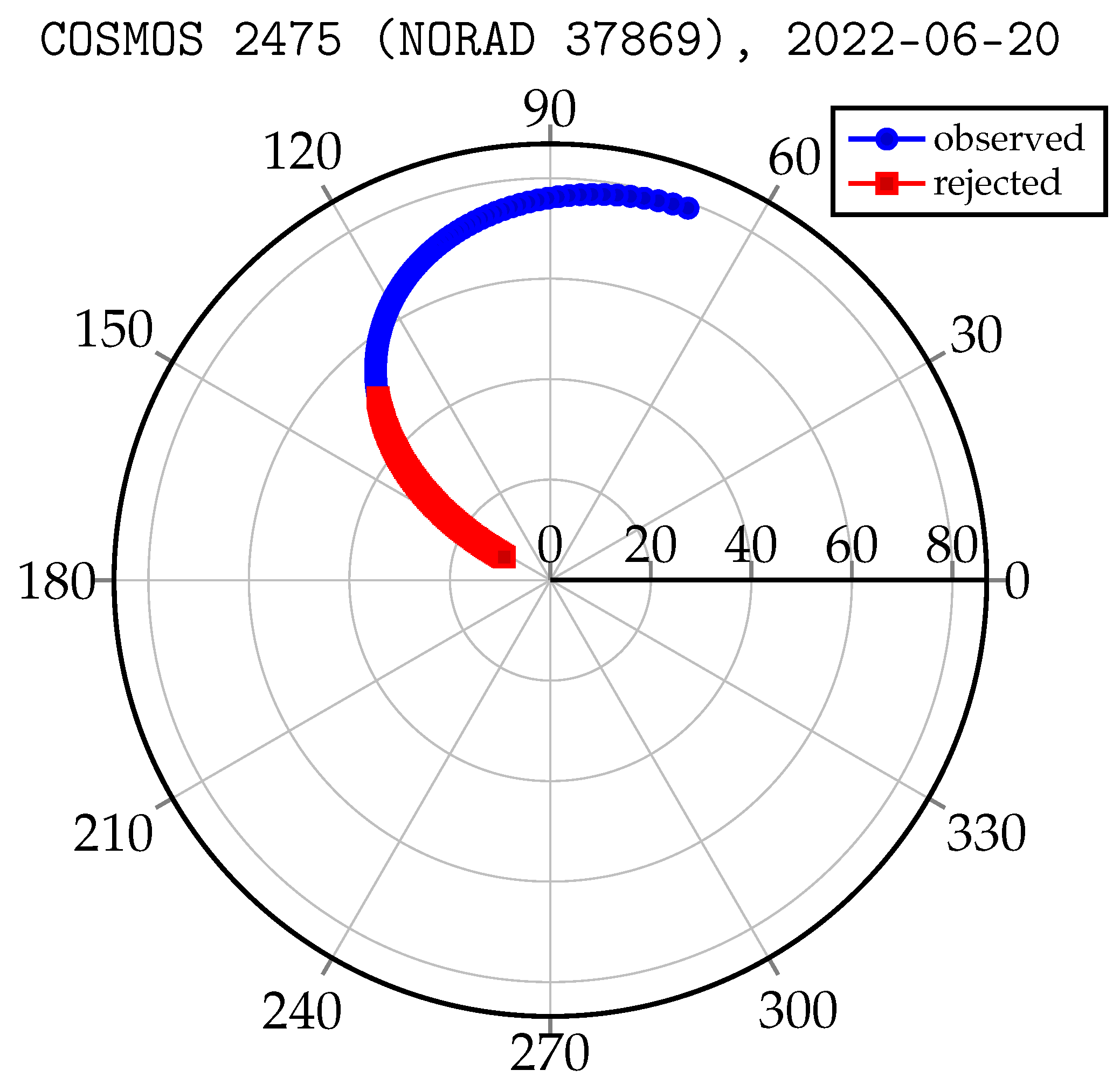

4. Experiment

4.1. Test Platform

4.2. Active Decision Support System Operational Test

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Year | Payload | Rocket Body | Payload Fragments Debris | Other Types ¹ |
|---|---|---|---|---|
| 2021 | 7849 | 1990 | 7629 | 12,557 |
| 2020 | 6263 | 1957 | 6686 | 12,419 |
| 2015 | 3864 | 1965 | 6035 | 5789 |
| 2010 | 3268 | 1621 | 6191 | 4164 |
| 2005 | 2709 | 1391 | 1068 | 3595 |
| 2000 | 2493 | 1351 | 945 | 3438 |
| 1990 | 1708 | 1029 | 1030 | 2493 |
| 1980 | 938 | 584 | 547 | 2018 |
| 1970 | 402 | 209 | 196 | 1170 |
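
To illustrate how quickly the population of orbital objects has grown, the counts in the table above can be totalled per year. The Python sketch below is illustrative only: `COUNTS` is a hand transcription of the table, the helpers `yearly_totals` and `column_change` are hypothetical names, and each "total" is simply the sum of the four listed columns, not necessarily the complete catalogue population.

```python
# Hand transcription of the table above (illustrative only):
# year -> (payload, rocket body, payload fragments debris, other types)
COUNTS = {
    2021: (7849, 1990, 7629, 12557),
    2020: (6263, 1957, 6686, 12419),
    2015: (3864, 1965, 6035, 5789),
    2010: (3268, 1621, 6191, 4164),
    2005: (2709, 1391, 1068, 3595),
    2000: (2493, 1351, 945, 3438),
    1990: (1708, 1029, 1030, 2493),
    1980: (938, 584, 547, 2018),
    1970: (402, 209, 196, 1170),
}

PAYLOAD = 0  # column index of the payload counts in each tuple


def yearly_totals(counts: dict[int, tuple[int, ...]]) -> dict[int, int]:
    """Hypothetical helper: sum the four listed categories for each year."""
    return {year: sum(row) for year, row in counts.items()}


def column_change(counts: dict[int, tuple[int, ...]], column: int, start: int, end: int) -> int:
    """Hypothetical helper: absolute change in one category between two listed years."""
    return counts[end][column] - counts[start][column]


if __name__ == "__main__":
    for year, total in sorted(yearly_totals(COUNTS).items()):
        print(year, total)
    print("payload change 2020->2021:", column_change(COUNTS, PAYLOAD, 2020, 2021))
```

Run as written, the sketch gives a four-column sum of 30,025 objects for 2021 against 1977 for 1970, and an increase of 1586 payloads between 2020 and 2021 alone.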

| Object | Start [UTC] | Stop [UTC] | Pass [m:s] | Elevation [°] | Range [km] |
|---|---|---|---|---|---|
| GLN-134 | 16:07:50 | 16:37:51 | 30:01 | 50–75 | 19,328–19,518 |
| Stella | 16:07:50 | 16:13:25 | 05:35 | 20–65 | 881–1787 |
| SL16-20625 | 16:10:38 | 16:14:19 | 03:41 | 30–38 | 1272–1473 |
| SL8-11327 | 16:16:21 | 16:17:21 | 01:00 | 30–30 | 1685–1698 |
| SL14-22784 | 16:18:52 | 16:23:29 | 04:37 | 30–42 | 1310–161 |
| SL16-22220 | 16:19:25 | 16:24:52 | 05:27 | 30–58 | 987–1486 |
| Galileo-211 | 16:27:37 | 16:57:38 | 30:01 | 50–60 | 23,921–24,433 |
| CZ2C-28480 | 16:31:22 | 16:33:27 | 02:05 | 30–33 | 1174–1256 |
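
The Pass column and the degree of overlap between passes, which determines the observation load, can both be derived from the Start and Stop times. The Python sketch below is an illustration, not the station's scheduling software: it assumes all passes fall within a single UTC day (no midnight crossing), and the names `PASSES`, `pass_duration` and `max_concurrent` are hypothetical. Overlap is found with a simple sweep over start/stop events.

```python
from datetime import datetime
from typing import Optional, Tuple

# Hand transcription of the pass table above: (object, start UTC, stop UTC)
PASSES = [
    ("GLN-134", "16:07:50", "16:37:51"),
    ("Stella", "16:07:50", "16:13:25"),
    ("SL16-20625", "16:10:38", "16:14:19"),
    ("SL8-11327", "16:16:21", "16:17:21"),
    ("SL14-22784", "16:18:52", "16:23:29"),
    ("SL16-22220", "16:19:25", "16:24:52"),
    ("Galileo-211", "16:27:37", "16:57:38"),
    ("CZ2C-28480", "16:31:22", "16:33:27"),
]

FMT = "%H:%M:%S"


def parse(t: str) -> datetime:
    # Assumption: times are same-day UTC clock times, so no date is attached.
    return datetime.strptime(t, FMT)


def pass_duration(start: str, stop: str) -> str:
    """Return the pass length as mm:ss, matching the Pass [m:s] column."""
    seconds = int((parse(stop) - parse(start)).total_seconds())
    return f"{seconds // 60:02d}:{seconds % 60:02d}"


def max_concurrent(passes) -> Tuple[int, Optional[datetime]]:
    """Sweep-line count of passes in progress; return the peak and when it is first reached."""
    events = []
    for _, start, stop in passes:
        events.append((parse(start), 1))   # a pass begins
        events.append((parse(stop), -1))   # a pass ends
    # Sorting on (time, delta) puts -1 before +1 at equal times,
    # so back-to-back passes are not counted as overlapping.
    events.sort()
    current = peak = 0
    peak_time = None
    for t, delta in events:
        current += delta
        if current > peak:
            peak, peak_time = current, t
    return peak, peak_time


if __name__ == "__main__":
    for name, start, stop in PASSES:
        print(f"{name:12s} {pass_duration(start, stop)}")
    peak, when = max_concurrent(PASSES)
    print(f"peak simultaneous passes: {peak} at {when.strftime(FMT)}")
```

For the listed passes every computed duration matches the Pass column, and the sweep finds at most three passes in progress at once, first reached at 16:10:38 (GLN-134, Stella and SL16-20625).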


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Suchodolski, T. Active Decision Support System for Observation Scheduling Based on Image Analysis at the BOROWIEC SLR Station. Sensors 2022, 22, 8040. https://doi.org/10.3390/s22208040
