A Survey on Medical Explainable AI (XAI): Recent Progress, Explainability Approach, Human Interaction and Scoring System

Abstract

1. Introduction

1.1. Motivation

1.2. Interpretability

1.3. Feedback Loop

1.4. General XAI Process

1.5. Objectives

- Determine the current progress across different infections and diseases based on AI algorithms and their respective configurations.

- Describe the characteristics, explainability, and XAI methods for tackling design issues in the medical domain.

- Discuss the future of medical XAI, supported by explanation measures from the human-in-the-loop process in XAI-based systems, with case studies.

- Demonstrate a novel XAI Recommendation System and XAI Scoring System applicable to multiple fields.

2. Related Works

3. Potential Difference between the AI and XAI Methods

3.1. Transparency in the System Process

3.2. Explainability of the System

3.3. Limitations on the Model Design

3.4. Adaptability to the Emerging Situations

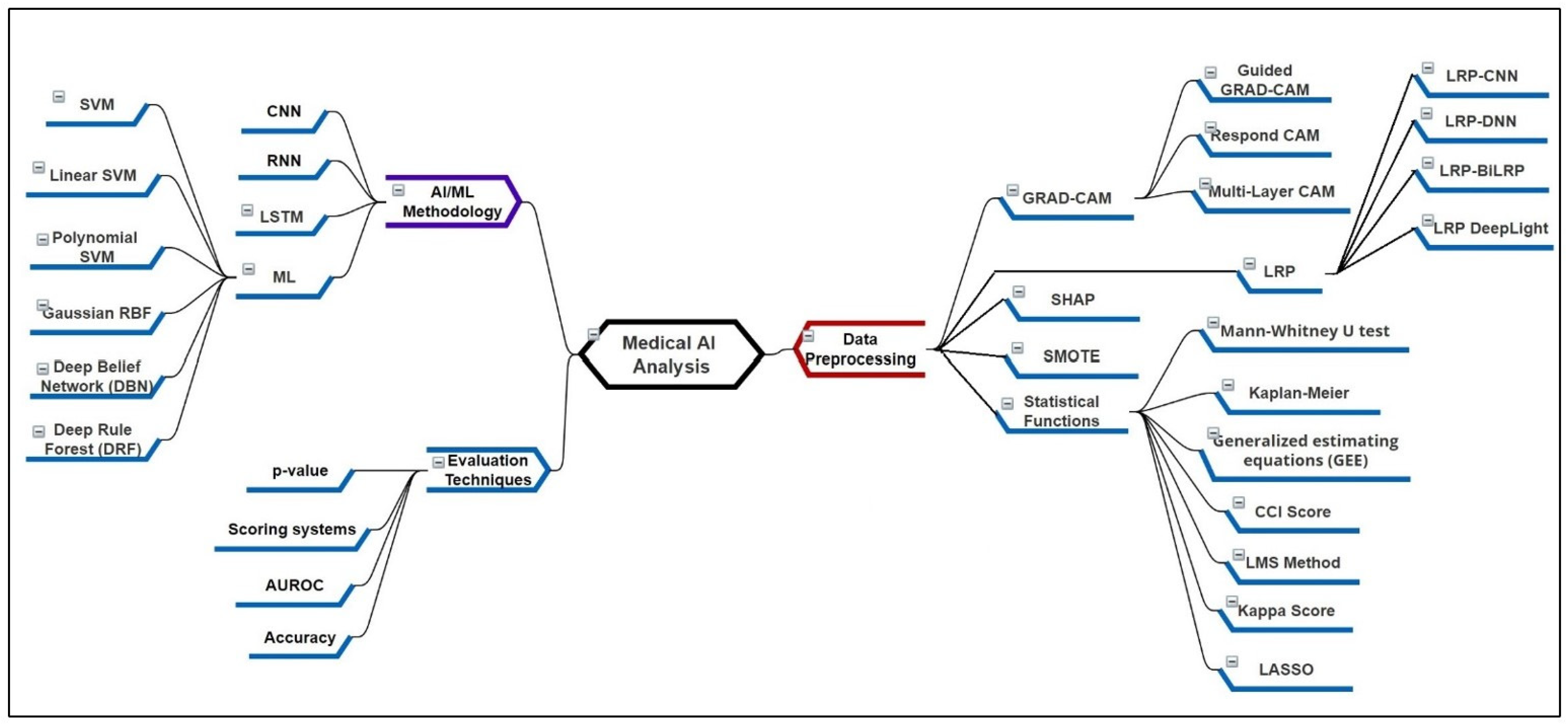

4. Recent XAI Methods and Their Applicability

4.1. Local and Global Methods for the Preprocessing

4.1.1. Gradient Weighted Class Activation Mapping (Grad-CAM)

4.1.2. Layer-Wise Relevance Propagation (LRP)

4.1.3. Statistical Functions for the Feature Analysis and Processing

4.1.4. SHapley Additive exPlanations (SHAP)

4.1.5. Attention Maps

4.1.6. Local Interpretable Model-Agnostic Explanations (LIME)

4.2. Knowledge Base and Distillation Algorithms

4.2.1. Convolutional/Deep/Recurrent Neural Networks (CNN/DNN/RNN)

4.2.2. Long Short-Term Memory (LSTM)

4.2.3. Recent Machine Learning-Based Approaches

4.2.4. Rule-Based Systems and Fuzzy Systems

4.2.5. Additional XAI Methods for Plots, Expectations, and Explanations

4.3. Interpretable Machine Learning (IML)

4.3.1. Intrinsic Interpretability

4.3.2. Post-Hoc Interpretability

5. Characteristics of Explainable AI in Healthcare

5.1. Adaptability

5.2. Context-Awareness

5.3. Consistency

5.4. Generalization

5.5. Fidelity

6. Future of Explainability in Healthcare

6.1. Human–Computer Interaction (HCI)

6.2. Human in the Loop

6.3. Explanation Evaluation

6.4. Explainable Intelligent Systems

7. Prerequisite for the AI and XAI Explainability

7.1. Discussion for the Initial Preprocessing

- Is the dataset consistent?

- Which data imputation functions are required for data consistency?

- How is the analysis of the data distributions presented?

- Are image registration techniques required for the image dataset?

- Were feature scoring techniques applied beforehand?

- Is there any priority assigned to some features by domain experts?

- What actions are taken in case of equal feature scores?

- Is there some threshold assigned for feature selection?

- Are the features selected based on the domain expert’s choice?

- How are binary or multiclass output-based features used?
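
Several of the preprocessing questions above (consistency, imputation, score thresholds, tie-breaking by expert priority) can be made concrete with a small sketch. The following is only an illustration under stated assumptions, not a method taken from the survey: records are plain dictionaries with `None` for missing values, median imputation stands in for whichever imputation function a study actually uses, and the names `missing_fraction`, `impute_median`, and `select_features` are hypothetical.

```python
from statistics import median


def missing_fraction(rows, feature):
    """Share of records in which `feature` is missing (None or absent)."""
    return sum(r.get(feature) is None for r in rows) / len(rows)


def impute_median(rows, feature):
    """Return copies of the records with missing `feature` values set to the observed median."""
    observed = [r[feature] for r in rows if r.get(feature) is not None]
    fill = median(observed)
    return [
        {**r, feature: fill if r.get(feature) is None else r[feature]}
        for r in rows
    ]


def select_features(scores, threshold, expert_priority=()):
    """Keep features scoring at or above `threshold`.

    Equal scores are ordered by the (assumed) expert priority list first,
    then alphabetically, so the result is deterministic.
    """
    rank = {name: i for i, name in enumerate(expert_priority)}
    kept = [f for f, s in scores.items() if s >= threshold]
    return sorted(kept, key=lambda f: (-scores[f], rank.get(f, len(rank)), f))


# Illustrative records; the feature names and values are made up.
rows = [
    {"creatinine": 1.0, "lactate": 2.0},
    {"creatinine": None, "lactate": 4.0},
    {"creatinine": 3.0, "lactate": None},
]
clean = impute_median(rows, "creatinine")
scores = {"creatinine": 0.8, "lactate": 0.8, "age": 0.3}
chosen = select_features(scores, threshold=0.5, expert_priority=("lactate",))
```

Here the two features with equal scores are both kept, and the expert priority list decides which is reported first; the threshold of 0.5 is an arbitrary placeholder.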

7.2. Discussion for the Methodology Applicability in XAI

- What feature aspects make the method selection valid?

- Is the approach genuine for the system model?

- Why would some methods be inefficient? Are references always useful for literature?

- In the case of multi-modal data analysis, is the model suitable? Will it be efficient to use such a model?

- How is the model synchronized with its input?

- Are the features of the current patient affected more or less than the dataset average?

- Which stage have the methods assigned to the current patient?

- What medication course has been assigned to the current patient, and what are its results?

- What percentage of the patient’s clinical features is affected?

- Are the features showing a positive or negative improvement status?

- Which output metrics are suitable for the model evaluation?
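
Two of the questions above, whether a patient’s features deviate from the dataset average and what percentage of features is affected, lend themselves to a short worked example. The sketch below is one possible reading, assuming numeric features, a z-score against the cohort mean and sample standard deviation, and an arbitrary cutoff of 2.0; the function names and the sample values are hypothetical and not taken from the survey.

```python
from statistics import mean, stdev


def feature_deviation(cohort, patient):
    """z-score of each patient feature relative to the cohort mean and sample std."""
    deviations = {}
    for name, value in patient.items():
        values = [row[name] for row in cohort]
        spread = stdev(values)
        # A constant feature carries no deviation information.
        deviations[name] = 0.0 if spread == 0 else (value - mean(values)) / spread
    return deviations


def percent_affected(deviations, z_cutoff=2.0):
    """Percentage of features whose |z| reaches the cutoff, plus their names."""
    flagged = sorted(n for n, z in deviations.items() if abs(z) >= z_cutoff)
    return 100.0 * len(flagged) / len(deviations), flagged


# Illustrative cohort and patient; values are made up.
cohort = [
    {"hr": 70, "temp": 36.5},
    {"hr": 80, "temp": 37.0},
    {"hr": 90, "temp": 37.5},
]
patient = {"hr": 110, "temp": 37.0}

z = feature_deviation(cohort, patient)
share, flagged = percent_affected(z)
```

The sign of each z-score indicates whether the feature lies above or below the cohort average; whether that counts as improvement or deterioration depends on the clinical meaning of the feature and is left to the domain expert.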

7.3. Discussion for the Evaluation Factors in XAI

- What cases are important for the output classification?

- Is the output improving based on recursion?

- How are the multi-model outputs combined?

- How are the bar graphs compared and evaluated?

- Does the user or domain expert prefer to select features manually for evaluation?

- Is the system designed to record feedback from the domain experts?

- Does the model update its training features with every evaluation?

- Which model is suitable for such medical cases, machine learning (ML) or deep learning (DL)?

- How are the domain experts’ feedback suggestions incorporated into the current model?
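
As a hedged illustration of two evaluation questions above (combining multi-model outputs and recording expert feedback), the following sketch uses weighted soft voting over class probabilities and an in-memory feedback log. Soft voting is only one of several possible combination schemes and is an assumption here, as are the names `soft_vote` and `record_feedback`, the model labels, and the probabilities.

```python
def soft_vote(model_probs, weights=None):
    """Weighted average of per-class probabilities from several models.

    `model_probs` maps a model name to a {class: probability} dict;
    all models are assumed to report the same classes.
    """
    names = list(model_probs)
    if weights is None:
        weights = {name: 1.0 for name in names}
    total = sum(weights[name] for name in names)
    classes = list(model_probs[names[0]])
    return {
        c: sum(weights[n] * model_probs[n][c] for n in names) / total
        for c in classes
    }


def record_feedback(log, case_id, accepted, comment=""):
    """Append one expert judgement on an explanation to the feedback log."""
    entry = {"case_id": case_id, "accepted": accepted, "comment": comment}
    log.append(entry)
    return entry


# Illustrative outputs of an ML and a DL model for one patient.
probs = {
    "ml": {"sepsis": 0.6, "no_sepsis": 0.4},
    "dl": {"sepsis": 0.8, "no_sepsis": 0.2},
}
combined = soft_vote(probs)
weighted = soft_vote(probs, weights={"ml": 1.0, "dl": 3.0})

feedback_log = []
record_feedback(feedback_log, case_id="case-001", accepted=False,
                comment="Heatmap highlights an irrelevant region.")
```

A log of this kind could later inform reweighting of the models or retraining, which is the sort of adaptation the last question asks about.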

8. Case Study

9. XAI Limitations

9.1. Explainability Ratings for the New Models

9.2. Measurement System for the XAI Evaluation

9.3. XAI System Adaptation to the Continuous Improvements

9.4. Human in the Loop Approach Compatibility

10. XAI Recommendation System (XAI-RS)

11. XAI Scoring System (XAI-SS)

12. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. LIME-Based Super-Pixel Generation

Appendix A.2. Perceptive Interpretability Methods

References

- Esmaeilzadeh, P. Use of AI-based tools for healthcare purposes: A survey study from consumers’ perspectives. BMC Med. Inform. Decis. Mak. 2020, 20, 1–19.

- Houben, S.; Abrecht, S.; Akila, M.; Bär, A.; Brockherde, F.; Feifel, P.; Fingscheidt, T.; Gannamaneni, S.S.; Ghobadi, S.E.; Hammam, A.; et al. Inspect, understand, overcome: A survey of practical methods for ai safety. arXiv 2021, arXiv:2104.14235.

- Juliana, J.F.; Monteiro, M.S. What are people doing about XAI user experience? A survey on AI explainability research and practice. In International Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2020.

- Xie, S.; Yu, Z.; Lv, Z. Multi-disease prediction based on deep learning: A survey. CMES-Comput. Modeling Eng. Sci. 2021, 127, 1278935.

- Clodéric, M.; Dès, R.; Boussard, M. The three stages of Explainable AI: How explainability facilitates real world deployment of AI. Res. Gate 2020.

- Li, X.H.; Cao, C.C.; Shi, Y.; Bai, W.; Gao, H.; Qiu, L.; Wang, C.; Gao, Y.; Zhang, S.; Xue, X.; et al. A survey of data-driven and knowledge-aware explainable ai. IEEE Trans. Knowl. Data Eng. 2020, 34, 29–49.

- Schneeberger, D.; Stöger, K.; Holzinger, A. The European legal framework for medical AI. In Proceedings of the International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Dublin, Ireland, 25–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 209–226.

- Muller, H.; Mayrhofer, M.; Van Veen, E.; Holzinger, A. The Ten Commandments of Ethical Medical AI. Computer 2021, 54, 119–123.

- Erico, T.; Guan, C. A survey on explainable artificial intelligence (xai): Toward medical xai. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813.

- Guang, Y.; Ye, Q.; Xia, J. Unbox the black-box for the medical explainable ai via multi-modal and multi-centre data fusion: A mini-review, two showcases and beyond. Inf. Fusion 2022, 77, 29–52.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Tang, Z.; Chuang, K.V.; DeCarli, C.; Jin, L.W.; Beckett, L.; Keiser, M.J.; Dugger, B.N. Interpretable classification of Alzheimer’s disease pathologies with a convolutional neural network pipeline. Nat. Commun. 2019, 10, 2173.

- Zhao, G.; Zhou, B.; Wang, K.; Jiang, R.; Xu, M. RespondCAM: Analyzing deep models for 3D imaging data by visualizations. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer: Cham, Switzerland, 2018; pp. 485–492.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

- Lapuschkin, S.; Wäldchen, S.; Binder, A.; Montavon, G.; Samek, W.; Müller, K.-R. Unmasking clever hans predictors and assessing what machines really learn. Nat. Commun. 2019, 10, 1096.

- Samek, W.; Montavon, G.; Binder, A.; Lapuschkin, S.; Müller, K. Interpreting the predictions of complex ML models by layer-wise relevance propagation. arXiv 2016, arXiv:1611.08191.

- Thomas, A.W.; Heekeren, H.R.; Müller, K.-R.; Samek, W. Analyzing neuroimaging data through recurrent deep learning models. Front. Neurosci. 2019, 13, 1321.

- Arras, L.; Horn, F.; Montavon, G.; Müller, K.; Samek, W. ‘What is relevant in a text document?’: An interpretable machine learning approach. arXiv 2016, arXiv:1612.07843.

- Hiley, L.; Preece, A.; Hicks, Y.; Chakraborty, S.; Gurram, P.; Tomsett, R. Explaining motion relevance for activity recognition in video deep learning models. arXiv 2020, arXiv:2003.14285.

- Eberle, O.; Buttner, J.; Krautli, F.; Mueller, K.-R.; Valleriani, M.; Montavon, G. Building and interpreting deep similarity models. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1149–1161.

- Burnham, J.P.; Rojek, R.P.; Kollef, M.H. Catheter removal and outcomes of multidrug-resistant central-line-associated bloodstream infection. Medicine 2018, 97, e12782.

- Beganovic, M.; Cusumano, J.A.; Lopes, V.; LaPlante, K.L.; Caffrey, A.R. Comparative Effectiveness of Exclusive Exposure to Nafcillin or Oxacillin, Cefazolin, Piperacillin/Tazobactam, and Fluoroquinolones Among a National Cohort of Veterans With Methicillin-Susceptible Staphylococcus aureus Bloodstream Infection. Open Forum Infect. Dis. 2019, 6, ofz270.

- Fiala, J.; Palraj, B.R.; Sohail, M.R.; Lahr, B.; Baddour, L.M. Is a single set of negative blood cultures sufficient to ensure clearance of bloodstream infection in patients with Staphylococcus aureus bacteremia? The skip phenomenon. Infection 2019, 47, 1047–1053.

- Fabre, V.; Amoah, J.; Cosgrove, S.E.; Tamma, P.D. Antibiotic therapy for Pseudomonas aeruginosa bloodstream infections: How long is long enough? Clin. Infect. Dis. 2019, 69, 2011–2014.

- Harris, P.N.A.; Tambyah, P.A.; Lye, D.C.; Mo, Y.; Lee, T.H.; Yilmaz, M.; Alenazi, T.H.; Arabi, Y.; Falcone, M.; Bassetti, M.; et al. Effect of piperacillin-tazobactam vs meropenem on 30-day mortality for patients with E coli or Klebsiella pneumoniae bloodstream infection and ceftriaxone resistance: A randomized clinical trial. JAMA 2018, 320, 984–994.

- Delahanty, R.J.; Alvarez, J.; Flynn, L.M.; Sherwin, R.L.; Jones, S.S. Development and Evaluation of a Machine Learning Model for the Early Identification of Patients at Risk for Sepsis. Ann. Emerg. Med. 2019, 73, 334–344.

- Kam, H.J.; Kim, H.Y. Learning representations for the early detection of sepsis with deep neural networks. Comput. Biol. Med. 2017, 89, 248–255.

- Taneja, I.; Reddy, B.; Damhorst, G.; Dave Zhao, S.; Hassan, U.; Price, Z.; Jensen, T.; Ghonge, T.; Patel, M.; Wachspress, S.; et al. Combining Biomarkers with EMR Data to Identify Patients in Different Phases of Sepsis. Sci. Rep. 2017, 7, 10800.

- Oonsivilai, M.; Mo, Y.; Luangasanatip, N.; Lubell, Y.; Miliya, T.; Tan, P.; Loeuk, L.; Turner, P.; Cooper, B.S. Using machine learning to guide targeted and locally-tailored empiric antibiotic prescribing in a children’s hospital in Cambodia. Open Res. 2018, 3, 131.

- García-Gallo, J.E.; Fonseca-Ruiz, N.J.; Celi, L.A.; Duitama-Muñoz, J.F. A machine learning-based model for 1-year mortality prediction in patients admitted to an Intensive Care Unit with a diagnosis of sepsis. Med. Intensiva Engl. Ed. 2020, 44, 160–170.

- Lee, H.-C.; Yoon, S.B.; Yang, S.-M.; Kim, W.H.; Ryu, H.-G.; Jung, C.-W.; Suh, K.-S.; Lee, K.H. Prediction of Acute Kidney Injury after Liver Transplantation: Machine Learning Approaches vs. Logistic Regression Model. J. Clin. Med. 2018, 7, 428.

- Hsu, C.N.; Liu, C.L.; Tain, Y.L.; Kuo, C.Y.; Lin, Y.C. Machine Learning Model for Risk Prediction of Community-Acquired Acute Kidney Injury Hospitalization From Electronic Health Records: Development and Validation Study. J. Med. Internet Res. 2020, 22, e16903.

- Qu, C.; Gao, L.; Yu, X.Q.; Wei, M.; Fang, G.Q.; He, J.; Cao, L.X.; Ke, L.; Tong, Z.H.; Li, W.Q. Machine learning models of acute kidney injury prediction in acute pancreatitis patients. Gastroenterol. Res. Pract. 2020, 2020, 3431290.

- He, L.; Zhang, Q.; Li, Z.; Shen, L.; Zhang, J.; Wang, P.; Wu, S.; Zhou, T.; Xu, Q.; Chen, X.; et al. Incorporation of urinary neutrophil gelatinase-Associated lipocalin and computed tomography quantification to predict acute kidney injury and in-hospital death in COVID-19 patients. Kidney Dis. 2021, 7, 120–130.

- Kim, K.; Yang, H.; Yi, J.; Son, H.E.; Ryu, J.Y.; Kim, Y.C.; Jeong, J.C.; Chin, H.J.; Na, K.Y.; Chae, D.W.; et al. Real-Time Clinical Decision Support Based on Recurrent Neural Networks for In-Hospital Acute Kidney Injury: External Validation and Model Interpretation. J. Med. Internet Res. 2021, 23, e24120.

- Penny-Dimri, J.C.; Bergmeir, C.; Reid, C.M.; Williams-Spence, J.; Cochrane, A.D.; Smith, J.A. Machine learning algorithms for predicting and risk profiling of cardiac surgery-associated acute kidney injury. In Seminars in Thoracic and Cardiovascular Surgery; WB Saunders: Philadelphia, PA, USA, 2021; Volume 33, pp. 735–745.

- He, Z.L.; Zhou, J.B.; Liu, Z.K.; Dong, S.Y.; Zhang, Y.T.; Shen, T.; Zheng, S.S.; Xu, X. Application of machine learning models for predicting acute kidney injury following donation after cardiac death liver transplantation. Hepatobiliary Pancreat. Dis. Int. 2021, 20, 222–231.

- Alfieri, F.; Ancona, A.; Tripepi, G.; Crosetto, D.; Randazzo, V.; Paviglianiti, A.; Pasero, E.; Vecchi, L.; Cauda, V.; Fagugli, R.M. A deep-learning model to continuously predict severe acute kidney injury based on urine output changes in critically ill patients. J. Nephrol. 2021, 34, 1875–1886.

- Kang, Y.; Huang, S.T.; Wu, P.H. Detection of Drug–Drug and Drug–Disease Interactions Inducing Acute Kidney Injury Using Deep Rule Forests. SN Comput. Sci. 2021, 2, 1–14.

- Le, S.; Allen, A.; Calvert, J.; Palevsky, P.M.; Braden, G.; Patel, S.; Pellegrini, E.; Green-Saxena, A.; Hoffman, J.; Das, R. Convolutional Neural Network Model for Intensive Care Unit Acute Kidney Injury Prediction. Kidney Int. Rep. 2021, 6, 1289–1298.

- Mamandipoor, B.; Frutos-Vivar, F.; Peñuelas, O.; Rezar, R.; Raymondos, K.; Muriel, A.; Du, B.; Thille, A.W.; Ríos, F.; González, M.; et al. Machine learning predicts mortality based on analysis of ventilation parameters of critically ill patients: Multi-centre validation. BMC Med. Inform. Decis. Mak. 2021, 21, 1–12.

- Hu, C.A.; Chen, C.M.; Fang, Y.C.; Liang, S.J.; Wang, H.C.; Fang, W.F.; Sheu, C.C.; Perng, W.C.; Yang, K.Y.; Kao, K.C.; et al. Using a machine learning approach to predict mortality in critically ill influenza patients: A cross-sectional retrospective multicentre study in Taiwan. BMJ Open 2020, 10, e033898.

- Rueckel, J.; Kunz, W.G.; Hoppe, B.F.; Patzig, M.; Notohamiprodjo, M.; Meinel, F.G.; Cyran, C.C.; Ingrisch, M.; Ricke, J.; Sabel, B.O. Artificial intelligence algorithm detecting lung infection in supine chest radiographs of critically ill patients with a diagnostic accuracy similar to board-certified radiologists. Crit. Care Med. 2020, 48, e574–e583.

- Greco, M.; Angelotti, G.; Caruso, P.F.; Zanella, A.; Stomeo, N.; Costantini, E.; Protti, A.; Pesenti, A.; Grasselli, G.; Cecconi, M. Artificial Intelligence to Predict Mortality in Critically ill COVID-19 Patients Using Data from the First 24h: A Case Study from Lombardy Outbreak. Res. Sq. 2021.

- Ye, J.; Yao, L.; Shen, J.; Janarthanam, R.; Luo, Y. Predicting mortality in critically ill patients with diabetes using machine learning and clinical notes. BMC Med. Inform. Decis. Mak. 2020, 20, 1–7.

- Kong, G.; Lin, K.; Hu, Y. Using machine learning methods to predict in-hospital mortality of sepsis patients in the ICU. BMC Med. Inform. Decis. Mak. 2020, 20, 1–10.

- Nie, X.; Cai, Y.; Liu, J.; Liu, X.; Zhao, J.; Yang, Z.; Wen, M.; Liu, L. Mortality prediction in cerebral hemorrhage patients using machine learning algorithms in intensive care units. Front. Neurol. 2021, 11, 1847.

- Theis, J.; Galanter, W.; Boyd, A.; Darabi, H. Improving the In-Hospital Mortality Prediction of Diabetes ICU Patients Using a Process Mining/Deep Learning Architecture. IEEE J. Biomed. Health Inform. 2021, 26, 388–399.

- Jentzer, J.C.; Kashou, A.H.; Attia, Z.I.; Lopez-Jimenez, F.; Kapa, S.; Friedman, P.A.; Noseworthy, P.A. Left ventricular systolic dysfunction identification using artificial intelligence-augmented electrocardiogram in cardiac intensive care unit patients. Int. J. Cardiol. 2021, 326, 114–123.

- Popadic, V.; Klasnja, S.; Milic, N.; Rajovic, N.; Aleksic, A.; Milenkovic, M.; Crnokrak, B.; Balint, B.; Todorovic-Balint, M.; Mrda, D.; et al. Predictors of Mortality in Critically Ill COVID-19 Patients Demanding High Oxygen Flow: A Thin Line between Inflammation, Cytokine Storm, and Coagulopathy. Oxidative Med. Cell. Longev. 2021, 2021, 6648199.

- Kaji, D.A.; Zech, J.R.; Kim, J.S.; Cho, S.K.; Dangayach, N.S.; Costa, A.B.; Oermann, E.K. An attention based deep learning model of clinical events in the intensive care unit. PLoS ONE 2019, 14, e0211057.

- Shickel, B.; Loftus, T.J.; Adhikari, L.; Ozrazgat-Baslanti, T.; Bihorac, A.; Rashidi, P. DeepSOFA: A Continuous Acuity Score for Critically Ill Patients using Clinically Interpretable Deep Learning. Sci. Rep. 2019, 9, 1–12.

- Maxim, K.; Lev, U.; Ernest, K. SurvLIME: A method for explaining machine learning survival models. Knowl.-Based Syst. 2020, 203, 106164.

- Panigutti, C.; Perotti, A.; Pedreschi, D. Medical examiner XAI: An ontology-based approach to black-box sequential data classification explanations. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ′20). Association for Computing Machinery, New York, NY, USA, 27–30 January 2020; pp. 629–639.

- Hua, Y.; Guo, J.; Zhao, H. Deep Belief Networks and deep learning. In Proceedings of the 2015 International Conference on Intelligent Computing and Internet of Things, Harbin, China, 17–18 January 2015; pp. 1–4.

- Letham, B.; Rudin, C.; McCormick, T.H.; Madigan, D. Interpretable classifiers using rules and Bayesian analysis: Building a better stroke prediction model. Ann. Appl. Stat. 2015, 9, 1350–1371.

- Che, Z.; Purushotham, S.; Khemani, R.; Liu, Y. Interpretable Deep Models for ICU Outcome Prediction. AMIA Annu. Symp. Proc. 2017, 2016, 371–380.

- Davoodi, R.; Moradi, M.H. Mortality prediction in intensive care units (ICUs) using a deep rule-based fuzzy classifier. J. Biomed. Inform. 2018, 79, 48–59.

- Johnson, M.; Albizri, A.; Harfouche, A. Responsible artificial intelligence in healthcare: Predicting and preventing insurance claim denials for economic and social wellbeing. Inf. Syst. Front. 2021, 1–17.

- Xu, Z.; Tang, Y.; Huang, Q.; Fu, S.; Li, X.; Lin, B.; Xu, A.; Chen, J. Systematic review and subgroup analysis of the incidence of acute kidney injury (AKI) in patients with COVID-19. BMC Nephrol. 2021, 22, 52.

- Angiulli, F.; Fassetti, F.; Nisticò, S. Local Interpretable Classifier Explanations with Self-generated Semantic Features. In Proceedings of the International Conference on Discovery Science, Halifax, NS, Canada, 11–13 October 2021; Springer: Cham, Switzerland, 2021; pp. 401–410.

- Visani, G.; Bagli, E.; Chesani, F. OptiLIME: Optimized LIME explanations for diagnostic computer algorithms. arXiv 2020, arXiv:2006.05714.

- Carrington, A.M.; Fieguth, P.W.; Qazi, H.; Holzinger, A.; Chen, H.H.; Mayr, F.; Manuel, D.G. A new concordant partial AUC and partial c statistic for imbalanced data in the evaluation of machine learning algorithms. BMC Med. Inform. Decis. Mak. 2020, 20, 1–12.

- Du, M.; Liu, N.; Hu, X. Techniques for interpretable machine learning. Commun. ACM 2020, 63, 68–77.

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl. Acad. Sci. USA 2019, 116, 22071–22080.

- Adadi, A.; Berrada, M. Explainable AI for healthcare: From black box to interpretable models. In Embedded Systems and Artificial Intelligence; Springer: Singapore, 2020; pp. 327–337.

- Nazar, M.; Alam, M.M.; Yafi, E.; Mazliham, M.S. A Systematic Review of Human-Computer Interaction and Explainable Artificial Intelligence in Healthcare with Artificial Intelligence Techniques. IEEE Access 2021, 9, 153316–153348.

- Srinivasan, R.; Chander, A. Explanation perspectives from the cognitive sciences—A survey. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 4812–4818.

- Zhou, B.; Sun, Y.; Bau, D.; Torralba, A. Interpretable basis decomposition for visual explanation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 119–134.

- Mohseni, S.; Zarei, N.; Ragan, E.D. A Multidisciplinary Survey and Framework for Design and Evaluation of Explainable AI Systems. ACM Trans. Interact. Intell. Syst. 2021, 11, 1–45.

- Lo, S.H.; Yin, Y. A novel interaction-based methodology towards explainable AI with better understanding of Pneumonia Chest X-ray Images. Discov. Artif. Intell. 2021, 1, 1–7.

- RSNA Pneumonia Detection Challenge Dataset. Available online: https://www.kaggle.com/c/rsna-pneumonia-detection-challenge (accessed on 20 September 2022).

- Dataset by Kermany et al. Available online: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia (accessed on 20 September 2022).

- van Ginneken, B.; Stegmann, M.; Loog, M. Segmentation of anatomical structures in chest radiographs using supervised methods: A comparative study on a public database. Med. Image Anal. 2006, 10, 19–40. Available online: http://www.isi.uu.nl/Research/Databases/SCR/ (accessed on 20 September 2022).

- Central Line-Associated Bloodstream Infections (CLABSI) in California Hospitals. Available online: https://healthdata.gov/State/Central-Line-Associated-Bloodstream-infections-CLA/cu55-5ujz/data (accessed on 20 September 2022).

- Johnson, A.; Pollard, T.; Mark, R. MIMIC-III Clinical Database (version 1.4). PhysioNet 2016.

- Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 160035.

- ICES Data Repository. Available online: https://www.ices.on.ca/Data-and-Privacy/ICES-data (accessed on 20 September 2022).

- Department of Veterans Affairs, Veterans Health Administration: Providing Health Care for Veterans. Available online: https://www.va.gov/health/ (accessed on 9 November 2018).

- Tomasev, N.; Glorot, X.; Rae, J.W.; Zielinski, M.; Askham, H.; Saraiva, A.; Mottram, A.; Meyer, C.; Ravuri, S.; Protsyuk, I.; et al. A clinically applicable approach to continuous prediction of future acute kidney injury. Nature 2019, 572, 116–119.

- Lauritsen, S.M.; Kristensen, M.; Olsen, M.V.; Larsen, M.S.; Lauritsen, K.M.; Jørgensen, M.J.; Lange, J.; Thiesson, B. Explainable artificial intelligence model to predict acute critical illness from electronic health records. Nat. Commun. 2020, 11, 1–11.

- Hou, J.; Gao, T. Explainable DCNN based chest X-ray image analysis and classification for COVID-19 pneumonia detection. Sci. Rep. 2021, 11, 16071.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. Mixmatch: A holistic approach to semisupervised learning. Adv. Neural Inf. Process. Syst. 2019, 32, 14.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. NIPS 2017, 30, 1195–1204.

- Verma, V.; Lamb, A.; Kannala, J.; Bengio, Y.; Lopez-Paz, D. Interpolation consistency training for semi-supervised learning. Int. Jt. Conf. Artif. Intell. IJCAI 2019, 145, 3635–3641.

- Raghu, M.; Zhang, C.; Kleinberg, J.; Bengio, S. Transfusion: Understanding transfer learning for medical imaging. Neural Inf. Process. Syst. 2019, 32, 3347–3357.

- Aviles-Rivero, A.I.; Papadakis, N.; Li, R.; Sellars, P.; Fan, Q.; Tan, R.; Schönlieb, C.-B. Graphx-net—Chest x-ray classification under extreme minimal supervision. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; pp. 504–512.

- Aviles-Rivero, A.I.; Sellars, P.; Schönlieb, C.B.; Papadakis, N. GraphXCOVID: Explainable deep graph diffusion pseudo-labelling for identifying COVID-19 on chest X-rays. Pattern Recognit. 2022, 122, 108274.

- Napolitano, F.; Xu, X.; Gao, X. Impact of computational approaches in the fight against COVID-19: An AI guided review of 17 000 studies. Brief. Bioinform. 2022, 23, bbab456.

- Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep learning-enabled medical computer vision. NPJ Digit. Med. 2021, 4, 1–9.

- Zhou, S.K.; Greenspan, H.; Davatzikos, C.; Duncan, J.S.; Van Ginneken, B.; Madabhushi, A.; Prince, J.L.; Rueckert, D.; Summers, R.M. A review of deep learning in medical imaging: Image traits, technology trends, case studies with progress highlights, and future promises. Proc. IEEE 2021, 109, 820–838.

- Tellakula, K.K.; Kumar, S.; Deb, S. A survey of ai imaging techniques for covid-19 diagnosis and prognosis. Appl. Comput. Sci. 2021, 17, 40–55.

- Fábio, D.; Cinalli, D.; Garcia, A.C.B. Research on Explainable Artificial Intelligence Techniques: An User Perspective. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), IEEE, Dalian, China, 5–7 May 2021.

- Neves, I.; Folgado, D.; Santos, S.; Barandas, M.; Campagner, A.; Ronzio, L.; Cabitza, F.; Gamboa, H. Interpretable heartbeat classification using local model-agnostic explanations on ECGs. Comput. Biol. Med. 2021, 133, 104393.

- Selvaganapathy, S.; Sadasivam, S.; Raj, N. SafeXAI: Explainable AI to Detect Adversarial Attacks in Electronic Medical Records. In Intelligent Data Engineering and Analytics; Springer: Singapore, 2022; pp. 501–509.

- Payrovnaziri, S.N.; Chen, Z.; Rengifo-Moreno, P.; Miller, T.; Bian, J.; Chen, J.H.; Liu, X.; He, Z. Explainable artificial intelligence models using real-world electronic health record data: A systematic scoping review. J. Am. Med. Inform. Assoc. 2020, 27, 1173–1185.

- Van Der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Antoniadi, A.M.; Du, Y.; Guendouz, Y.; Wei, L.; Mazo, C.; Becker, B.A.; Mooney, C. Current challenges and future opportunities for XAI in machine learning-based clinical decision support systems: A systematic review. Appl. Sci. 2021, 11, 5088.

- Qiu, W.; Chen, H.; Dincer, A.B.; Lundberg, S.; Kaeberlein, M.; Lee, S.I. Interpretable machine learning prediction of all-cause mortality. medRxiv 2022.

- Yang, Y.; Mei, G.; Piccialli, F. A Deep Learning Approach Considering Image Background for Pneumonia Identification Using Explainable AI (XAI). IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 1–12.

- Zou, L.; Goh, H.L.; Liew, C.J.; Quah, J.L.; Gu, G.T.; Chew, J.J.; Kumar, M.P.; Ang, C.G.; Ta, A. Ensemble image explainable AI (XAI) algorithm for severe community-acquired pneumonia and COVID-19 respiratory infections. IEEE Trans. Artif. Intell. 2022, 1–12.

- Hu, C.; Tan, Q.; Zhang, Q.; Li, Y.; Wang, F.; Zou, X.; Peng, Z. Application of interpretable machine learning for early prediction of prognosis in acute kidney injury. Comput. Struct. Biotechnol. J. 2022, 20, 2861–2870.

- Zhang, L.; Wang, Z.; Zhou, Z.; Li, S.; Huang, T.; Yin, H.; Lyu, J. Developing an ensemble machine learning model for early prediction of sepsis-associated acute kidney injury. Iscience 2022, 25, 104932.

- Schallner, L.; Rabold, J.; Scholz, O.; Schmid, U. Effect of superpixel aggregation on explanations in LIME—A case study with biological data. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Cham, Switzerland, 2019; pp. 147–158.

- Wei, Y.; Chang, M.C.; Ying, Y.; Lim, S.N.; Lyu, S. Explain black-box image classifications using superpixel-based interpretation. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1640–1645.

- Hussain; Mehboob, S.; Buongiorno, D.; Altini, N.; Berloco, F.; Prencipe, B.; Moschetta, M.; Bevilacqua, V.; Brunetti, A. Shape-Based Breast Lesion Classification Using Digital Tomosynthesis Images: The Role of Explainable Artificial Intelligence. Appl. Sci. 2022, 12, 6230.

- Zhang, Y.; Tiňo, P.; Leonardis, A.; Tang, K. A survey on neural network interpretability. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 726–742.

| Ref. # | Reference | Reference Paper/Mechanism | Data Preprocessing | Evaluation Methods/Algorithms | Outcome/Explanation Type |
|---|---|---|---|---|---|
| [11] | Selvaraju, R.R. et al. (2017) | GRAD-CAM/Global | GRAD-CAM | VGG, Structured CNN, Reinforcement Learning comparisons. | Textual explanations and AUROC/post-hoc |
| [12] | Tang, Z. et al. (2019) | Guided GRAD-CAM/Global | GRAD-CAM and feature occlusion analysis. | Segmentation on heatmaps and CNN scoring. | AUROC, PR curve, t-test and p-value/post-hoc |
| [13] | Zhao, G. et al. (2018) | Respond CAM/Global | GRAD-CAM, weighted feature maps and contours. | Sum to score property on 3D images by CNN. | Natural images captioning by prediction/post-hoc |
| [14] | Bahdanau et al. (2014) | Multi-Layer CAM/Global | Conditional probability | Encoder–decoder, neural machine translation and bidirectional RNN. | BLEU score, language translator and confusion matrix/post-hoc |
| [15] | Lapuschkin, S. et al. (2019) | LRP 1/Local (Layer-wise relevance propagation). | Relevance heatmaps. | Class predictions by classifier, Eigen-based clustering, LRP, spectral relevance analysis. | Detects source tag, elements and orientations. Atari Breakout/ante-hoc |
| [16] | Samek, W. et al. (2016) | LRP 2/Local | Sensitivity | LRP, LRP connection to the Deep Taylor Decomposition (DTD). | Qualitative and quantitative sensitivity analysis. Importance of context measured/post-hoc |
| [17] | Thomas, A. et al. (2019) | LRP DeepLight/Local | Axial brain slices and brain relevance maps. | Bi-directional long short-term memory (LSTM) based DL models for fMRI. | Fine-grained temporo-spatial variability of brain activity, decoding accuracy and confusion matrix/post-hoc |
| [18] | Arras, L. et al. (2016) | LRP CNN/Local | Heatmap visualizations/PCA projections. | Vector-based document representations algorithm. | Classification performance and explanatory power index/ante-hoc |
| [19] | Hiley, L. et al. (2020) | LRP DNN/Local | Sobel filter and DTD selective relevance (temporal/spatial) maps | A selective relevance method for adapting the 2D explanation technique | Precision is the percentage overlap of pixels, std. and avg. precision comparison/ante-hoc |
| [20] | Eberle, O. et al. (2020) | LRP BiLRP/Global | DTD to derive BiLRP propagation rules. | Systematically decompose similarity scores on pairs of input features (nonlinear) | Average cosine similarity to the ground truth, similarity matrix/ante-hoc |

| Ref. # | Reference Paper | Dataset | Data Preprocessing/Mechanism | Evaluation Methods/Algorithms | Outcome/Explanation Type |
|---|---|---|---|---|---|
| [21] | Burnham, J.P. et al. (2018) | 430 patients | Chi-squared/Fisher exact test, Student t test/Mann–Whitney U/Global | Multivariate Cox proportional hazards models | Kaplan–Meier curves and p-values/ante-hoc |
| [22] | Beganovic, M. et al. (2019) | 428 patients | Chi-square/Fisher exact test for categorical variables, and t test/Wilcoxon rank for continuous variables/Global | Propensity scores (PS) using logistic regression with backward stepwise elimination and Cox proportional hazards regression model. | p-values/ante-hoc |
| [23] | Fiala, J. et al. (2019) | 757 patients | Generalized estimating equations (GEE) and Poisson regression models/Global | Logistic regression models, Cox proportional hazards (PH) regression models | p-value before and after adjustment/ante-hoc |
| [24] | Fabre, V. et al. (2019) | 249 patients | χ2 test and Wilcoxon rank sum test/Local | Multivariable logistic regression for propensity scores | Weighted by the inverse of the propensity score and 2-sided p-value/ante-hoc |
| [25] | Harris, P.N.A. et al. (2018) | 391 patients | Charlson Comorbidity Index (CCI) score, multi-variate imputation/Global | Miettinen–Nurminen method (MNM) or logistic regression. | A logistic regression model, using a 2-sided significance level |
| [26] | Delahanty, R.J. et al. (2018) | 2,759,529 patients | 5-fold cross validation/Local | XGBoost in R. | Risk of Sepsis (RoS) score, Sensitivity, Specificity and AUROC/post-hoc |
| [27] | Kam, H.J. et al. (2017) | 5789 patients | Data imputation and categorization/Local | Multilayer perceptrons (MLPs), RNN and LSTM model. | Accuracy and AUROC/post-hoc |
| [28] | Taneja, I. et al. (2017) | 444 patients | Heatmaps, Riemann sum, categories and batch normalization/Global | Logistic regression, support vector machines (SVM), random forests, AdaBoost, and naïve Bayes. | Sensitivity, Specificity, and AUROC/ante-hoc |
| [29] | Oonsivilai, M. et al. (2018) | 243 patients | Z-score, the Lambda, mu, and sigma (LMS) method. 5-fold cross-validated and Kappa based on a grid search/Global | Decision trees, Random forests, Boosted decision trees using adaptive boosting, Linear support vector machines (SVM), Polynomial SVMs, Radial SVM and k-nearest neighbours (kNN) | Comparison of performance rankings, Calibration, Sensitivity, Specificity, p-value and AUROC/ante-hoc |
| [30] | García-Gallo, J.E. et al. (2019) | 5650 patients | Least Absolute Shrinkage and Selection Operator (LASSO)/Local | Stochastic Gradient Boosting (SGB) | Accuracy, p-values and AUROC/post-hoc |

| Ref. # | Reference | Dataset | Criteria | Data Preprocessing/Mechanism | Evaluation Methods/Algorithms | Outcome/Explanation Type |
|---|---|---|---|---|---|---|
| [31] | Lee, H-C. et al. (2018) | 1211 | Acute kidney injury network (AKIN) | Imputation and hot-deck imputation/Global | Decision tree, random forest, gradient boosting machine, support vector machine, naïve Bayes, multilayer perceptron, and deep belief networks. | AUROC, accuracy, p-value, sensitivity and specificity/ante-hoc |
| [32] | Hsu, C.N. et al. (2020) | 234,867 | KDIGO | Least absolute shrinkage and selection operator (LASSO), 5-fold cross validation/Local | Extreme gradient boost (XGBoost) and DeLong statistical test. | AUROC, Sensitivity, and Specificity/ante-hoc |
| [33] | Qu, C. et al. (2020) | 334 | KDIGO | Kolmogorov–Smirnov test and Mann–Whitney U tests/Local | Logistic regression, support vector machine (SVM), random forest (RF), classification and regression tree (CART), and extreme gradient boosting (XGBoost). | Feature importance rank, p-value and AUROC/ante-hoc |
| [34] | He, L. et al. (2021) | 174 | KDIGO | Least absolute shrinkage and selection operator (LASSO) regression, Bootstrap resampling and Harrell’s C statistic/Local | Multivariate Cox regression model and Kaplan–Meier curves. | p-value, Accuracy, Sensitivity, Specificity, and AUROC/ante-hoc |
| [35] | Kim, K. et al. (2021) | 482,467 | KDIGO | SHAP, partial dependence plots, individual conditional expectation, and accumulated local effects plots/Global | XGBoost model and RNN algorithm | p-value, AUROC/post-hoc |
| [36] | Penny-Dimri, J.C. et al. (2021) | 108,441 | Cardiac surgery-associated (CSA-AKI) | Five-fold cross-validation repeated 20 times and SHAP/Global | LR, KNN, GBM, and NN algorithm. | AUC, sensitivity, specificity, and risk stratification/post-hoc |
| [37] | He, Z.L. et al. (2021) | 493 | KDIGO | Wilcoxon’s rank-sum test, Chi-square test and Kaplan–Meier method/Local | LR, RF, SVM, classical decision tree, and conditional inference tree. | Accuracy and AUC/ante-hoc |
| [38] | Alfieri, F. et al. (2021) | 35,573 | AKIN | Mann–Whitney U test/Local | LR analysis, stacked and parallel layers of convolutional neural networks (CNNs) | AUC, sensitivity, specificity, LR+ and LR-/post-hoc |
| [39] | Kang, Y. et al. (2021) | 1 million | N.A. | Conjunctive normal form (CNF) and disjunctive normal form (DNF) rules/Global | CART, XGBoost, Neural Network, and Deep Rule Forest (DRF). | AUC, log odds ratio and rule-based models/post-hoc |
| [40] | Le, S. et al. (2021) | 2347 | KDIGO | Imputation and standardization/Global | XGBoost and CNN. | AUROC and PPV/post-hoc |

| Ref. # | Reference | Dataset | Ventilator | Data Preprocessing/Mechanism | Evaluation Methods/Algorithms | Outcome/Explanation Type |
|---|---|---|---|---|---|---|
| [41] | Mamandipoor, B. et al. (2021) | Ventila dataset with 12,596 | Yes | Matthews correlation coefficient (MCC)/Global | LR, RF, LSTM, and RNN. | AUROC, AP, PPV, and NPV/post-hoc |
| [42] | Hu, C.A. et al. (2021) | 336 | Yes | Kolmogorov–Smirnov test, Student’s t-test, Fisher’s exact test, Mann–Whitney U test, and SHAP/Global | XGBoost, RF, and LR. | p-value, AUROC/ante-hoc |
| [43] | Rueckel, J. et al. (2021) | 86,876 | Restricted ventilation (atelectasis) | Fleischner criteria, Youden’s J Statistics, Nonpaired Student t-test/Global | Deep Neural Network. | Sensitivity, Specificity, NPV, PPV, accuracy, and AUROC/post-hoc |
| [44] | Greco, M. et al. (2021) | 1503 | Yes | 10-fold cross validation, Kaplan–Meier curves, imputation and SVM-SMOTE/Global | LR and Supervised machine learning models | AUC, Precision, Recall, F1 score/ante-hoc |
| [45] | Ye, J. et al. (2020) | 9954 | No | Sequential Organ Failure Assessment (SOFA) score, Simplified Acute Physiology Score II (SAPS II), and Acute Physiology Score III (APS III)/Global | Majority voting, XGBoost, Gradient boosting, Knowledge-guided CNN to combine CUI features and word features. | AUC, PPV, TPR, and F1 score/ante-hoc |
| [46] | Kong, G. et al. (2020) | 16,688 | Yes | SOFA and SAPS II scores/Local | Least absolute shrinkage and selection operator (LASSO), RF, GBM, and LR. | AUROC, Brier score, sensitivity, specificity, and calibration plot/ante-hoc |
| [47] | Nie, X. et al. (2021) | 760 | No | Glasgow Coma Scale (GCS) score, and APACHE II/Global | Nearest neighbors, decision tree, neural net, AdaBoost, random forest, and gcForest. | Sensitivity, specificity, accuracy, and AUC/ante-hoc |
| [48] | Theis, J. et al. (2021) | 2436 | N.A. | SHAP, SOFA, Oxford Acute Severity of Illness Score (OASIS), APS-III, SAPS-II score, and decay replay mining/Global | LSTM encoder–decoder, Dense Neural Network. | AUROC, Mean AUROC and 10-fold CV AUROC/post-hoc |
| [49] | Jentzer, J.C. et al. (2021) | 5680 | Yes | The Charlson Comorbidity Index, individual comorbidities, and severity of illness scores, including the SOFA and APACHE-III and IV scores/Global | AI-ECG algorithm | AUC/post-hoc |
| [50] | Popadic, V. et al. (2021) | 160 | Yes | N.A./Local | Univariate and multivariate logistic regression models | p-values, ROC curves/ante-hoc |

| XAI Category | Sub-Section | References |
|---|---|---|
| Pre-Processing | Dataset Consistency | [15,18,21,22,23,25,26] |
| | Imputation | [4,22,25,26,28,29] |
| | Data Distribution | [15,18,21,22,25,26,28,29] |
| | Image Registration | [4,5,17,19] |
| | Feature Scoring | [11,12,13,17,18,25,28] |
| | Feature Priority | [9,11,16,23,24,25,28] |
| | Equal Feature Scoring | [11,15,19,20,29] |
| | Threshold (Feature Selection) | [12,17,20,23,24,25,26] |
| | Manual Feature Selection | [12,24,25,26,28] |
| | Binary/Multi-Class Feature | [4,23,25,29] |
| Methodology | Feature Validation | [14,18,25,26,28] |
| | Novel Approach | [4,11,16,20] |
| | Method Inefficiency | [22,23,25,26,28] |
| | Feature Analysis | [11,18,25,26,27,28,29] |
| | Severity Level Analysis | [9,23,25,29] |
| | Feature Effectiveness | [18,20,23,25,28] |
| | Feature Averaging | [4,28,29] |
| | Feature Improvements | [16,17,24,25,29] |
| Evaluation | Model Metrics | [4,17,26,27,28,29] |
| | Classification | [11,12,14,17,27] |
| | Graphs | [12,16,18,25,27,28,29] |

| Dataset Source | Medical Domain | Category | Size |
|---|---|---|---|
| RSNA (Radiological Society of North America) and NIH [72] | Pneumonia | NIH chest X-ray dataset with initial annotation. | 26,601 CXR images |
| Kermany [73] | Pneumonia | Chest X-rays | 5856 CXR images |
| Chest radiographs (SCR) dataset X-ray images [74] | Pneumonia | Chest radiographs | 247 frontal-view posterior-anterior (PA) images |
| Central Line-Associated Bloodstream Infections (CLABSI) in California Hospitals [75] | Blood Stream Infections (BSI) | The CLABSI (text/csv) dataset contains reported infections, baseline data predictions, central line day counts, standardized infection ratio (SIR), the associated 95% confidence interval, and grading with respect to the national baseline. | Details from 461 hospitals. |
| MIMIC Clinical Database [76,77] | Epidemics (EHR Data) | The MIMIC dataset consists of ICU data with a high patient count, including vital signs, laboratory tests, and medication courses. | The MIMIC-III database has 26 relational tables containing patient data (SUBJECT_ID), hospital admissions (HADM_ID), and ICU admissions (ICUSTAY_ID). |
| ICES Data Repository [78] | EHR Data | EHR data recorded from the health services of Ontario. | 13 million people. |
| Veterans Health Administration [79,80] | EHR Data | EHR data from the US Veterans Affairs (VA) dataset | 1293 health care facilities with 171 medical centers and 1112 outpatient sites. |

| 1. Diet | 2. Medicine/Treatment | 3. Exercise | 4. Regular Checkup | 5. Side Effects |
|---|---|---|---|---|
| a. Fruits b. Vegetables c. Seafood d. Meat e. Grains f. Soup g. Milk Products | a. Morning dose 1 b. Afternoon dose 2 c. Evening dose 3 d. Lotions/Drops e. Physiotherapy f. Injections g. Dialysis | a. Walking/Running b. Yoga c. Cycling d. Swimming e. Sports | a. Daily b. Alternate day c. Weekly d. Bio-sensors/Remote health monitoring e. Monthly f. Quarterly/Year | a. Vomiting b. Dizziness c. Headache d. Loss of Appetite e. Skin rashes f. Palpitations |

| 1. Diet | 2. Medicine/Treatment | 3. Exercise | 4. Regular Checkup | 5. Side Effects |
|---|---|---|---|---|
| | | | | |

| Serial No. | XAI Scoring Factor | Description | Checklist (10 pts Each) |
|---|---|---|---|
| 1 | XAI based Objectives | To serve the evaluation purpose for effective problem solving following laws and ethics. | |
| 2 | Dataset for Training Model | Whether the dataset has global and local scope? | |
| 3 | Data Pre-processing | Manage the data consistency and imbalance issue. | |
| 4 | Model Selection | To perform feature analysis and select an appropriate model with a novel approach. | |
| 5 | Model Reconfiguration | The model’s hyper-parameter tuning for better prediction by handling bias and variance. | |
| 6 | Interpretability | How much does the model support intrinsic and post-hoc interpretability? | |
| 7 | Explainability | Transparency in every step and decision of the model should be given by the algorithm. | |
| 8 | Evaluation, Feedback loop and Post-evaluation | The outcome should provide meaningful results. Graphs, prediction, and classification should be cross-verifiable. The feedback loop consisting of interacting with domain experts is helpful for post-evaluation. | |
| 9 | Human-in-the-Loop Process | Continuously involve the domain expert for improving multi-modal data and feature management. | |
| 10 | XAI Recommendation System | To maintain the discharged patient’s health conditions. | |
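
The checklist above assigns up to 10 points to each of its ten factors. As a minimal sketch of how such a checklist could be tallied, the Python snippet below sums the per-factor points. The function name `total_xai_score`, the treatment of unrated factors as zero, the resulting 0 to 100 total, and the example ratings are all assumptions made for illustration; they are not taken from the survey.

```python
# Factor names copied from the scoring table above.
XAI_FACTORS = [
    "XAI based Objectives",
    "Dataset for Training Model",
    "Data Pre-processing",
    "Model Selection",
    "Model Reconfiguration",
    "Interpretability",
    "Explainability",
    "Evaluation, Feedback loop and Post-evaluation",
    "Human-in-the-Loop Process",
    "XAI Recommendation System",
]
MAX_POINTS_PER_FACTOR = 10  # "10 pts Each" in the checklist column


def total_xai_score(points: dict) -> float:
    """Sum per-factor points; unrated factors count as 0 (an assumption)."""
    unknown = set(points) - set(XAI_FACTORS)
    if unknown:
        raise ValueError(f"unknown factors: {sorted(unknown)}")
    for name, value in points.items():
        if not 0 <= value <= MAX_POINTS_PER_FACTOR:
            raise ValueError(
                f"{name}: {value} is outside 0..{MAX_POINTS_PER_FACTOR}"
            )
    return sum(points.get(name, 0) for name in XAI_FACTORS)


# Hypothetical ratings for an imaginary system, for illustration only.
example = {name: 8 for name in XAI_FACTORS}
example["Interpretability"] = 5
max_total = MAX_POINTS_PER_FACTOR * len(XAI_FACTORS)
print(f"{total_xai_score(example)} out of {max_total}")
```

Rejecting unknown factor names and out-of-range values keeps a mistyped entry from silently changing the total; an equal weighting of the ten factors is the simplest reading of the checklist, and a weighted variant would need weights that the table does not provide.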

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sheu, R.-K.; Pardeshi, M.S. A Survey on Medical Explainable AI (XAI): Recent Progress, Explainability Approach, Human Interaction and Scoring System. Sensors 2022, 22, 8068. https://doi.org/10.3390/s22208068
