2.1. Existing Databases

In this section, we introduce the existing Asian facial expression databases. Table 1 presents detailed information on each, including the collection environment, number of images, number of subjects, and expression categories.

The Japanese Female Facial Expression (JAFFE) [10] database is a commonly used data set. It contains images of ten young Japanese women. Each woman posed three or four images of each of seven expressions: the six basic expressions [11] (anger, disgust, fear, happiness, sadness, and surprise) and neutral. Before the photo session, subjects were asked to keep their hair away from their faces so that all expressive regions were visible. Images were taken from the front with the face centered and were saved in an uncompressed grayscale format. Because the data set captures all seven expressions from every subject, it allows a comprehensive comparison of the variation between expressions. However, it contains expression data only from young women, so it cannot support analyses across gender or age.

The Taiwanese Facial Expression Image Database (TFEID) [12] consists of 40 subjects, half male and half female. All subjects were asked to perform eight expressions (the six basic expressions, contempt, and neutral) at two intensities (high and slight). Two cameras placed at different angles (0° and 45°) captured the expressions simultaneously. In total, 7200 image sequences were collected, but only part of the data set is publicly available: 268 images covering the six basic expressions and neutral. Because this public portion is so small, models trained on it generalize poorly, and it is suitable only for expression recognition in a single environment.

The POSTECH Face Database (PF07) [13] captured the expressions of 100 males and 100 females. The subjects were asked to pose four expressions (happy, surprise, anger, and neutral). Five cameras captured these expressions simultaneously. The cameras were placed in front of, above, below, to the left of, and to the right of the subject at 22.5° intervals. In addition, each expression at each angle was recorded under 16 different illumination conditions, giving 320 images per subject. Because it accounts for variation in viewing angle and illumination, this data set supports more robust expression recognition. Its disadvantage is that only four expressions were collected, which prevents direct comparison with other data sets.
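The per-subject count stated above follows from multiplying the three capture factors (expressions, camera angles, and illumination conditions). If every capture is retained for all 200 subjects, this implies 200 × 320 = 64,000 images in total:

$$
N_{\text{per subject}} = \underbrace{4}_{\text{expressions}} \times \underbrace{5}_{\text{camera angles}} \times \underbrace{16}_{\text{illuminations}} = 320
$$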

The Natural Visible and Infrared Facial Expression Database (NVIE) [14] is the first lab-controlled facial expression database that contains both natural visible and infrared images. All subjects were college students. Of these, 108 took part in posed expressions and 215 in spontaneous expressions induced by film clips. During acquisition, the effects of illumination direction (front, left, and right) and glasses (with and without) were considered. Images were taken simultaneously by a visible-light camera and an infrared camera placed directly in front of the subjects. However, spontaneous expressions cannot be fully controlled, and only disgust, fear, and happiness were successfully induced. Because the database contains both visible and infrared expression data, it can be used for multi-modal expression analysis.

The Chinese affective face picture system (CAFPS) [15] collected images from 220 participants, including college students, middle-aged and elderly people, and children. Subjects were asked to remove jewelry such as earrings and necklaces before photo acquisition. All images were taken directly in front of the subjects and saved as grayscale images. During post-processing, external features such as hair, ears, and neck were removed, leaving only the internal facial features. This data set is a standardized Chinese face system and is widely used in localized emotion research.

The Kotani Thermal Facial Emotion (KTFE) database [16] contains both visible and thermal facial expressions. Twenty-six subjects from Vietnam, Thailand, and Japan participated in the experiment. Seven types of emotional video clips were used to induce the corresponding emotions. All expressions were spontaneous, and there were no uniform requirements on head pose or on wearing glasses. During the experiment, lighting was kept uniform. An infrared camera placed directly in front of the subjects collected both visible and infrared images. Because the database contains infrared expression data, it can also be used for infrared and spectral expression analysis.

The Korea University Facial Expression Collection-Second Edition (KUFEC-II) [17] consists of 57 professional actors (28 males and 29 females) who were trained to pose for photographs. All subjects wore uniform clothing. They were instructed not to wear makeup, not to have exaggerated hair color, and to pin their hair back to reveal the hairline and ears. Three high-resolution cameras placed around the subjects took images simultaneously. The cameras were spaced 45° apart, covering the front and the left side. After the photo session, the 399 most representative images were selected and labeled on four dimensions (valence, arousal, type, and intensity). The data set was created strictly according to FACS, so the participants' facial expressions and actions are highly standardized. It is therefore well suited to animation production, where it can assist in generating the movements and expressions of virtual characters.

The Taiwanese facial emotional expression stimuli (TFEES) data set [18] combines the existing TFEID database with images acquired in the authors' own study. The TFEID portion consists of 1232 frontal-view facial expression images of 29 Taiwanese actors. The remaining 2477 images, of 61 Taiwanese participants, were taken during the study. All participants were asked to remove eyeglasses and to perform expressions according to the action units (AUs) described in FACS. A camera placed directly in front of each participant captured the images. Although the database contains many expression images, the expressions are posed, which limits its applicability to recognition in real-world scenes.

The Kokoro research center facial expression database (KRC) [19] collected images from 74 college students (50 males and 24 females). All subjects performed expressions according to the FACS examples provided. Besides looking straight ahead, subjects were asked to shift their gaze to the left and to the right while keeping their faces still, so that expressions with averted gaze were also captured. Three cameras photographed each subject simultaneously from the front, left, and right. The subjects' eyes were positioned consistently at the center of each picture. The database was designed specifically for psychological experiments. In addition to the basic expression categories, it is labeled with intensity ratings and discrimination performance.

The Tsinghua facial expression database (Tsinghua FED) [20] covers two age groups: 63 young and 47 older adults. Subjects were required to be free of facial tattoos and accessories such as glasses, necklaces, and earrings. They were also asked to wear no makeup that would conceal their actual age or skin texture. Eight expressions were induced by a three-stage emotion induction method: the six basic expressions, neutral, and content (a smile without teeth). Images were taken directly in front of the subjects and saved in color in JPEG format. Because the data set covers both young and older adults, it can be used to study how the facial expressions of East Asians change with age.

The Micro-and-macro Expression Warehouse (MMEW) [21] contains both macro- and micro-expressions. The subjects were 30 Chinese individuals (23 males and 7 females). Different types of emotional videos were used to induce all of them to produce the six basic expressions. A camera placed in front of the subjects captured the expressions. The faces were then cropped from the images and saved at a resolution of 231 × 231. Because no requirement was made regarding glasses, the database contains some expressions with glasses. Since it contains both macro- and micro-expressions from the same subjects, it can be used to study the differences between them.

Although the databases presented above are publicly available, they were all captured under laboratory-controlled conditions. All participants were required either to perform the given expressions or to have the corresponding emotions induced through video clips. Such controlled expressions cannot capture the complex variation of the real world. In recent years, researchers have increasingly recognized the importance of recognizing facial expressions in real scenes. This is a complex and challenging problem, and solving it depends on the amount and diversity of facial expression data.

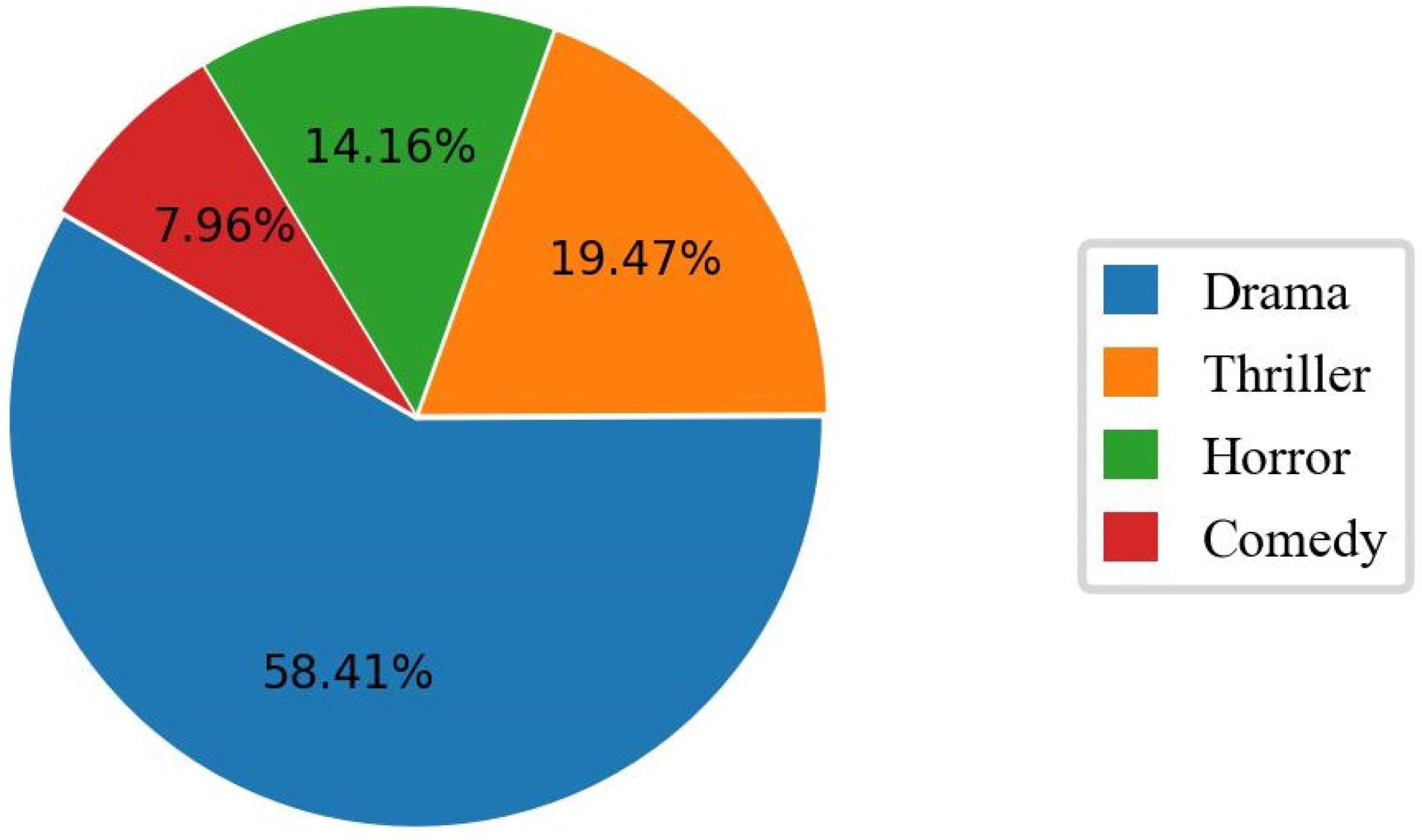

The Asian Face Expression (AFE) [6] database is the first large data set of Asian facial expressions captured under natural scene conditions. The raw data comprise about 500,000 Asian face images downloaded from Douban Movie. Three or four annotators labeled each image with one of seven expressions (the six basic expressions and neutral). Only images on which all annotations agreed were kept, yielding 54,601 images. This scale is sufficient for training large models. Moreover, the expressions in movies are diverse and better reflect the variability of real life.
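The agreement rule described above can be sketched as a simple filter: keep an image only when every annotator assigned the same label. This is a minimal illustration, not the AFE authors' code. The `keep_unanimous` function, the dictionary layout, and the image IDs are hypothetical.

```python
from typing import Dict, List

# The seven annotation categories: six basic expressions plus neutral.
LABELS = {"anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"}


def keep_unanimous(annotations: Dict[str, List[str]]) -> Dict[str, str]:
    """Return {image_id: label} for images whose annotators all agree.

    `annotations` maps a (hypothetical) image ID to the labels given by its
    three or four annotators. Images with any disagreement, no labels, or a
    label outside the seven categories are dropped.
    """
    kept = {}
    for image_id, labels in annotations.items():
        if labels and len(set(labels)) == 1 and labels[0] in LABELS:
            kept[image_id] = labels[0]
    return kept


if __name__ == "__main__":
    raw = {
        "img_001": ["happiness", "happiness", "happiness"],
        "img_002": ["sadness", "neutral", "sadness"],
        "img_003": ["surprise", "surprise", "surprise", "surprise"],
    }
    print(keep_unanimous(raw))  # {'img_001': 'happiness', 'img_003': 'surprise'}
```

A unanimity rule trades quantity for label reliability. In the figures reported above, it retains roughly one image in ten of the raw collection.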

However, AFE is currently the only real-world Asian facial expression database. This falls far short of the resources available for European-American cultures, in both the number of databases and the amount of expression data they contain. To promote the development of Asian FER in natural scenes, large amounts of expression data collected in real life are urgently needed.

2.2. Existing Algorithms

FER can be divided into three stages [22]: pre-processing, feature extraction, and feature classification. Feature extraction is the most important of these, because classification accuracy depends largely on the effectiveness of the extracted features [23].

In the pre-processing stage, face detection is usually used to extract facial regions. The most commonly used algorithms are Viola-Jones [24] and MTCNN [25]. The former detects faces stably in data sets collected under lab-controlled conditions, but its performance drops substantially in complex environments. The latter uses a deep learning approach that is more robust and performs better in complex natural scenes [2,26]. Moreover, MTCNN performs face alignment at the same time while maintaining real-time performance. Its disadvantage is that it may miss faces when an image contains many of them. Overall, it remains a good choice for face detection. To reduce the influence of illumination and head pose, some studies also pre-process images using illumination normalization [27] and pose normalization [28]. In addition, data augmentation methods are widely used to increase data diversity and thereby better train classification models [29].
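The text does not specify which illumination normalization or augmentation methods [27,29] use. As one common example, the sketch below applies global histogram equalization to a grayscale image. It then produces a horizontally flipped copy as a simple augmentation. To keep the example dependency-free, images are nested lists of 8-bit intensities, and the function names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 8-bit intensities (0-255)


def equalize_histogram(img: Image, levels: int = 256) -> Image:
    """Global histogram equalization: remap intensities through the normalized CDF."""
    flat = [p for row in img for p in row]
    n = len(flat)
    if n == 0:
        return [row[:] for row in img]
    # Histogram of intensity values.
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    # Cumulative distribution function (running count of pixels <= value).
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest occurring value
    if n == cdf_min:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in img]
    # Lookup table; multiply before dividing to reduce floating-point error.
    lut = [round(max(c - cdf_min, 0) * (levels - 1) / (n - cdf_min)) for c in cdf]
    return [[lut[p] for p in row] for row in img]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left-to-right, a simple label-preserving augmentation."""
    return [row[::-1] for row in img]


if __name__ == "__main__":
    face = [[10, 20], [30, 40]]  # toy low-contrast "image"
    eq = equalize_histogram(face)
    print(eq)                   # [[0, 85], [170, 255]]
    print(horizontal_flip(eq))  # [[85, 0], [255, 170]]
```

Equalization stretches the narrow 10 to 40 range over the full 0 to 255 scale, reducing the effect of global lighting differences. Flipping doubles the training set without changing the expression label.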

The main function of feature extraction is to extract the most representative and descriptive information from images. Traditional feature extraction algorithms rely on handcrafted features and can be roughly divided into five categories:

- texture-based methods, including Gabor [30], LBP [31], and LBP variants such as LDP [32] and WPLBP [33];
- edge-based methods, including HOG [34] and its variant PHOG [30];
- global and local feature-based methods, including PCA [35] and SWLDA [36];
- geometric feature-based methods, including LCT [37];
- salient patch-based methods [38].

These methods dominated in the past, mainly because earlier studies relied on data collected under controlled conditions.

In recent years, deep learning has shown powerful feature extraction capabilities, acquiring high-level abstract features through multiple layers of nonlinear transformations. Deep learning methods are gaining popularity among researchers. Examples include convolutional neural networks (CNN) [27], deep belief networks (DBN) [39], deep autoencoders (DAE) [40], and generative adversarial networks (GAN) [41].

A CNN relies on a set of learnable filters to extract features and is robust to changes in face position and scale. However, its network structure must be carefully designed, and the larger the model, the higher the training cost.

A DBN is a directed graphical model that can learn deep hierarchical representations of the training data, and sampling from it is simple. However, its probabilistic graph cannot be decomposed, which makes computing the posterior very difficult.

DAE and GAN are both commonly used for data generation and rely on unsupervised learning (on unlabeled data) for feature extraction and representation. A DAE uses an encoder–decoder structure to reconstruct its input by minimizing the reconstruction error. However, this objective attends to local details of the data rather than capturing global information. A GAN is based on adversarial generation. Its discriminator judges each sample as a whole and therefore captures global information better. However, the generator's random input corresponds only weakly to the data, which makes the generation quality hard to control.

Overall, CNNs are widely used in FER because of their strengths in pattern recognition.
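To make the handcrafted texture features listed above concrete, the sketch below computes the basic 3 × 3 LBP code [31] for each interior pixel. It then pools the codes into a 256-bin histogram, the kind of descriptor a classical pipeline passes to a classifier. This is a minimal, dependency-free illustration that assumes a common convention: neighbors are read clockwise from the top-left, and a neighbor counts as 1 when it is `>=` the center. Variants such as LDP or WPLBP compute their codes differently.

```python
from typing import List

Image = List[List[int]]  # rectangular grayscale image as rows of intensities

# Offsets of the 8 neighbors, clockwise from the top-left pixel.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """8-bit LBP code of interior pixel (r, c): a bit is 1 if that neighbor >= the center."""
    center = img[r][c]
    code = 0
    for k, (dr, dc) in enumerate(NEIGHBORS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << (7 - k)  # the top-left neighbor is the most significant bit
    return code


def lbp_histogram(img: Image) -> List[int]:
    """256-bin histogram of LBP codes over all interior pixels (borders skipped)."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[r]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


if __name__ == "__main__":
    patch = [[6, 5, 2],
             [7, 6, 1],
             [9, 8, 7]]
    print(lbp_code(patch, 1, 1))  # 143 (binary 10001111)
```

In FER, the face is commonly divided into a grid of regions, and the per-region LBP histograms are concatenated into one feature vector. This keeps some spatial layout that a single global histogram would lose.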

Feature classification is the last stage of FER; it assigns each face to an expression category based on the extracted features. Among classical feature classification algorithms, support vector machines (SVM) [42] are by far the most widely used, followed by k-nearest neighbors (KNN) [43]. AdaBoost [44], naive Bayes (NB) [45], and random forests (RF) [46] are also used for feature classification. However, as facial expression data grow more complex and diverse, these traditional methods become less adequate. As an end-to-end learning method, a neural network can directly output the probability that a sample belongs to each category after feature extraction. Commonly used neural network-based classifiers are CNNs [47] and multilayer perceptrons (MLP) [39]. An MLP is simpler and easier to implement, but its fully connected layers discard the spatial relationships between pixels. In contrast, a CNN accounts for the spatial correlation of pixels and can achieve good results with fewer parameters. Therefore, most recent studies have used CNNs for feature classification.
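The parameter argument above can be made concrete with a back-of-the-envelope count. For illustration only, assume a 48 × 48 grayscale input and 128 output units or filters; neither figure comes from the text. A fully connected layer needs one weight per input pixel per unit. A convolutional layer instead shares one small kernel across all positions. The helper names are hypothetical.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully connected (MLP) layer."""
    return n_inputs * n_units + n_units


def conv_params(k_h: int, k_w: int, in_ch: int, out_ch: int) -> int:
    """Weights plus biases of a 2-D convolution layer; independent of image size."""
    return k_h * k_w * in_ch * out_ch + out_ch


if __name__ == "__main__":
    H = W = 48  # assumed input resolution (illustrative)
    print(dense_params(H * W, 128))   # 295040
    print(conv_params(3, 3, 1, 128))  # 1280
```

The convolutional count does not grow with input resolution, because the same 3 × 3 kernels slide over every position. This weight sharing is also what lets the layer preserve local spatial structure. A complete CNN stacks several such layers, so the comparison is between single layers, not whole models.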