SDHAR-HOME: A Sensor Dataset for Human Activity Recognition at Home

Abstract

1. Introduction

2. Related Work

2.1. Summary of Database Developments

2.2. Summary of Activity Recognition Methods

3. The SDHAR-HOME Database

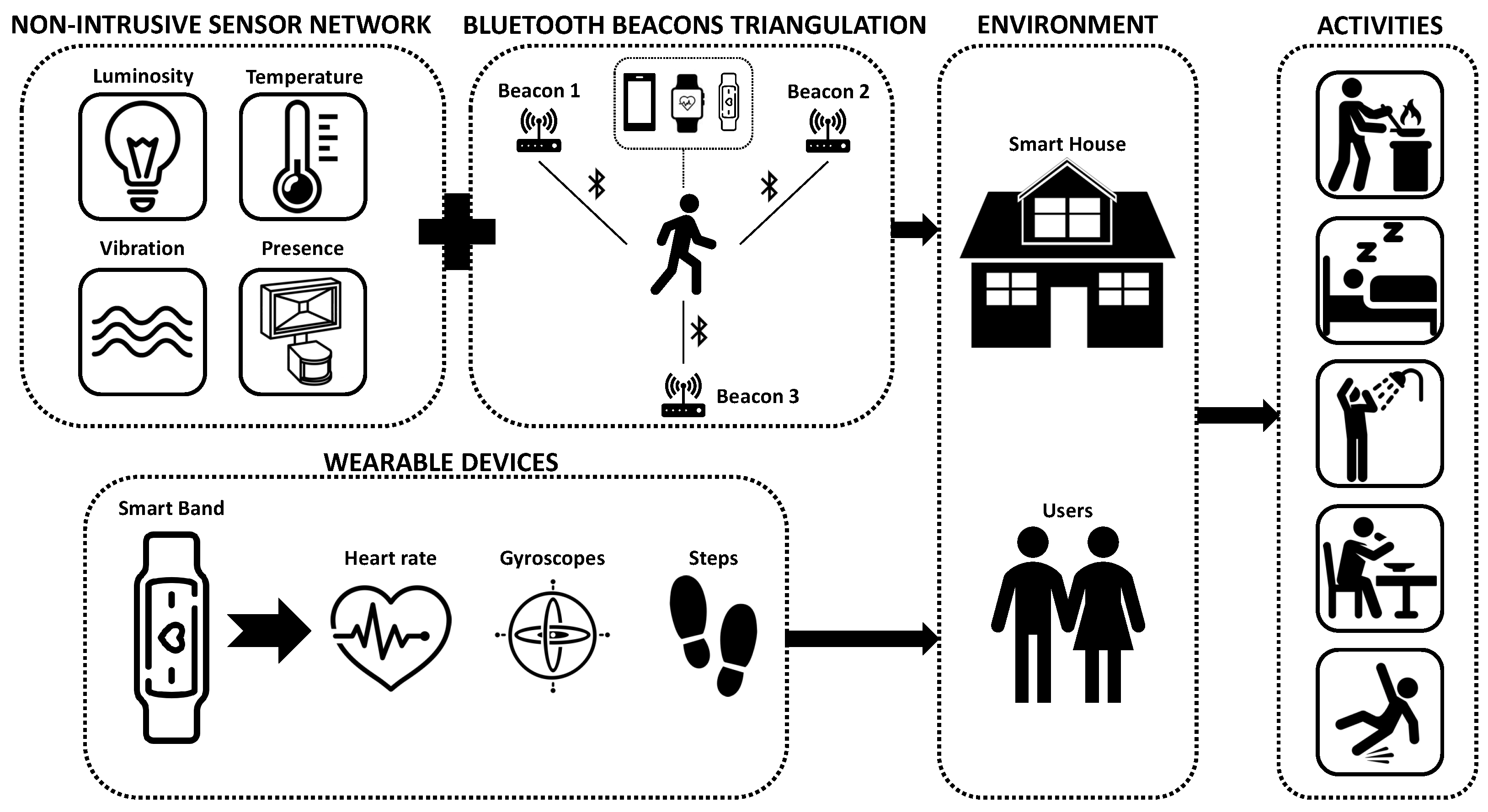

3.1. Overview of the Monitoring System: Hardware, Software and Control

3.2. Non-Intrusive Sensor-Based Home Event Logging

- Aqara Motion Sensor (M): This type of sensor is used to obtain information about the presence of users in each of the rooms of the home. Six sensors of this type were initially used, one for each room of the house. In addition, two more sensors were used to divide the living room and the hall into two different zones. These two sensors were located on the walls, specifically in high areas facing the floor.

- Aqara Door and Window Sensor (C): This type of sensor is used to detect the opening of doors or cabinets. For example, it is used to detect the opening of the refrigerator or the microwave, as well as to recognise the opening of the street door or the medicine drawer. In total, eight sensors of this type were used.

- Temperature and Humidity Sensor (TH): It is important to know the variations of temperature and humidity in order to recognise activities. This type of sensor was placed in the kitchen and bathroom to recognise whether a person is cooking or taking a shower, as both activities increase the temperature and the humidity. These sensors were placed on the ceramic hob in the kitchen and near the shower in the bathroom.

- Aqara Vibration Sensor (V): The vibration sensor chosen is easy to install and can be hidden very easily. This sensor perceives any type of vibration that takes place in the furniture where it is located. For example, it was used to perceive vibrations in chairs and to know whether a user sat on the sofa or lay down on the bed. It is also able to recognise cabinet openings.

- Xiaomi Mi ZigBee Smart Plug (P): There are activities that are intimately linked to the use of certain household appliances. For this reason, an electrical monitoring device was installed to know when the television is on and another consumption sensor to know if the washing machine is being used. In addition, this type of sensor provides protection against overcurrent and overheating, which increases the level of safety of the appliance.

- Xiaomi MiJia Light Intensity Sensor (L): This sensor detects light in a certain room. This is useful when it is necessary to detect, for example, if a user is sleeping. Presence sensors can recognise movement in the bedroom during the day, but if the light is off, it is very likely that the person is sleeping. This sensor was also used in the bathroom.
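As an illustration of how two of these sensors can be combined, the following minimal sketch encodes the bedroom reasoning described above: presence together with a dark room suggests that the user is sleeping. The class name, field names and the illuminance threshold are hypothetical and are not taken from the dataset.

```python
from dataclasses import dataclass


@dataclass
class BedroomReading:
    """One snapshot of the bedroom sensors (field names are illustrative)."""
    motion_detected: bool   # motion sensor (M) fired recently
    illuminance_lux: float  # light intensity sensor (L) reading


# Hypothetical threshold below which the room is treated as dark.
DARK_LUX_THRESHOLD = 10.0


def likely_sleeping(reading: BedroomReading) -> bool:
    """Return True when the user is present in a dark bedroom."""
    is_dark = reading.illuminance_lux < DARK_LUX_THRESHOLD
    return reading.motion_detected and is_dark
```

A hand-written rule of this kind is only meant to make the intuition concrete; it is not the recognition method used for the dataset.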

3.3. Position Triangulation System Using Beacons

3.4. User Data Logging via Activity Wristbands

- Heart rate: The wristbands estimate the heart rate from the difference between a small beam of light emitted at the bottom of the device and the light measured by a photoelectric sensor in the same position. In this way, the heart rate in beats per minute can be estimated from the amount of light absorbed by the wearer’s blood.

- Calories and fat burned: Thanks to measurements of the user’s heart rate, and supported by physiological parameters provided by the user, the wristbands are able to estimate calories and fat burned throughout the day.

- Accelerometer and gyroscope data: By default, wristbands do not broadcast information from their internal accelerometers and gyroscopes, as this would result in premature battery drain due to the huge information flow. However, the emission protocol of the wristbands has been modified to share this information, as the position and movements of the hand are very important in the execution of activities.

- Steps and metres travelled: In the same way as calories and fat burned, using the information from accelerometers and gyroscopes, with the support of physiological parameters provided by the user, it is possible to estimate the steps and metres travelled by the user at any time.

- Battery status: The wristband also provides information on its battery level, as well as its status (whether it is charging or in normal use).
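The optical heart rate principle described in the list above can be sketched as counting pulse peaks in the measured light signal. The example below is a simplified illustration on a synthetic, noise-free signal; the function name, sampling rate and peak rule are assumptions, and the algorithm actually used by the wristbands is not described in this section.

```python
import math


def estimate_bpm(samples, sample_rate_hz):
    """Estimate beats per minute from the spacing of local maxima."""
    mean = sum(samples) / len(samples)
    # A peak is a sample above the mean that is higher than its left
    # neighbour and not lower than its right neighbour.
    peaks = [
        i
        for i in range(1, len(samples) - 1)
        if samples[i - 1] < samples[i] >= samples[i + 1] and samples[i] > mean
    ]
    if len(peaks) < 2:
        raise ValueError("need at least two pulse peaks")
    seconds = (peaks[-1] - peaks[0]) / sample_rate_hz
    return 60.0 * (len(peaks) - 1) / seconds


# Synthetic signal pulsing at 1.2 Hz (72 beats per minute), sampled at 50 Hz.
RATE_HZ = 50
signal = [math.sin(2 * math.pi * 1.2 * n / RATE_HZ) for n in range(10 * RATE_HZ)]
bpm = estimate_bpm(signal, RATE_HZ)
```

A real signal would need filtering and motion-artefact handling before peak counting, which this sketch omits.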

3.5. Data Storage in InfluxDB

4. Activity Recognition through Deep Learning

4.1. RNN-Models

4.2. LSTM-Models

4.3. GRU-Models

4.4. Data Processing and Handling

- Oversampling: The number of occurrences per activity varies throughout the dataset. If the network is trained with too many samples of one type of activity, the system may become biased towards generating that output. Therefore, in order to train the network with the same number of occurrences for each type of activity, an algorithm is applied that duplicates examples from the minority classes until the number of windows is equalised.

- Data sharing: Despite the duplication mechanism, some activities do not achieve high hit rates. Neural networks generally benefit from training on a greater variability of cases and situations, so a mechanism that shares activity records between the two users was developed. For activities with worse hit rates and less variability, the algorithm adds situations experienced by the other user to the training subset in order to improve the generalisation of the model. For example, the activities “Chores”, “Cook”, “Pet Care” and “Read” produced low results for user 2. For this reason, the data sharing algorithm added to user 2’s training set different time windows from user 1’s dataset in which he/she was performing these activities. Before the algorithm was applied, the average success rate of these activities was 21%. Once it was applied, the success rate exceeded 80%, which represents a significant increase in network performance.
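The two mechanisms above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the representation of a window as a `(features, label)` pair, the random choice of duplicates and the order in which the two steps are applied are all assumptions.

```python
import random
from collections import Counter, defaultdict


def oversample(windows, seed=0):
    """Duplicate minority-class windows until every activity has equal count.

    `windows` is a list of (features, activity_label) pairs.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for window in windows:
        by_label[window[1]].append(window)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw random duplicates to fill the gap to the largest class.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced


def share_windows(own, other, activities):
    """Add the other user's windows for the listed activities to `own`."""
    return own + [w for w in other if w[1] in activities]


# Toy data: user 2 has few "Read" windows and no "Cook" windows.
user2 = [("w1", "Sleep"), ("w2", "Sleep"), ("w3", "Sleep"), ("w4", "Read")]
user1 = [("w5", "Read"), ("w6", "Cook"), ("w7", "Sleep")]
train = oversample(share_windows(user2, user1, {"Read", "Cook"}))
counts = Counter(label for _, label in train)
```

In this toy case every activity ends up with three windows. Sharing adds new situations, whereas oversampling only repeats existing ones, which is consistent with the motivation given for combining both.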

4.5. Resulting Neural Network Model

5. Experiments and Discussion

- Models based on recurrent neural networks are useful for analysing discrete time series data, such as data provided by binary sensors and positions provided by beacons.

- In particular, the LSTM and GRU neural networks offer better results than traditional RNNs because they mitigate the vanishing gradient problem, at the cost of a higher computational load and a greater number of internal parameters.

- The early stopping technique has made it possible to stop training at the right time to avoid overfitting, which would reduce the success rate at the prediction phase.

- Prediction inaccuracies happen mostly on time-congruent activities or activities of a similar nature.

- It is possible to detect individual activities in multi-user environments. If sensor data were analysed in an isolated mode, the results would fall because these data are common to both users. The technology that makes it possible to differentiate between the two users is the positioning beacons, which indicate the position of each user in the house. In most cases, this makes it possible to discriminate which user is performing the activity.
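The role of the positioning beacons in the last point can be illustrated with a small sketch that attributes a shared sensor event to the only user located in the room where it occurred. The function name and data layout are hypothetical; this explicit rule only illustrates the idea and is not the mechanism described in this section.

```python
def attribute_event(event_room, user_rooms):
    """Return the user a sensor event belongs to, or None if ambiguous.

    `user_rooms` maps each user to the room reported by the beacons.
    """
    candidates = [user for user, room in user_rooms.items() if room == event_room]
    # Attribute the event only when exactly one user is in that room.
    return candidates[0] if len(candidates) == 1 else None


positions = {"user1": "Kitchen", "user2": "Lounge"}
owner = attribute_event("Kitchen", positions)
shared = attribute_event("Lounge", {"user1": "Lounge", "user2": "Lounge"})
```

When both users are in the same room the sketch returns `None`, which corresponds to the cases in which position alone cannot discriminate between them.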

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DL | Deep Learning |
| RNN | Recurrent Neural Networks |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |
| MQTT | Message Queuing Telemetry Transport |
| SVM | Support-Vector Machine |
| HMM | Hidden Markov Model |
| MLP | Multilayer Perceptron |

References

1. Singh, R.; Sonawane, A.; Srivastava, R. Recent evolution of modern datasets for human activity recognition: A deep survey. Multimed. Syst. 2020, 26, 83–106.

2. Khelalef, A.; Ababsa, F.; Benoudjit, N. An efficient human activity recognition technique based on deep learning. Pattern Recognit. Image Anal. 2019, 29, 702–715.

3. Cobo Hurtado, L.; Viñas, P.F.; Zalama, E.; Gómez-García-Bermejo, J.; Delgado, J.M.; Vielba García, B. Development and usability validation of a social robot platform for physical and cognitive stimulation in elder care facilities. Healthcare 2021, 9, 1067.

4. De-La-Hoz-Franco, E.; Ariza-Colpas, P.; Quero, J.M.; Espinilla, M. Sensor-based datasets for human activity recognition - A systematic review of literature. IEEE Access 2018, 6, 59192–59210.

5. Antar, A.D.; Ahmed, M.; Ahad, M.A.R. Challenges in sensor-based human activity recognition and a comparative analysis of benchmark datasets: A review. In Proceedings of the 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Spokane, WA, USA, 30 May–2 June 2019; pp. 134–139.

6. American Time Use Survey Home Page. 2013. Available online: https://www.bls.gov/tus/ (accessed on 23 June 2022).

7. Caba Heilbron, F.; Escorcia, V.; Ghanem, B.; Carlos Niebles, J. Activitynet: A large-scale video benchmark for human activity understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 961–970.

8. Wang, L.; Gu, T.; Tao, X.; Lu, J. Sensor-based human activity recognition in a multi-user scenario. In Proceedings of the European Conference on Ambient Intelligence, Salzburg, Austria, 18–21 November 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 78–87.

9. Li, Q.; Gravina, R.; Li, Y.; Alsamhi, S.H.; Sun, F.; Fortino, G. Multi-user activity recognition: Challenges and opportunities. Inf. Fusion 2020, 63, 121–135.

10. Golestani, N.; Moghaddam, M. Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks. Nat. Commun. 2020, 11, 1551.

11. Jung, M.; Chi, S. Human activity classification based on sound recognition and residual convolutional neural network. Autom. Constr. 2020, 114, 103177.

12. Sawant, C. Human activity recognition with openpose and Long Short-Term Memory on real time images. EasyChair Preprint 2020. Available online: https://easychair.org/publications/preprint/gmWL (accessed on 5 September 2022).

13. Sousa Lima, W.; Souto, E.; El-Khatib, K.; Jalali, R.; Gama, J. Human activity recognition using inertial sensors in a smartphone: An overview. Sensors 2019, 19, 3213.

14. Espinilla, M.; Medina, J.; Nugent, C. UCAmI Cup. Analyzing the UJA human activity recognition dataset of activities of daily living. Proceedings 2018, 2, 1267.

15. Mekruksavanich, S.; Promsakon, C.; Jitpattanakul, A. Location-based daily human activity recognition using hybrid deep learning network. In Proceedings of the 2021 18th International Joint Conference on Computer Science and Software Engineering (JCSSE), Lampang, Thailand, 30 June–2 July 2021; pp. 1–5.

16. Zhang, M.; Sawchuk, A.A. USC-HAD: A daily activity dataset for ubiquitous activity recognition using wearable sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 1036–1043.

17. Tapia, E.M.; Intille, S.S.; Lopez, L.; Larson, K. The design of a portable kit of wireless sensors for naturalistic data collection. In Proceedings of the International Conference on Pervasive Computing, Dublin, Ireland, 7–10 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 117–134.

18. Lago, P.; Lang, F.; Roncancio, C.; Jiménez-Guarín, C.; Mateescu, R.; Bonnefond, N. The ContextAct@ A4H real-life dataset of daily-living activities. In Proceedings of the International and Interdisciplinary Conference on Modeling and Using Context, Paris, France, 20–23 June 2017; Springer: Cham, Switzerland, 2017; pp. 175–188.

19. Alshammari, T.; Alshammari, N.; Sedky, M.; Howard, C. SIMADL: Simulated activities of daily living dataset. Data 2018, 3, 11.

20. Arrotta, L.; Bettini, C.; Civitarese, G. The marble dataset: Multi-inhabitant activities of daily living combining wearable and environmental sensors data. In Proceedings of the International Conference on Mobile and Ubiquitous Systems: Computing Networking, and Services, Virtual, 8–11 November 2021; Springer: Cham, Switzerland, 2021; pp. 451–468.

21. Roggen, D.; Calatroni, A.; Rossi, M.; Holleczek, T.; Förster, K.; Tröster, G.; Lukowicz, P.; Bannach, D.; Pirkl, G.; Ferscha, A.; et al. Collecting complex activity datasets in highly rich networked sensor environments. In Proceedings of the 2010 Seventh International Conference on Networked Sensing Systems (INSS), Kassel, Germany, 15–18 June 2010; pp. 233–240.

22. Van Kasteren, T.; Noulas, A.; Englebienne, G.; Kröse, B. Accurate activity recognition in a home setting. In Proceedings of the 10th international Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; pp. 1–9.

23. Liu, H.; Hartmann, Y.; Schultz, T. CSL-SHARE: A multimodal wearable sensor-based human activity dataset. Front. Comput. Sci. 2021, 3, 759136.

24. Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. Ntu rgb+ d: A large scale dataset for 3d human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

25. Alemdar, H.; Ertan, H.; Incel, O.D.; Ersoy, C. ARAS human activity datasets in multiple homes with multiple residents. In Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013; pp. 232–235.

26. Cook, D.J.; Crandall, A.S.; Thomas, B.L.; Krishnan, N.C. CASAS: A smart home in a box. Computer 2012, 46, 62–69.

27. Cook, D.J. Learning setting-generalized activity models for smart spaces. IEEE Intell. Syst. 2010, 2010, 1.

28. Saleh, M.; Abbas, M.; Le Jeannes, R.B. FallAllD: An open dataset of human falls and activities of daily living for classical and deep learning applications. IEEE Sens. J. 2020, 21, 1849–1858.

29. Ruzzon, M.; Carfì, A.; Ishikawa, T.; Mastrogiovanni, F.; Murakami, T. A multi-sensory dataset for the activities of daily living. Data Brief 2020, 32, 106122.

30. Ojetola, O.; Gaura, E.; Brusey, J. Data set for fall events and daily activities from inertial sensors. In Proceedings of the 6th ACM Multimedia Systems Conference, Portland, OR, USA, 18–20 March 2015; pp. 243–248.

31. Pires, I.M.; Garcia, N.M.; Zdravevski, E.; Lameski, P. Activities of daily living with motion: A dataset with accelerometer, magnetometer and gyroscope data from mobile devices. Data Brief 2020, 33, 106628.

32. Ramos, R.G.; Domingo, J.D.; Zalama, E.; Gómez-García-Bermejo, J. Daily human activity recognition using non-intrusive sensors. Sensors 2021, 21, 5270.

33. Shi, J.; Peng, D.; Peng, Z.; Zhang, Z.; Goebel, K.; Wu, D. Planetary gearbox fault diagnosis using bidirectional-convolutional LSTM networks. Mech. Syst. Signal Process. 2022, 162, 107996.

34. Liciotti, D.; Bernardini, M.; Romeo, L.; Frontoni, E. A sequential deep learning application for recognising human activities in smart homes. Neurocomputing 2020, 396, 501–513.

35. Xia, K.; Huang, J.; Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 2020, 8, 56855–56866.

36. Lee, J.; Ahn, B. Real-time human action recognition with a low-cost RGB camera and mobile robot platform. Sensors 2020, 20, 2886.

37. Khan, I.U.; Afzal, S.; Lee, J.W. Human activity recognition via hybrid deep learning based model. Sensors 2022, 22, 323.

38. Domingo, J.D.; Gómez-García-Bermejo, J.; Zalama, E. Visual recognition of gymnastic exercise sequences. Application to supervision and robot learning by demonstration. Robot. Auton. Syst. 2021, 143, 103830.

39. Laput, G.; Ahuja, K.; Goel, M.; Harrison, C. Ubicoustics: Plug-and-play acoustic activity recognition. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology, Berlin, Germany, 14–17 October 2018; pp. 213–224.

40. Li, Y.; Wang, L. Human Activity Recognition Based on Residual Network and BiLSTM. Sensors 2022, 22, 635.

41. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

42. Wan, S.; Qi, L.; Xu, X.; Tong, C.; Gu, Z. Deep learning models for real-time human activity recognition with smartphones. Mob. Netw. Appl. 2020, 25, 743–755.

43. Zolfaghari, S.; Loddo, A.; Pes, B.; Riboni, D. A combination of visual and temporal trajectory features for cognitive assessment in smart home. In Proceedings of the 2022 23rd IEEE International Conference on Mobile Data Management (MDM), Paphos, Cyprus, 6–9 June 2022; pp. 343–348.

44. Zolfaghari, S.; Khodabandehloo, E.; Riboni, D. TraMiner: Vision-based analysis of locomotion traces for cognitive assessment in smart-homes. Cogn. Comput. 2021, 14, 1549–1570.

45. Home Assistant. 2022. Available online: https://www.home-assistant.io/ (accessed on 21 June 2022).

46. Wireless Smart Temperature Humidity Sensor | Aqara. 2016. Available online: https://www.aqara.com/us/temperature_humidity_sensor.html (accessed on 21 June 2022).

47. Xiaomi Página Oficial | Xiaomi Moviles - Xiaomi España. 2010. Available online: https://www.mi.com/es (accessed on 26 June 2022).

48. Hartmann, D. Sensor integration with zigbee inside a connected home with a local and open sourced framework: Use cases and example implementation. In Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 5–9 December 2019; pp. 1243–1246.

49. Dinculeană, D.; Cheng, X. Vulnerabilities and limitations of MQTT protocol used between IoT devices. Appl. Sci. 2019, 9, 848.

50. Duque Domingo, J.; Gómez-García-Bermejo, J.; Zalama, E.; Cerrada, C.; Valero, E. Integration of computer vision and wireless networks to provide indoor positioning. Sensors 2019, 19, 5495.

51. Babiuch, M.; Foltýnek, P.; Smutný, P. Using the ESP32 microcontroller for data processing. In Proceedings of the 2019 20th International Carpathian Control Conference (ICCC), Krakow-Wieliczka, Poland, 26–29 May 2019; pp. 1–6.

52. Home | ESPresense. 2022. Available online: https://espresense.com/ (accessed on 21 June 2022).

53. Pino-Ortega, J.; Gómez-Carmona, C.D.; Rico-González, M. Accuracy of Xiaomi Mi Band 2.0, 3.0 and 4.0 to measure step count and distance for physical activity and healthcare in adults over 65 years. Gait Posture 2021, 87, 6–10.

54. Maragatham, T.; Balasubramanie, P.; Vivekanandhan, M. IoT Based Home Automation System using Raspberry Pi 4. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1055, 012081.

55. Naqvi, S.N.Z.; Yfantidou, S.; Zimányi, E. Time Series Databases and Influxdb; Université Libre de Bruxelles: Brussels, Belgium, 2017.

56. Nasar, M.; Kausar, M.A. Suitability of influxdb database for iot applications. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 1850–1857.

57. Chakraborty, M.; Kundan, A.P. Grafana. In Monitoring Cloud-Native Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 187–240.

58. Hughes, T.W.; Williamson, I.A.; Minkov, M.; Fan, S. Wave physics as an analog recurrent neural network. Sci. Adv. 2019, 5, eaay6946.

59. Domingo, J.D.; Zalama, E.; Gómez-García-Bermejo, J. Improving Human Activity Recognition Integrating LSTM with Different Data Sources: Features, Object Detection and Skeleton Tracking. IEEE Access 2022, in press.

60. Mekruksavanich, S.; Jitpattanakul, A. Lstm networks using smartphone data for sensor-based human activity recognition in smart homes. Sensors 2021, 21, 1636.

61. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

62. Dey, R.; Salem, F.M. Gate-variants of gated recurrent unit (GRU) neural networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1597–1600.

63. Chen, J.; Jiang, D.; Zhang, Y. A hierarchical bidirectional GRU model with attention for EEG-based emotion classification. IEEE Access 2019, 7, 118530–118540.

| Name of Database | Houses | Multi-Person | Duration | Type and Number of Sensors | Activities |
|---|---|---|---|---|---|
| USC-HAD [16] | 1 | No | 6 h | Wearable sensors (5) | 12 |
| MIT PlaceLab [17] | 2 | No | 2–8 months | Wearable + Ambient sensors (77–84) | 10 |
| ContextAct@A4H [18] | 1 | No | 1 month | Ambient sensors + Actuators (219) | 7 |
| SIMADL [19] | 1 | No | 63 days | Ambient sensors (29) | 5 |
| MARBLE [20] | 1 | No | 16 h | Wearable + Ambient Sensors (8) | 13 |
| OPPORTUNITY [21] | 15 | No | 25 h | Wearable + Ambient sensors (72) | 18 |
| UvA [22] | 3 | No | 28 days | Ambient sensors (14) | 10–16 |
| CSL-SHARE [23] | 1 | No | 2 h | Wearable sensors (10) | 22 |
| NTU RGB+D [24] | - | Yes | - | RGB-D cameras | 60 |
| ARAS [25] | 2 | Yes | 2 months | Ambient sensors (20) | 27 |
| CASAS [26] | 7 | Yes | 2–8 months | Wearable + Ambient sensors (20–86) | 11 |
| ADL [29] | 1 | No | 2 h | Wearable sensors (6) | 9 |
| Cogent-House [30] | 1 | No | 23 min | Wearable sensors (12) | 11 |
| Pires, I. et al. [31] | 1 | No | 14 h | Smartphone sensors | 5 |
| SDHAR-HOME (Proposed) | 1 | Yes | 2 months | Wearable + Ambient sensors (35) + Positioning (7) | 18 |

| Activities | | |
|---|---|---|
| Bathroom Activity | Chores | Cook |
| Dishwashing | Dress | Eat |
| Laundry | Make Simple Food | Out Home |
| Pet Care | Read | Relax |
| Shower | Sleep | Take Meds |
| Watch TV | Work | Other |

| Room | Activity | Sensors | Location |
|---|---|---|---|
| Bedroom | Sleep | PIR | Wall |
| | | Vibration | Bed |
| | | Light | Wall |
| | Dress | PIR | Wall |
| | | Contact | Wardrobe |
| | | Vibration | Wardrobe |
| | Read | PIR | Wall |
| | | Light | Wall |
| Bathroom | Take Meds | PIR | Wall |
| | | Contact | Drawer |
| | Bathroom Activity | PIR | Wall |
| | | Light | Wall |
| | Shower | PIR | Wall |
| | | Light | Wall |
| | | Temp.+Hum. | Wall |
| Kitchen | Cook | PIR | Wall |
| | | Temp.+Hum. | Wall |
| | | Contact | Appliances |
| | Dishwashing | PIR | Wall |
| | | Contact | Dishwasher |
| | Make Simple Food | PIR | Wall |
| | | Contact | Wardrobes |
| | Eat | PIR | Wall |
| | | Light | Wall |
| | | Vibration | Chair |
| | Pet Care | PIR | Wall |
| | | Vibration | Bowl |
| | Laundry | PIR | Wall |
| | | Consumption | Washer |
| Study Room | Work | PIR | Wall |
| | | Vibration | Chair |
| Lounge | Watch TV | PIR | Wall |
| | | Consumption | TV |
| | | Vibration | Sofa |
| | Relax | PIR | Wall |
| | | Vibration | Sofa |
| Hall | Out Home | PIR | Wall |
| | | Contact | Door |
| | Chores | PIR | Wall |
| | | Contact | Wardrobe |

| Type of Sensor | Reference | Total Number |
|---|---|---|
| Aqara Motion Sensor (M) | RTCGQ11LM | 8 |
| Aqara Door and Window Sensor (C) | MCCGQ11LM | 8 |
| Aqara Temperature and Humidity Sensor (TH) | WSDCGQ11LM | 2 |
| Aqara Vibration Sensor (V) | DJT11LM | 11 |
| Xiaomi Mi ZigBee Smart Plug (P) | ZNCZ04LM | 2 |
| Xiaomi MiJia Light Intensity Sensor (L) | GZCGQ01LM | 2 |
| Total | | 33 |

| User | Metrics | RNN Network | LSTM Network | GRU Network |
|---|---|---|---|---|
| User 1 | Accuracy | 89.59% | 89.63% | 90.91% |
| | Epochs | 40 | 32 | 41 |
| | Training time | 18,222 s | 35,616 s | 56,887 s |
| | Test time | 32 s | 47 s | 110 s |
| | Parameters | 1,515,410 | 2,508,050 | 2,177,554 |
| User 2 | Accuracy | 86.26% | 88.29% | 86.21% |
| | Epochs | 132 | 40 | 70 |
| | Training time | 67,115 s | 50,209 s | 111,223 s |
| | Test time | 32 s | 94 s | 110 s |
| | Parameters | 1,515,410 | 2,508,050 | 2,177,554 |

| Activity | Precision (User 1–User 2) | Recall (User 1–User 2) | F1-Score (User 1–User 2) |
|---|---|---|---|
| Bathroom Activity | 0.87–0.82 | 0.92–0.99 | 0.89–0.90 |
| Chores | 0.99–0.64 | 0.59–1.00 | 0.74–0.78 |
| Cook | 0.96–0.72 | 0.79–1.00 | 0.86–0.84 |
| Dishwashing | 0.64–1.00 | 0.55–1.00 | 0.59–1.00 |
| Dress | 0.94–0.40 | 1.00–1.00 | 0.97–0.57 |
| Eat | 0.97–0.97 | 0.87–0.95 | 0.92–0.96 |
| Laundry | 1.00–0.49 | 1.00–1.00 | 1.00–0.66 |
| Make Simple Food | 0.98–0.80 | 0.92–0.52 | 0.95–0.63 |
| Out Home | 0.94–1.00 | 0.96–0.91 | 0.95–0.95 |
| Pet | 0.97–0.88 | 1.00–1.00 | 0.98–0.93 |
| Read | 0.91–0.58 | 1.00–0.54 | 0.95–0.56 |
| Relax | 0.42–0.82 | 0.91–0.84 | 0.58–0.83 |
| Shower | 0.85–0.98 | 0.86–1.00 | 0.85–0.99 |
| Sleep | 1.00–0.92 | 0.83–0.87 | 0.90–0.89 |
| Take Meds | 1.00–0.76 | 0.93–0.94 | 0.97–0.84 |
| Watch TV | 0.94–0.69 | 1.00–0.94 | 0.97–0.80 |
| Work | 0.99–0.95 | 1.00–1.00 | 1.00–0.97 |
| Other | 0.53–0.70 | 0.93–0.75 | 0.68–0.72 |
| Accuracy | 0.91–0.88 | | |
| Macro avg. | 0.88–0.78 | 0.89–0.90 | 0.88–0.82 |
| Weighted avg. | 0.93–0.92 | 0.91–0.88 | 0.91–0.90 |
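
The F1-score column of the table above is consistent with the standard definition of F1 as the harmonic mean of precision and recall. The short check below recomputes two entries from their precision and recall; the function and variable names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# "Chores", first value of each pair: precision 0.99, recall 0.59.
chores_first = round(f1_score(0.99, 0.59), 2)
# "Dress", second value of each pair: precision 0.40, recall 1.00.
dress_second = round(f1_score(0.40, 1.00), 2)
```

Both results (0.74 and 0.57) match the tabulated values, and they show how a low precision or a low recall pulls the F1-score down even when the other metric is close to 1.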

| Actual (rows) / Predicted (columns) | Bathroom Activity | Chores | Cook | Dishwashing | Dress | Eat | Laundry | Make Simple Food | Out Home | Pet | Read | Relax | Shower | Sleep | Take Meds | Watch TV | Work | Other |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bathroom Activity | 0.92 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.05 | 0 | 0 | 0 | 0.02 | 0 | 0 | 0 | 0 | 0.01 |
| Chores | 0 | 0.59 | 0 | 0 | 0 | 0 | 0 | 0 | 0.35 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0 | 0 | 0.01 |
| Cook | 0 | 0 | 0.79 | 0.07 | 0 | 0.10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.05 | 0 | 0 |
| Dishwashing | 0 | 0 | 0 | 0.55 | 0 | 0.12 | 0 | 0 | 0.26 | 0 | 0 | 0 | 0 | 0 | 0 | 0.07 | 0 | 0 |
| Dress | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Eat | 0.03 | 0 | 0 | 0 | 0 | 0.87 | 0 | 0 | 0.01 | 0 | 0.01 | 0.02 | 0 | 0 | 0 | 0.06 | 0 | 0 |
| Laundry | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Make Simple Food | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.92 | 0.03 | 0 | 0 | 0 | 0 | 0 | 0 | 0.05 | 0 | 0 |
| Out Home | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.96 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0 | 0 | 0 |
| Pet | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Read | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Relax | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.91 | 0 | 0 | 0 | 0.09 | 0 | 0 |
| Shower | 0.14 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.86 | 0 | 0 | 0 | 0 | 0 |
| Sleep | 0.01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.05 | 0 | 0 | 0 | 0 | 0.83 | 0 | 0 | 0 | 0.11 |
| Take Meds | 0.01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.01 | 0 | 0 | 0.93 | 0 | 0 | 0.05 |
| Watch TV | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 |
| Work | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 |
| Other | 0 | 0 | 0.01 | 0 | 0 | 0.01 | 0 | 0 | 0 | 0 | 0.02 | 0.01 | 0 | 0.01 | 0 | 0.01 | 0 | 0.93 |

| Actual (rows) / Predicted (columns) | Bathroom Activity | Chores | Cook | Dishwashing | Dress | Eat | Laundry | Make Simple Food | Out Home | Pet | Read | Relax | Shower | Sleep | Take Meds | Watch TV | Work | Other |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bathroom Activity | 0.99 | 0 | 0 | 0 | 0.01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Chores | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Cook | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Dishwashing | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Dress | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Eat | 0 | 0 | 0 | 0 | 0 | 0.95 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.05 | 0 | 0 |
| Laundry | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Make Simple Food | 0 | 0 | 0.41 | 0 | 0 | 0.01 | 0 | 0.52 | 0 | 0 | 0.01 | 0 | 0 | 0.01 | 0 | 0.01 | 0.03 | 0 |
| Out Home | 0 | 0 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0.91 | 0 | 0 | 0.01 | 0 | 0.04 | 0 | 0 | 0 | 0 |
| Pet | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Read | 0.01 | 0.02 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.54 | 0 | 0 | 0 | 0 | 0.42 | 0 | 0 |
| Relax | 0 | 0.01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.84 | 0 | 0 | 0 | 0.15 | 0 | 0 |
| Shower | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 | 0 | 0 | 0 | 0 |
| Sleep | 0.01 | 0 | 0 | 0 | 0.03 | 0 | 0 | 0 | 0 | 0 | 0.02 | 0 | 0 | 0.87 | 0 | 0.02 | 0 | 0.05 |
| Take Meds | 0.02 | 0 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.94 | 0 | 0 | 0 |
| Watch TV | 0 | 0 | 0 | 0 | 0 | 0 | 0.01 | 0 | 0 | 0 | 0 | 0.04 | 0 | 0 | 0 | 0.94 | 0 | 0 |
| Work | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1.00 | 0 |
| Other | 0.01 | 0.01 | 0.01 | 0 | 0 | 0.01 | 0.01 | 0.01 | 0 | 0 | 0.01 | 0.06 | 0 | 0.13 | 0 | 0 | 0 | 0.75 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ramos, R.G.; Domingo, J.D.; Zalama, E.; Gómez-García-Bermejo, J.; López, J. SDHAR-HOME: A Sensor Dataset for Human Activity Recognition at Home. Sensors 2022, 22, 8109. https://doi.org/10.3390/s22218109