Abstract

Random matrices require large storage space and are difficult to implement in hardware, whereas deterministic matrices suffer from large reconstruction errors. To address these shortcomings, this paper seeks an effective way to balance these properties. Combining the advantages of the incidence matrices of combinatorial designs and of random matrices, we construct a structured random matrix by the embedding operation of two seed matrices: one is the incidence matrix of a combinatorial design, and the other is obtained by Gram–Schmidt orthonormalization of a random matrix. We also provide a new model that applies the structured random matrices to semi-tensor product compressed sensing. Finally, experiments comparing reconstruction performance against several well-known matrices indicate that our matrices are better suited to the reconstruction of one-dimensional signals and two-dimensional images.

1. Introduction

In the era of data explosion, the growing amount of information places increasingly severe pressure on data acquisition, transmission and storage devices. Data processing also carries the risk of information disclosure. The loss of some data may threaten the safety of life and property, and data leaks are now common. Therefore, in the era of big data, a new data processing technique is urgently needed to decrease the risk of data leakage during information processing and to relieve the pressure on hardware such as memory and sensors.

Compressed sensing (CS) theory can be used for signal acquisition, encoding and decoding [1]. Whatever the type of signal, a sparse or compressible representation is assumed to exist in the original domain or in some transform domain. During transmission, linear projections taken at a rate far below the traditional Nyquist sampling rate can be used to reconstruct the signal exactly or with high probability. For a discrete signal $x \in \mathbb{R}^{n}$, the standard model of CS is

$$y = \Phi x,$$

where $\Phi \in \mathbb{R}^{m \times n}$ ($m < n$) is a measurement matrix, and $y \in \mathbb{R}^{m}$ is the corresponding measurement vector.

That is, CS compresses an n-dimensional vector x into an m-dimensional vector y. Therefore, the compression ratio can be represented by $m/n$.
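As a minimal sketch of the sampling step, the following Python snippet compresses a k-sparse signal with a Gaussian measurement matrix and reports the ratio of measurements to signal length. The function name `compress`, the sizes, and the use of m/n as the compression ratio are assumptions made for illustration, not values taken from the paper.

```python
import numpy as np

def compress(phi, x):
    """Return the measurement vector y = phi @ x and the ratio m / n."""
    m, n = phi.shape
    if x.shape != (n,):
        raise ValueError("signal length must equal the number of columns of phi")
    return phi @ x, m / n

rng = np.random.default_rng(0)
n, m, k = 64, 16, 3                                # illustrative sizes only
x = np.zeros(n)
support = rng.choice(n, size=k, replace=False)     # positions of the nonzeros
x[support] = rng.standard_normal(k)                # k-sparse test signal
phi = rng.standard_normal((m, n)) / np.sqrt(m)     # Gaussian measurement matrix
y, ratio = compress(phi, x)
```

Here `y` has 16 entries while `x` has 64, so only a quarter as many numbers need to be transmitted or stored.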

Given a measurement vector y, the central task is to reconstruct x from y and the measurement matrix $\Phi$. However, this problem is usually NP-hard [2]. If a signal x has at most k non-zero elements, then x is called k-sparse. Candès and Tao showed that if x is k-sparse and $\Phi$ satisfies the restricted isometry property (RIP), then x can be accurately reconstructed from y [3] by solving the following problem,

$$\min_{x} \|x\|_{0} \quad \text{s.t.} \quad y = \Phi x,$$

where $\|x\|_{0} = |\{i : x_{i} \neq 0\}|$.

Since the $\ell_{1}$-norm is a convex function, it is common to replace $\|x\|_{0}$ with $\|x\|_{1}$ in CS, i.e.,

$$\min_{x} \|x\|_{1} \quad \text{s.t.} \quad y = \Phi x,$$

where $\|x\|_{1} = \sum_{i=1}^{n} |x_{i}|$.

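For a k-sparse signal $x \in \mathbb{R}^{n}$ and a matrix $\Phi \in \mathbb{R}^{m \times n}$, if there exists a constant $0 \le \delta_{k} < 1$ such that

$$(1 - \delta_{k})\|x\|_{2}^{2} \le \|\Phi x\|_{2}^{2} \le (1 + \delta_{k})\|x\|_{2}^{2},$$

where $\|x\|_{2} = \left(\sum_{i=1}^{n} |x_{i}|^{2}\right)^{1/2}$, then the matrix $\Phi$ is said to satisfy the RIP of order k, and the smallest such $\delta_{k}$ is defined as the restricted isometry constant (RIC) of order k.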

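Another important criterion for measurement matrices in CS is coherence [4].

Let $\Phi = (\varphi_{1}, \varphi_{2}, \ldots, \varphi_{n})$, where $\varphi_{i}$ is the i-th column of $\Phi$, $1 \le i \le n$. Then, the coherence of $\Phi$ can be expressed by the following equation

$$\mu(\Phi) = \max_{1 \le i \ne j \le n} \frac{|\langle \varphi_{i}, \varphi_{j} \rangle|}{\|\varphi_{i}\|_{2}\,\|\varphi_{j}\|_{2}},$$

where $\langle \varphi_{i}, \varphi_{j} \rangle$ denotes the Hermitian inner product of $\varphi_{i}$ and $\varphi_{j}$.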
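The coherence of a matrix is the largest absolute normalized inner product between two distinct columns, and it can be computed directly from the Gram matrix of the normalized columns. The following is a small sketch under that standard definition; the function name `coherence` is hypothetical.

```python
import numpy as np

def coherence(phi):
    """Largest absolute normalized inner product between distinct columns."""
    phi = np.asarray(phi)
    norms = np.linalg.norm(phi, axis=0)
    if np.any(norms == 0):
        raise ValueError("coherence is undefined for a zero column")
    unit = phi / norms                       # unit-norm columns
    gram = np.abs(unit.conj().T @ unit)      # Hermitian inner products
    np.fill_diagonal(gram, 0.0)              # ignore each column with itself
    return float(gram.max())

orthogonal_example = coherence(np.eye(3))                    # orthogonal columns
skewed_example = coherence(np.array([[1, 1], [0, 1]]))       # columns (1,0) and (1,1)
```

Orthogonal columns give coherence 0, while the two columns (1, 0) and (1, 1) give 1/√2, the cosine of the 45° angle between them.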

The coherence and the RIP of a matrix are related as follows.

If $\Phi$ is a unit-norm matrix and $\mu = \mu(\Phi)$, then $\Phi$ satisfies the RIP of order k with $\delta_{k} \le \mu(k-1)$ for all $k < 1/\mu + 1$.

Furthermore, for a matrix $\Phi$ of size $m \times n$, the coherence of $\Phi$ is bounded below by the Welch bound [5]

$$\mu(\Phi) \ge \sqrt{\frac{n - m}{m(n - 1)}}.$$

The main problem in CS is to find deterministic constructions based on coherence which beat this square root bound.

In CS theory, the measurement matrix both guarantees the quality of signal sampling and determines how difficult compressed sampling is to implement in hardware. There are two main types of measurement matrices. The first is random matrices, which include Gaussian matrices, Bernoulli matrices, partial Fourier matrices and so on [6,7,8,9,10,11]. Although these matrices can reconstruct the original signals well, they are hard to implement in hardware, and their elements require a lot of storage space. Some scholars have proposed using Toeplitz matrices to construct measurement matrices [12,13]. Although Toeplitz matrices save some storage space, they are still difficult to implement in hardware. The second is deterministic matrices, which can improve the transmission efficiency and reduce the storage space [14,15], but have large reconstruction errors. For this kind of matrix, once the system and construction parameters are determined, the size and elements of the matrix are also determined. DeVore used polynomials over finite fields to construct measurement matrices in [16]. Li et al. gave a construction of sparse measurement matrices based on algebraic curves in [17]. The main tools for constructing deterministic measurement matrices are coding theory [18,19,20,21,22], geometry over finite fields [23,24,25,26,27,28], design theory [29,30,31,32], and so on.

Compared with CS, for signals of the same size, the advantage of semi-tensor product compressed sensing (STP-CS) is that the number of columns of the measurement matrix can be a factor of the number required in CS, which greatly reduces the storage space of measurement matrices. Therefore, we focus on STP-CS. The main contribution of the paper is a construction of structured random matrices and their application to STP-CS. The structured random matrices are obtained by the embedding operation of two seed matrices, one deterministic and the other random. Once the system and construction parameters are fixed, the size of the matrix is determined, but its elements are arranged in a structured random manner. To transmit or store the matrix, only the system, the construction parameters and the random seed matrix need to be transmitted or stored, which improves the transmission efficiency and reduces the storage required compared with a random matrix. Compared with random matrices, the structured random matrices overcome the disadvantage of large storage space and are relatively convenient for hardware implementation. Compared with deterministic matrices, the structured random matrices have good reconstruction accuracy. Therefore, structured random matrices have greater application value in the STP-CS model.

To address the existing shortcomings (random matrices need large storage space and are difficult to implement in hardware, while deterministic matrices have large reconstruction errors), the objective of this paper is to find an effective method to balance these properties. The main contributions of our work are summarized as follows:

- A construction of structured random matrices from two seed matrices is given, where one seed is the incidence matrix of a combinatorial design, and the other is obtained by the Gram–Schmidt orthonormalization of a random matrix.

- An STP-CS model based on the structured random matrices is proposed.

- Experimental results indicate that our matrices are more suitable for the reconstruction of one-dimensional signals and two-dimensional images.

The difference between this paper and previous works [14,31] is as follows:

- The measurement matrices constructed in this paper are structured random matrices, while those constructed in [14,31] are deterministic matrices.

- This paper studies the STP-CS model, while [14] studies the block compressed sensing (BCS) model, and [31] studies the CS model.

The rest of the paper is organized as follows. Section 2 introduces some related knowledge. Section 3 proposes a new model, which applies the structured random matrices to STP-CS. Section 4 presents simulation experiments that analyze and compare the performance of our matrices with that of several well-known matrices.

2. Preliminaries

In this section, projective geometry [33], balanced incomplete block designs [34], the embedding operation of binary matrices [35] and semi-tensor product compressed sensing [36] are introduced.

2.1. Projective Geometry

Let $\mathbb{F}_{q}$ be the finite field with q elements, where q is a prime power, and let n be a positive integer. $\mathbb{F}_{q}^{(n+1)}$ is the $(n+1)$-dimensional row vector space over $\mathbb{F}_{q}$. The 1-dimensional, 2-dimensional, 3-dimensional, and n-dimensional vector subspaces of $\mathbb{F}_{q}^{(n+1)}$ are called points, lines, planes, and hyperplanes, respectively. In general, the $(r+1)$-dimensional vector subspaces of $\mathbb{F}_{q}^{(n+1)}$ are called projective r-flats, or simply r-flats $(0 \le r \le n)$. Thus, 0-flats, 1-flats, 2-flats, and $(n-1)$-flats are points, lines, planes, and hyperplanes, respectively. If an r-flat, as a vector subspace, contains or is contained in an s-flat, as a vector subspace, then the r-flat is said to be incident with the s-flat. The set of points, i.e., the set of 1-dimensional vector subspaces of $\mathbb{F}_{q}^{(n+1)}$, together with the r-flats and the incidence relation among them defined above is called the n-dimensional projective space over $\mathbb{F}_{q}$ and is denoted by $PG(n, \mathbb{F}_{q})$.

2.2. Balanced Incomplete Block Design

Definition 1.

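Let $v, b, r, k, \lambda$ be positive integers with $v > k \ge 2$. For a finite set $X = \{x_{1}, x_{2}, \ldots, x_{v}\}$, let $\mathcal{B} = \{B_{1}, B_{2}, \ldots, B_{b}\}$ be a family of subsets of $X$, where $x_{1}, \ldots, x_{v}$ are called points and $B_{1}, \ldots, B_{b}$ are called blocks. Suppose that

(1) There are k points in each block;

(2) Each point in $X$ appears in r blocks;

(3) Each pair of distinct points is contained in exactly λ blocks.

Then $(X, \mathcal{B})$ is called a balanced incomplete block design, or simply a $(v, b, r, k, \lambda)$-BIBD.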

Definition 2.

For a $(v, b, r, k, \lambda)$-BIBD, if $v = b$ (or $r = k$ or $\lambda(v-1) = k^{2} - k$), then the design is symmetric. A symmetric BIBD is simply denoted by $(v, k, \lambda)$-SBIBD.

2.3. Embedding Operation of Binary Matrix

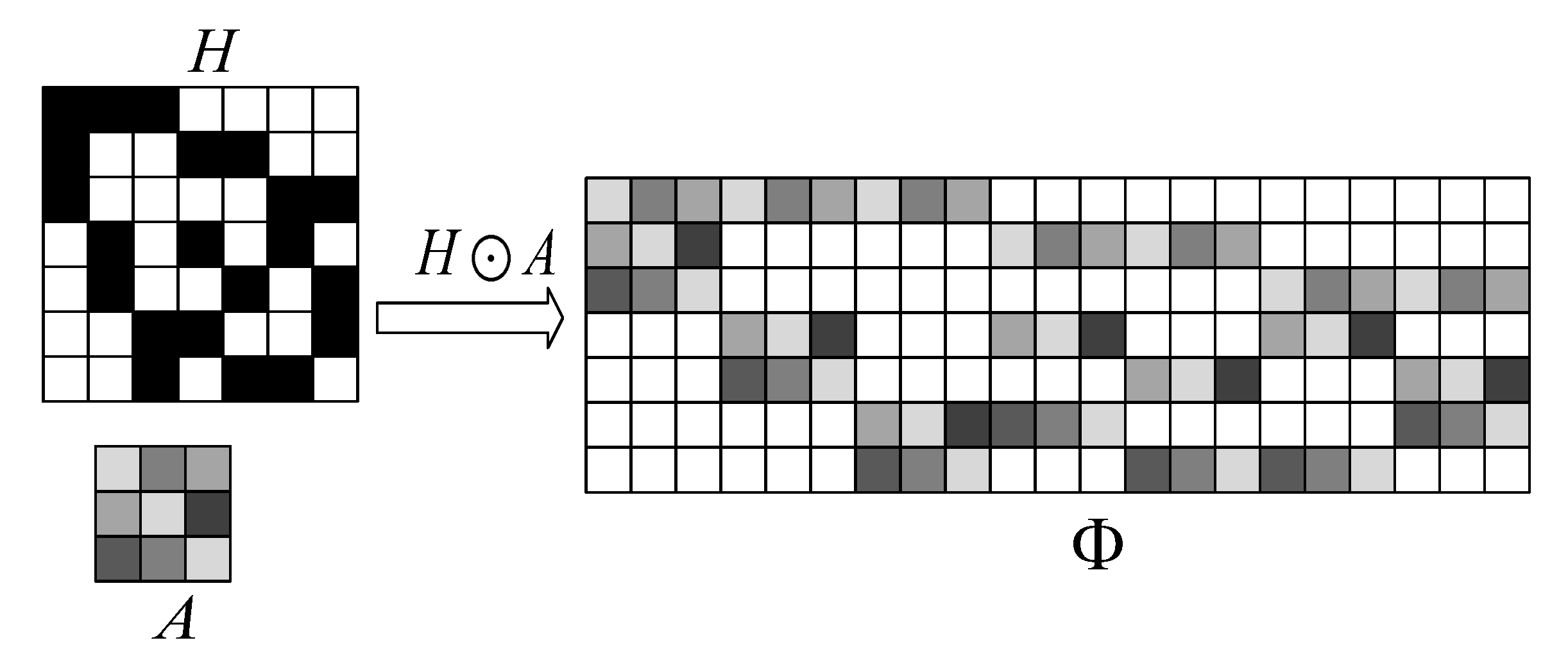

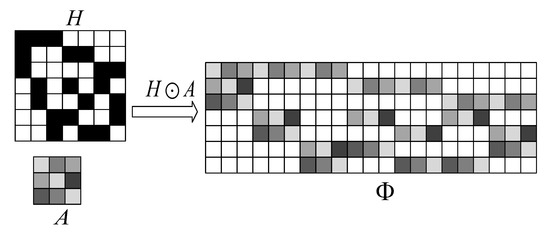

Definition 3.

Let , where is the i-th column of H and contains d ones, . In addition, let A be a matrix with size -dimensional; each element 1 in is replaced by a distinct row of A, and each element 0 is replaced by the row vector . The resulting matrix Φ is expressed as

and Φ is an -dimensional matrix, where denotes the embedding operation of the matrix A in the matrix H.

The specific process of the above embedding operation is shown in Figure 1.

Figure 1.

The specific process of A as the embedding matrix in matrix H.
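The embedding operation can be sketched in a few lines of Python. Since Figure 1 is not reproduced here, the sketch makes two labeled assumptions: the k-th one (from top to bottom) in each column of H receives the k-th row of A, and the blocks produced by the columns of H are concatenated from left to right. The function name `embed` and the small matrices are hypothetical.

```python
import numpy as np

def embed(h, a):
    """Embed the rows of a (d x t) into the ones of the binary matrix h (m x n)."""
    h = np.asarray(h)
    a = np.asarray(a, dtype=float)
    if not np.isin(h, (0, 1)).all():
        raise ValueError("h must be a binary matrix")
    m, n = h.shape
    d, t = a.shape
    if not np.all(h.sum(axis=0) == d):
        raise ValueError("every column of h must contain exactly d ones")
    phi = np.zeros((m, n * t))
    for j in range(n):
        rows = np.flatnonzero(h[:, j])        # positions of the ones in column j
        phi[rows, j * t:(j + 1) * t] = a      # assumed order: k-th one gets k-th row
    return phi

H = np.array([[1, 0],
              [1, 1],
              [0, 1]])                        # 3 x 2, two ones per column
A = np.array([[1, 2],
              [3, 4]])                        # 2 x 2 matrix to embed
PHI = embed(H, A)                             # 3 x 4 result
```

Only H and A need to be stored; the larger matrix can be regenerated from them whenever it is needed.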

2.4. Semi-Tensor Product Compressed Sensing

Definition 4.

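Let x be a row vector of dimension $np$ and $y = [y_{1}, y_{2}, \ldots, y_{p}]^{T}$ be a column vector of dimension p. Split x into p blocks, named $x^{1}, x^{2}, \ldots, x^{p}$, each of dimension n. The semi-tensor product (STP), denoted by $\ltimes$, is defined as

$$x \ltimes y = \sum_{i=1}^{p} x^{i} y_{i} \in \mathbb{R}^{n}, \qquad y^{T} \ltimes x^{T} = \sum_{i=1}^{p} y_{i} (x^{i})^{T} \in \mathbb{R}^{n}.$$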

Definition 5.

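Let $A \in \mathbb{R}^{m \times n}$ and $B \in \mathbb{R}^{p \times q}$, where n is a factor of p or p is a factor of n; then, the STP of A and B is defined as follows,

$$C = A \ltimes B.$$

C has $m \times q$ blocks as $C = (C^{ij})$ and each block is

$$C^{ij} = A^{i} \ltimes B_{j}, \quad i = 1, \ldots, m, \; j = 1, \ldots, q,$$

where $A^{i}$ is the i-th row of A and $B_{j}$ is the j-th column of B.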

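For a signal $x \in \mathbb{R}^{p}$ and a measurement matrix $\Phi \in \mathbb{R}^{m \times n}$ ($m < n$), the STP-CS model [36] is as follows

$$y = \Phi \ltimes x,$$

where $y \in \mathbb{R}^{mp/n}$ and n is a factor of p.

Similarly, we can also define the STP-CS by using the Kronecker product as follows

$$y = (\Phi \otimes I_{p/n})\, x,$$

where $I_{p/n}$ is a $(p/n) \times (p/n)$-dimensional identity matrix, $p/n$ is a positive integer, and $\otimes$ denotes the Kronecker product.

Theorem 1.

The measurement matrix $\Phi \otimes I_{p/n}$ has coherence

$$\mu(\Phi \otimes I_{p/n}) = \mu(\Phi).$$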
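The Kronecker form of STP-CS can be evaluated without ever building the large matrix. The sketch below assumes a measurement matrix `phi` with n columns and a signal whose length is a multiple of n; the names `stp_measure`, `kron_measure`, `coherence` and `s` (the size of the identity block) are hypothetical. It compares the reshaped computation with the explicit Kronecker product, and also computes the coherence of both matrices, which coincide because the Kronecker product with an identity matrix only adds columns that are orthogonal to each other.

```python
import numpy as np

def stp_measure(phi, x):
    """Compute (phi kron I_s) @ x without forming the Kronecker product."""
    m, n = phi.shape
    if x.size % n != 0:
        raise ValueError("signal length must be a multiple of the number of columns")
    s = x.size // n                           # size of the identity block
    return (phi @ x.reshape(n, s)).reshape(-1)

def kron_measure(phi, x):
    """Reference implementation that builds the full Kronecker matrix."""
    s = x.size // phi.shape[1]
    return np.kron(phi, np.eye(s)) @ x

def coherence(a):
    """Largest absolute normalized inner product between distinct columns."""
    unit = a / np.linalg.norm(a, axis=0)
    gram = np.abs(unit.T @ unit)
    np.fill_diagonal(gram, 0.0)
    return float(gram.max())

rng = np.random.default_rng(0)
phi = rng.standard_normal((3, 4))             # illustrative 3 x 4 seed matrix
x = rng.standard_normal(12)                   # signal of length 12 = 4 * 3
y_fast = stp_measure(phi, x)
y_ref = kron_measure(phi, x)
big = np.kron(phi, np.eye(3))                 # the 9 x 12 matrix that CS would store
stored_entries = (phi.size, big.size)
```

In this toy case the seed matrix has 12 entries while the equivalent full matrix has 108, which illustrates the storage saving that motivates STP-CS.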

3. Construction of Structured Random Measurement Matrices in STP-CS

Compared with CS, for signals of the same size, the advantage of STP-CS is that the number of columns of the measurement matrix can be a factor of the number required in CS, which greatly reduces the storage space of measurement matrices. Moreover, compared with general measurement matrices in STP-CS, a structured random matrix only requires storing two seed matrices instead of the whole matrix. In short, the structured random matrices need less storage space in STP-CS. In this section, we give a new model that applies the structured random matrices to STP-CS.

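3.1. Construction of $(q^{2}+q+1, q+1, 1)$-SBIBD

The 1-dimensional projective space $PG(1, \mathbb{F}_{q})$ over $\mathbb{F}_{q}$ only has $q+1$ points, so it is less interesting. We therefore start our discussion with the 2-dimensional projective plane $PG(2, \mathbb{F}_{q})$. In $PG(2, \mathbb{F}_{q})$, there are $q^{2}+q+1$ points and $q^{2}+q+1$ lines; every line contains $q+1$ points and every point lies on $q+1$ lines; any two different points are connected by exactly one line; any two different lines intersect in exactly one point. It is easy to find that

(i) A finite projective plane of order q is a $(q^{2}+q+1, q^{2}+q+1, q+1, q+1, 1)$-BIBD. A block is called a line in a finite projective plane.

(ii) For the parameter set $v = q^{2}+q+1$, $k = q+1$, $\lambda = 1$ of a BIBD, we must have $r = q+1$ and, hence, $b = v$. So, a $(q^{2}+q+1, q^{2}+q+1, q+1, q+1, 1)$-BIBD is necessarily symmetric, and it is simply denoted by $(q^{2}+q+1, q+1, 1)$-SBIBD.

Based on this, for a $(q^{2}+q+1, q+1, 1)$-SBIBD, we assume that $X = \{x_{1}, x_{2}, \ldots, x_{q^{2}+q+1}\}$ is the set of points, and $\mathcal{B} = \{B_{1}, B_{2}, \ldots, B_{q^{2}+q+1}\}$ is the set of blocks. The incidence matrix of the $(q^{2}+q+1, q+1, 1)$-SBIBD is defined by

$$M = (m_{ij})_{(q^{2}+q+1) \times (q^{2}+q+1)},$$

whose rows are indexed by $x_{1}, \ldots, x_{q^{2}+q+1}$ and columns are indexed by $B_{1}, \ldots, B_{q^{2}+q+1}$, and

$$m_{ij} = \begin{cases} 1, & \text{if } x_{i} \in B_{j}, \\ 0, & \text{otherwise}. \end{cases}$$

Obviously, M has the same row-degree and column-degree, both of which are $q+1$.

Theorem 2.

Let M be the incidence matrix of a $(q^{2}+q+1, q+1, 1)$-SBIBD. Then, the matrix M has coherence $\mu(M) = \frac{1}{q+1}$.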
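The incidence matrix of a projective plane can be generated and checked numerically. The sketch below is restricted to prime q, so that arithmetic modulo q is field arithmetic (prime powers would need a full finite-field implementation). It represents points and lines by normalized vectors of length 3 and declares a point incident with a line when their dot product vanishes modulo q; this representation and the function names are assumptions made for illustration.

```python
import itertools
import numpy as np

def projective_points(q):
    """One representative per 1-dimensional subspace of F_q^3 (q prime)."""
    if q < 2 or any(q % p == 0 for p in range(2, int(q ** 0.5) + 1)):
        raise ValueError("this sketch only supports prime q")
    pts = []
    for v in itertools.product(range(q), repeat=3):
        nonzero = [c for c in v if c != 0]
        if nonzero and nonzero[0] == 1:       # first nonzero coordinate is 1
            pts.append(v)
    return np.array(pts)

def incidence_matrix(q):
    """Rows are points, columns are lines; entry 1 when the point is on the line."""
    pts = projective_points(q)
    # By duality, lines use the same representatives as points.
    return ((pts @ pts.T) % q == 0).astype(int)

q = 3
M = incidence_matrix(q)
gram = M.T @ M                                        # inner products of columns
off_diag = gram[~np.eye(M.shape[0], dtype=bool)]      # pairs of distinct columns
mu = off_diag.max() / (q + 1)                         # every column has squared norm q + 1
```

For q = 3 this produces a 13 × 13 matrix in which every row and column contains four ones and any two columns share exactly one row, so the computed coherence is 1/4.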

In the following, the relationship between some known projective planes and BIBD is shown in Table 1.

Table 1.

The relationship between some known projective planes and BIBD.

3.2. Gram–Schmidt Orthonormalization

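Let $A = (a_{1}, a_{2}, \ldots, a_{n})$ be a random matrix, where $a_{i}$ denotes the i-th column of A, $1 \le i \le n$. To ensure that the random matrix A has small coherence, all columns of A are orthonormalized by the Gram–Schmidt process as follows.

Let

$$b_{1} = a_{1},$$

$$b_{2} = a_{2} - \frac{\langle a_{2}, b_{1} \rangle}{\langle b_{1}, b_{1} \rangle} b_{1},$$

$$\vdots$$

$$b_{n} = a_{n} - \sum_{j=1}^{n-1} \frac{\langle a_{n}, b_{j} \rangle}{\langle b_{j}, b_{j} \rangle} b_{j}.$$

Then, $b_{1}$, $b_{2}$, ⋯, $b_{n}$ are normalized, i.e.,

$$c_{i} = \frac{b_{i}}{\|b_{i}\|_{2}}, \quad i = 1, 2, \ldots, n.$$

In this way, we obtain the normalized orthogonal matrix $C = (c_{1}, c_{2}, \ldots, c_{n})$ of the matrix A.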
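A minimal sketch of the column-wise Gram–Schmidt process is given below. It projects each column onto the already normalized earlier columns, which is algebraically the same as orthogonalizing first and normalizing afterwards. The function name `gram_schmidt` and the tolerance are assumptions; for large or ill-conditioned matrices, `numpy.linalg.qr` is a more robust way to obtain orthonormal columns.

```python
import numpy as np

def gram_schmidt(a, tol=1e-12):
    """Return a matrix whose columns are the orthonormalized columns of a."""
    a = np.asarray(a, dtype=float)
    c = np.zeros_like(a)
    for i in range(a.shape[1]):
        b = a[:, i].copy()
        for j in range(i):
            # Remove the component of a_i along the earlier unit vector c_j.
            b -= (c[:, j] @ a[:, i]) * c[:, j]
        norm = np.linalg.norm(b)
        if norm < tol:
            raise ValueError("columns are linearly dependent")
        c[:, i] = b / norm
    return c

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))       # illustrative Gaussian seed matrix
C = gram_schmidt(A)
```

The columns of `C` are mutually orthogonal with unit norm, so `C.T @ C` is the identity up to rounding error.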

Remark 1.

According to Definition 3, let ; there are two cases in the following

- If A is a deterministic matrix, then C must also be deterministic. Therefore, Φ is a deterministic matrix;

- If A is a random matrix, then C must also be random. Therefore, Φ is a structured random matrix.

There has been much research on deterministic matrices and random matrices, but little on structured random matrices. Combining the advantages of random matrices and the incidence matrices of combinatorial designs, this paper constructs structured random measurement matrices and applies them to STP-CS.

3.3. Sampling Model

In the following, we consider as a measurement matrix in STP-CS. Let p be a positive integer and satisfy . For a signal , a novel semi-tensor product compressed sensing model by the embedding operation (STP-CS-EO) is given in the following

then .

According to Theorem 1, it follows that

Remark 2.

Let be a discrete signal, where N is a positive integer. For , we present a comparison of CS, Kronecker product compressed sensing (KP-CS), block compressed sampling based on the embedding operation (BCS-EO), STP-CS, Kronecker product semi-tensor product compressed sensing (KP-STP-CS) and semi-tensor product compressed sensing based on the embedding operation (STP-CS-EO). Table 2 lists the comparison of storage space and sampling complexity of the measurement matrices corresponding to the above six sampling models. Sampling complexity is defined by the multiplication times between a matrix and a vector in the sampling process. For STP-CS, t is a positive integer and satisfies , . For signals of the same size, the advantage of STP-CS is that the number of columns of the measurement matrix can be a factor of CS. For KP-CS and KP-STP-CS, is a -dimensional identity matrix, where p is a positive integer and satisfies , . For BCS-EO and STP-CS-EO, and have column-degree d, and and have size -dimensional, where d is a positive integer and satisfies . Compared with CS, KP-CS, BCS-EO, STP-CS and KP-STP-CS, the STP-CS-EO model has lower storage space and lower sampling complexity if or if or or .

Table 2.

The comparison of storage space and sampling complexity of the measurement matrices corresponding to six sampling models.

In the following, we calculate the coherence of the matrix .

Theorem 3.

Let M be the incidence matrix of -SBIBD and be a -dimensional normalized orthogonal random matrix; then, there is a construction of structured random measurement matrices for a -dimensional matrix with coherence , where .

Proof of Theorem 3.

According to , then has size -dimensional. Let , where is the i-th column of M, . is a -dimensional normalized orthogonal random matrix. For any two columns and in ,

(1) If and correspond to the same column in M, then we have

since C is an orthogonal matrix;

(2) If and correspond to two different columns and in M, then we have

since C is a normalized matrix, where and are the elements of matrix C, .

Therefore, has coherence .
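The two cases of the proof can be checked numerically on the smallest projective plane. The sketch below hard-codes the seven lines of the Fano plane (q = 2), uses `numpy.linalg.qr` as a stand-in for Gram–Schmidt orthonormalization of a Gaussian matrix, and assumes that the k-th one in each column receives the k-th row of C. It does not reproduce the exact expression of Theorem 3; it only verifies that columns from the same block are orthogonal and that the coherence never exceeds the square of the largest entry of C in absolute value. All names are hypothetical.

```python
import numpy as np

# Lines of the Fano plane PG(2, F_2); each tuple lists the points on one line.
FANO_LINES = [(0, 1, 2), (0, 3, 4), (0, 5, 6), (1, 3, 5),
              (1, 4, 6), (2, 3, 6), (2, 4, 5)]

def fano_incidence():
    m = np.zeros((7, 7), dtype=int)
    for j, line in enumerate(FANO_LINES):
        m[list(line), j] = 1              # point i lies on line j
    return m

def embed(h, c):
    """Replace the k-th one of every column of h by the k-th row of c."""
    rows_total, n = h.shape
    d, t = c.shape
    phi = np.zeros((rows_total, n * t))
    for j in range(n):
        phi[np.flatnonzero(h[:, j]), j * t:(j + 1) * t] = c
    return phi

rng = np.random.default_rng(1)
c, _ = np.linalg.qr(rng.standard_normal((3, 3)))   # orthonormal columns
phi = embed(fano_incidence(), c)                   # 7 x 21 structured random matrix
gram = np.abs(phi.T @ phi)                         # columns of phi have unit norm
np.fill_diagonal(gram, 0.0)
mu = gram.max()                                    # coherence of phi
bound = np.abs(c).max() ** 2                       # product of two entries of c at most
```

Two columns from different blocks overlap in exactly one row, so their inner product is a product of two entries of C, which explains why `mu` stays below `bound`.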

4. Experimental Simulation

In this section, our measurement matrices are compared with several well-known matrices. Simulation results show that our matrices are effective for signal reconstruction.

4.1. Reconstruction of 1-Dimensional Signals

Let x be a signal. We select the orthogonal matching pursuit (OMP) [37] algorithm and the basis pursuit (BP) [38] algorithm to solve the -minimization problem, where the solution is represented by . The definition of the reconstruction Signal-to-Noise Ratio (SNR) of x is

For noiseless recovery, if , then the recovery of the signal x is called perfect. For every sparsity order, we reconstruct 1000 noiseless signals to calculate the perfect recovery percentage.
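The experiment described above can be sketched as follows. The snippet implements a basic OMP, takes the reconstruction SNR to be 20·log10(‖x‖₂ / ‖x − x̂‖₂) in dB (the usual definition, stated here as an assumption because the formula is not reproduced above), and counts the fraction of trials whose SNR reaches a threshold. The threshold `threshold_db`, the matrix sizes and all function names are hypothetical parameters, not the settings of the paper.

```python
import numpy as np

def omp(phi, y, k):
    """Orthogonal matching pursuit: select k columns greedily, then least squares."""
    support, coef = [], np.zeros(0)
    residual = y.astype(float)
    for _ in range(k):
        corr = np.abs(phi.T @ residual)
        if support:
            corr[support] = -1.0              # never pick the same column twice
        support.append(int(np.argmax(corr)))
        coef, *_ = np.linalg.lstsq(phi[:, support], y, rcond=None)
        residual = y - phi[:, support] @ coef
    x_hat = np.zeros(phi.shape[1])
    x_hat[support] = coef
    return x_hat

def reconstruction_snr(x, x_hat):
    """Reconstruction SNR in dB (assumed definition)."""
    err = np.linalg.norm(x - x_hat)
    return float("inf") if err == 0 else float(20 * np.log10(np.linalg.norm(x) / err))

def perfect_recovery_percentage(phi, k, trials, threshold_db, rng):
    """Percentage of random k-sparse signals recovered above the SNR threshold."""
    n, hits = phi.shape[1], 0
    for _ in range(trials):
        x = np.zeros(n)
        x[rng.choice(n, size=k, replace=False)] = rng.standard_normal(k)
        hits += reconstruction_snr(x, omp(phi, phi @ x, k)) >= threshold_db
    return 100.0 * hits / trials

rng = np.random.default_rng(0)
gaussian = rng.standard_normal((32, 64)) / np.sqrt(32)
rate = perfect_recovery_percentage(gaussian, k=3, trials=50, threshold_db=100.0, rng=rng)
```

Sweeping `k` and plotting `rate` for each measurement matrix gives curves of the kind shown in the figures of this section.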

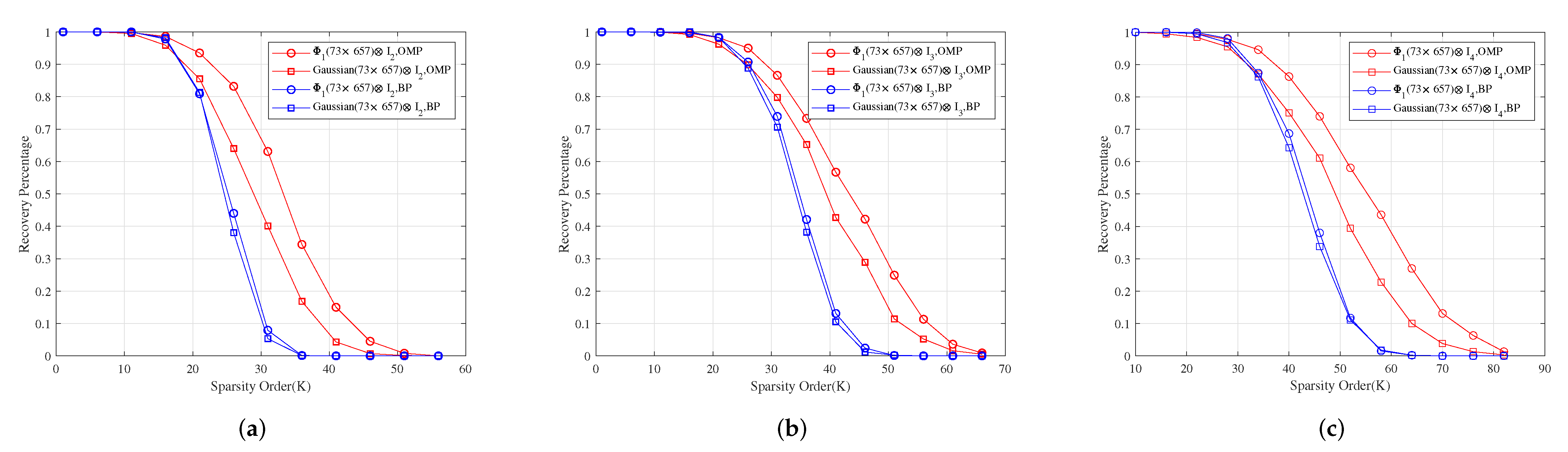

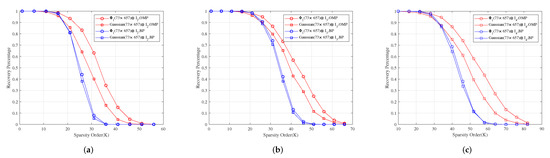

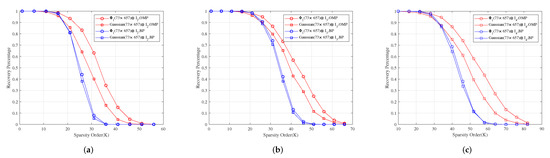

Example 1.

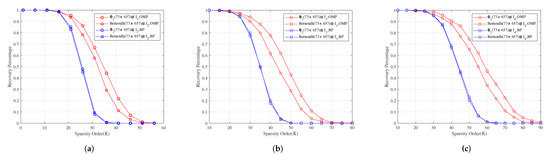

Let be the incidence matrix of -SBIBD. Then, we construct three structured random measurement matrices , and , where , , are a normalized orthogonal matrix of -dimensional Gaussian, Bernoulli, and Toeplitz matrix, respectively.

For measurement matrices , and , Figure 2a–c show the perfect recovery percentages of -dimensional, -dimensional and -dimensional sparse signals, respectively, for different sparsity orders. The reconstruction performance of , and is clearly better than that of , and , respectively, under OMP, and similar to that of , and , respectively, under BP.

Figure 2.

The relationship between the perfect recovery percentage and sparsity order of sparse signals under OMP and BP. , and are the corresponding measurement matrices in (a–c), respectively.

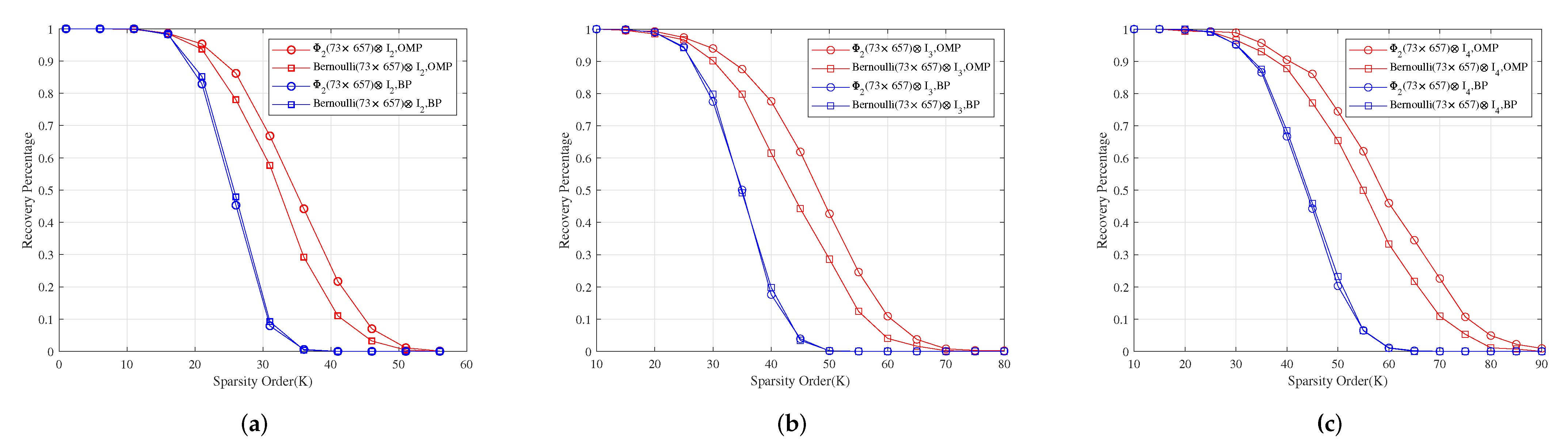

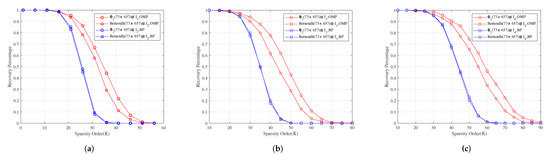

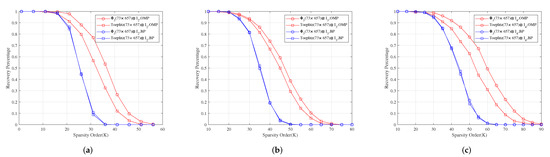

For measurement matrices , and , Figure 3a–c show the perfect recovery percentages of -dimensional, -dimensional and -dimensional sparse signals, respectively, for different sparsity orders. The reconstruction performance of , and is clearly better than that of , and , respectively, under OMP, and similar to that of , and , respectively, under BP.

Figure 3.

The relationship between the perfect recovery percentage and sparsity order of sparse signals under OMP and BP. , and are the corresponding measurement matrices in (a–c), respectively.

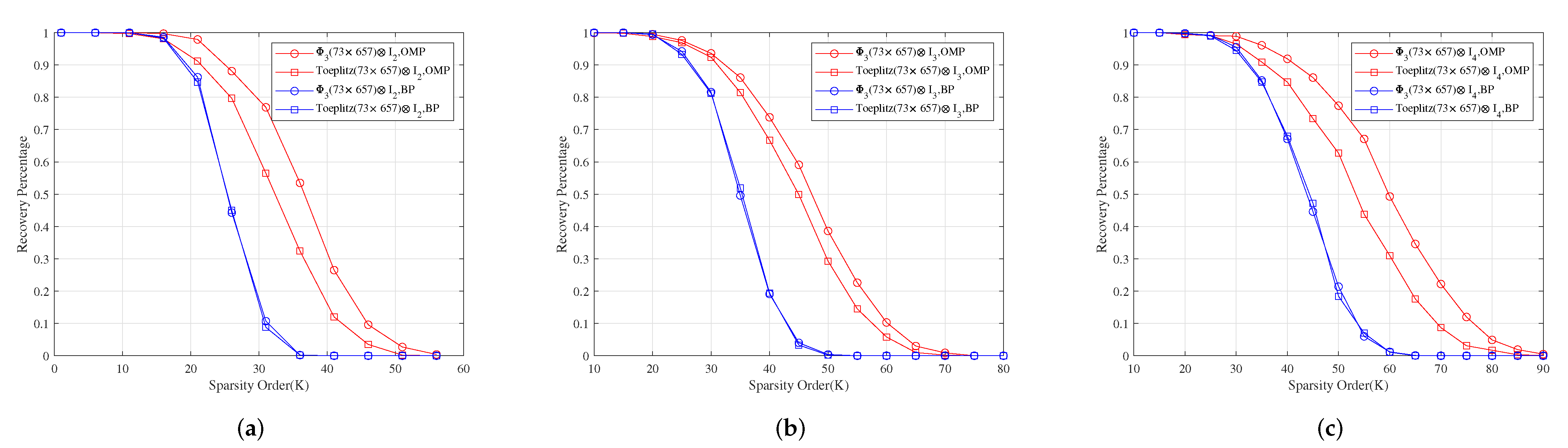

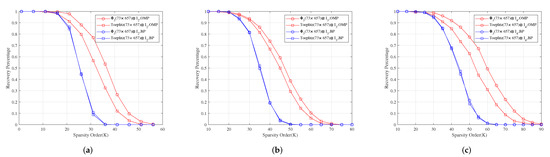

For measurement matrices , and , Figure 4a–c show the perfect recovery percentages of -dimensional, -dimensional and -dimensional sparse signals, respectively, for different sparsity orders. The reconstruction performance of , and is clearly better than that of , and , respectively, under OMP, and similar to that of , and , respectively, under BP.

Figure 4.

The relationship between the perfect recovery percentage and sparsity order of sparse signals under OMP and BP. , and are the corresponding measurement matrices in (a–c), respectively.

Example 2.

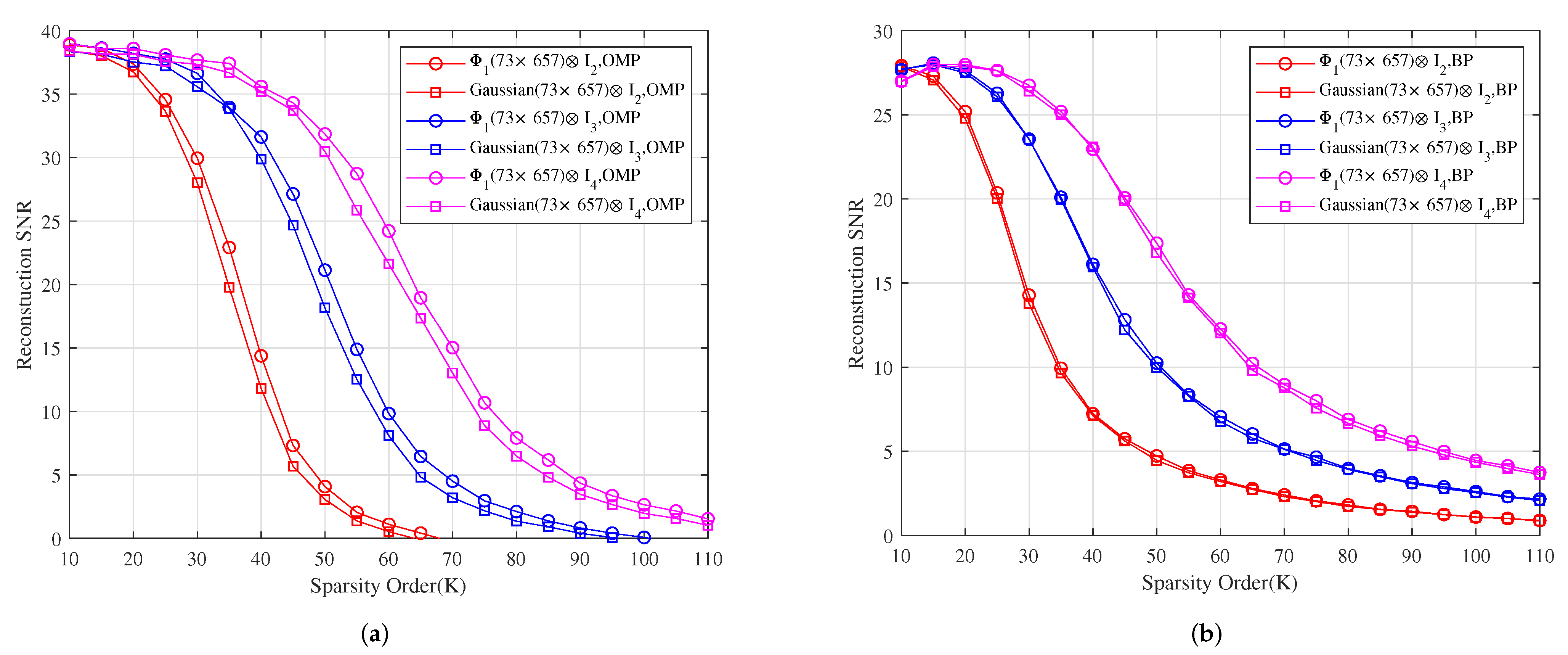

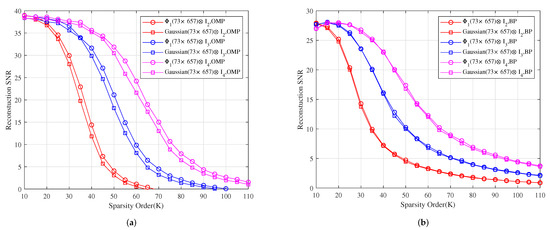

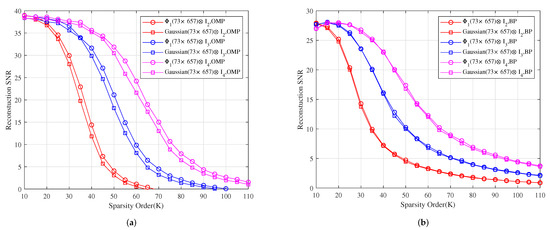

Let be the additive white Gaussian noise with SNR 50 dB. Figure 5 compares the reconstruction SNR of , and with that of Gaussian, Gaussian and Gaussian under OMP and BP, respectively. The reconstruction SNRs of , and are higher than those of , and , respectively, under OMP, and similar to those of , and , respectively, under BP.

Figure 5.

The relationship between the reconstruction SNR and sparsity order of sparse signals under OMP and BP. (a) The reconstruction SNR comparison of , and with Gaussian, Gaussian and Gaussian under OMP, respectively. (b) The reconstruction SNR comparison of , and with Gaussian, Gaussian and Gaussian under BP, respectively.

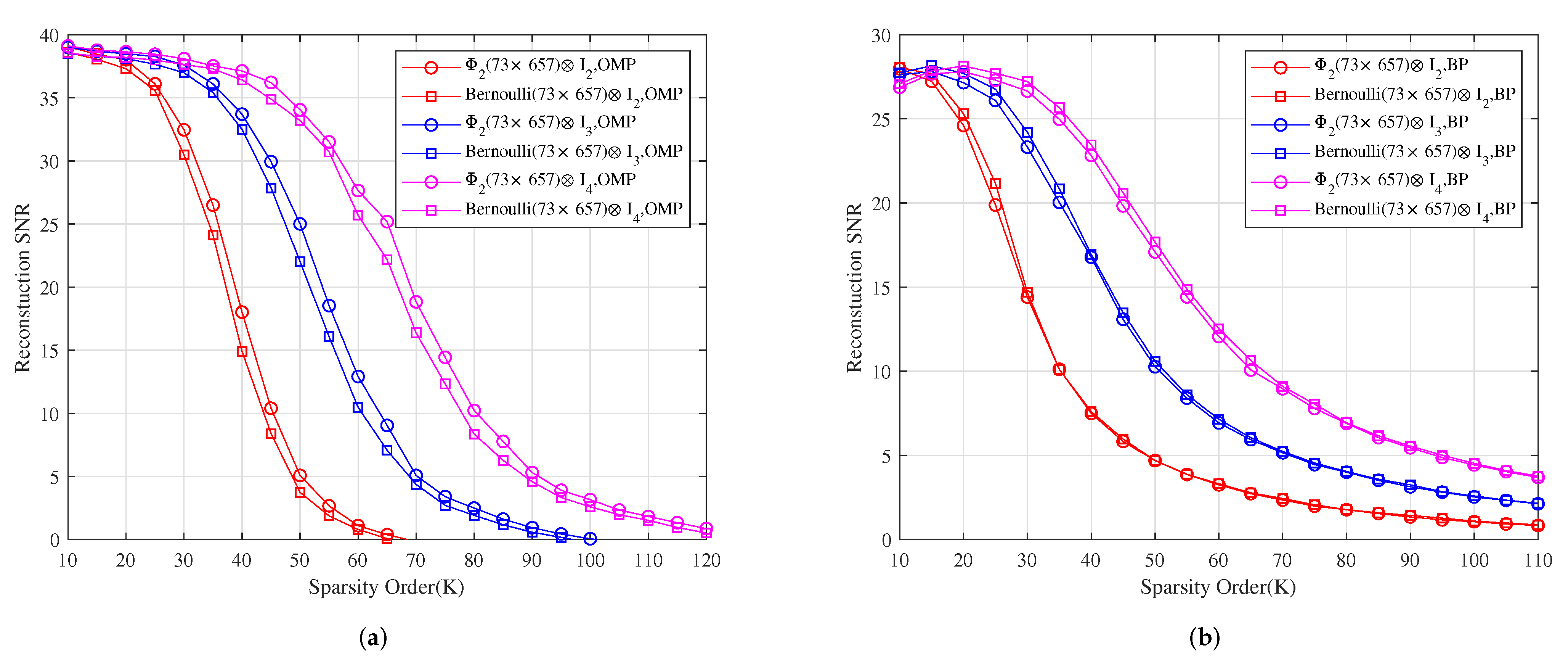

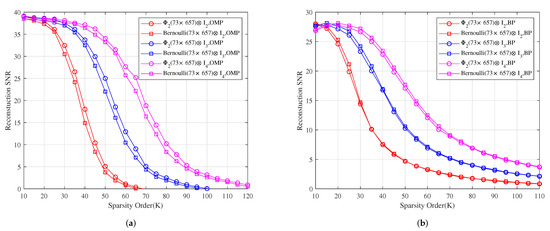

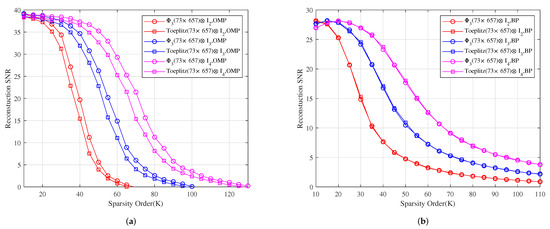

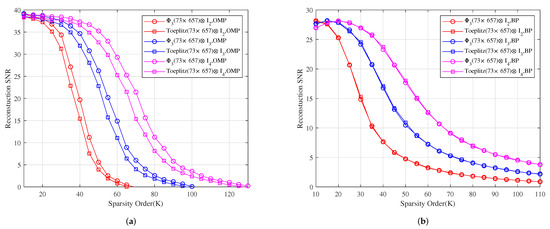

Figure 6 compares the reconstruction SNR of , and with that of , and under OMP and BP, respectively. The reconstruction SNRs of , and are higher than those of , and , respectively, under OMP, and similar to those of , and , respectively, under BP.

Figure 6.

The relationship between the reconstruction SNR and sparsity order of sparse signals under OMP and BP. (a) The reconstruction SNR comparison of , and with Bernoulli, Bernoulli and Bernoulli under OMP, respectively. (b) The reconstruction SNR comparison of , and with Bernoulli, Bernoulli and Bernoulli under BP, respectively.

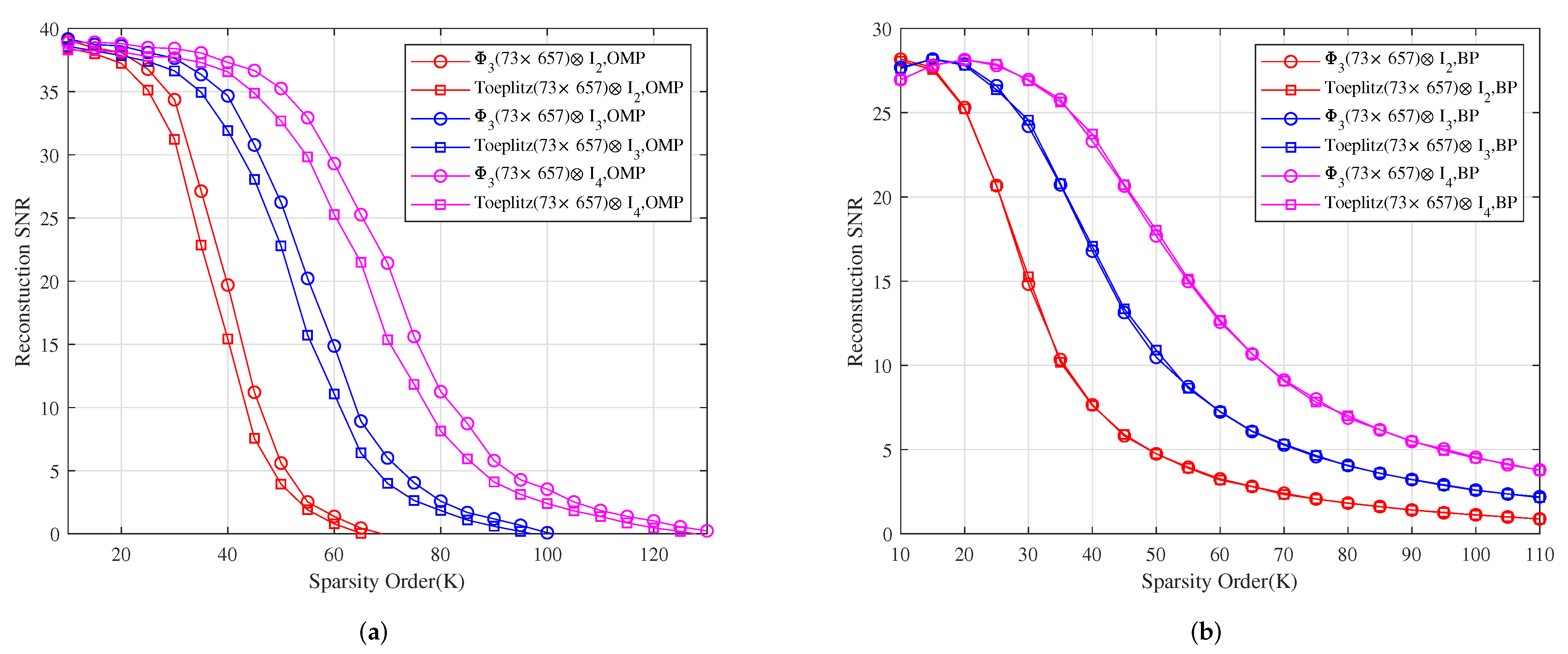

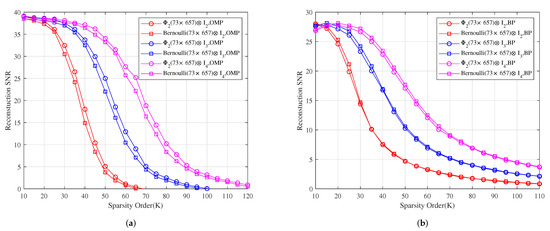

Figure 7 compares the reconstruction SNR of , and with that of Toeplitz, Toeplitz and Toeplitz under OMP and BP, respectively. The reconstruction SNRs of , and are higher than those of , and , respectively, under OMP, and similar to those of , and , respectively, under BP.

Figure 7.

The relationship between the reconstruction SNR and sparsity order of sparse signals under OMP and BP. (a) The reconstruction SNR comparison of , and with Toeplitz, Toeplitz and Toeplitz under OMP, respectively. (b) The reconstruction SNR comparison of , and with Toeplitz, Toeplitz and Toeplitz under BP, respectively.

In applications, the original signal is always disturbed by channel noise. For noisy recovery, the original signal is polluted by additive white Gaussian noise . Therefore, if is a measurement matrix, then

where and . For every sparsity order, we calculate the reconstruction SNR by reconstructing 1000 noisy signals.

Furthermore, the original signals are usually only approximately sparse, and the measurement vector may also be polluted by noise in the measurement domain. Hence, we study the noisy recovery performance of our matrices in the practical STP-CS model,

where denotes noise in the measurement domain, and denotes noise in the data-domain.

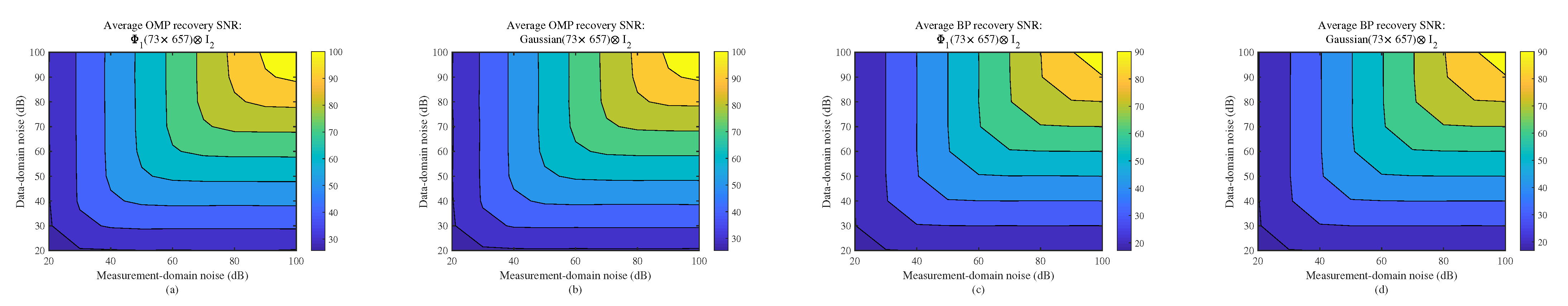

Example 3.

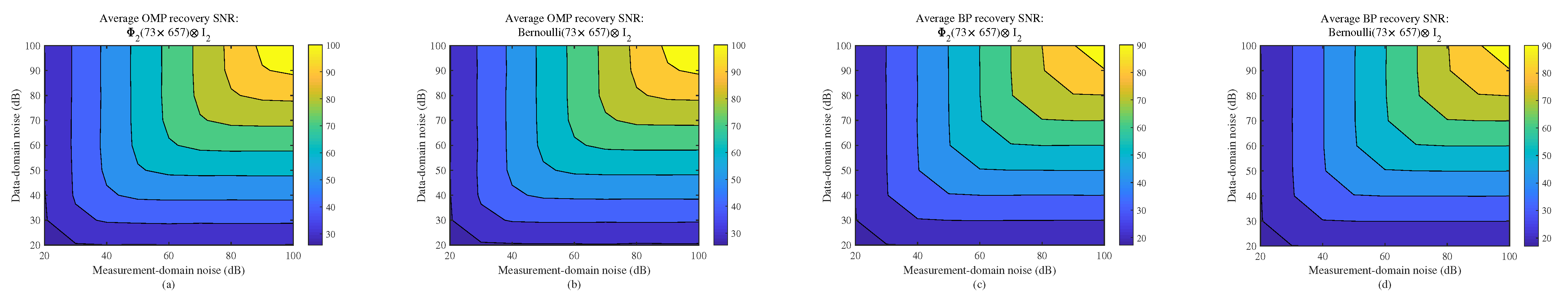

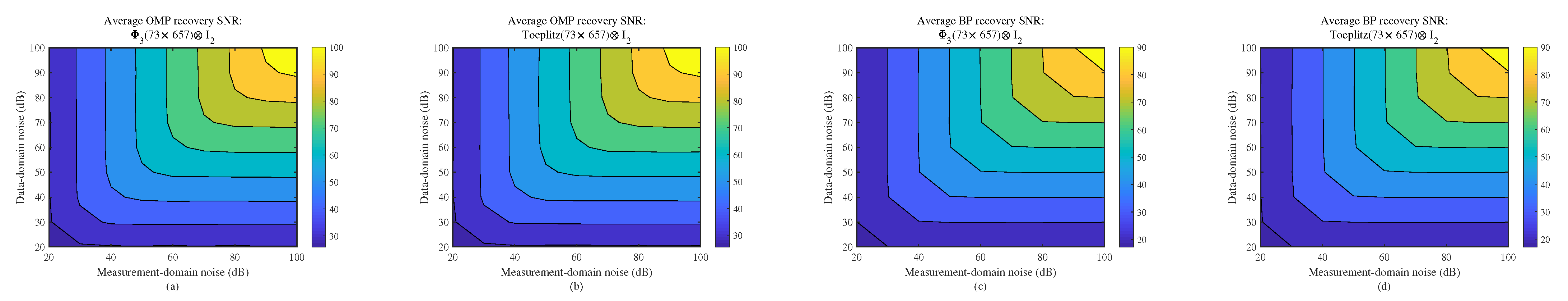

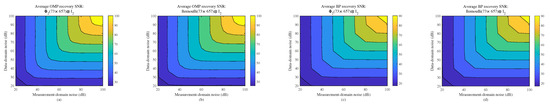

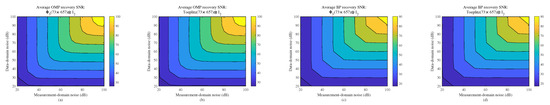

Let , be the additive white Gaussian noise with SNR 20–100 dB. Figure 8, Figure 9 and Figure 10 compare the average recovery SNR of , and with that of Gaussian, Bernoulli and Toeplitz matrices under OMP and BP, respectively. Empirically, , and are as stable and robust as the Gaussian, Bernoulli and Toeplitz matrices, respectively.

Figure 8.

For sparsity order , the relationship of average recovery SNR and noise in measurement domain and data domain. (a) Average recovery SNR of as the measurement matrix under OMP. (b) Average recovery SNR of Gaussian as the measurement matrix under OMP. (c) Average recovery SNR of as the measurement matrix under BP. (d) Average recovery SNR of Gaussian as the measurement matrix under BP.

Figure 9.

For sparsity order , the relationship of average recovery SNR and noise in measurement domain and data domain. (a) Average recovery SNR of as the measurement matrix under OMP. (b) Average recovery SNR of Bernoulli as the measurement matrix under OMP. (c) Average recovery SNR of as the measurement matrix under BP. (d) Average recovery SNR of Bernoulli as the measurement matrix under BP.

Figure 10.

For sparsity order , the relationship of average recovery SNR and noise in measurement domain and data domain. (a) Average recovery SNR of as the measurement matrix under OMP. (b) Average recovery SNR of Toeplitz as the measurement matrix under OMP. (c) Average recovery SNR of as the measurement matrix under BP. (d) Average recovery SNR of Toeplitz as the measurement matrix under BP.

4.2. Reconstruction of 2-Dimensional Images

In this subsection, we select the orthogonal matching pursuit (OMP) algorithm, basis pursuit (BP) algorithm, iterative soft thresholding (IST) [39] algorithm and subspace pursuit (SP) [40] algorithm for testing. When CS reconstructs a gray image, it is hard to judge the distortion of the reconstructed image by the naked eye or other subjective means. Hence, an objective measure is needed to evaluate the quality of the reconstructed image. We use the peak signal-to-noise ratio (PSNR), defined as follows:

$$\mathrm{PSNR} = 10 \log_{10} \frac{255^{2}}{\mathrm{MSE}},$$

where MSE represents the normalized mean square error, that is

$$\mathrm{MSE} = \frac{1}{M N} \sum_{i=1}^{M} \sum_{j=1}^{N} \bigl(f(i,j) - \hat{f}(i,j)\bigr)^{2},$$

where $M \times N$ represents the image size, and $f(i,j)$ and $\hat{f}(i,j)$ are the gray values of the original image and the reconstructed image at the point $(i,j)$, respectively.
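For reference, a PSNR computation for gray images can be sketched as below. It assumes 8-bit images with peak value 255 and the mean square error averaged over all pixels, which is the common convention; the function name `psnr` and the `peak` parameter are hypothetical.

```python
import numpy as np

def psnr(original, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    if original.shape != reconstructed.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((original - reconstructed) ** 2)   # averaged over all pixels
    if mse == 0:
        return float("inf")                          # identical images
    return float(10 * np.log10(peak ** 2 / mse))

image = np.zeros((4, 4))
shifted = image + 1.0                 # every gray value off by one level
value = psnr(image, shifted)          # MSE is 1, so PSNR is 10 * log10(255 ** 2)
```

A higher PSNR means the reconstructed image is closer to the original; identical images give an infinite value.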

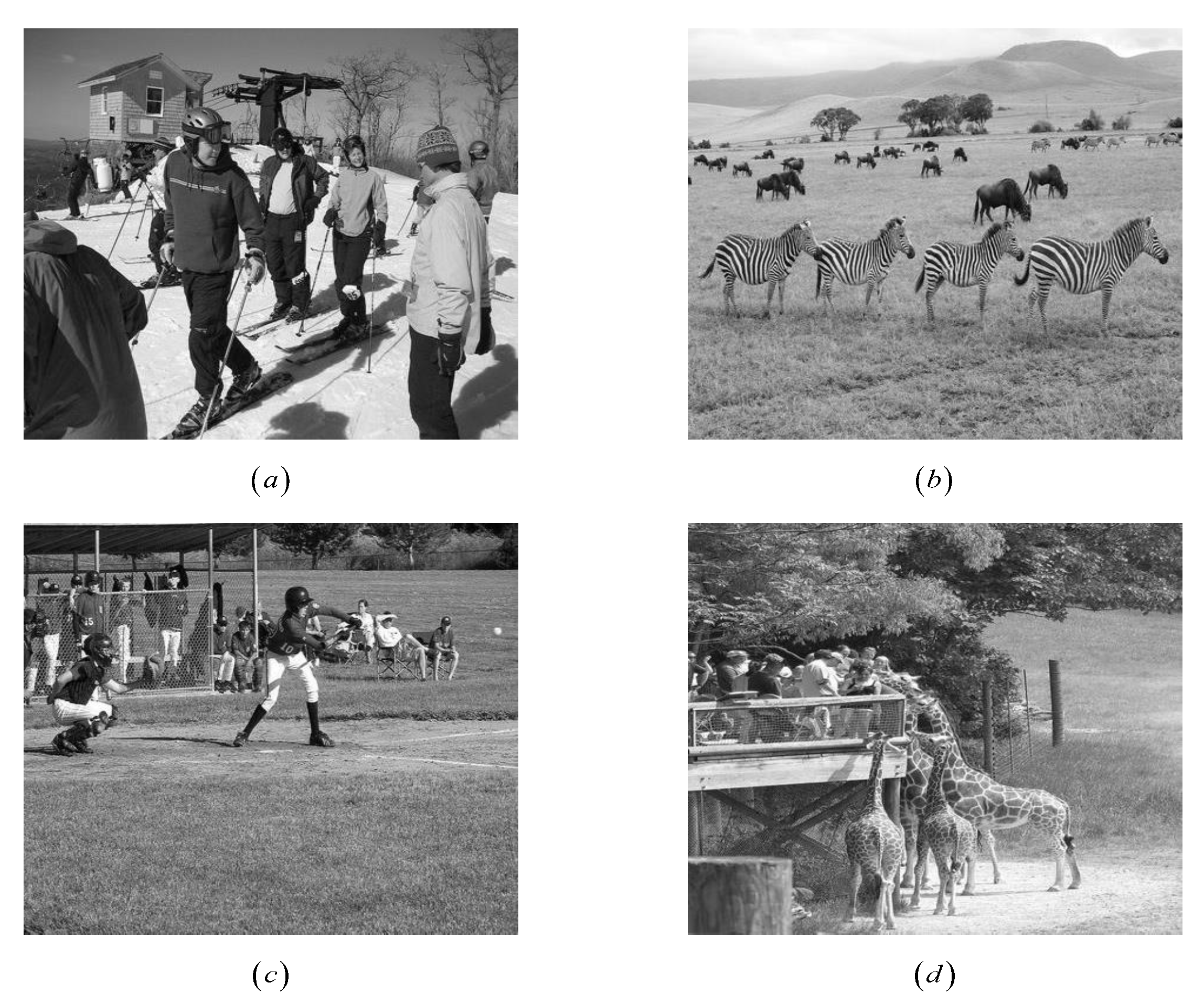

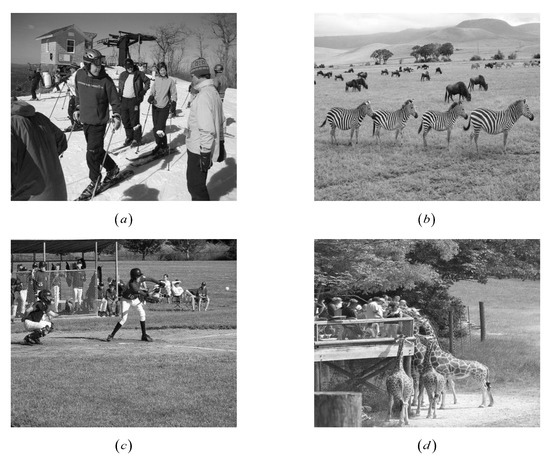

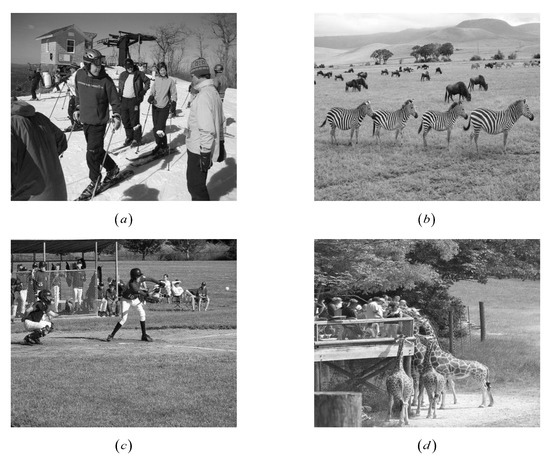

Example 4.

Let be the incidence matrix of -SBIBD; we construct three structured random measurement matrices , and , where , and are the normalized orthogonal matrices of a -dimensional Gaussian matrix, Bernoulli matrix and Toeplitz matrix, respectively. Therefore, , and are -dimensional matrices. For the four images in Figure 11, the matrices , and are used to reconstruct images of size -dimensional, , and are used to reconstruct images of size -dimensional, and , and are used to reconstruct images of size -dimensional. Table 3, Table 4 and Table 5 list the PSNRs and CPU times of the four images in the reconstruction process. The PSNRs of our measurement matrices are not less than those of the Gaussian, Bernoulli and Toeplitz matrices under OMP, BP, IST and SP, respectively, and the CPU times of our measurement matrices are not longer than those of the Gaussian, Bernoulli and Toeplitz matrices.

Figure 11.

Four test images randomly selected from the MSCOCO dataset. (a) COCO_val2014_000000000761. (b) COCO_val2014_000000004754. (c) COCO_val2014_000000008119. (d) COCO_val2014_000000193121.

Table 3.

The PSNRs of four images and the CPU time of the measurement matrices , and in the process of reconstruction.

Table 4.

The PSNRs of four images and the CPU time of the measurement matrices , and in the process of reconstruction.

Table 5.

The PSNRs of four images and the CPU time of the measurement matrices , and in the process of reconstruction.

5. Conclusions

The construction of measurement matrices both guarantees the quality of signal sampling and determines how difficult compressed sampling is to implement in hardware. To address the present shortcomings (random matrices need large storage space and are difficult to implement in hardware, while deterministic measurement matrices have large reconstruction errors), this paper constructs a structured random matrix by the embedding operation of two seed matrices: one is the incidence matrix of a $(q^{2}+q+1, q+1, 1)$-SBIBD, and the other is obtained by Gram–Schmidt orthonormalization of a random matrix. We also provide a new model that applies the structured random matrices to semi-tensor product compressed sensing. Finally, simulation experiments comparing reconstruction performance against several well-known matrices indicate that our matrices are better suited to the reconstruction of one-dimensional signals and two-dimensional images. In addition, owing to their randomness, low storage space and shorter reconstruction time, our matrices perform well in the reconstruction of signals and images. To sum up, the aspects that contribute to the performance of the method are as follows:

- (1) Special structure of the incidence matrix of the $(q^{2}+q+1, q+1, 1)$-SBIBD;

- (2) Gram–Schmidt orthonormalization of a random matrix;

- (3) Semi-tensor product compressed sensing based on the structured random matrices.

Author Contributions

Conceptualization, J.L. and H.P.; methodology, J.L. and H.P.; software, J.L. and F.T.; validation, J.L., H.P., L.L. and F.T.; formal analysis, J.L., H.P., L.L. and F.T.; writing—original draft preparation, J.L.; writing—review and editing, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Grant No. 2020YFB1805402), the National Natural Science Foundation of China (Grant Nos. 61972051, 62032002), the 111 Project (Grant No. B21049) and the Open Research Fund from Shandong Key Laboratory of Computer Network (SKLCN-2021-05).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| CS | Compressed Sensing |
| STP-CS | Semi-Tensor Product Compressed Sensing |
| BCS | Block Compressed Sensing |
| BIBD | Balanced Incomplete Block Design |
| SBIBD | Symmetric Balanced Incomplete Block Design |
| STP | Semi-Tensor Product |
| KP-CS | Kronecker Product Compressed Sensing |
| BCS-EO | Block Compressed Sampling Based on the Embedding Operation |
| KP-STP-CS | Kronecker Product Semi-Tensor Product Compressed Sensing |
| STP-CS-EO | Semi-Tensor Product Compressed Sensing Based on the Embedding Operation |

References

- Donoho, D.L. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306. [Google Scholar] [CrossRef]

- Natarajan, B.K. Sparse approximate solutions to linear systems. SIAM J. Comput. 1995, 24, 227–234. [Google Scholar] [CrossRef]

- Candes, E.J.; Tao, T. Decoding by linear programming. IEEE Trans. Inf. Theory 2005, 51, 4203–4215. [Google Scholar] [CrossRef]

- Gribonval, R.; Nielsen, M. Sparse representations in unions of bases. IEEE Trans. Inf. Theory 2003, 49, 3320–3325. [Google Scholar] [CrossRef]

- Welch, L. Lower bounds on the maximum cross correlation of signals (corresp.). IEEE Trans. Inf. Theory 1974, 20, 397–399. [Google Scholar] [CrossRef]

- Gilbert, A.; Indyk, P. Sparse recovery using sparse matrices. Proc. IEEE 2010, 98, 937–947. [Google Scholar] [CrossRef]

- Candes, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509. [Google Scholar] [CrossRef]

- Do, T.T.; Gan, L.; Nguyen, N.H.; Tran, D.T. Fast and efficient compressive sensing using structurally random matrices. IEEE Trans. Signal Process. 2011, 60, 139–154. [Google Scholar] [CrossRef]

- Rudelson, M.; Vershynin, R. On sparse reconstruction from Fourier and Gaussian measurements. Commun. Pure Appl. Math. J. Issued Courant Inst. Math. Sci. 2008, 61, 1025–1045. [Google Scholar] [CrossRef]

- Candes, E.J.; Tao, T. Near-optimal signal recovery from random projections: Universal encoding strategies? IEEE Trans. Inf. Theory 2006, 52, 5406–5425. [Google Scholar] [CrossRef]

- Baraniuk, R.; Davenport, M.; DeVore, R.; Wakin, M. A simple proof of the restricted isometry property for random matrices. Constr. Approx. 2008, 28, 253–263. [Google Scholar] [CrossRef]

- Haupt, J.D.; Bajwa, W.U.; Raz, G.M.; Nowak, R. Toeplitz compressed sensing matrices with applications to sparse channel estimation. IEEE Trans. Inf. Theory 2010, 56, 5862–5875. [Google Scholar] [CrossRef]

- Bajwa, W.U.; Haupt, J.D.; Raz, G.M.; Wright, S.J.; Nowak, R.D. Toeplitz-structured compressed sensing matrices. In Proceedings of the 2007 IEEE/SP 14th Workshop on Statistical Signal Processing, Madison, WI, USA, 26–29 August 2007; pp. 294–298. [Google Scholar] [CrossRef]

- Tong, F.H.; Li, L.X.; Peng, H.P.; Yang, Y.X. Flexible construction of compressed sensing matrices with low storage space and low coherence. Signal Process. 2021, 182, 107951. [Google Scholar] [CrossRef]

- Wang, H.; Xiao, D.; Li, M.; Xiang, Y.P.; Li, X.Y. A visually secure image encryption scheme based on parallel compressive sensing. Signal Process. 2019, 155, 218–232. [Google Scholar] [CrossRef]

- DeVore, R.A. Deterministic constructions of compressed sensing matrices. J. Complex. 2007, 23, 918–925. [Google Scholar] [CrossRef]

- Li, S.X.; Gao, F.; Ge, G.N.; Zhang, S.Y. Deterministic construction of compressed sensing matrices via algebraic curves. IEEE Trans. Inf. Theory 2012, 58, 5035–5041. [Google Scholar] [CrossRef]

- Dimakis, A.G.; Smarandache, R.; Vontobel, P.O. LDPC codes for compressed sensing. IEEE Trans. Inf. Theory 2010, 58, 3093–3114.

- Wang, X.; Fu, F.W. Deterministic construction of compressed sensing matrices from codes. Int. J. Found. Comput. Sci. 2017, 28, 99–109.

- Amini, A.; Marvasti, F. Deterministic construction of binary, bipolar, and ternary compressed sensing matrices. IEEE Trans. Inf. Theory 2011, 57, 2360–2370.

- Zhang, J.; Han, G.J.; Fang, Y. Deterministic construction of compressed sensing matrices from protograph LDPC codes. IEEE Signal Process. Lett. 2015, 22, 1960–1964.

- Wang, G.; Niu, M.Y.; Fu, F.W. Deterministic constructions of compressed sensing matrices based on optimal codebooks and codes. Appl. Math. Comput. 2019, 343, 128–136.

- Jie, Y.M.; Li, M.C.; Guo, C.; Feng, B.; Tang, T.T. A new construction of compressed sensing matrices for signal processing via vector spaces over finite fields. Multimed. Tools Appl. 2019, 78, 31137–31161.

- Liu, X.M.; Jia, L.H. Deterministic construction of compressed sensing matrices via vector spaces over finite fields. IEEE Access 2020, 8, 203301–203308.

- Xia, S.T.; Liu, X.J.; Jiang, Y.; Zheng, H.T. Deterministic constructions of binary measurement matrices from finite geometry. IEEE Trans. Signal Process. 2014, 63, 1017–1029.

- Tong, F.H.; Li, L.X.; Peng, H.P.; Yang, Y.X. Deterministic constructions of compressed sensing matrices from unitary geometry. IEEE Trans. Inf. Theory 2021, 67, 5548–5561.

- Li, S.X.; Ge, G.N. Deterministic construction of sparse sensing matrices via finite geometry. IEEE Trans. Signal Process. 2014, 62, 2850–2859.

- Tong, F.H.; Li, L.X.; Peng, H.P.; Zhao, D.W. Progressive coherence and spectral norm minimization scheme for measurement matrices in compressed sensing. Signal Process. 2022, 194, 108435.

- Bryant, D.; Colbourn, C.J.; Horsley, D.; Cathain, P.O. Compressed sensing with combinatorial designs: Theory and simulations. IEEE Trans. Inf. Theory 2017, 63, 4850–4859.

- Li, S.X.; Ge, G.N. Deterministic sensing matrices arising from near orthogonal systems. IEEE Trans. Inf. Theory 2014, 60, 2291–2302.

- Liang, J.Y.; Peng, H.P.; Li, L.X.; Tong, F.H.; Yang, Y.X. Flexible construction of measurement matrices in compressed sensing based on extensions of incidence matrices of combinatorial designs. Appl. Math. Comput. 2022, 420, 126901.

- Naidu, R.R.; Jampana, P.; Sastry, C.S. Deterministic compressed sensing matrices: Construction via Euler squares and applications. IEEE Trans. Signal Process. 2015, 64, 3566–3575.

- Wan, Z.X. Geometry of Classical Groups over Finite Fields; Chartwell-Bratt: Beijing, China, 1993.

- Lindner, C.C.; Rodger, C.A. Design Theory; Chapman and Hall/CRC: Boca Raton, FL, USA, 2017.

- Amini, A.; Montazerhodjat, V.; Marvasti, F. Matrices with small coherence using p-ary block codes. IEEE Trans. Signal Process. 2011, 60, 172–181.

- Xie, D.; Peng, H.P.; Li, L.X.; Yang, Y.X. Semi-tensor compressed sensing. Digit. Signal Process. 2016, 58, 85–92.

- Tropp, J.A.; Gilbert, A.C. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 2007, 53, 4655–4666.

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Rev. 2001, 43, 129–159.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. J. Issued Courant Inst. Math. Sci. 2004, 57, 1413–1457.

- Dai, W.; Milenkovic, O. Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory 2009, 55, 2230–2249.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).