Deep Learning-Based Intelligent Forklift Cargo Accurate Transfer System

Abstract

1. Introduction

- Existing methods for determining whether a pallet is present at a given location are costly, achieve low recognition rates, and are susceptible to environmental interference [4].

- When the intelligent forklift inserts its forks into a pallet, existing approaches to computing the relative position between the pallet and the forklift suffer from high implementation cost [5], poor algorithmic robustness, and insufficient accuracy.

- Once the accurate position has been calculated, the forklift is usually driven into the pallet with a fixed control input that ignores the vehicle's current running state. The trajectory therefore drifts, which ultimately causes insertion errors [6].

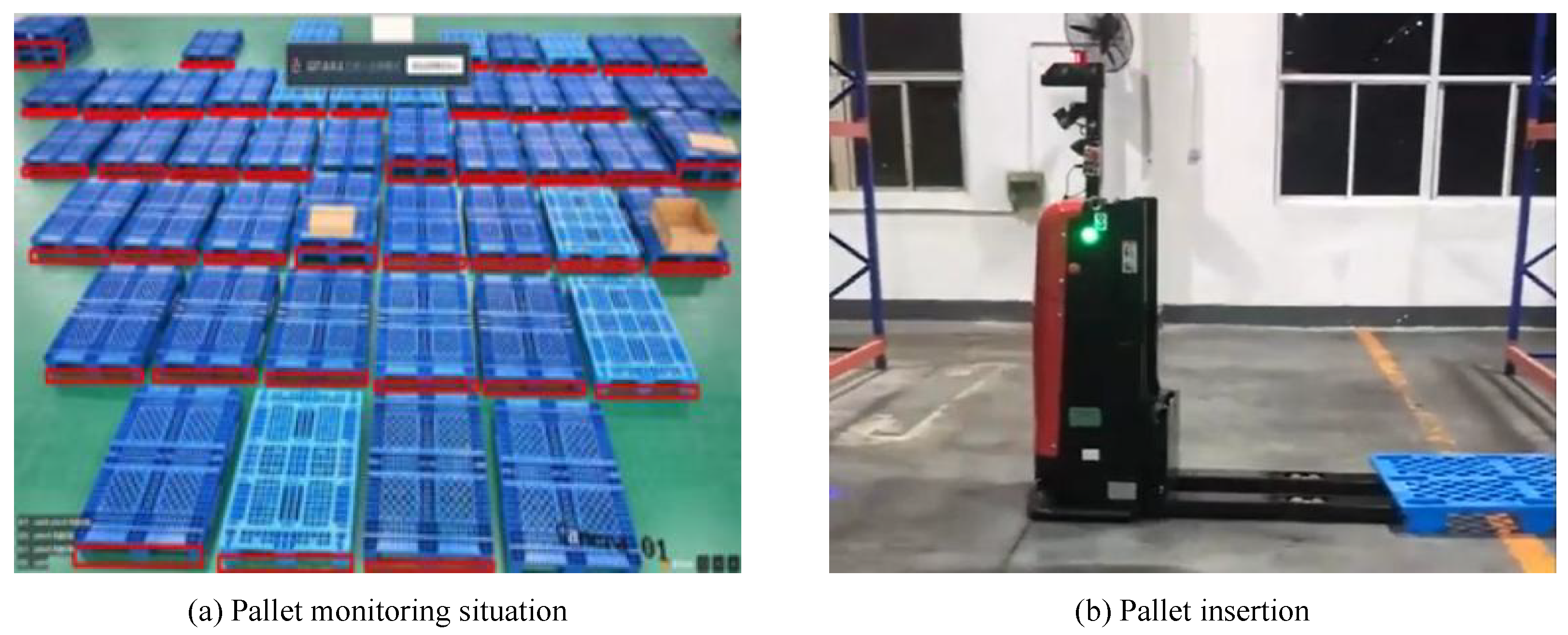

- To determine precisely whether the pallet storage area contains pallets awaiting transport, we employ a YOLOv5-based [7] pallet monitoring system with a small-target detection module, which achieves an accuracy of over 99.5%.

- To keep the final pallet insertion error within 6 mm, we compute the real-time position of the pallet relative to the intelligent forklift with a pallet position recognition system based on a 3D Hough network [8].

- To improve control accuracy, we present a high-precision tracking control approach for intelligent forklifts; across 1000 docking experiments, the error did not exceed 6 mm.
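The pose computation in the second contribution ultimately reduces to recovering the pallet's pose from matched keypoints. Section 3.2.4 names a least-squares fitting step, and the reference list includes the closed-form fit of two point sets by Arun et al. [29]. The following is a minimal sketch of the planar (x, y, yaw) special case of that least-squares rigid fit. It assumes the keypoints have already been projected onto the floor plane and matched one-to-one with a pallet model. The function names and the model coordinates are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (theta [rad], tx [m], ty [m])


def fit_planar_pose(model: List[Point], observed: List[Point]) -> Pose:
    """Least-squares rigid fit so that observed ~= R(theta) * model + t.

    Planar special case of the closed-form fit of two point sets: centre both
    sets, pick the rotation that maximises their correlation, then solve for t.
    """
    if len(model) != len(observed) or len(model) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(model)
    mx = sum(p[0] for p in model) / n
    my = sum(p[1] for p in model) / n
    ox = sum(q[0] for q in observed) / n
    oy = sum(q[1] for q in observed) / n
    s_dot = 0.0    # sum of dot products of centred pairs
    s_cross = 0.0  # sum of 2D cross products of centred pairs
    for (px, py), (qx, qy) in zip(model, observed):
        ax, ay = px - mx, py - my
        bx, by = qx - ox, qy - oy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    # The angle maximising sum(b_i . R a_i) is atan2(s_cross, s_dot).
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated model centroid onto the observed centroid.
    tx = ox - (c * mx - s * my)
    ty = oy - (s * mx + c * my)
    return theta, tx, ty


def rms_residual_mm(model: List[Point], observed: List[Point], pose: Pose) -> float:
    """Root-mean-square fitting residual in millimetres (inputs in metres)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    total = 0.0
    for (px, py), (qx, qy) in zip(model, observed):
        rx = c * px - s * py + tx - qx
        ry = s * px + c * py + ty - qy
        total += rx * rx + ry * ry
    return 1000.0 * math.sqrt(total / len(model))


if __name__ == "__main__":
    # Hypothetical keypoints: footprint corners of a 1.2 m x 0.8 m pallet (metres).
    model = [(0.0, 0.0), (1.2, 0.0), (0.0, 0.8), (1.2, 0.8)]
    yaw = math.radians(5.0)
    observed = [(math.cos(yaw) * x - math.sin(yaw) * y + 2.0,
                 math.sin(yaw) * x + math.cos(yaw) * y + 0.3) for x, y in model]
    pose = fit_planar_pose(model, observed)
    print(math.degrees(pose[0]), pose[1], pose[2], rms_residual_mm(model, observed, pose))
```

In 2D the optimal rotation has a closed form via `atan2`, so no SVD is needed. In full 3D the same objective is solved with the SVD of the cross-covariance matrix, as in [29]. The residual helper expresses the fit quality in millimetres so that it can be compared directly with a 6 mm budget.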

2. Related Works and Background

2.1. Pallet Monitoring

2.2. Pallet Position Recognition

2.3. Forklift Precision Control Algorithm

3. Intelligent Forklift Cargo Precision Transfer System

3.1. Pallet Monitoring Module

3.1.1. Backbone Module

3.1.2. Neck Module

3.1.3. Small Target Detection

3.1.4. Convolutional Block Attention Module (CBAM)

3.2. Pallet Positioning Module

3.2.1. Key-Point Detection Module
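In Hough-voting keypoint detectors, each point on the object casts a vote for where a keypoint lies, and the votes are then clustered into a single estimate. Mean shift [23], which appears in the reference list, is a common choice for that clustering step. The following is a minimal sketch that assumes Gaussian-kernel mean shift over 3D votes with a hand-picked bandwidth. Both the kernel and the bandwidth are illustrative assumptions, not details confirmed by the paper.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sq_dist(a: Vec3, b: Vec3) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def mean_shift_mode(votes: Sequence[Vec3], bandwidth: float,
                    max_iter: int = 100, tol: float = 1e-6) -> Vec3:
    """Return the highest-density mode of a set of 3D keypoint votes.

    Gaussian-kernel mean shift: every vote is used as a seed, each seed is
    moved to the kernel-weighted mean of all votes until it stops moving, and
    the converged point with the largest kernel density wins.
    """
    if not votes:
        raise ValueError("no votes to cluster")
    inv_two_h2 = 1.0 / (2.0 * bandwidth ** 2)

    def kernel_weights(x: Vec3) -> List[float]:
        return [math.exp(-sq_dist(x, v) * inv_two_h2) for v in votes]

    best: Vec3 = tuple(votes[0])
    best_density = -1.0
    for seed in votes:
        x: Vec3 = tuple(seed)
        for _ in range(max_iter):
            w = kernel_weights(x)
            total = sum(w)
            # Shift the estimate to the kernel-weighted mean of all votes.
            new_x = tuple(
                sum(wi * v[k] for wi, v in zip(w, votes)) / total for k in range(3)
            )
            moved = sq_dist(new_x, x)
            x = new_x
            if moved < tol ** 2:
                break
        density = sum(kernel_weights(x))
        if density > best_density:
            best, best_density = x, density
    return best
```

Seeding from every vote makes the result robust to isolated outlier votes, because an outlier converges to a mode of low density. The cost is O(n²) per iteration, so practical systems usually subsample the votes or use a spatial index.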

3.2.2. Instance Segmentation Module

3.2.3. Network Architecture

3.2.4. Least Squares Fitting

3.3. High Precision Control Module
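The reference list includes the pure pursuit path tracker [22] and Ackermann steering geometry [30]. The following sketch illustrates geometric path tracking and should not be read as the authors' controller. It computes the pure pursuit steering angle delta = atan(2 L sin(alpha) / L_d) for a front-steered bicycle model. In this formula, L is the wheelbase, alpha is the heading error to a look-ahead point, and L_d is the distance to that point. Counterbalance forklifts usually steer with the rear wheels, so a real implementation would need to adapt the reference point and the sign convention. The function name and the numbers are hypothetical.

```python
import math


def pure_pursuit_steering(x: float, y: float, yaw: float,
                          target_x: float, target_y: float,
                          wheelbase: float) -> float:
    """Front-wheel steering angle [rad] that steers a bicycle model through a look-ahead point.

    (x, y, yaw) is the rear-axle pose; pure pursuit fits a circular arc from the
    rear axle to the target, giving delta = atan(2 * L * sin(alpha) / L_d).
    """
    dx, dy = target_x - x, target_y - y
    lookahead = math.hypot(dx, dy)  # L_d
    if lookahead == 0.0:
        return 0.0  # already at the target; no steering command defined
    # Heading error to the target; sin() makes wrap-around outside [-pi, pi] harmless.
    alpha = math.atan2(dy, dx) - yaw
    # atan2 with a positive second argument equals atan(2 L sin(alpha) / L_d).
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


if __name__ == "__main__":
    # Hypothetical numbers: 1.5 m wheelbase, look-ahead point 1 m ahead and 0.1 m left.
    delta = pure_pursuit_steering(0.0, 0.0, 0.0, 1.0, 0.1, wheelbase=1.5)
    print(f"steering angle: {math.degrees(delta):.2f} deg")
```

The main tuning trade-off in pure pursuit is the look-ahead distance. A shorter look-ahead tracks the path more tightly but tends to oscillate, while a longer one is smoother but cuts corners.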

4. Experimental Results

4.1. Experimental Environment Construction

4.1.1. Pallet Monitoring Module

4.1.2. Pallet Positioning Module

4.2. Experimental Results

4.2.1. Pallet Monitoring Module

4.2.2. Pallet Positioning Module and High Precision Control Module

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wurman, P.R.; D’Andrea, R.; Mountz, M. Coordinating hundreds of cooperative, autonomous vehicles in warehouses. AI Mag. 2008, 29, 9.

2. D’Andrea, R. Guest editorial: A revolution in the warehouse: A retrospective on Kiva Systems and the grand challenges ahead. IEEE Trans. Autom. Sci. Eng. 2012, 9, 638–639.

3. Gadd, M.; Newman, P. A framework for infrastructure-free warehouse navigation. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 3271–3278.

4. Wu, C.H.; Tsang, Y.P.; Lee, C.K.M.; Ching, W.K. A Blockchain-IoT Platform for the Smart Pallet Pooling Management. Sensors 2021, 21, 6310.

5. Motroni, A.; Buffi, A.; Nepa, P.; Pesi, M.; Congi, A. An Action Classification Method for Forklift Monitoring in Industry 4.0 Scenarios. Sensors 2021, 21, 5183.

6. Lamooki, S.R.; Cavuoto, L.A.; Kang, J. Adjustments in Shoulder and Back Kinematics during Repetitive Palletizing Tasks. Sensors 2022, 22, 5655.

7. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 2778–2788.

8. Liebelt, J.; Schmid, C.; Schertler, K. Viewpoint-independent object class detection using 3D feature maps. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1–8.

9. Hussain, M.; Al-Aqrabi, H.; Munawar, M.; Hill, R.; Alsboui, T. Domain Feature Mapping with YOLOv7 for Automated Edge-Based Pallet Racking Inspections. Sensors 2022, 22, 6927.

10. Crețu-Sîrcu, A.L.; Schiøler, H.; Cederholm, J.P.; Sîrcu, I.; Schjørring, A.; Larrad, I.R.; Berardinelli, G.; Madsen, O. Evaluation and Comparison of Ultrasonic and UWB Technology for Indoor Localization in an Industrial Environment. Sensors 2022, 22, 2927.

11. Li, Y.Y.; Chen, X.H.; Ding, G.Y.; Wang, S.; Xu, W.C.; Sun, B.B.; Song, Q. Pallet detection and localization with RGB image and depth data using deep learning techniques. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), Dalian, China, 15–17 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 306–310.

12. Joo, K.J.; Pyo, J.W.; Ghosh, A.; In, G.G.; Kuc, T.Y. A pallet recognition and rotation algorithm for autonomous logistics vehicle system of a distribution center. In Proceedings of the 2021 21st International Conference on Control, Automation and Systems (ICCAS), Jeju, Korea, 12–15 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1387–1390.

13. Borstell, H.; Kluth, J.; Jaeschke, M.; Plate, C.; Gebert, B.; Richter, K. Pallet monitoring system based on a heterogeneous sensor network for transparent warehouse processes. In Proceedings of the 2014 Sensor Data Fusion: Trends, Solutions, Applications (SDF), Bonn, Germany, 8–10 October 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

14. Garibotto, G.; Masciangelo, S.; Ilic, M.; Bassino, P. Robolift: A vision guided autonomous fork-lift for pallet handling. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS’96, Osaka, Japan, 8 November 1996; IEEE: Piscataway, NJ, USA, 1996; Volume 2, pp. 656–663.

15. Garibotto, G.; Masciangelo, S.; Bassino, P.; Ilic, M. Computer vision control of an intelligent forklift truck. In Proceedings of the Conference on Intelligent Transportation Systems, Boston, MA, USA, 12 November 1997; IEEE: Piscataway, NJ, USA, 1997; pp. 589–594.

16. Minav, T.A.; Laurila, L.I.; Pyrhönen, J.J. Self-Tuning-Parameter Fuzzy PID Speed Controller Performance in an Electro-Hydraulic Forklift with Different Rule Sets. 2010. Available online: https://www.researchgate.net/profile/Tatiana-Minav/publication/260952145_Self-Tuning-Parameter_Fuzzy_PID_Speed_Controller_Performance_in_an_Electro-Hydraulic_Forklift_with_Different_Rule_Sets/links/5540a17a0cf2736761c280c2/Self-Tuning-Parameter-Fuzzy-PID-Speed-Controller-Performance-in-an-Electro-Hydraulic-Forklift-with-Different-Rule-Sets.pdf (accessed on 9 October 2022).

17. Jiang, Z.; Xiao, B. Electric power steering system control strategy based on robust H∞ control for electric forklift. Math. Probl. Eng. 2018, 2018, 7614304.

18. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

19. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

20. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

21. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

22. Giesbrecht, J.; Mackay, D.; Collier, J.; Verret, S. Path Tracking for Unmanned Ground Vehicle Navigation: Implementation and Adaptation of the Pure Pursuit Algorithm; Technical report; Defence Research and Development Suffield: Suffield, AB, Canada, 2005.

23. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

24. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

25. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6569–6578.

26. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

27. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413.

28. Wang, C.; Xu, D.; Zhu, Y.; Martín-Martín, R.; Lu, C.; Fei-Fei, L.; Savarese, S. DenseFusion: 6D object pose estimation by iterative dense fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3343–3352.

29. Arun, K.S.; Huang, T.S.; Blostein, S.D. Least-squares fitting of two 3-D point sets. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 698–700.

30. Mitchell, W.C.; Staniforth, A.; Scott, I. Analysis of Ackermann Steering Geometry; Technical report, SAE Technical Paper; SAE International: Warrendale, PA, USA, 2006.

31. Ziegler, J.; Bender, P.; Dang, T.; Stiller, C. Trajectory planning for Bertha—A local, continuous method. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 450–457.

32. Xiao, J.; Lu, H.; Zhang, L.; Zhang, J. Pallet recognition and localization using an RGB-D camera. Int. J. Adv. Robot. Syst. 2017, 14, 1729881417737799.

33. Baglivo, L.; Biasi, N.; Biral, F.; Bellomo, N.; Bertolazzi, E.; Da Lio, M.; De Cecco, M. Autonomous pallet localization and picking for industrial forklifts: A robust range and look method. Meas. Sci. Technol. 2011, 22, 085502.

| Algorithm | Method | Advantages | Disadvantages |
|---|---|---|---|
| Algorithm in [9] | Photoelectric sensors | High recognition rate | High cost |
| Algorithm in [11] | Image recognition | Low cost | Susceptible to environmental interference |
| Algorithm in [12] | Deep learning based on YOLOv3 | High stability | Low recognition rate for small targets |
| Our algorithm | Deep learning based on YOLOv5 | Low cost, high recognition rate | High algorithmic complexity |

| Day | Number of Pallets | Recognition Accuracy (%) |
|---|---|---|
| 1 | 72 | 99.82 |
| 2 | 70 | 99.76 |
| 3 | 72 | 99.68 |
| 4 | 68 | 99.86 |
| 5 | 71 | 99.69 |
| 6 | 74 | 99.58 |
| 7 | 69 | 99.71 |
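The per-day figures can be aggregated to check the headline claim of over 99.5% accuracy. The following minimal sketch copies its rows from the table. Weighting each day by its pallet count is an assumption, because the table does not say how the percentages were computed. A single missed pallet out of 72 would already cost about 1.4 percentage points, so the accuracy presumably aggregates many detections per pallet.

```python
from typing import Dict, List, Tuple

# (day, number of pallets, recognition accuracy in %), copied from the table above.
DAILY_RESULTS: List[Tuple[int, int, float]] = [
    (1, 72, 99.82),
    (2, 70, 99.76),
    (3, 72, 99.68),
    (4, 68, 99.86),
    (5, 71, 99.69),
    (6, 74, 99.58),
    (7, 69, 99.71),
]


def summarize(rows: List[Tuple[int, int, float]]) -> Dict[str, float]:
    """Aggregate daily accuracies; the pallet-count weighting is an assumption."""
    total_pallets = sum(n for _, n, _ in rows)
    plain_mean = sum(acc for _, _, acc in rows) / len(rows)
    weighted_mean = sum(n * acc for _, n, acc in rows) / total_pallets
    worst_day = min(acc for _, _, acc in rows)
    return {
        "total_pallets": float(total_pallets),
        "plain_mean": plain_mean,
        "weighted_mean": weighted_mean,
        "worst_day": worst_day,
    }


if __name__ == "__main__":
    for name, value in summarize(DAILY_RESULTS).items():
        print(f"{name}: {value:.3f}")
```

Both the plain and the pallet-weighted means come out at roughly 99.73% over 496 pallets. The worst day (99.58%) still clears the 99.5% threshold.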

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ren, J.; Pan, Y.; Yao, P.; Hu, Y.; Gao, W.; Xue, Z. Deep Learning-Based Intelligent Forklift Cargo Accurate Transfer System. Sensors 2022, 22, 8437. https://doi.org/10.3390/s22218437

Ren J, Pan Y, Yao P, Hu Y, Gao W, Xue Z. Deep Learning-Based Intelligent Forklift Cargo Accurate Transfer System. Sensors. 2022; 22(21):8437. https://doi.org/10.3390/s22218437

Chicago/Turabian Style: Ren, Jie, Yusu Pan, Pantao Yao, Yicheng Hu, Wang Gao, and Zhenfeng Xue. 2022. "Deep Learning-Based Intelligent Forklift Cargo Accurate Transfer System" Sensors 22, no. 21: 8437. https://doi.org/10.3390/s22218437