1. Introduction

Walking is an essential form of human locomotion. However, it is a very complex process, and any disorder, instability, or incoordination can lead to deviations and challenges during locomotion [1]. Furthermore, prosthesis-related gait deviations in lower-limb amputees become critical in the long term. These deviations are caused by the asymmetry and poor repeatability of the amputee's gait compared with that of a healthy person [2]. As a result, these anomalies can increase the energy cost of motion, overload the muscles, and damage joint structures and skin. To avert these complications, lower-limb kinematics are a critical factor when designing active intelligent prostheses [3,4].

Various techniques are adopted to analyze gait kinematics and kinetics, depending on the application [5]. The first technique is motion capture, which uses infrared cameras with reflective markers [6,7,8]. This category also includes relatively low-cost, marker-less image processing with time-of-flight (TOF) cameras for motion tracking, such as the Microsoft Kinect [7,9]. The second technique places inertial measurement units (IMUs) on different body parts, measures the kinematic parameters (angle, angular velocity, and acceleration) of each joint (hip, knee, and ankle), and generates a 3D kinematic model for gait analysis [2,7,10,11,12,13]. The third technique uses an array of force sensors under the foot to measure the ground reaction force (GRF) and its distribution over the foot; the gait cycle can then be reconstructed from the magnitude of the reaction force and the location of its center [7,14]. Finally, electromyography (EMG) uses surface-mounted electrodes to measure the contraction of the main lower-limb muscles (hip, shank, and ankle muscles) [15], from which the kinematic model of the gait cycle is plotted [7].
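The "center of the reaction force" used by force-sensor insoles is usually computed as a center of pressure (CoP): the force-weighted average of the sensor positions. The sketch below is a minimal illustration, assuming a hypothetical insole with four sensors at known (x, y) positions; the layout, units, and function name are illustrative and not taken from the cited works.

```python
import numpy as np


def center_of_pressure(forces, positions):
    """Total vertical force and center of pressure (CoP) of an insole sensor array.

    forces:    shape (n_sensors,), vertical force measured by each sensor [N]
    positions: shape (n_sensors, 2), (x, y) location of each sensor on the insole [mm]
    Returns (total_force, cop), where cop is an (x, y) array, or None when unloaded.
    """
    forces = np.asarray(forces, dtype=float)
    positions = np.asarray(positions, dtype=float)
    total = float(forces.sum())
    if total <= 0.0:
        # No ground contact (e.g. swing phase): the CoP is undefined.
        return 0.0, None
    # Force-weighted average of the sensor positions.
    cop = (forces[:, None] * positions).sum(axis=0) / total
    return total, cop


# Hypothetical 4-sensor layout: heel, lateral midfoot, first and fifth metatarsal heads.
layout = [(0.0, 0.0), (30.0, 90.0), (-20.0, 180.0), (30.0, 170.0)]
total, cop = center_of_pressure([400.0, 100.0, 50.0, 50.0], layout)
print(total, cop)
```

Evaluating this frame by frame gives the total load and a CoP path that moves from heel to forefoot during stance, which is the kind of signal from which the gait cycle can be reconstructed.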

From 2010 to 2020, nearly 79% of sensor-based publications on recording lower-limb kinematic signals used wearable sensors, such as IMU, force, and EMG sensors, while only 12% used vision-based and other techniques [1,16]. Vu et al. [1] studied gait phase detection and found that the IMU was the most commonly used sensor for this task, appearing in nearly 78% of the publications, compared with 14% for force sensors and 8% for EMG sensors. Although force sensors are relatively low cost and show high precision in gait analysis [17], their signal needs to be filtered to remove noise that can affect the results. Furthermore, the dynamic load exerted by the foot during the gait cycle causes mechanical wear that can shorten the sensor's expected lifespan [1]. EMG sensors, on the other hand, have become less prominent because of the complexity of their data acquisition and processing [18] and their sensitivity to any substance trapped between the probe and the skin, such as moisture [1]. By contrast, the IMU module is relatively cheap, reliable, and power-efficient, and it can easily be positioned on the body. It combines three sensors: an accelerometer, a gyroscope, and a magnetometer; the gyroscope and the accelerometer measure the angular velocity and linear acceleration used for gait cycle analysis.

Interest in analyzing human gait with different techniques is growing; each technique has its benefits and drawbacks depending on the application [7]. Extracting the characteristics of gait motion helps detect gait deviations that can indicate a risk of tripping, slipping, or balance loss [19,20,21,22,23]. Alternatively, these characteristics can be used to compensate for the response delay of the control system [24,25,26]. More generally, predicting the future trajectory of the lower limb can address numerous problems facing robotic lower-limb prostheses and orthoses. Detecting the current phase of the gait cycle can also benefit the control of assistive powered prostheses: the gait phase carries the information needed to determine the required angle, angular velocity, and torque, so providing it to the controller can improve its performance [27,28,29]. Better control, in turn, can help reduce the patient's energy cost of walking with a powered limb [30]. The human gait cycle can be segmented into two main phases (stance and swing), four phases (initial contact, foot flat, heel-off, and toe-off), or even seven phases (loading response, mid-stance, terminal stance, pre-swing, initial swing, mid-swing, and terminal swing) [17,31].
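The segmentations listed above are nested: the first four of the seven phases make up stance and the last three make up swing. A minimal sketch of collapsing seven-phase labels into the two-phase model, assuming a hypothetical binary encoding (0 = stance, 1 = swing) for a current-gait-phase flag; the class and function names are illustrative only.

```python
from enum import Enum


class GaitPhase(Enum):
    """Seven-phase segmentation of one gait cycle, in temporal order."""
    LOADING_RESPONSE = 1
    MID_STANCE = 2
    TERMINAL_STANCE = 3
    PRE_SWING = 4
    INITIAL_SWING = 5
    MID_SWING = 6
    TERMINAL_SWING = 7


# The first four phases occur while the foot is on the ground.
STANCE = {GaitPhase.LOADING_RESPONSE, GaitPhase.MID_STANCE,
          GaitPhase.TERMINAL_STANCE, GaitPhase.PRE_SWING}


def two_phase_label(phase):
    """Collapse a seven-phase label into the two-phase (stance/swing) model."""
    return "stance" if phase in STANCE else "swing"


def cgp_flag(phase):
    """Binary current-gait-phase flag: 0 = stance, 1 = swing (hypothetical encoding)."""
    return 0 if phase in STANCE else 1


print([two_phase_label(p) for p in GaitPhase])
```

A two-phase flag costs a single extra input value per frame, which is why it suits hardware-limited controllers.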

Previous research found that the LSTM network achieved the best results in time-series prediction [32], especially in human activity recognition and in predicting human gait cycle kinematics. However, we aim to build an embedded prosthesis system that is as reliable as possible and does not depend by any means, wired or wireless, on an external computing resource (PCs, servers, etc.), by implementing an LSTM network on an embedded microcontroller to detect human activity.

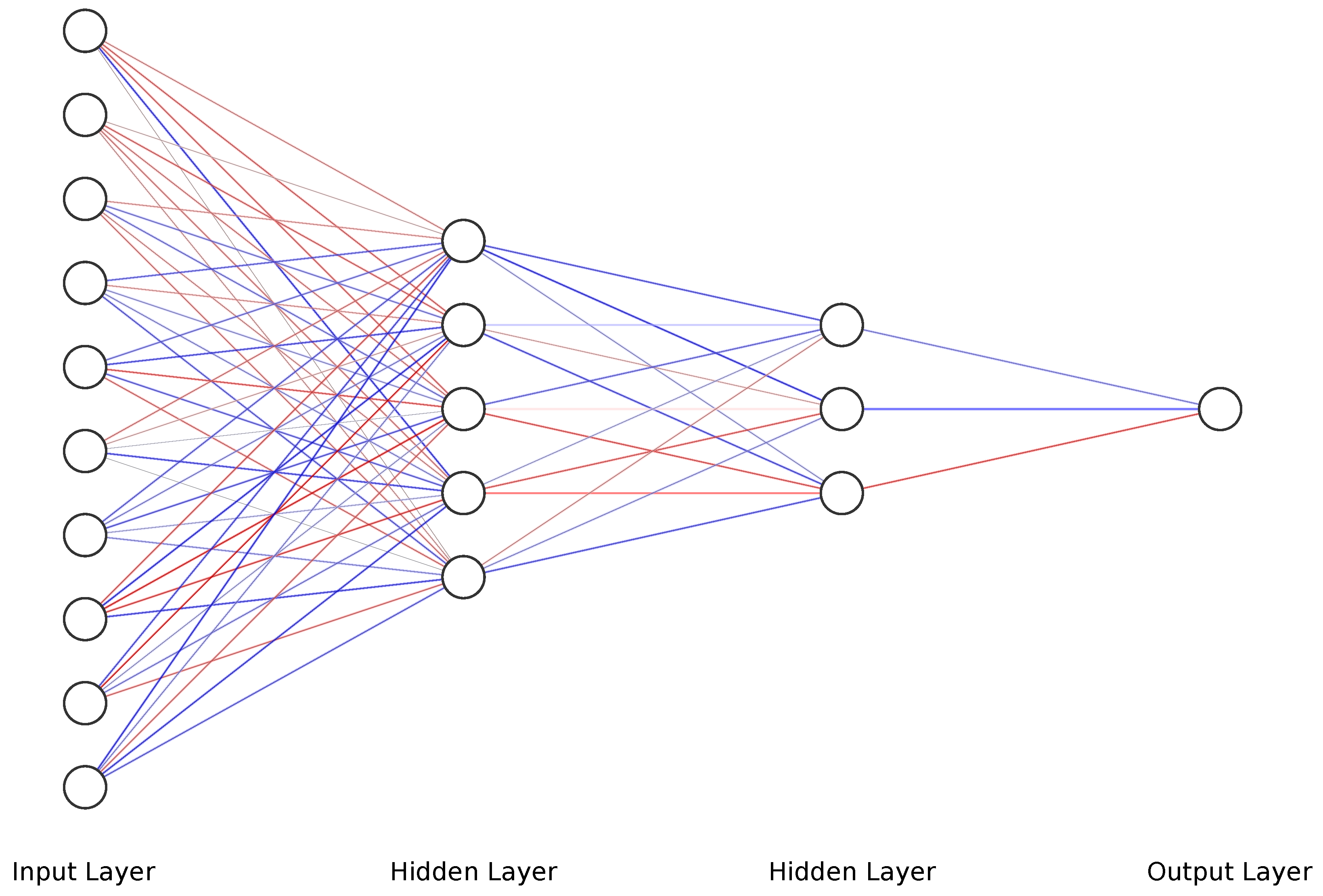

This study aims to develop deep multilayer perceptron (MLP) and convolutional neural network (CNN) models to predict future frames of the gait trajectory. Furthermore, we investigate whether MLP and CNN can handle sequential data with an accuracy comparable to that of a long short-term memory (LSTM) neural network. The approach uses the current and previous sensor readings to predict the future gait trajectory window, and it takes new readings for each prediction, rather than feeding predictions back as inputs, to avoid the accumulation of error. We also compare the regression (forecasting) accuracy of both network configurations while maintaining an acceptable computational time. Finally, we study whether a low-cost, low-power microcontroller can implement both models with a good inference time on the targeted hardware.
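Predicting a future window from current and previous readings amounts to slicing the recordings into (past input window, future target window) pairs. The sketch below is a minimal illustration, assuming hypothetical window lengths of 30 past and 10 future frames and separate input (shank IMU) and target (foot angular velocity) arrays; the function name and shapes are assumptions, not the study's code.

```python
import numpy as np


def make_windows(inputs, targets, n_past, n_future):
    """Build (input window, future target window) pairs from synchronized time series.

    inputs:  shape (T, C_in), e.g. shank IMU channels
    targets: shape (T, C_out), e.g. foot angular velocity
    Sample i uses inputs[i : i + n_past] to predict
    targets[i + n_past : i + n_past + n_future].
    """
    inputs = np.asarray(inputs, dtype=float)
    targets = np.asarray(targets, dtype=float)
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same length")
    n = len(inputs) - n_past - n_future + 1
    if n <= 0:
        raise ValueError("series too short for the requested window sizes")
    X = np.stack([inputs[i:i + n_past] for i in range(n)])
    Y = np.stack([targets[i + n_past:i + n_past + n_future] for i in range(n)])
    return X, Y


# 100 frames of 6 shank channels, predicting 3 foot channels 10 frames ahead from 30 past frames.
rng = np.random.default_rng(0)
X, Y = make_windows(rng.normal(size=(100, 6)), rng.normal(size=(100, 3)), n_past=30, n_future=10)
print(X.shape, Y.shape)  # (61, 30, 6) (61, 10, 3)
```

Because every input window contains measured readings only, a prediction error is never fed back as an input to the next prediction, which is how error accumulation is avoided.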

This article is organized as follows. Section 2 reviews previous related research for each part of this study. Section 3 presents the materials and methods: the dataset and its properties, data processing, the developed machine learning algorithms, and the methods used to evaluate prediction performance. Section 4 presents the results of the trained models and compares the methods used. Section 5 discusses the results. Finally, Section 6 concludes the study.

2. Related Work

The first area relevant to this study is capturing a person's motion parameters. Ahmedi et al. [33] showed that a 3D gait kinematic model can be reconstructed with efficient computation using seven IMUs and foot force sensors. Furthermore, Hu et al. [34] proposed a method to estimate the lower-limb joint angles (i.e., hip, knee, and ankle angles) using a minimal set of only four IMUs. Mishra et al. [35] and Yin et al. [36] used surface EMG signals recorded from different muscles during gait, together with measured walking speed, to develop EMG-driven speed control for exoskeleton motion.

The second area is predicting future windows of gait kinematic trajectories. Binbin Su et al. [37] used an LSTM neural network to predict lower-body segment trajectories (angular velocity of the thigh, shank, and foot) up to 200 ms (10 time frames) into the future from past observations of up to 600 ms (30 time frames); for the inter-subject foot trajectory, they achieved a z-score normalized angular velocity error of 0.005, a mean absolute error (MAE) of 0.299, a root mean squared error (RMSE) of 0.487, and a coefficient of determination (R²) of 0.91. Furthermore, Zaroug et al. [38] used different LSTM architectures (vanilla, stacked, bidirectional, and autoencoder) to predict lower-limb kinematic parameters (angular velocity and linear acceleration of the thigh, shank, and foot) up to 100 ms (five time frames) ahead, and reported that the autoencoder LSTM architecture gave the best result, with a normalized angular velocity MAE of 0.276 and RMSE of 0.419 for the inter-subject foot trajectory. Zaroug et al. [39] also used an autoencoder LSTM architecture to predict lower-body segment trajectories (angular velocity of the thigh and shank) up to five samples ahead from a previous window of 25 samples; for the inter-subject thigh trajectory, they attained a normalized angular velocity MAE of 0.28, a mean squared error (MSE) of 0.001, and a correlation coefficient (CC) between the predicted and actual angular velocity of 0.99, and for the inter-subject shank trajectory an MAE of 0.24, an MSE of 0.001, and a CC of 0.99. Additionally, Sun et al. [12] showed that a feed-forward neural network can handle time-sequence data and predict IMU gait kinematic parameters (acceleration and angular velocity) of one body segment from IMU readings on other segments, obtaining a CC of 0.89 for the shank angular velocity and a CC of 0.8 for the thigh angular velocity, both predicted from the foot IMU readings alone with a window of five time frames.
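The studies above report different metrics (MAE, MSE, RMSE, R², CC), sometimes on z-score normalized data. The sketch below shows how these metrics relate, assuming flattened arrays of actual and predicted values; the function names are illustrative, and computing the normalization statistics on the training subjects only is an assumption, not a detail confirmed for each cited study.

```python
import numpy as np


def zscore(x, mean, std):
    """Z-score normalization; mean/std are assumed to come from the training subjects."""
    return (np.asarray(x, dtype=float) - mean) / std


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE, coefficient of determination (R2) and Pearson correlation (CC)."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "MAE": float(np.mean(np.abs(err))),
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "R2": 1.0 - ss_res / ss_tot,
        "CC": float(np.corrcoef(y_true, y_pred)[0, 1]),
    }


m = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
print(m)
```

CC only measures linearity: a prediction that is offset or scaled relative to the truth can still reach CC = 1, whereas R² and RMSE penalize such bias. This is why both error and correlation metrics are usually reported together.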

The third area involves studying the effect of the current gait phase on the accuracy of the trained models. Most gait analysis research uses a four-phase model that partitions the gait into: (a) initial foot contact (IC) with the ground, or Heel Strike (HS); (b) the loading response phase, or Flat Foot (FF); (c) heel lifting, or Heel-Off (HO); and (d) the initial Swing Phase (SP), or Toe-Off (TO). However, Taborri et al. [40] showed that a two-phase model provides sufficient information to control the knee module of an active orthosis; the gait was therefore segmented into (a) the Swing Phase (SW) and (b) the Stance Phase (ST), avoiding extra computational complexity given the hardware limitations. Cho et al. [41] compared two gait phase segmentation methods, one camera-based and one IMU-based, while observing the body joints in the sagittal, frontal, and transverse planes, and showed that the IMU-based system is reliable for gait phase segmentation. In the study mentioned above, Binbin Su et al. [37] also used an LSTM architecture to detect five phases of the gait cycle (loading response, mid-stance, terminal stance, pre-swing, and swing) up to 200 ms (10 time frames) ahead from past observations of up to 600 ms (30 time frames), achieving inter-subject detection accuracies of 79% for loading response, 87% for mid-stance, 77% for terminal stance, 85% for pre-swing, and 95% for swing. CNNs have also demonstrated their potential in tasks such as automatically recognizing human activities from raw accelerometer data recorded by fitness equipment and smartphones. Yang et al. [42] used a deep convolutional neural network (DCNN) to classify human activities from time-series readings and stated two benefits of DCNNs in this application: the CNN extracts features automatically from the raw input data, and a single CNN model performs both feature extraction and classification, which can be less hardware-intensive than splitting the two tasks across separate models. Furthermore, Lee et al. [14] used a smart insole with on-board sensors (pressure sensor, accelerometer, and gyroscope) and a DCNN architecture to detect seven gait phases for seven gait types (walking, fast walking, running, stair climbing, stair descending, hill climbing, and hill descending), and showed that the best classification rate, 94% in total, was achieved by combining all three sensors with input data from a five-step window.
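The automatic feature extraction attributed to CNNs comes from 1D convolution: each filter slides along the time axis of a raw sensor window and responds to a local temporal pattern. The sketch below is a generic NumPy illustration of one such layer followed by ReLU and global average pooling. It is not the architecture of Yang et al. [42] or Lee et al. [14], and its filters are hand-set here, whereas a trained CNN learns them.

```python
import numpy as np


def conv1d_valid(x, kernels, bias):
    """Single 1D convolution layer with 'valid' padding and stride 1.

    x:       shape (T, C_in), a window of sensor readings
    kernels: shape (K, C_in, C_out), one K-sample filter per output channel
    bias:    shape (C_out,)
    Returns shape (T - K + 1, C_out). Like most deep-learning libraries, this
    computes a cross-correlation (the kernel is not flipped).
    """
    T, c_in = x.shape
    K, k_in, c_out = kernels.shape
    if k_in != c_in:
        raise ValueError("kernel input channels must match x")
    out = np.empty((T - K + 1, c_out))
    for t in range(T - K + 1):
        # Contract the K-sample patch with every filter over (time, channel).
        out[t] = np.tensordot(x[t:t + K], kernels, axes=([0, 1], [0, 1])) + bias
    return out


def extract_features(x, kernels, bias):
    """Conv -> ReLU -> global average pooling: a fixed-length feature vector."""
    return np.maximum(conv1d_valid(x, kernels, bias), 0.0).mean(axis=0)


# A hand-made "difference" filter responds to how fast a channel changes.
ramp = np.arange(5.0).reshape(5, 1)  # a signal rising by 1 per frame
diff = np.array([-1.0, 0.0, 1.0]).reshape(3, 1, 1)
print(conv1d_valid(ramp, diff, np.zeros(1)).ravel())  # [2. 2. 2.]
```

Because the same filter weights are reused at every time step, a convolution layer extracts features with few parameters, and the classification layers that follow operate on those features inside the same model.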

Finally, the main aim of this study is to validate the capability of a microcontroller to predict the future gait trajectory within a viable time. Alessandrini et al. [10] achieved an accuracy and reached

by applying a trained biLSTM network on an STM32L476RG microcontroller while monitoring the load of the neural network on the microcontroller's hardware. They also found that the network could need more than

of the available RAM, and that the inference time could reach 150 ms at the best accuracy.

5. Discussion

In this study, both network configurations were tested on predicting the angular velocity of the foot from the angular velocity and linear acceleration measured by IMUs mounted on the shank, plus an optional current gait phase (CGP) input indicating whether the current reading belongs to the swing or the stance phase of the gait cycle. Previous studies used neural networks to predict the upcoming time frames; in this study, the algorithms predict a trajectory of 10 time frames, or nearly 200 ms ahead, which represents almost of a full stride. The data, recorded during daily walking at different speeds, were collected from five subjects; four subjects were used for training and the fifth for testing.
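The two data-handling choices described above, an optional CGP input and a subject-wise train/test split, can be sketched as follows. This is a minimal illustration assuming six shank channels (3-axis gyroscope plus 3-axis accelerometer), a hypothetical 0 = stance / 1 = swing encoding, and hypothetical subject IDs; none of these names come from the study's code.

```python
import numpy as np


def add_cgp_channel(imu, cgp):
    """Append the binary current-gait-phase (CGP) flag as an extra input channel.

    imu: shape (T, C), shank angular velocity and linear acceleration
    cgp: shape (T,), 0 = stance, 1 = swing (hypothetical encoding)
    Returns shape (T, C + 1).
    """
    imu = np.asarray(imu, dtype=float)
    cgp = np.asarray(cgp, dtype=float).reshape(-1, 1)
    if len(imu) != len(cgp):
        raise ValueError("imu and cgp must have the same number of frames")
    return np.hstack([imu, cgp])


def inter_subject_split(recordings, test_subject):
    """Hold out one subject entirely, so no test frames leak into training."""
    train = {s: r for s, r in recordings.items() if s != test_subject}
    return train, recordings[test_subject]


# Five hypothetical subjects with 200 frames of 6 shank channels each.
rng = np.random.default_rng(1)
recordings = {f"S{i}": rng.normal(size=(200, 6)) for i in range(1, 6)}
train, test = inter_subject_split(recordings, "S5")
with_cgp = add_cgp_channel(test, rng.integers(0, 2, size=200))
print(sorted(train), with_cgp.shape)  # ['S1', 'S2', 'S3', 'S4'] (200, 7)
```

Splitting by subject rather than by frame is what makes the reported results inter-subject: the test subject's gait is never seen during training.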

The models are compared with the findings of other publications in terms of best accuracy, while the network size is kept small because of the limited hardware capabilities. The trained models achieved good results even after the networks were reduced in size. For the foot trajectory 200 ms ahead, the trained MLP and CNN achieved root mean squared errors of 0.298 and 0.245 deg/s, respectively, without the CGP, and 0.226 and 0.217 deg/s with the CGP, while Binbin Su et al. [37] reported an RMSE difference of +0.27 deg/s for inter-subject prediction 200 ms ahead using an LSTM network, and Zaroug et al. [38] reported a difference of +0.202 ± 0.25 deg/s using the ED-LSTM configuration. Another parameter considered is the linearity between the actual and predicted values. The trained MLP and CNN achieved correlation coefficients (CC) of 0.945 and 0.973, respectively, and 0.979 each with the CGP, while Binbin Su et al. reported a CC difference of −0.069 for an inter-subject test and Zaroug et al. a CC difference of −0.089 ± 0.14; see Table 6 for the other publications' results.

The framework of this research project is the development of an embedded active prosthesis system. It is therefore more logical to use built-in IMUs for both the training and deployment stages than to rely on a fixed image capture system to record gait motion. Regarding the neural networks, the MLP has a low computational load relative to the other networks while achieving reasonable accuracy in predicting kinematic gait trajectory data. However, its accuracy depends on the available inputs: it performed much worse than the CNN when less input information was provided, such as without the CGP. In the CNN, on the other hand, the convolutional layers could extract the features of the sequential data even without additional information about the provided readings. As a result, adding an input such as the CGP did not affect the accuracy of the CNN (CC from 0.973 to 0.979) as significantly as that of the MLP (CC from 0.945 to 0.979), as seen in Table 6. However, while the accuracy of the CNN did not increase significantly, its load on the MC surged about five times, reaching 142 ms, whereas for the MLP, even with the accuracy increase, the load on the MC stayed at 42 ms, which is much faster than the CNN.
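One way to see why a CNN can be slower on a microcontroller than an MLP of similar size is to count multiply-accumulate operations (MACs): a dense layer uses each weight once per inference, while a convolution reuses its filters at every time step. The sketch below compares two hypothetical networks on a 30-frame, 6-channel window; the layer sizes are illustrative assumptions, not the study's architectures, and MACs are only a rough proxy that does not reproduce the measured 42 ms and 142 ms inference times.

```python
def dense_cost(n_in, n_out):
    """Parameters and multiply-accumulates (MACs) of a fully connected layer."""
    return n_in * n_out + n_out, n_in * n_out


def conv1d_cost(t_in, c_in, c_out, k):
    """Parameters, MACs and output length of a 1D conv layer ('valid', stride 1)."""
    t_out = t_in - k + 1
    params = k * c_in * c_out + c_out
    macs = t_out * k * c_in * c_out  # the same filters are re-applied at every time step
    return params, macs, t_out


def total(costs):
    """Sum parameters and MACs over a list of per-layer (params, macs) tuples."""
    return sum(p for p, _ in costs), sum(m for _, m in costs)


# Hypothetical MLP: 30 frames x 6 channels flattened -> 64 -> 64 -> 10 outputs.
mlp = [dense_cost(30 * 6, 64), dense_cost(64, 64), dense_cost(64, 10)]

# Hypothetical CNN: two 32-filter conv layers (k = 3) on the same window -> dense 10.
p1, m1, t1 = conv1d_cost(30, 6, 32, 3)
p2, m2, t2 = conv1d_cost(t1, 32, 32, 3)
cnn = [(p1, m1), (p2, m2), dense_cost(t2 * 32, 10)]

print("MLP params, MACs:", total(mlp))  # (16394, 16256)
print("CNN params, MACs:", total(cnn))  # (12042, 104320)
```

In this example the CNN has fewer parameters yet needs roughly six times as many MACs per prediction, illustrating how a smaller model in memory can still be the more expensive one to run.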

As for the limitations of the current study, training and testing were limited to five subjects because of gyroscope data corruption, which is not enough to generalize the trained models. In future work, a dataset with more subjects and additional sensor readings, such as force sensors, will be used. To achieve better accuracy while maintaining the size of the network, the following shortcomings of the current approach, visible in Figures 7, 8, 9, 10, 11, 12 and 13, have to be solved:

A phase shift during the swing phase, which is more obvious in the CNN both with and without the CGP.

An initial jump in the prediction, especially in the CNN.