Learning to Ascend Stairs and Ramps: Deep Reinforcement Learning for a Physics-Based Human Musculoskeletal Model

Abstract

1. Introduction

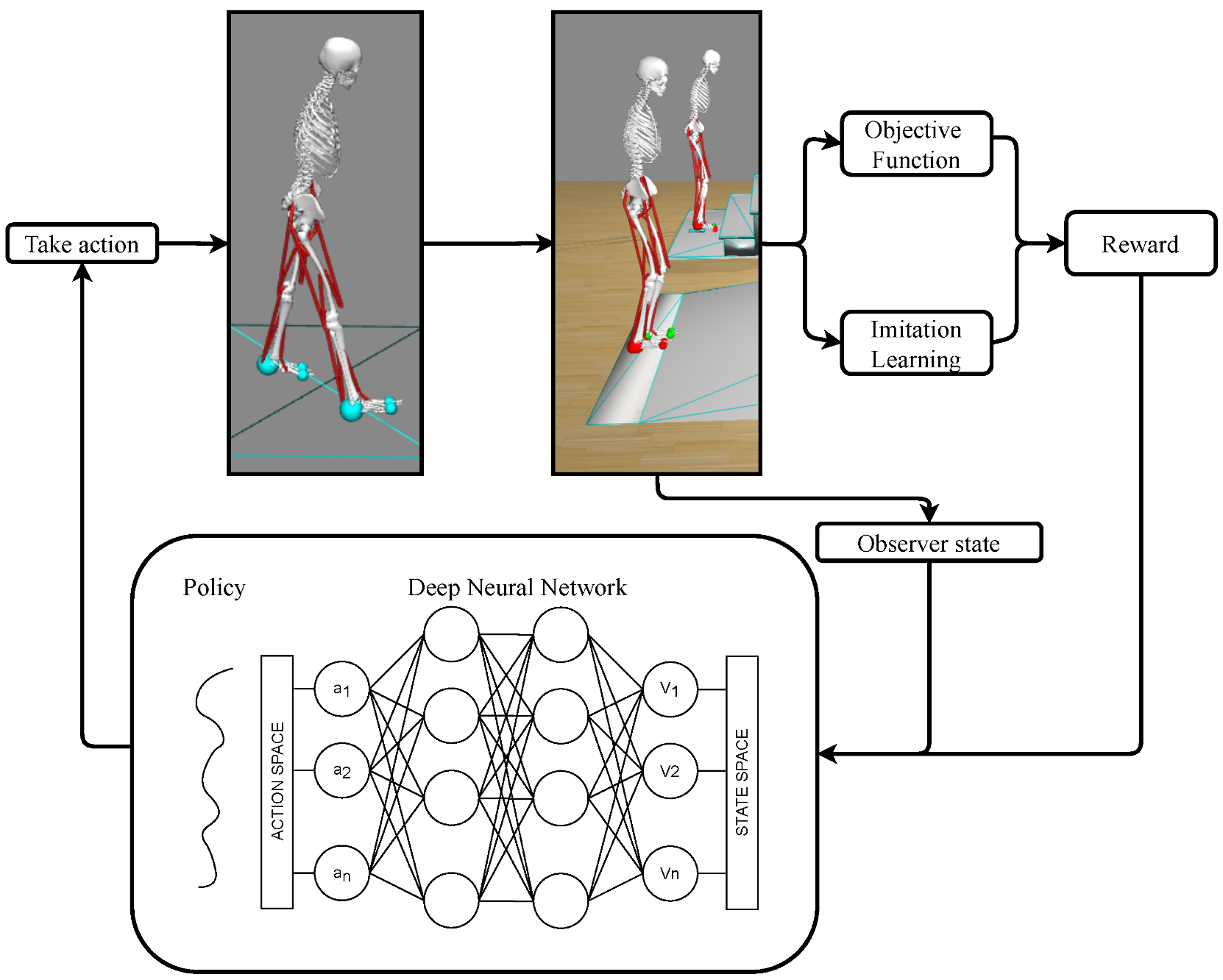

- To show that DRL, based on PPO in combination with imitation learning, can successfully teach a physics-based human musculoskeletal model in OpenSim to ascend stairs and ramps, with the future goal of using such an architecture for the control of lower-limb prostheses.

- To enable the study of more advanced environments in OpenSim, beyond level ground, by implementing the elastic foundation model for the contact forces and by introducing meshes for different objects.

2. Materials

2.1. Musculoskeletal Model

2.1.1. Feet

2.1.2. Design of the Objects: Stairs and Ramp

2.2. Simulation Settings

2.3. Training Dataset

3. Method

3.1. Deep Neural Network

3.2. The Learning Algorithm: PPO

3.3. Reward Function

3.4. Implementation

4. Results and Discussion

4.1. Stairs Ascent

4.2. Ramp Ascent

4.3. Evaluation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Section | Description | Modification |
|---|---|---|
| BodySet | Body geometry | Addition of objects |
| ConstraintSet | List of constraints | - |
| ForceSet | Acting forces | Elastic foundation |
| MarkerSet | List of markers | - |
| ContactGeometrySet | Contact geometry | Spherical feet meshes |
| ControllerSet | Auxiliary controllers | - |
| ComponentSet | Group geometry | - |
| ProbeSet | Auxiliary probes | - |

| Leg | Coefficient | Value |
|---|---|---|
| Right leg | appliesForce | true |
| | geometry | [stair_c, ramp_c] r_heel r_toe1 r_toe2 |
| | dissipation [s/m] | 5 |
| | stiffness [MPa/m] | 50 |
| | static_friction | 0.9 |
| | dynamic_friction | 0.9 |
| | viscous_friction | 0.9 |
| | transition_velocity | 0.1 |
| Left leg | appliesForce | true |
| | geometry | [stair_c, ramp_c] l_heel l_toe1 l_toe2 |
| | dissipation [s/m] | 5 |
| | stiffness [MPa/m] | 50 |
| | static_friction | 0.9 |
| | dynamic_friction | 0.9 |
| | viscous_friction | 0.9 |
| | transition_velocity | 0.1 |
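The contact coefficients listed above are identical for both legs and differ only in the foot geometry names. The following minimal sketch collects them in one place and converts the stiffness to SI units. The class `ContactParameters` and the helper `make_leg_contact` are hypothetical names introduced for illustration and are not part of OpenSim or of the authors' code. Reading `[stair_c, ramp_c]` as "one of the two terrain meshes, depending on the task" is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContactParameters:
    """Elastic foundation coefficients for one leg (values from the table)."""
    geometry: tuple
    applies_force: bool = True
    dissipation: float = 5.0            # s/m
    stiffness_mpa_per_m: float = 50.0   # MPa/m
    static_friction: float = 0.9
    dynamic_friction: float = 0.9
    viscous_friction: float = 0.9
    transition_velocity: float = 0.1

    @property
    def stiffness_pa_per_m(self) -> float:
        # 1 MPa = 1e6 Pa, so 50 MPa/m corresponds to 5e7 Pa/m.
        return self.stiffness_mpa_per_m * 1e6


def make_leg_contact(side: str, terrain: str) -> ContactParameters:
    """Build the parameter set for one leg.

    side: "r" or "l"; terrain: "stair_c" or "ramp_c" (assumed to be chosen per task).
    """
    if side not in ("r", "l"):
        raise ValueError("side must be 'r' or 'l'")
    if terrain not in ("stair_c", "ramp_c"):
        raise ValueError("terrain must be 'stair_c' or 'ramp_c'")
    feet = (f"{side}_heel", f"{side}_toe1", f"{side}_toe2")
    return ContactParameters(geometry=(terrain,) + feet)


if __name__ == "__main__":
    right = make_leg_contact("r", "stair_c")
    print(right.geometry, right.stiffness_pa_per_m)
```

Keeping the shared coefficients as defaults makes it harder for the two legs to drift apart when a value is tuned.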

| State variables | n. of Variables |
|---|---|
| Positions/Rotations of body segments | 13 + 13 |
| Linear/Rotational velocities of the body segments | 13 + 13 |
| Linear/Rotational accelerations of the body segments | 13 + 13 |
| Positions/Velocities/Accelerations of the joints | 17 + 17 + 17 |
| Muscle forces | 72 |
| Miscellaneous forces | 13 |
| Total size of the state vector | 214 |
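As a quick consistency check on the counts above, the components can be summed to confirm the stated size of the state vector. This is a minimal sketch: the name `STATE_COMPONENTS` and its keys are illustrative labels chosen here, not identifiers from the authors' implementation.

```python
# Component counts copied from the table; the key names are illustrative only.
STATE_COMPONENTS = {
    "body_positions": 13,
    "body_rotations": 13,
    "body_linear_velocities": 13,
    "body_rotational_velocities": 13,
    "body_linear_accelerations": 13,
    "body_rotational_accelerations": 13,
    "joint_positions": 17,
    "joint_velocities": 17,
    "joint_accelerations": 17,
    "muscle_forces": 72,
    "miscellaneous_forces": 13,
}


def state_vector_size(components):
    """Return the total number of scalar entries in the state vector."""
    return sum(components.values())


if __name__ == "__main__":
    print(state_vector_size(STATE_COMPONENTS))  # 214
```

The body-segment terms contribute 78 entries, the joint terms 51, and the force terms 85, which together give the 214 reported in the table.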

| Joint | Stairs Ascent | Ramp Ascent |
|---|---|---|
| Left knee | 0.92 | 0.84 |
| Right knee | 0.98 | 0.61 |
| Left ankle | 0.92 | 0.36 |
| Right ankle | 0.57 | 0.52 |
| Mean | 0.82 | 0.58 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Adriaenssens, A.J.C.; Raveendranathan, V.; Carloni, R. Learning to Ascend Stairs and Ramps: Deep Reinforcement Learning for a Physics-Based Human Musculoskeletal Model. Sensors 2022, 22, 8479. https://doi.org/10.3390/s22218479
