2. Related Work

Our research expands on three main research areas: (1) nonrobotic telepresence systems, (2) robotic telepresence systems, and (3) telexistence systems. We explain each of these below.

A variety of researchers have explored nonrobotic telepresence systems. These systems mainly communicate primary modalities such as visual and auditory information. “Livemask” [27] is a facial screen that stands on a table like a monitor and mimics a remote user’s face. The screen is considered a surrogate telepresence system that shows faces on a local screen based on a remote user’s 3D face data. Similarly, “ChameleonMask” [28] is a wearable screen worn by a surrogate user, where the screen shows a remote user’s face. The surrogate wearing the screen responds to requests from the remote user displayed on the wearable screen. “Livesphere” [29] is a head-mounted device, worn by a remote user, with six cameras on top of it to capture the surrounding area. The local user uses an HMD to see spherical images and control the movement via the HMD’s head rotation data.

Robotic telepresence systems extend the nonrobotic telepresence systems by providing higher movement flexibility and interactivity within the remote environment. TEROOS [30] is a wearable, small telepresence humanoid robot mounted on the user’s shoulder and controlled remotely by another user. This wearable robot can rotate 92 degrees horizontally and 110 degrees vertically, thereby enabling the controlling user to inspect remote environments. “WithYou” [31] is a wearable device with a pan-and-tilt camera and various sensors mounted on the remote user’s chest, allowing local users to control the pan and tilt motions of the camera using an HMD.

Telexistence is a similar concept to telepresence; however, telexistence focuses on the highly realistic sensation of existence in a remote location by engaging multiple sensory modalities [32]. TELESAR [7] is a telexistence robot consisting of a humanoid robot and a cockpit system for controlling the robot. The cockpit consists of various control and feedback devices, including an HMD, speakers, a microphone, a haptic glove, and a motion tracking system. The remote location includes a humanoid robot with a stereoscopic camera, microphone, and speakers. The user controls the humanoid robot using the motion tracking system, with markers on their head, shoulder, arm, hand, and foot. Fusion [16] is a wearable telexistence system, where the local system consists of an HMD (the Oculus CV1) for viewing and for head motion control, as well as hand controllers for controlling the hands of the wearable robot. The remote system consists of a wearable backpack-like robot, comprising a robotic head with stereoscopic vision, speakers, microphones, two robotic arms (six DoFs), and five-finger robotic hands. Al-Remaihi et al. [33] investigated a telexistence robotic system for remote physical object manipulation. The system consists of a robot arm with a gripper end-effector that can be remotely operated using an HMD and hand exoskeleton. The user at a local site controls the robot using a Vive Tracker [34] mounted on the hand, while the HMD is used to monitor the remote environment. A 3D-printed exoskeleton is used both for controlling the robotic gripper and for receiving haptic feedback.

Unlike the majority of telepresence systems which do not exhibit a high sense of immersion, body ownership, or physical interactions, Piton advances the state-of-the-art development of telexistence systems. Piton is the first to utilize a wearable robot with a snake form-factor, which provides higher flexibility in movement to explore remote environments beyond previous systems. Piton’s flexibility allows for novel interactions that have not been explored before, such as inspecting and assisting in industrial or daily application contexts.

3. Design Concept

Our main objective is to design a snake-shaped, wearable telexistence robot for sharing travel and work-related experiences with remote users. In order to develop a telexistence system for these purposes, we set several main design considerations and show how we satisfy them in Piton, in a similar fashion to previously developed robots [9,15,25,35,36]. Accordingly, our concept design has three primary design considerations: (1) telexistence, (2) flexible head movement, and (3) wearability. Below, we discuss how we aim to achieve each design consideration:

Telexistence: A telexistence system requires low-latency stereoscopic visual feedback [37], low-latency auditory communication [38], and physical interactions with the remote environment [39].

Flexible Head Movement: The robot should have flexible head movements with high DoFs and redundancy, similar to snake-shaped robots [25]. Such high flexibility enables users to have large operational workspaces, which in turn enable efficient inspection of remote environments and objects.

Wearability: Our system should be designed as a wearable system so remote users can easily wear it and go to various locations. Wearable systems can also provide numerous interaction potentials to assist remote users in various contexts that are otherwise difficult to access through mobile robots (e.g., going up stairs or in narrow corridors).

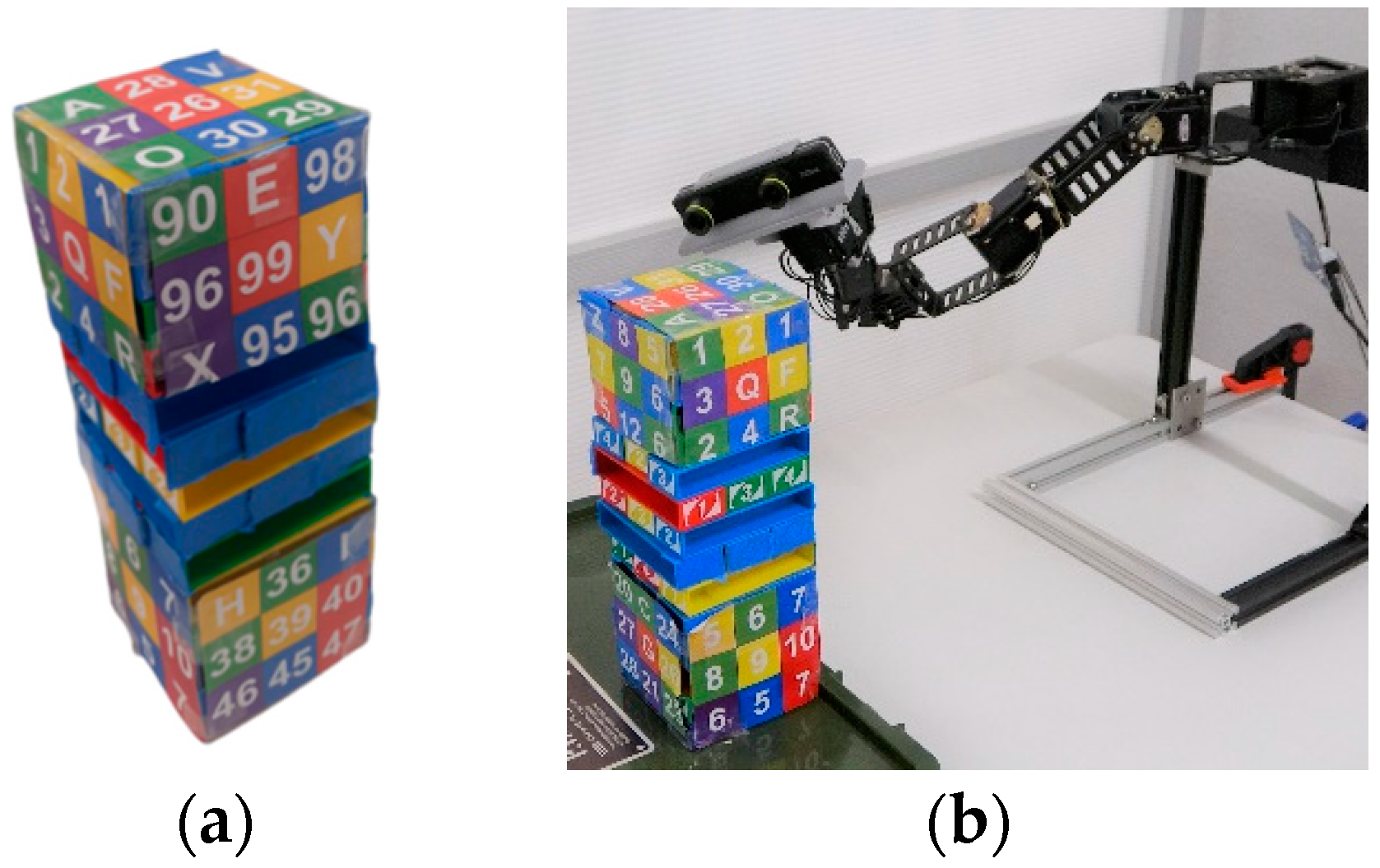

We implemented the design considerations of Piton in a wearable robot system, comprising a local site (Figure 1a), where a user controls and communicates through the remote robot, and a remote site (Figure 1b), where a surrogate user wears the robot. The supplementary materials (Video S1) show how the local and remote systems are implemented. We explain how we implemented the design considerations in our system as follows:

Telexistence: Our system uses a stereo camera system [40] embedded on the end-effector of the robot; auditory communication through a microphone and speaker on both the local and remote systems (explained in Section 5.2.2 and Section 5.3.2); and an HMD on the local system to enable an immersive experience (detailed in Section 5.2.1).

Flexible Head Movement: The telexistence robot is snake-shaped with eight interlinked servomotors (explained in Section 5.3.1). Snake-type systems enable a high degree of redundancy, which in turn allows the robot to be situated in a wide variety of postures using various movement trajectories.

Wearability: We developed a lightweight robot system capable of being worn on various locations around the body, such as the shoulder or waist. The robot is mounted on a rack that is worn as a backpack, which enables users to conveniently put on or take off the robot (explained in Section 5.3.1).

The term “wearable robot” is mostly associated with traditional robotic systems, such as exoskeletons or rehabilitation robots [41]. However, novel forms of robots that have emerged in recent years are also identified as wearable robots. Such emerging wearable robots are designed to be continuously worn and used while worn, meeting the same presumptions of wearability as wearable devices and robots [42]. Some examples include supernumerary robotic limbs (SRLs) [9,35,43], wearable companion robots [44], haptic feedback robots [25], and telexistence wearable robots [16]. Accordingly, Piton is the first wearable snake-shaped telexistence robot that is designed to be ergonomically worn like a backpack and to be used while being worn.

4. Piton’s Novel Interactions and Contexts of Use

Previous works show that the malleability of the snake form-factor enables robots to be flexible enough to be used in a large variety of usage contexts [9,10,25,45]. Similarly, Piton can be used in different unexplored interaction contexts, whether for daily use or industrial contexts. Piton can be used in daily usage contexts to enable companionship with a remote user [10]. For example, Piton can be used for interacting with the surrogate user (as shown in Figure 2a) or the remote environment, such as checking merchandise or enjoying activities with the surrogate (as shown in Figure 2b).

Piton can also be used within social contexts (e.g., social gatherings or parties), where it can engage in interactions with various users at the remote site (Figure 2c). Since Piton is a wearable robot, it can easily be taken to various locations, such as outdoors for hiking, shopping, or going to museums, which paves the way for various potential deployment contexts [46]. Moreover, Piton can also be used for practical use cases, such as guiding users at home to fix equipment or as a replacement for existing teleconferencing solutions [9].

Within industrial contexts, Piton can have a variety of advantages over existing systems since it can move and inspect objects from various directions and distances and can be used for skill-transfer applications (as shown in Figure 3a). For example, Piton can be deployed at a remote industrial location to inspect equipment or instruct workers on how to operate machinery correctly at distant locations (e.g., power plants, offshore oil rigs, different cities, etc.). In such scenarios, an expert controls Piton and carries out inspection tasks for environments or objects with a surrogate user (as shown in Figure 3b). The combination of telexistence, multimodal communication capabilities, and Piton’s large workspace enables it to offer a versatile user experience within remote environments, thereby potentially contributing to saving effort and time through remote work within various application contexts.

Overall, Piton can be used as a test-bed to explore the mentioned application contexts, which can pave the way for future implementations of Piton that focus on specific application domains. For example, within industrial tasks, Piton can be integrated with thermal imaging cameras or additional sensors. For daily usage contexts, Piton can be designed with smaller, slimmer, or unobtrusive form-factors to satisfy the requirements of daily use [9]. The supplementary materials (Video S1) demonstrate a variety of novel potential application contexts of Piton.

6. Piton Control Methods

We implemented three control methods: HM, which uses a head-mounted display (HMD); HH, which uses an HMD and a hand-held tracker; and FM, which uses an HMD and a foot-mounted tracker. The supplementary materials (Video S1) show how each of the control methods is used to control Piton.

Previous research evaluated foot-controlled wearable robots, which utilized a linear control method to map foot movement to the robot’s movement [17,18]. In linear control, the robot’s workspace is measured and directly converted to a movement range for the user’s leg. Therefore, each point in the foot movement workspace is directly and linearly linked to a point in the robot’s physical workspace [17,18]. Despite the simplicity of linear control, an inevitable drawback of such a method is that it does not compensate for individual physiological differences among users’ bodies. For example, short users may not be able to extend their leg far enough to cover the entire workspace of the robot while seated.

In contrast to linear control, scalar control maps a user-defined workspace to the robot’s physical workspace, where the user-defined workspace does not necessarily match the dimensions of the robot’s physical workspace. Linear calibration and controls are widely used in telexistence systems [7,20], as they can easily be applied to map a user’s head rotations to those of the telexistence robot [7,17,20]. In scalar calibration, each point in the user-defined workspace is mapped to a corresponding, scaled point in the robot’s workspace. The maximum and minimum movements can thus be scaled to a user’s own body movement ranges. More generally, we can scale the movement ranges of the user’s hand or foot to compensate for users’ physiological differences. Accordingly, we utilized linear mapping for controlling the robot’s head rotations in all control methods, whereas we used scalar mapping for controlling positional movements (determined by the head, hand, or foot movements in each of the control methods). To ensure proper execution of the controls, each user conducted an individual calibration of the intended control method before usage.
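The difference between the two mappings can be illustrated in one dimension. The following is a minimal sketch, not the authors’ implementation: it assumes that linear control passes the tracked position through one-to-one (clamped to the robot’s range) and that scalar control rescales a user-calibrated range onto the robot’s range. The function names and the millimetre values are hypothetical.

```python
def linear_map(user_pos: float, robot_min: float, robot_max: float) -> float:
    """Linear control (assumed 1:1): the tracked position is used directly,
    clamped to the robot's physical range."""
    return min(max(user_pos, robot_min), robot_max)


def scalar_map(user_pos: float, user_min: float, user_max: float,
               robot_min: float, robot_max: float) -> float:
    """Scalar control: rescale the user's calibrated range onto the robot's range."""
    if user_max == user_min:
        raise ValueError("calibrated range must not be empty")
    # Normalize to [0, 1] within the user's own range, then clamp.
    t = (user_pos - user_min) / (user_max - user_min)
    t = min(max(t, 0.0), 1.0)
    return robot_min + t * (robot_max - robot_min)


# Hypothetical numbers: the robot spans 0..600 mm, but the user reaches only 0..400 mm.
reach_limit = 400.0
linear_result = linear_map(reach_limit, 0.0, 600.0)               # user cannot reach 600 mm
scalar_result = scalar_map(reach_limit, 0.0, 400.0, 0.0, 600.0)   # full reach maps to 600 mm
```

With these illustrative values, a user at full reach covers only part of the robot’s range under the linear mapping, while the scalar mapping stretches the same reach over the whole range.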

6.1. Calibration Procedures

Our calibration process should be conducted once for each user prior to using each of the control methods. The calibration is required as it ensures the produced robot movements cope with each user’s unique physiological movement ranges of their head, hand, and foot. Overall, we used the HMD for rotational movement in HM, HH, and FM control methods. The calibration process for rotational movement starts by instructing users to sit straight while wearing the HMD. Linear calibration of the rotational movement is initiated based on the first idle pose of the user’s head, which is set to zero in all rotational axes. Each rotational axis can enable a rotation of 140 degrees (70 degrees for each direction), corresponding to the user’s movement. Such movements directly control Piton’s head rotations within the same movement ranges, thereby directly controlling the three servomotor angles holding the ZED Mini Camera (pitch, yaw, and tilt).
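The rotational calibration described above can be sketched as follows. This is an illustrative sketch under stated assumptions: the idle head pose is stored as the zero reference, later head angles are taken relative to it, and each axis is clamped to 70 degrees in each direction. The function names, the angle-wrapping step, and the tuple ordering are hypothetical and not taken from the paper.

```python
from typing import Sequence, Tuple

ROTATION_LIMIT_DEG = 70.0  # 140 degrees per axis, 70 degrees in each direction


def wrap_deg(angle: float) -> float:
    """Wrap an angle to the interval [-180, 180) degrees."""
    return (angle + 180.0) % 360.0 - 180.0


def head_to_servo_targets(current_deg: Sequence[float],
                          idle_deg: Sequence[float]) -> Tuple[float, ...]:
    """Map HMD angles to servo targets relative to the calibrated idle pose.

    Both arguments hold one angle per rotational axis, in the same order.
    """
    targets = []
    for current, idle in zip(current_deg, idle_deg):
        relative = wrap_deg(current - idle)  # idle pose becomes zero
        clamped = max(-ROTATION_LIMIT_DEG, min(ROTATION_LIMIT_DEG, relative))
        targets.append(clamped)
    return tuple(targets)


# Hypothetical idle pose captured at calibration time, then a later head pose.
idle_pose = (10.0, -5.0, 0.0)
servo_targets = head_to_servo_targets((30.0, 100.0, -90.0), idle_pose)
```

Because the mapping is one-to-one within the limits, the same three values could be sent directly as target angles for the three servomotors that hold the camera.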

While rotational movements are calibrated in the same way across all control methods, the calibration procedures for positional movements differ in each control method. The positional-movement calibration procedures are explained in the next subsections.

6.1.1. HM

Positional movement in the HM is calibrated by instructing users to move their head to the lowest point near their left knee, then to move to the highest point on the top-back-right side (as shown in Figure 11a,b). Users were instructed to stretch their bodies as much as they comfortably could at each of the calibration points. Such calibration movements form a cube with coordinates at the bottom-front-left and top-back-right corners, where each point within such a workspace is scaled to a movement point in the robot’s IK [48] workspace to control the robot’s location.
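The two-corner calibration can be sketched as an axis-aligned box that is rescaled onto the robot’s workspace. This is a hedged sketch only: the `Box` type, the function names, the axis order, and all coordinate values are hypothetical, and the paper does not specify how the IK workspace bounds are represented.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    lo: Vec3  # minimum corner on each axis
    hi: Vec3  # maximum corner on each axis


def box_from_corners(a: Vec3, b: Vec3) -> Box:
    """Build an axis-aligned box from two opposite calibration points."""
    lo = tuple(min(x, y) for x, y in zip(a, b))
    hi = tuple(max(x, y) for x, y in zip(a, b))
    return Box(lo, hi)


def map_to_robot(p: Vec3, user: Box, robot: Box) -> Vec3:
    """Scale a tracked point from the user's box into the robot's box, per axis."""
    out = []
    for v, u_lo, u_hi, r_lo, r_hi in zip(p, user.lo, user.hi, robot.lo, robot.hi):
        span = u_hi - u_lo
        t = 0.5 if span == 0 else (v - u_lo) / span  # degenerate axis: use the middle
        t = min(max(t, 0.0), 1.0)                    # stay inside the robot workspace
        out.append(r_lo + t * (r_hi - r_lo))
    return tuple(out)


# Hypothetical calibration corners (metres) and robot workspace bounds (millimetres).
user_box = box_from_corners((-0.25, 0.5, 0.5), (0.25, 1.0, 0.0))
robot_box = Box((-100.0, 0.0, -50.0), (100.0, 400.0, 150.0))
ik_target = map_to_robot((0.0, 0.75, 0.25), user_box, robot_box)
```

Clamping keeps the IK target inside the robot’s workspace when the user moves beyond a calibration corner; whether the actual system clamps or extrapolates is not stated in the text.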

6.1.2. HH

The HH control method is calibrated by instructing users to hold the tracker with their dominant hand and move it to the left-bottom-front position, as high as their knee and as far as their hand extends (as shown in Figure 12a). Then, they are instructed to move their hand to the right-top-back position, as high as their shoulder and as close as possible to their shoulder (Figure 12b).

6.1.3. FM

The calibration process starts by instructing users to attach the tracker on top of their shoe using velcro and to sit facing the calibration area, which is designated by the white square on the floor (as shown in Figure 13a,b). The white square is approximately 550 mm × 400 mm; these dimensions were selected only to guide users during the calibration, as the calibration is scalar and adapts to each user’s calibration movements. Next, users are instructed to move their foot to the bottom-right and top-left edges of the white square as far as they comfortably can (as shown in Figure 13a). Vertical positional calibration is conducted by asking users to dorsiflex their foot (bend the toes upwards while keeping the heel on the floor), as the difference between the natural foot posture on the floor and the dorsiflexed foot position (as shown in Figure 13b) determines the vertical workspace of the robot. Therefore, the vertical workspace adapts to each user’s maximum foot dorsiflexion angle. We chose this calibration procedure because we believe that raising the foot to high positions may cause users to become tired. The dorsiflexed pose enables users to produce vertical movement while resting their heel on the floor.

6.2. Using the Control Methods

In all control methods (HM, HH, and FM), users directly rotate Piton’s head by rotating their own heads while wearing the HMD. Positional movements are accomplished differently depending on the selected control method (as in Figure 14). The positional movement information, captured in real time through the HMD (HM) or the trackers (HH, FM), is fed to the coordinate system and set as an IK objective for the IK solver [48]. The received positional movement information is scaled to match the workspace of the robot’s movements. Next, the IK solver finds a solution that matches the set rotational and positional movement objectives in real time. Upon finding an IK solution that satisfies the set objectives, our system extracts the servomotor angle values of the provided solution and sends them to the robot’s control system over the network. Finally, the robot control system directly executes the servomotor angles on Piton through the robot control software.
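The data flow of one control step can be sketched as below. This is only an outline of the sequence described above (scale, solve, extract angles, send), not the authors’ software: the IK solver, the scaling function, and the network sender are passed in as placeholder callables, and the JSON message layout with the key `servo_angles_deg` is an assumption made for illustration.

```python
import json
from typing import Callable, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def control_step(head_rotation_deg: Vec3,
                 tracked_position: Vec3,
                 scale_position: Callable[[Vec3], Vec3],
                 solve_ik: Callable[[dict], Optional[Sequence[float]]],
                 send: Callable[[bytes], None]) -> Optional[Sequence[float]]:
    """Run one control iteration and return the servo angles that were sent."""
    # 1. Scale the tracked position into the robot's workspace.
    target_position = scale_position(tracked_position)
    # 2. Set the rotational and positional objectives for the solver.
    objective = {"position": target_position, "rotation_deg": head_rotation_deg}
    # 3. Ask the (placeholder) solver for servomotor angles.
    servo_angles = solve_ik(objective)
    if servo_angles is None:
        return None  # no solution found: send nothing, robot keeps its pose
    # 4. Send the angles to the robot's control system (hypothetical message format).
    message = json.dumps({"servo_angles_deg": list(servo_angles)})
    send(message.encode("utf-8"))
    return servo_angles


# Usage with stand-ins: a fake solver returning eight angles and a list as the "network".
outbox = []
result = control_step(
    (0.0, 10.0, 0.0),
    (0.1, 0.2, 0.3),
    scale_position=lambda p: tuple(1000.0 * v for v in p),
    solve_ik=lambda objective: [0.0] * 8,
    send=outbox.append,
)
```

Passing the solver and sender as parameters keeps the sketch independent of any particular IK library or transport, neither of which is specified here.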

8. Discussion

In this section, we discuss the qualitative and quantitative results in light of our user study objectives. We conclude with discussing the suitability of each of Piton’s control methods within daily interaction contexts. The results are discussed in the following subsections.

8.1. Alpha IVBO Results

The IVBO-change results indicate that the HM and HH control methods scored significantly higher than the FM control method. This finding indicates that users felt a higher self-perception of the robot body through the HM and HH control methods than the FM. Having a higher self-perception (IVBO-change) contributes to better visual awareness of the surrounding environment and movement [64]. Although there are no significant effects between the conditions in IVBO-acceptance, the reported scores are high in all the control methods. Such results indicate that users felt self-attribution and body ownership of the robot’s body. Lastly, the reported IVBO-control results are high across all control methods, without significant effects between the conditions. The high IVBO-control indicates that the users felt a high agency while using Piton in all the control conditions and across the various tasks.

To conclude, all controls had high ratings for the body ownership in terms of IVBO. The only exception to this is the IVBO-change for the FM, which had a significantly lower rating than other control methods. We believe that this finding indicates a lower body ownership effect for the FM control method when controlling Piton.

8.2. TLX

The TLX results indicated that all control methods have low scores (below or equal to 3 on a 7-point scale) in each of the six subjective scales. The low mental and physical demand scores indicate that users did not feel mentally or physically exhausted in any of the conditions of our study. The low scores on effort and performance indicate that the users could use each control method easily and successfully to accomplish the various tasks. The low temporal demand score shows that users did not feel rushed to complete the task and generally had good pacing. Lastly, the low score in frustration indicates that users did not feel irritated or annoyed with the control methods. Our statistical significance testing did not reveal significant effects among the conditions in any of the TLX terms. Therefore, we conclude that the various control methods had similar scores despite minor differences.

Overall, the low scores indicate that the control methods were not exhausting or demanding across the various terms of the TLX. Nevertheless, it is critical to evaluate the control methods in lengthier user studies, which may reveal different effects on users’ mental and physical efforts after extended usage. The results show that the HM, HH, and FM can be utilized for controlling Piton in various tasks, given that they are used for short periods.

8.3. VRSQ

The low VRSQ oculomotor score across the control methods indicates that the users felt minimal oculomotor-related motion sickness effects (general discomfort, fatigue, eye strain, and focus difficulty) while using Piton. Similarly, the VRSQ disorientation score was low, thereby indicating that mental effects related to motion sickness (headache, fullness of head, blurred vision, dizziness, and vertigo) were not significant in any of the control methods. Lastly, the VRSQ total score indicates that the control methods had minimal overall motion sickness effects.

Although our statistical analysis did not reveal specific significant effects between the conditions in the VRSQ disorientation score, there are strong indications that there was an effect. However, the effect was not strong enough to elicit significance. Such findings are especially apparent between the HM-HH and HM-FM (Table 6). We believe that there are stronger motion sickness symptoms from the HM than the HH and FM due to two main reasons. First, Piton is set to execute rotational and positional movements at a fixed speed during the evaluations. Although rotational movements are usually relatively short in duration, positional movements require the entire robot to take a new pose, which is more time-consuming. Accordingly, rapid movements by the user, or moving with varied acceleration/deceleration, may introduce an effect similar to latency in VR systems, which is a common cause of motion sickness [65]. Second, due to anatomical differences between the robot’s structure and the human head, the IK system occasionally provides solutions to positional movement objectives that are correct yet are executed with a slight mismatch to the user’s head location. For example, if the user quickly leans forward to inspect an object closely, the IK solver would produce a correct solution for the final pose. However, the produced trajectory is executed on the robot with minor changes that fit the robot structure and the IK model and objectives, thereby producing a slight trajectory mismatch. Eventually, this mismatch causes users to experience visually induced motion sickness [66,67].

In comparison, the HH and FM do not require the user’s head to conduct positional controls, which we believe has contributed to their lower overall disorientation score. Overall, both discussed challenges can be addressed using PID adjustments of the servomotors and adaptive control of Piton [68], which should reduce the delay between the user’s movements and the robot’s positional movements. Moreover, the IK system should be further enhanced to take the produced trajectory into consideration so that it more closely follows the user’s head positional movements. For example, by assigning IK objectives to the middle joints, they can be precisely controlled according to the user’s head position. Furthermore, increasing the speed of the Piton control loop would also contribute to better and more responsive overall controls.

Overall, the results show that the control methods do not produce high motion sickness effects. Although there are indications, short of statistical significance, that the HM produces slightly higher motion sickness effects than the other conditions, enhancements to the robot control loop and IK model can contribute to mitigating such issues. The HH and FM also have minimal motion sickness effects, which encourages further extended deployments of these control methods.

8.4. Qualitative Data

Overall, users thought the HM was easiest to utilize for moving Piton to a desired location and for looking at objects with relatively high accuracy. Participants also indicated that they thought the HM was the easiest to use, which we believe was mainly due to the control method’s simplicity and similarity with natural head movements.

Although binding Piton’s rotational and positional controls to the user’s head movements is intuitive for users, the limitations of human head movements cause a number of challenges. Participants indicated that some poses were difficult to accomplish, which was mainly due to limitations of the user’s natural head movement ranges. For example, it is difficult and tiring for users to rotate their heads up while extending their bodies forward. Similarly, it is difficult to access some locations in the calibrated space for positional controls, such as those directly near the user’s waist or thighs. Results from the questionnaire also show that users did not think the HM was best for vertical movements. Our current calibration space is cubical in shape, and this challenge could be addressed by creating a nonuniform calibration space that complies with the limitations of human head movements. Therefore, despite its intuitiveness and ease of use, we believe that limitations caused by natural human head and neck movements affect the accessibility and usability of the workspace.

Users praised the HH control method for providing broad vertical and horizontal movement ranges that were easily accessible for rotational controls as well as vertical and horizontal positioning. In addition, the HH control method was highly rated by participants for the ease of movement to the desired location or for looking at an object. Our results also show that participants thought the HH control method was significantly more accurate in vertical movements than the HM and FM.

Although the rotational control was intuitive in the HH, positional control required coordinating the user’s hand movements with the head movements to situate the robot at different postures. Such coordination requires some practice, as users occasionally confuse head and hand movements, which leads to small control errors during the experiment. Similarly, visualizing the boundaries of the workspace, especially for horizontal movement, is an essential improvement to alert users upon reaching the extent of possible movement range. Accordingly, although the HH requires further head-hand movement coordination to control Piton, the HH has the highest subjective accuracy, especially for vertical movement, where these advantages overcome the movement limitations found in the HM.

The FM was least preferred by the users in terms of movement to a desired position and looking at an object, as well as for vertical and horizontal movement and subjective accuracy. The FM had low scores in terms of user preference, which indicates that users generally disliked the FM.

Further analysis of the qualitative results indicates that participants mainly attributed their dislike of the FM to the narrow workspace for positional movements using the foot. Overall, accessibility to various points in the control workspace is an issue, especially when the user’s foot is very close to or far away from their body (as shown in Figure 13b). Such a limitation makes users unable to perform specific controls of Piton, such as raising Piton high at its forward-most or backward-most horizontal positions.

Participants also highlighted the difficulty of coordinating head and foot movements to control Piton, especially when compared to the HH. Despite the stated difficulties of the FM, users stated that it was not as tiring as the HM or HH. Users moved their foot within the tracking space while resting on their heels and dorsiflexed their toes up and down to control Piton. In contrast, the HM and HH require users to move their bodies or hand in order to control Piton, which could be more tiring during extensive positional movements or prolonged sessions. However, the NASA-TLX results did not show significant differences among the conditions.

Overall, the qualitative results show that users favored the HM the most, followed by the HH and then the FM. We believe the main contributing factor for the users’ subjective preference of the HM over other methods is the familiarity of the control method, which resembles natural head movements. Their subjective accuracy scores show that they felt the HH was the most accurate in controlling Piton, followed by the HM and, lastly, the FM. The HH control method’s main advantage is the accessibility and flexibility in horizontal and vertical movements that surpass the HM and FM, which are mainly limited by the user’s natural head and foot movement ranges.

8.5. The Suitability of the Control Methods in Various Contexts of Use

The HM control method had a low TLX score, which showed low mental and physical demand. At the same time, a low overall VRSQ score indicated only a slight motion sickness effect. The HM control method also had higher body ownership, which shows a high self-perception of the robot as the user’s own body. The qualitative analysis also indicates that users favor the HM over other methods due to its intuitiveness and praised it for its relatively high accuracy. Such a rating was mainly due to the HM resembling human head movements, making it familiar and easy to use. The HM also had minimal motion sickness effects. Therefore, we believe the HM control method is suitable for tasks that do not require high accuracy but require ease of use and comfortable control methods that any person can use. A typical example of this is daily usage tasks, such as companionship during travel, hiking, or shopping. Additional tasks also include remote assistance and guidance at home, such as teaching users how to cook or to set-up and operate a device.

The HH control method had low mental and physical demand, low motion sickness, and higher body ownership, which is similar to the HM. Users praised the HH for its relatively high accuracy, especially for vertical movement. Therefore, we believe the HH control method is suitable for tasks that require high accuracy in positional movements. Examples include industrial tasks that require inspection of tools or objects from multiple angles and distances.

Lastly, the FM control method had low scores for mental and physical demand as well as for motion sickness. The FM control method had a lower IVBO-change score and higher IVBO-acceptance and IVBO-control scores, meaning this control method had fair body ownership scores. The qualitative results show that users liked the FM the least because other control methods were more accurate. However, the FM can potentially be effective for longer usage sessions, as users can relax their foot on the floor while also being able to control Piton. Therefore, the FM control method can be utilized for daily life tasks or industrial tasks that do not require high accuracy but require prolonged usage sessions.

Overall, we believe that each control method has different advantages and disadvantages in various contexts, especially since the control method should be designed based on task interaction requirements [69]. Our evaluation of the control methods provides various insights for the usability of the control methods. We also believe the sample size is suitable for studying Piton’s control methods and elicits various insights about its usability within various contexts. However, we believe that a larger and more varied sample may yield extended results, especially about the suitability of these control methods for various tasks within daily or industrial contexts.

9. Conclusions and Future Work

This paper presents Piton, a novel, wearable, snake-like telexistence robot. Piton can be used in various contexts, whether for leisure applications or industrial and professional contexts. We discuss Piton’s implementation specifications and explain three control methods that we used for controlling Piton.

Although all the control methods generally had low NASA-TLX scores, high body ownership, and low motion sickness effects, there are a number of differences that distinguish the control methods, especially in terms of qualitative results. The HM control method has the highest body ownership results and was most favored by participants due to its intuitiveness, as it resembles natural human head motion. Therefore, the HM is best deployed for tasks that require basic movements, such as those within daily usage contexts. The HH control method has the highest perceived accuracy, since users could easily position Piton in various locations using the hand-held tracker. Therefore, we believe it is best suited for tasks requiring high accuracy, such as industrial inspection tasks. The FM was liked least by the participants, who also thought it was imprecise for the evaluated tasks. However, this control method enables users to rest their foot on the floor while controlling the robot, which could be suitable for longer usage sessions.

Although our user study yielded various insights into the usability of the implemented control methods for telexistence, it is essential for future work to explore additional control methods using other modalities of control. Moreover, expanding the user study with a larger and more diverse sample would enable us to gather deeper insights, especially from users who are not familiar with VR or who come from industrial backgrounds. Piton should also be improved, especially by increasing its DoFs and further stabilizing its camera, which would improve its movement flexibility, its speed, and the overall user experience.

An important finding of our work is that a control method that does not resemble natural human head movements, such as HH, can be superior for robot control without jeopardizing essential telexistence requirements (e.g., relatively high body ownership, low motion sickness, and low TLX scores). This finding encourages further exploration of control methods that balance telexistence experience and task efficiency, and that may include other control modalities for accurate robot control.

Most importantly, Piton shows that robots whose anatomical designs differ from that of humans can be used for telexistence. Although Piton has a different anatomical structure and workspace than a human neck, our control methods, built on the kinematic model and IK solver, could yield a suitable telexistence experience. Such findings pave the way for further research to explore other nonhuman form-factors for telexistence. Such form-factors can provide various benefits beyond human-mimetic telexistence robots, such as higher accuracy, a larger movement workspace, or more interaction capabilities.

The design and evaluation of Piton revealed several research opportunities and challenges. Based on them, we discuss several design improvements and future research directions that are essential to build on our presented robot and research results:

Motion Sickness: In addition to the enhancements discussed in Section 8.3, we believe camera vibrations and shaking, which are caused by the servomotors or rapid movements, contributed to motion sickness. Such challenges can be addressed through a variety of improvements. Motor control optimizations (e.g., PID control) can stabilize and smooth out the robot’s movements, as well as decrease overshooting during faster movements. In addition, adding elastic and soft materials to the camera holder can absorb vibrations caused by the servomotors, which, in turn, can significantly reduce shaking during movements. Some of these improvements are widely used in drones to reduce the shaking caused by atmospheric factors and high-speed motors [70,71].
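As a rough illustration of the PID idea mentioned above, the following sketch drives a toy single-joint model toward a target angle. It is not Piton’s firmware: the `PID` class, the `simulate` helper, the gains, and the velocity-commanded joint model are all assumptions chosen for illustration. The derivative term acts on the measured angle rather than on the error, a common variant that avoids a large command spike when the target changes abruptly.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PID:
    """Textbook discrete PID controller (hypothetical gains, illustration only)."""
    kp: float
    ki: float = 0.0
    kd: float = 0.0
    integral: float = 0.0
    prev_measured: Optional[float] = None

    def step(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        self.integral += error * dt
        # Derivative on the measurement; zero on the first call (no history yet).
        if self.prev_measured is None:
            rate = 0.0
        else:
            rate = (measured - self.prev_measured) / dt
        self.prev_measured = measured
        return self.kp * error + self.ki * self.integral - self.kd * rate


def simulate(pid: PID, target_deg: float, steps: int = 400, dt: float = 0.01) -> List[float]:
    """Toy joint model (assumption): the controller output is an angular velocity."""
    angle = 0.0
    trace = []
    for _ in range(steps):
        velocity_cmd = pid.step(target_deg, angle, dt)
        angle += velocity_cmd * dt
        trace.append(angle)
    return trace


trace = simulate(PID(kp=5.0, kd=0.02), target_deg=30.0)
```

In this toy setting, the joint angle approaches the 30-degree target without exceeding it. A real servomotor has inertia, load, and latency, so gains would need to be tuned on the actual hardware.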

Our VRSQ results showed that most users had minimal motion sickness while using Piton. However, participants of our user study used Piton in short bursts (3–5 min), and we allocated sufficient resting time between the tasks. Therefore, it is important to evaluate the usability of Piton for prolonged sessions, especially as our initial tests revealed that using Piton for long periods induces high motion sickness effects.

Robot Structure: Inspecting an object from various distances and angles is an important capability of Piton. However, the current implementation limits the horizontal movement of the robot, as such movement is conducted using only two servomotors (the first and third servomotors). Extending the movement range of Piton therefore requires more DoFs: adding more servomotors next to the third servomotor for backward and forward positional movements can extend the range of horizontal motions.

Safety and Hazards: Although our robot is generally underpowered, we used a position-based control method to control the robot. The robot’s sudden and quick movements may cause hazards to the users, especially near its base (where we use stronger servomotors). Therefore, we intend to utilize torque-based position controls or impedance controls, which can enable the wearer to easily push away the robot in case of emergencies without much effort.
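To make the impedance control idea concrete, the sketch below computes a joint torque from a virtual spring and damper and caps it at a maximum value. It is a minimal single-joint illustration under assumed values, not the controller we intend to deploy; the function name `impedance_torque` and all parameter values are hypothetical.

```python
def impedance_torque(q_des: float, q: float, q_dot: float,
                     stiffness: float, damping: float, tau_max: float) -> float:
    """Virtual spring-damper torque for one joint, saturated at +/- tau_max.

    q_des, q  : desired and measured joint angle (rad)
    q_dot     : measured joint velocity (rad/s)
    stiffness : virtual spring constant (N·m/rad), hypothetical value
    damping   : virtual damper constant (N·m·s/rad), hypothetical value
    tau_max   : torque cap (N·m) that bounds how hard the joint can push
    """
    tau = stiffness * (q_des - q) - damping * q_dot
    return max(-tau_max, min(tau_max, tau))


# Small position error: torque follows the spring law.
small = impedance_torque(0.1, 0.0, 0.0, stiffness=2.0, damping=0.1, tau_max=0.6)
# Large position error (e.g., the wearer pushes the robot away): torque is capped.
large = impedance_torque(0.5, 0.0, 0.0, stiffness=2.0, damping=0.1, tau_max=0.6)
```

Unlike pure position control, the commanded torque here grows only in proportion to the displacement and never exceeds `tau_max`, which is what allows a person to push the joint aside with bounded effort.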

Supplementary Interaction Methods: Further research should explore additional interaction methods to supplement HMD-based controls, such as eye gaze [72,73], electromyography [74,75], or a hand-mounted exoskeleton [76]. We believe these additional interaction methods are needed to extend the controllability of Piton, particularly for positional movements and postures that are uncomfortable for users to execute using our implemented control methods (e.g., positions too close/low to the user). Integrating these control methods may also contribute to higher accuracy and more comfortable usage during extended sessions, since physical controls utilizing the user’s head, hands, or legs are tiring after prolonged use. However, the effects of using supplementary control methods should also be evaluated within the context of telexistence.

Wearability Evaluation: Although our work focuses on Piton’s control methods, its wearability within daily and industrial contexts presents numerous challenges. First, since the wearable robot is kinematically dependent on the user wearing it (at the remote site), the robot may be moved involuntarily by the user wearing Piton, which may induce high motion sickness. One method for addressing this challenge is using inertial measurement units (IMUs) to moderate the effects of the surrogate’s movements on robot movements.

Second, sudden movements of the robot at high speeds cause the backpack rack to shake rapidly. Therefore, the backpack rack and the robot base should be further strengthened and stabilized. Lastly, similar to other innovative robot form-factors [76], Piton presents interaction potentials that were not explored in prior works. Therefore, we believe that workshops should be held with both professional and casual users to explore future potential application domains of Piton. The outputs of such workshops would deepen our understanding of the requirements and expectations of using robots such as Piton.
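One simple form of the IMU-based moderation mentioned above is to subtract the surrogate’s torso rotation from the commanded camera orientation, so that the camera keeps pointing in the same world direction when the wearer turns. The sketch below assumes that yaw and pitch can be treated independently and that the torso angles come from an IMU on the backpack; the function name `compensate` and the joint limits are hypothetical.

```python
from typing import Sequence, Tuple


def compensate(command_deg: Sequence[float],
               torso_deg: Sequence[float],
               limits_deg: Sequence[Tuple[float, float]]) -> Tuple[float, ...]:
    """Offset each commanded angle by the wearer's torso rotation, then clamp.

    command_deg : (yaw, pitch) requested by the controlling user, world frame
    torso_deg   : (yaw, pitch) of the wearer's torso as reported by an IMU
    limits_deg  : (min, max) per axis that the robot can reach (assumed values)
    """
    out = []
    for cmd, torso, (lo, hi) in zip(command_deg, torso_deg, limits_deg):
        out.append(max(lo, min(hi, cmd - torso)))
    return tuple(out)


LIMITS = ((-90, 90), (-45, 45))  # hypothetical yaw/pitch range in degrees

# The wearer turns 15 degrees right and leans 5 degrees down;
# the robot counter-rotates to hold the requested view.
held = compensate((20, 0), (15, -5), LIMITS)

# A correction beyond the reachable range is clamped to the joint limit.
clamped = compensate((80, 0), (-30, 0), LIMITS)
```

This only sketches the geometric offset. A practical system would also need to filter IMU noise and account for sensor drift and latency.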

Extended Evaluations and Task Domain Investigation: Our main evaluation results showed the advantages and disadvantages of each of Piton’s control methods within the context of telexistence. Such results pave the way for extended evaluations, so future work should focus on large-scale user studies involving larger and more varied user groups, as well as deeper usability studies of daily life or industrial tasks. Moreover, the interactions that occur between the controller and surrogate users, and between these users and the remote environment, should be studied in the context of using Piton as the sole communication medium to fulfill different tasks. Another important direction is a deeper exploration of the deployment domains of Piton within daily usage and industrial contexts. To fulfill this objective, focus groups and workshops should be conducted [9,44,45] to explicitly study the requirements, expectations, and deployment tasks and contexts of robotic systems. Overall, such findings are critical for the adoption and deployment of Piton within real-world daily use and industrial contexts.

In summary, our design and evaluation of Piton revealed several opportunities and challenges. Various mechanical and technical enhancements are required, such as camera stabilization and robot structure enhancements, that may directly contribute to a better user experience. Utilizing adaptive control strategies to select the appropriate control method based on the needed task is an important direction when deploying robots such as Piton. Moreover, other control modalities should also be explored, especially as physical controls are often tiring for users during extended usage sessions. Further research directions should also focus on exploring Piton as a robotic appendage for use within both daily and industrial contexts, which will pave the way for its effective deployment within such contexts.