Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

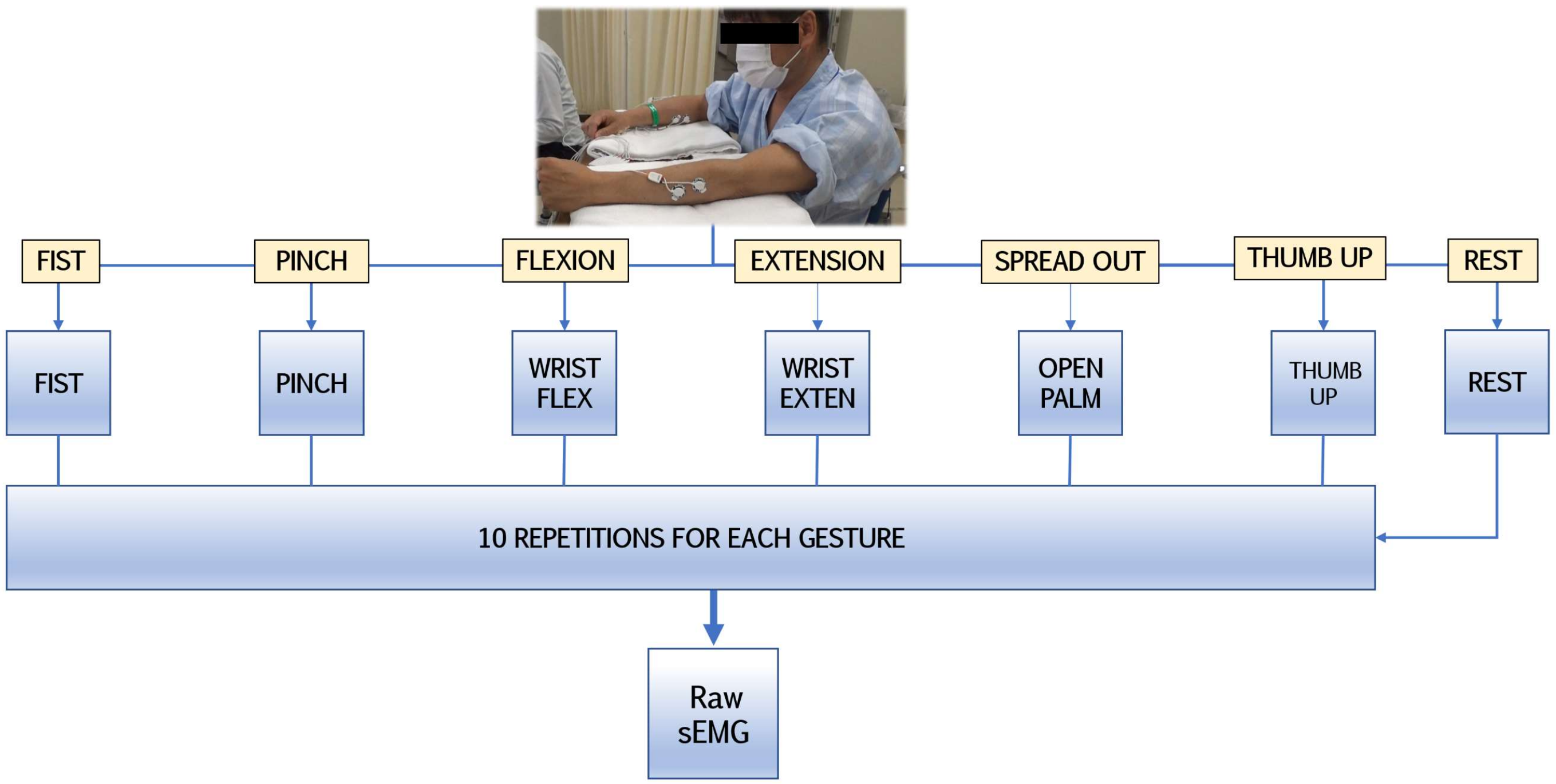

2.2. sEMG Recording Setup and Observational Experiments

2.3. Data Preparation

2.3.1. Signal Preprocessing

- A fourth-order Butterworth band-pass filter (BPF) with a 20–300 Hz passband;

- Hampel filtering for artefact reduction, which flags as outliers the samples deviating from the local median by more than two standard deviations within a window of 100 neighboring samples;

- Root-mean-square (RMS) signal envelope extraction and sEMG data normalization.

- The maximum number of detected peaks was set to 8, discarding the first and last gestures of each set of 10 repetitions.

- The minimum temporal distance between peaks was set to 0.1 s.

- To avoid false detections, the peak-selection threshold was set at the 0.25 percentile of the difference from neighboring signal peaks.

- For temporal standardization, segment boundaries (including the manually predefined ‘rest’ label) were defined relative to each peak, using a window of 30 ms for the lower limit and 60 ms for the upper limit.
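The preprocessing and segmentation steps listed above can be sketched in Python with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the 1000 Hz sampling rate, the 50 ms RMS window, normalization of the envelope to its maximum, reading the 0.25 setting as a minimum peak prominence on the normalized envelope, and taking "100 neighboring samples" as 50 samples on each side are all assumptions. The helper names (`bandpass`, `hampel`, `rms_envelope`, `segment_gestures`) are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view
from scipy.signal import butter, find_peaks, sosfiltfilt

FS = 1000  # Hz; ASSUMED sampling rate, not stated in this section


def bandpass(x, fs=FS, low=20.0, high=300.0, order=4):
    """Zero-phase Butterworth band-pass, 20-300 Hz.

    `order` is the N passed to butter(); SciPy builds a 2N-order band-pass,
    and sosfiltfilt applies it forward and backward.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def hampel(x, half_window=50, n_sigma=2.0):
    """Replace samples that deviate from the local median by > n_sigma SDs.

    ASSUMPTION: 100 neighbours = 50 samples on each side of the centre.
    """
    x = np.asarray(x, dtype=float)
    padded = np.pad(x, half_window, mode="edge")
    windows = sliding_window_view(padded, 2 * half_window + 1)  # one row per sample
    med = np.median(windows, axis=1)
    # 1.4826 * MAD is a robust estimate of the standard deviation
    sigma = 1.4826 * np.median(np.abs(windows - med[:, None]), axis=1)
    out = x.copy()
    outliers = np.abs(x - med) > n_sigma * sigma
    out[outliers] = med[outliers]
    return out


def rms_envelope(x, fs=FS, win_s=0.05):
    """Moving-RMS envelope scaled to a maximum of 1 (window length ASSUMED)."""
    w = max(1, round(win_s * fs))
    env = np.sqrt(np.convolve(np.square(x), np.ones(w) / w, mode="same"))
    return env / env.max()


def segment_gestures(env, fs=FS, min_dist_s=0.1, min_prominence=0.25,
                     max_peaks=8, pre_s=0.03, post_s=0.06):
    """Return peak indices and (start, stop) sample bounds around each peak."""
    peaks, _ = find_peaks(env, distance=round(min_dist_s * fs),
                          prominence=min_prominence)
    # Discard the first and last repetition of the set, keep at most max_peaks
    peaks = peaks[1:-1][:max_peaks]
    pre, post = round(pre_s * fs), round(post_s * fs)
    # Drop peaks whose window would run past either end of the recording
    peaks = peaks[(peaks >= pre) & (peaks + post <= env.size)]
    return peaks, [(int(p) - pre, int(p) + post) for p in peaks]


# Synthetic check: 10 bursts of a 100 Hz tone, one per second
t = np.arange(0, 10, 1 / FS)
rng = np.random.default_rng(0)
centers = np.arange(0.5, 10, 1.0)
amp = sum(np.exp(-((t - c) ** 2) / (2 * 0.08 ** 2)) for c in centers)
raw = amp * np.sin(2 * np.pi * 100 * t) + 0.01 * rng.standard_normal(t.size)

clean = hampel(bandpass(raw))
env = rms_envelope(clean)
peaks, bounds = segment_gestures(env)
segments = [clean[a:b] for a, b in bounds]
```

On this synthetic recording, ten peaks are detected, the first and last are dropped, and each remaining segment spans 90 samples (30 before and 60 after the peak at 1000 Hz). Whether "fourth-order" in the text refers to N or to the resulting band-pass filter is not stated, so `order` is left as a parameter.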

2.3.2. Feature Extraction

2.3.3. Feature Validation and Visualization

2.3.4. Feature Vector Dimensional Reduction

2.4. Machine Learning and Classification Algorithms

2.5. Statistical Evaluation

3. Results

3.1. Patient Characteristics

3.2. Accuracy Rates and Confusion Matrices of Paretic and Non-Affected Extremities

3.3. PCA Dimensional Impact on Supervised Model Performance

3.4. Classifier Statistical Evaluation and Comparison Using Dimensional Shift

3.5. Summary of Hand Gesture Prediction Rates

4. Discussion

5. Conclusions

6. Patents

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sennfält, S.; Norrving, B.; Petersson, J.; Ullberg, T. Long-term survival and function after stroke: A longitudinal observational study from the Swedish Stroke Register. Stroke 2019, 50, 53–61.

2. Mukherjee, D.; Patil, C.G. Epidemiology and the Global Burden of Stroke. World Neurosurg. 2011, 76, S85–S90.

3. Raghavan, P. Upper limb motor impairment after stroke. Phys. Med. Rehabil. Clin. 2015, 26, 599–610.

4. Prabhakaran, S.; Zarahn, E.; Riley, C.; Speizer, A.; Chong, J.Y.; Lazar, R.M.; Marshall, R.S.; Krakauer, J.W. Inter-individual Variability in the Capacity for Motor Recovery After Ischemic Stroke. Neurorehabilit. Neural Repair 2008, 22, 64–71.

5. Welmer, A.; Holmqvist, L.; Sommerfeld, D. Limited fine hand use after stroke and its association with other disabilities. J. Rehabil. Med. 2008, 40, 603–608.

6. Karaahmet, O.Z.; Eksioglu, E.; Gürçay, E.; Karsli, P.B.; Tamkan, U.; Bal, A.; Cakci, A. Hemiplegic Shoulder Pain: Associated Factors and Rehabilitation Outcomes of Hemiplegic Patients with and Without Shoulder Pain. Top. Stroke Rehabil. 2014, 21, 237–245.

7. Pohjasvaara, T.; Vataja, R.; Leppavuori, A.; Kaste, M.; Erkinjuntti, T. Depression is an independent predictor of poor long-term functional outcome post-stroke. Eur. J. Neurol. 2001, 8, 315–319.

8. Eschmann, H.; Héroux, M.E.; Cheetham, J.H.; Potts, S.; Diong, J. Thumb and finger movement is reduced after stroke: An observational study. PLoS ONE 2019, 14, e0217969.

9. Sunderland, A.; Tuke, A. Neuroplasticity, learning and recovery after stroke: A critical evaluation of constraint-induced therapy. Neuropsychol. Rehabil. 2005, 15, 81–96.

10. Hatem, S.M.; Saussez, G.; Della Faille, M.; Prist, V.; Zhang, X.; Dispa, D.; Bleyenheuft, Y. Rehabilitation of Motor Function after Stroke: A Multiple Systematic Review Focused on Techniques to Stimulate Upper Extremity Recovery. Front. Hum. Neurosci. 2016, 10, 442.

11. Maceira-Elvira, P.; Popa, T.; Schmid, A.-C.; Hummel, F.C. Wearable technology in stroke rehabilitation: Towards improved diagnosis and treatment of upper-limb motor impairment. J. Neuroeng. Rehabil. 2019, 16, 142.

12. Blank, A.A.; French, J.A.; Pehlivan, A.U.; O’Malley, M.K. Current Trends in Robot-Assisted Upper-Limb Stroke Rehabilitation: Promoting Patient Engagement in Therapy. Curr. Phys. Med. Rehabil. Rep. 2014, 2, 184–195.

13. Cesqui, B.; Tropea, P.; Micera, S.; Krebs, H.I. EMG-based pattern recognition approach in post stroke robot-aided rehabilitation: A feasibility study. J. Neuroeng. Rehabil. 2013, 10, 75.

14. Jarrassé, N.; Proietti, T.; Crocher, V.; Robertson, J.; Sahbani, A.; Morel, G.; Roby-Brami, A. Robotic exoskeletons: A perspective for the rehabilitation of arm coordination in stroke patients. Front. Hum. Neurosci. 2014, 8, 947.

15. Ockenfeld, C.; Tong, R.K.Y.; Susanto, E.A.; Ho, S.-K.; Hu, X.-L. Fine finger motor skill training with exoskeleton robotic hand in chronic stroke: Stroke rehabilitation. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–4.

16. Meeker, C.; Park, S.; Bishop, L.; Stein, J.; Ciocarlie, M. EMG pattern classification to control a hand orthosis for functional grasp assistance after stroke. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 1203–1210.

17. Levin, M.F.; Weiss, P.L.; Keshner, E.A. Emergence of Virtual Reality as a Tool for Upper Limb Rehabilitation: Incorporation of Motor Control and Motor Learning Principles. Phys. Ther. 2015, 95, 415–425.

18. Garcia, M.C.; Vieira, T.M.M. Surface electromyography: Why, when and how to use it. Rev. Andal. Med. Deporte 2011, 4, 17–28.

19. Rayegani, S.M.; Raeissadat, S.A.; Sedighipour, L.; Rezazadeh, I.M.; Bahrami, M.H.; Eliaspour, D.; Khosrawi, S. Effect of Neurofeedback and Electromyographic-Biofeedback Therapy on Improving Hand Function in Stroke Patients. Top. Stroke Rehabil. 2014, 21, 137–151.

20. Janssen, H.; Ada, L.; Bernhardt, J.; McElduff, P.; Pollack, M.; Nilsson, M.; Spratt, N. An enriched environment increases activity in stroke patients undergoing rehabilitation in a mixed rehabilitation unit: A pilot non-randomized controlled trial. Disabil. Rehabil. 2014, 36, 255–262.

21. Ballester, B.R.; Maier, M.; Duff, A.; Cameirão, M.; Bermúdez, S.; Duarte, E.; Cuxart, A.; Rodriguez, S.; Mozo, R.M.S.S.; Verschure, P.F.M.J. A critical time window for recovery extends beyond one-year post-stroke. J. Neurophysiol. 2019, 122, 350–357.

22. Campagnini, S.; Arienti, C.; Patrini, M.; Liuzzi, P.; Mannini, A.; Carrozza, M.C. Machine learning methods for functional recovery prediction and prognosis in post-stroke rehabilitation: A systematic review. J. Neuroeng. Rehabil. 2022, 19, 54.

23. Saridis, G.N.; Gootee, T.P. EMG Pattern Analysis and Classification for a Prosthetic Arm. IEEE Trans. Biomed. Eng. 1982, BME-29, 403–412.

24. Khushaba, R.N.; Kodagoda, S.; Takruri, M.; Dissanayake, G. Toward improved control of prosthetic fingers using surface electromyogram (EMG) signals. Expert Syst. Appl. 2012, 39, 10731–10738.

25. Samuel, O.W.; Zhou, H.; Li, X.; Wang, H.; Zhang, H.; Sangaiah, A.K.; Li, G. Pattern recognition of electromyography signals based on novel time domain features for amputees’ limb motion classification. Comput. Electr. Eng. 2018, 67, 646–655.

26. Hudgins, B.; Parker, P.; Scott, R. A new strategy for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 1993, 40, 82–94.

27. Phinyomark, A.; Khushaba, R.N.; Scheme, E. Feature Extraction and Selection for Myoelectric Control Based on Wearable EMG Sensors. Sensors 2018, 18, 1615.

28. Jaramillo-Yánez, A.; Benalcázar, M.E.; Mena-Maldonado, E. Real-Time Hand Gesture Recognition Using Surface Electromyography and Machine Learning: A Systematic Literature Review. Sensors 2020, 20, 2467.

29. Castiblanco, J.C.; Ortmann, S.; Mondragon, I.F.; Alvarado-Rojas, C.; Jöbges, M.; Colorado, J.D. Myoelectric pattern recognition of hand motions for stroke rehabilitation. Biomed. Signal Process. Control 2020, 57, 101737.

30. Jochumsen, M.; Niazi, I.K.; Rehman, M.Z.U.; Amjad, I.; Shafique, M.; Gilani, S.O.; Waris, A. Decoding Attempted Hand Movements in Stroke Patients Using Surface Electromyography. Sensors 2020, 20, 6763.

31. Lee, S.W.; Wilson, K.M.; Lock, B.A.; Kamper, D.G. Subject-Specific Myoelectric Pattern Classification of Functional Hand Movements for Stroke Survivors. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 19, 558–566.

32. Yu, H.; Fan, X.; Zhao, L.; Guo, X. A novel hand gesture recognition method based on 2-channel sEMG. Technol. Health Care 2018, 26 (Suppl. S1), 205–214.

33. Wang, D.; Zhang, X.; Gao, X.; Chen, X.; Zhou, P. Wavelet Packet Feature Assessment for High-Density Myoelectric Pattern Recognition and Channel Selection toward Stroke Rehabilitation. Front. Neurol. 2016, 7, 197.

34. Zhang, X.; Zhou, P. High-Density Myoelectric Pattern Recognition Toward Improved Stroke Rehabilitation. IEEE Trans. Biomed. Eng. 2012, 59, 1649–1657.

35. Yang, C.; Long, J.; Urbin, M.A.; Feng, Y.; Song, G.; Weng, J.; Li, Z. Real-Time Myocontrol of a Human–Computer Interface by Paretic Muscles After Stroke. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 1126–1132.

36. Kerber, F.; Puhl, M.; Krüger, A. User-independent real-time hand gesture recognition based on surface electromyography. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services, Vienna, Austria, 4–7 September 2017; pp. 1–7.

37. Islam, J.; Ahmad, S.; Haque, F.; Reaz, M.B.I.; Bhuiyan, M.A.S.; Minhad, K.N.; Islam, R. Myoelectric Pattern Recognition Performance Enhancement Using Nonlinear Features. Comput. Intell. Neurosci. 2022, 2022, 6414664.

38. Junior, J.J.A.M.; Freitas, M.L.; Siqueira, H.V.; Lazzaretti, A.E.; Pichorim, S.F.; Stevan, S.L., Jr. Feature selection and dimensionality reduction: An extensive comparison in hand gesture classification by sEMG in eight channels armband approach. Biomed. Signal Process. Control. 2020, 59, 101920.

39. Phinyomark, A.; Hirunviriya, S.; Limsakul, C.; Phukpattaranont, P. Evaluation of EMG feature extraction for hand movement recognition based on Euclidean distance and standard deviation. In Proceedings of the ECTI-CON2010: The 2010 ECTI International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Chiang Mai, Thailand, 19–21 May 2010; pp. 856–860.

40. Toledo-Pérez, D.C.; Rodríguez-Reséndiz, J.; Gómez-Loenzo, R.A.; Jauregui-Correa, J.C. Support Vector Machine-Based EMG Signal Classification Techniques: A Review. Appl. Sci. 2019, 9, 4402.

41. Costa, Á.; Itkonen, M.; Yamasaki, H.; Alnajjar, F.S.; Shimoda, S. Importance of muscle selection for EMG signal analysis during upper limb rehabilitation of stroke patients. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju Island, Korea, 11–15 July 2017; pp. 2510–2513.

42. Li, Y.; Chen, X.; Zhang, X.; Zhou, P. Several practical issues toward implementing myoelectric pattern recognition for stroke rehabilitation. Med. Eng. Phys. 2014, 36, 754–760.

43. Phinyomark, A.; Nuidod, A.; Phukpattaranont, P.; Limsakul, C. Feature Extraction and Reduction of Wavelet Transform Coefficients for EMG Pattern Classification. Electron. Electr. Eng. 2012, 122, 27–32.

44. Parnandi, A.; Kaku, A.; Venkatesan, A.; Pandit, N.; Wirtanen, A.; Rajamohan, H.; Venkataramanan, K.; Nilsen, D.; Fernandez-Granda, C.; Schambra, H. PrimSeq: A deep learning-based pipeline to quantitate rehabilitation training. PLoS Digit. Health 2022, 1, e0000044.

45. Gladstone, D.; Danells, C.J.; Black, S. The Fugl-Meyer Assessment of Motor Recovery after Stroke: A Critical Review of Its Measurement Properties. Neurorehabilit. Neural Repair 2002, 16, 232–240.

46. Domen, K.; Sonoda, S.; Chino, N.; Saitoh, E.; Kimura, A. Evaluation of Motor Function in Stroke Patients Using the Stroke Impairment Assessment Set (SIAS). In Functional Evaluation of Stroke Patients; Springer: Tokyo, Japan, 1996; pp. 33–44.

47. Naghdi, S.; Ansari, N.N.; Mansouri, K.; Hasson, S. A neurophysiological and clinical study of Brunnstrom recovery stages in the upper limb following stroke. Brain Inj. 2010, 24, 1372–1378.

48. Young, A.J.; Hargrove, L.J.; Kuiken, T.A. Improving Myoelectric Pattern Recognition Robustness to Electrode Shift by Changing Interelectrode Distance and Electrode Configuration. IEEE Trans. Biomed. Eng. 2011, 59, 645–652.

49. Dollar, A.M. Classifying human hand use and the activities of daily living. In The Human Hand as an Inspiration for Robot Hand Development; Springer: Cham, Switzerland, 2014; pp. 201–216.

50. Available online: https://support.pluxbiosignals.com/wp-content/uploads/2021/10/biosignalsplux-Electromyography-EMG-Datasheet.pdf (accessed on 24 November 2021).

51. De Luca, C.J.; Gilmore, L.D.; Kuznetsov, M.; Roy, S.H. Filtering the surface EMG signal: Movement artifact and baseline noise contamination. J. Biomech. 2010, 43, 1573–1579.

52. Bhowmik, S.; Jelfs, B.; Arjunan, S.P.; Kumar, D.K. Outlier removal in facial surface electromyography through Hampel filtering technique. In Proceedings of the 2017 IEEE Life Sciences Conference (LSC), Sydney, NSW, Australia, 13–15 December 2017; pp. 258–261.

53. Srhoj-Egekher, V.; Cifrek, M.; Medved, V. The application of Hilbert–Huang transform in the analysis of muscle fatigue during cyclic dynamic contractions. Med. Biol. Eng. Comput. 2011, 49, 659–669.

54. Kukker, A.; Sharma, R.; Malik, H. Forearm movements classification of EMG signals using Hilbert Huang transform and artificial neural networks. In Proceedings of the 2016 IEEE 7th Power India International Conference (PIICON), Bikaner, India, 25–27 November 2016; pp. 1–6.

55. Ortiz-Catalan, M. Cardinality as a highly descriptive feature in myoelectric pattern recognition for decoding motor volition. Front. Neurosci. 2015, 9, 416.

56. Wu, Y.; Hu, X.; Wang, Z.; Wen, J.; Kan, J.; Li, W. Exploration of Feature Extraction Methods and Dimension for sEMG Signal Classification. Appl. Sci. 2019, 9, 5343.

57. Venugopal, G.; Navaneethakrishna, M.; Ramakrishnan, S. Extraction and analysis of multiple time window features associated with muscle fatigue conditions using sEMG signals. Expert Syst. Appl. 2014, 41, 2652–2659.

58. Phinyomark, A.; Quaine, F.; Charbonnier, S.; Serviere, C.; Tarpin-Bernard, F.; Laurillau, Y. EMG feature evaluation for improving myoelectric pattern recognition robustness. Expert Syst. Appl. 2013, 40, 4832–4840.

59. Fajardo, J.M.; Gomez, O.; Prieto, F. EMG hand gesture classification using handcrafted and deep features. Biomed. Signal Process. Control 2020, 63, 102210.

60. She, H.; Zhu, J.; Tian, Y.; Wang, Y.; Yokoi, H.; Huang, Q. SEMG Feature Extraction Based on Stockwell Transform Improves Hand Movement Recognition Accuracy. Sensors 2019, 19, 4457.

61. Caesarendra, W.; Tjahjowidodo, T.; Nico, Y.; Wahyudati, S.; Nurhasanah, L. EMG finger movement classification based on ANFIS. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2018; Volume 1007, p. 012005.

62. Spiewak, C.; Islam, M.; Zaman, A.; Rahman, M.H. A comprehensive study on EMG feature extraction and classifiers. Open Access J. Biomed. Eng. Biosci. 2018, 1, 1–10.

63. Phinyomark, A.; Thongpanja, S.; Hu, H.; Phukpattaranont, P.; Limsakul, C. The usefulness of mean and median frequencies in electromyography analysis. Comput. Intell. Electromyogr. Anal. Perspect. Curr. Appl. Future Chall. 2012, 81, 67.

64. Toledo-Pérez, D.C.; Martínez-Prado, M.A.; Gómez-Loenzo, R.A.; Paredes-García, W.J.; Rodríguez-Reséndiz, J. A Study of Movement Classification of the Lower Limb Based on up to 4-EMG Channels. Electronics 2019, 8, 259.

65. Gokgoz, E.; Subasi, A. Effect of multiscale PCA de-noising on EMG signal classification for diagnosis of neuromuscular disorders. J. Med. Syst. 2014, 38, 31.

| Domain | Feature | Internal Parameters | Short Description |
|---|---|---|---|
| Time domain | TM4-5 | | [27] |
| | LCARD | Threshold set to 0.001 | LCARD counts the number of unique values in the time series of each channel [55]. |
| Time–frequency domain | HHT | | HHT is a signal processing method that combines empirical mode decomposition with the Hilbert transform [53,54]. |
| | MEWP | Wavelet Daubechies (Db4) | MEWP characterizes the signal through wavelet decomposition, using the position, the scaling of the decomposed signal, and the frequency curve as parameters [43,56]. |
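The LCARD entry can be illustrated with a short cardinality count. This sketch assumes the sorted-difference convention: values are sorted, and a new distinct value is counted whenever the gap to the previous sorted value exceeds the threshold (0.001, from the table). The function names are hypothetical, and any additional transform implied by the "L" prefix is not modeled.

```python
import numpy as np


def cardinality(x, threshold=0.001):
    """Count distinct values in a 1-D window.

    Neighbours in sorted order that differ by no more than `threshold`
    are treated as the same value (ASSUMED convention).
    """
    s = np.sort(np.asarray(x, dtype=float).ravel())
    if s.size == 0:
        return 0
    # Each gap larger than the threshold starts a new distinct value
    return int(np.count_nonzero(np.diff(s) > threshold) + 1)


def cardinality_per_channel(window, threshold=0.001):
    """Apply `cardinality` to each column of a (n_samples, n_channels) window."""
    window = np.asarray(window, dtype=float)
    return np.array([cardinality(window[:, c], threshold)
                     for c in range(window.shape[1])])
```

For example, `cardinality([0, 0.0005, 0.002, 0.002, 1.0])` merges 0 and 0.0005 and returns 3. With this convention, a run of closely spaced values can chain into a single value even if its endpoints differ by more than the threshold.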

| Domain | Feature | Internal Parameters | Short Description |
|---|---|---|---|
| Time domain | LRMSV2-3 | | |
| | ASM | | |
| | ASR | | |
| | AAC | | |
| | HPC | | |
| Frequency domain | MMDF | | |
| | SMD | | |
| Spatial domain | FER-4 | | The normalized mean value of the ratio between flexor and extensor channels. |
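For the frequency-domain entries, the following sketch assumes that MMDF and MMNF (the latter listed in the next feature table) denote the modified median and modified mean frequency, both computed from the amplitude spectrum rather than the power spectrum. The table does not give their formulas, so these common definitions and the hypothetical helper names are assumptions.

```python
import numpy as np


def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum and its frequency axis (Hz)."""
    x = np.asarray(x, dtype=float)
    amp = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs, amp


def mmnf(x, fs):
    """Modified mean frequency: amplitude-weighted mean frequency (ASSUMED)."""
    f, a = amplitude_spectrum(x, fs)
    return float(np.sum(f * a) / np.sum(a))


def mmdf(x, fs):
    """Modified median frequency (ASSUMED): first bin where the cumulative
    amplitude spectrum reaches half of its total."""
    f, a = amplitude_spectrum(x, fs)
    cum = np.cumsum(a)
    idx = int(np.searchsorted(cum, cum[-1] / 2.0))
    return float(f[idx])


fs = 1000  # Hz, illustrative value
t = np.arange(1000) / fs
tone = np.sin(2 * np.pi * 100 * t)  # exactly 100 cycles, so no leakage
```

For a pure 100 Hz tone both measures come out at 100 Hz; on real sEMG the median frequency is less sensitive than the mean to high-frequency noise.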

| Domain | Feature | Internal Parameters | Short Description |
|---|---|---|---|
| Time domain | MMAV2, MMAV5 | | … of interest, aiming to investigate the 3/5th of 4/5th window segment. The equation is similar to that of MMAV2 [25,57]. |
| | SSI | | |
| | KURT | | |
| | SD | | |
| | MFL | | |
| | WL | Threshold set to 0.05 | |
| | MHW | | |
| | AR3 | Order set to 3 | |
| | LPC3 | Order set to 3 | |
| Frequency domain | MASP | | |
| | SMN | | |
| | MMNF | | |
| Time–frequency domain | STFT | | |
| | EWT | Wavelet Daubechies (Db4) | |
| | STW | | STW (Stockwell transform) is a noise-resilient method combining the wavelet transform and the STFT; it highlights the signal window rather than artefacts or defective stochastic window frames [60]. |
| Fractal domain | HFD | | HFD evaluates muscle strength and the contraction grade; it measures the size and complexity of the sEMG signal in the time domain without fractal attractor reconstruction methods [58]. |
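A few of the time-domain and fractal-domain features in this table can be sketched as below. The definitions are common textbook forms and are assumptions here: SSI as the sum of squared samples, WL as the cumulative absolute first difference (the 0.05 threshold listed for WL is not applied, since its role is not described), SD as the sample standard deviation, and HFD as Higuchi's fractal dimension with a hypothetical `k_max = 10`.

```python
import numpy as np


def ssi(x):
    """Simple square integral: sum of squared samples (ASSUMED definition)."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def waveform_length(x):
    """Cumulative absolute first difference; no threshold is applied."""
    return float(np.sum(np.abs(np.diff(np.asarray(x, dtype=float)))))


def sd(x):
    """Sample standard deviation (ddof=1 is an assumption)."""
    return float(np.std(np.asarray(x, dtype=float), ddof=1))


def higuchi_fd(x, k_max=10):
    """Higuchi fractal dimension: slope of log L(k) against log(1/k)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    ks = np.arange(1, k_max + 1)
    curve_lengths = []
    for k in ks:
        per_offset = []
        for m in range(k):
            sub = x[m::k]  # x[m], x[m+k], x[m+2k], ...
            n_steps = sub.size - 1
            if n_steps < 1:
                continue
            # Curve length for offset m, normalized to the full series length
            length = np.abs(np.diff(sub)).sum() * (n - 1) / (n_steps * k)
            per_offset.append(length / k)
        curve_lengths.append(np.mean(per_offset))
    slope, _ = np.polyfit(np.log(1.0 / ks), np.log(curve_lengths), 1)
    return float(slope)
```

As a sanity check, a straight ramp gives an HFD of 1 and white noise gives a value close to 2; sEMG segments typically fall in between, which is what makes the measure sensitive to signal complexity.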

| Patient | Age, Gender | Lesion | Days Since Onset | BS | SIAS | FMA-UE | MAS | Affected Side |
|---|---|---|---|---|---|---|---|---|
| HGR-001 | 80, M | CI | 11 | 5, 5 | 4, 4 | 58 | 0, 0, 0 | R |
| HGR-002 | 32, F | CI | 11 | 5, 5 | 4, 4 | 50 | 0, 0, 0 | R |
| HGR-003 | 71, M | ICH | 13 | 5, 5 | 4, 4 | 64 | 1, 0, 0 | R |
| HGR-004 | 52, F | CI | 8 | 6, 6 | 5, 5 | 63 | 0, 0, 0 | L |
| HGR-005 | 82, M | CI | 9 | 4, 3 | 3, 1 | 27 | 1, 0, 0 | L |
| HGR-006 | 81, M | ICH | 5 | 2, 4 | 1, 1 | 16 | 0, 0, 0 | R |
| HGR-007 | 77, M | CI | 9 | 6, 6 | 5, 5 | 60 | 0, 0, 0 | R |
| HGR-008 | 79, F | ICH | 7 | 3, 4 | 2, 3 | 28 | 0, 1+, 1+ | R |
| HGR-009 | 65, M | ICH | 5 | 6, 6 | 5, 5 | 55 | 0, 0, 0 | R |
| HGR-010 | 67, F | ICH | 12 | 5, 4 | 3, 1 | 37 | 0, 0, 0 | L |
| HGR-011 | 66, M | CI | 13 | 3, 3 | 2, 1 | 15 | 1+, 0, 0 | L |
| HGR-012 | 64, F | ICH | 33 | 4, 5 | 3, 4 | 35 | 1, 1, 0 | L |
| HGR-013 | 63, M | CI | 13 | 5, 5 | 4, 4 | 59 | 1, 0, 0 | R |
| HGR-014 | 50, M | CI | 19 | 3, 3 | 2, 1 | 22 | 1, 1, 0 | R |
| HGR-015 | 72, F | CI | 16 | 6, 5 | 5, 4 | 52 | 0, 0, 0 | R |
| HGR-016 | 57, M | ICH | 12 | 5, 4 | 4, 4 | 42 | 0, 0, 0 | L |
| HGR-017 | 57, M | ICH | 18 | 2, 2 | 1, 0 | 8 | 0, 0, 0 | L |
| HGR-018 | 64, M | CI | 9 | 6, 6 | 4, 4 | 60 | 0, 0, 0 | R |
| HGR-019 | 74, F | CI | 12 | 2, 1 | 1, 0 | 9 | 0, 1, 0 | L |

| Classifier | Gesture Set | Without PCA, NP19 Acc (%) | Without PCA, NP19 F1 (%) | Without PCA, P19 Acc (%) | Without PCA, P19 F1 (%) | With PCA *, NP19 Acc (%) | With PCA *, NP19 F1 (%) | With PCA *, P19 Acc (%) | With PCA *, P19 F1 (%) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | GL4 | 94.80 | 89.74 | 88.71 | 77.53 | 92.81 | 85.63 | 87.59 | 74.90 |
| | GL5 | 94.19 | 85.69 | 88.48 | 71.49 | 91.41 | 78.77 | 86.07 | 65.16 |
| | GL6 | 94.13 | 82.67 | 88.60 | 66.01 | 91.36 | 74.33 | 85.98 | 57.82 |
| | GL7 | 94.73 | 81.82 | 89.75 | 64.26 | 91.97 | 72.00 | 86.40 | 52.20 |
| LDA | GL4 | 87.63 | 75.42 | 78.16 | 55.48 | 94.05 | 88.13 | 88.64 | 77.15 |
| | GL5 | 90.92 | 77.52 | 77.09 | 41.42 | 93.79 | 84.55 | 87.96 | 69.94 |
| | GL6 | 92.35 | 77.39 | 77.39 | 30.63 | 93.85 | 81.66 | 87.97 | 63.98 |
| | GL7 | 93.27 | 76.95 | 77.43 | 20.09 | 94.02 | 79.25 | 88.17 | 58.61 |
| k-NN | GL4 | 92.84 | 85.82 | 87.32 | 74.73 | 86.30 | 72.58 | 82.64 | 65.36 |
| | GL5 | 91.98 | 80.10 | 87.38 | 68.67 | 87.12 | 68.03 | 83.26 | 58.37 |
| | GL6 | 91.70 | 75.34 | 86.81 | 60.72 | 87.70 | 63.76 | 84.24 | 53.03 |
| | GL7 | 91.71 | 71.12 | 87.31 | 55.86 | 88.19 | 59.61 | 85.23 | 48.49 |

NP19, non-paretic side; P19, paretic side.
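A with/without-PCA comparison of SVM, LDA, and k-NN like the one tabulated above could be set up with scikit-learn as follows. This is a sketch on synthetic data, not a reproduction of the reported numbers: feature standardization, the RBF kernel, k = 5, PCA retaining 95% of the variance, 5-fold stratified cross-validation, and macro-averaged F1 are all assumptions, and `build_models` and `evaluate` are hypothetical helpers.

```python
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def build_models(use_pca, n_components=0.95):
    """Three classifiers, each optionally preceded by PCA (settings ASSUMED)."""
    base = {
        "SVM": SVC(kernel="rbf"),
        "LDA": LinearDiscriminantAnalysis(),
        "k-NN": KNeighborsClassifier(n_neighbors=5),
    }
    models = {}
    for name, clf in base.items():
        steps = [StandardScaler()]
        if use_pca:
            # A float in (0, 1) keeps enough components for that variance share
            steps.append(PCA(n_components=n_components))
        steps.append(clf)
        models[name] = make_pipeline(*steps)
    return models


def evaluate(X, y, use_pca, folds=5, seed=0):
    """Mean cross-validated accuracy and macro F1 for each classifier."""
    cv = StratifiedKFold(n_splits=folds, shuffle=True, random_state=seed)
    results = {}
    for name, model in build_models(use_pca).items():
        scores = cross_validate(model, X, y, cv=cv,
                                scoring=("accuracy", "f1_macro"))
        results[name] = (scores["test_accuracy"].mean(),
                         scores["test_f1_macro"].mean())
    return results


# Synthetic stand-in for a feature matrix: 4 gesture classes, 40 features
X, y = make_blobs(n_samples=400, centers=4, n_features=40,
                  cluster_std=2.0, random_state=0)
without_pca = evaluate(X, y, use_pca=False)
with_pca = evaluate(X, y, use_pca=True)
```

Keeping scaling and PCA inside the pipeline means both are refitted on each training fold, so no information from the test fold leaks into the projection.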

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Anastasiev, A.; Kadone, H.; Marushima, A.; Watanabe, H.; Zaboronok, A.; Watanabe, S.; Matsumura, A.; Suzuki, K.; Matsumaru, Y.; Ishikawa, E. Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides. Sensors 2022, 22, 8733. https://doi.org/10.3390/s22228733

Anastasiev A, Kadone H, Marushima A, Watanabe H, Zaboronok A, Watanabe S, Matsumura A, Suzuki K, Matsumaru Y, Ishikawa E. Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides. Sensors. 2022; 22(22):8733. https://doi.org/10.3390/s22228733

Chicago/Turabian Style: Anastasiev, Alexey, Hideki Kadone, Aiki Marushima, Hiroki Watanabe, Alexander Zaboronok, Shinya Watanabe, Akira Matsumura, Kenji Suzuki, Yuji Matsumaru, and Eiichi Ishikawa. 2022. "Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides" Sensors 22, no. 22: 8733. https://doi.org/10.3390/s22228733

APA Style: Anastasiev, A., Kadone, H., Marushima, A., Watanabe, H., Zaboronok, A., Watanabe, S., Matsumura, A., Suzuki, K., Matsumaru, Y., & Ishikawa, E. (2022). Supervised Myoelectrical Hand Gesture Recognition in Post-Acute Stroke Patients with Upper Limb Paresis on Affected and Non-Affected Sides. Sensors, 22(22), 8733. https://doi.org/10.3390/s22228733