Sofigait—A Wireless Inertial Sensor-Based Gait Sonification System

Abstract

1. Introduction

2. Materials and Methods

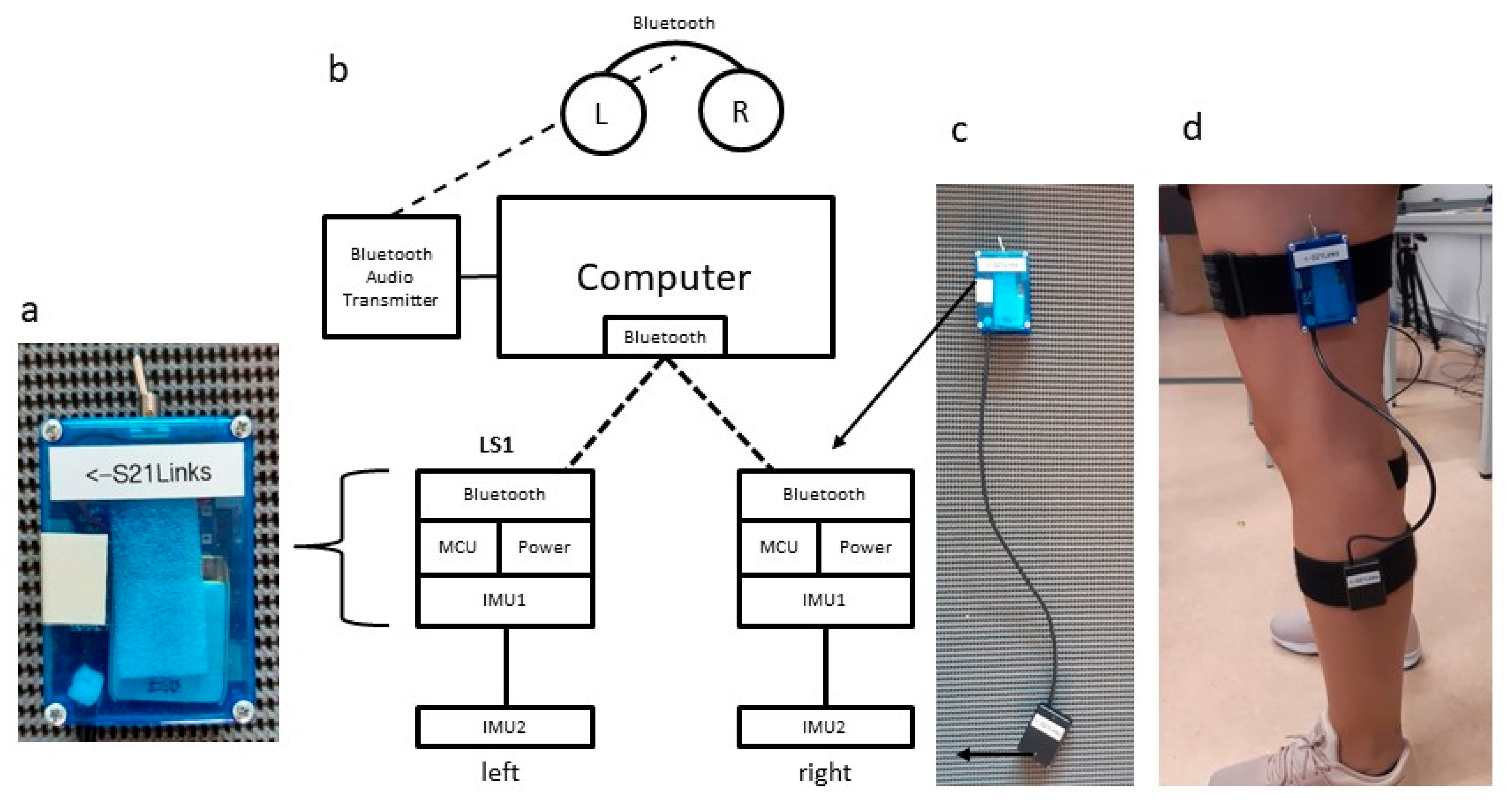

2.1. Sensor System (Sofigait)

2.1.1. Components and System Setup

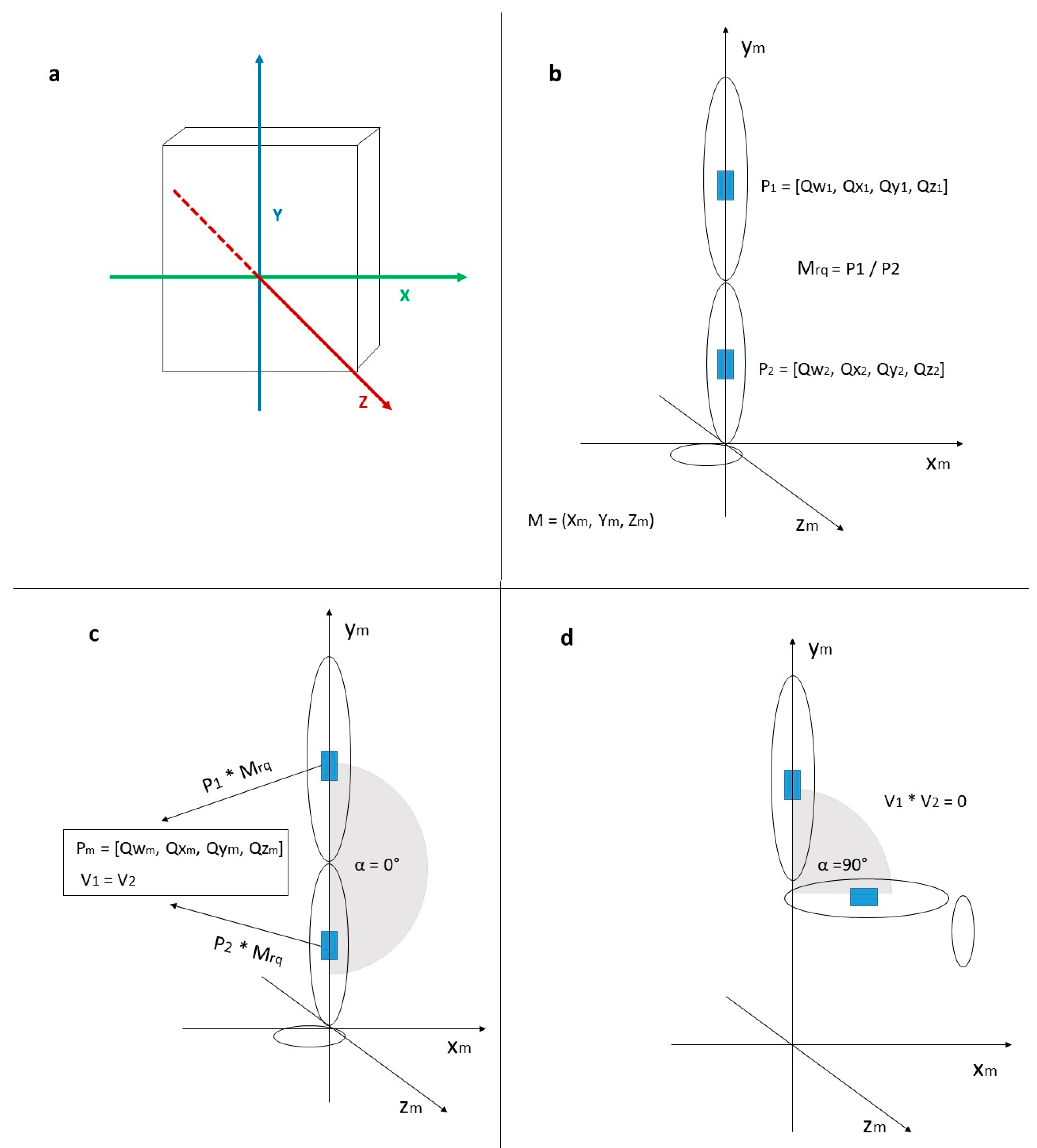

2.1.2. Data Acquisition and Transformation

2.1.3. Sonification

- (1) Soni 1.1: Continuous sine tone, swing phase accentuated from 35°, high pitch.

- (2) Soni 1.2: Continuous sine tone, swing phase accentuated from 35°, low pitch.

- (3) Soni 2.1: Continuous sine tone, stance phase accentuated up to 35°, high pitch.

- (4) Soni 2.2: Continuous sine tone, stance phase accentuated up to 35°, low pitch.
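The following Python sketch shows one way the four variants could be realized as a parameter-mapping sonification. A stream of angle samples drives a continuous sine tone, and either the swing or the stance segment is accentuated, with the split at 35°. This is an illustration under stated assumptions, not the Sofigait implementation. The angle is assumed to be knee flexion in degrees, and accentuation is modeled as a louder amplitude. The names `VARIANTS`, `gait_phase` and `synthesize`, the pitches (440 Hz for "high", 220 Hz for "low"), the gains, and the sensor and audio rates are all hypothetical placeholders. The pitch and accent assignment follows the sound-perception table.

```python
import math

# Threshold from the variant descriptions: swing from 35°, stance up to 35°.
PHASE_THRESHOLD_DEG = 35.0

# Hypothetical pitches and accent targets; the actual Sofigait values are not given here.
VARIANTS = {
    "Soni 1.1": {"accent": "swing", "freq_hz": 440.0},   # high pitch
    "Soni 1.2": {"accent": "swing", "freq_hz": 220.0},   # low pitch
    "Soni 2.1": {"accent": "stance", "freq_hz": 440.0},  # high pitch
    "Soni 2.2": {"accent": "stance", "freq_hz": 220.0},  # low pitch
}


def gait_phase(angle_deg):
    """Classify one angle sample (assumed knee flexion in degrees) as swing or stance."""
    return "swing" if angle_deg >= PHASE_THRESHOLD_DEG else "stance"


def synthesize(angles_deg, variant, sensor_rate=100, audio_rate=8000,
               base_gain=0.3, accent_gain=1.0):
    """Render a continuous sine tone; frames in the accentuated phase are louder.

    Each sensor sample controls audio_rate // sensor_rate audio samples. The phase
    accumulator runs across frames, so the tone stays continuous (no clicks) when
    the gain switches between phases.
    """
    cfg = VARIANTS[variant]
    samples_per_frame = audio_rate // sensor_rate
    step = 2.0 * math.pi * cfg["freq_hz"] / audio_rate
    phase = 0.0
    audio = []
    for angle in angles_deg:
        gain = accent_gain if gait_phase(angle) == cfg["accent"] else base_gain
        for _ in range(samples_per_frame):
            audio.append(gain * math.sin(phase))
            phase = (phase + step) % (2.0 * math.pi)
    return audio
```

For example, with `synthesize([10.0, 60.0], "Soni 1.1")` the first frame (10°, stance) plays at the base gain and the second frame (60°, swing) plays at the accent gain. The stance variants reverse this. Keeping a single running phase is what makes the tone "continuous" rather than a sequence of restarted beeps.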

2.2. Study Protocol

2.2.1. Participants

2.2.2. System Check

2.2.3. Participant Rating

2.2.4. Study Procedure

2.3. Data Analyses

- (1) Filtering of the Sofigait data (2nd-order low-pass Butterworth filter, cutoff 6 Hz);

- (2) Extracting 10 step cycles and cutting them into 10 single curves;

- (3) Computing the mean of all four events per system and side;

- (4) Normalizing all curves to 100 data points;

- (5) Computing mean curves (one curve per side per system);

- (6) Exporting the mean curves and mean events;

- (7) Reshaping the exported curves into four matrices (N × data points), one for each combination of system and side;

- (8) Statistical comparison of the curves.
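Steps (1), (2), (4) and (5) above can be sketched in plain Python as follows. This is a minimal illustration under several assumptions. The sampling rate (100 Hz here) and the cycle boundaries (indices of successive initial contacts, passed in as `contacts`) are hypothetical inputs. The filter is applied in a single causal pass; a forward-backward (zero-phase) pass is a common alternative in gait analysis, but it doubles the effective filter order. Time normalization is assumed to use linear interpolation. The function names are illustrative, and the statistical comparison in step (8) is not reproduced.

```python
import math
from statistics import mean


def butter2_lowpass(signal, fc=6.0, fs=100.0):
    """2nd-order Butterworth low-pass via the bilinear transform with pre-warping.

    fs is a hypothetical sampling rate; fc = 6 Hz follows step (1).
    """
    if not signal:
        return []
    k = math.tan(math.pi * fc / fs)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b1 = 2.0 * b0
    b2 = b0
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm
    # Start the filter state at the first sample (unit DC gain) to avoid a start-up jump.
    x1 = x2 = y1 = y2 = signal[0]
    out = []
    for x in signal:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def split_cycles(signal, contacts):
    """Cut the signal into single cycles between consecutive (hypothetical) contact indices."""
    return [signal[start:end + 1] for start, end in zip(contacts[:-1], contacts[1:])]


def time_normalize(curve, n_points=100):
    """Linearly resample one cycle to n_points samples (0 to 100 % of the cycle)."""
    last = len(curve) - 1
    out = []
    for i in range(n_points):
        pos = i * last / (n_points - 1)
        lo = int(pos)
        hi = min(lo + 1, last)
        frac = pos - lo
        out.append(curve[lo] * (1.0 - frac) + curve[hi] * frac)
    return out


def mean_curve(curves):
    """Point-wise mean of equally long curves (one mean curve per side and system)."""
    return [mean(values) for values in zip(*curves)]
```

With 11 contact indices, `split_cycles` returns the 10 single curves of step (2). For step (7), stacking the mean curves of N participants yields one N × 100 matrix per system and side. An example is `[mean_curve([time_normalize(c) for c in cycles]) for cycles in per_participant_cycles]`, where `per_participant_cycles` is a hypothetical list of each participant's cut cycles.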

3. Results

3.1. Measurement System Comparison between Vicon and Sofigait

3.1.1. Root-Mean-Square Error

3.1.2. Comparison of the Main Events between Vicon and Sofigait

3.1.3. Continuous Comparison of the Curves (SPM)

3.2. Sound Perception

4. Discussion

5. Limitations and Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Event | Left Min 1 | Left Max 1 | Left Min 2 | Left Max 2 | Right Min 1 | Right Max 1 | Right Min 2 | Right Max 2 |
|---|---|---|---|---|---|---|---|---|
| Mean range | 1.87 | 2.33 | 1.55 | 4.84 | 1.53 | 2.01 | 1.33 | 2.38 |
| Std. deviation | 1.00 | 1.09 | 0.61 | 2.28 | 0.43 | 0.86 | 0.51 | 1.15 |

| Measurement Cycle | Statistic | Left Min 1 | Left Max 1 | Left Min 2 | Left Max 2 | Right Min 1 | Right Max 1 | Right Min 2 | Right Max 2 |
|---|---|---|---|---|---|---|---|---|---|
| 1 to 5 (Person 1) | Mean | −4.23 | 19.28 | 3.47 | 68.35 | −4.58 | 18.42 | 1.67 | 65.63 |
| | Std. deviation | 1.25 | 1.44 | 0.91 | 3.58 | 1.65 | 1.82 | 1.41 | 2.84 |
| | Range | 3.39 | 3.70 | 2.48 | 9.34 | 4.33 | 4.58 | 3.90 | 7.53 |
| 1 to 5 (Person 2) | Mean | −12.02 | 17.56 | 5.58 | 69.11 | −10.42 | 16.17 | 1.95 | 64.23 |
| | Std. deviation | 2.65 | 2.58 | 2.02 | 4.11 | 1.60 | 0.72 | 0.49 | 2.24 |
| | Range | 7.74 | 7.44 | 5.71 | 12.09 | 4.96 | 2.22 | 1.44 | 5.75 |
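The four events in the Appendix A tables (Min 1, Max 1, Min 2, Max 2) and the per-person statistics over measurement cycles 1 to 5 can be illustrated with the sketch below. The search windows are assumptions made for illustration only, not the article's event definitions:

- Max 1 is the largest value in the first 30 % of a 100-point cycle.
- Min 1 is the smallest value up to Max 1.
- Max 2 is the largest value after the first 30 %.
- Min 2 is the smallest value between Max 1 and Max 2.

The standard deviation is assumed to be the sample standard deviation. `knee_events`, `summarize` and `early_fraction` are hypothetical names.

```python
from statistics import mean, stdev


def knee_events(curve, early_fraction=0.3):
    """Return Min 1, Max 1, Min 2 and Max 2 of one time-normalized cycle.

    early_fraction is a hypothetical window boundary separating the early
    peak (Max 1) from the later, larger peak (Max 2).
    """
    n = len(curve)
    split = max(1, int(early_fraction * n))
    if split >= n:
        raise ValueError("curve too short for the chosen window")
    i_max1 = max(range(split), key=curve.__getitem__)
    i_max2 = max(range(split, n), key=curve.__getitem__)
    return {
        "Min 1": min(curve[: i_max1 + 1]),
        "Max 1": curve[i_max1],
        "Min 2": min(curve[i_max1 : i_max2 + 1]),
        "Max 2": curve[i_max2],
    }


def summarize(values):
    """Mean, sample standard deviation and range of one event over several cycles."""
    return {
        "Mean": mean(values),
        "Std. deviation": stdev(values),
        "Range": max(values) - min(values),
    }
```

Applied to the five measurement cycles of one person, `summarize` yields one Mean / Std. deviation / Range triple per event and leg, which matches the layout of the per-person table.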


| Participant | RMSE Left | RMSE Right | Participant | RMSE Left | RMSE Right |
|---|---|---|---|---|---|
| 1 | 7.35 | 6.59 | 12 | 3.8 | 6.99 |
| 2 | 6.96 | 3.10 | 13 | 5.85 | 3.33 |
| 3 | 7.13 | 5.93 | 14 | 4.94 | 7.18 |
| 4 | 5.95 | 6.83 | 15 | 8.08 | 7.03 |
| 5 | 13.06 | 8.88 | 16 | 6.11 | 3.86 |
| 6 | 12.21 | 14.54 | 17 | 8.37 | 9.66 |
| 7 | 6.33 | 4.69 | 18 | 4.81 | 5.81 |
| 8 | 7.93 | 5.29 | 19 | 10.53 | 7.57 |
| 9 | 5.57 | 6.60 | 20 | 5.73 | 3.47 |
| 10 | 10.11 | 3.73 | 21 | 4.22 | 11.70 |
| 11 | 4.6 | 6.06 | 22 | 9.78 | 13.15 |
| Mean ± SD | 7.6 ± 2.6 | 6.9 ± 3.1 | | | |
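The per-participant RMSE values and the summary row can be outlined as below. This sketch assumes that the RMSE is computed point by point between the time-normalized mean curves of the two systems (Vicon and Sofigait) for one leg. It also assumes that the summary row is the mean and sample standard deviation across the 22 participants. `rmse`, `mean_sd` and `RIGHT_RMSE` are illustrative names.

```python
import math
from statistics import mean, stdev


def rmse(reference, estimate):
    """Root-mean-square error between two equally long curves (e.g. Vicon vs. Sofigait)."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("curves must be non-empty and of equal length")
    squared = [(r - e) ** 2 for r, e in zip(reference, estimate)]
    return math.sqrt(sum(squared) / len(squared))


def mean_sd(values):
    """Summary across participants as mean and sample standard deviation."""
    return mean(values), stdev(values)


# Right-leg RMSE values of participants 1 to 22, copied from the RMSE table.
RIGHT_RMSE = [6.59, 3.10, 5.93, 6.83, 8.88, 14.54, 4.69, 5.29, 6.60, 3.73, 6.06,
              6.99, 3.33, 7.18, 7.03, 3.86, 9.66, 5.81, 7.57, 3.47, 11.70, 13.15]
```

`mean_sd(RIGHT_RMSE)` returns approximately (6.91, 3.10). This is consistent with the reported 6.9 ± 3.1 for the right leg and supports reading the summary row as mean ± sample SD.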

| Version | Accentuation | Pitch | Dimension 1 (Information) | Dimension 2 (Sound Perception) |
|---|---|---|---|---|
| Soni 1.1 | Swing | high | 4.5 ± 1.1 | 3.9 ± 1.4 |
| Soni 1.2 | Swing | low | 4.7 ± 1.2 | 4.3 ± 1.4 |
| Soni 2.1 | Stance | high | 4.4 ± 1.2 | 3.4 ± 1.3 |
| Soni 2.2 | Stance | low | 4.3 ± 1.3 | 3.8 ± 1.6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Linnhoff, D.; Ploigt, R.; Mattes, K. Sofigait—A Wireless Inertial Sensor-Based Gait Sonification System. Sensors 2022, 22, 8782. https://doi.org/10.3390/s22228782