Nonlinear and Dotted Defect Detection with CNN for Multi-Vision-Based Mask Inspection

Abstract

1. Introduction

- The training and verification data were collected from real mask production lines.

- An efficient pre-processing method was developed so that very small dotted defects, which are otherwise difficult for a CNN to learn and infer, can be handled by the CNN.

- Various types of nonlinear and dotted defects were successfully detected by the proposed CNN-based method.
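This part of the text does not describe the pre-processing step itself. Purely as a hypothetical illustration of why such a step can help, the sketch below uses a generic small-object technique: tiling followed by nearest-neighbour upscaling. This is an assumption, not necessarily the authors' method. A defect only a few pixels wide occupies a tiny fraction of a full camera frame. Cutting the frame into tiles and enlarging each tile back to the network's input size increases that fraction. The function name `tile_and_upscale` and its `tile` and `scale` parameters are illustrative choices, and a single-channel (grayscale) frame is assumed.

```python
import numpy as np


def tile_and_upscale(image: np.ndarray, tile: int = 64, scale: int = 4) -> np.ndarray:
    """Split a 2-D grayscale image into non-overlapping tile x tile patches
    and upscale each patch by integer nearest-neighbour repetition.

    Hypothetical illustration only; not the paper's pre-processing.
    Returns an array of shape (n_tiles, tile * scale, tile * scale).
    """
    h, w = image.shape
    # Drop any border rows/columns that do not fill a complete tile (simplest choice).
    h_crop, w_crop = h - h % tile, w - w % tile
    img = image[:h_crop, :w_crop]
    # (rows*tile, cols*tile) -> (rows, tile, cols, tile) -> (rows, cols, tile, tile)
    tiles = img.reshape(h_crop // tile, tile, w_crop // tile, tile).swapaxes(1, 2)
    # Flatten the tile grid row by row: tile index = row * cols + col.
    tiles = tiles.reshape(-1, tile, tile)
    # Nearest-neighbour upscaling: every pixel becomes a scale x scale block.
    return tiles.repeat(scale, axis=1).repeat(scale, axis=2)


if __name__ == "__main__":
    frame = np.zeros((256, 256), dtype=np.uint8)
    frame[100:102, 130:132] = 255  # a 2 x 2-pixel "dot"
    patches = tile_and_upscale(frame, tile=64, scale=4)
    print(patches.shape, np.count_nonzero(patches))
```

For a 256 × 256 frame, `tile=64` and `scale=4` yield 16 patches of 256 × 256 pixels. At the same input size, the 2 × 2-pixel dot now covers 8 × 8 = 64 pixels instead of 4. Overlapping tiles would avoid splitting a defect across a tile border, at the cost of more patches.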

2. Problem Description

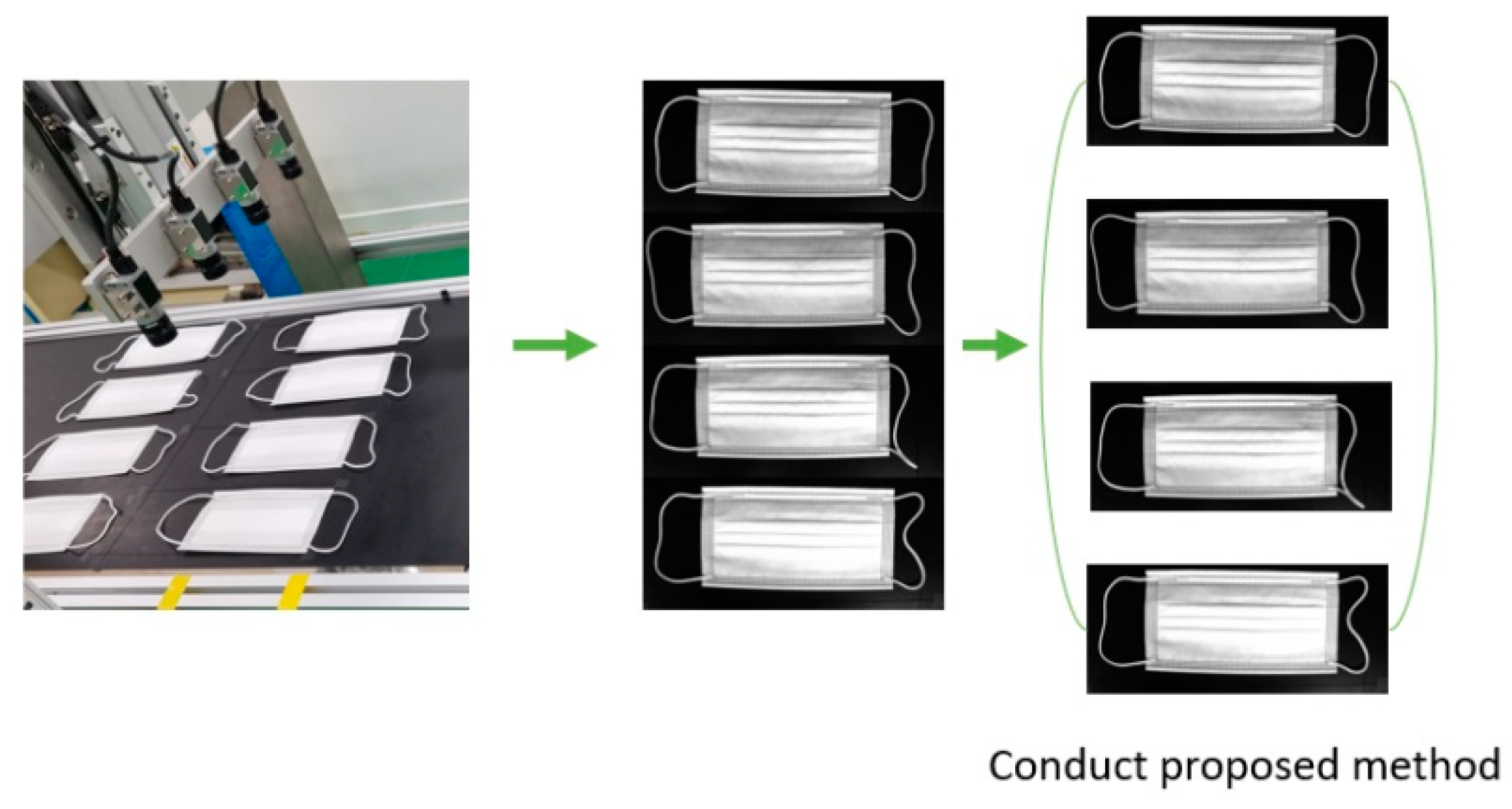

2.1. Multi-Vision-Based Mask Inspection

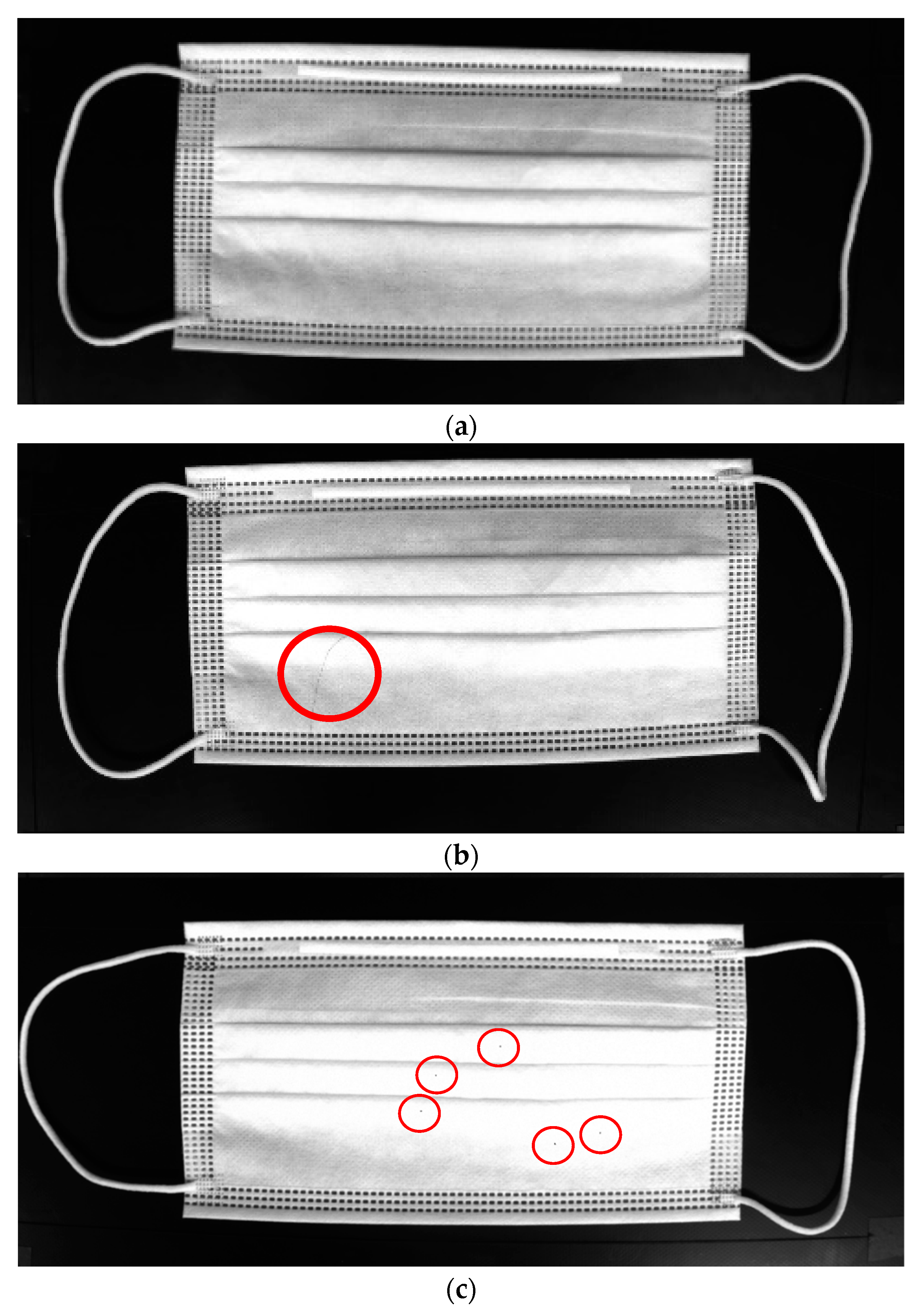

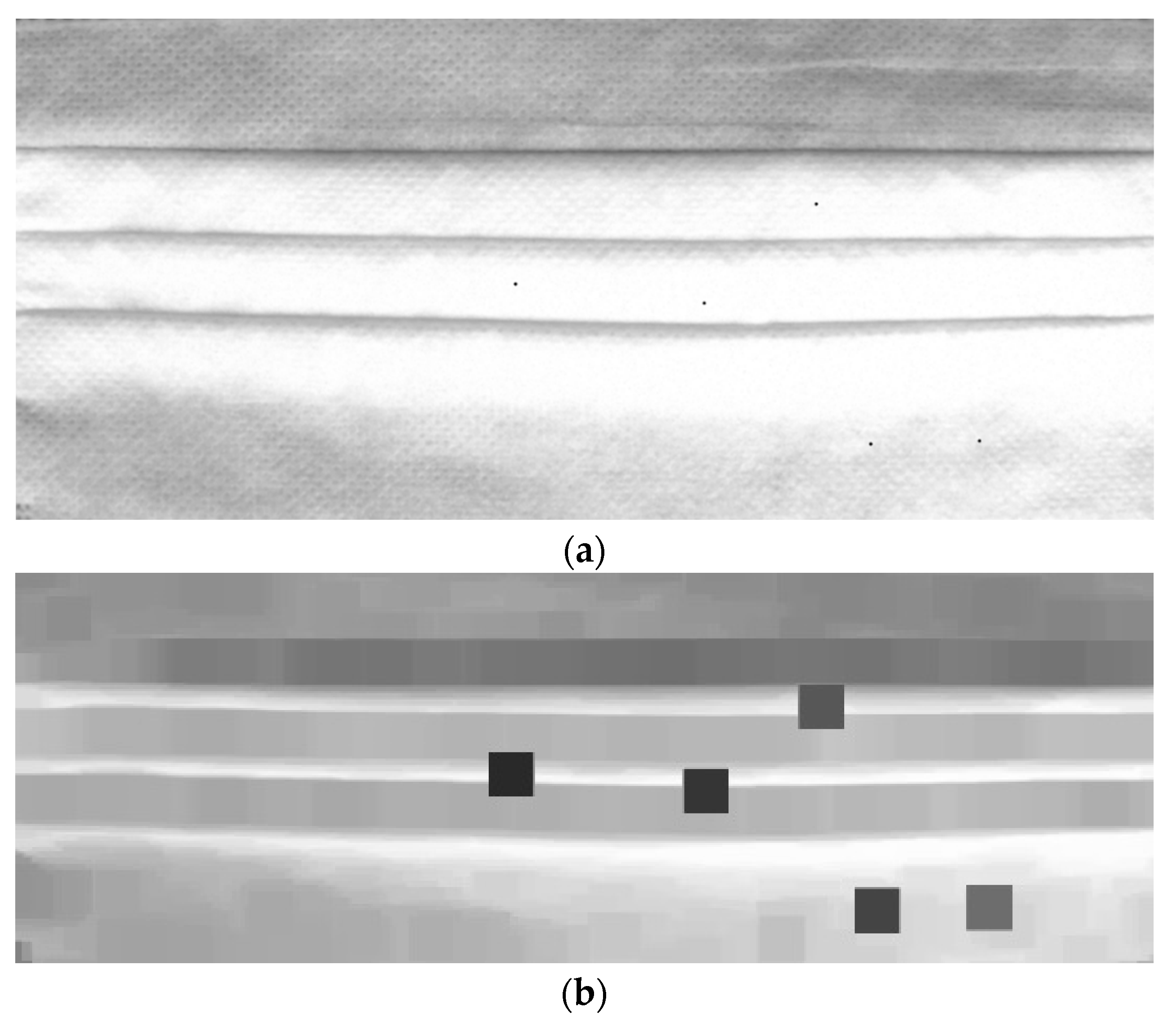

2.2. Mask Defect Detection

3. Methods

3.1. Data Acquisition

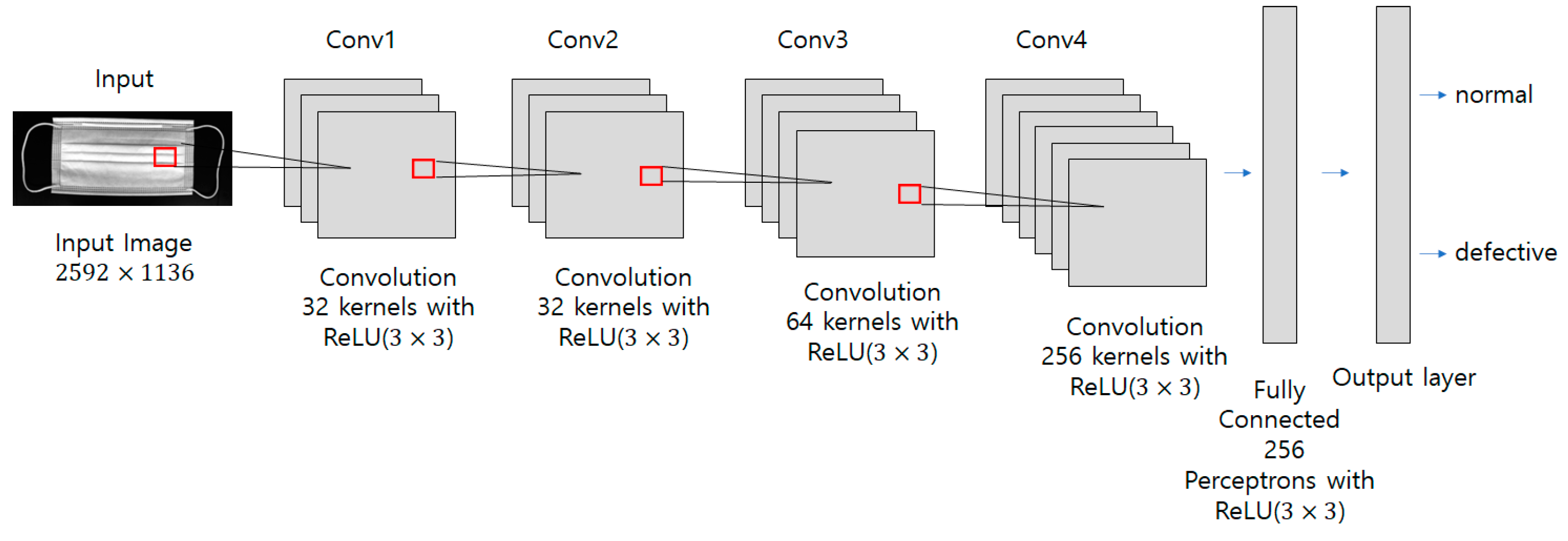

3.2. Nonlinear Defect Detection

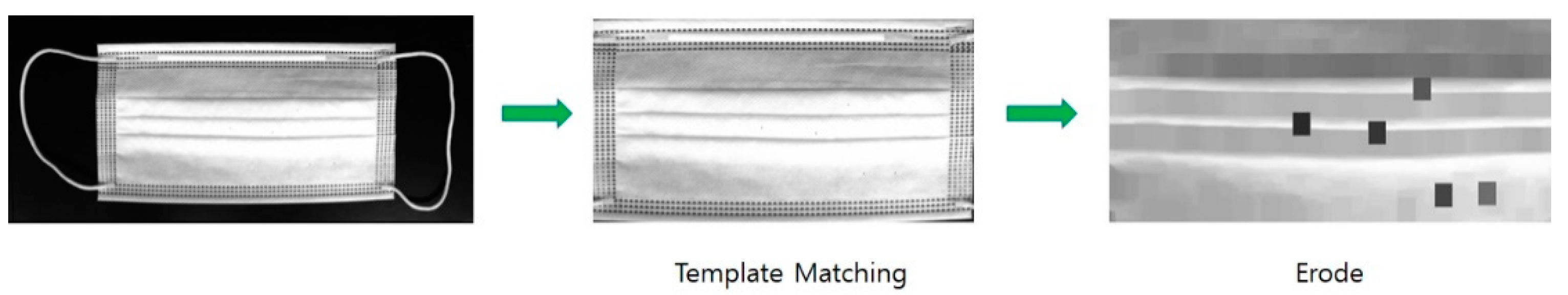

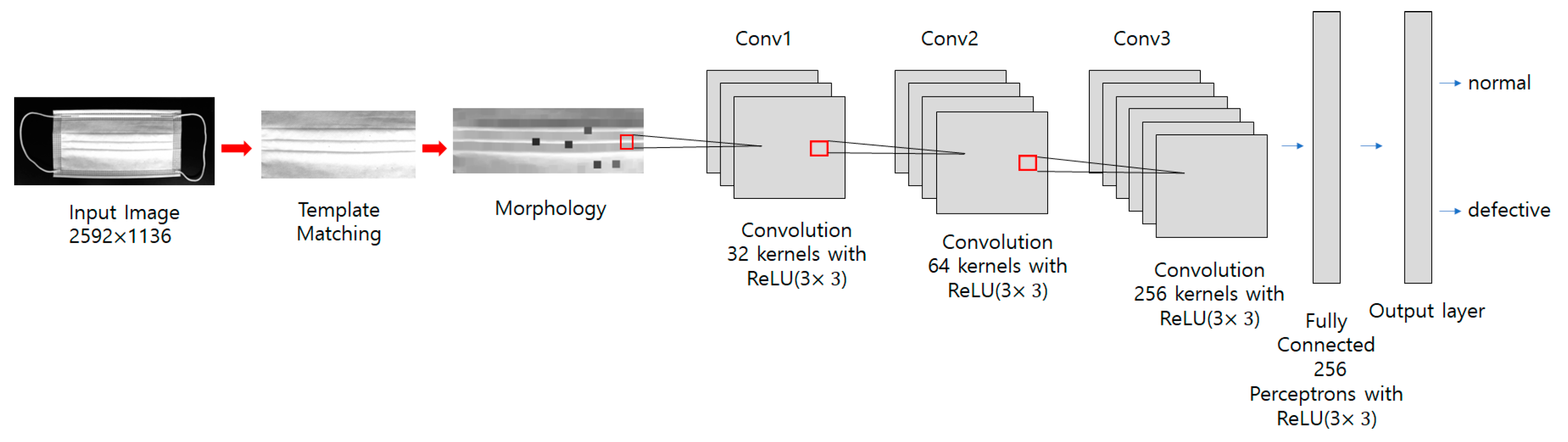

3.3. Dotted Defect Detection

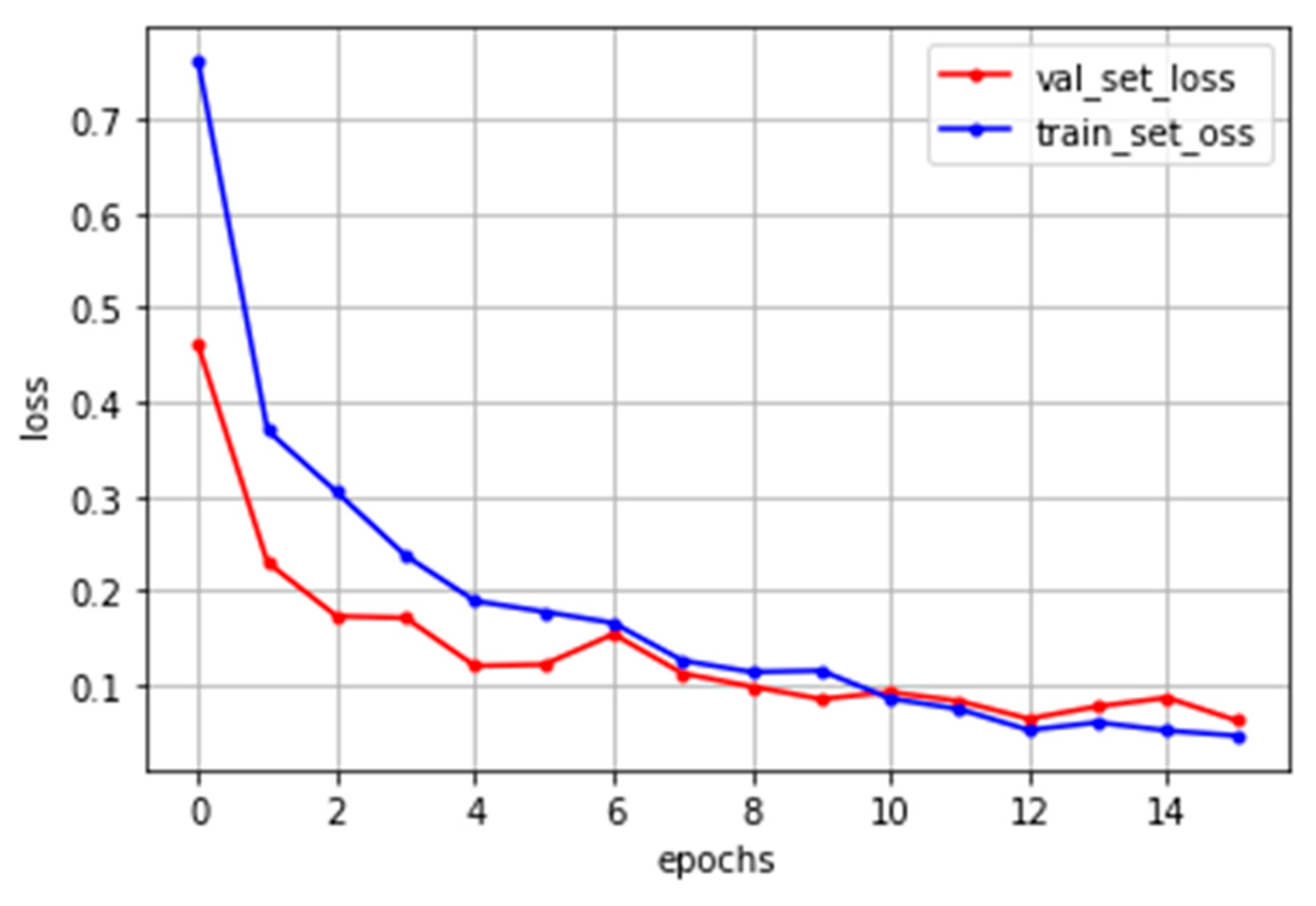

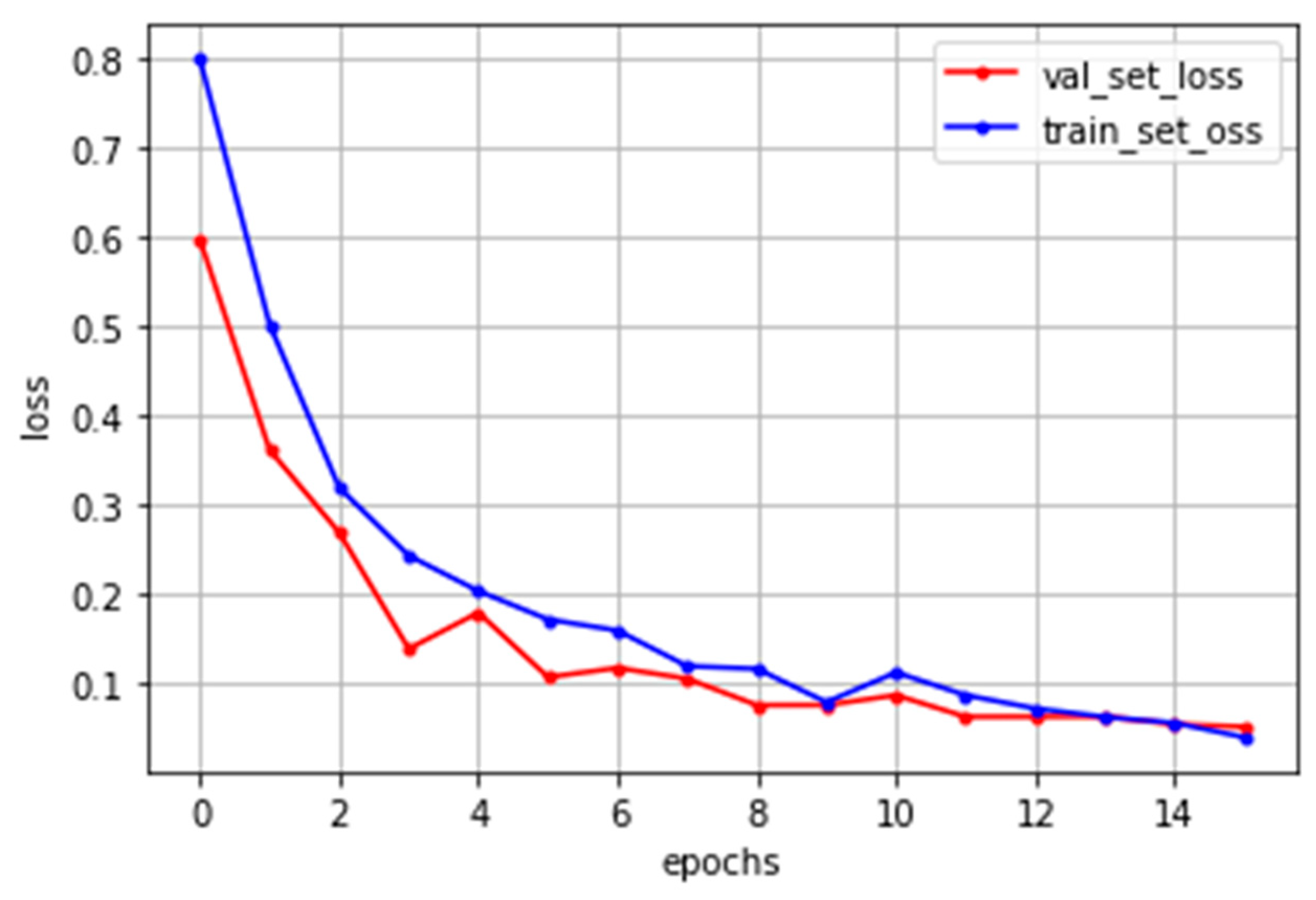

4. Experiments and Results

4.1. Mask Defect Detection

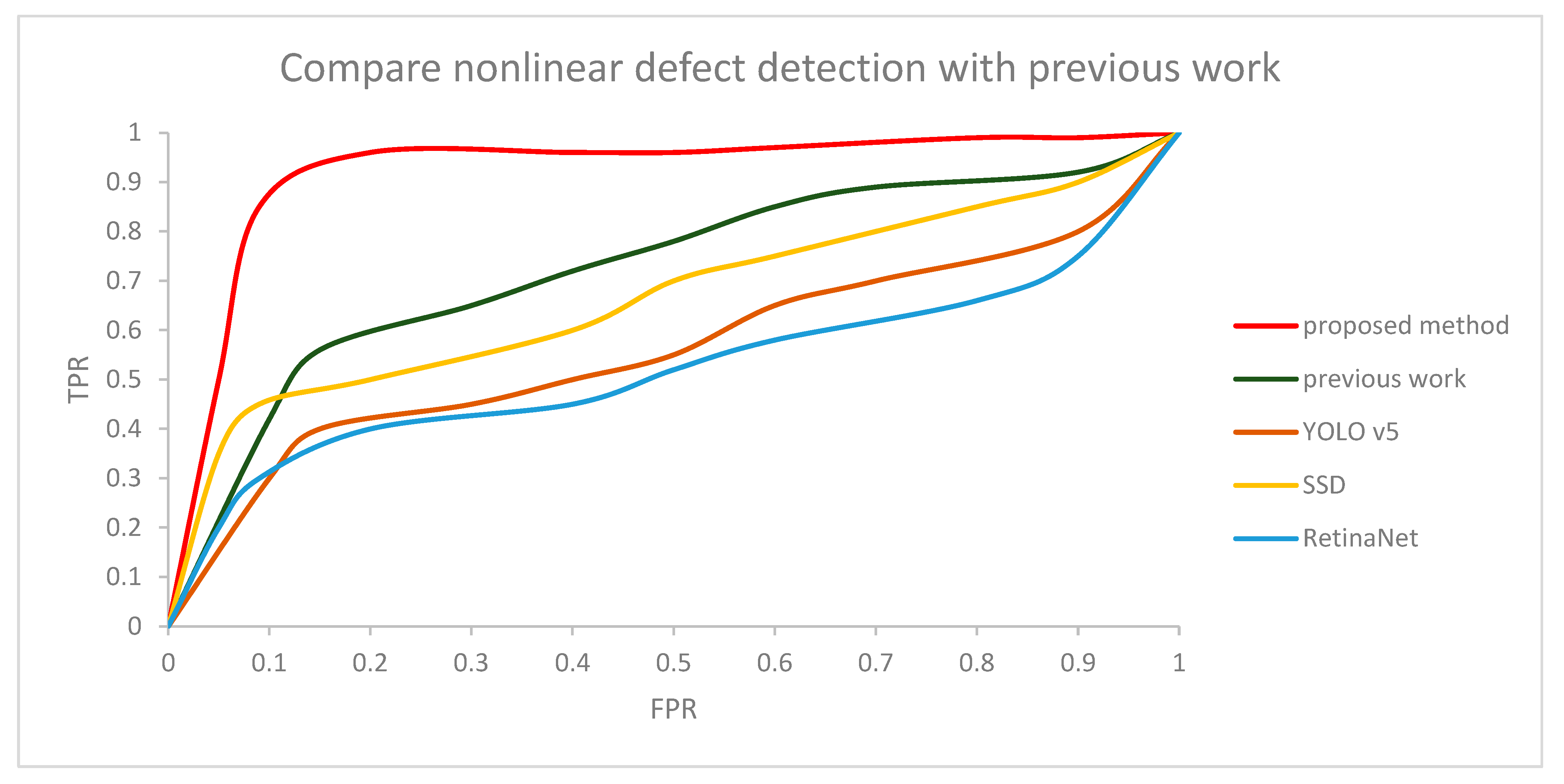

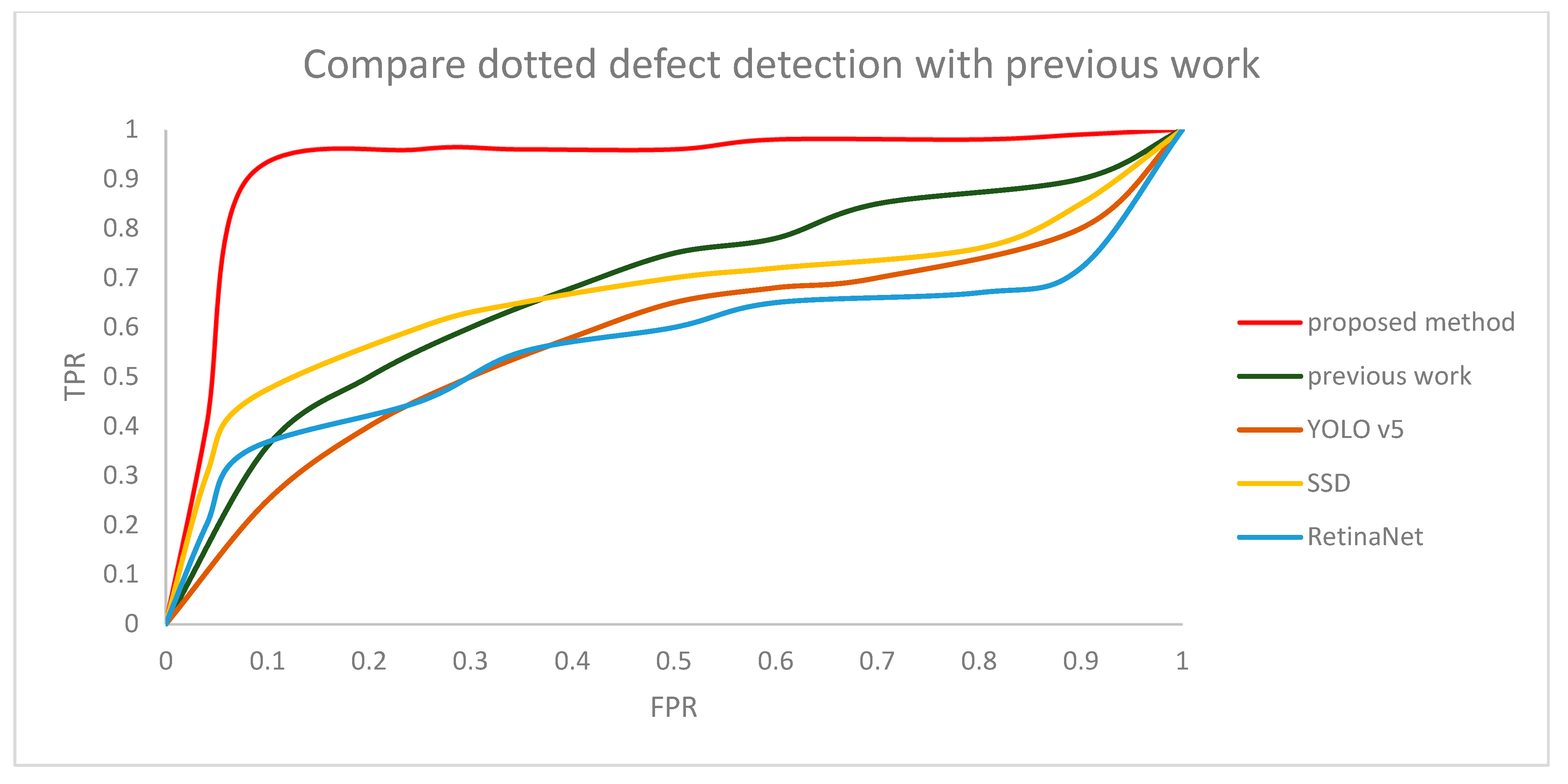

4.2. Quantitative Comparison Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Mukhopadhyay, P.; Chaudhuri, B.B. A survey of Hough Transform. Pattern Recognit. 2015, 48, 993–1010.

2. Duan, D.; Xie, M.; Mo, Q.; Han, Z.; Wan, Y. An improved Hough transform for line detection. In Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Taiyuan, China, 22–24 October 2010; pp. V2-354–V2-357.

3. Ye, H.; Shang, G.; Wang, L.; Zheng, M. A new method based on Hough transform for quick line and circle detection. In Proceedings of the 2015 8th International Conference on Biomedical Engineering and Informatics (BMEI), Shenyang, China, 14–16 October 2015; pp. 52–56.

4. Song, C.; Yang, F.; Li, P. Rotation Invariant Texture Measured by Local Binary Pattern for Remote Sensing Image Classification. In Proceedings of the 24th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 3–6.

5. Huang, D.; Shan, C.; Ardabilian, M.; Wang, Y.; Chen, L. Local Binary Patterns and Its Application to Facial Image Analysis: A Survey. IEEE Trans. Syst. Man Cybern. Part C 2011, 41, 765–781.

6. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

7. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

8. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2015, arXiv:1512.02325.

9. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002.

10. Guo, F.; Yang, J.; Chen, Y.; Yao, B. Research on image detection and matching based on SIFT features. In Proceedings of the 2018 3rd International Conference on Control and Robotics Engineering (ICCRE), Nagoya, Japan, 20–23 April 2018; pp. 130–134.

11. Satare, R.N.; Khot, S.R. Image matching with SIFT feature. In Proceedings of the 2018 2nd International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 19–20 January 2018; pp. 384–387.

12. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

13. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the European Conference on Computer Vision (ECCV 2006), Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3951.

14. Juan, L.; Oubong, G. SURF applied in panorama image stitching. In Proceedings of the 2010 2nd International Conference on Image Processing Theory, Tools and Applications, Paris, France, 7–10 July 2010; pp. 495–499.

15. Mayannavar, S.; Wali, U.; Aparanji, V. A Novel ANN Structure for Image Recognition. arXiv 2020, arXiv:2010.04586.

16. Madani, K. Artificial Neural Networks Based Image Processing & Pattern Recognition: From Concepts to Real-World Applications. In Proceedings of the 2008 First Workshops on Image Processing Theory, Tools and Applications, Sousse, Tunisia, 23–26 November 2008; pp. 1–9.

17. Pourghahestani, F.A.; Rashedi, E. Object detection in images using artificial neural network and improved binary gravitational search algorithm. In Proceedings of the 2015 4th Iranian Joint Congress on Fuzzy and Intelligent Systems (CFIS), Zahedan, Iran, 9–11 September 2015; pp. 1–4.

18. Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep learning for hyperspectral image classification: An overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

19. Chauhan, R.; Ghanshala, K.K.; Joshi, R.C. Convolutional Neural Network (CNN) for Image Detection and Recognition. In Proceedings of the 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC), Jalandhar, India, 15–17 December 2018; pp. 278–282.

20. Yang, S.B.; Lee, S.J. Improved CNN algorithm for object detection in large images. J. Korea Soc. Comput. Inf. 2020, 25, 45–53.

21. Galvez, R.L.; Bandala, A.A.; Dadios, E.P.; Vicerra, R.R.P.; Maningo, J.M.Z. Object Detection Using Convolutional Neural Networks. In Proceedings of the TENCON 2018 IEEE Region 10 Conference, Jeju Island, Republic of Korea, 28–31 October 2018; pp. 2023–2027.

22. Srivastava, S.; Divekar, A.V.; Anilkumar, C.; Naik, I.; Kulkarni, V.; Pattabiraman, V. Comparative analysis of deep learning image detection algorithms. J. Big Data 2021, 8, 66.

23. Sophian, A.; Tian, G.; Fan, M. Pulsed eddy current non-destructive testing and evaluation: A review. Chin. J. Mech. Eng. 2017, 30, 500–514.

24. Deng, W.; Bao, J.; Ye, B. Defect Image Recognition and Classification for Eddy Current Testing of Titanium Plate Based on Convolutional Neural Network. Complexity 2020, 2020, 8868190.

25. Bartels, K.A.; Fisher, J.L. Multifrequency eddy current image processing techniques for nondestructive evaluation. In Proceedings of the International Conference on Image Processing, Washington, DC, USA, 23–26 October 1995; Volume 1, pp. 486–489.

26. Ebayyeh, A.A.R.M.A.; Mousavi, A. A review and analysis of automatic optical inspection and quality monitoring methods in electronics industry. IEEE Access 2020, 8, 183192–183271.

27. Massaro, A.; Panarese, A.; Dipierro, G.; Cannella, E.; Galiano, A. Infrared Thermography and Image Processing applied on Weldings Quality Monitoring. In Proceedings of the 2020 IEEE International Workshop on Metrology for Industry 4.0 & IoT, Virtual Conference, 3–5 June 2020; pp. 559–564.

28. Saragadam, V.; Dave, A.; Veeraraghavan, A.; Baraniuk, R. Thermal Image Processing via Physics-Inspired Deep Networks. arXiv 2021, arXiv:2108.07973.

29. Abend, K. Fully automated dye-penetrant inspection of automotive parts. J. Mater. Manuf. 1998, 107, 624–629.

30. Endramawan, T.; Sifa, A. Non Destructive Test Dye Penetrant and Ultrasonic on Welding SMAW Butt Joint with Acceptance Criteria ASME Standard. IOP Conf. Ser. Mater. Sci. Eng. 2018, 306, 012122.

31. Kalambe, P.; Ikhar, S.; Dhopte, V. Dye Penetrant Inspection of Turbine Blade Root Attachment. Int. J. Innov. Res. Technol. 2020, 6, 783–785.

32. Lee, H.; Lee, H. Average Blurring-Based Anomaly Detection for Vision-based Mask Inspection Systems. In Proceedings of the 2021 21st International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 12–15 October 2021; pp. 2144–2146.

| Related Works | Real Data | Inspection Method | Mask Application |
|---|---|---|---|
| [23,24] | O X | Eddy current | X X |
| [26,27] | O O | Thermography | X X |
| [29,30] | O X | Dye penetrant testing | X X |
| Proposed | O | Multi-vision system | O |

| Method | TP | FN | FP | TN | Recall | Precision | Accuracy |
|---|---|---|---|---|---|---|---|
| Proposed method | 300 | 0 | 1 | 299 | 1 | 0.99 | 99.8% |
| Previous work [32] | 256 | 44 | 63 | 237 | 0.85 | 0.8 | 82.1% |
| YOLO v5 | 198 | 102 | 88 | 212 | 0.66 | 0.69 | 68.3% |
| SSD | 223 | 73 | 70 | 230 | 0.75 | 0.76 | 75.5% |
| RetinaNet | 182 | 118 | 86 | 214 | 0.6 | 0.67 | 66% |

| Method | TP | FN | FP | TN | Recall | Precision | Accuracy |
|---|---|---|---|---|---|---|---|
| Proposed method | 299 | 1 | 0 | 300 | 0.99 | 1 | 99.8% |
| Previous work [32] | 244 | 56 | 89 | 211 | 0.81 | 0.73 | 75.8% |
| YOLO v5 | 206 | 94 | 119 | 181 | 0.68 | 0.63 | 64.5% |
| SSD | 229 | 71 | 97 | 203 | 0.76 | 0.7 | 72% |
| RetinaNet | 185 | 115 | 141 | 159 | 0.61 | 0.56 | 57.3% |
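Recall, precision, and accuracy in the comparison tables follow the standard confusion-matrix definitions:

- recall = TP / (TP + FN)
- precision = TP / (TP + FP)
- accuracy = (TP + TN) / (TP + FN + FP + TN)

The sketch below recomputes these metrics from the listed counts. The reported values appear to be truncated rather than rounded to two decimals. For example, 300/301 ≈ 0.997 is listed as 0.99, and 182/268 ≈ 0.679 is listed as 0.67. The sketch therefore truncates as well. The names `Confusion`, `truncate`, and `metrics` are illustrative and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion-matrix counts for one detector (illustrative names)."""
    tp: int  # defective samples flagged as defective
    fn: int  # defective samples missed
    fp: int  # normal samples wrongly flagged as defective
    tn: int  # normal samples correctly passed


def truncate(num: int, den: int, places: int) -> float:
    """Return num/den truncated (not rounded) to `places` decimals.

    Integer floor division keeps the truncation exact and avoids
    float artefacts such as 0.29 * 100 == 28.999999999999996.
    """
    scale = 10 ** places
    return (num * scale // den) / scale


def metrics(c: Confusion) -> dict:
    """Recall, precision, and accuracy (in percent) from confusion counts.

    Raises ZeroDivisionError if a denominator is zero, e.g. when the
    detector flags no sample at all (TP + FP == 0).
    """
    total = c.tp + c.fn + c.fp + c.tn
    return {
        "recall": truncate(c.tp, c.tp + c.fn, 2),                  # TP / (TP + FN)
        "precision": truncate(c.tp, c.tp + c.fp, 2),               # TP / (TP + FP)
        "accuracy_pct": truncate(100 * (c.tp + c.tn), total, 1),   # (TP + TN) / total, in %
    }


if __name__ == "__main__":
    # First row of the first comparison table ("Proposed method").
    print(metrics(Confusion(tp=300, fn=0, fp=1, tn=299)))
    # prints {'recall': 1.0, 'precision': 0.99, 'accuracy_pct': 99.8}
```

Using exact integer arithmetic instead of `round()` reproduces the table's two-decimal convention. It also keeps a value like 0.9967 from turning into 1.0, which would hide the single false positive.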

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Woo, J.; Lee, H. Nonlinear and Dotted Defect Detection with CNN for Multi-Vision-Based Mask Inspection. Sensors 2022, 22, 8945. https://doi.org/10.3390/s22228945
