Augmented Hearing of Auditory Safety Cues for Construction Workers: A Systematic Literature Review

Abstract

1. Introduction

2. Research Background

2.1. Construction Hazards

2.2. Significant Role of Auditory Situational Awareness for Construction Safety

3. Methodology

3.1. Step 1: Define the Research Scope

3.2. Step 2: Literature Search

3.3. Step 3: Content Analysis

4. Results

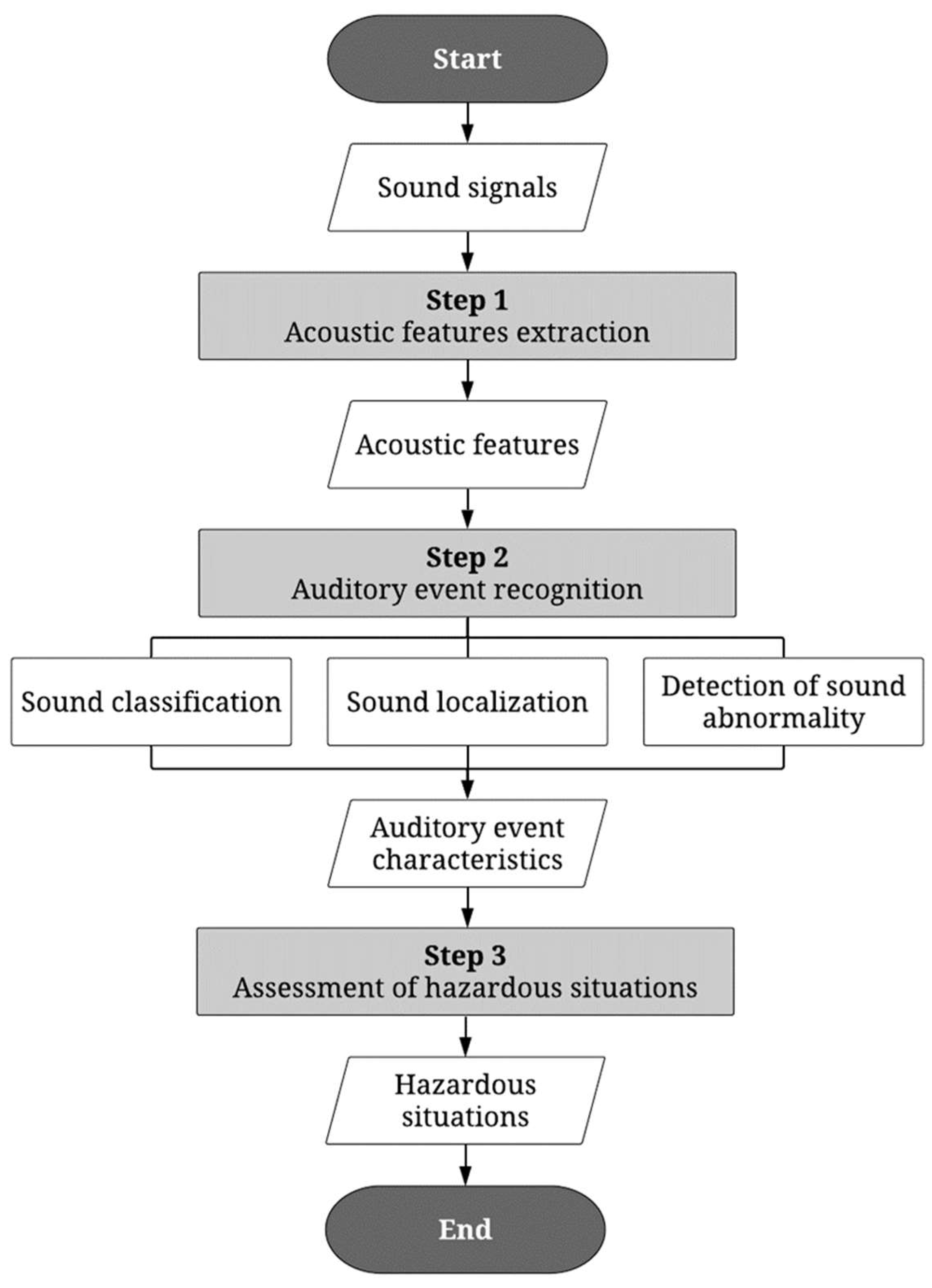

4.1. Audio Signal Processing Applications and Methods

4.1.1. Application Contexts of Auditory Surveillance

4.1.2. Principles of Audio-Based Hazard Detection

4.1.3. AI-Based Sound Classification

Feature Extraction

| Acoustic Features | Feature Set | References |
|---|---|---|
| Cepstral features | Mel-Frequency Cepstral Coefficients (MFCC) | [11,12,13,15,17,24,27,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67] |
| | Linear Prediction Coefficients (LPC) | [24,61,63,68] |
| | Linear Predictive Cepstral Coefficients (LPCC) | [63,68] |
| | Log Frequency Cepstral Coefficients (LFCC) | [68] |
| | Gammatone Frequency Cepstral Coefficients (GFCC) | [61] |
| | Perceptual Linear Prediction Coefficients (PLP) | [24] |
| Spectral features | Spectral centroid | [27,44,62,63,65,66] |
| | Spectral roll-off | [17,27,44,63,65,66] |
| | Spectral flux | [44,63,65,66] |
| | Mel spectrum | [48,49,52,53,60] |
| | Spectral moments | [17] |
| | Spectral slope | [17] |
| | Spectral decrease | [17] |
| | Modulation spectral features | [70] |
| | Spectrogram | [71] |
| Energy features | Short-Time Energy (STE) | [44,62,63,65,66] |
| | Discrete Wavelet Transform Coefficients (DWTC) | [27] |
| Temporal features | Zero Crossing Rate (ZCR) | [15,17,27,44,62,63,65,66,68] |
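Several of the temporal, energy and spectral features in the table above are straightforward to compute from short frames. The minimal sketch below assumes NumPy, 512-sample frames with a 256-sample hop and a Hann window (choices made for illustration, not taken from the reviewed studies). It computes zero crossing rate, short-time energy, spectral centroid, spectral roll-off (at 85% of the magnitude) and spectral flux per frame. Definitions differ between papers, for example roll-off is sometimes computed on power rather than magnitude, so treat this as an illustration rather than the formulation of any cited work. The helper names `frame_signal` and `extract_features` are hypothetical.

```python
import numpy as np


def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames, one frame per row."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]


def extract_features(x, sr, frame_len=512, hop=256, rolloff_pct=0.85):
    """Return frame-level ZCR, STE, spectral centroid, roll-off and flux."""
    frames = frame_signal(np.asarray(x, dtype=float), frame_len, hop)

    # Temporal: fraction of adjacent sample pairs whose sign differs.
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)

    # Energy: mean squared amplitude of each frame.
    ste = np.mean(frames ** 2, axis=1)

    # Spectral: magnitude spectrum of each Hann-windowed frame.
    mag = np.abs(np.fft.rfft(frames * np.hanning(frame_len), axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sr)
    total = mag.sum(axis=1) + 1e-12

    # Centroid: magnitude-weighted mean frequency in Hz.
    centroid = (mag @ freqs) / total

    # Roll-off: lowest frequency below which rolloff_pct of the magnitude lies.
    cumulative = np.cumsum(mag, axis=1)
    rolloff = freqs[np.argmax(cumulative >= rolloff_pct * cumulative[:, -1:], axis=1)]

    # Flux: Euclidean distance between successive normalized spectra (0 for frame 0).
    norm = mag / total[:, None]
    flux = np.concatenate([[0.0], np.linalg.norm(np.diff(norm, axis=0), axis=1)])

    return {"zcr": zcr, "ste": ste, "centroid": centroid,
            "rolloff": rolloff, "flux": flux}


# Toy check: 1 s of a 1 kHz tone versus white noise, both sampled at 16 kHz.
sr = 16000
t = np.arange(sr) / sr
tone_feats = extract_features(np.sin(2 * np.pi * 1000 * t), sr)
noise_feats = extract_features(np.random.default_rng(0).standard_normal(sr), sr)
```

On the 1 kHz test tone the centroid sits close to 1000 Hz and the zero crossing rate is roughly 0.12 (two crossings per cycle at 16 samples per cycle), while white noise gives a much higher value of both, which illustrates how such features can separate tonal signals from broadband noise.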

Training of Sound Classification Models

4.1.4. Sound Localization

4.1.5. Sound Abnormality Detection

4.2. Audio-Based Surveillance in Construction

4.2.1. Feasibility of Implementing Audio-Based Hazard Detection in Construction

4.2.2. State-of-the-Art Research in Auditory Signal Processing for Construction

5. Discussions on Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Deshaies, P.; Martin, R.; Belzile, D.; Fortier, P.; Laroche, C.; Leroux, T.; Nélisse, H.; Girard, S.-A.; Arcand, R.; Poulin, M.; et al. Noise as an explanatory factor in work-related fatality reports. Noise Health 2015, 17, 294–299.
2. Fang, D.; Zhao, C.; Zhang, M. A Cognitive Model of Construction Workers’ Unsafe Behaviors. J. Constr. Eng. Manag. 2016, 142, 4016039.
3. Morata, T.C.; Themann, C.L.; Randolph, R.; Verbsky, B.L.; Byrne, D.C.; Reeves, E.R. Working in Noise with a Hearing Loss: Perceptions from Workers, Supervisors, and Hearing Conservation Program Managers. Ear Hear. 2005, 26, 529–545.
4. Awolusi, I.; Marks, E.; Hallowell, M. Wearable technology for personalized construction safety monitoring and trending: Review of applicable devices. Autom. Constr. 2018, 85, 96–106.
5. Awolusi, I.; Nnaji, C.; Marks, E.; Hallowell, M. Enhancing Construction Safety Monitoring through the Application of Internet of Things and Wearable Sensing Devices: A Review. In Proceedings of Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 530–538.
6. Golparvar-Fard, M.; Heydarian, A.; Niebles, J.C. Vision-based action recognition of earthmoving equipment using spatio-temporal features and support vector machine classifiers. Adv. Eng. Inform. 2013, 27, 652–663.
7. Gong, J.; Caldas, C.H. Computer Vision-Based Video Interpretation Model for Automated Productivity Analysis of Construction Operations. J. Comput. Civ. Eng. 2010, 24, 252–263.
8. Gong, J.; Caldas, C.H.; Gordon, C. Learning and classifying actions of construction workers and equipment using Bag-of-Video-Feature-Words and Bayesian network models. Adv. Eng. Inform. 2011, 25, 771–782.
9. Akhavian, R.; Behzadan, A.H. Construction equipment activity recognition for simulation input modeling using mobile sensors and machine learning classifiers. Adv. Eng. Inform. 2015, 29, 867–877.
10. Ahn, C.R.; Lee, S.; Peña-Mora, F. Application of Low-Cost Accelerometers for Measuring the Operational Efficiency of a Construction Equipment Fleet. J. Comput. Civ. Eng. 2015, 29, 4014042.
11. Salamon, J.; Jacoby, C.; Bello, J.P. A Dataset and Taxonomy for Urban Sound Research. In Proceedings of the 2014 ACM Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 1041–1044.
12. Salamon, J.; Bello, J.P. Deep Convolutional Neural Networks and Data Augmentation for Environmental Sound Classification. IEEE Signal Process. Lett. 2017, 24, 279–283.
13. Cramer, J.; Wu, H.-H.; Salamon, J.; Bello, J.P. Look, Listen, and Learn More: Design Choices for Deep Audio Embeddings. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3852–3856.
14. Gemmeke, J.F.; Ellis, D.P.W.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An Ontology and Human-Labeled Dataset for Audio Events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.
15. Piczak, K.J. Environmental Sound Classification with Convolutional Neural Networks. In Proceedings of the 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6.
16. Clavel, C.; Ehrette, T.; Richard, G. Events Detection for an Audio-Based Surveillance System. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 5–6 July 2005; pp. 1306–1309.
17. Valenzise, G.; Gerosa, L.; Tagliasacchi, M.; Antonacci, F.; Sarti, A. Scream and Gunshot Detection and Localization for Audio-Surveillance Systems. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007; pp. 21–26.
18. Vasilescu, I.; Devillers, L.; Clavel, C.; Ehrette, T. Fiction Database for Emotion Detection in Abnormal Situations. In Proceedings of the 31st International Symposium on Computer Architecture (ISCA 2004), Munich, Germany, 19–23 June 2004; pp. 2277–2280.
19. Ntalampiras, S.; Potamitis, I.; Fakotakis, N. An Adaptive Framework for Acoustic Monitoring of Potential Hazards. EURASIP J. Audio Speech Music Process. 2009, 2009, 594103.
20. Choi, W.; Rho, J.; Han, D.K.; Ko, H. Selective Background Adaptation Based Abnormal Acoustic Event Recognition for Audio Surveillance. In Proceedings of the 2012 IEEE Ninth International Conference on Advanced Video and Signal-Based Surveillance, Beijing, China, 18–21 September 2012; pp. 118–123.
21. Carletti, V.; Foggia, P.; Percannella, G.; Saggese, A.; Strisciuglio, N.; Vento, M. Audio Surveillance Using a Bag of Aural Words Classifier. In Proceedings of the 2013 10th IEEE International Conference on Advanced Video and Signal Based Surveillance, Krakow, Poland, 27–30 August 2013; pp. 81–86.
22. Chan, C.-F.; Yu, E.W.M. An Abnormal Sound Detection and Classification System for Surveillance Applications. In Proceedings of the 2010 18th European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; pp. 1851–1855.
23. Lee, Y.; Han, D.; Ko, H. Acoustic Signal Based Abnormal Event Detection in Indoor Environment Using Multiclass Adaboost. IEEE Trans. Consum. Electron. 2013, 59, 615–622.
24. Rouas, J.-L.; Louradour, J.; Ambellouis, S. Audio Events Detection in Public Transport Vehicle. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; pp. 733–738.
25. Radhakrishnan, R.; Divakaran, A. Systematic Acquisition of Audio Classes for Elevator Surveillance. In Proceedings of the Image and Video Communications and Processing 2005, San Jose, CA, USA, 12–20 January 2005; Said, A., Apostolopoulos, J.G., Eds.; SPIE: Bellingham, WA, USA, 2005; Volume 5685, pp. 64–71.
26. Clavel, C.; Vasilescu, I.; Devillers, L.; Richard, G.; Ehrette, T. Fear-Type Emotion Recognition for Future Audio-Based Surveillance Systems. Speech Commun. 2008, 50, 487–503.
27. Vacher, M.; Istrate, D.; Besacier, L.; Serignat, J.-F.; Castelli, E. Sound Detection and Classification for Medical Telesurvey. In Proceedings of the 2nd Conference on Biomedical Engineering, Innsbruck, Austria, 16–18 February 2004.
28. Adnan, S.M.; Irtaza, A.; Aziz, S.; Ullah, M.O.; Javed, A.; Mahmood, M.T. Fall Detection through Acoustic Local Ternary Patterns. Appl. Acoust. 2018, 140, 296–300.
29. Härmä, A.; McKinney, M.F.; Skowronek, J. Automatic Surveillance of the Acoustic Activity in Our Living Environment. In Proceedings of the IEEE International Conference on Multimedia and Expo, ICME 2005, Amsterdam, The Netherlands, 6–8 July 2005; Volume 2005, pp. 634–637.
30. Sherafat, B.; Rashidi, A.; Lee, Y.; Ahn, C. A Hybrid Kinematic-Acoustic System for Automated Activity Detection of Construction Equipment. Sensors 2019, 19, 4286.
31. Glowacz, A. Acoustic Based Fault Diagnosis of Three-Phase Induction Motor. Appl. Acoust. 2018, 137, 82–89.
32. Koizumi, Y.; Saito, S.; Uematsu, H.; Kawachi, Y.; Harada, N. Unsupervised Detection of Anomalous Sound Based on Deep Learning and the Neyman–Pearson Lemma. IEEE/ACM Trans. Audio Speech Lang. Process. 2019, 27, 212–224.
33. Marks, E.D.; Teizer, J. Method for Testing Proximity Detection and Alert Technology for Safe Construction Equipment Operation. Constr. Manag. Econ. 2013, 31, 636–646.
34. Sherafat, B.; Ahn, C.R.; Akhavian, R.; Behzadan, A.H.; Golparvar-Fard, M.; Kim, H.; Lee, Y.-C.; Rashidi, A.; Azar, E.R. Automated Methods for Activity Recognition of Construction Workers and Equipment: State-of-the-Art Review. J. Constr. Eng. Manag. 2020, 146, 3120002.
35. Perlman, A.; Sacks, R.; Barak, R. Hazard recognition and risk perception in construction. Saf. Sci. 2014, 64, 22–31.
36. Hinze, J.W.; Teizer, J. Visibility-related fatalities related to construction equipment. Saf. Sci. 2011, 49, 709–718.
37. Schultheis, U. Human Factors: Blind Spots in Heavy Construction Machinery. Available online: https://www.jdsupra.com (accessed on 23 November 2022).
38. Brungart, D.S.; Cohen, J.; Cord, M.; Zion, D.; Kalluri, S. Assessment of auditory spatial awareness in complex listening environments. J. Acoust. Soc. Am. 2014, 136, 1808–1820.
39. Vinnik, E.; Itskov, P.M.; Balaban, E. Individual Differences in Sound-in-Noise Perception Are Related to the Strength of Short-Latency Neural Responses to Noise. PLoS ONE 2011, 6, e17266.
40. Engdahl, B.; Tambs, K. Occupation and the risk of hearing impairment - results from the Nord-Trøndelag study on hearing loss. Scand. J. Work. Environ. Health 2009, 36, 250–257.
41. Rigters, S.C.; Metselaar, M.; Wieringa, M.H.; De Jong, R.J.B.; Hofman, A.; Goedegebure, A. Contributing Determinants to Hearing Loss in Elderly Men and Women: Results from the Population-Based Rotterdam Study. Audiol. Neurotol. 2016, 21, 10–15.
42. Kwon, N.; Park, M.; Lee, H.-S.; Ahn, J.; Shin, M. Construction Noise Management Using Active Noise Control Techniques. J. Constr. Eng. Manag. 2016, 142, 4016014.
43. Gao, X.; Pishdad-Bozorgi, P. BIM-Enabled Facilities Operation and Maintenance: A Review. Adv. Eng. Inform. 2019, 39, 227–247.
44. Han, W.; Coutinho, E.; Ruan, H.; Li, H.; Schuller, B.; Yu, X.; Zhu, X. Semi-Supervised Active Learning for Sound Classification in Hybrid Learning Environments. PLoS ONE 2016, 11, e0162075.
45. Ma, L.; Milner, B.; Smith, D. Acoustic Environment Classification. ACM Trans. Speech Lang. Process. 2006, 3, 1–22.
46. Logan, B.; Salomon, A. A Music Similarity Function Based on Signal Analysis. In Proceedings of the IEEE International Conference on Multimedia and Expo, Tokyo, Japan, 22–25 August 2001; pp. 745–748.
47. Cotton, C.V.; Ellis, D.P.W. Spectral vs. Spectro-Temporal Features for Acoustic Event Detection. In Proceedings of the 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New York, NY, USA, 16–19 October 2011; pp. 69–72.
48. Stowell, D.; Plumbley, M. Automatic Large-Scale Classification of Bird Sounds Is Strongly Improved by Unsupervised Feature Learning. PeerJ 2014, 2, e488.
49. Salamon, J.; Bello, J.P. Unsupervised Feature Learning for Urban Sound Classification. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 19–24 April 2015; pp. 171–175.
50. McFee, B.; Humphrey, E.J.; Bello, J.P. A Software Framework for Musical Data Augmentation. In Proceedings of the ISMIR, Malaga, Spain, 26–30 October 2015; pp. 248–254.
51. Chaudhuri, S.; Raj, B. Unsupervised Hierarchical Structure Induction for Deeper Semantic Analysis of Audio. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 833–837.
52. Salamon, J.; Bello, J.P. Feature Learning with Deep Scattering for Urban Sound Analysis. In Proceedings of the 2015 23rd European Signal Processing Conference (EUSIPCO), Nice, France, 31 August–4 September 2015; pp. 724–728.
53. Salamon, J.; Bello, J.P.; Farnsworth, A.; Robbins, M.; Keen, S.; Klinck, H.; Kelling, S. Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring. PLoS ONE 2016, 11, e0166866.
54. Bello, J. Grouping Recorded Music by Structural Similarity. In Proceedings of the 10th International Society for Music Information Retrieval Conference, ISMIR 2009, Kobe, Japan, 26–30 October 2009; Kobe International Conference Center: Kobe, Japan; pp. 531–536.
55. Salamon, J.; Bello, J.P.; Farnsworth, A.; Kelling, S. Fusing Shallow and Deep Learning for Bioacoustic Bird Species Classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 141–145.
56. Bello, J.P.; Mydlarz, C.; Salamon, J. Sound Analysis in Smart Cities. In Computational Analysis of Sound Scenes and Events; Springer International Publishing: Cham, Switzerland, 2018; pp. 373–397.
57. Alsouda, Y.; Pllana, S.; Kurti, A. A Machine Learning Driven IoT Solution for Noise Classification in Smart Cities. In Proceedings of the 21st Euromicro Conference on Digital System Design (DSD 2018), Workshop on Machine Learning Driven Technologies and Architectures for Intelligent Internet of Things (ML-IoT), Prague, Czech Republic, 29–31 August 2018.
58. Asgari, M.; Shafran, I.; Bayestehtashk, A. Inferring Social Contexts from Audio Recordings Using Deep Neural Networks. In Proceedings of the 2014 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), Reims, France, 21–24 September 2014; pp. 1–6.
59. Ravanelli, M.; Elizalde, B.; Ni, K.; Friedland, G. Audio Concept Classification with Hierarchical Deep Neural Networks. In Proceedings of the 22nd European Signal Processing Conference, Lisbon, Portugal, 1–5 September 2014.
60. Gencoglu, O.; Virtanen, T.; Huttunen, H. Recognition of Acoustic Events Using Deep Neural Networks. In Proceedings of the 22nd European Signal Processing Conference, Lisbon, Portugal, 1–5 September 2014; pp. 506–510.
61. Janjua, Z.H.; Vecchio, M.; Antonini, M.; Antonelli, F. IRESE: An Intelligent Rare-Event Detection System Using Unsupervised Learning on the IoT Edge. Eng. Appl. Artif. Intell. 2019, 84, 41–50.
62. Salekin, A.; Ghaffarzadegan, S.; Feng, Z.; Stankovic, J. A Real-Time Audio Monitoring Framework with Limited Data for Constrained Devices. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 98–105.
63. Eronen, A.J.; Peltonen, V.T.; Tuomi, J.T.; Klapuri, A.P.; Fagerlund, S.; Sorsa, T.; Lorho, G.; Huopaniemi, J. Audio-Based Context Recognition. IEEE Trans. Audio Speech Lang. Process. 2006, 14, 321–329.
64. Kim, B.; Pardo, B. A Human-in-the-Loop System for Sound Event Detection and Annotation. ACM Trans. Interact. Intell. Syst. 2018, 8, 1–23.
65. Qian, K.; Zhang, Z.; Baird, A.; Schuller, B. Active learning for bird sound classification via a kernel-based extreme learning machine. J. Acoust. Soc. Am. 2017, 142, 1796–1804.
66. Qian, K.; Zhang, Z.; Baird, A.; Schuller, B. Active Learning for Bird Sounds Classification. Acta Acust. United Acust. 2017, 103, 361–364.
67. Shuyang, Z.; Heittola, T.; Virtanen, T. Active Learning for Sound Event Classification by Clustering Unlabeled Data. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 751–755.
68. Atrey, P.K.; Maddage, N.C.; Kankanhalli, M.S. Audio Based Event Detection for Multimedia Surveillance. In Proceedings of the 2006 IEEE International Conference on Acoustics Speech and Signal Processing, Toulouse, France, 14–19 May 2006.
69. Sephus, N.H.; Lanterman, A.D.; Anderson, D.V. Modulation Spectral Features: In Pursuit of Invariant Representations of Music with Application to Unsupervised Source Identification. J. New Music Res. 2014, 44, 58–70.
70. Wang, Y.; Mendez, A.E.M.; Cartwright, M.; Bello, J.P. Active Learning for Efficient Audio Annotation and Classification with a Large Amount of Unlabeled Data. In Proceedings of the ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 880–884.
71. Ito, A.; Aiba, A.; Ito, M.; Makino, S. Detection of Abnormal Sound Using Multi-Stage GMM for Surveillance Microphone. In Proceedings of the 2009 Fifth International Conference on Information Assurance and Security, Xi’an, China, 18–20 August 2009; Volume 1, pp. 733–736.
72. Cheng, C.F.; Rashidi, A.; Davenport, M.A.; Anderson, D. Audio Signal Processing for Activity Recognition of Construction Heavy Equipment. In Proceedings of the ISARC 2016, 33rd International Symposium on Automation and Robotics in Construction, Auburn, AL, USA, 18–21 July 2016; pp. 642–650.
73. Ellis, D.P.W.; Zeng, X.; McDermott, J.H. Classifying Soundtracks with Audio Texture Features. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 5880–5883.
74. Cheng, C.-F.; Rashidi, A.; Davenport, M.A.; Anderson, D.V. Activity analysis of construction equipment using audio signals and support vector machines. Autom. Constr. 2017, 81, 240–253.
75. Zhang, T.; Lee, Y.-C.; Scarpiniti, M.; Uncini, A. A supervised machine learning-based sound identification for construction activity monitoring and performance evaluation. In Proceedings of the Construction Research Congress, New Orleans, LA, USA, 2–4 April 2018; pp. 358–366.
76. Marchi, E.; Vesperini, F.; Eyben, F.; Squartini, S.; Schuller, B. A Novel Approach for Automatic Acoustic Novelty Detection Using a Denoising Autoencoder with Bidirectional LSTM Neural Networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 19–24 April 2015; pp. 1996–2000.
77. Principi, E.; Vesperini, F.; Squartini, S.; Piazza, F. Acoustic Novelty Detection with Adversarial Autoencoders. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 3324–3330.
78. Azizyan, M.; Choudhury, R.R. SurroundSense: Mobile Phone Localization Using Ambient Sound and Light. SIGMOBILE Mob. Comput. Commun. Rev. 2009, 13, 69–72.
79. Satoh, H.; Suzuki, M.; Tahiro, Y.; Morikawa, H. Ambient Sound-Based Proximity Detection with Smartphones. In Proceedings of the 11th ACM Conference on Embedded Networked Sensor Systems, Rome, Italy, 11–15 November 2013; Association for Computing Machinery.
80. Tarzia, S.P.; Dinda, P.A.; Dick, R.P.; Memik, G. Indoor Localization without Infrastructure Using the Acoustic Background Spectrum. In Proceedings of the MobiSys’11, Compilation Proceedings of the 9th International Conference on Mobile Systems, Applications and Services and Co-located Workshops, Washington, DC, USA, 28 June–1 July 2011.
81. Wirz, M.; Roggen, D.; Tröster, G. A Wearable, Ambient Sound-Based Approach for Infrastructureless Fuzzy Proximity Estimation. In Proceedings of the International Symposium on Wearable Computers (ISWC) 2010, Seoul, Republic of Korea, 10–13 October 2010; pp. 1–4.
82. Adavanne, S.; Politis, A.; Nikunen, J.; Virtanen, T. Sound Event Localization and Detection of Overlapping Sources Using Convolutional Recurrent Neural Networks. IEEE J. Sel. Top. Signal Process. 2018, 13, 34–48.
83. Lu, H.; Li, Y.; Mu, S.; Wang, D.; Kim, H.; Serikawa, S. Motor Anomaly Detection for Unmanned Aerial Vehicles Using Reinforcement Learning. IEEE Internet Things J. 2018, 5, 2315–2322.
84. Lee, J.; Yang, K. Mobile Device-Based Struck-By Hazard Recognition in Construction Using a High-Frequency Sound. Sensors 2022, 22, 3482.
85. Elelu, K.; Le, T.; Le, C. Collision Hazard Detection for Construction Worker Safety Using Audio Surveillance. J. Constr. Eng. Manag. 2023, 149, 4022159.
86. Elelu, K.; Le, T.; Le, C. Collision Hazards Detection for Construction Workers Safety Using Equipment Sound Data. In Proceedings of the 9th International Conference on Construction Engineering and Project Management, Las Vegas, NV, USA, 20–24 June 2022; pp. 736–743.
87. Elelu, K.; Le, T.; Le, C. Direction of Arrival of Equipment Sound in the Construction Industry. In Proceedings of the 39th International Symposium on Automation and Robotics in Construction, Bogota, Colombia, 13–15 July 2022.
88. Lee, Y.-C.; Shariatfar, M.; Rashidi, A.; Lee, H.W. Evidence-driven sound detection for prenotification and identification of construction safety hazards and accidents. Autom. Constr. 2020, 113, 103127.
89. Lee, Y.-C.; Scarpiniti, M.; Uncini, A. Advanced Sound Classifiers and Performance Analyses for Accurate Audio-Based Construction Project Monitoring. J. Comput. Civ. Eng. 2020, 34, 4020030.
90. Xie, Y.; Lee, Y.C.; Shariatfar, M.; Zhang, Z.D.; Rashidi, A.; Lee, H.W. Historical accident and injury database-driven audio-based autonomous construction safety surveillance. In Proceedings of Computing in Civil Engineering 2019: Data, Sensing, and Analytics, Atlanta, GA, USA, 17–19 June 2019; pp. 105–113.
91. Sabillon, C.A.; Rashidi, A.; Samanta, B.; Cheng, C.-F.; Davenport, M.A.; Anderson, D.V. A Productivity Forecasting System for Construction Cyclic Operations Using Audio Signals and a Bayesian Approach. In Proceedings of the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers: Reston, VA, USA, 2018; pp. 295–304.
92. Cho, C.; Lee, Y.-C.; Zhang, T. Sound Recognition Techniques for Multi-Layered Construction Activities and Events. In Proceedings of the Computing in Civil Engineering 2017, Seattle, WA, USA, 25–27 June 2017; American Society of Civil Engineers: Reston, VA, USA; pp. 326–334.
93. Liu, Z.; Li, S. A Sound Monitoring System for Prevention of Underground Pipeline Damage Caused by Construction. Autom. Constr. 2020, 113, 103125.
94. Sherafat, B.; Rashidi, A.; Lee, Y.; Ahn, C. Automated Activity Recognition of Construction Equipment Using a Data Fusion Approach. In Proceedings of the 2019 ASCE International Conference on Computing in Civil Engineering, Georgia Institute of Technology, Atlanta, GA, USA, 17–19 June 2019.
95. Wei, W.; Wang, C.; Lee, Y. BIM-Based Construction Noise Hazard Prediction and Visualization for Occupational Safety and Health Awareness Improvement. In Proceedings of the ASCE International Workshop on Computing in Civil Engineering 2017, Seattle, WA, USA, 25–27 June 2017.
96. Kim, D.; Liu, M.; Lee, S.H.; Kamat, V.R. Remote Proximity Monitoring between Mobile Construction Resources Using Camera-Mounted UAVs. Autom. Constr. 2019, 99, 168–182.
97. Son, H.; Seong, H.; Choi, H.; Kim, C. Real-Time Vision-Based Warning System for Prevention of Collisions between Workers and Heavy Equipment. J. Comput. Civ. Eng. 2019, 33, 4019029.
98. Huang, Y.; Hammad, A.; Zhu, Z. Providing Proximity Alerts to Workers on Construction Sites Using Bluetooth Low Energy RTLS. Autom. Constr. 2021, 132, 103928.
99. Scarpiniti, M.; Comminiello, D.; Uncini, A.; Lee, Y.-C. Deep Recurrent Neural Networks for Audio Classification in Construction Sites. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Virtual Conference, Amsterdam, The Netherlands, 18–22 January 2021; pp. 810–814.
100. Cho, C.; Park, J. An Embedded Sensory System for Worker Safety: Prototype Development and Evaluation. Sensors 2018, 18, 1200.
101. Sakhakarmi, S.; Park, J.; Singh, A. Tactile-based wearable system for improved hazard perception of worker and equipment collision. Autom. Constr. 2021, 125, 103613.
102. Goodman, S.; Kirchner, S.; Guttman, R.; Jain, D.; Froehlich, J.; Findlater, L. Evaluating Smartwatch-Based Sound Feedback for Deaf and Hard-of-Hearing Users Across Contexts. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–13.
103. Ching, K.W.; Singh, M.M. Wearable Technology Devices Security and Privacy Vulnerability Analysis. Int. J. Netw. Secur. Its Appl. 2016, 8, 19–30.
104. Arabtali, B.; Solhi, M.; Shojaeezadeh, D.; Gohari, M. Related factors of using hearing protection device based on the protection motivation theory in Shoga factory workers. Iran Occup. Health 2015, 12, 12–19.
105. McCullagh, M.; Lusk, S.L.; Ronis, D.L. Factors Influencing Use of Hearing Protection among Farmers: A Test of the Pender Health Promotion Model. Nurs. Res. 2002, 51, 33–39.
106. Nélisse, H.; Gaudreau, M.-A.; Boutin, J.; Voix, J.; Laville, F. Measurement of Hearing Protection Devices Performance in the Workplace during Full-Shift Working Operations. Ann. Occup. Hyg. 2011, 56, 221–232.
107. Reddy, R.K.; Welch, D.; Thorne, P.; Ameratunga, S. Hearing Protection Use in Manufacturing Workers: A Qualitative Study. Noise Health 2012, 14, 202–209.

| No. | Type of Equipment | Number of Fatality Cases | Percentage of Cases in Reverse Direction | Percentage of Cases without Reverse Alarms |
|---|---|---|---|---|
| 1 | Dump truck | 173 | 91.1% | 12.1% |
| 2 | Excavator/backhoe | 50 | 53.3% | 4% |
| 3 | Private vehicle (car, pickup, van) | 42 | 28.6% | - |
| 4 | Dozer | 38 | 82.4% | - |
| 5 | Grader | 37 | 91.9% | 13.5% |
| 6 | Front-end loader | 31 | 72.4% | - |
| 7 | Forklift | 30 | 57.1% | - |
| 8 | Tractor-trailer | 24 | 54.5% | - |
| 9 | Compactor | 18 | 82.4% | - |
| 10 | Scraper | 15 | 80.0% | 26.7% |
| 11 | Skid steer loader | 15 | 92.9% | - |
| 12 | Water truck | 13 | 91.7% | 15.4% |
| 13 | Paver | 8 | - | - |
| 14 | Tractor (agricultural) | 6 | - | - |
| 15 | Crane | 4 | - | - |
| 16 | Sweeper | 4 | - | - |

| Category | No. | Factor | Conclusion | References |
|---|---|---|---|---|
| Jobsite-related | 1 | Acoustic events at construction sites involve a large number of sound sources | Even listeners with normal hearing perform poorly during complex auditory events | [38] |
| | 2 | Construction sites are considered noisy workplaces | Important sounds can easily be missed or misidentified in the presence of extraneous noise | [39] |
| Worker-related | 3 | Most construction workers are male | Men have higher rates of hearing difficulty than women | [3] |
| | 4 | Construction workers may experience hearing loss due to hazardous noise | Noise-exposed workers with hearing loss face job-safety problems because of their reduced ability to hear warning signals | [3] |

| Application | References |
|---|---|
| Home security | [23] |
| Detection of critical situations in a railway system | [24] |
| General surveillance in public areas | [16,17,19,20,21,22] |
| Detection of crimes in elevators | [25] |
| Detection of human emotions in public spaces | [18,26] |
| Office surveillance | [29] |
| Medical and health care facilities | |
| Surveillance of the elderly, the convalescent, or pregnant women | [27] |
| Automatic fall detection to improve the quality of life for independent older adults | [28] |
| Industrial plants | |
| Fault diagnosis of an induction motor | [31] |
| Detection of abnormality or failure of equipment | [32] |

| No. | Techniques | References |
|---|---|---|
| 1 | Gaussian Mixture Models (GMM) | [16,17,19,20,23,24,25,27,62,68,71] |
| 2 | Support Vector Machine (SVM) | [11,21,24,28,30,44,57,72,73,74] |
| 3 | K-Means Algorithms | [21,29,46,48,51] |
| 4 | Hidden Markov Models (HMM) | [22,45,47,63,75] |
| 5 | K-Nearest Neighbor Algorithms (KNN) | [31,57] |
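K-nearest neighbor classification, one of the traditional techniques listed above, can be illustrated on frame-level feature vectors. The minimal sketch below assumes NumPy, Euclidean distance on z-scored features and k = 3. The two class labels (`equipment_engine`, `backup_alarm`) and all feature values are synthetic and hypothetical; they are not data from the reviewed studies.

```python
import numpy as np


def standardize(train_x, test_x):
    """Z-score both sets using statistics of the training set only."""
    mu = train_x.mean(axis=0)
    sigma = train_x.std(axis=0) + 1e-12
    return (train_x - mu) / sigma, (test_x - mu) / sigma


def knn_predict(train_x, train_y, test_x, k=3):
    """Majority vote among the k nearest training vectors (Euclidean)."""
    # Pairwise distances, shape (n_test, n_train).
    dists = np.linalg.norm(test_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    preds = []
    for neighbor_labels in train_y[nearest]:
        labels, counts = np.unique(neighbor_labels, return_counts=True)
        preds.append(labels[np.argmax(counts)])
    return np.array(preds)


# Synthetic, illustrative feature vectors: [spectral centroid (Hz), ZCR].
rng = np.random.default_rng(42)
engine = rng.normal([400.0, 0.05], [60.0, 0.01], size=(20, 2))
alarm = rng.normal([1200.0, 0.15], [60.0, 0.01], size=(20, 2))
train_x = np.vstack([engine, alarm])
train_y = np.array(["equipment_engine"] * 20 + ["backup_alarm"] * 20)
test_x = np.array([[420.0, 0.05], [1180.0, 0.16]])

train_z, test_z = standardize(train_x, test_x)
predictions = knn_predict(train_z, train_y, test_z, k=3)
```

Standardization matters here because the spectral centroid (hundreds of hertz) and the ZCR (a fraction) differ by orders of magnitude; without it, the distance would be dominated almost entirely by the centroid.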

| Techniques | References |
|---|---|
| Deep Neural Network (DNN) | [58,59,60] |
| Convolutional Neural Network (CNN) | [9,12,28,46,70] |
| Recurrent Neural Network (RNN)/Bidirectional Long Short-Term Memory (BLSTM) | [62,76] |
| Autoencoder (AE) | [32,76,77] |

| No. | Methods | References |
|---|---|---|
| 1 | Maximum-Likelihood Generalized Cross Correlation (GCC) | [17] |
| 2 | Linear-correction least-square localization algorithm | [17] |
| 3 | Similarity measure based on the Euclidean Distance (EUD) in the time domain | [78] |
| 4 | Similarity measure based on Normalized Cross Correlation (NCC) in the frequency domain | [79] |
| 5 | Similarity measure based on the Euclidean Distance (EUD) in the frequency domain | [80] |
| 6 | Fast Fourier Transform (FFT) | [80] |
| 7 | Fingerprinting algorithm | [81] |
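Cross-correlation methods such as those above estimate the time difference of arrival (TDOA) between microphones, from which a direction can be derived. The sketch below assumes NumPy, two microphones 0.2 m apart, a far-field source and a speed of sound of 343 m/s. It uses the phase transform (PHAT) weighting of generalized cross correlation, a commonly used alternative to the maximum-likelihood weighting listed for [17], not the weighting used in that study. The function names `gcc_phat_delay` and `doa_from_delay` are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 °C)


def gcc_phat_delay(sig, ref, fs, max_tau=None):
    """Delay (s) of `sig` relative to `ref`; positive if `sig` arrives later."""
    n = len(sig) + len(ref)  # zero-pad so the correlation is linear, not circular
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep phase, drop magnitude
    cc = np.fft.irfft(cross, n=n)

    max_shift = n // 2
    if max_tau is not None:
        max_shift = min(int(fs * max_tau), max_shift)
    # Reorder to lags -max_shift..+max_shift and pick the strongest peak.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / fs


def doa_from_delay(tau, mic_spacing, c=SPEED_OF_SOUND):
    """Far-field angle (degrees) from broadside for a two-microphone pair."""
    return float(np.degrees(np.arcsin(np.clip(c * tau / mic_spacing, -1.0, 1.0))))


# Toy check: broadband noise reaching mic 2 five samples after mic 1.
fs, spacing, delay_samples = 16000, 0.2, 5
source = np.random.default_rng(1).standard_normal(4096)
mic1 = source
mic2 = np.concatenate([np.zeros(delay_samples), source[:-delay_samples]])

tau = gcc_phat_delay(mic2, mic1, fs, max_tau=spacing / SPEED_OF_SOUND)
angle = doa_from_delay(tau, spacing)
```

For the simulated five-sample delay at 16 kHz, the estimate is 5/16000 s, giving an angle of about 32.4° from broadside. A single microphone pair cannot tell whether the source is in front of or behind the array, so a worker-worn system would need more microphones or additional cues to resolve that ambiguity.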

| Code | Auditory Event Characteristics | Condition/Subtype | References |
|---|---|---|---|
| A | Abnormality of sound | Greater than the threshold? | [71] |
| B | Direction of moving sound source | Towards the worker? | [78,79,80,81] |
| C | Location of sound | Less than a safe distance to the worker? | [78,79,80,81] |
| D | Type of sound | | |
| D.1 | Alert alarm (fire/earthquake) | | [23,25,76,77] |
| D.2 | Explosion | | [19] |
| D.3 | Gunshot | | [16,17,19,20,25,61,62] |
| D.4 | Human | a. Announcement | [23] |
| | | b. Crowd ambient sound | [26] |
| | | c. Scream/shout/cry | [17,18,19,20,23,24,25,26,27,28,61,62,68,76,77] |
| D.5 | Moving heavy equipment/machine | | [30,33,36] |
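Characteristic A asks whether a sound's abnormality exceeds a threshold. A much-simplified sketch, assuming NumPy and a single diagonal Gaussian in place of the multi-stage GMM of [71], fits the model to feature frames of normal site sound and flags frames whose negative log-likelihood exceeds the 99th percentile of the scores on that normal data. The feature choice, the synthetic values, the class name `GaussianBackgroundModel` and the 0.99 quantile are all hypothetical.

```python
import numpy as np


class GaussianBackgroundModel:
    """Diagonal Gaussian fitted to feature frames of 'normal' site sound."""

    def fit(self, feats):
        self.mean = feats.mean(axis=0)
        self.var = feats.var(axis=0) + 1e-6  # small floor avoids division by zero
        return self

    def score(self, feats):
        """Negative log-likelihood per frame; larger means less typical."""
        z2 = (feats - self.mean) ** 2 / self.var
        return 0.5 * np.sum(z2 + np.log(2 * np.pi * self.var), axis=1)


def calibrate_threshold(model, normal_feats, quantile=0.99):
    """Place the threshold at a high quantile of the scores of normal data."""
    return float(np.quantile(model.score(normal_feats), quantile))


# Synthetic background frames: [log-energy, spectral centroid (kHz)].
rng = np.random.default_rng(7)
background = rng.normal([-3.0, 0.8], [0.3, 0.1], size=(2000, 2))
model = GaussianBackgroundModel().fit(background)
threshold = calibrate_threshold(model, background)

new_frames = np.array([[-3.0, 0.8],   # typical of the background
                       [0.0, 3.0]])   # loud, high-pitched event
flags = model.score(new_frames) > threshold
```

The quantile controls the trade-off: roughly 1% of normal frames will exceed a 99th-percentile threshold, so raising the quantile reduces false alarms at the cost of missing milder anomalies.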

| No. | Hazardous Situations | Combination of Auditory Event Characteristics |
|---|---|---|
| 1 | Heavy equipment/machine is at an unsafe distance | D.5 + C |
| 2 | Heavy equipment/machine is approaching | D.5 + C + B |
| 3 | Heavy equipment/machine is operating in an abnormal condition | D.5 + A |
| 4 | There is someone screaming/shouting/crying | D.4c |
| 5 | There is a crowd approaching | D.4b + B |
| 6 | There is an abnormal crowd approaching | D.4b + B + A |
| 7 | There is an alert alarm | D.1 |
| 8 | There is an explosion | D.2 |
| 9 | There is a gunshot | D.3 |
| 10 | There is an abnormal sound | A |
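The combination table for hazardous situations amounts to a set of rules over the event codes A to D. The sketch below encodes it directly in Python, assuming that upstream detectors already report which codes are active for a given sound; the names `HAZARD_RULES`, `match_hazards` and `describe` are hypothetical. Each situation is reported when all of its required codes are present.

```python
# Each rule: (situation number, description, event codes that must all be present).
HAZARD_RULES = [
    (1, "Heavy equipment/machine is at an unsafe distance", {"D.5", "C"}),
    (2, "Heavy equipment/machine is approaching", {"D.5", "C", "B"}),
    (3, "Heavy equipment/machine is operating in an abnormal condition", {"D.5", "A"}),
    (4, "There is someone screaming/shouting/crying", {"D.4c"}),
    (5, "There is a crowd approaching", {"D.4b", "B"}),
    (6, "There is an abnormal crowd approaching", {"D.4b", "B", "A"}),
    (7, "There is an alert alarm", {"D.1"}),
    (8, "There is an explosion", {"D.2"}),
    (9, "There is a gunshot", {"D.3"}),
    (10, "There is an abnormal sound", {"A"}),
]


def match_hazards(active_codes):
    """Return the numbers of every situation whose required codes are all active."""
    active = set(active_codes)
    return [number for number, _, required in HAZARD_RULES if required <= active]


def describe(active_codes):
    """Human-readable descriptions of the matched situations."""
    lookup = {number: text for number, text, _ in HAZARD_RULES}
    return [lookup[n] for n in match_hazards(active_codes)]
```

For example, `match_hazards({"D.5", "C", "B"})` returns `[1, 2]`, and `match_hazards({"D.4b", "B", "A"})` returns `[5, 6, 10]`. Because the rules are checked independently, an approaching machine also matches the more general unsafe-distance rule; a warning system might choose to report only the most specific match.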

| No. | Influencing Factors | References |
|---|---|---|
| 1 | Planning effective interventions | [104] |
| 2 | Workplace interpersonal interaction | [105,107] |
| 3 | Poor insertion and poor fitting of earplugs | [106] |
| 4 | Left and right ear differences | [106] |
| 5 | Perception of noise | [107] |
| 6 | Hearing protection use | [107] |
| 7 | Reluctance to use HPDs | [107] |
| 8 | Value placed on hearing influences hearing protection use | [104,107] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dang, K.; Elelu, K.; Le, T.; Le, C. Augmented Hearing of Auditory Safety Cues for Construction Workers: A Systematic Literature Review. Sensors 2022, 22, 9135. https://doi.org/10.3390/s22239135
