Three-Dimensional Integral Imaging with Enhanced Lateral and Longitudinal Resolutions Using Multiple Pickup Positions

Abstract

1. Introduction

2. Three-Dimensional Integral Imaging with Multiple Pickup Positions

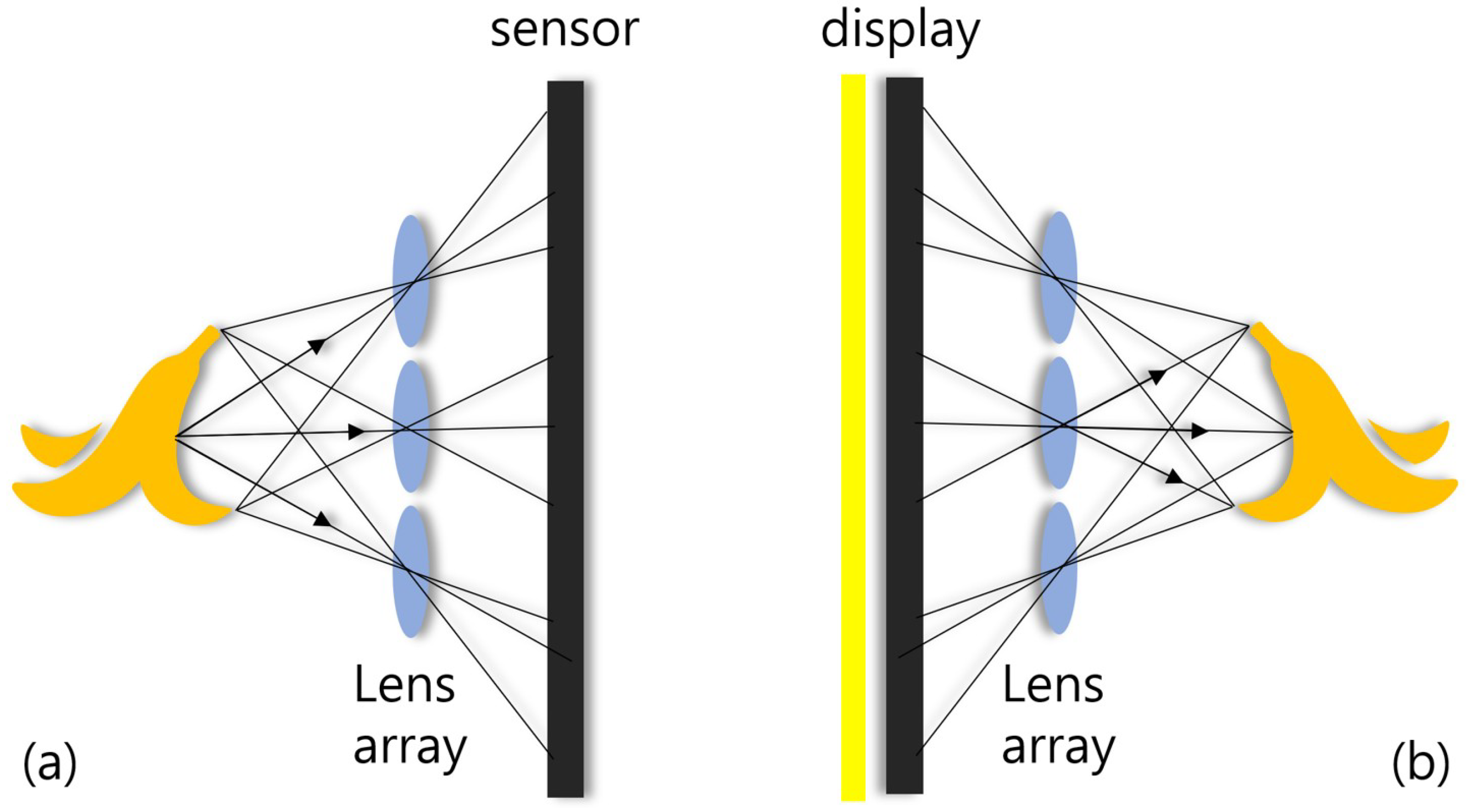

2.1. Integral Imaging

2.2. Synthetic Aperture Integral Imaging

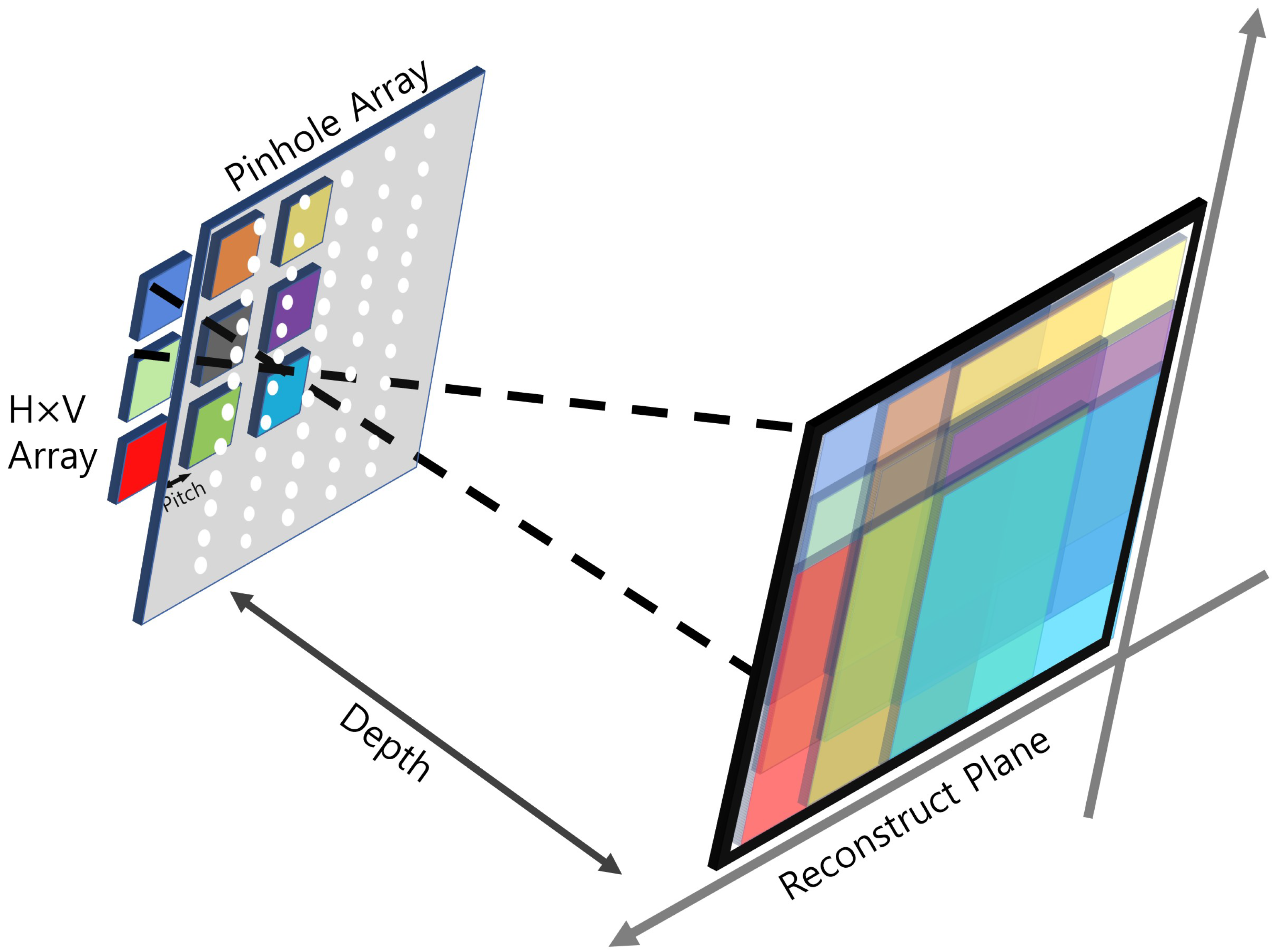

2.3. Axially Distributed Sensing

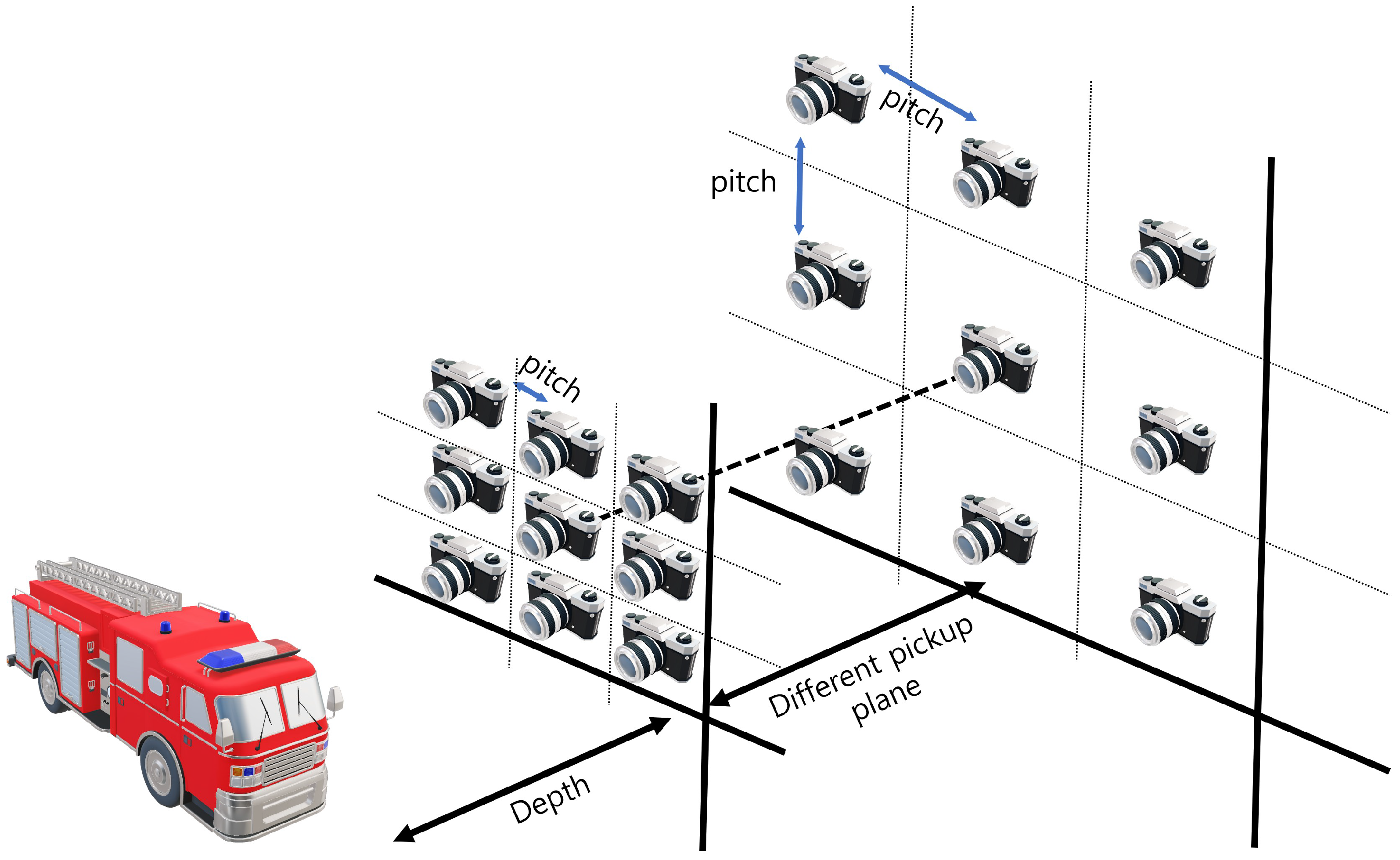

2.4. Our Method

3. Results of the Simulation

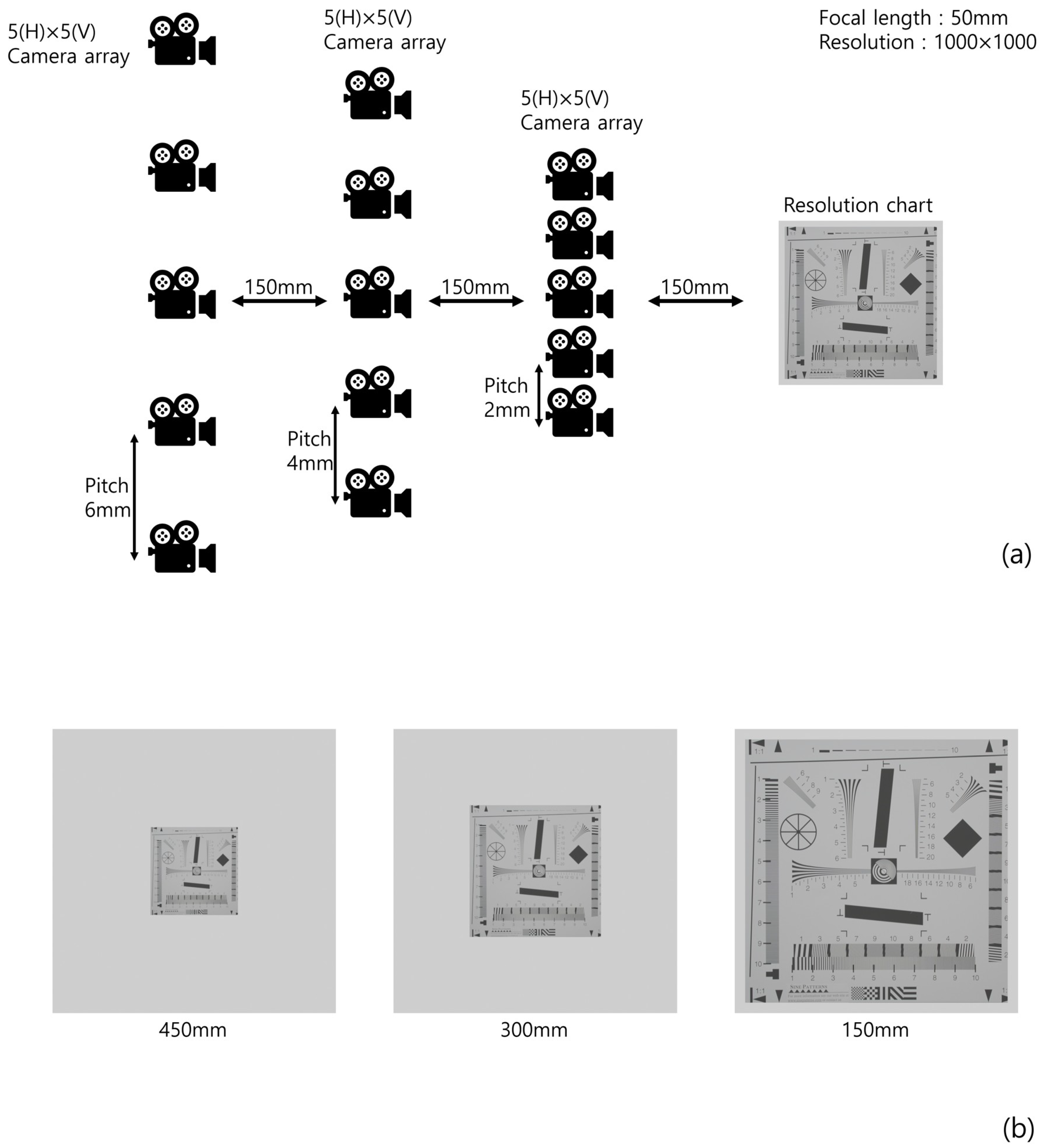

3.1. The First Simulation Setup

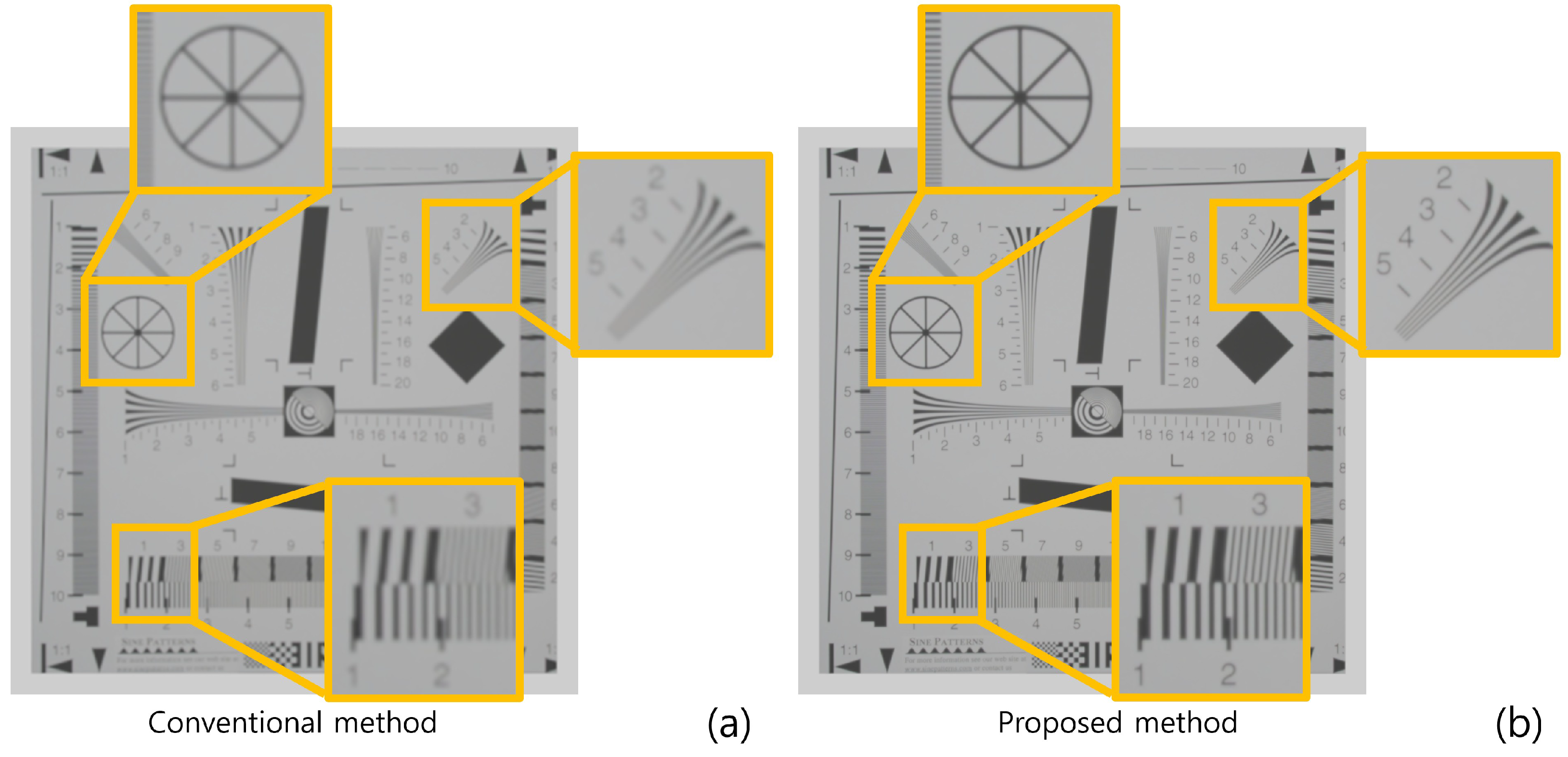

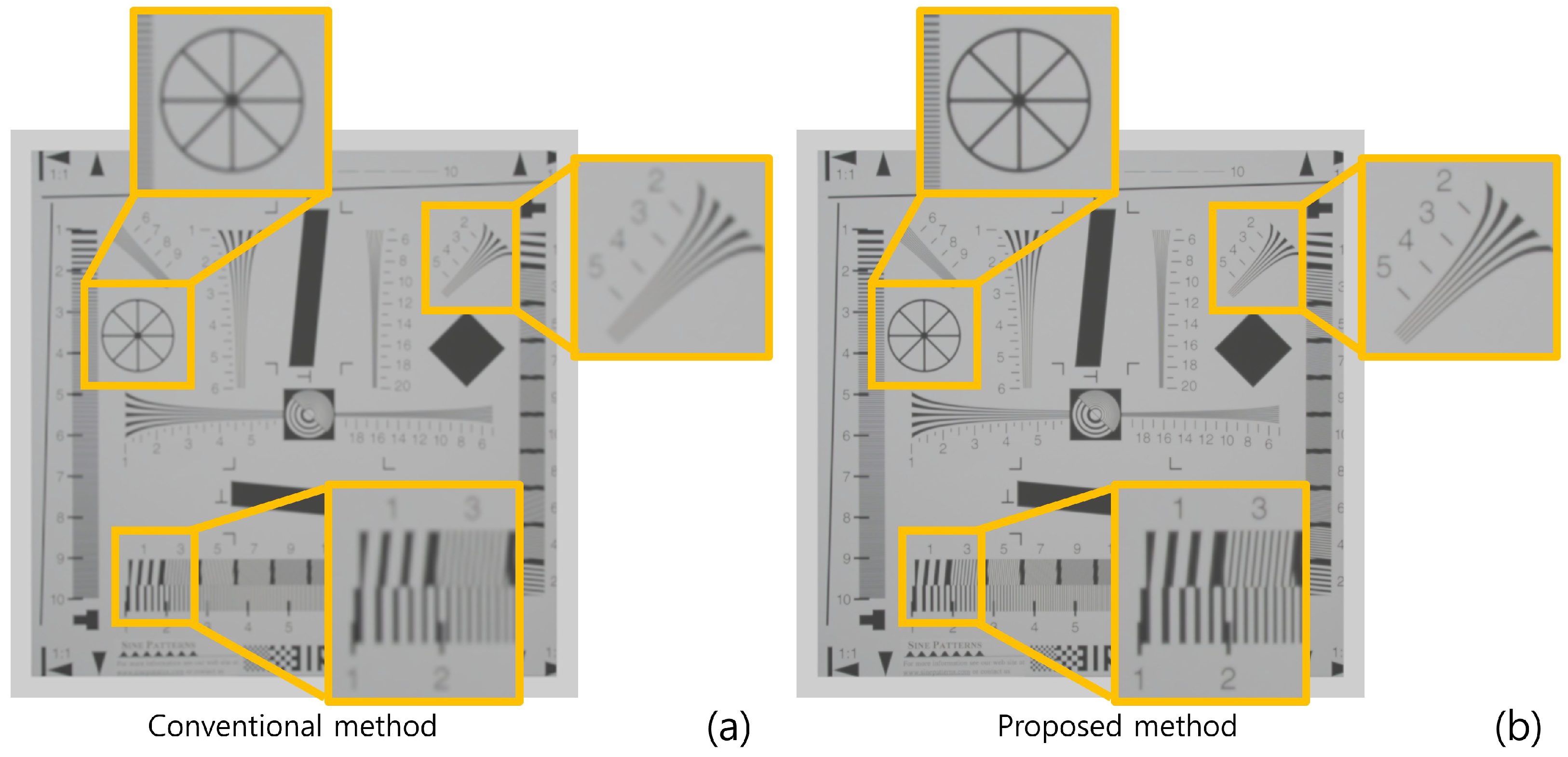

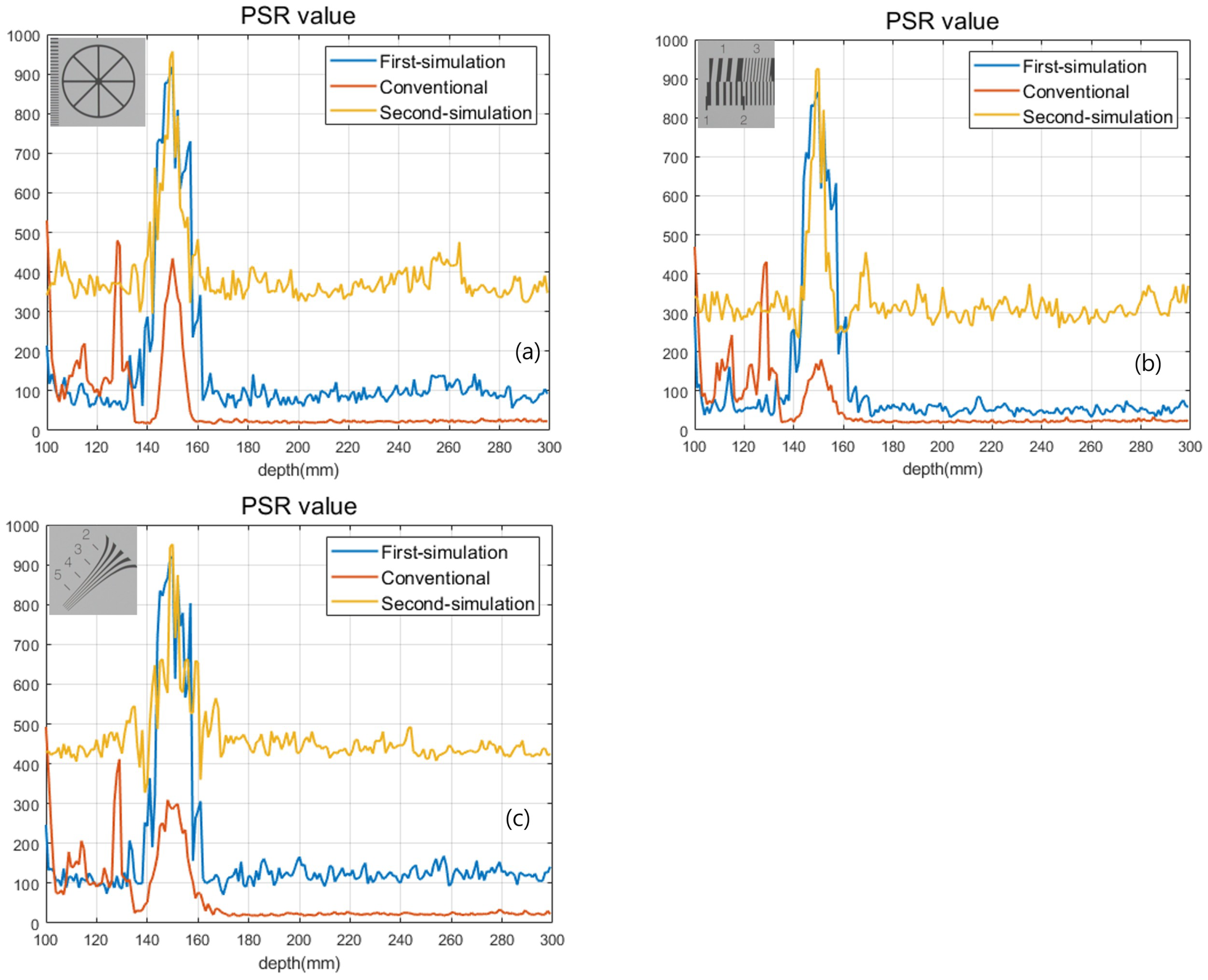

3.2. The First Simulation Result

3.3. The Second Simulation Setup

3.4. The Second Simulation Result

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Image | Method | MSE | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Line | Proposed method | 141.175 | 26.6167 | 0.912548 |
| Line | Conventional | 412.612 | 21.9755 | 0.735038 |
| Diagonal line | Proposed method | 70.2242 | 29.6659 | 0.934659 |
| Diagonal line | Conventional | 191.215 | 25.3156 | 0.822554 |
| Circle | Proposed method | 120.501 | 27.3209 | 0.928587 |
| Circle | Conventional | 362.636 | 22.5361 | 0.775436 |

| Image | Method | MSE | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Line | Proposed method | 150.162 | 26.3652 | 0.907574 |
| Line | Conventional | 412.612 | 21.9755 | 0.735038 |
| Diagonal line | Proposed method | 71.6068 | 29.5813 | 0.934551 |
| Diagonal line | Conventional | 191.215 | 25.3156 | 0.822554 |
| Circle | Proposed method | 128.099 | 27.0554 | 0.925271 |
| Circle | Conventional | 362.636 | 22.5361 | 0.775436 |
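As a quick consistency check on the tables above, PSNR can be recomputed from MSE. The sketch below assumes the standard definition PSNR = 10 log10(peak^2 / MSE) with an 8-bit peak value of 255. Neither the definition nor the peak value is stated in this excerpt, and the function name `psnr_from_mse` is illustrative only.

```python
import math


def psnr_from_mse(mse: float, peak: float = 255.0) -> float:
    """Return PSNR in dB for a mean squared error and a peak pixel value.

    Assumption: peak = 255 (8-bit images); this is not stated in the excerpt.
    """
    if mse <= 0:
        raise ValueError("MSE must be positive for a finite PSNR")
    return 10.0 * math.log10(peak ** 2 / mse)


# MSE values copied from the "Conventional" rows of the tables above.
conventional_mse = {
    "Line": 412.612,
    "Diagonal line": 191.215,
    "Circle": 362.636,
}

# Recompute PSNR for each test image under the assumed definition.
conventional_psnr = {
    name: psnr_from_mse(mse) for name, mse in conventional_mse.items()
}

for name, psnr in conventional_psnr.items():
    print(f"{name}: {psnr:.2f} dB")
```

Under these assumptions, the recomputed values for the conventional rows agree with the tabulated PSNR to within about 0.01 dB, which suggests that the PSNR column is reported in decibels for 8-bit images.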

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, J.; Cho, M. Three-Dimensional Integral Imaging with Enhanced Lateral and Longitudinal Resolutions Using Multiple Pickup Positions. Sensors 2022, 22, 9199. https://doi.org/10.3390/s22239199
