3D Object Recognition Using Fast Overlapped Block Processing Technique

Abstract

1. Introduction

1.1. Related Works

1.2. Paper Contributions

1.3. Paper Organization

2. Preliminaries of OPs and Their OMs

2.1. Charlier Polynomials Computation and Their Moments

2.2. Computation of Charlier Polynomials

2.3. Computation of Charlier Moments

2.4. Charlier Coefficients Computation Using Recurrence Relation Algorithm

Algorithm 1 The algorithm used to compute the Charlier polynomials

Input: N = Polynomial size, p = Polynomial parameter.

Output: = Charlier polynomials.

1: Initialize with a size of

2: {Compute initial value}.

3: {Set the range of n}.

4: for i in range n do

5:

6: end for

7: {Set the range of n}.

8: for i in range n do

9:

10: end for

11: for to do

12:

13: end for

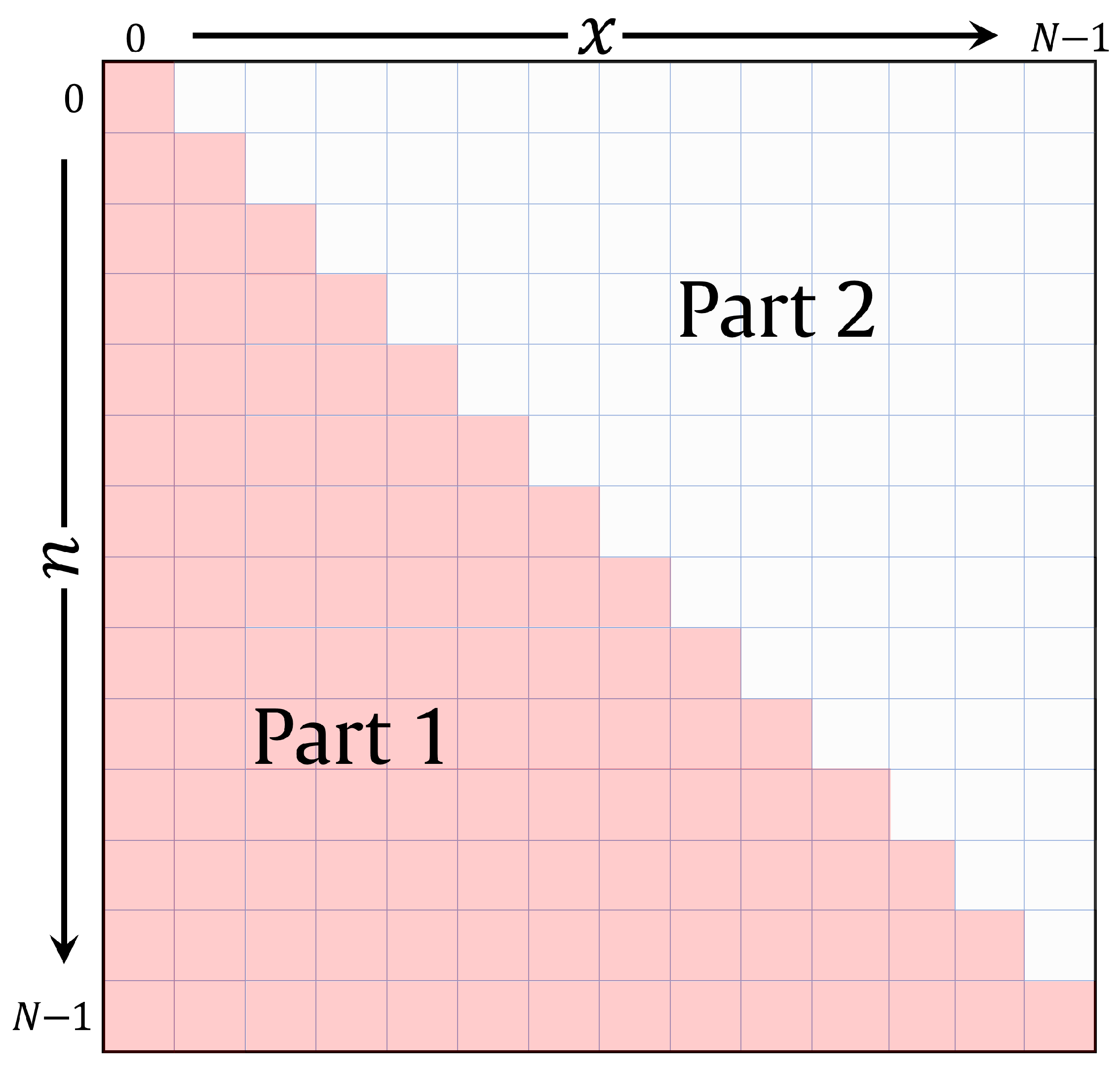

14: {Compute the coefficients in “Part 1”}

15: for to do

16: for to do

17:

18:

19:

20: end for

21: end for

22: {Compute the coefficients in “Part 2”}

23: for to do

24: for to do

25:

26: end for

27: end for

28: return {Note that in Equation (27) is equal to .}
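As an illustration of the kind of computation Algorithm 1 performs, the following Python sketch evaluates weighted, normalized Charlier polynomials with the textbook three-term recurrence in the order n. It is not the two-part scheme of Algorithm 1, whose update formulas are not reproduced in this section. The function names `charlier_matrix` and `gram`, the argument `orders`, the log-space starting value, and the sizes used in the check are assumptions made for this example; N and p follow the notation of the algorithm's inputs.

```python
import math


def charlier_matrix(orders, N, p):
    """Weighted, normalized Charlier polynomials (illustrative sketch).

    Returns a list of `orders` rows; row n holds the values for x = 0..N-1.
    Uses the plain three-term recurrence in n, not the paper's two-part scheme.
    """
    # Order 0: square root of the Poisson weight exp(-p) * p**x / x!,
    # evaluated in log space so that large x does not overflow.
    row0 = [math.exp(0.5 * (-p + x * math.log(p) - math.lgamma(x + 1)))
            for x in range(N)]
    rows = [row0]
    for n in range(orders - 1):
        c1 = 1.0 / math.sqrt(p * (n + 1))   # scales the order-n term
        c2 = math.sqrt(n / (n + 1))         # scales the order-(n-1) term (0 when n = 0)
        cur = rows[n]
        prev = rows[n - 1] if n > 0 else [0.0] * N
        rows.append([(p + n - x) * c1 * cur[x] - c2 * prev[x] for x in range(N)])
    return rows[:orders]


def gram(rows):
    """Inner products between all pairs of rows (identity if orthonormal)."""
    return [[sum(a * b for a, b in zip(r, s)) for s in rows] for r in rows]


# Orthonormality check for low orders; sizes are hypothetical.
K = charlier_matrix(orders=8, N=64, p=10.0)
G = gram(K)
max_dev = max(abs(G[i][j] - (1.0 if i == j else 0.0))
              for i in range(8) for j in range(8))
print(f"max deviation from identity: {max_dev:.2e}")
```

Orthonormality of these functions holds exactly only over x = 0, 1, 2, ...; on a finite grid it is a good approximation when the weight is negligible beyond x = N − 1 and the order is low, as in the check above. The plain recurrence is generally reported to lose accuracy at high orders, which is the usual motivation for more careful schemes such as the one in Algorithm 1.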

3. Methodology of the Proposed Feature Extraction and Recognition Method for 3D Objects

Algorithm 2 The 3D moments computation [83]

Input: = 3D image, = Charlier polynomials.

Output: = Charlier moments.

1: Generate extended 3D image () from the 3D image {Equation (22).}

2: Get stored Charlier polynomials , , and {Using Equation (26).}

3: for z = 1 to do

4:

5: end for

6: {Reshape the computed moments as a feature vector.}

7: return {Note: in the training and testing phases, the feature vector is normalized.}
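The separable structure behind Algorithm 2 can be sketched in plain Python. The sketch assumes that the 3D moments are obtained by applying one polynomial matrix per axis to the volume, first slice by slice along z and then across the slices. The extension step of Equation (22) is omitted. The names `moments_3d` and `feature_vector`, the `volume[z][x][y]` layout, and the choice of L2 normalization are assumptions: the algorithm only states that the feature vector is normalized. A 2 × 2 orthonormal matrix stands in for the stored Charlier polynomials.

```python
import math


def matmul(A, B):
    """Plain matrix product of two lists of lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def moments_3d(volume, Kx, Ky, Kz):
    """Separable 3D moments (illustrative sketch).

    volume[z][x][y] is the 3D image; Kx[n][x], Ky[m][y], Kz[l][z] are the
    polynomial matrices for each axis. Returns M[l][n][m].
    """
    KyT = transpose(Ky)
    # 2D moments of every z-slice: Kx * slice * Ky^T
    slice_moments = [matmul(matmul(Kx, s), KyT) for s in volume]
    depth = len(volume)
    # Combine the per-slice moments along z with Kz
    return [[[sum(kz[z] * slice_moments[z][n][m] for z in range(depth))
              for m in range(len(Ky))]
             for n in range(len(Kx))]
            for kz in Kz]


def feature_vector(M):
    """Flatten the moments and scale to unit L2 norm (assumed normalization)."""
    flat = [v for plane in M for row in plane for v in row]
    norm = math.sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat


# Toy example with a hypothetical 2 x 2 x 2 volume.
s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]                      # orthonormal stand-in for the polynomials
volume = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
M = moments_3d(volume, H, H, H)
features = feature_vector(M)
energy = sum(v * v for plane in M for row in plane for v in row)
print(len(features), round(energy, 6))
```

Because the stand-in matrix is orthonormal and square, the moments preserve the energy of the volume, which gives a quick sanity check on any implementation of the separable computation.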

4. Experiments and Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 1D | One-dimensional |
| 2D | Two-dimensional |
| 3D | Three-dimensional |
| IoT | Internet of Things |
| TRSI | Translation, rotation, and scale invariants |
| CNN | Convolutional neural networks |
| ANN | Artificial neural network |
| OM | Orthogonal moments |
| OP | Orthogonal polynomials |
| TTR | Three-term recurrence |
| FOBP | Fast overlapped block processing |
| SVM | Support vector machine |
| GN | Gaussian noise |
| SPN | Salt-and-Pepper noise |
| SPKN | Speckle noise |

References

1. Alsabah, M.; Naser, M.A.; Mahmmod, B.M.; Abdulhussain, S.H.; Eissa, M.R.; Al-Baidhani, A.; Noordin, N.K.; Sait, S.M.; Al-Utaibi, K.A.; Hashim, F. 6G wireless communications networks: A comprehensive survey. IEEE Access 2021, 9, 148191–148243.

2. Maafiri, A.; Elharrouss, O.; Rfifi, S.; Al-Maadeed, S.A.; Chougdali, K. DeepWTPCA-L1: A new deep face recognition model based on WTPCA-L1 norm features. IEEE Access 2021, 9, 65091–65100.

3. Lim, K.B.; Du, T.H.; Wang, Q. Partially occluded object recognition. Int. J. Comput. Appl. Technol. 2011, 40, 122–131.

4. Akheel, T.S.; Shree, V.U.; Mastani, S.A. Stochastic gradient descent linear collaborative discriminant regression classification based face recognition. Evol. Intell. 2021, 15, 1729–1743.

5. Ahmed, S.; Frikha, M.; Hussein, T.D.H.; Rahebi, J. Optimum feature selection with particle swarm optimization to face recognition system using Gabor wavelet transform and deep learning. BioMed Res. Int. 2021, 2021, 6621540.

6. Zhao, C.; Li, X.; Dong, Y. Learning blur invariant binary descriptor for face recognition. Neurocomputing 2020, 404, 34–40.

7. Chen, Z.; Wu, X.J.; Yin, H.F.; Kittler, J. Noise-robust dictionary learning with slack block-diagonal structure for face recognition. Pattern Recognit. 2020, 100, 107118.

8. Zhao, G.; Pietikainen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

9. Kumar, V.V.; Murty, G.S.; Kumar, P.S. Classification of facial expressions based on transitions derived from third order neighborhood LBP. Glob. J. Comput. Sci. Technol. 2014, 14, 1–12.

10. Zhang, Y.; Hu, C.; Lu, X. IL-GAN: Illumination-invariant representation learning for single sample face recognition. J. Vis. Commun. Image Represent. 2019, 59, 501–513.

11. Jian, M.; Dong, J.; Lam, K.M. FSAM: A fast self-adaptive method for correcting non-uniform illumination for 3D reconstruction. Comput. Ind. 2013, 64, 1229–1236.

12. Luciano, L.; Hamza, A.B. Deep learning with geodesic moments for 3D shape classification. Pattern Recognit. Lett. 2018, 105, 182–190.

13. Jian, M.; Yin, Y.; Dong, J.; Zhang, W. Comprehensive assessment of non-uniform illumination for 3D heightmap reconstruction in outdoor environments. Comput. Ind. 2018, 99, 110–118.

14. Al-Ayyoub, M.; AlZu’bi, S.; Jararweh, Y.; Shehab, M.A.; Gupta, B.B. Accelerating 3D medical volume segmentation using GPUs. Multimed. Tools Appl. 2018, 77, 4939–4958.

15. AlZu’bi, S.; Shehab, M.; Al-Ayyoub, M.; Jararweh, Y.; Gupta, B. Parallel implementation for 3D medical volume fuzzy segmentation. Pattern Recognit. Lett. 2020, 130, 312–318.

16. Lakhili, Z.; El Alami, A.; Mesbah, A.; Berrahou, A.; Qjidaa, H. Robust classification of 3D objects using discrete orthogonal moments and deep neural networks. Multimed. Tools Appl. 2020, 79, 18883–18907.

17. Sadjadi, F.A.; Hall, E.L. Three-Dimensional Moment Invariants. IEEE Trans. Pattern Anal. Mach. Intell. 1980, PAMI-2, 127–136.

18. Guo, X. Three dimensional moment invariants under rigid transformation. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin/Heidelberg, Germany, 1993; pp. 518–522.

19. Suk, T.; Flusser, J. Tensor method for constructing 3D moment invariants. In International Conference on Computer Analysis of Images and Patterns; Springer: Berlin/Heidelberg, Germany, 2011; pp. 212–219.

20. Xu, D.; Li, H. 3-D affine moment invariants generated by geometric primitives. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 2, pp. 544–547.

21. Xu, D.; Li, H. Geometric moment invariants. Pattern Recognit. 2008, 41, 240–249.

22. Mesbah, A.; Berrahou, A.; Hammouchi, H.; Berbia, H.; Qjidaa, H.; Daoudi, M. Non-rigid 3D model classification using 3D Hahn Moment convolutional neural networks. In Proceedings of the EG Workshop 3D Object Retrieval, Delft, The Netherlands, 16 April 2018.

23. Amakdouf, H.; El Mallahi, M.; Zouhri, A.; Tahiri, A.; Qjidaa, H. Classification and recognition of 3D image of Charlier moments using a multilayer perceptron architecture. Procedia Comput. Sci. 2018, 127, 226–235.

24. Lakhili, Z.; El Alami, A.; Mesbah, A.; Berrahou, A.; Qjidaa, H. Deformable 3D shape classification using 3D Racah moments and deep neural networks. Procedia Comput. Sci. 2019, 148, 12–20.

25. Mademlis, A.; Axenopoulos, A.; Daras, P.; Tzovaras, D.; Strintzis, M.G. 3D content-based search based on 3D Krawtchouk moments. In Proceedings of the Third International Symposium on 3D Data Processing, Visualization, and Transmission (3DPVT’06), Chapel Hill, NC, USA, 14–16 June 2006; pp. 743–749.

26. Batioua, I.; Benouini, R.; Zenkouar, K.; Zahi, A.; Hakim, E.F. 3D image analysis by separable discrete orthogonal moments based on Krawtchouk and Tchebichef polynomials. Pattern Recognit. 2017, 71, 264–277.

27. Abdulhussain, S.H.; Mahmmod, B.M. Fast and efficient recursive algorithm of Meixner polynomials. J. Real-Time Image Process. 2021, 18, 2225–2237.

28. Sayyouri, M.; Karmouni, H.; Hmimid, A.; Azzayani, A.; Qjidaa, H. A fast and accurate computation of 2D and 3D generalized Laguerre moments for images analysis. Multimed. Tools Appl. 2021, 80, 7887–7910.

29. Farokhi, S.; Shamsuddin, S.M.; Sheikh, U.U.; Flusser, J.; Khansari, M.; Jafari-Khouzani, K. Near infrared face recognition by combining Zernike moments and undecimated discrete wavelet transform. Digit. Signal Process. 2014, 31, 13–27.

30. Benouini, R.; Batioua, I.; Zenkouar, K.; Najah, S.; Qjidaa, H. Efficient 3D object classification by using direct Krawtchouk moment invariants. Multimed. Tools Appl. 2018, 77, 27517–27542.

31. Hu, M.K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187.

32. Radeaf, H.S.; Mahmmod, B.M.; Abdulhussain, S.H.; Al-Jumaeily, D. A steganography based on orthogonal moments. In Proceedings of the International Conference on Information and Communication Technology—ICICT ’19, Baghdad, Iraq, 15–16 April 2019; ACM Press: New York, NY, USA, 2019; pp. 147–153.

33. Daoui, A.; Yamni, M.; Karmouni, H.; Sayyouri, M.; Qjidaa, H. Stable computation of higher order Charlier moments for signal and image reconstruction. Inf. Sci. 2020, 521, 251–276.

34. Xu, S.; Hao, Q.; Ma, B.; Wang, C.; Li, J. Accurate computation of fractional-order exponential moments. Secur. Commun. Netw. 2020, 2020, 8822126.

35. Daoui, A.; Yamni, M.; Karmouni, H.; Sayyouri, M.; Qjidaa, H. 2D and 3D medical image analysis by discrete orthogonal moments. Procedia Comput. Sci. 2019, 148, 428–437.

36. Xia, Z.; Wang, X.; Zhou, W.; Li, R.; Wang, C.; Zhang, C. Color medical image lossless watermarking using chaotic system and accurate quaternion polar harmonic transforms. Signal Process. 2019, 157, 108–118.

37. Jain, A.K.; Li, S.Z. Handbook of Face Recognition; Springer: London, UK, 2011; Volume 1.

38. AL-Utaibi, K.A.; Abdulhussain, S.H.; Mahmmod, B.M.; Naser, M.A.; Alsabah, M.; Sait, S.M. Reliable Recurrence Algorithm for High-Order Krawtchouk Polynomials. Entropy 2021, 23, 1162.

39. Liu, B.D.; Shen, B.; Gui, L.; Wang, Y.X.; Li, X.; Yan, F.; Wang, Y.J. Face recognition using class specific dictionary learning for sparse representation and collaborative representation. Neurocomputing 2016, 204, 198–210.

40. Shakeel, M.S.; Lam, K.M. Deep-feature encoding-based discriminative model for age-invariant face recognition. Pattern Recognit. 2019, 93, 442–457.

41. Liu, K.; Zheng, M.; Liu, Y.; Yang, J.; Yao, Y. Deep Autoencoder Thermography for Defect Detection of Carbon Fiber Composites. IEEE Trans. Ind. Inform. 2022.

42. Gao, S.; Dai, Y.; Li, Y.; Jiang, Y.; Liu, Y. Augmented flame image soft sensor for combustion oxygen content prediction. Meas. Sci. Technol. 2022, 34, 015401.

43. Liu, K.; Yu, Q.; Liu, Y.; Yang, J.; Yao, Y. Convolutional Graph Thermography for Subsurface Defect Detection in Polymer Composites. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.

44. Hosny, K.M.; Abd Elaziz, M.; Darwish, M.M. Color face recognition using novel fractional-order multi-channel exponent moments. Neural Comput. Appl. 2021, 33, 5419–5435.

45. Abdulhussain, S.H.; Mahmmod, B.M.; AlGhadhban, A.; Flusser, J. Face Recognition Algorithm Based on Fast Computation of Orthogonal Moments. Mathematics 2022, 10, 2721.

46. Mehdipour Ghazi, M.; Kemal Ekenel, H. A comprehensive analysis of deep learning based representation for face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 34–41.

47. Guo, S.; Chen, S.; Li, Y. Face recognition based on convolutional neural network and support vector machine. In Proceedings of the 2016 IEEE International Conference on Information and Automation (ICIA), Ningbo, China, 1–3 August 2016; pp. 1787–1792.

48. Asad, M.; Hussain, A.; Mir, U. Low complexity hybrid holistic–landmark based approach for face recognition. Multimed. Tools Appl. 2021, 80, 30199–30212.

49. Abdulhussain, S.H.; Ramli, A.R.; Mahmmod, B.M.; Saripan, M.I.; Al-Haddad, S.A.R.; Jassim, W.A. A New Hybrid form of Krawtchouk and Tchebichef Polynomials: Design and Application. J. Math. Imaging Vis. 2019, 61, 555–570.

50. Hmimid, A.; Sayyouri, M.; Qjidaa, H. Fast computation of separable two-dimensional discrete invariant moments for image classification. Pattern Recognit. 2015, 48, 509–521.

51. Abdulhussain, S.H.; Ramli, A.R.; Hussain, A.J.; Mahmmod, B.M.; Jassim, W.A. Orthogonal polynomial embedded image kernel. In Proceedings of the International Conference on Information and Communication Technology—ICICT ’19, Baghdad, Iraq, 15–16 April 2019; ACM Press: New York, NY, USA, 2019; pp. 215–221.

52. Jassim, W.A.; Raveendran, P.; Mukundan, R. New orthogonal polynomials for speech signal and image processing. IET Signal Process. 2012, 6, 713–723.

53. Flusser, J.; Zitova, B.; Suk, T. Moments and Moment Invariants in Pattern Recognition; John Wiley & Sons: Hoboken, NJ, USA, 2009.

54. Rahman, S.M.; Howlader, T.; Hatzinakos, D. On the selection of 2D Krawtchouk moments for face recognition. Pattern Recognit. 2016, 54, 83–93.

55. Teh, C.H.; Chin, R.T. On image analysis by the methods of moments. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 496–513.

56. Chen, B.; Yu, M.; Su, Q.; Shim, H.J.; Shi, Y.Q. Fractional Quaternion Zernike Moments for Robust Color Image Copy-Move Forgery Detection. IEEE Access 2018, 6, 56637–56646.

57. Kaur, P.; Pannu, H.S.; Malhi, A.K. Plant disease recognition using fractional-order Zernike moments and SVM classifier. Neural Comput. Appl. 2019, 31, 8749–8768.

58. Teague, M.R. Image analysis via the general theory of moments. J. Opt. Soc. Am. 1980, 70, 920–930.

59. Abdulhussain, S.H.; Mahmmod, B.M.; Baker, T.; Al-Jumeily, D. Fast and accurate computation of high-order Tchebichef polynomials. Concurr. Comput. Pract. Exp. 2022, 34, e7311.

60. Mahmmod, B.M.; Abdulhussain, S.H.; Suk, T.; Hussain, A. Fast Computation of Hahn Polynomials for High Order Moments. IEEE Access 2022, 10, 48719–48732.

61. Yang, B.; Dai, M. Image analysis by Gaussian–Hermite moments. Signal Process. 2011, 91, 2290–2303.

62. Mukundan, R.; Ong, S.; Lee, P.A. Image analysis by Tchebichef moments. IEEE Trans. Image Process. 2001, 10, 1357–1364.

63. Curtidor, A.; Baydyk, T.; Kussul, E. Analysis of Random Local Descriptors in Face Recognition. Electronics 2021, 10, 1358.

64. Song, G.; He, D.; Chen, P.; Tian, J.; Zhou, B.; Luo, L. Fusion of Global and Local Gaussian-Hermite Moments for Face Recognition. In Image and Graphics Technologies and Applications; Wang, Y., Huang, Q., Peng, Y., Eds.; Springer: Singapore, 2019; pp. 172–183.

65. Paul, S.K.; Bouakaz, S.; Rahman, C.M.; Uddin, M.S. Component-based face recognition using statistical pattern matching analysis. Pattern Anal. Appl. 2021, 24, 299–319.

66. Turk, M.; Pentland, A. Eigenfaces for recognition. J. Cogn. Neurosci. 1991, 3, 71–86.

67. Ekenel, H.K.; Stiefelhagen, R. Local appearance based face recognition using discrete cosine transform. In Proceedings of the 2005 13th European Signal Processing Conference, Antalya, Turkey, 4–8 September 2005; pp. 1–5.

68. Abdulhussain, S.H.; Mahmmod, B.M.; Flusser, J.; AL-Utaibi, K.A.; Sait, S.M. Fast Overlapping Block Processing Algorithm for Feature Extraction. Symmetry 2022, 14, 715.

69. Kamaruzaman, F.; Shafie, A.A. Recognizing faces with normalized local Gabor features and spiking neuron patterns. Pattern Recognit. 2016, 53, 102–115.

70. Ahonen, T.; Hadid, A.; Pietikainen, M. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

71. Shen, L.; Bai, L. A review on Gabor wavelets for face recognition. Pattern Anal. Appl. 2006, 9, 273–292.

72. Ahonen, T.; Hadid, A.; Pietikäinen, M. Face recognition with local binary patterns. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2004; pp. 469–481.

73. Muqeet, M.A.; Holambe, R.S. Local binary patterns based on directional wavelet transform for expression and pose-invariant face recognition. Appl. Comput. Inform. 2019, 15, 163–171.

74. Shrinivasa, S.; Prabhakar, C. Scene image classification based on visual words concatenation of local and global features. Multimed. Tools Appl. 2022, 81, 1237–1256.

75. Onan, A. Bidirectional convolutional recurrent neural network architecture with group-wise enhancement mechanism for text sentiment classification. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 2098–2117.

76. Kim, J.Y.; Cho, S.B. Obfuscated Malware Detection Using Deep Generative Model based on Global/Local Features. Comput. Secur. 2022, 112, 102501.

77. Siddiqi, K.; Zhang, J.; Macrini, D.; Shokoufandeh, A.; Bouix, S.; Dickinson, S. Retrieving articulated 3-D models using medial surfaces. Mach. Vis. Appl. 2008, 19, 261–275.

78. Abdul-Hadi, A.M.; Abdulhussain, S.H.; Mahmmod, B.M. On the computational aspects of Charlier polynomials. Cogent Eng. 2020, 7, 1763553.

79. Koekoek, R.; Lesky, P.A.; Swarttouw, R.F. Hypergeometric Orthogonal Polynomials and Their q-Analogues; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

80. Mahmmod, B.M.; bin Ramli, A.R.; Abdulhussain, S.H.; Al-Haddad, S.A.R.; Jassim, W.A. Signal compression and enhancement using a new orthogonal-polynomial-based discrete transform. IET Signal Process. 2018, 12, 129–142.

81. Abdul-Haleem, M.G. Offline Handwritten Signature Verification Based on Local Ridges Features and Haar Wavelet Transform. Iraqi J. Sci. 2022, 63, 855–865.

82. Tippaya, S.; Sitjongsataporn, S.; Tan, T.; Khan, M.M.; Chamnongthai, K. Multi-modal visual features-based video shot boundary detection. IEEE Access 2017, 5, 12563–12575.

83. Rivera-Lopez, J.S.; Camacho-Bello, C.; Vargas-Vargas, H.; Escamilla-Noriega, A. Fast computation of 3D Tchebichef moments for higher orders. J. Real-Time Image Process. 2022, 19, 15–27.

84. Byun, H.; Lee, S.W. A survey on pattern recognition applications of support vector machines. Int. J. Pattern Recognit. Artif. Intell. 2003, 17, 459–486.

85. Awad, M.; Motai, Y. Dynamic classification for video stream using support vector machine. Appl. Soft Comput. 2008, 8, 1314–1325.

86. Chang, C.C.; Lin, C.J. LIBSVM. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

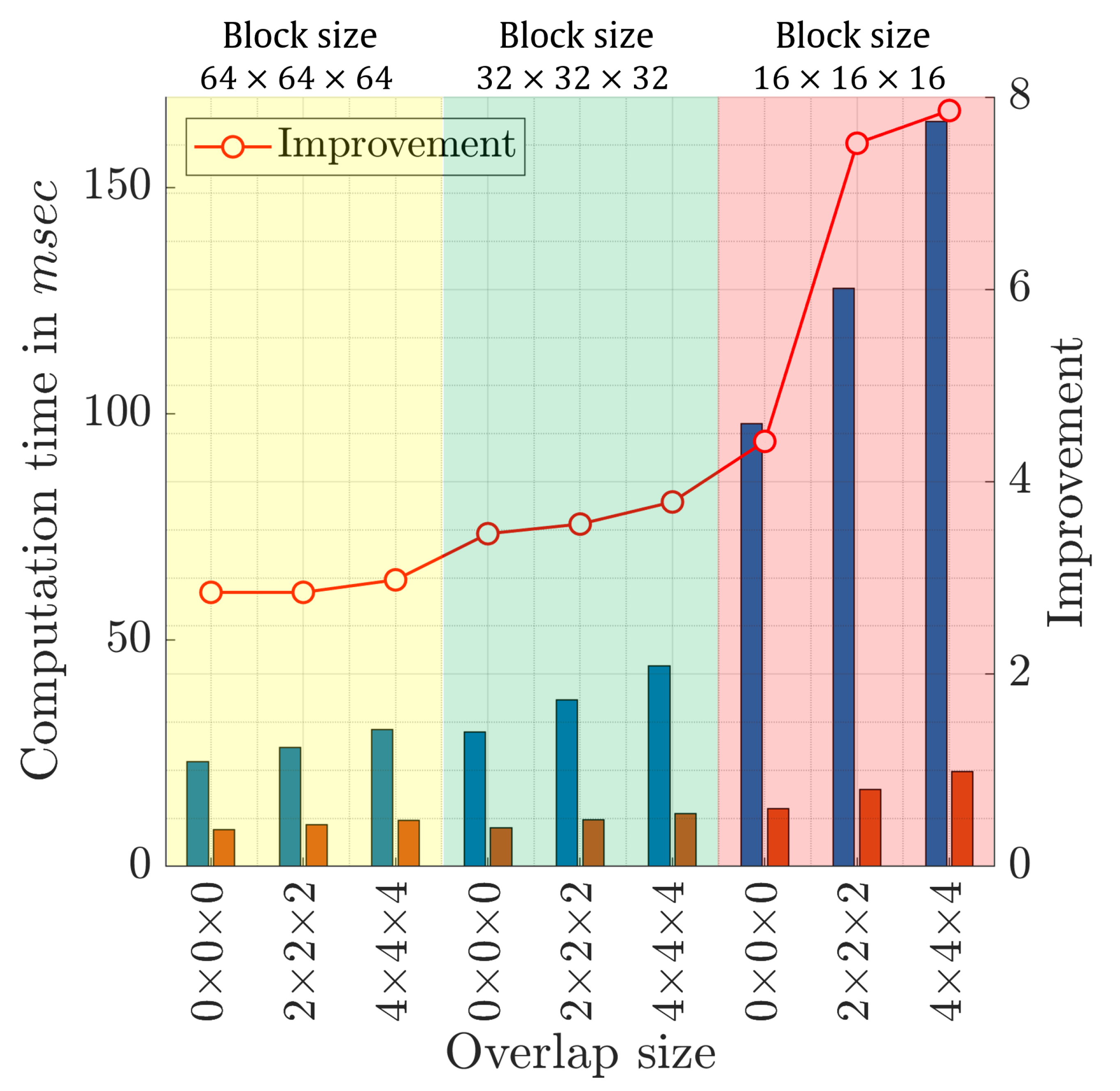

| Block Size = | | | | | |
|---|---|---|---|---|---|
| Overlap Size () | | | | | |
| Environment | 0 | 2 | 4 | 8 | 16 |
| Clean | 68.25 | 70.42 | 72.44 | 78.52 | 80.04 |
| GN 0.0001 | 39.44 | 61.18 | 68.96 | 78.58 | 80.16 |
| GN 0.0002 | 36.95 | 57.48 | 67.84 | 78.58 | 80.18 |
| GN 0.0003 | 35.32 | 55.44 | 67.14 | 78.59 | 80.13 |
| GN 0.0004 | 33.75 | 53.44 | 66.67 | 78.65 | 80.01 |
| GN 0.0005 | 32.26 | 51.38 | 66.23 | 78.63 | 80.03 |
| SPN 0.1 | 37.86 | 58.80 | 68.36 | 78.56 | 80.20 |
| SPN 0.2 | 31.33 | 49.28 | 65.53 | 78.62 | 80.04 |
| SPN 0.3 | 29.39 | 42.71 | 61.52 | 78.36 | 79.72 |
| SPN 0.4 | 27.15 | 39.24 | 57.66 | 78.14 | 78.97 |
| SPN 0.5 | 26.09 | 36.56 | 52.81 | 77.67 | 78.32 |
| SPKN 0.2 | 68.24 | 70.41 | 72.40 | 78.55 | 80.03 |
| SPKN 0.4 | 68.22 | 70.47 | 72.33 | 78.53 | 80.04 |
| SPKN 0.6 | 68.17 | 70.39 | 72.37 | 78.46 | 80.04 |
| SPKN 0.8 | 68.25 | 70.35 | 72.43 | 78.48 | 79.97 |
| SPKN 1.0 | 68.21 | 70.51 | 72.30 | 78.53 | 80.03 |

| Block Size = | | | | |
|---|---|---|---|---|
| Overlap Size () | | | | |
| Environment | 0 | 1 | 2 | 4 |
| Clean | 76.28 | 77.18 | 78.86 | 80.10 |
| GN 0.0001 | 76.21 | 77.15 | 78.79 | 80.16 |
| GN 0.0002 | 76.16 | 77.11 | 78.73 | 80.14 |
| GN 0.0003 | 76.18 | 77.02 | 78.67 | 80.11 |
| GN 0.0004 | 75.98 | 77.01 | 78.60 | 80.14 |
| GN 0.0005 | 75.75 | 76.98 | 78.62 | 80.14 |
| SPN 0.1 | 76.25 | 77.21 | 78.80 | 80.16 |
| SPN 0.2 | 75.46 | 76.94 | 78.56 | 80.10 |
| SPN 0.3 | 74.39 | 76.56 | 78.22 | 79.92 |
| SPN 0.4 | 73.79 | 75.64 | 78.00 | 79.58 |
| SPN 0.5 | 73.03 | 74.78 | 77.64 | 79.21 |
| SPKN 0.2 | 76.25 | 77.22 | 78.90 | 80.11 |
| SPKN 0.4 | 76.26 | 77.18 | 78.87 | 80.13 |
| SPKN 0.6 | 76.33 | 77.17 | 78.90 | 80.11 |
| SPKN 0.8 | 76.28 | 77.21 | 78.84 | 80.13 |
| SPKN 1.0 | 76.25 | 77.18 | 78.84 | 80.04 |

| Block Size = | | | |
|---|---|---|---|
| Overlap Size () | | | |
| Environment | 0 | 1 | 2 |
| Clean | 70.58 | 80.24 | 80.10 |
| GN 0.0001 | 70.73 | 80.31 | 80.08 |
| GN 0.0002 | 70.75 | 80.27 | 80.08 |
| GN 0.0003 | 70.76 | 80.30 | 80.04 |
| GN 0.0004 | 70.80 | 80.32 | 80.06 |
| GN 0.0005 | 70.80 | 80.34 | 79.99 |
| SPN 0.1 | 70.72 | 80.30 | 80.11 |
| SPN 0.2 | 70.83 | 80.30 | 80.08 |
| SPN 0.3 | 70.76 | 80.10 | 79.80 |
| SPN 0.4 | 70.61 | 79.77 | 79.51 |
| SPN 0.5 | 70.44 | 79.55 | 79.31 |
| SPKN 0.2 | 70.63 | 80.28 | 80.10 |
| SPKN 0.4 | 70.55 | 80.28 | 80.06 |
| SPKN 0.6 | 70.55 | 80.30 | 80.08 |
| SPKN 0.8 | 70.51 | 80.35 | 80.06 |
| SPKN 1.0 | 70.65 | 80.32 | 80.10 |

| Algorithm Name | Average Accuracy |
|---|---|
| GMI [26] | 70.26% |
| TTKMI [26] | 72.87% |
| TKKMI [26] | 72.19% |
| KKKMI [26] | 71.11% |
| TTTMI [26] | 71.57% |
| TMI [30] | 60.54% |
| KMI [30] | 60.32% |
| HMI [30] | 60.89% |
| DKMI [30] | 62.01% |
| Ours (block size = , and overlap size = ) | 79.87% |
| Ours (block size = , and overlap size = ) | 80.02% |
| Ours (block size = , and overlap size = ) | 80.21% |
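The three "Ours" entries in the comparison table can be cross-checked against the noise tables: each one matches the mean of a single overlap column taken over all 16 environments (overlap 16 in the first table, overlap 4 in the second, overlap 1 in the third). The short Python check below reproduces that arithmetic from the tabulated values. The dictionary keys and the helper name `mean_accuracy` are labels chosen for this example, and the use of a plain unweighted mean is an inference from the numbers, not something the tables state.

```python
from decimal import Decimal, ROUND_HALF_UP

# Accuracy columns copied from the three noise tables, in row order
# (Clean, GN 0.0001-0.0005, SPN 0.1-0.5, SPKN 0.2-1.0).
columns = {
    "first table, overlap 16": (
        "80.04 80.16 80.18 80.13 80.01 80.03 80.20 80.04 "
        "79.72 78.97 78.32 80.03 80.04 80.04 79.97 80.03"
    ),
    "second table, overlap 4": (
        "80.10 80.16 80.14 80.11 80.14 80.14 80.16 80.10 "
        "79.92 79.58 79.21 80.11 80.13 80.11 80.13 80.04"
    ),
    "third table, overlap 1": (
        "80.24 80.31 80.27 80.30 80.32 80.34 80.30 80.30 "
        "80.10 79.77 79.55 80.28 80.28 80.30 80.35 80.32"
    ),
}


def mean_accuracy(values):
    """Unweighted mean of space-separated percentages, rounded to 2 decimals."""
    nums = [Decimal(v) for v in values.split()]
    mean = sum(nums) / len(nums)
    return mean.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


results = {name: mean_accuracy(vals) for name, vals in columns.items()}
for name, value in results.items():
    print(f"{name}: {value}%")
# first table, overlap 16: 79.87%
# second table, overlap 4: 80.02%
# third table, overlap 1: 80.21%
```

Exact decimal arithmetic is used so that the second mean (80.0175) rounds the same way on every platform.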

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mahmmod, B.M.; Abdulhussain, S.H.; Naser, M.A.; Alsabah, M.; Hussain, A.; Al-Jumeily, D. 3D Object Recognition Using Fast Overlapped Block Processing Technique. Sensors 2022, 22, 9209. https://doi.org/10.3390/s22239209
