1. Introduction

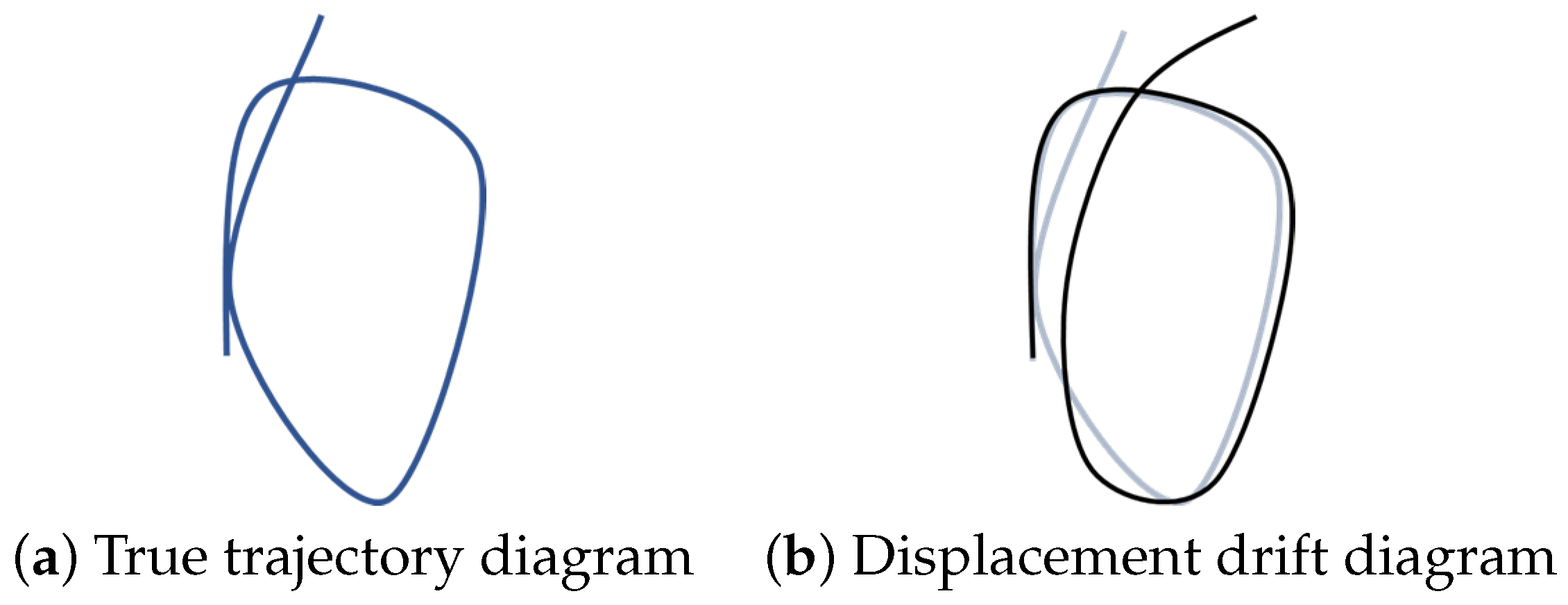

Simultaneous Localization and Mapping (SLAM) refers to the indoor environment in which the Global Positioning System (GPS) or BeiDou Navigation Satellite System (BDS) module does not receive satellite signals and cannot obtain location-related information such as latitude and longitude, the robot uses the features in the environment to estimate its pose; and extracts the relevant location and other information of all objects in the unknown environment, i.e., to describe the environment. The robot not only needs to localize itself using the created map but also needs to update the environment map based on the current pose information. In Visual SLAM (VSLAM), the visual odometry (VO) will accumulate errors over a long period resulting in unreliable estimated positional trajectory results. This will lead to the inability of the whole VSLAM system to obtain globally consistent positional trajectory information, which will have a large impact on the results of both localization and map building. The accumulated drift phenomenon in visual SLAM is shown in

Figure 1.

For the cumulative drift problem, the classical framework of visual SLAM uses loopback detection processing, which determines whether the robot returns to the previously passed position by detecting the similarity between two frames, i.e., whether there is a loopback in the travel trajectory. If the mobile robot can detect the loopback while performing the task, and the constraints established by the loopback are added to the back-end optimization can ensure that the acquired positional trajectory has global consistency and the estimated trajectory results are closer to the real trajectory. However, the drawback of loopback detection is also very obvious, if the mobile robot does not detect loopback during operation (e.g., moving in a straight corridor), it cannot use loopback to correct the positional trajectory, and the cumulative drift problem of the visual SLAM system remains unsolved.

In order to solve the cumulative drift problem of the visual SLAM system in the loopback-free task, borrowing the idea of using GNSS in the outdoor environment, this paper adds Indoor Positioning System (IPS) to the back-end optimization of VSLAM to provide global constraints for the optimization of the positional trajectory and proposes a back-end optimization framework based on the positional map. Firstly, the VO information is obtained from the front-end VO calculation method, and then the bit-pose information obtained by IPS adaptive outlier suppression algorithm is optimized by the IPS adaptive outlier suppression algorithm. Finally, the constructed residual terms are constructed from the input data and added to the pose-map optimization through the pose-map optimization module to calculate the transformation relationship from VO to its coordinate system. We conducted experiments on the AUTOLABOR mobile robot, and the results proved that the problem of cumulative drift of the VSLAM system can be solved to a certain extent.

2. Related Work

The mainstream sensor types used for SLAM are laser sensors and vision sensors. Laser SLAM is relatively more accurate, but LIDAR is more expensive and has a limited detection range, in addition to the lack of semantic information and the difficulty of loopback detection. In environments with poor environmental scene diversity, such as structured corridors or tunnels with consistent structure and other structured scenes, degradation problems will occur, and at this time, laser SLAM is more prone to degradation problems compared to VSLAM. In contrast, VSLAM has the advantages of low cost, small size, low power consumption, and rich information perception, and is more suitable for indoor environment.

One of the more common problems in VSLAM systems is the cumulative drift problem. Each calculation and optimization of the visual front-end of SLAM generates a small error, and when the camera moves continuously for a long time, this small error will accumulate and eventually cause the estimated trajectory to deviate from the real motion trajectory. Feature-based SLAM associates feature extraction (e.g., SURF [

1], SIFT [

2] and ORB [

3]) and matching with data, and then performs pose estimation by minimizing the feature point reprojection error. Direct VSLAM treats data association and pose estimation as an overall optimization problem and solves the problem by minimizing the photometric error. The reliance on accurate data correlation can lead to tracking failures for low textures, lighting variations, and occluded areas. In addition, geometric methods have the inherent disadvantage that camera motion can only be estimated to an unknown scale, which can also lead to scale drift over time.

A.J. Davison [

4] proposed the first monocular SLAM system, MonoSLAM, in which the back-end optimization part used is based on the Extended Kalman Filter (EKF) optimization method. Subsequently, Eade et al. [

5] improved the SLAM back-end optimization algorithm proposed by A.J. Davison based on MonoSLAM using Particle Filter (PF) algorithm and proposed a Fast-SLAM based SLAM back-end optimization scheme. The VSLAM system alleviates this problem by combining geometric VO with an optimized backend to continuously adjust the position of landmarks and the pose of the camera, called graph optimization technique. Graph-based SLAM has been successful in many cases in established systems, such as feature-based PTAM [

6] and ORB-SLAM [

7], as well as direct LSD-SLAM [

8] and DSO [

9]. The graph-based SLAM [

10] maintains a global graph with nodes representing camera poses or landmarks and edges representing sensor measurements that constrain the connected poses. In the back-end optimization process, the traditional filter-based approach is replaced with a graph optimization approach, which provides an important pavement for later graph optimization schemes.

In SLAM, with the help of loop closure, i.e., graph optimization with correctly established loop closure constraints, SLAM is able to significantly reduce global trajectory drift. Most of the existing LIDAR-based closed-loop detection is achieved by encoding the point cloud as global or local descriptors and then matching the descriptors. They usually use low-level features, such as coordinates [

11,

12,

13,

14,

15], normal [

16], reflection intensity [

17,

18,

19,

20], etc. In recent years, with the development of deep learning, many LiDAR-based methods for target detection [

21] and semantic segmentation [

22,

23] have been proposed, making it possible to obtain semantic information from point clouds. However, still only a few LIDAR-based works attempt to use semantic information [

24,

25].

In the field of VSLAM, closed-loop detection based on local features [

1,

3] and Bag-of-Words (BoW) [

26] are well established and have been widely used [

27,

28]. Unlike images that contain rich texture features, point clouds are almost purely geometric information, which makes point cloud-based closed-loop detection challenging. Therefore, there is no effective method to integrate into LiDAR SLAM systems. The ranging and mapping algorithms represented by LOAM [

29] achieved very high accuracy on the KITTI [

30] dataset.

The most currently used solution for the cumulative drift problem in VSLAM is still loopback detection. In an outdoor environment, Autoware [

31], an autonomous driving framework in Shinpei Kato lab, uses a Global Navigation Satellite System (GNSS) to provide global constraints for 3D laser SLAM localization, which not only solves the cumulative drift problem of the positional trajectory but also ensures that the LiDAR performs point cloud map matching localization with better initial values. In current VSLAM systems in indoor environments, Yang B. [

32] proposed a novel multi-class and motion attribute concurrent VSLAM (MCV-SLAM) algorithm to ensure that VSLAM works properly in real-time in dynamic indoor environments with moving objects. Hu H. [

33] proposed a multi-map-based SLAM system with a four-thread structure using multiple small maps for tracking to reduce the error accumulation of VSLAM and introduced an algorithm to predict camera pose to improve the robustness of tracking. Tseng P.-Y. [

34] proposed an economical indoor localization system based on visual simultaneous localization and mapping (vSLAM) with low setup cost and an integrated approach for precise positioning using vSLAM to solve the matching and accuracy. Raza A. [

35] explored various indoor localization techniques which compared and contrasted various approaches and classified them according to a set of suggested criteria to evaluate a commercially deployable indoor localization solution. However, there is no more research and better solutions for solving the SLAM cumulative drift problem in loopback-free tasks in indoor environments. In this paper, the proposed solution of adding IPS to the back-end optimization of VSLAM solves the cumulative drift problem of indoor VSLAM systems in loopback-free tasks to some extent.

3. A Back-End Optimization Framework Based on Positional Maps

The front-end motion position estimation requires feature points (waypoint) to be involved in optimally estimating the robot motion. In fact, after several observations, the converged feature points (waypoint) have little positional transformation, while the outlier points are eliminated. It is more redundant to optimize again for the points that have already converged. Therefore, when the motion estimation smoothes out, the pose-map optimization will no longer optimize the positions of feature points (waypoint), but only the connections between all robot poses. In this way, a large number of feature point optimization calculations can be saved while only the trajectories of key frames are retained.

Figure 2 shows the comparison between Bundle Adjustment (BA) optimization and pose map optimization.

The traditional bitmap-based optimization algorithm contains only the relative bitmap constraints of two adjacent frames, and optimizes the bitmap data within a certain time range through the bitmap. However, because the back-end optimization only considers the constraints of adjacent frames, it is not effective for cumulative error elimination. In order to solve the cumulative error problem of SLAM system and construct globally consistent trajectories and maps, the global positional information of IPS is considered to be added to the positional map, so that the positional map has global positional constraints.

As shown in

Figure 3,

nodes represent the robot poses, and the motion between two poses is obtained by the VO. Assuming that a motion

between

and

has been estimated, the relative bit pose between frame

i and frame

j can be expressed on the Lie group

as

. To make the relative bit pose between

of these moments of the poses

optimizes two aspects of the observations that can be used, firstly, the poses of the moving robot at each moment obtained by IPS, which have no cumulative error but are released at a low frequency of about 5–8 Hz, and is represented by

. Next is the VO pose obtained from the front-end pose estimation, this observation has a high release frequency of about 20–25 Hz and the high accuracy in short time is given by by

. Based on the above characteristics, the fusion strategy adopted in this paper is to use IPS as the absolute constraint of the positional map, and use VO to establish relative constraints between two frames of IPS poses, and since the VO frequency and the release frequency of IPS are different, it is necessary to perform time matching on them before optimization.

In order to facilitate the solution of the Gaussian Newton method, it is necessary to derive the Jacobi matrix of the error terms of the IPS observations with respect to the bit pose. Since the observation in the lattice diagram is a unitary edge connecting only one lattice state quantity, the observation of this state quantity is given directly. IPS constraint corresponds to the residual that is the difference between the observation and the state quantity as shown in Equation (

1). Adding a perturbation to the residuals in Equation (

1) yields Equation (

2). The simplification of Equation (

2) using the concomitant property Equation (

3) and the BCH formula yields Equation (

4), where the residuals are Jacobi with respect to

as Equation (

5).

has the form

.

To construct the least squares problem with IPS optimization added, the observed value of IPS at k moments is denoted as , then the IPS error term at k moments can be denoted as .where is the attitude estimate at moment k. The error term of the front-end VO pose estimation is expressed as , is the visually observed pose transformation from moment i to moment j.

All the positional vertices and positional edges can form a graph optimization, which essentially constitutes a least squares problem, where the edges are constraints on the positional observations, and the optimization variables are the poses of the individual vertices. Writing all the error terms as an overall objective function can be expressed as Equation (

6):

where

denotes the set of all optimized edges,

is the information matrix, inverse matrix of the covariance matrix, indicating the magnitude of the weights,

is the error term.

The back-end positional optimization can be achieved by solving Equation (

7). The entire optimization problem can be defined as follows:

After optimizing the construction into a nonlinear least squares problem using the positional map, the global poses are optimized by invoking the methods in the Ceres library for solving the optimal global poses.

5. Experimental Results and Analysis

5.1. Experimental Hardware Environment

In this paper, we use the MarvelMind indoor positioning module [

37] as the fused IPS sensor. MarvelMind consists of three components: fixed beacons, mobile beacons, and localization routing. The fixed beacons are placed within the working area of the mobile robot, and it is necessary to ensure that the mobile beacons maintain a certain Line of Sight (LOS) with at least three fixed beacons at any point in space for localization. The mobile beacons are placed on the mobile robot and are used to obtain information about the position of the mobile robot. Mobile beacons and fixed beacons use the same hardware and are collectively referred to as localization beacons in this paper. For a location beacon, we can change its function in the driver to make it work as a mobile beacon or a fixed beacon, which is shown in

Figure 5a. The location routing plays the role of a central controller in the MarvelMind positioning system. All the information measured by the beacons will be sent to the location routing, which will be processed and calculated by the location routing, as shown in

Figure 5b.

To ensure the accuracy of positioning, the built MarvelMind system should contain 3 to 4 fixed beacons. The system is built in the way shown in

Figure 6. The fixed beacons can be mounted on walls or ceilings or fixed with brackets, and the maximum distance between fixed beacons is 50 m. The mobile beacons are placed on the object under test, and the mobile robot can transmit the positioning information acquired by the mobile beacons to the mobile robot’s host computer through the USB interface. The localization routing can be placed anywhere in the workspace and can work using a USB to power it.

Since the algorithm in this paper requires the placement of dual MarvelMind tags and also requires the mobile robot chassis to be equipped with a PC and connected with a network cable for distributed communication. Based on this need, the original AUTOLABOR mobile robot was modified to suit the experimental requirements of this paper. The modified mobile robot platform is shown in

Figure 7, with the addition of dual MarvelMind location tags and an additional layer for the PC to ensure distributed information transmission using a network cable.

5.2. Experimental Design

In this section, we verify the effect of fused IPS back-end optimization algorithm by loop trajectory experiment and straight trajectory experiment. In the loop trajectory experiment, the results of loopback detection are used as the standard trajectory to compare the bit-pose trajectory results calculated by the back-end optimization algorithm of visual fusion IPS and ORB_SLAM2 back-end algorithm (without adding the back-end optimization of IPS) in the case of no loopback detection. And the straight trajectory experiment uses the straight line of the real scene as the standard trajectory to evaluate the accuracy of the back-end optimization algorithm of the fused IPS in this paper.

In the back-end global bit pose estimation experiment, in order to have the same initial value of odometer as the control group, the back-end optimization algorithm of fusion IPS uses the same VO part as the front-end to obtain the initial value of bit pose in this experiment as the control group. The experimental design is shown in

Figure 8, where

traj_fusion is the trajectory result obtained by the fused IPS back-end optimization algorithm proposed in this paper.

traj_fusion trajectory is optimized without loopback detection.

traj_noloop is the trajectory obtained directly by the traditional back-end optimization algorithm of ORB_SLAM2’s back-end optimization algorithm without fused IPS and without loopback detection. The

traj_loop is the trajectory obtained by the traditional back-end optimization algorithm and optimized by loopback detection.

traj_loop results have no cumulative drift and high accuracy due to the processing of loopback detection threads, which is used as the standard trajectory in the loop trajectory experiment.

5.3. Mobile Robot Platform Circular Trajectory Experiment

The experiment collected three groups of data with different travel trajectories. The travel distance of trajectory 1 is about 14 m, the travel distance of trajectory 2 is about 16.5 m, and the travel distance of trajectory 3 is about 17 m.

We used EVO-SLAM trajectory accuracy evaluation software [

38] for visualization, and

Figure 9 shows the error trajectories of the three sets of trajectory data collected, in which the dashed trajectories are the standard trajectories, i.e.,

traj_loop obtained by loopback detection optimization.

Figure 9a–c shows the error trajectory obtained by the back-end optimization algorithm of visual fusion IPS, and

Figure 9d–f shows the error trajectory obtained by the ORB_SLAM2 back-end algorithm. From the results of the three sets of trajectories, it can be seen that the trajectory

traj_noloop obtained by the back-end optimization algorithm of the unfused IPS increases the error as the running time of the mobile robot increases, and the estimated poses are no longer in the same position when the robot returns to the starting position due to the cumulative error. In contrast, the trajectory

traj_fusion obtained by the back-end optimization algorithm with IPS fusion has no cumulative drift in the overall trajectory due to the global positional constraints of IPS, and the starting and ending poses of the robot are also closer from the intuitive results of the trajectory.

Figure 10 shows the trajectory error line plot of the loop trajectory plotting error with respect to time variation for different driving trajectories, and APE means Absolute Pose Error. It is obvious from the figure that the ORB_SLAM2 back-end algorithm results in increasing error with time change due to the cumulative error. In contrast, the trajectory error of the

traj_fusion of the algorithm in this paper is more stable in a certain interval range of small floating, and does not appear constantly rising trend.

As can be seen from the trajectory error line graph, the back-end optimization algorithm incorporating IPS in this paper can effectively solve the cumulative drift problem of VSLAM and ensure the global consistency of estimated poses.

The maximum value (Max), standard deviation (Std), mean (Mean) and root-mean-squared error (RMSE) of the trajectory error are calculated for all three sets of data. The standard deviation can reflect the degree of deviation of the trajectory error from the mean, and the smaller the standard deviation, the less deviation from the mean. The root-mean-squared error needs to be calculated for all poses and the result is calculated by Equation (

8):

The error statistics of the three data sets in

Table 1,

Table 2 and

Table 3. From the Max data, it can be seen that the error of the algorithm in this paper is optimized due to the exclusion of data affected by noise through the IPS outlier rejection algorithm, which ensures that the errors of the acquired positional trajectories are maintained below 0.1 m. In the data of Std

traj_fusion value is smaller than

traj_noloop value, which indicates that the floating degree of trajectory error of the back-end optimization algorithm of fusion IPS is smaller, and further indicates that the algorithm of this paper solves the problem of cumulative drift. From the Mean and RMSE data, it can be seen that the error between the back-end optimization algorithm of fusion IPS and the loopback detection result as the standard trajectory is only between 3 cm and 6 cm, so if the mobile robot performs a task in which the loopback trajectory cannot be driven, the back-end optimization algorithm of fusion IPS proposed in this paper can ensure that the trajectory accuracy obtained is close to the loopback detection optimization results, which in turn solves the cumulative drift problem of VSLAM under the loopbackless task.

5.4. Experiment on Straight Trajectory of Mobile Robot Platform

This experiment collects two sets of straight trajectories, the running speed of the two data carts are different, the speed of the mobile robot is 0.05 m/s, and 0.3 m/s respectively, and the distance of the carts travel is about 7 m. The acquired trajectory data, groundtruth indicates the trajectory true value, traj_fusion is the trajectory result obtained by the optimization algorithm in this paper. traj_unloop is the trajectory result obtained by ORB_SLAM2 back-end algorithm, the trajectory is not fused IPS nor loopback detection optimization.

Calculating the trajectory error can get the results shown in

Figure 11, which reflects the trajectory error of two sets of straight-line data, where the speed is 0.05 m/s in

Figure 11a,b, and 0.3 m/s in

Figure 11c,d.

Figure 11a,c show the trajectory

traj_fusion of fusion IPS, and

Figure 11b,d shows the traditional back-end optimized trajectory

traj_noloop. it can be seen in the figure that the

traj_noloop trajectory also has the problem of cumulative drift in the straight line state, and the mobile robot runs in the

x-axis and drifts in the

y-axis. While

traj_fusion trajectory results from the graph does not have a larger degree of positional drift.

From the results in the

Figure 12, we can see that in the state of straight line driving, the error of

traj_fusion trajectory of the algorithm after fusion IPS no longer increases with the growth of running time, and the overall error trend is relatively smooth, and the overall accuracy is maintained at about 0.05m, which effectively solves the problem of cumulative drift. And both sets of data show a similar trend, indicating that the running speed of the mobile robot does not have a large degree of influence on the accuracy of the positional trajectory of the fused IPS back-end optimization algorithm.

Similarly the maximum value (Max), Standard Deviation (Std), Mean (Mean) and Root-Mean-Squared Error (RMSE) are calculated for all data trajectory errors. The error statistics of the two sets of data in

Table 4 and

Table 5 can be obtained.

The maximum error of traj_fusion is also controlled below 0.1 m from the Max, which proves the effectiveness of IPS outlier suppression algorithm. And the traj_fusion Std is smaller than the result of traj_noloop, which indicates that the back-end optimization algorithm trajectory results of fusion IPS have smaller oscillation amplitude and no obvious cumulative drift phenomenon. From the results of Mean and RMSE, it can be seen that the error between traj_fusion trajectory and real trajectory can reach centimeter level, so the accuracy of traj_fusion can reach between 0.2 m and 0.4 m.

The analysis of the experimental results of the straight trajectory shows that the back-end optimization algorithm proposed in this paper can solve the cumulative drift problem of the VSLAM system, and the positioning accuracy of the algorithm can be maintained between 0.02 m and 0.03 m to meet the demand of indoor positioning. Moreover, since the algorithm can solve the cumulative drift problem of the VSLAM system without loopback detection, it can be used and maintain high accuracy in tasks where mobile robots cannot drive loopback trajectories.

6. Conclusions

SLAM is one of the key technologies for realizing research areas such as mobile robotics, autonomous driving, and mixed reality, and is also an important part of mobile robots to perceive their surroundings. This paper discusses the cumulative drift problem in VSLAM and the impact of the emergence of cumulative drift on VSLAM systems. A back-end optimization algorithm incorporating IPS is proposed for the many limitations of loopback detection methods for solving the cumulative error problem in the VSLAM framework. By comparing the experimental results of the circular trajectory and the straight trajectory of the mobile robot driving indoors, it can be seen that the back-end optimization algorithm of fusion IPS has a smaller error between the starting and ending positions and has a smaller error with the optimized trajectory of loopback detection, which can solve the cumulative drift problem instead of loopback detection. Therefore, the back-end optimization algorithm of the fused IPS proposed in this paper can solve the cumulative drift problem in the environment where loopback detection does not work, which extends the usage environment of the VSLAM system.

In this paper, the construction of the map is based on the purpose of indoor reconstruction only, and the method will also be limited by the sensor performance, and the accuracy will be affected, for example, the sensor range limits the size of the indoor environment, and the perception range of the depth camera likewise limits the scalability. We can only reach the upper limit of the sensors after fusion and cannot break through. Therefore, the next work will consider the optimization and improvement of the algorithm in this paper based on the productization application, so that the algorithm can automatically choose a more suitable algorithm according to the environment, and have the ability to switch the state automatically, such as when switching from indoor environment to outdoor environment, the algorithm can switch from IPS fusion to GPS. Meanwhile, we can build a 3D octree map by VSLAM, and build a better visual effect The dense point cloud map can better realize the localization and navigation of mobile robots.