Multi-Scale Strengthened Directional Difference Algorithm Based on the Human Vision System

Abstract

1. Introduction

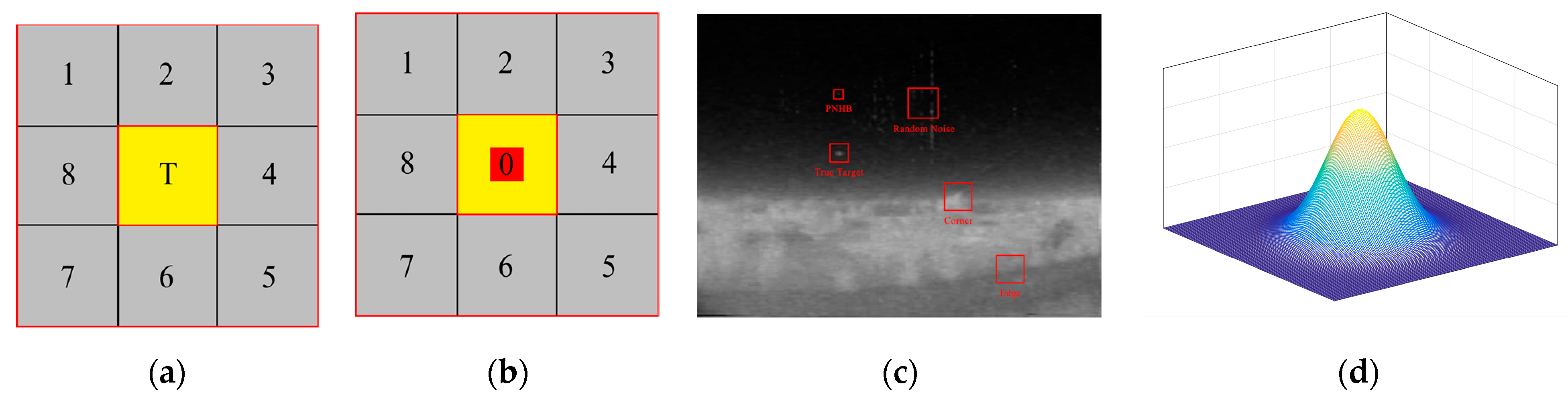

- The previous scanning window is improved: the center pixel of the window does not participate in the calculation, so high-brightness pixel-level noise (PNHB) can be handled effectively.

- Using the new scanning window and the anisotropy of the small target itself, a local directional intensity measure (LDIM) is proposed.

- Considering the features of the true target, the features of the background neighborhood, and the features between them, a local directional fluctuation measure (LDFM) is proposed.
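The effect of leaving the center pixel out of the window can be illustrated with a minimal sketch. It is not the authors' window definition: the 3 × 3 window size, the intensity levels, and the helper names `window_mean` and `flat_image` are assumptions chosen only for illustration.

```python
def window_mean(img, row, col, half, include_center):
    """Mean intensity of the (2*half+1) x (2*half+1) window centred on (row, col)."""
    values = []
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            if (r, c) == (row, col) and not include_center:
                continue  # the centre pixel is left out of the calculation
            values.append(img[r][c])
    return sum(values) / len(values)


def flat_image(size, level):
    """Square image with a constant intensity (hypothetical test scene)."""
    return [[level] * size for _ in range(size)]


# Single-pixel high-brightness noise on a flat background of level 10.
noise_img = flat_image(7, 10)
noise_img[3][3] = 250

# A 3 x 3 target of level 100 on the same background.
target_img = flat_image(7, 10)
for r in range(2, 5):
    for c in range(2, 5):
        target_img[r][c] = 100

noise_with = window_mean(noise_img, 3, 3, 1, include_center=True)
noise_without = window_mean(noise_img, 3, 3, 1, include_center=False)
target_without = window_mean(target_img, 3, 3, 1, include_center=False)

print(noise_with, noise_without, target_without)
```

With the center included, the single noisy pixel raises the window mean well above the background level. With the center excluded, the noisy window falls back to the background level of 10, while the window over the extended target stays at 100.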

2. Related Work

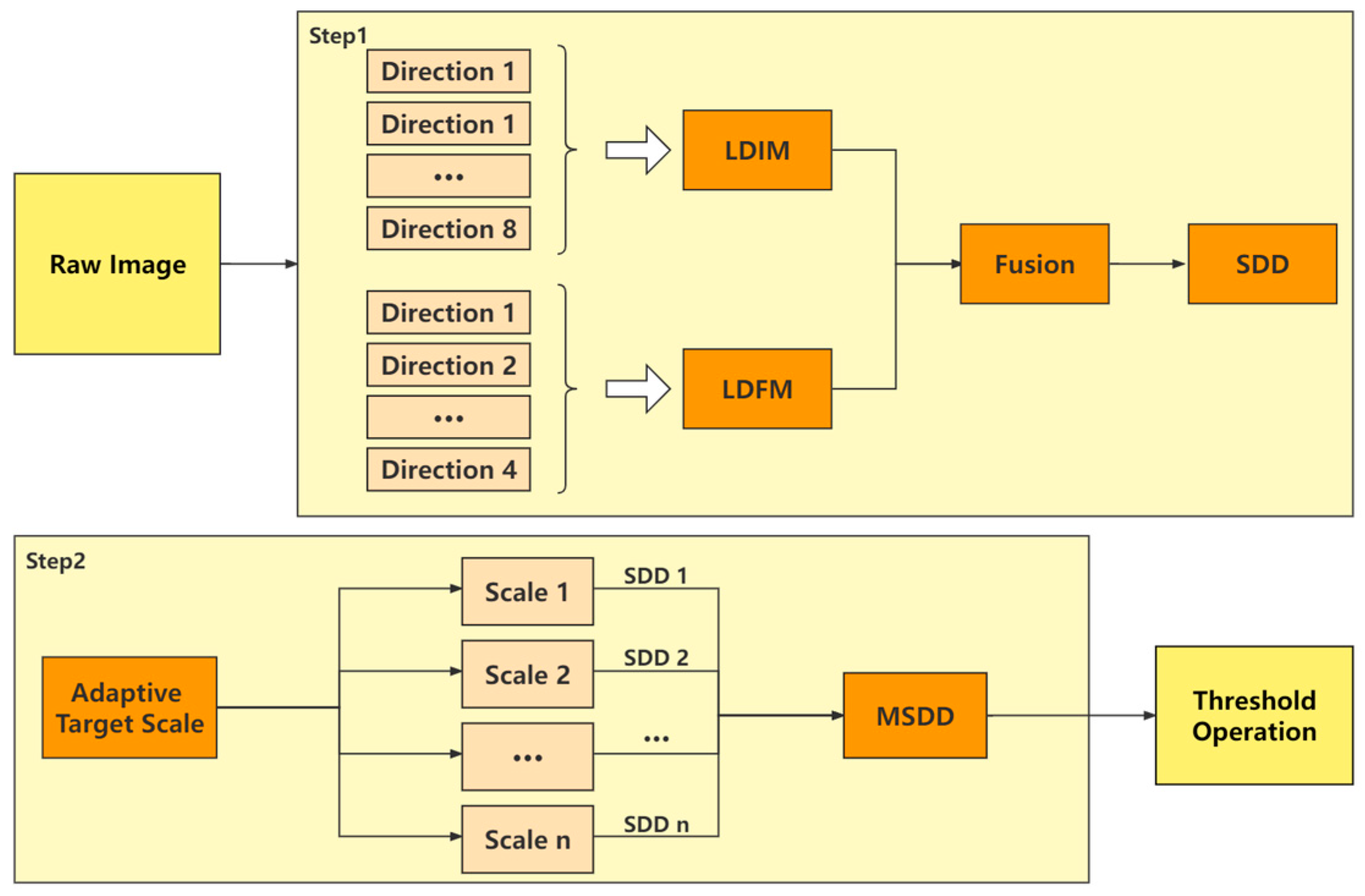

3. Materials and Methods

3.1. Local Directional Intensity Measure

3.2. Local Directional Fluctuation Measure

3.3. Small Target Detection Using

3.4. Threshold Operation

4. Experimental Results

4.1. Experimental Settings

4.1.1. Related Metrics

4.1.2. Test Datasets and Baseline Method

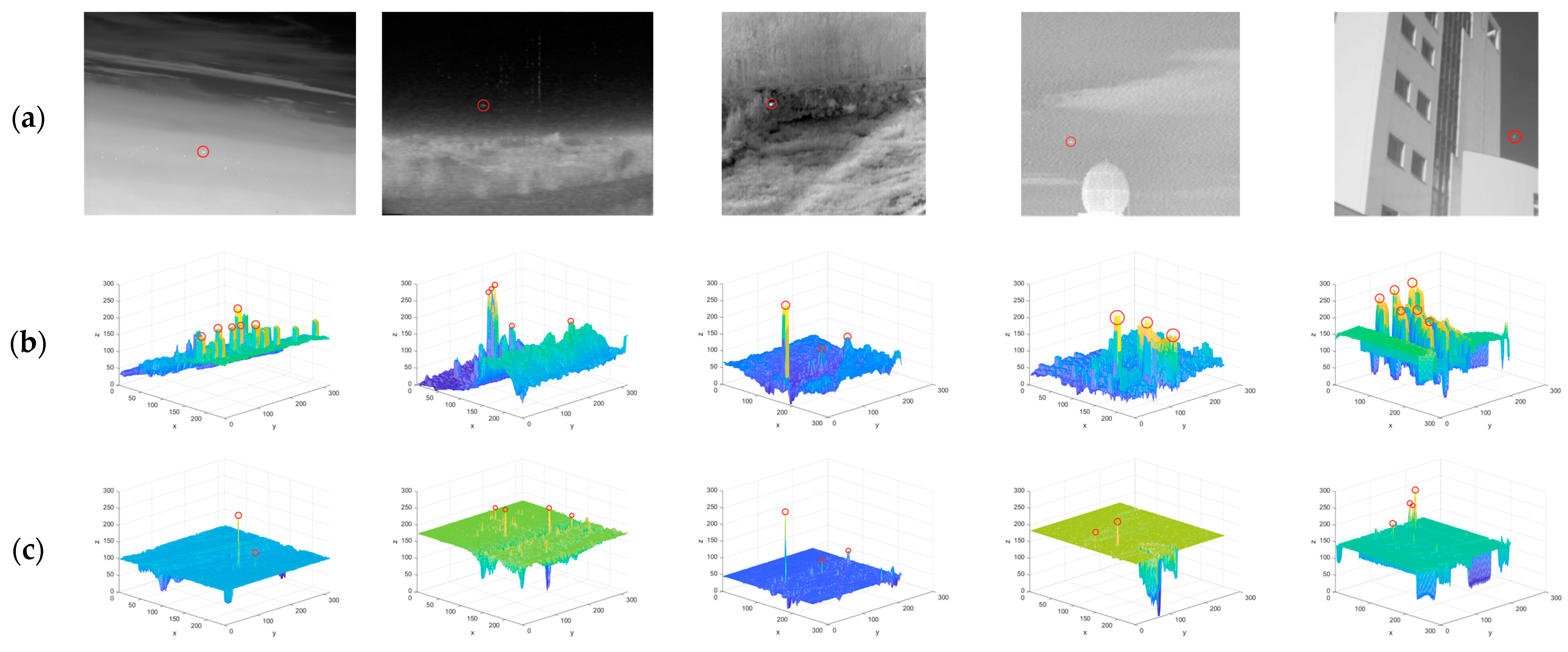

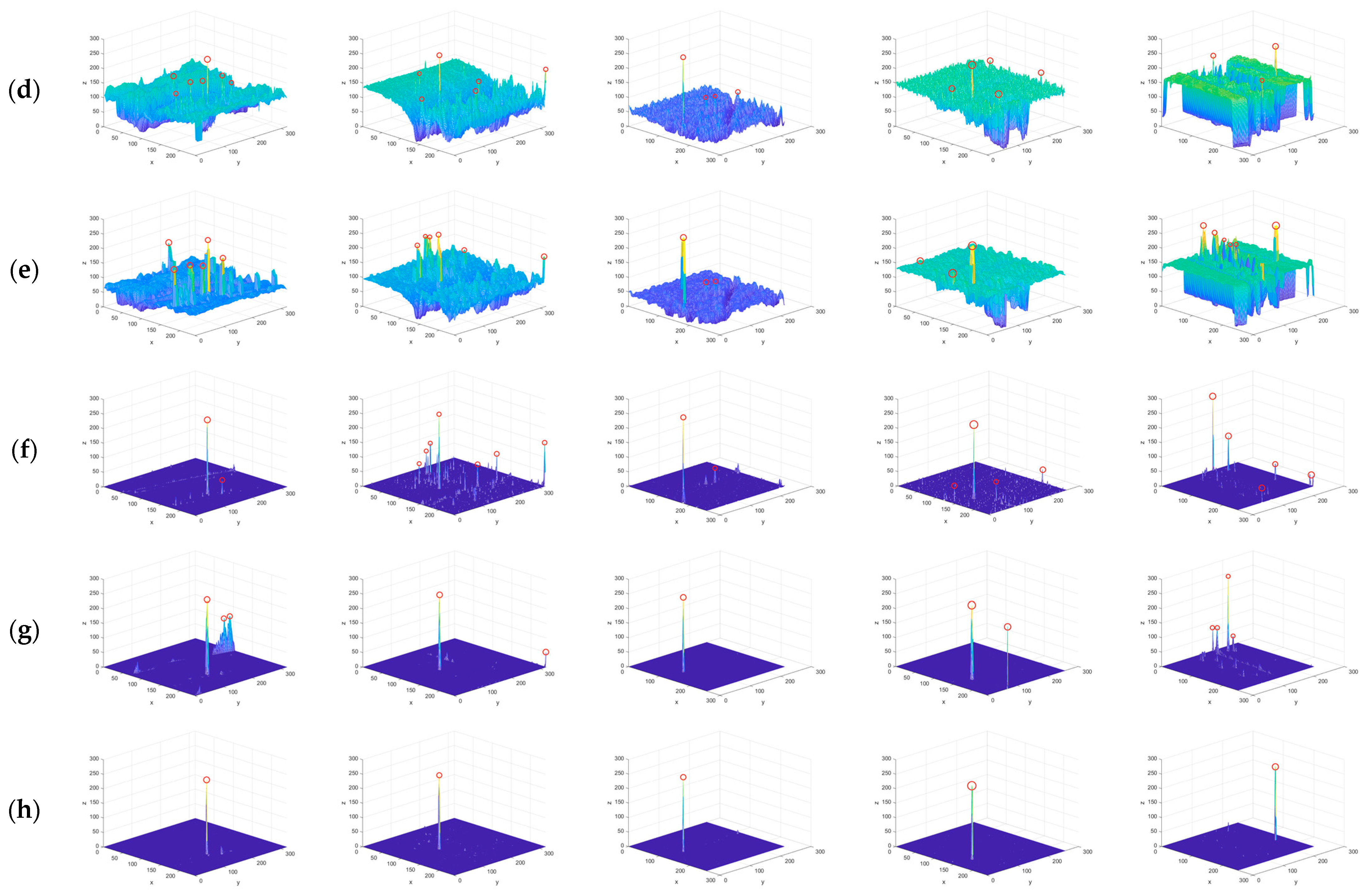

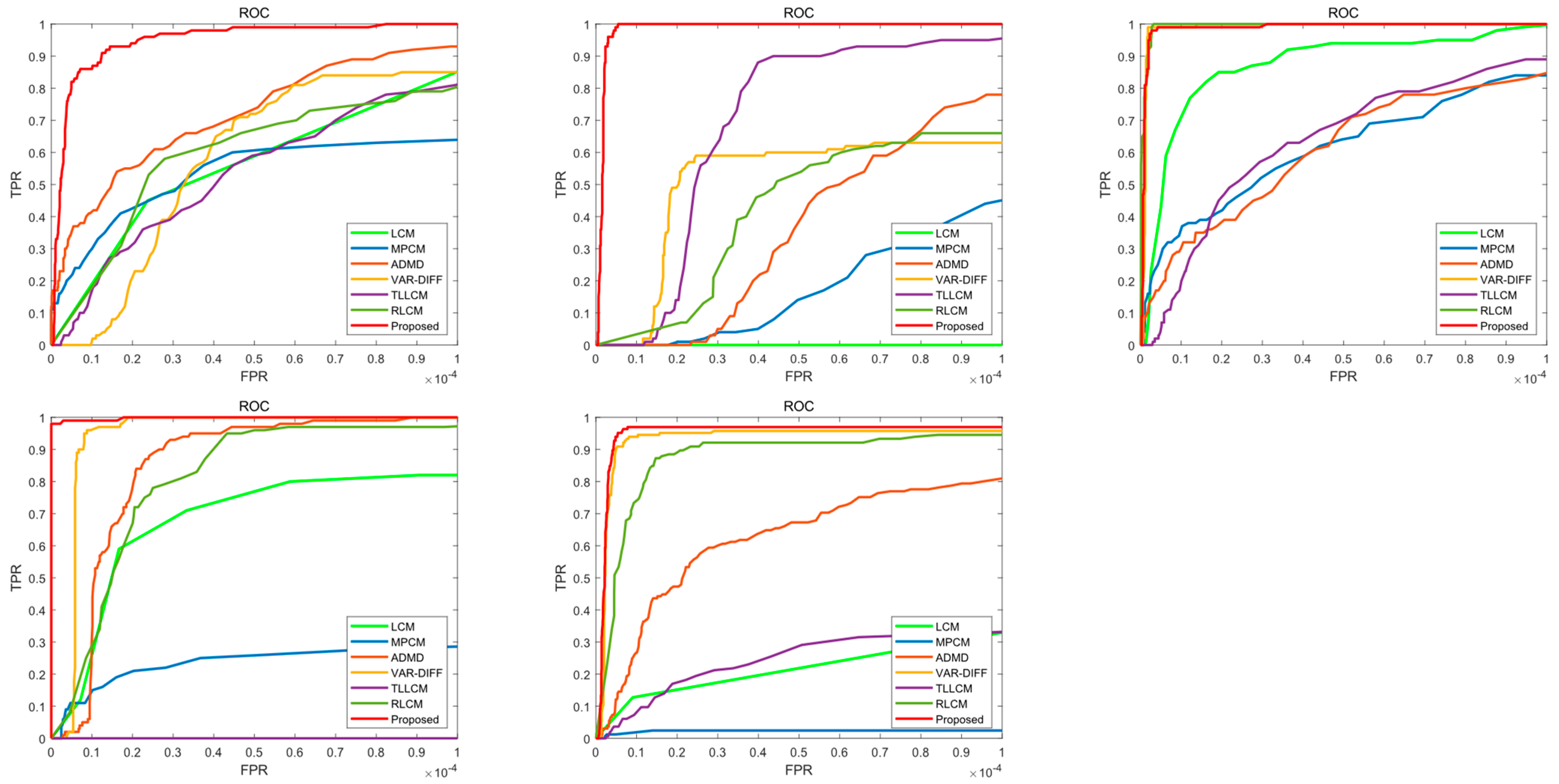

4.2. Comparison to Baseline Methods

5. Discussion

- If the pixel under test is a true target center, then because true small targets usually have a large positive contrast with their neighborhood, its D will be large, so its LDIM will be large. Meanwhile, of the quantities that enter its LDFM, two will be large and one will be small, so its LDFM will be large. Therefore, the MSDD will be large.

- If the pixel under test is pure background, the pixel intensities in such an area differ very little, so its D will be close to 0 and its LDIM will be small. Meanwhile, its LDFM is approximately equal to 1. Therefore, the MSDD will be small.

- If the pixel under test is a background edge, such regions are usually locally directional, so its D will be small and its LDIM will be small. Meanwhile, its LDFM is approximately equal to 1. Therefore, the MSDD will be small.

- If the pixel under test is a corner edge, note that such areas often appear at the edges of clouds or buildings and tend to have large positive contrast with some of their neighborhoods. However, the computation in LDIM is directional, so the LDIM will be small. Meanwhile, the LDFM is also a directional calculation, so it will be small as well. Overall, the final MSDD will be smaller than that of a true target.

- If the pixel under test is a PNHB, it is bright but usually occupies only a single pixel. Because the center point of the newly constructed target window does not participate in the calculation, the proposed algorithm can handle this special case. The specific result then depends on the area surrounding the pixel, for which the cases above apply.
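The qualitative behavior in the cases above can be reproduced with a small sketch. It does not implement the paper's LDIM, LDFM, or MSDD, whose formulas are not given in this text. As an assumption, it uses a generic directional difference: the mean of a center-excluded target window minus the mean of the background patch in each of eight directions, taking the minimum over directions and clipping at zero. The scene layouts, intensity levels, and function names are hypothetical.

```python
DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def patch_mean(img, row, col, half, skip_center=False):
    """Mean of a square patch; optionally leave out the patch's centre pixel."""
    values = [
        img[r][c]
        for r in range(row - half, row + half + 1)
        for c in range(col - half, col + half + 1)
        if not (skip_center and (r, c) == (row, col))
    ]
    return sum(values) / len(values)


def directional_difference(img, row, col, half=1):
    """Smallest target-minus-background difference over eight directions (assumed measure)."""
    step = 2 * half + 1  # neighbouring patches touch the target window without overlapping it
    target = patch_mean(img, row, col, half, skip_center=True)
    diffs = [
        target - patch_mean(img, row + dr * step, col + dc * step, half)
        for dr, dc in DIRECTIONS
    ]
    return max(min(diffs), 0.0)


def make_scene(kind, size=11, background=10):
    """Build a hypothetical 11 x 11 scene whose point of interest is pixel (5, 5)."""
    img = [[background] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            if kind == "target" and 4 <= r <= 6 and 4 <= c <= 6:
                img[r][c] = 100  # 3 x 3 bright target
            elif kind == "edge" and c >= 5:
                img[r][c] = 100  # vertical edge of a bright region
            elif kind == "corner" and r >= 5 and c >= 5:
                img[r][c] = 100  # corner of a bright region
            elif kind == "pnhb" and (r, c) == (5, 5):
                img[r][c] = 250  # single bright noisy pixel
    return img


results = {
    kind: directional_difference(make_scene(kind), 5, 5)
    for kind in ("target", "background", "edge", "corner", "pnhb")
}
print(results)
```

Under these assumptions only the true target yields a large response. The edge and corner scenes are suppressed because at least one direction is as bright as the center, and the PNHB scene is suppressed because the bright pixel itself is excluded from the target window.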

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A.G. Infrared patch-image model for small target detection in a single image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Cui, Z.; Yang, J.; Li, J.; Jiang, S. An infrared small target detection framework based on local contrast method. Measurement 2016, 91, 405–413.

- Bi, Y.; Bai, X.; Jin, T.; Guo, S. Multiple feature analysis for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1333–1337.

- Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Entropy-based window selection for detecting dim and small infrared targets. Pattern Recognit. 2017, 61, 66–77.

- Gao, J.; Lin, Z.; An, W. Infrared small target detection using a temporal variance and spatial patch contrast filter. IEEE Access 2019, 7, 32217–32226.

- Srivastava, H.B.; Limbu, Y.B.; Saran, R.; Kumar, A. Airborne infrared search and track systems. Def. Sci. J. 2007, 57, 739.

- Zhang, S.; Huang, F.; Liu, B.; Yu, H.; Chen, Y. Infrared dim target detection method based on the fuzzy accurate updating symmetric adaptive resonance theory. J. Vis. Commun. Image Represent. 2019, 60, 180–191.

- Yang, C.; Ma, J.; Qi, S.; Tian, J.; Zheng, S.; Tian, X. Directional support value of Gaussian transformation for infrared small target detection. Appl. Opt. 2015, 54, 2255–2265.

- Zhang, P.; Wang, X.; Wang, X.; Fei, C.; Guo, Z. Infrared small target detection based on spatial-temporal enhancement using quaternion discrete cosine transform. IEEE Access 2019, 7, 54712–54723.

- Nie, J.; Qu, S.; Wei, Y.; Zhang, L.; Deng, L. An Infrared Small Target Detection Method Based on Multiscale Local Homogeneity Measure. Infrared Phys. Technol. 2018, 90, 186–194.

- Shao, X.; Fan, H.; Lu, G.; Xu, J. An improved infrared dim and small target detection algorithm based on the contrast mechanism of human visual system. Infrared Phys. Technol. 2012, 55, 403–408.

- Chen, C.P.; Li, H.; Wei, Y.; Xia, T.; Tang, Y.Y. A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 2013, 52, 574–581.

- Ma, J.; Zhao, J.; Ma, Y.; Tian, J. Non-rigid visible and infrared face registration via regularized Gaussian fields criterion. Pattern Recognit. 2015, 48, 772–784.

- Han, J.; Ma, Y.; Huang, J.; Mei, X.; Ma, J. An infrared small target detecting algorithm based on human visual system. IEEE Geosci. Remote Sens. Lett. 2016, 13, 452–456.

- Qiang, W.; Hua-Kai, L. An Infrared Small Target Fast Detection Algorithm in the Sky Based on Human Visual System. In Proceedings of the 2018 4th Annual International Conference on Network and Information Systems for Computers (ICNISC), Wuhan, China, 20–22 April 2018; pp. 176–181.

- Han, J.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A robust infrared small target detection algorithm based on human visual system. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

- Wei, Y.; You, X.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recognit. 2016, 58, 216–226.

- Qin, Y.; Li, B. Effective infrared small target detection utilizing a novel local contrast method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

- Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Small infrared target detection based on weighted local difference measure. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4204–4214.

- Han, J.; Liang, K.; Zhou, B.; Zhu, X.; Zhao, J.; Zhao, L. Infrared small target detection utilizing the multiscale relative local contrast measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

- Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Zhao, Q.; Zhang, X.; Li, N. Infrared small target detection based on the weighted strengthened local contrast measure. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1670–1674.

- Rivest, J.F.; Fortin, R. Detection of dim targets in digital infrared imagery by morphological image processing. Opt. Eng. 1996, 35, 1886–1893.

- Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-mean and max-median filters for detection of small targets. In Proceedings of the SPIE’s International Symposium on Optical Science, Engineering, and Instrumentation, Denver, CO, USA, 4 October 1999; pp. 74–83.

- Zhang, B.; Zhang, T.; Cao, Z.; Zhang, K. Fast new small-target detection algorithm based on a modified partial differential equation in infrared clutter. Opt. Eng. 2007, 46, 106401.

- Kim, S.; Lee, J. Scale invariant small target detection by optimizing signal-to-clutter ratio in heterogeneous background for infrared search and track. Pattern Recognit. 2012, 45, 393–406.

- Bae, T.W.; Sohng, K.I. Small target detection using bilateral filter based on edge component. J. Infrared Millim. Terahertz Waves 2010, 31, 735–743.

- Dai, Y.; Wu, Y.; Song, Y. Infrared small target and background separation via column-wise weighted robust principal component analysis. Infrared Phys. Technol. 2016, 77, 421–430.

- Hu, T.; Zhao, J.J.; Cao, Y.; Wang, F.L.; Yang, J. Infrared small target detection based on saliency and principle component analysis. J. Infrared Millim. Waves 2010, 29, 303–306.

- Cao, Y.; Liu, R.M.; Yang, J. Infrared small target detection using PPCA. Int. J. Infrared Millim. Waves 2008, 29, 385–395.

- Wang, H.; Zhou, L.; Wang, L. Miss detection vs. false alarm: Adversarial learning for small object segmentation in infrared images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8509–8518.

- Zhao, B.; Wang, C.; Fu, Q.; Han, Z. A novel pattern for infrared small target detection with generative adversarial network. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4481–4492.

- Fan, Z.; Bi, D.; Xiong, L.; Ma, S.; He, L.; Ding, W. Dim infrared image enhancement based on convolutional neural network. Neurocomputing 2018, 272, 396–404.

- Zhao, D.; Zhou, H.; Rang, S.; Jia, X. An adaptation of CNN for small target detection in the infrared. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 669–672.

- Moradi, S.; Moallem, P.; Sabahi, M.F. Fast and robust small infrared target detection using absolute directional mean difference algorithm. Signal Process. 2020, 117, 107727.

- Deng, H.; Sun, X.; Liu, M.; Ye, C.; Zhou, X. Infrared small-target detection using multiscale gray difference weighted image entropy. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 60–72.

- Han, J.; Moradi, S.; Faramarzi, I.; Liu, C.; Zhang, H.; Zhao, Q. A local contrast method for infrared small-target detection utilizing a tri-layer window. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1822–1826.

- Nasiri, M.; Chehresa, S. Infrared small target enhancement based on variance difference. Infrared Phys. Technol. 2017, 82, 107–119.

- Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric contextual modulation for infrared small target detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 950–959.

- Han, J.; Xu, Q.; Moradi, S.; Fang, H.; Yuan, X.; Qi, Z.; Wan, J. A Ratio-Difference Local Feature Contrast Method for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Li, Y.; Li, Z.; Li, W.; Liu, Y. Infrared Small Target Detection Based on Gradient-Intensity Joint Saliency Measure. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7687–7699.

| Datasets | Frames | Resolution | Target Size | Target Details | Background Details |
|---|---|---|---|---|---|
| Seq-1 [39] | 100 | 320 × 240 | 5 × 5 to 7 × 7 | Keeping little motion; small in size | Multiple PNHB; heavy noise |
| Seq-2 [38,39] | 100 | 320 × 240 | 5 × 5 to 7 × 7 | Keeping motion; low SCR value | Complex clouds; heavy noise |
| Seq-3 [37] | 100 | 256 × 256 | 5 × 5 to 7 × 7 | Keeping motion; irregular shape | Multiple complex objects; heavy noise |
| Seq-4 [38] | 100 | 256 × 239 | 3 × 3 to 7 × 7 | Keeping motion; low SCR value | Multiple buildings; heavy noise |
| SIRST [40] | 427 | Variety | 3 × 3 to 11 × 11 | Variety | Variety |

| Datasets | LCM | MPCM | RLCM | TLLCM | VAR-DIFF | ADMD | Proposed |
|---|---|---|---|---|---|---|---|
| Seq-1 | 2.9678 | 7.1558 | 4.9549 | 3.4675 | 143.2399 | 45.8036 | 220.5620 |
| Seq-2 | 2.2637 | 4.0981 | 4.8788 | 7.9981 | 40.2805 | 90.1688 | 174.6367 |
| Seq-3 | 2.8371 | 4.4802 | 10.0950 | 5.7227 | 65.7572 | 65.5795 | 208.1402 |
| Seq-4 | 1.2835 | 4.4898 | 2.1285 | 2.9342 | 35.1933 | 19.5947 | 97.7643 |
| SIRST | 2.0452 | 3.7768 | 3.8955 | 3.1540 | 47.3826 | 42.0477 | 100.4070 |

| Datasets | LCM | MPCM | RLCM | TLLCM | VAR-DIFF | ADMD | Proposed |
|---|---|---|---|---|---|---|---|
| Seq-1 | 1.2985 | 6.5079 | 3.1104 | 2.1061 | 32.7192 | 93.1016 | 653.6033 |
| Seq-2 | 1.3230 | 7.3366 | 3.5213 | 1.9475 | 54.6992 | 25.6519 | 222.1676 |
| Seq-3 | 2.3014 | 10.8582 | 3.8682 | 3.5872 | 179.8385 | 34.4299 | 132.4181 |
| Seq-4 | 1.2641 | 4.3856 | 3.4904 | 2.7104 | 695.0363 | 44.2576 | 425.8237 |
| SIRST | 1.4672 | 8.2238 | 3.9480 | 2.6238 | 1.5051 × 10³ | 189.7067 | 1.6933 × 10³ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Zheng, Y.; Li, X. Multi-Scale Strengthened Directional Difference Algorithm Based on the Human Vision System. Sensors 2022, 22, 10009. https://doi.org/10.3390/s222410009
