A Systematic Review of Online Speech Therapy Systems for Intervention in Childhood Speech Communication Disorders

Abstract

1. Introduction

2. Background and Related Works

2.1. Communication Disorders

2.2. Telepractice and OST

2.3. Speech Recognition

2.4. Machine Learning

2.5. Related Work

3. Research Methodology

3.1. Research Questions

- RQ1.1: For which goals/context have OST Systems been developed?

- RQ1.2: What are the features of the OST systems?

- RQ1.3: For which target groups have OST systems been used?

- RQ1.4: What are the adopted architecture designs of the OST systems?

- RQ1.5: What are the adopted ML approaches in these OST systems?

- RQ1.6: What are the properties of the software used for these systems?

- RQ2.1: Which evaluation approaches have been used to assess the efficacy of the OST systems?

- RQ2.2: Which performance metrics have been used to gauge the efficacy of the OST systems?

3.2. Search Strategy

3.2.1. Search Scope

3.2.2. Search Method

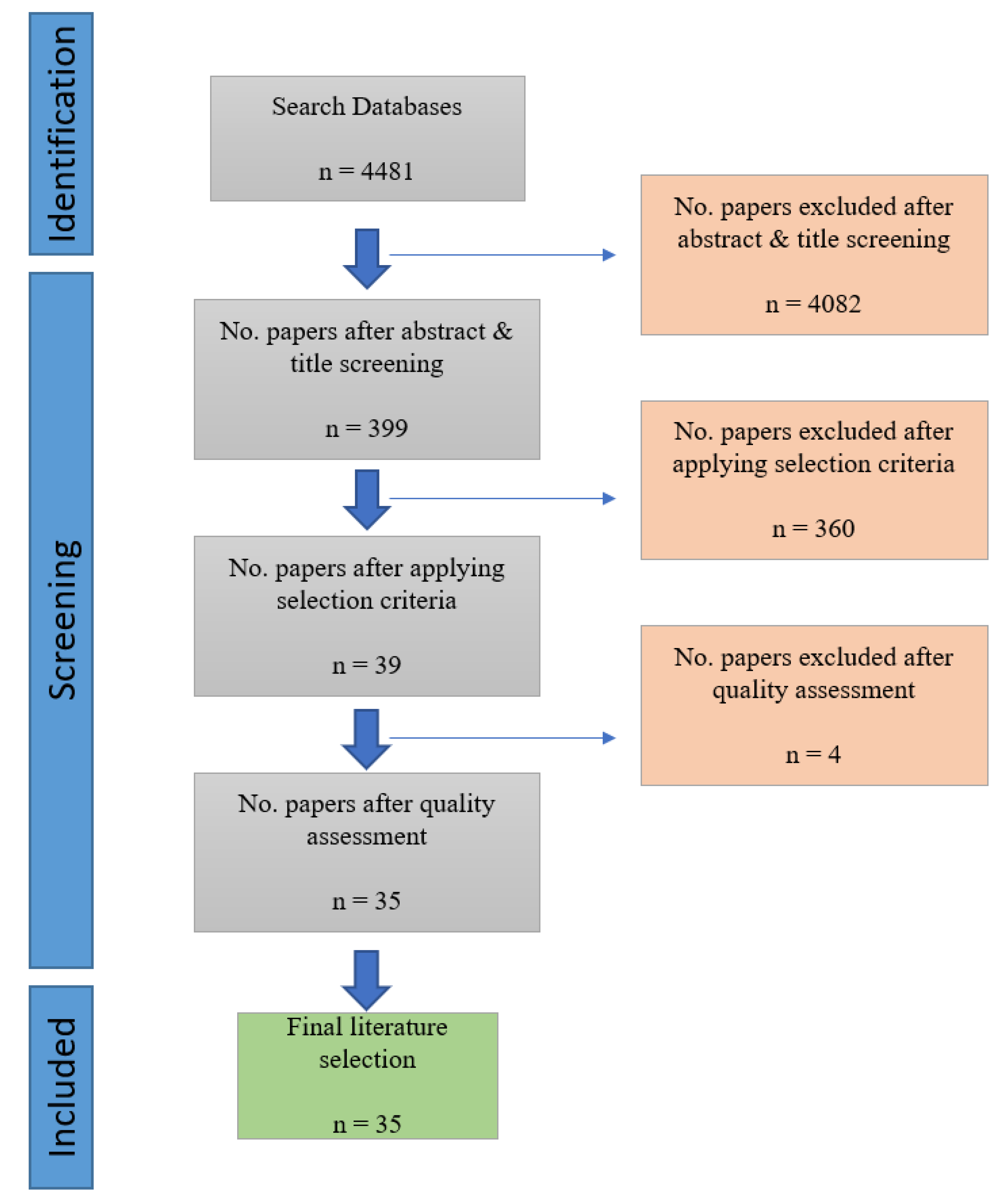

3.3. Study Selection Criteria

3.4. Study Quality Assessment

3.5. Data Extraction and Monitoring

3.6. Data Synthesis and Reporting

4. Results

4.1. For Which Goals/Context Have OST Systems Been Developed?

4.2. What Are the Features of the OST Systems?

- Audio feedback is audio output from a system that informs the user whether he or she is performing well.

- Emotion screening: The system considers the client’s emotions throughout the session, for example, by measuring facial expressions with a face tracker or by asking the child how he or she feels or to rate his or her feelings.

- Error detection: Speech analysis algorithms identify errors by analyzing the produced vowels and consonants individually (Parnandi et al., 2013 [39]).

- Peer-to-peer feedback is a feature that enables multiple clients to participate with each other in an exercise. Peers can provide feedback on each other’s performance in terms of understandability, volume and so on, depending on the exercise’s context and scope.

- Speech recognition, also known as speech-to-text or automatic speech recognition, is a feature that enables a program to convert human speech into a written format.

- Recommendation strategy: A feature that provides suggestions for helpful follow-up exercises and activities that can be undertaken by the SLP based on the correctness of pronunciation (Franciscatto et al., 2021 [1]).

- Reporting: Providing statistical reports about the child’s progress and the level of performance during the session.

- Text-to-speech: A feature that can read digital text on a digital device aloud.

- Textual feedback is textual output from a system that shows the user whether he or she is performing well. For example, when the word is pronounced correctly, the text “Correct Answer” appears, whereas if the word is mispronounced, the text “Incorrect Answer” appears, possibly with an explanation of why it is incorrect.

- User data management: Everything that has to do with keeping track of the personal data of clients, such as account names and age.

- User voice recorder: A feature that provides the option to record the text spoken by the client. The recorded voice can be played back by, for example, the client, the SLP or other actors such as a teacher or parent.

- A virtual 3D model aids in viewing the correct positioning of the lips, tongue and teeth for each sound (Danubianu, 2016).

- Visual feedback is visual output from a system, such as a video game, that shows the user if he or she is performing well or not. For example, a character only proceeds to the next level when the client has pronounced the word correctly. Visual feedback is the character’s movement from level A to level B, as illustrated in Figure 4.

- Voice commands are words spoken by the child that trigger an action in the system. For example, when a child says “jump”, the character in a game jumps.
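To make two of the features above more concrete, the following minimal Python sketch shows one way textual feedback and voice commands could be wired together. It is an illustration only: the function names, the `actions` mapping and the example words are hypothetical, and a plain string comparison stands in for the speech recognition and pronunciation assessment that a real OST system would need.

```python
from typing import Callable, Dict, Optional


def textual_feedback(target_word: str, recognized_word: str) -> str:
    """Return the feedback text for one attempt.

    Assumption: `recognized_word` is a transcript produced by some speech
    recognizer; comparing strings is a stand-in for real pronunciation
    assessment.
    """
    if recognized_word.strip().lower() == target_word.strip().lower():
        return "Correct Answer"
    return "Incorrect Answer"


def handle_voice_command(
    spoken: str, actions: Dict[str, Callable[[], str]]
) -> Optional[str]:
    """Run the action registered for a spoken word; return None if unknown."""
    action = actions.get(spoken.strip().lower())
    return action() if action is not None else None


if __name__ == "__main__":
    # Hypothetical game action triggered by the word "jump".
    game_actions = {"jump": lambda: "character jumps"}
    print(textual_feedback("rabbit", "Rabbit"))
    print(handle_voice_command("Jump", game_actions))
```

Keeping the feedback logic separate from the command dispatch mirrors the way the review lists them as independent features, so either could be replaced without touching the other.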

4.3. For Which Target Groups Have OST Systems Been Used?

4.4. What Are the Adopted Architecture Designs of the OST Systems?

4.4.1. Client–Server System

4.4.2. Repository Pattern

4.4.3. Layered Approach

4.4.4. Standalone System

4.4.5. Pipe-and-Filter Architecture

4.5. What Are the Adopted Machine Learning Approaches in These OST Systems?

4.6. What Are the Properties of the Software Used for These Systems?

4.7. Which Evaluation Approaches Have Been Used to Assess the Efficacy of the OST Systems?

4.7.1. Case Study

4.7.2. Experimental

4.7.3. Observational

4.7.4. Simulation-Based

4.7.5. Not Evaluated

4.8. Which Evaluation Metrics Have Been Used to Gauge the Efficacy of the OST Systems?

- Accuracy describes the percentage of correctly predicted values.

- Recall is the proportion of actual positives that the model correctly identifies [15]: $\text{Recall} = \frac{TP}{TP + FN}$, where TP = true positives and FN = false negatives.

- F1-score is the harmonic mean of precision and recall (Russell and Norvig, 2010): $F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$.

- Precision is the proportion of predicted positives that are actually positive (Russell and Norvig, 2010): $\text{Precision} = \frac{TP}{TP + FP}$, where TP = true positives and FP = false positives.

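- Pearson’s r is a statistic that measures the strength of the linear correlation between two variables.

- Root-mean-square error (RMSE), also called root-mean-square deviation, measures the difference between the predicted values and the observed values: $\text{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(\hat{y}_i - y_i)^2}$, where $\hat{y}_i$ is the predicted value, $y_i$ is the observed value and $n$ is the number of observations.

- Kappa is a statistic that compares the observed agreement with the agreement expected by chance.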

- Error refers to the average error of the system regarding its measures.

- Usability refers to effectiveness, efficiency and satisfaction taken together [27].

- Efficiency refers to the resources spent to achieve effectiveness, such as time to complete the task, the mistakes made and difficulties encountered [27].

- Effectiveness refers to the number of users that can complete the tasks without quitting [27].

- Reliability refers to the level at which the application responds correctly and consistently regarding its purpose [29].

- Sensitivity is the level at which the tool can discriminate the proper pronunciations from the wrong ones [32].

- Coherence specifies whether the exercises selected by the system are appropriate for the child, according to their abilities and disabilities [41].

- Completeness determines if the plans recommended by the expert system are complete and take into account the areas in which the child should be trained to develop specific skills (according to the child’s profile) [41].

- Relevance determines if each exercise’s specificity is appropriate for the child [41].

- Ease of learning memorization looks at how easy it is for the user to perform simple tasks using the interface for the first time [47].
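As an illustration of how the quantitative metrics in the list above relate to one another, the following minimal Python sketch computes accuracy, precision, recall, F1-score, kappa and RMSE from raw counts and value lists. The function names and the example numbers are hypothetical and are not taken from any of the reviewed studies; kappa is computed here as Cohen's kappa for a binary confusion matrix, which is an assumption about the variant meant.

```python
import math
from typing import Dict, Sequence


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute accuracy, precision, recall, F1 and Cohen's kappa from counts."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("at least one observation is required")
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    # Agreement expected by chance, from the row and column totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected) if expected != 1 else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "kappa": kappa}


def rmse(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-square error between predicted and observed values."""
    if len(predicted) != len(observed) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical counts: 40 TP, 10 FP, 20 FN, 30 TN.
    for name, value in classification_metrics(40, 10, 20, 30).items():
        print(f"{name}: {value:.3f}")
    print(f"rmse: {rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]):.3f}")
```

Because all five classification metrics derive from the same four counts, reporting the confusion matrix alongside any single metric lets readers recompute the others.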

5. Discussion and Limitations of the Review

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Quality Assessment Form

| Reference | Q1. Are the Aims of the Study Clearly Stated? | Q2. Are the Scope and Context of the Study Clearly Defined? | Q3. Is the Proposed Solution Clearly Explained and Validated by an Empirical Study? | Q4. Are the Variables Used in the Study Likely to Be Valid and Reliable? | Q5. Is the Research Process Documented Adequately? | Q6. Are All Study Questions Answered? | Q7. Are the Negative Findings Presented? | TOTAL SCORE |

|---|---|---|---|---|---|---|---|---|

| [20] | 1 | 2 | 2 | 2 | 2 | 2 | 1 | 12 |

| [21] | 1 | 2 | 1 | 1 | 1 | 1 | 1 | 8 |

| [22] | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 13 |

| [23] | 2 | 2 | 0 | 2 | 1 | 2 | 2 | 11 |

| [2] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [24] | 1 | 2 | 0 | 1 | 1 | 1 | 2 | 8 |

| [25] | 1 | 1 | 2 | 1 | 1 | 2 | 0 | 8 |

| [1] | 1 | 2 | 2 | 2 | 2 | 2 | 1 | 12 |

| [26] | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 13 |

| [27] | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 13 |

| [49] | 1 | 2 | 0 | 1 | 1 | 1 | 1 | 7 |

| [28] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [29] | 1 | 2 | 2 | 2 | 1 | 1 | 1 | 10 |

| [30] | 1 | 2 | 2 | 1 | 1 | 2 | 1 | 10 |

| [31] | 1 | 2 | 0 | 2 | 2 | 1 | 2 | 10 |

| [32] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [50] | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 5 |

| [33] | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 11 |

| [34] | 1 | 1 | 2 | 2 | 2 | 1 | 2 | 11 |

| [35] | 2 | 2 | 1 | 1 | 1 | 2 | 2 | 11 |

| [36] | 2 | 2 | 2 | 1 | 1 | 1 | 1 | 10 |

| [37] | 2 | 1 | 2 | 2 | 2 | 1 | 2 | 12 |

| [38] | 1 | 2 | 0 | 1 | 2 | 2 | 2 | 10 |

| [39] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [40] | 2 | 2 | 1 | 2 | 2 | 1 | 0 | 10 |

| [41] | 1 | 2 | 2 | 2 | 2 | 1 | 0 | 10 |

| [3] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [42] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [43] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [10] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [9] | 2 | 1 | 1 | 1 | 2 | 2 | 2 | 11 |

| [44,45] | 2 | 1 | 0 | 1 | 2 | 2 | 2 | 10 |

| [46] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 14 |

| [47] | 2 | 2 | 2 | 1 | 1 | 1 | 2 | 11 |

| [5] | 2 | 2 | 0 | 1 | 2 | 2 | 0 | 9 |

| [48] | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 10 |

| [51] | 2 | 1 | 0 | 1 | 0 | 2 | 0 | 6 |

| [52] | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 6 |
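The TOTAL SCORE column above is the sum of the seven per-question scores, with Yes = 2, Partial = 1 and No = 0. The minimal Python sketch below reproduces that arithmetic. The names are hypothetical, and the cut-off `MIN_SCORE = 8` is an illustrative assumption rather than a value stated in this section.

```python
from typing import List

# Scoring scheme of the quality assessment form.
ANSWER_SCORES = {"yes": 2, "partial": 1, "no": 0}

# Hypothetical cut-off used only for illustration.
MIN_SCORE = 8


def total_score(answers: List[str]) -> int:
    """Sum the scores for questions Q1..Q7 of one study."""
    if len(answers) != 7:
        raise ValueError("expected one answer for each of Q1..Q7")
    return sum(ANSWER_SCORES[answer] for answer in answers)


def passes_quality_check(answers: List[str], min_score: int = MIN_SCORE) -> bool:
    """Return True when the total reaches the (assumed) minimum score."""
    return total_score(answers) >= min_score


if __name__ == "__main__":
    # Answers matching the row for reference [20]: 1, 2, 2, 2, 2, 2, 1.
    ref_20 = ["partial", "yes", "yes", "yes", "yes", "yes", "partial"]
    # Answers matching the row for reference [50]: 1, 1, 0, 1, 0, 1, 1.
    ref_50 = ["partial", "partial", "no", "partial", "no", "partial", "partial"]
    print(total_score(ref_20), passes_quality_check(ref_20))
    print(total_score(ref_50), passes_quality_check(ref_50))
```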

Appendix B. Data Extraction Form

| # | Extraction Element | Contents |

|---|---|---|

| General Information | ||

| 1 | ID | |

| 2 | Reference | |

| 3 | SLR Category | Include vs Exclude |

| 4 | Title | The full title of the article |

| 5 | Year | The publication year |

| 6 | Repository | ACM, IEEE, Scopus, Web of Science |

| 7 | Type | Journal vs article |

| Demographic and methodological characteristics of the included studies | ||

| 8 | Intervention target | |

| 9 | Disorder (Target group) | |

| 10 | Target language | |

| 11 | Sample Size | |

| 12 | Participant characteristics | |

| 13 | Evaluation | |

| 14 | Outcome measure | |

| Technological elements of OST systems | ||

| 15 | OST Name | |

| 16 | System objective | |

| 17 | Architecture design | |

| 18 | ML approach | |

| 19 | OST Technology details | |

Appendix C. Sample Size Characteristics of Experimental Studies

| Author | Sample Size | Participant Characteristics |

|---|---|---|

| B | 2 | (1M, 1F): One 5-year-old Turkish speaking boy with no language/speech problem and one 5-year-old Turkish speaking girl with a language disorder because of hearing impairment. |

| C | 356 | 5 to 9 years old Portuguese speaking children |

| E | 40 | 5 to 6 years old Romanian speaking children, boys and girls with difficulties in pronunciation of R and S sounds. |

| G | 5 | 2 to 6 years old English speaking children with hearing impairment. |

| H | 1077 | 3 to 8 years old Portuguese speaking children. |

| I | 60 | (22M, 20F): 42 Dutch-speaking children and adults without disabilities, 14 to 60 years old. (9M, 9F): 18 Dutch-speaking children with severe cerebral palsy, 19 to 75 months old. |

| J | 4 | (2M, 2F): 8 to 10 years old Portuguese speaking children |

| K | 21 | (13M, 1F): fourteen 4- to 12-year-old children with diagnosed SSDs ranging from mild to severe (7 motor-speech and 7 phonological impairments). (4M, 3F): seven typically developing 5- to 12-year-old children. |

| L | 30 | IT professionals |

| M | 10 | Deaf, hard of hearing, implanted children and those who had a speech impediment. |

| O | 11 | (6M, 5F): 5,2 to 6,9 years old German-speaking children suffering from a specific articulation disorder, i.e., [s]-misarticulation |

| P | 5 | Portuguese speaking. 2 females (30 and 46 years) and 3 males (ages: 13, 33, 36). The younger participant is the only one doing speech therapy. |

| Q | 18 | 16 boys and 2 girls were recruited from three psychology offices. Their mean age was 10.54 years (range 2–16; std 4.34). |

| R | 1 | A 4-year-old and a 6-year-old. |

| S | 32 | Children of regular schools |

| T | 12 | Italian speaking children |

| U | Unknown | Children with disabilities and typically developing children. |

| V | 8 | (3M, 1F): 3 to 7 years old children clinically diagnosed with apraxia of speech. |

| X | 22 | 2-year-old children |

| Y | 53 | Children with different types of disabilities and cognitive ages from 0 to 7 years |

| Z | 53 | Children with different types of disabilities and cognitive ages from 0 to 7 years |

| AA | 35 | (13M, 7F): twenty 7- to 10-year-old children with no speech or language impairments. (9M, 6F): fifteen 7- to 9-year-old children with speech and language impairments. |

| BB | 27 | 11 to 34 years old children and adults with mild to severe mental delay or a communication disorder. |

| DD | 143 | 43 parents and 100 teachers (kindergarten and primary school). |

| FF | Unknown | Children and adults with different levels of dysarthria. |

| GG | 20 | (11M, 9F): 15 to 55 years old CP volunteers with speech difficulties and motor impairment. |

| II | 14 | (7M, 7F): 11 to 21 years old children and adults with physical and psychical handicaps like cerebral palsy, Down’s syndrome and similar impairments. |

References

- Franciscatto, M.H.; Del Fabro, M.D.; Damasceno Lima, J.C.; Trois, C.; Moro, A.; Maran, V.; Keske-Soares, M. Towards a speech therapy support system based on phonological processes early detection. Comput. Speech Lang. 2021, 65, 101130.

- Danubianu, M.; Pentiuc, S.G.; Andrei Schipor, O.; Nestor, M.; Ungureanu, I.; Maria Schipor, D. TERAPERS—Intelligent Solution for Personalized Therapy of Speech Disorders. Int. J. Adv. Life Sci. 2009, 1, 26–35.

- Robles-Bykbaev, V.; López-Nores, M.; Pazos-Arias, J.J.; Arévalo-Lucero, D. SPELTA: An expert system to generate therapy plans for speech and language disorders. Expert Syst. Appl. 2015, 42, 7641–7651.

- American Speech-Language-Hearing Association. Speech Sound Disorders: Articulation and Phonology: Overview; American Speech-Language-Hearing Association: Rockville, MD, USA, 2017.

- Toki, E.I.; Pange, J.; Mikropoulos, T.A. An online expert system for diagnostic assessment procedures on young children’s oral speech and language. In Proceedings of the Procedia Computer Science, San Francisco, CA, USA, 28–30 October 2012; Elsevier B.V.: Amsterdam, The Netherlands, 2012; Volume 14, pp. 428–437.

- Ben-Aharon, A. A practical guide to establishing an online speech therapy private practice. Perspect. Asha Spec. Interest Groups 2019, 4, 712–718.

- Furlong, L.; Erickson, S.; Morris, M.E. Computer-based speech therapy for childhood speech sound disorders. J. Commun. Disord. 2017, 68, 50–69.

- Mcleod, S.; Baker, E. Speech-language pathologists’ practices regarding assessment, analysis, target selection, intervention, and service delivery for children with speech sound disorders. Clin. Linguist. Phon. 2014, 28, 508–531.

- Sasikumar, D.; Verma, S.; Sornalakshmi, K. A Game Application to assist Speech Language Pathologists in the Assessment of Children with Speech Disorders. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 6881–6887.

- Rodríguez, W.R.; Saz, O.; Lleida, E. A prelingual tool for the education of altered voices. Speech Commun. 2012, 54, 583–600.

- Chen, Y.P.P.; Johnson, C.; Lalbakhsh, P.; Caelli, T.; Deng, G.; Tay, D.; Erickson, S.; Broadbridge, P.; El Refaie, A.; Doube, W.; et al. Systematic review of virtual speech therapists for speech disorders. Comput. Speech Lang. 2016, 37, 98–128.

- Yu, D.; Deng, L. Automatic Speech Recognition: A Deep Learning Approach (Signals and Communication Technology); Springer: Berlin/Heidelberg, Germany, 2014.

- Ghai, W.; Singh, N. Literature Review on Automatic Speech Recognition. Int. J. Comput. Appl. 2012, 41, 975–8887.

- Padmanabhan, J.; Premkumar, M.J.J. Machine learning in automatic speech recognition: A survey. IETE Tech. Rev. (Inst. Electron. Telecommun. Eng. India) 2015, 32, 240–251.

- Russell, S.; Norvig, P. Artificial Intelligence A Modern Approach, 3rd ed.; Prentice Hall: Hoboken, NJ, USA, 2010.

- Dede, G.; Sazli, M.H. Speech recognition with artificial neural networks. Digit. Signal Process. Rev. J. 2010, 20, 763–768.

- Lee, S.A.S. This review of virtual speech therapists for speech disorders suffers from limited data and methodological issues. Evid. Based Commun. Assess. Interv. 2018, 12, 18–23.

- Jesus, L.M.; Santos, J.; Martinez, J. The Table to Tablet (T2T) speech and language therapy software development roadmap. JMIR Res. Protoc. 2019, 8, e11596.

- Kitchenham, B.; Charters, S. Guidelines for performing systematic literature reviews in software engineering. In Technical Report, Ver. 2.3 EBSE Technical Report; EBSE: Goyang, Republic of Korea, 2007.

- Belen, G.M.A.; Cabonita, K.B.P.; dela Pena, V.A.; Dimakuta, H.A.; Hoyle, F.G.; Laviste, R.P. Tingog: Reading and Speech Application for Children with Repaired Cleft Palate. In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Cagatay, M.; Ege, P.; Tokdemir, G.; Cagiltay, N.E. A serious game for speech disorder children therapy. In Proceedings of the 2012 7th International Symposium on Health Informatics and Bioinformatics, Nevsehir, Turkey, 19–22 April 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 18–23.

- Cavaco, S.; Guimarães, I.; Ascensão, M.; Abad, A.; Anjos, I.; Oliveira, F.; Martins, S.; Marques, N.; Eskenazi, M.; Magalhães, J.; et al. The BioVisualSpeech Corpus of Words with Sibilants for Speech Therapy Games Development. Information 2020, 11, 470.

- Danubianu, M.; Pentiuc, S.G.; Schipor, O.A.; Tobolcea, I. Advanced Information Technology—Support of Improved Personalized Therapy of Speech Disorders. Int. J. Comput. Commun. Control. 2010, 5, 684.

- Das, M.; Saha, A. An automated speech-language therapy tool with interactive virtual agent and peer-to-peer feedback. In Proceedings of the 2017 4th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 28–30 September 2017; IEEE: Piscataway, NJ, USA, 2017; Volume 2018, pp. 510–515.

- Firdous, S.; Wahid, M.; Ud, A.; Bakht, K.; Yousuf, M.; Batool, R.; Noreen, M. Android based Receptive Language Tracking Tool for Toddlers. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 589–595.

- Geytenbeek, J.J.; Heim, M.M.; Vermeulen, R.J.; Oostrom, K.J. Assessing comprehension of spoken language in nonspeaking children with cerebral palsy: Application of a newly developed computer-based instrument. AAC Augment. Altern. Commun. 2010, 26, 97–107.

- Gonçalves, C.; Rocha, T.; Reis, A.; Barroso, J. AppVox: An Application to Assist People with Speech Impairments in Their Speech Therapy Sessions. In Proceedings of the 2017 World Conference on Information Systems and Technologies (WorldCIST’17), Madeira, Portugal, 11–13 April 2017; pp. 581–591.

- Hair, A.; Monroe, P.; Ahmed, B.; Ballard, K.J.; Gutierrez-Osuna, R. Apraxia world: A Speech Therapy Game for Children with Speech Sound Disorders. In Proceedings of the 17th ACM Conference on Interaction Design and Children, Stanford, CA, USA, 27–30 June 2017; ACM: New York, NY, USA, 2017; pp. 119–131.

- Jamis, M.N.; Yabut, E.R.; Manuel, R.E.; Catacutan-Bangit, A.E. Speak App: A Development of Mobile Application Guide for Filipino People with Motor Speech Disorder. In Proceedings of the IEEE Region 10 Annual International Conference, Proceedings/TENCON, Kochi, India, 17–20 October 2019; pp. 717–722.

- Kocsor, A.; Paczolay, D. Speech technologies in a computer-aided speech therapy system. In Proceedings of the 10th International Conference, ICCHP 2006, Linz, Austria, 11–13 July 2006; 2006; pp. 615–622.

- Kraleva, R.S. ChilDiBu—A Mobile Application for Bulgarian Children with Special Educational Needs. Int. J. Adv. Sci. Eng. Inf. Technol. 2017; 7.

- Kröger, B.J.; Birkholz, P.; Hoffmann, R.; Meng, H. Audiovisual Tools for Phonetic and Articulatory Visualization in Computer-Aided Pronunciation Training. In Development of Multimodal Interfaces: Active Listening and Synchrony; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2010; Volume 5967 LNCS, pp. 337–345.

- Madeira, R.N.; Macedo, P.; Pita, P.; Bonança, Í.; Germano, H. Building on Mobile towards Better Stuttering Awareness to Improve Speech Therapy. In Proceedings of the International Conference on Advances in Mobile Computing & Multimedia-MoMM ’13, Vienna, Austria, 2–4 December 2013; ACM Press: New York, NY, USA, 2013; pp. 551–554.

- Martínez-Santiago, F.; Montejo-Ráez, A.; García-Cumbreras, M.Á. Pictogram Tablet: A Speech Generating Device Focused on Language Learning. Interact. Comput. 2018, 30, 116–132.

- Nasiri, N.; Shirmohammadi, S. Measuring performance of children with speech and language disorders using a serious game. In Proceedings of the 2017 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rochester, MN, USA, 7–10 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 15–20.

- Ochoa-Guaraca, M.; Carpio-Moreta, M.; Serpa-Andrade, L.; Robles-Bykbaev, V.; Lopez-Nores, M.; Duque, J.G. A robotic assistant to support the development of communication skills of children with disabilities. In Proceedings of the 2016 IEEE 11th Colombian Computing Conference, CCC 2016—Conference Proceedings, Popayán, Colombia, 27–30 September 2016.

- Origlia, A.; Altieri, F.; Buscato, G.; Morotti, A.; Zmarich, C.; Rodá, A.; Cosi, P. Evaluating a multi-avatar game for speech therapy applications. In Proceedings of the 4th EAI International Conference on Smart Objects and Technologies for Social Good-Goodtechs ’18, Bologna, Italy, 28–30 November 2018; ACM Press: New York, New York, USA, 2018; pp. 190–195.

- Parmanto, B.; Saptono, A.; Murthi, R.; Safos, C.; Lathan, C.E. Secure telemonitoring system for delivering telerehabilitation therapy to enhance children’s communication function to home. Telemed. e-Health 2008, 14, 905–911.

- Parnandi, A.; Karappa, V.; Son, Y.; Shahin, M.; McKechnie, J.; Ballard, K.; Ahmed, B.; Gutierrez-Osuna, R. Architecture of an automated therapy tool for childhood apraxia of speech. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility, Bellevue, WA, USA, 21–23 October 2013; ACM: New York, NY, USA, 2013; pp. 1–8.

- Pentiuc, S.; Tobolcea, I.; Schipor, O.A.; Danubianu, M.; Schipor, M. Translation of the Speech Therapy Programs in the Logomon Assisted Therapy System. Adv. Electr. Comput. Eng. 2010, 10, 48–52.

- Redrovan-Reyes, E.; Chalco-Bermeo, J.; Robles-Bykbaev, V.; Carrera-Hidalgo, P.; Contreras-Alvarado, C.; Leon-Pesantez, A.; Nivelo-Naula, D.; Olivo-Deleg, J. An educational platform based on expert systems, speech recognition, and ludic activities to support the lexical and semantic development in children from 2 to 3 years. In Proceedings of the 2019 IEEE Colombian Conference on Communications and Computing (COLCOM), Barranquilla, Colombia, 5–7 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- Robles-Bykbaev, V.; Quisi-Peralta, D.; Lopez-Nores, M.; Gil-Solla, A.; Garcia-Duque, J. SPELTA-Miner: An expert system based on data mining and multilabel classification to design therapy plans for communication disorders. In Proceedings of the 2016 International Conference on Control, Decision and Information Technologies (CoDIT), Saint Julian’s, Malta, 6–8 April 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 280–285.

- Rocha, T.; Gonçalves, C.; Fernandes, H.; Reis, A.; Barroso, J. The AppVox mobile application, a tool for speech and language training sessions. Expert Syst. 2019, 36, e12373.

- Schipor, D.M.; Pentiuc, S.; Schipor, O. End-User Recommendations on LOGOMON - a Computer Based Speech Therapy System for Romanian Language. Adv. Electr. Comput. Eng. 2010, 10, 57–60.

- Sebkhi, N.; Desai, D.; Islam, M.; Lu, J.; Wilson, K.; Ghovanloo, M. Multimodal Speech Capture System for Speech Rehabilitation and Learning. IEEE Trans. Bio-Med. Eng. 2017, 64, 2639–2649.

- Shahin, M.; Ahmed, B.; Parnandi, A.; Karappa, V.; McKechnie, J.; Ballard, K.J.; Gutierrez-Osuna, R. Tabby Talks: An automated tool for the assessment of childhood apraxia of speech. Speech Commun. 2015, 70, 49–64.

- da Silva, D.P.; Amate, F.C.; Basile, F.R.M.; Bianchi Filho, C.; Rodrigues, S.C.M.; Bissaco, M.A.S. AACVOX: Mobile application for augmentative alternative communication to help people with speech disorder and motor impairment. Res. Biomed. Eng. 2018, 34, 166–175.

- Vaquero, C.; Saz, O.; Lleida, E.; Rodriguez, W.R. E-inclusion technologies for the speech handicapped. In Proceedings of the 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 4509–4512.

- Grossinho, A.; Cavaco, S.; Magalhães, J. An interactive toolset for speech therapy. In Proceedings of the 11th Conference on Advances in Computer Entertainment Technology, Funchal, Portugal, 11–14 November 2014; ACM: New York, NY, USA, 2014; pp. 1–4.

- Lopes, M.; Magalhães, J.; Cavaco, S. A voice-controlled serious game for the sustained vowel exercise. In Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology, Osaka, Japan, 9–12 November 2016; ACM: New York, NY, USA, 2016; pp. 1–6.

- Velasquez-Angamarca, V.; Mosquera-Cordero, K.; Robles-Bykbaev, V.; Leon-Pesantez, A.; Krupke, D.; Knox, J.; Torres-Segarra, V.; Chicaiza-Juela, P. An Educational Robotic Assistant for Supporting Therapy Sessions of Children with Communication Disorders. In Proceedings of the 2019 7th International Engineering, Sciences and Technology Conference (IESTEC), Panama City, Panama, 9–11 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 586–591.

- Zaharia, M.H.; Leon, F. Speech Therapy Based on Expert System. Adv. Electr. Comput. Eng. 2009, 9, 74–77.

| Source | Before | After Abstract Screening | After Applying the Selection Criteria | After Quality Assessment |

|---|---|---|---|---|

| IEEE | 930 | 130 | 10 | 9 |

| Scopus | 2704 | 160 | 18 | 16 |

| Web of Science | 619 | 90 | 6 | 6 |

| ACM | 228 | 19 | 5 | 4 |

| Total | 4481 | 399 | 39 | 35 |

| No. | Criteria |

|---|---|

| 1 | The full text is unavailable. |

| 2 | The duplicate publication is already found in a different repository. |

| 3 | Article language is not in English. |

| 4 | The article is not relevant or related to child speech therapy. |

| 5 | The article is not a primary study. |

| 6 | The article is not peer-reviewed. |

| 7 | The article only focuses on speech recognition techniques and not on therapy. |

| Nr. | Criteria | Yes (2) | Partial (1) | No (0) |

|---|---|---|---|---|

| 1 | Are the aims of the study clearly stated? | |||

| 2 | Are the scope and context of the study clearly defined? | |||

| 3 | Is the proposed solution clearly explained and validated by an empirical study? | |||

| 4 | Are the variables used in the study likely to be valid and reliable? | |||

| 5 | Is the research process documented adequately? | |||

| 6 | Are all study questions answered? | |||

| 7 | Are the negative findings presented? | |||

| Nr. | Reference | Title |

|---|---|---|

| A | [20] | Tingog: Reading and Speech Application for Children with Repaired Cleft Palate |

| B | [21] | A serious game for speech disorder children therapy |

| C | [22] | The BioVisualSpeech Corpus of Words with Sibilants for Speech Therapy Games Development |

| D | [23] | Advanced Information Technology—Support of Improved Personalized Therapy of Speech Disorders |

| E | [2] | TERAPERS -Intelligent Solution for Personalized Therapy of Speech Disorders |

| F | [24] | An automated speech-language therapy tool with interactive virtual agent and peer-to-peer feedback |

| G | [25] | Android based Receptive Language Tracking Tool for Toddlers. |

| H | [1] | Towards a speech therapy support system based on phonological processes early detection. |

| I | [26] | Assessing comprehension of spoken language in nonspeaking children with cerebral palsy: Application of a newly developed computer-based instrument |

| J | [27] | AppVox: An Application to Assist People with Speech Impairments in Their Speech Therapy Sessions |

| K | [28] | Apraxia world: A Speech Therapy Game for Children with Speech Sound Disorders |

| L | [29] | Speak App: A Development of Mobile Application Guide for Filipino People with Motor Speech Disorder |

| M | [30] | Speech technologies in a computer-aided speech therapy system |

| N | [31] | ChilDiBu—A Mobile Application for Bulgarian Children with Special Educational Needs |

| O | [32] | Audiovisual Tools for Phonetic and Articulatory Visualization in Computer-Aided Pronunciation Training |

| P | [33] | Building on Mobile towards Better Stuttering Awareness to Improve Speech Therapy |

| Q | [34] | Pictogram Tablet: A Speech Generating Device Focused on Language Learning |

| R | [35] | Measuring performance of children with speech and language disorders using a serious game |

| S | [36] | A robotic assistant to support the development of communication skills of children with disabilities |

| T | [37] | Evaluating a multi-avatar game for speech therapy applications |

| U | [38] | Secure telemonitoring system for delivering telerehabilitation therapy to enhance children’s communication function to home |

| V | [39] | Architecture of an automated therapy tool for childhood apraxia of speech |

| W | [40] | Translation of the Speech Therapy Programs in the Logomon Assisted Therapy System. |

| X | [41] | An educational platform based on expert systems, speech recognition, and ludic activities to support the lexical and semantic development in children from 2 to 3 years. |

| Y | [3] | SPELTA: An expert system to generate therapy plans for speech and language disorders. |

| Z | [42] | SPELTA-Miner: An expert system based on data mining and multilabel classification to design therapy plans for communication disorders. |

| AA | [43] | The AppVox mobile application, a tool for speech and language training sessions |

| BB | [10] | A prelingual tool for the education of altered voices |

| CC | [9] | A Game Application to assist Speech Language Pathologists in the Assessment of Children with Speech Disorders |

| DD | [44] | End-User Recommendations on LOGOMON - a Computer Based Speech Therapy System for Romanian Language |

| EE | [45] | Multimodal Speech Capture System for Speech Rehabilitation and Learning |

| FF | [46] | Tabby Talks: An automated tool for the assessment of childhood apraxia of speech |

| GG | [47] | AACVOX: mobile application for augmentative alternative communication to help people with speech disorder and motor impairment |

| HH | [5] | An Online Expert System for Diagnostic Assessment Procedures on Young Children’s Oral Speech and Language |

| II | [48] | E-inclusion technologies for the speech handicapped |

| Feature | Study | Total Number |
|---|---|---|
| Audio feedback | C, E, J, W, AA, BB | 6 |
| Emotion screening | P | 1 |
| Error detection | V, AA, CC, EE, FF, HH, II | 7 |
| Peer-to-peer feedback | F, K | 2 |
| Recommendation strategy | H, S, W, Y, Z | 5 |
| Reporting | D, S, V, W, X, Y, Z, AA, BB, CC, DD, FF, GG, HH | 14 |
| Speech recognition | A, H, M, O, S, V, X, BB, CC, EE, II | 11 |
| Text-to-speech | A, S, GG, II | 4 |
| Textual feedback | F, J, CC, FF, II | 5 |
| User data management | S, X, Y, Z, DD, II | 6 |
| User record voice | E, Q, U, V, W, CC, EE, FF, GG, II | 10 |
| Virtual 3D model | E, O, W, DD, EE | 5 |
| Visual feedback | C, EE, II | 3 |
| Voice commands | R, S | 2 |
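
The totals in the feature table can be re-derived from the study lists. The following is a minimal sketch of that tally, not part of the reviewed studies or of the review's own tooling: the study IDs are transcribed from the table above, and the names `FEATURE_STUDIES`, `parse_ids`, `feature_totals`, and `features_per_study` are hypothetical helpers introduced only for illustration.

```python
from collections import Counter

# Study IDs per feature, transcribed from the feature table above.
FEATURE_STUDIES = {
    "Audio feedback": "C, E, J, W, AA, BB",
    "Emotion screening": "P",
    "Error detection": "V, AA, CC, EE, FF, HH, II",
    "Peer-to-peer feedback": "F, K",
    "Recommendation strategy": "H, S, W, Y, Z",
    "Reporting": "D, S, V, W, X, Y, Z, AA, BB, CC, DD, FF, GG, HH",
    "Speech recognition": "A, H, M, O, S, V, X, BB, CC, EE, II",
    "Text-to-speech": "A, S, GG, II",
    "Textual feedback": "F, J, CC, FF, II",
    "User data management": "S, X, Y, Z, DD, II",
    "User record voice": "E, Q, U, V, W, CC, EE, FF, GG, II",
    "Virtual 3D model": "E, O, W, DD, EE",
    "Visual feedback": "C, EE, II",
    "Voice commands": "R, S",
}


def parse_ids(cell):
    """Split a comma-separated table cell into a list of study IDs."""
    return [part.strip() for part in cell.split(",") if part.strip()]


def feature_totals(table):
    """Number of studies that implement each feature."""
    return {feature: len(parse_ids(cell)) for feature, cell in table.items()}


def features_per_study(table):
    """Number of listed features that each study implements."""
    counts = Counter()
    for cell in table.values():
        counts.update(parse_ids(cell))
    return counts


if __name__ == "__main__":
    totals = feature_totals(FEATURE_STUDIES)
    # Print features from most to least frequently implemented.
    for feature, total in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{total:2d}  {feature}")
    print(features_per_study(FEATURE_STUDIES).most_common(3))
```

Inverting the mapping with `features_per_study` gives a per-system view of the same data, which can help when comparing how feature-rich individual OST systems are.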

| Classification | Study |
|---|---|
| Communication disorder | S, X, Z |
| Speech disorder | A, C, D, E, H, K, L, N, P, Q, V, W, BB, CC, DD, EE, FF, GG, II |
| Language disorder | B, F, I, J, R, T, U, Y, AA, HH |
| Hearing disorder | G, M, O |

| Adopted Architecture Approach | Study |
|---|---|
| Client–server system | D, F, H, L, P, U, V, DD, HH, II |
| Repository pattern | T, CC |
| Layered approach | S, X, Y, Z |
| Standalone system | A |
| Pipe-and-filter architecture | E, W, FF |

| Study | ML Type | ML Task | Algorithms | Application | Adopted Dataset |
|---|---|---|---|---|---|
| A | Unsupervised | Clustering | Not mentioned | Speech recognition | Not mentioned |
| C | Supervised | Classification | Convolutional Neural Networks (CNN); Hidden Markov Model | Speech recognition | A database of read-aloud recordings from 284 children; a corpus of read-aloud recordings from 510 children. |
| E | Unsupervised | Clustering | Not mentioned | Generate a therapy plan | Not mentioned |
| F | Unsupervised | Clustering | Hidden Markov Model | Time prediction | Not mentioned |
| H | Supervised | Classification | Decision Tree; Neural Network; Support Vector Machine; k-Nearest Neighbor; Random Forest | Speech classification | A Phonological Knowledge Base containing speech samples collected from 1114 evaluations performed with 84 Portuguese words. |
| M | Supervised | Classification | Artificial Neural Networks (ANN) | Speech recognition | The authors refer to a large speech database, but no further details are given. |
| W | Unsupervised | Clustering | Not mentioned | Generate a therapy plan | Not mentioned |
| Y | Supervised | Classification | Decision Tree; Artificial Neural Networks (ANN) | Generate a therapy plan | Not mentioned |
| Z | Supervised | Classification | Artificial Neural Networks (ANN) | Generate a therapy plan | Database of thousands of therapy strategies. |
| CC | Supervised | Classification | Convolutional Neural Networks (CNN) | Speech-to-text | TORGO dataset, which contains audio data of people with and without dysarthria. |
| DD | Unsupervised | Clustering | Not mentioned | Emotion recognition | Not applicable |
| FF | Supervised | Classification | Artificial Neural Network (ANN); Logistic Regression; Support Vector Machine | Speech recognition | A dataset with correctly pronounced utterances from 670 speakers. |
| HH | Supervised | Classification | Neural Networks | Detect disorder | Not mentioned |

| Evaluation Approach | Study |
|---|---|
| Case Study | C, G, K, L, M, O, P, R, S, U, V, X, Y, Z, AA, GG |
| Experimental | E, I, Q, T, BB, DD |
| Not evaluated | F, N, W, HH |
| Observational | B, J |
| Simulation-based | A, D, H, CC, EE, FF, II |

| Metrics | Study |
|---|---|
| ML Evaluation Metrics | |
| Accuracy | H, M, Z, CC, FF |
| Recall | H, FF |
| F1-score/F1-measure | H, FF |
| Precision | FF |
| Pearson’s r | I |
| RMSE | H, EE |
| Kappa | I |
| Error | H, FF, II |
| General Evaluation Metrics | |
| Usability | A, L, GG |
| Satisfaction | AA, GG |
| Efficiency | L, AA, DD, GG |
| Effectiveness | J, AA |
| Reliability | L, T |
| Sensitivity | O |
| Coherence | X |
| Completeness | X |
| Relevance | X |
| Ease of learning and memorization | GG |
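
For readers less familiar with the ML evaluation metrics listed above, the following minimal sketch shows how several of them are conventionally computed for a binary classifier. It uses the standard textbook definitions and is not taken from any of the reviewed studies. The function names `binary_metrics` and `rmse` and the example counts are assumptions made purely for illustration, and the kappa shown is Cohen's kappa, which may differ from the variant a given study reports.

```python
from math import sqrt


def binary_metrics(tp, fp, fn, tn):
    """Standard metrics from binary confusion-matrix counts.

    tp/fp/fn/tn are true positives, false positives, false negatives,
    and true negatives. Ratios with a zero denominator are reported as 0.0.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_observed = accuracy
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (p_observed - p_chance) / (1 - p_chance) if p_chance != 1 else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "kappa": kappa,
    }


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length numeric sequences."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Hypothetical counts, e.g. utterances judged correct vs. mispronounced.
    for name, value in binary_metrics(tp=40, fp=10, fn=10, tn=40).items():
        print(f"{name}: {value:.3f}")
    print(f"rmse: {rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]):.3f}")
```

Accuracy alone can be misleading when correct and incorrect productions are unevenly represented, which is one reason precision, recall, F1, or kappa are often reported alongside it.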

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Attwell, G.A.; Bennin, K.E.; Tekinerdogan, B. A Systematic Review of Online Speech Therapy Systems for Intervention in Childhood Speech Communication Disorders. Sensors 2022, 22, 9713. https://doi.org/10.3390/s22249713