Rope Jumping Strength Monitoring on Smart Devices via Passive Acoustic Sensing

Abstract

1. Introduction

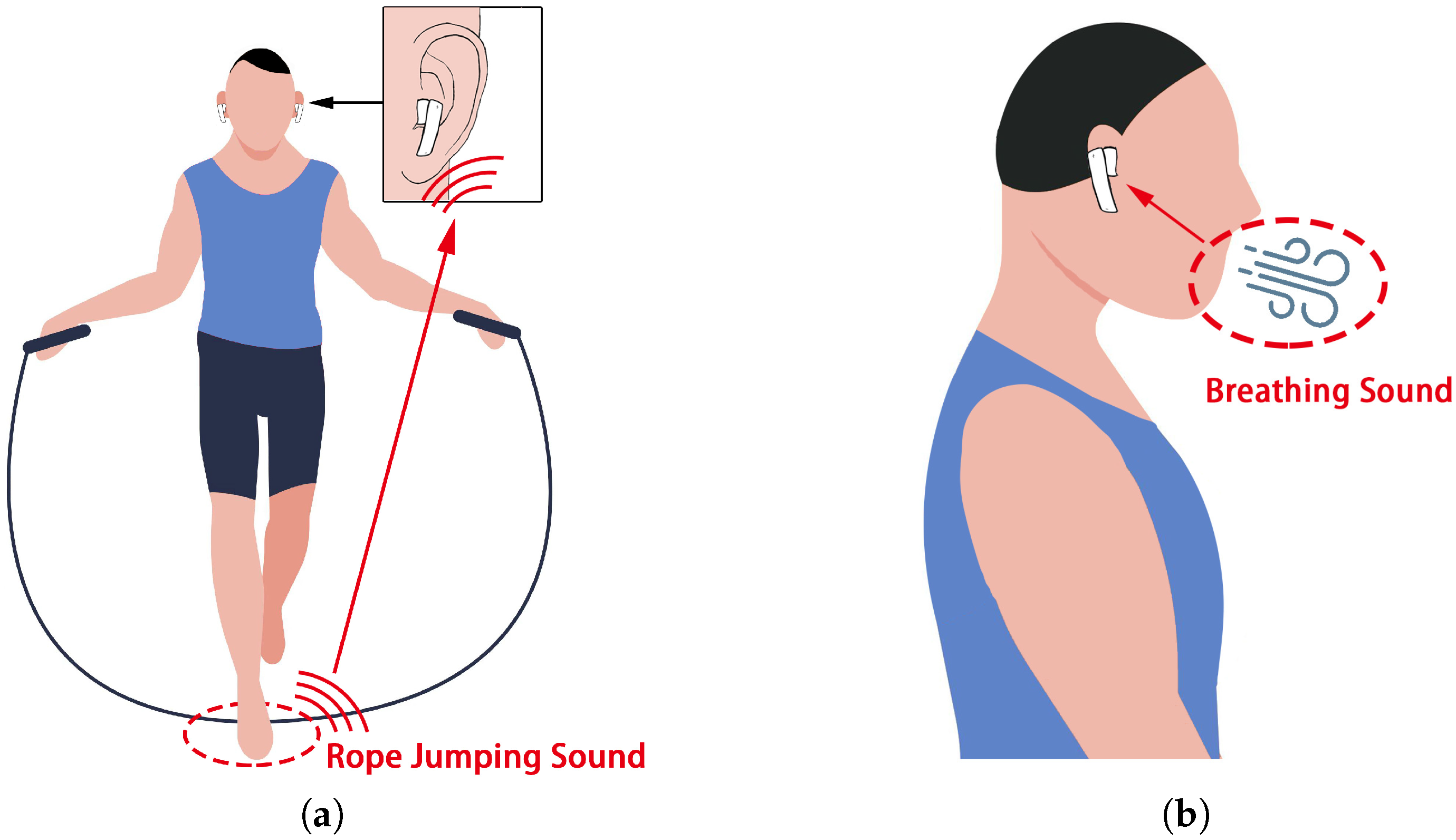

- We propose a rope-jumping monitoring system; this is the first work to successfully introduce an attention mechanism-based GAN to acoustic sensing. This optimization strategy makes better use of the collected data: audio that was not recorded clearly can still be used, which improves the prediction accuracy of the system. The system also avoids the over-reliance on rope-jumping equipment and environments seen in previous works, and it adds exercise intensity evaluation to the basic counting function, which significantly broadens the scope of real-world applications.

- We designed a robust method for detecting the rope-jumping sound that exploits two of its properties: high short-time energy and high correlation between successive rope-jumping cycles.

- We designed a neural network that combines an attention mechanism-based GAN with domain adaptation to improve both the prediction accuracy of rope-jumping exercise intensity and the transferability of the system.

- We conducted extensive experiments with eight volunteers in different environments to evaluate our system. The results show that the system achieves an average counting error of 0.32 jumps per 30 s and an average error rate of 2.3% for exercise intensity evaluation.
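The detection idea in the second contribution (high short-time energy combined with high correlation between jump cycles) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the 20 ms frames with a 10 ms hop, the energy-ratio and correlation thresholds, and the 0.2 s to 1.0 s cycle search range are all assumptions chosen for illustration.

```python
import numpy as np


def short_time_energy(signal, frame_len, hop):
    """Return the energy (sum of squared samples) of each frame."""
    n_frames = 1 + (len(signal) - frame_len) // hop
    return np.array([
        np.sum(signal[i * hop:i * hop + frame_len] ** 2)
        for i in range(max(n_frames, 0))
    ])


def cycle_correlation(energy, min_lag, max_lag):
    """Return (peak normalized autocorrelation, lag) of the energy envelope."""
    e = energy - energy.mean()
    denom = float(np.dot(e, e))
    max_lag = min(max_lag, len(e) - 1)
    best_corr, best_lag = 0.0, 0
    if denom == 0.0:
        return best_corr, best_lag
    for lag in range(min_lag, max_lag + 1):
        corr = float(np.dot(e[:-lag], e[lag:])) / denom
        if corr > best_corr:
            best_corr, best_lag = corr, lag
    return best_corr, best_lag


def looks_like_rope_jumping(signal, sr, energy_ratio=5.0, corr_thresh=0.5):
    """Heuristic check: loud bursts that repeat with a stable period.

    All thresholds are illustrative assumptions, not values from the paper.
    """
    frame_len, hop = sr // 50, sr // 100          # 20 ms frames, 10 ms hop
    energy = short_time_energy(np.asarray(signal, dtype=float), frame_len, hop)
    if len(energy) == 0:
        return False
    # Property 1: some frames carry much more energy than the typical frame.
    has_bursts = energy.max() > energy_ratio * (np.median(energy) + 1e-12)
    # Property 2: the energy envelope correlates with itself one cycle later.
    frames_per_s = sr // hop
    corr, _ = cycle_correlation(energy, frames_per_s // 5, frames_per_s)
    return bool(has_bursts and corr > corr_thresh)
```

In this sketch the energy test alone would also fire on a single clap or a dropped object; requiring a periodic energy envelope is what separates repeated jump cycles from one-off loud events.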

2. Related Work

2.1. Research in Fitness Movement Monitoring

2.2. Research in Exercise Intensity Monitoring

2.3. Research in Adversarial Learning

2.4. Research in Rope Jump Monitoring

3. System Overview

3.1. Data Pre-Processing

3.2. Rope-Jumping Sound Detection

3.3. Rope Jumping Count

3.4. Breathing Profile Construction

3.5. Spectrogram Optimization

3.5.1. U-Net Generator

3.5.2. Global-Local Discriminators

3.6. Effect Evaluation

4. Implementation and Evaluation

4.1. Experiment Setup

4.2. Performance of Rope Jumping Count

4.2.1. Impact of Rope Jumping Duration and Environmental Noise

4.2.2. Impacts of Rope Jumping Conditions

4.2.3. Impact of Rope Jumping Methods

4.2.4. Impact of the Diversity of Users

4.2.5. Average Counting Error Compared with Smart Rope, YaoYao, and TianTian

4.3. Performance of Spectrogram Optimization and Effect Evaluation

4.3.1. Impact of Environmental Noise

4.3.2. Impact of the Diversity of Users

4.4. Ablation Study

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | generative adversarial network |
| LSGAN | least-square generative adversarial network |
| ResNet | residual neural network |
| FC | fully connected |

| ID | Gender | Age | Proficiency |
|---|---|---|---|
| 1 | Female | 20–25 | Master |
| 2 | Female | 20–25 | Normal |
| 3 | Male | 20–25 | Master |
| 4 | Male | 25–30 | Novice |
| 5 | Female | 25–30 | Normal |
| 6 | Male | 25–30 | Novice |
| 7 | Female | 30–35 | Novice |
| 8 | Female | 30–35 | Novice |

| Metric | Smart Rope | YaoYao | TianTian | Our System |
|---|---|---|---|---|
| Average counting error in 30 s (jumps) | 1.8 | 2.6 | 6.3 | 0.32 |

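| Components Enabled | Average Prediction Error |
|---|---|
| Baseline network only | 5.8% |
| Three of the four components (baseline network, attention mechanism, local discriminator, domain adaptation) | 3.5% |
| Three of the four components (baseline network, attention mechanism, local discriminator, domain adaptation) | 3.9% |
| Three of the four components (baseline network, attention mechanism, local discriminator, domain adaptation) | 4.8% |
| Baseline network, attention mechanism, local discriminator, and domain adaptation | 2.3% |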

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hou, X.; Liu, C. Rope Jumping Strength Monitoring on Smart Devices via Passive Acoustic Sensing. Sensors 2022, 22, 9739. https://doi.org/10.3390/s22249739