Agreement between Azure Kinect and Marker-Based Motion Analysis during Functional Movements: A Feasibility Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Experimental Procedures

2.3. Azure Kinect Data Collection

2.4. Calculations of Joint Angles from Azure Kinect Data
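The method text for this subsection is not reproduced in this version. The sketch below shows one common way to derive a joint angle from skeleton-tracking output. This is an assumption, not necessarily the authors' procedure. The angle at a joint is taken as the included angle between the two segment vectors that meet there, for example hip-to-knee and knee-to-ankle for the knee. The function names `joint_angle_deg` and `flexion_deg` are hypothetical. Positions are plain 3D coordinates; the Azure Kinect Body Tracking SDK reports joint positions in millimetres, but the angle does not depend on the unit.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def joint_angle_deg(proximal: Vec3, joint: Vec3, distal: Vec3) -> float:
    """Included angle (degrees) at `joint` between the segments to `proximal` and `distal`.

    Hypothetical helper: assumes each argument is an (x, y, z) joint position
    in a common coordinate frame (e.g. the camera frame of one skeleton sample).
    """
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("coincident joint positions; angle is undefined")
    # Clamp so floating-point rounding cannot push acos outside its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def flexion_deg(proximal: Vec3, joint: Vec3, distal: Vec3) -> float:
    """Flexion as deviation from a straight limb: 0 deg when the three joints are collinear."""
    return 180.0 - joint_angle_deg(proximal, joint, distal)


# Usage with made-up positions (millimetres): hip, knee, ankle.
straight = flexion_deg((0, 0, 0), (0, -400, 0), (0, -800, 0))
bent = flexion_deg((0, 0, 0), (0, -400, 0), (0, -400, 400))
print(f"straight leg: {straight:.1f} deg, bent leg: {bent:.1f} deg")
```

The included angle is unchanged by rotating, translating or uniformly scaling the three positions. It therefore does not depend on where the camera sits, provided the tracked joints themselves are accurate. Reporting it as flexion (180° minus the included angle) is a presentational choice assumed here.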

2.5. Three-Dimensional Motion Data Collection

2.6. Data Analysis

2.7. Statistical Analyses
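The statistical-analysis text is not reproduced here. The results table reports R, R² and ICC with 95% CI but does not state which ICC model was used. The sketch below assumes paired joint-angle samples from the two systems. It computes Pearson's r together with two common single-measure ICC forms from a two-way ANOVA (Shrout and Fleiss): ICC(2,1) for absolute agreement and ICC(3,1) for consistency. The function names are hypothetical, the input values are made up, and confidence intervals are omitted.

```python
import math
from typing import Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def icc_two_systems(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (ICC(2,1) absolute agreement, ICC(3,1) consistency).

    Rows are paired samples; the two columns are the two systems (k = 2).
    """
    n, k = len(x), 2
    rows = list(zip(x, y))
    grand = (sum(x) + sum(y)) / (n * k)
    row_means = [(a + b) / k for a, b in rows]
    col_means = [sum(x) / n, sum(y) / n]
    # Two-way ANOVA sums of squares: between rows, between columns, residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in rows for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_r = ss_rows / (n - 1)
    ms_c = ss_cols / (k - 1)
    ms_e = ss_error / ((n - 1) * (k - 1))
    icc2 = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e + k * (ms_c - ms_e) / n)
    icc3 = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e)
    return icc2, icc3


# Usage: hypothetical angles (degrees) with a constant 2-degree offset between systems.
kinect = [10.0, 20.0, 30.0, 40.0, 50.0]
mocap = [12.0, 22.0, 32.0, 42.0, 52.0]
r = pearson_r(kinect, mocap)
icc2, icc3 = icc_two_systems(kinect, mocap)
print(f"r = {r:.4f}, R^2 = {r * r:.4f}, ICC(2,1) = {icc2:.4f}, ICC(3,1) = {icc3:.4f}")
```

In this made-up example, the constant offset leaves r and ICC(3,1) at 1 but lowers ICC(2,1) to 500/504 ≈ 0.992. This shows why an absolute-agreement ICC can sit below the correlation when one system is systematically biased.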

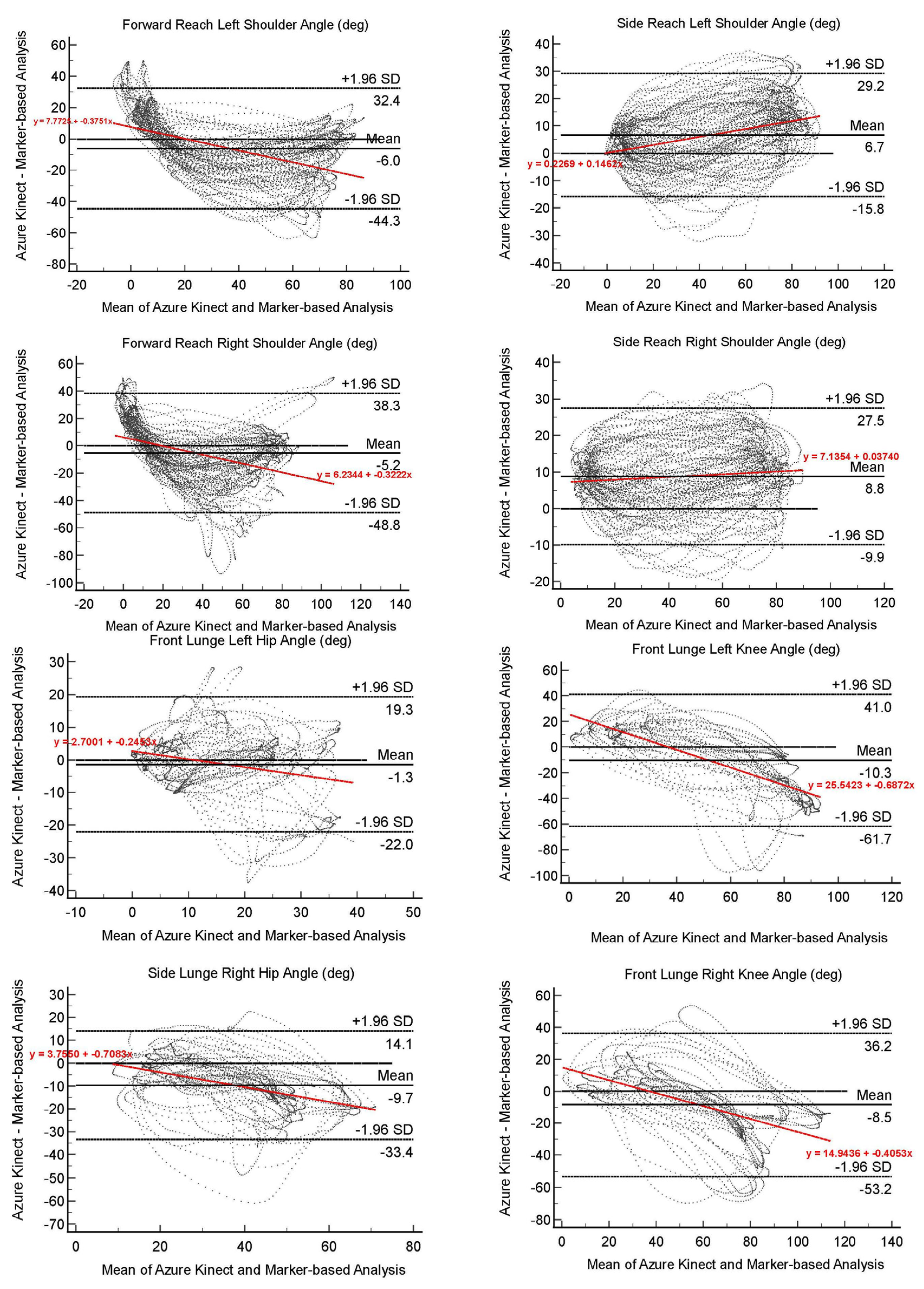

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Tasks | Analyzed Joint | R | R² | ICC | 95% CI |
|---|---|---|---|---|---|
| Front view squat | Right hip | 0.6972 | 0.4861 | 0.6302 | 0.6149 to 0.6450 |
| | Left hip | 0.6271 | 0.3933 | 0.5274 | 0.5096 to 0.5448 |
| | Right knee | 0.9083 | 0.8250 | 0.8989 | 0.8938 to 0.9037 |
| | Left knee | 0.9186 | 0.8438 | 0.9076 | 0.9024 to 0.9126 |
| Side view squat | Right hip (visible leg) | 0.8251 | 0.6808 | 0.8122 | 0.8019 to 0.8220 |
| | Left hip (hidden leg) | 0.6953 | 0.4834 | 0.6378 | 0.6227 to 0.6525 |
| | Right knee (visible leg) | 0.9470 | 0.8968 | 0.9185 | 0.9132 to 0.9235 |
| | Left knee (hidden leg) | 0.7076 | 0.5007 | 0.6625 | 0.6490 to 0.6757 |
| Forward reach | Right shoulder | 0.7068 | 0.4996 | 0.6804 | 0.6674 to 0.6931 |
| | Left shoulder | 0.7816 | 0.6109 | 0.7389 | 0.7282 to 0.7492 |
| Lateral reach | Right shoulder | 0.9241 | 0.8540 | 0.9235 | 0.9201 to 0.9266 |
| | Left shoulder | 0.9159 | 0.8389 | 0.9069 | 0.9029 to 0.9108 |
| Front view lunge | Right hip (front leg) | 0.7198 | 0.5181 | 0.6929 | 0.6757 to 0.7092 |
| | Left hip | 0.5752 | 0.3309 | 0.5645 | 0.5416 to 0.5865 |
| | Right knee (front leg) | 0.7256 | 0.5265 | 0.6821 | 0.6634 to 0.6999 |
| | Left knee | 0.6943 | 0.4821 | 0.5826 | 0.5592 to 0.6052 |
| Side view lunge | Right hip (front leg) | 0.7863 | 0.6183 | 0.7843 | 0.7727 to 0.7953 |
| | Left hip | 0.2869 | 0.0823 | 0.2868 | 0.2620 to 0.3112 |
| | Right knee (front leg) | 0.8009 | 0.6414 | 0.7318 | 0.7178 to 0.7452 |
| | Left knee | 0.7722 | 0.5963 | 0.6987 | 0.6807 to 0.7159 |
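The sketch below is a hedged illustration of how the table can be read. It transcribes the table's values and checks two things. First, it confirms that each R² entry equals the square of R to four decimals. Second, it assigns each ICC to a reliability band as suggested by Koo and Li (2016): below 0.50 poor, 0.50 to 0.75 moderate, 0.75 to 0.90 good, above 0.90 excellent. Applying these bands is an assumption about interpretation, not a statement of the authors' conclusions. Because Koo and Li recommend judging reliability from the 95% CI, the sketch also flags rows whose interval crosses a band boundary. How values exactly at 0.50, 0.75 and 0.90 are assigned is likewise an assumption.

```python
from collections import Counter

# (task, joint, R, R², ICC, 95% CI lower, 95% CI upper), transcribed from the table.
ROWS = [
    ("Front view squat", "Right hip", 0.6972, 0.4861, 0.6302, 0.6149, 0.6450),
    ("Front view squat", "Left hip", 0.6271, 0.3933, 0.5274, 0.5096, 0.5448),
    ("Front view squat", "Right knee", 0.9083, 0.8250, 0.8989, 0.8938, 0.9037),
    ("Front view squat", "Left knee", 0.9186, 0.8438, 0.9076, 0.9024, 0.9126),
    ("Side view squat", "Right hip", 0.8251, 0.6808, 0.8122, 0.8019, 0.8220),
    ("Side view squat", "Left hip", 0.6953, 0.4834, 0.6378, 0.6227, 0.6525),
    ("Side view squat", "Right knee", 0.9470, 0.8968, 0.9185, 0.9132, 0.9235),
    ("Side view squat", "Left knee", 0.7076, 0.5007, 0.6625, 0.6490, 0.6757),
    ("Forward reach", "Right shoulder", 0.7068, 0.4996, 0.6804, 0.6674, 0.6931),
    ("Forward reach", "Left shoulder", 0.7816, 0.6109, 0.7389, 0.7282, 0.7492),
    ("Lateral reach", "Right shoulder", 0.9241, 0.8540, 0.9235, 0.9201, 0.9266),
    ("Lateral reach", "Left shoulder", 0.9159, 0.8389, 0.9069, 0.9029, 0.9108),
    ("Front view lunge", "Right hip", 0.7198, 0.5181, 0.6929, 0.6757, 0.7092),
    ("Front view lunge", "Left hip", 0.5752, 0.3309, 0.5645, 0.5416, 0.5865),
    ("Front view lunge", "Right knee", 0.7256, 0.5265, 0.6821, 0.6634, 0.6999),
    ("Front view lunge", "Left knee", 0.6943, 0.4821, 0.5826, 0.5592, 0.6052),
    ("Side view lunge", "Right hip", 0.7863, 0.6183, 0.7843, 0.7727, 0.7953),
    ("Side view lunge", "Left hip", 0.2869, 0.0823, 0.2868, 0.2620, 0.3112),
    ("Side view lunge", "Right knee", 0.8009, 0.6414, 0.7318, 0.7178, 0.7452),
    ("Side view lunge", "Left knee", 0.7722, 0.5963, 0.6987, 0.6807, 0.7159),
]


def koo_li_band(icc: float) -> str:
    """Reliability band after Koo and Li (2016); exact-boundary handling is an assumption."""
    if icc < 0.50:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


# Rows whose reported R² differs from R*R by more than four-decimal rounding allows.
r2_mismatches = [(row[0], row[1]) for row in ROWS if abs(row[2] ** 2 - row[3]) > 5e-5]

# How many ICC point estimates fall in each band.
band_counts = Counter(koo_li_band(row[4]) for row in ROWS)

# Rows whose 95% CI spans two bands, so the point estimate alone may mislead.
straddling = [(row[0], row[1]) for row in ROWS if koo_li_band(row[5]) != koo_li_band(row[6])]

print("R^2 mismatches:", r2_mismatches)
print("ICC bands:", dict(band_counts))
print("CI crosses a band boundary:", straddling)
```

With the transcribed values, every R² entry matches the square of R within rounding. The ICC point estimates split into 1 poor, 12 moderate, 3 good and 4 excellent. Only the front-view squat right-knee interval (0.8938 to 0.9037) crosses a band boundary.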

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Jo, S.; Song, S.; Kim, J.; Song, C. Agreement between Azure Kinect and Marker-Based Motion Analysis during Functional Movements: A Feasibility Study. Sensors 2022, 22, 9819. https://doi.org/10.3390/s22249819

Jo S, Song S, Kim J, Song C. Agreement between Azure Kinect and Marker-Based Motion Analysis during Functional Movements: A Feasibility Study. Sensors. 2022; 22(24):9819. https://doi.org/10.3390/s22249819

Chicago/Turabian Style: Jo, Sungbae, Sunmi Song, Junesun Kim, and Changho Song. 2022. "Agreement between Azure Kinect and Marker-Based Motion Analysis during Functional Movements: A Feasibility Study" Sensors 22, no. 24: 9819. https://doi.org/10.3390/s22249819

APA Style: Jo, S., Song, S., Kim, J., & Song, C. (2022). Agreement between Azure Kinect and Marker-Based Motion Analysis during Functional Movements: A Feasibility Study. Sensors, 22(24), 9819. https://doi.org/10.3390/s22249819