Person Re-Identification with Improved Performance by Incorporating Focal Tversky Loss in AGW Baseline

Abstract

1. Introduction

- We propose a new training loss design for the AGW baseline that improves the prediction accuracy of person re-identification. To the best of our knowledge, this work is the first to incorporate a focal Tversky loss into deep metric learning for person re-identification.

- Unlike the original AGW, the proposed method applies a re-ranking technique at inference time to further improve person re-identification performance.

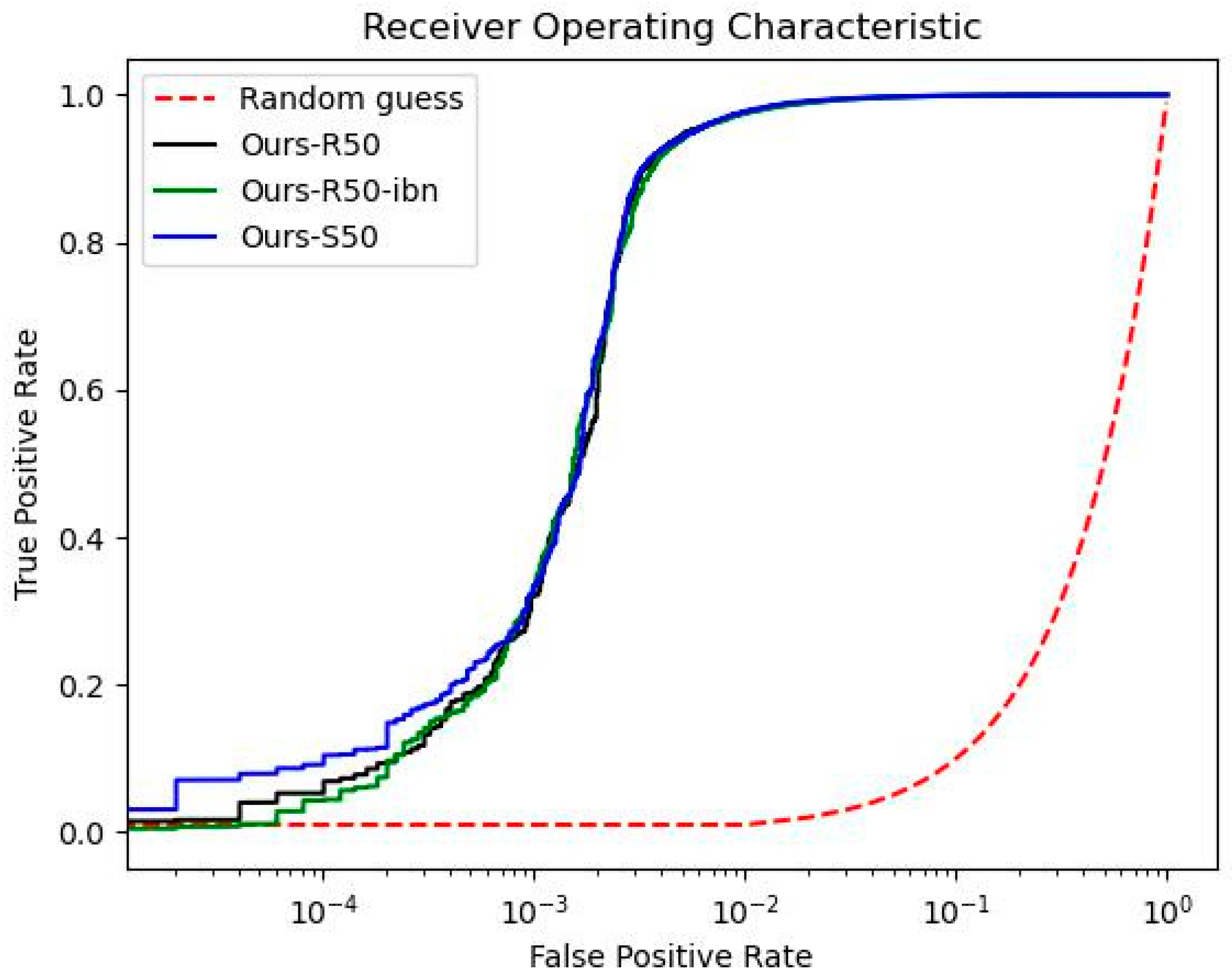

- The proposed method requires no additional training data and is easy to implement on ResNet, ResNet-ibn [33], and ResNeSt backbones. It achieves state-of-the-art performance on two well-known person re-identification datasets, Market1501 [34] and DukeMTMC [35]. We also investigate the receiver operating characteristic (ROC) performance of these three backbones to verify sensitivity and specificity across various thresholds.
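The ROC analysis named in the last contribution can be illustrated with a minimal sketch. It assumes that re-identification is evaluated as a pairwise verification task, where a pair of images is declared "same person" when its feature distance falls at or below a threshold. The function name `roc_points` and the toy distances are hypothetical and are not taken from the paper.

```python
import numpy as np

def roc_points(distances, same_identity, thresholds):
    """Return (FPR, TPR) arrays, one entry per threshold.

    A pair is predicted "same person" when its distance is <= threshold.
    This pairwise protocol is an assumption made for illustration.
    """
    distances = np.asarray(distances, dtype=float)
    same_identity = np.asarray(same_identity, dtype=bool)
    n_pos = same_identity.sum()
    n_neg = (~same_identity).sum()
    fpr, tpr = [], []
    for t in thresholds:
        predicted_same = distances <= t
        # Sensitivity: fraction of true same-identity pairs that are accepted.
        tpr.append((predicted_same & same_identity).sum() / n_pos)
        # 1 - specificity: fraction of different-identity pairs that are accepted.
        fpr.append((predicted_same & ~same_identity).sum() / n_neg)
    return np.array(fpr), np.array(tpr)

# Toy data (hypothetical): three same-identity pairs, three different-identity pairs.
distances = [0.1, 0.2, 0.6, 0.3, 0.7, 0.9]
same_identity = [1, 1, 1, 0, 0, 0]
fpr, tpr = roc_points(distances, same_identity, thresholds=[0.0, 0.25, 0.5, 1.0])
```

Sweeping the threshold from small to large traces the curve from (0, 0) to (1, 1). A backbone whose curve lies closer to the top-left corner separates identities better over the whole threshold range.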

2. Related Works

2.1. Video-Based Person Re-Identification

2.2. Image-Based Person Re-Identification

2.3. Loss Metrics on Person Re-Identification

3. Method

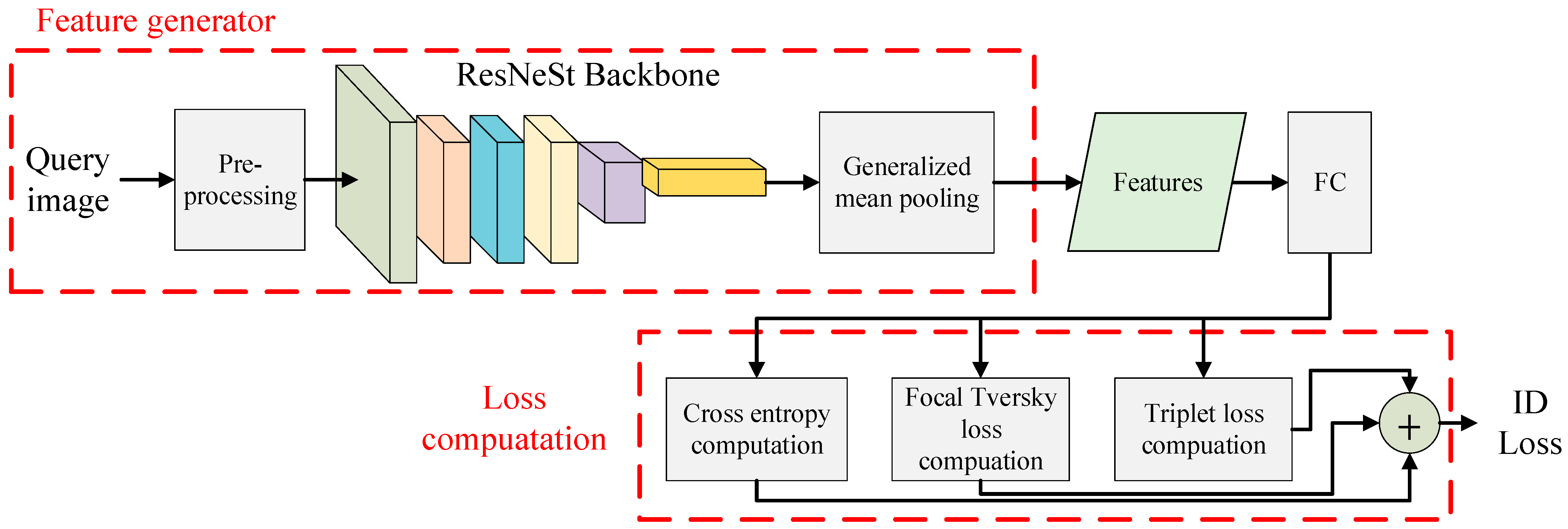

3.1. Feature Generator

3.2. Loss Computation

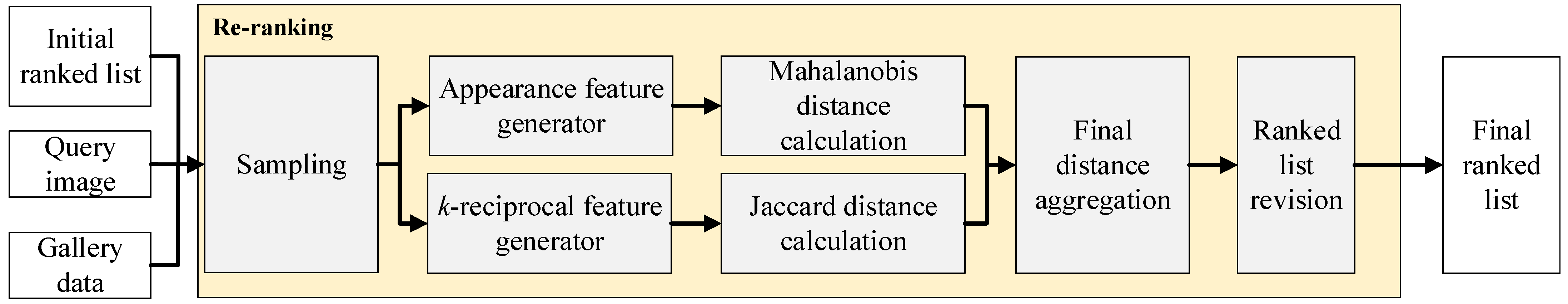

3.3. Re-Ranking Optimization

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zheng, L.; Yang, Y.; Hauptmann, A.G. Person reidentification: Past, present and future. arXiv 2016, arXiv:1610.02984.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C.H. Deep Learning for Person Re-Identification: A Survey and Outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- He, L.; Liao, X.; Liu, W.; Liu, X.; Cheng, P.; Mei, T. FastReID: A Pytorch Toolbox for General Instance Re-identification. arXiv 2020, arXiv:2006.02631.

- Ukita, N.; Moriguchi, Y.; Hagita, N. People re-identification across non-overlapping cameras using group features. Comput. Vis. Image Underst. 2016, 144, 228–236.

- Chen, Y.C.; Zhu, X.; Zheng, W.S.; Lai, J.H. Person Re-Identification by Camera Correlation Aware Feature Augmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 392–408.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the ECCV, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Xue, J.; Meng, Z.; Katipally, K.; Wang, H.; Zon, K. Clothing change aware person identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2112–2120.

- Yang, Y.; Yang, J.; Yan, J.; Liao, S.; Yi, D.; Li, S.Z. Salient color names for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 536–551.

- Farenzena, M.; Bazzani, L.; Perina, A.; Murino, V.; Cristani, M. Person re-identification by symmetry-driven accumulation of local features. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; IEEE Computer Society: Washington, DC, USA, 2010; pp. 2360–2367.

- Zhao, L.; Li, X.; Zhuang, Y.; Wang, J. Deeply-learned part-aligned representations for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3239–3248.

- Yao, H.; Zhang, S.; Hong, R.; Zhang, Y.; Xu, C.; Tian, Q. Deep Representation Learning with Part Loss for Person Re-Identification. IEEE Trans. Image Process. 2019, 28, 2860–2871.

- Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Jiang, W.; Zhang, C.; Sun, J. AlignedReID: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- Fan, D.; Wang, L.; Cheng, S.; Li, Y. Dual Branch Attention Network for Person Re-Identification. Sensors 2021, 21, 5839.

- Si, R.; Zhao, J.; Tang, Y.; Yang, S. Relation-Based Deep Attention Network with Hybrid Memory for One-Shot Person Re-Identification. Sensors 2021, 21, 5113.

- Yang, Q.; Wang, P.; Fang, Z.; Lu, Q. Focus on the Visible Regions: Semantic-Guided Alignment Model for Occluded Person Re-Identification. Sensors 2020, 20, 4431.

- Wu, A.; Zheng, W.S.; Yu, H.X.; Gong, S.; Lai, J. RGB-infrared cross-modality person re-identification. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5380–5389.

- Wu, A.; Zheng, W.S.; Lai, J.H. Robust Depth-Based Person Re-Identification. IEEE Trans. Image Process. 2017, 26, 2588–2603.

- Li, S.; Xiao, T.; Li, H.; Yang, W.; Wang, X. Identity-aware textual-visual matching with latent co-attention. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1908–1917.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 501–518.

- Sun, Y.; Zheng, L.; Deng, W.; Wang, S. SVDNet for pedestrian retrieval. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3820–3828.

- Ye, M.; Lan, X.; Yuen, P.C. Robust anchor embedding for unsupervised video person re-identification in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 176–193.

- Qian, X.; Fu, Y.; Jiang, Y.G.; Xiang, T.; Xue, X. Multi-scale deep learning architectures for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5399–5408.

- Zheng, L.; Zhang, H.; Sun, S.; Chandraker, M.; Yang, Y.; Tian, Q. Person Re-identification in the Wild. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3346–3355.

- Suh, Y.; Wang, J.; Tang, S.; Mei, T.; Lee, K.M. Part-aligned bilinear representations for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 418–437.

- Cheng, D.; Gong, Y.; Zhou, S.; Wang, J.; Zheng, N. Person reidentification by multi-channel parts-based CNN with improved triplet loss function. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1335–1344.

- Liu, C.; Loy, C.C.; Gong, S.; Wang, G. POP: Person re-identification post-rank optimization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 441–448.

- Ma, A.J.; Li, P. Query Based Adaptive Re-ranking for Person Re-identification. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 397–412.

- Zhong, Z.; Zheng, L.; Cao, D.; Li, S. Re-ranking Person Re-identification with k-Reciprocal Encoding. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3652–3661.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-Attention Networks. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 2735–2745.

- Pan, X.; Luo, P.; Shi, J.; Tang, X. Two at Once: Enhancing Learning and Generalization Capacities via IBN-Net. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 464–479.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person reidentification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled samples generated by GAN improve the person re-identification baseline in vitro. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3774–3782.

- McLaughlin, N.; del Rincon, J.M.; Miller, P. Recurrent Convolutional Network for Video-Based Person Re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Las Vegas, NV, USA, 27–30 June 2016; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2016; pp. 1325–1334.

- Chung, D.; Tahboub, K.; Delp, E.J. A Two Stream Siamese Convolutional Neural Network for Person Re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1992–2000.

- Li, J.; Zhang, S.; Wang, J.; Gao, W.; Tian, Q. Global-Local Temporal Representations for Video Person Re-Identification. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 3957–3966.

- Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. VRSTC: Occlusion-Free Video Person Re-Identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7176–7185.

- Li, J.; Piao, Y. Video Person Re-Identification with Frame Sampling–Random Erasure and Mutual Information–Temporal Weight Aggregation. Sensors 2022, 22, 3047.

- Luo, H.; Gu, Y.; Liao, X.; Lai, S.; Jiang, W. Bag of Tricks and a Strong Baseline for Deep Person Re-Identification. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 1487–1495.

- Chen, X.; Fu, C.; Zhao, Y.; Zheng, F.; Song, J.; Ji, R.; Yang, Y. Salience-Guided Cascaded Suppression Network for Person Re-Identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3300–3310.

- Ni, X.; Esa, R. FlipReID: Closing the Gap between Training and Inference in Person Re-Identification. In Proceedings of the 2021 9th European Workshop on Visual Information Processing (EUVIP), Paris, France, 23–25 June 2021; pp. 1–6.

- Deng, W.; Zheng, L.; Ye, Q.; Kang, G.; Yang, Y.; Jiao, J. Image-Image Domain Adaptation with Preserved Self-Similarity and Domain-Dissimilarity for Person Re-identification. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 152–159.

- Yuan, Y.; Chen, W.; Yang, Y.; Wang, Z. In Defense of the Triplet Loss Again: Learning Robust Person Re-Identification with Fast Approximated Triplet Loss and Label Distillation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1454–1463.

- Abraham, N.; Khan, N.M. A Novel Focal Tversky Loss Function With Improved Attention U-Net for Lesion Segmentation. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 683–687.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the International Conference on Computer Vision, Las Condes, Chile, 11–18 December 2015; pp. 1026–1034.

| Hyperparameters | Our Settings |
|---|---|
| Backbone | ResNeSt50 |
| Optimizer | Adam |
| Feature dimension | 2048 |
| Training epoch | 200 |
| Batch size | 64 |
| Base learning rate | 0.00035 |
| Random erasing augmentation | 0.5 |
| Cross entropy loss | (epsilon, scale) = (0.1, 1) |
| Triplet loss | (margin, scale) = (0, 1) |
| Focal Tversky loss | Market1501: (α, β, γ) = (0.7, 0.3, 0.75); DukeMTMC: (α, β, γ) = (0.7, 0.3, 0.95) |
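To make the role of (α, β, γ) concrete, the following is a minimal NumPy sketch of a focal Tversky loss applied to identity classification. Several points are assumptions, not confirmed by this section: the loss is computed on softmax probabilities against one-hot identity labels, α weights false negatives and β weights false positives (following the Tversky index convention of Abraham and Khan), and γ is applied directly as the exponent. The function name and the smoothing constant `eps` are hypothetical.

```python
import numpy as np

def focal_tversky_loss(probs, labels, alpha=0.7, beta=0.3, gamma=0.75, eps=1e-7):
    """Focal Tversky loss on class probabilities (illustrative sketch).

    probs:  (batch, classes) array of softmax outputs.
    labels: (batch,) array of integer identity labels.
    Assumption: alpha weights false negatives, beta weights false positives,
    and gamma is used directly as the exponent.
    """
    probs = np.asarray(probs, dtype=float)
    one_hot = np.eye(probs.shape[1])[np.asarray(labels)]
    tp = (probs * one_hot).sum()            # soft true positives
    fn = ((1.0 - probs) * one_hot).sum()    # soft false negatives
    fp = (probs * (1.0 - one_hot)).sum()    # soft false positives
    tversky = (tp + eps) / (tp + alpha * fn + beta * fp + eps)
    # Clamp at zero so rounding error never yields a negative base.
    return max(1.0 - tversky, 0.0) ** gamma

labels = [0, 1, 2]
loss_perfect = focal_tversky_loss(np.eye(3), labels)              # confident and correct
loss_uniform = focal_tversky_loss(np.full((3, 3), 1 / 3), labels)  # uninformative
```

With α > β, missed identities (false negatives) are penalized more heavily than spurious ones. Under the exponent convention assumed here, a γ below 1 enlarges the loss for examples that are already well classified, relative to γ = 1.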

| Method | Backbone | Market1501 R1 | Market1501 mAP | Market1501 mINP | DukeMTMC R1 | DukeMTMC mAP | DukeMTMC mINP |
|---|---|---|---|---|---|---|---|
| PCB (ECCV 2018) [36] | ResNet50 | 92.3 | 77.4 | - | 81.8 | 66.1 | - |
| BoT (CVPRW 2019) [41] | ResNet50 | 94.4 | 86.1 | - | 87.2 | 77.0 | - |
| SCSN (CVPR 2020) [42] | ResNet50 | 95.7 | 88.5 | - | 91.0 | 79.0 | - |
| AGW (TPAMI 2020) [2] | ResNet50 | 95.1 | 87.8 | 65.0 | 89.0 | 79.6 | 45.7 |
| FlipReID (arXiv 2021) [43] | ResNeSt50 | 95.8 | 94.7 | - | 93.0 | 90.7 | - |
| Ours (w/o re-ranking) | ResNeSt50 | 95.6 | 89.6 | 69.5 | 92.0 | 82.6 | 50.2 |
| Ours (with re-ranking) | ResNeSt50 | 96.2 | 94.5 | 88.0 | 93.0 | 91.4 | 77.0 |
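The three reported metrics can be sketched from ranked retrieval lists as follows. The sketch assumes the usual definitions: R1 is the fraction of queries whose top-ranked gallery image is a correct match, mAP is the mean of per-query average precision, and mINP (introduced with AGW) is the mean of the number of correct matches divided by the rank of the last correct match. The function names and the toy rankings are hypothetical, and every query is assumed to have at least one correct match in the gallery.

```python
import numpy as np

def query_metrics(matches):
    """Metrics for one query.

    matches: ranked gallery list, 1 = same identity as the query, 0 = different.
    Assumes at least one correct match is present.
    """
    matches = np.asarray(matches, dtype=float)
    hit_ranks = np.flatnonzero(matches) + 1           # 1-based ranks of correct matches
    rank1 = float(matches[0] == 1)                    # is the top result correct?
    precision_at_hits = np.arange(1, len(hit_ranks) + 1) / hit_ranks
    ap = precision_at_hits.mean()                     # average precision
    inp = len(hit_ranks) / hit_ranks[-1]              # inverse negative penalty
    return rank1, ap, inp

def evaluate(all_matches):
    """Average the per-query metrics into R1, mAP, and mINP."""
    per_query = np.array([query_metrics(m) for m in all_matches])
    r1, m_ap, m_inp = per_query.mean(axis=0)
    return r1, m_ap, m_inp

# Two toy queries (hypothetical rankings over a four-image gallery).
r1, m_ap, m_inp = evaluate([[1, 0, 1, 0], [0, 1, 1, 0]])
```

Because mINP depends on the rank of the hardest correct match, it is more sensitive than R1 to positives that appear late in the ranking.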

| Method | Backbone | Market1501 R1 | Market1501 mAP | Market1501 mINP | DukeMTMC R1 | DukeMTMC mAP | DukeMTMC mINP |
|---|---|---|---|---|---|---|---|
| AGW (TPAMI 2020) | ResNet50 | 95.1 | 87.8 | 65.0 | 89.0 | 79.6 | 45.7 |
| Ours (w/o re-ranking) | ResNet50 | 95.3 | 89.0 | 67.8 | 89.6 | 80.0 | 45.9 |
| Ours (with re-ranking) | ResNet50 | 96.1 | 94.7 | 88.0 | 91.1 | 89.4 | 73.8 |
| Ours (w/o re-ranking) | ResNet50-ibn | 95.6 | 89.3 | 68.1 | 90.3 | 80.7 | 47.4 |
| Ours (with re-ranking) | ResNet50-ibn | 96.2 | 94.6 | 88.1 | 92.2 | 89.9 | 74.0 |
| Ours (w/o re-ranking) | ResNeSt50 | 95.6 | 89.6 | 69.5 | 92.0 | 82.6 | 50.2 |
| Ours (with re-ranking) | ResNeSt50 | 96.2 | 94.5 | 88.0 | 93.0 | 91.4 | 77.0 |
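The gap between the rows with and without re-ranking comes from refining the distance matrix at inference time. The sketch below is a heavily simplified illustration in the spirit of k-reciprocal re-ranking (Zhong et al., listed in the references). Whether the authors use exactly that method is not confirmed in this section. The sketch omits the set expansion, distance-weighted encoding, and local query expansion of the full algorithm, and the values of `k` and `lam` are illustrative only.

```python
import numpy as np

def k_reciprocal_sets(dist, k):
    """For each sample i, the samples j such that i and j are in each other's top-k."""
    topk = np.argsort(dist, axis=1)[:, :k]
    members = [set(row.tolist()) for row in topk]
    return [{j for j in members[i] if i in members[j]} for i in range(len(dist))]

def rerank(dist, k=3, lam=0.3):
    """Blend the original distance with a Jaccard distance over k-reciprocal sets.

    dist: square distance matrix over all query and gallery samples.
    Simplified sketch; k and lam are hypothetical values.
    """
    dist = np.asarray(dist, dtype=float)
    sets = k_reciprocal_sets(dist, k)
    n = len(dist)
    jaccard = np.ones((n, n))
    for i in range(n):
        for j in range(n):
            union = len(sets[i] | sets[j])
            if union:
                jaccard[i, j] = 1.0 - len(sets[i] & sets[j]) / union
    return (1.0 - lam) * jaccard + lam * dist

# Toy 1-D features (hypothetical): two well-separated identity clusters.
features = np.array([0.0, 0.1, 0.2, 5.0, 5.1, 5.2])
original = np.abs(features[:, None] - features[None, :])
refined = rerank(original, k=3, lam=0.3)
```

In the toy example, samples in the same cluster share identical neighbor sets, so their Jaccard distance is zero and the refined distance shrinks, while samples from different clusters are pushed further apart.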

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, S.-K.; Hsu, C.-C.; Wang, W.-Y. Person Re-Identification with Improved Performance by Incorporating Focal Tversky Loss in AGW Baseline. Sensors 2022, 22, 9852. https://doi.org/10.3390/s22249852