Unconscious Other’s Impression Changer: A Method to Manipulate Cognitive Biases That Subtly Change Others’ Impressions Positively/Negatively by Making AI Bias in Emotion Estimation AI

Abstract

1. Introduction

2. Related Work

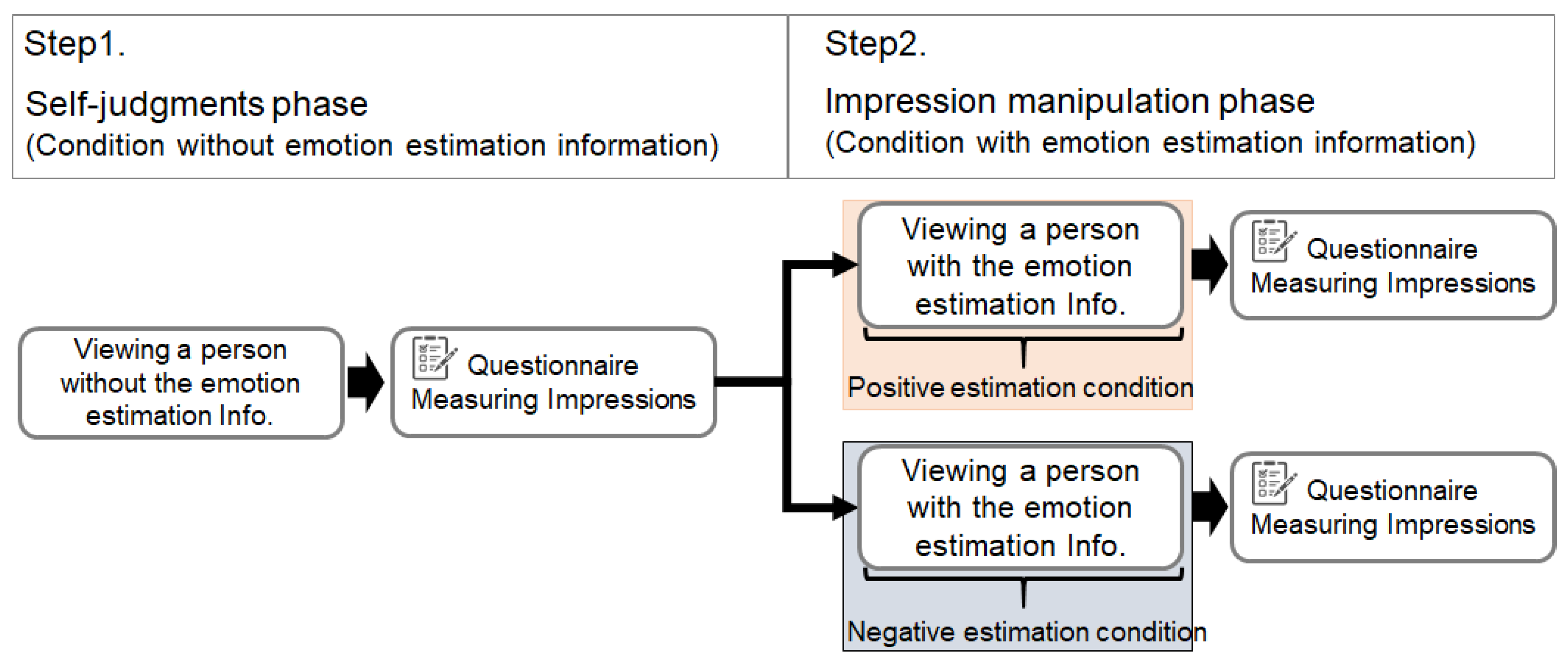

3. Method

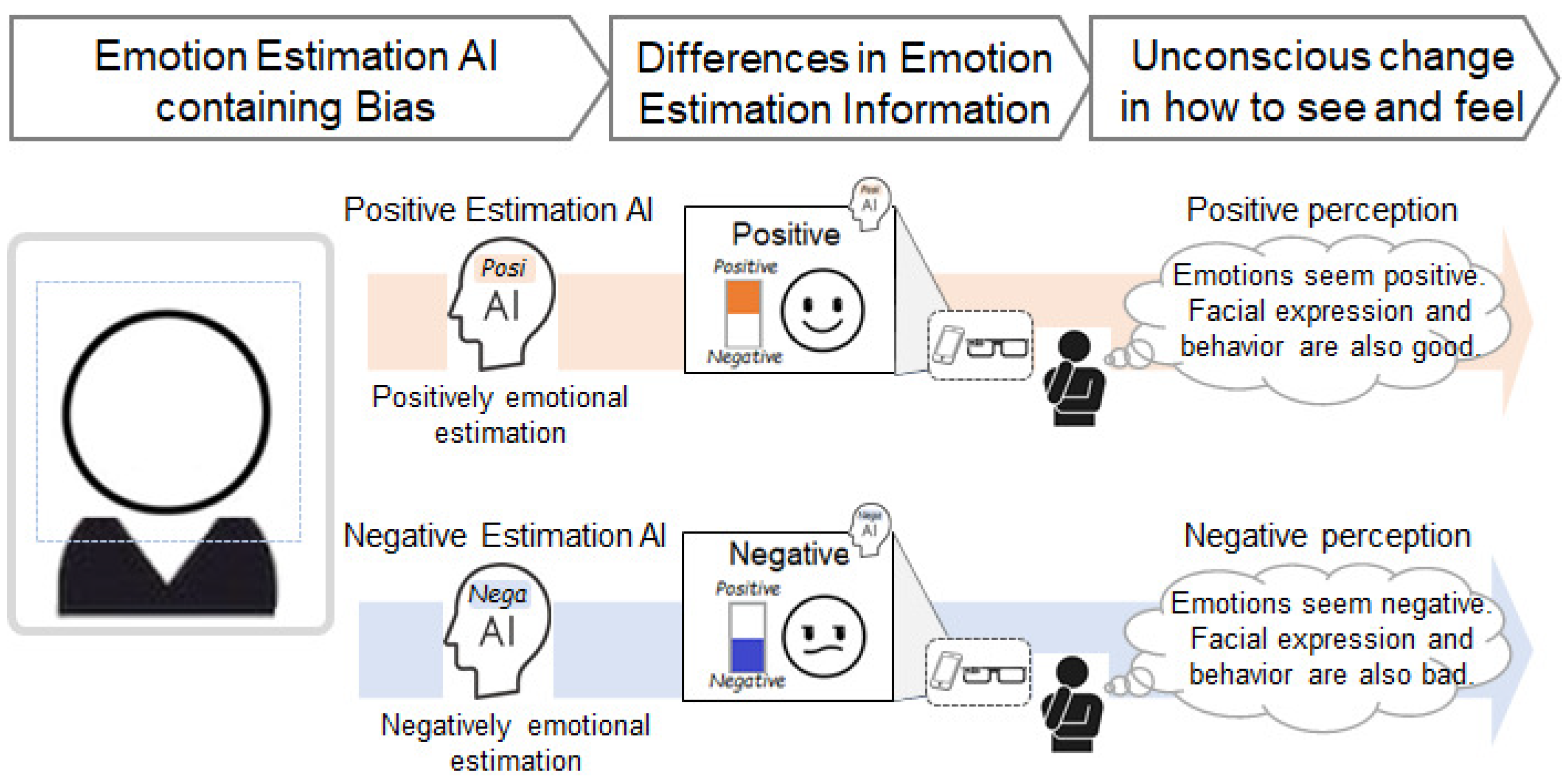

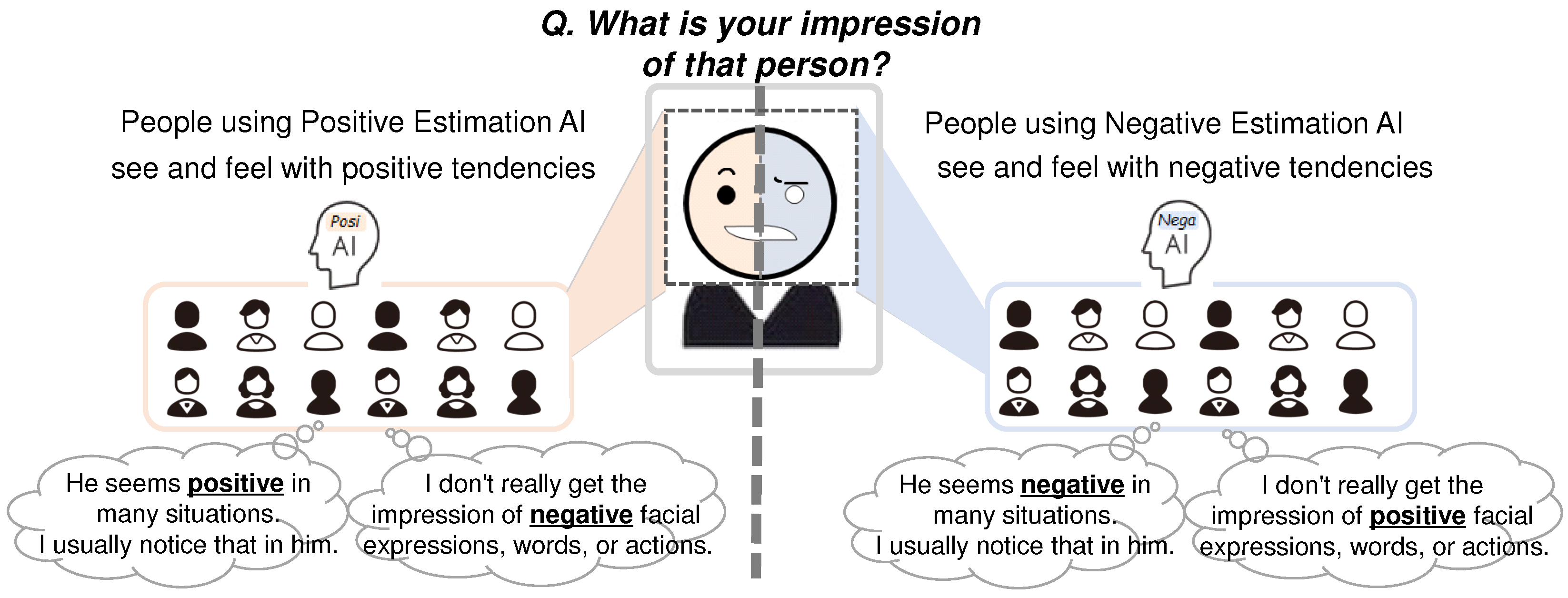

3.1. Hypothesis

3.2. Impression Manipulation Method Using AI Emotion Estimation Systems

3.2.1. Positive Estimation AI

3.2.2. Negative Estimation AI

3.2.3. Implementation

4. Evaluation 1: Verification of Impression Manipulation of a Person with Negative Facial Expressions

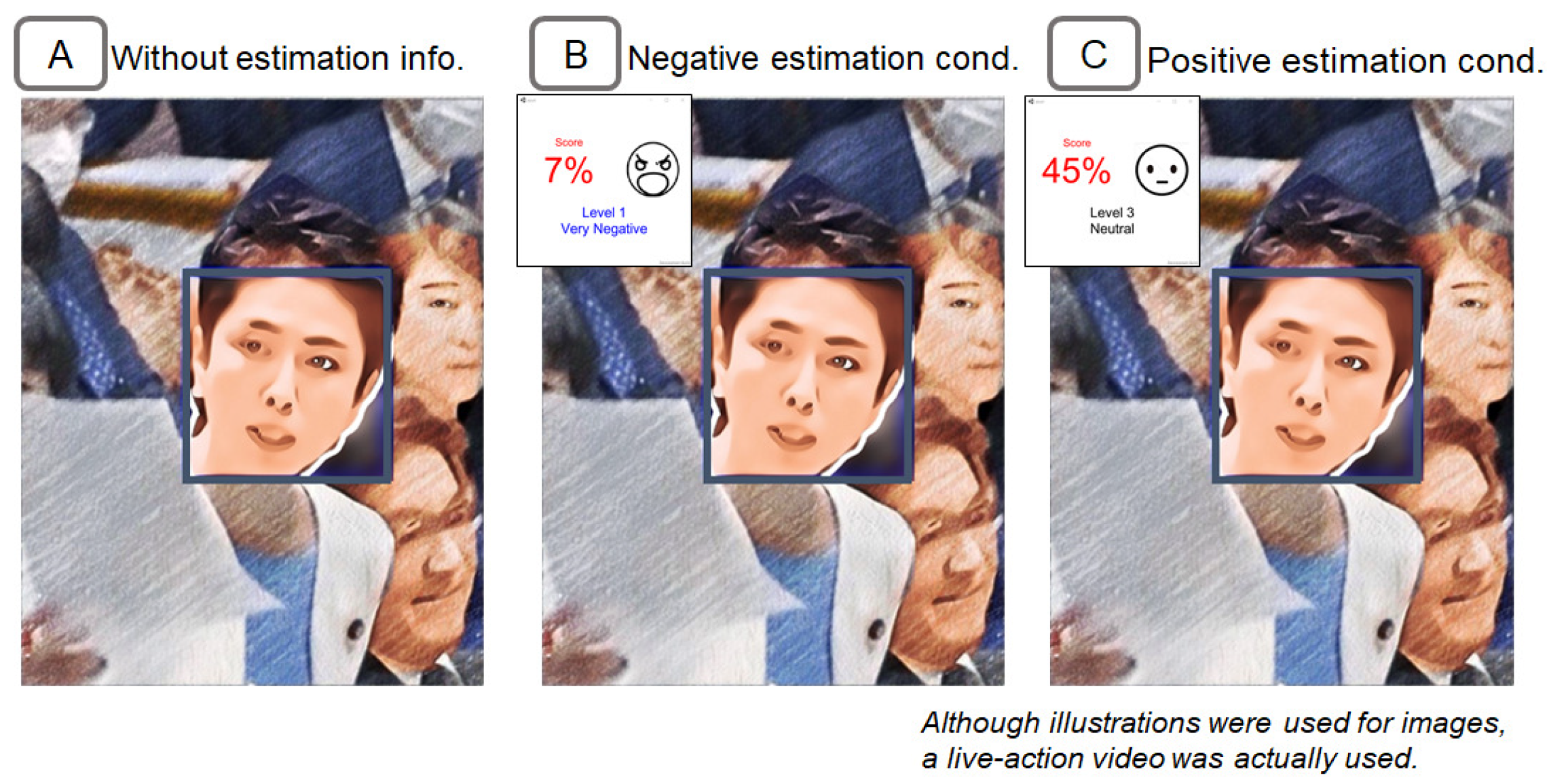

4.1. Negative Facial Expression Person

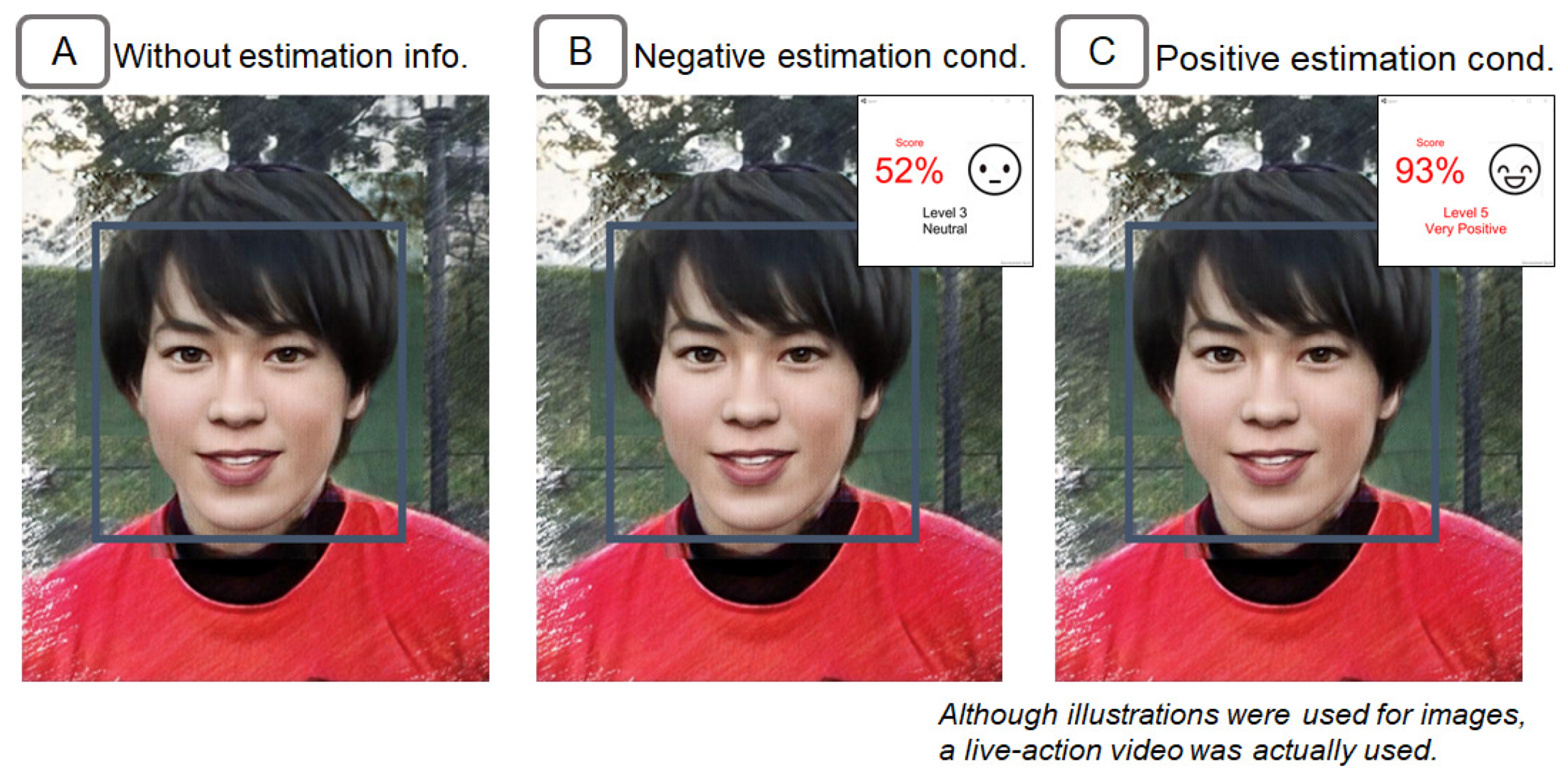

4.2. Condition for Biases of Emotion Estimation Information

- Negative estimation condition. In this condition, estimation results with a higher degree of negativity were presented by using the Negative Estimation AI. Specifically, the estimation results were presented between 0 and 10 points (i.e., very negative). These estimation results were more negative than most subjects' self-judgments, since the emotional negativity degree confirmed in advance in the condition without emotion estimation information was about 30 points, as mentioned earlier. Because the raw values of the anger index were calculated on a scale from 0 to 100, the values were re-scaled to the range of 0 to 10 points. The raw values of the anger index were also multiplied by a scaling factor so that the average of the estimation results over the entire video would be 5 points.

- Positive estimation condition. In this condition, estimation results with a lower degree of negativity (i.e., a more positive degree) were presented by using the Positive Estimation AI. Specifically, the estimation results were presented between 40 and 60 points (i.e., neutral). These estimation results were more positive than most subjects' self-judgments. In the same manner as in the negative estimation condition, the raw values of the anger index were re-scaled to the range of 40 to 60 points. The raw values were also adjusted so that the average of the estimation results over the entire video would be 50 points.
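The re-scaling step can be illustrated with a minimal sketch. It assumes that "re-scaled" means a linear map from the raw 0 to 100 index onto the display range, and that "applying a multiplication" means one constant factor for the whole video, chosen so that the displayed values hit the target average. The function name `rescale_estimates` and the clipping step are hypothetical and are not the authors' implementation; the text also does not say whether the anger index is inverted before mapping, so this sketch maps it directly.

```python
from statistics import mean


def rescale_estimates(raw, lo, hi, target_mean):
    """Map raw emotion-index values (0-100) into the display range [lo, hi].

    Hypothetical sketch: every raw value is multiplied by one factor k, chosen
    so that the displayed values average `target_mean`, and the result is then
    mapped linearly from 0-100 onto [lo, hi]. Values that k pushes outside
    0-100 are clipped, which can move the mean slightly off target.
    """
    if not lo <= target_mean <= hi:
        raise ValueError("target_mean must lie inside [lo, hi]")
    raw_mean = mean(raw)
    if raw_mean == 0:
        raise ValueError("cannot scale an all-zero series")
    # Position of the target mean within [lo, hi], expressed on the raw 0-100 scale.
    wanted_raw_mean = (target_mean - lo) / (hi - lo) * 100
    k = wanted_raw_mean / raw_mean  # single multiplier for the entire video
    displayed = []
    for value in raw:
        scaled = min(max(value * k, 0.0), 100.0)  # stay within the raw 0-100 scale
        displayed.append(lo + (hi - lo) * scaled / 100)  # linear map onto [lo, hi]
    return displayed
```

For example, `rescale_estimates([10, 20, 30], 0, 10, 5)` returns `[2.5, 5.0, 7.5]`, whose mean is the 5-point target of the negative estimation condition, and the same input with `(40, 60, 50)` returns `[45.0, 50.0, 55.0]`. Clipping keeps every value inside the display range, but when it triggers the mean no longer matches the target exactly; the text does not say how such cases were handled.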

4.3. Procedure

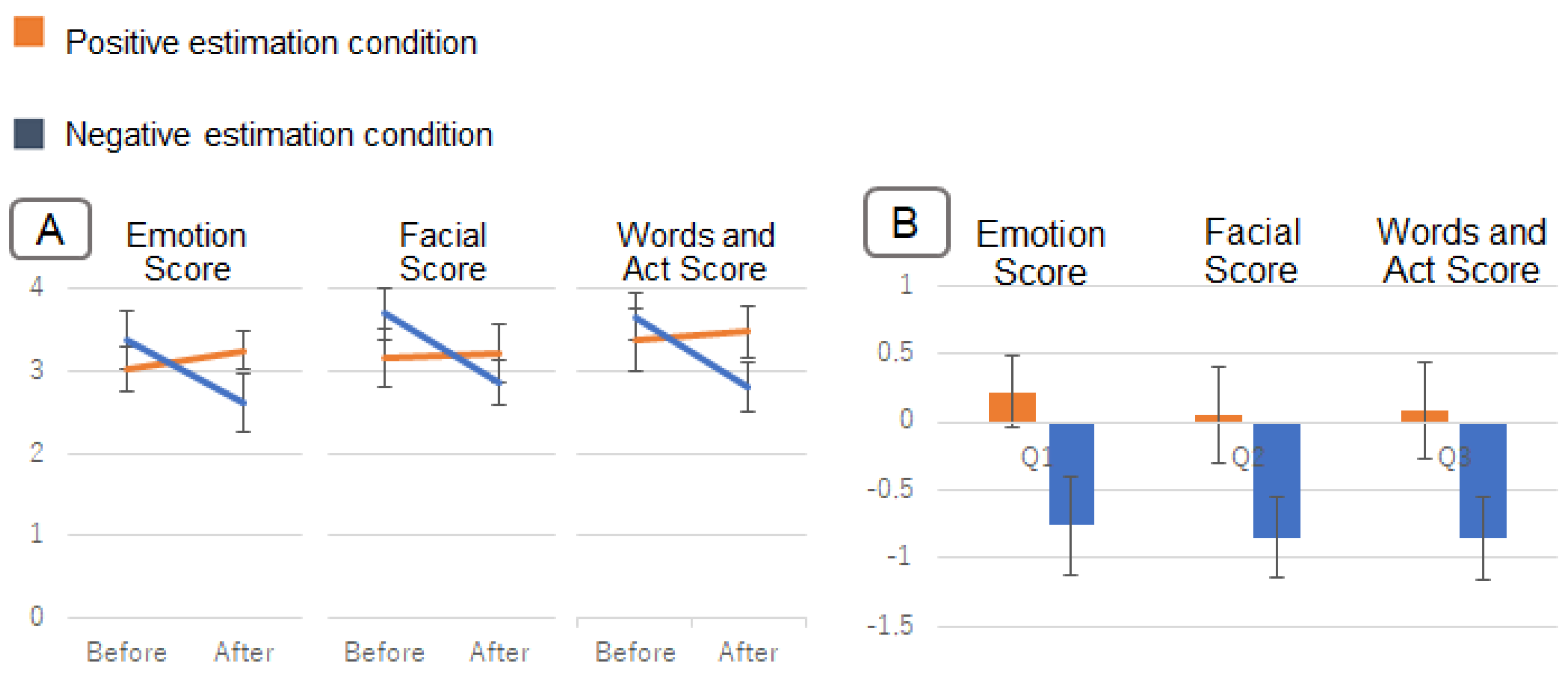

4.4. Result

4.5. Discussion

5. Evaluation 2: Verification of Impression Manipulation of a Person with Positive Facial Expressions

5.1. Positive Facial Expression Person

5.2. Condition for Biases of Emotion Estimation Information

- Negative estimation condition. In this condition, estimation results with a lower degree of positivity (i.e., a more negative degree) were presented by using the Negative Estimation AI. Specifically, the estimation results were presented between 40 and 60 points (i.e., neutral). These estimation results were more negative than most subjects' self-judgments, since the subject's emotional degree was about 80 points, as mentioned earlier. Because the raw values of the happy index were calculated on a scale from 0 to 100, the values were re-scaled to the range of 40 to 60 points. The raw values of the happy index were also multiplied by a scaling factor so that the average of the estimation results over the entire video would be 50 points.

- Positive estimation condition. In this condition, estimation results with a higher degree of positivity were presented by using the Positive Estimation AI. Specifically, the estimation results were presented between 90 and 100 points (i.e., very positive). These estimation results were more positive than most subjects' self-judgments. As in the negative estimation condition, the raw values were re-scaled to the range of 90 to 100 points and adjusted so that the average of the estimation results over the entire video would be 95 points.
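The bias design of the four conditions in Evaluations 1 and 2 can be checked with simple arithmetic. The sketch below compares each condition's display range and target average against the approximate baseline emotional degree quoted in the text (about 30 points for the negative-expression person, about 80 points for the positive-expression person). The dictionary layout and the function name `bias_summary` are illustrative assumptions, and the baselines are the rounded figures from the text rather than measured data.

```python
# Display settings stated in Sections 4.2 and 5.2: (range low, range high, target mean).
CONDITIONS = {
    ("Evaluation 1", "negative"): (0, 10, 5),
    ("Evaluation 1", "positive"): (40, 60, 50),
    ("Evaluation 2", "negative"): (40, 60, 50),
    ("Evaluation 2", "positive"): (90, 100, 95),
}

# Approximate emotional degree without estimation information ("about 30/80 points").
BASELINE = {"Evaluation 1": 30, "Evaluation 2": 80}


def bias_summary(conditions, baseline):
    """Return, per condition, (target mean minus baseline, whole range on the biased side?)."""
    summary = {}
    for (evaluation, direction), (lo, hi, target) in conditions.items():
        base = baseline[evaluation]
        offset = target - base
        if direction == "negative":
            one_sided = hi < base  # every displayed value lies below the baseline
        else:
            one_sided = lo > base  # every displayed value lies above the baseline
        summary[(evaluation, direction)] = (offset, one_sided)
    return summary


if __name__ == "__main__":
    for key, (offset, one_sided) in bias_summary(CONDITIONS, BASELINE).items():
        print(key, f"offset={offset:+d}", "range entirely on biased side:", one_sided)
```

Under these assumptions, every condition's full display range lies on the intended side of the baseline, with target offsets of -25 and +20 points in Evaluation 1 and -30 and +15 points in Evaluation 2. Note that the neutral 40 to 60 range acts as the "positive" bias in Evaluation 1 but as the "negative" bias in Evaluation 2, which is why the direction is defined relative to each person's baseline rather than to the scale midpoint.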

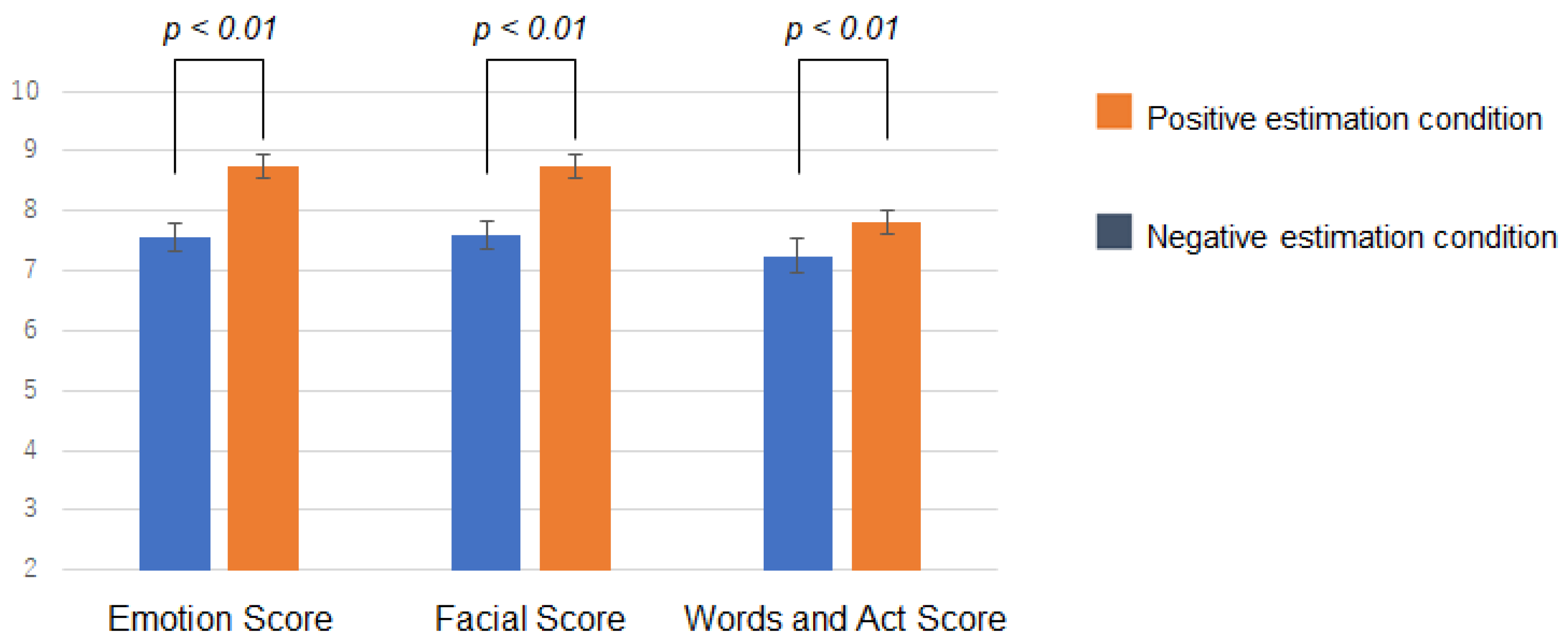

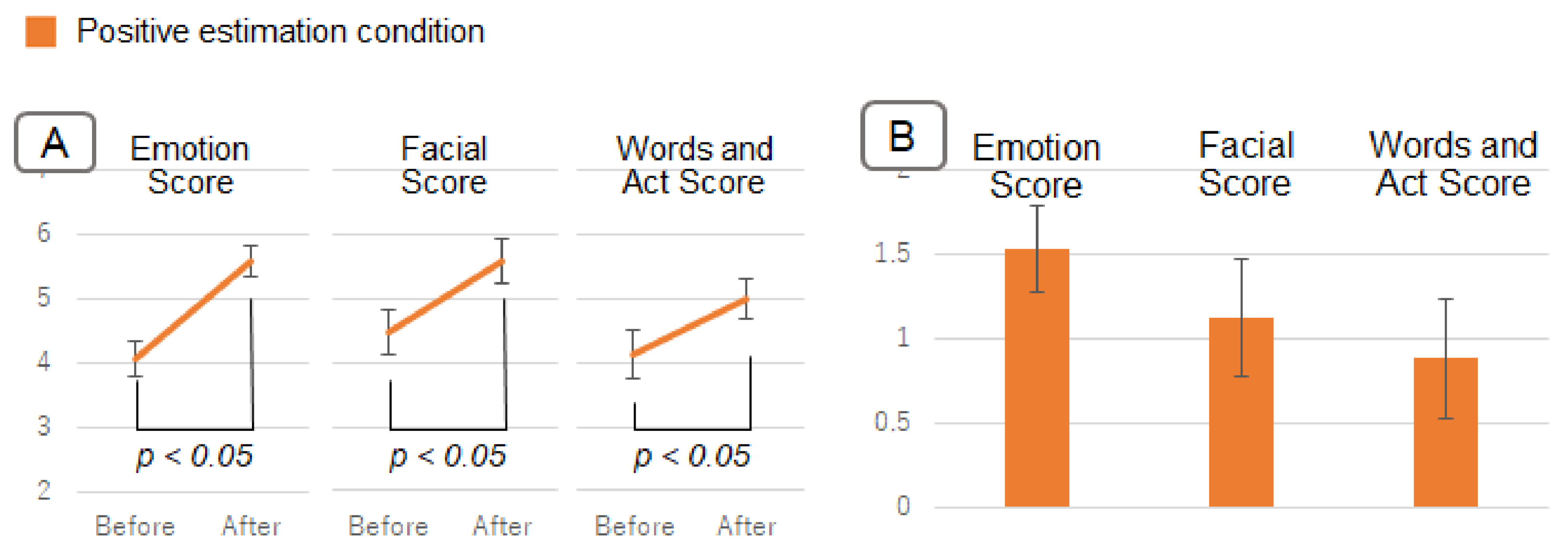

5.3. Result

5.4. Discussion

6. Evaluation 3: Verification of Impression Manipulation of a Person with Neutral Facial Expressions

6.1. Neutral Facial Expression Person

6.2. Result

6.3. Discussion

7. General Discussion

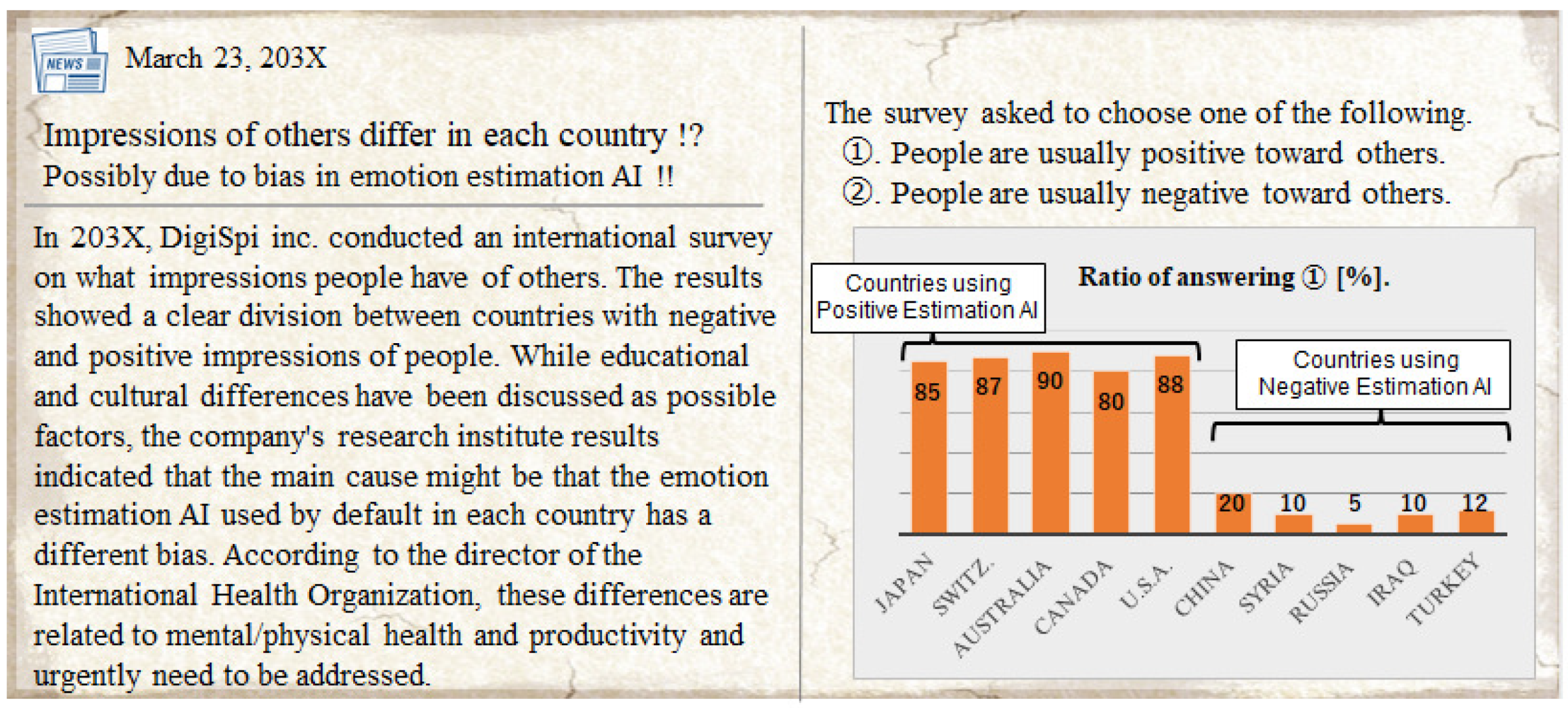

7.1. Dark Side: Possible Adverse Effects of Cognitive Biases Caused by Browsing AI Estimated Information

7.2. Light Side: Feasibility of Technology That Supports Users by Utilizing Cognitive Biases Caused by Browsing AI Estimated Information

7.3. Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Futami, K.; Yanase, S.; Murao, K.; Terada, T. Unconscious Other’s Impression Changer: A Method to Manipulate Cognitive Biases That Subtly Change Others’ Impressions Positively/Negatively by Making AI Bias in Emotion Estimation AI. Sensors 2022, 22, 9961. https://doi.org/10.3390/s22249961