Multiscale Joint Optimization Strategy for Retinal Vascular Segmentation

Abstract

1. Introduction

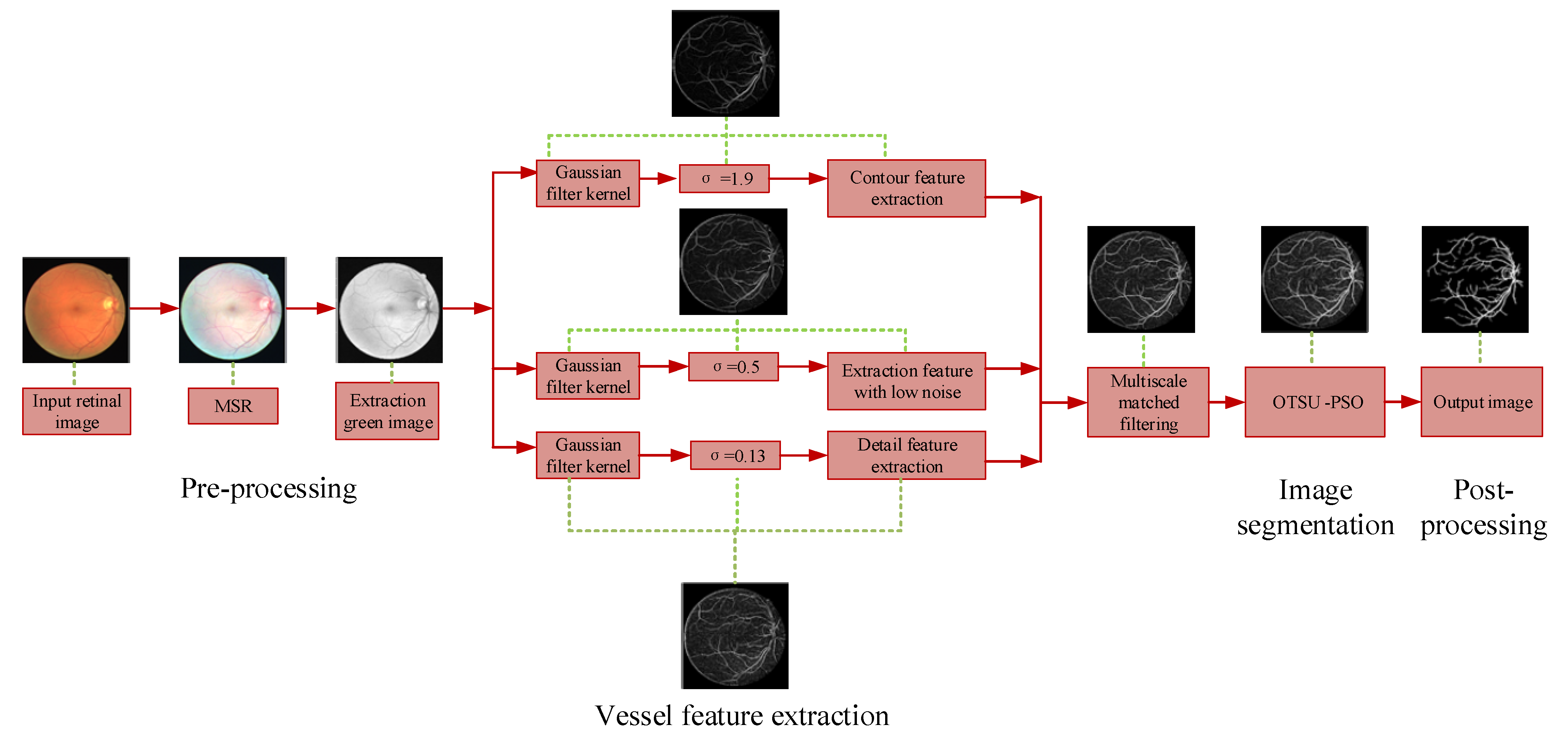

2. Proposed Methodology

2.1. Overview

2.2. Image Pre-Processing

MSR Algorithm

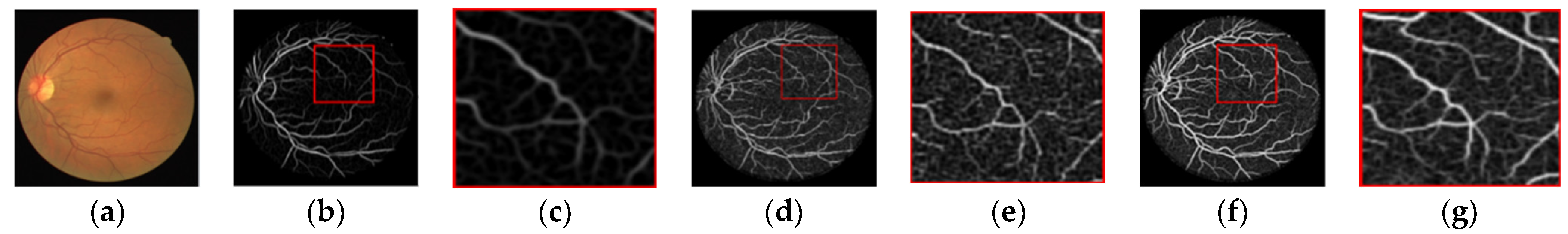

2.3. Vascular Feature Extraction

2.3.1. Multi-Scale Matching Filtering

2.3.2. Information Fusion of Vascular Characteristics

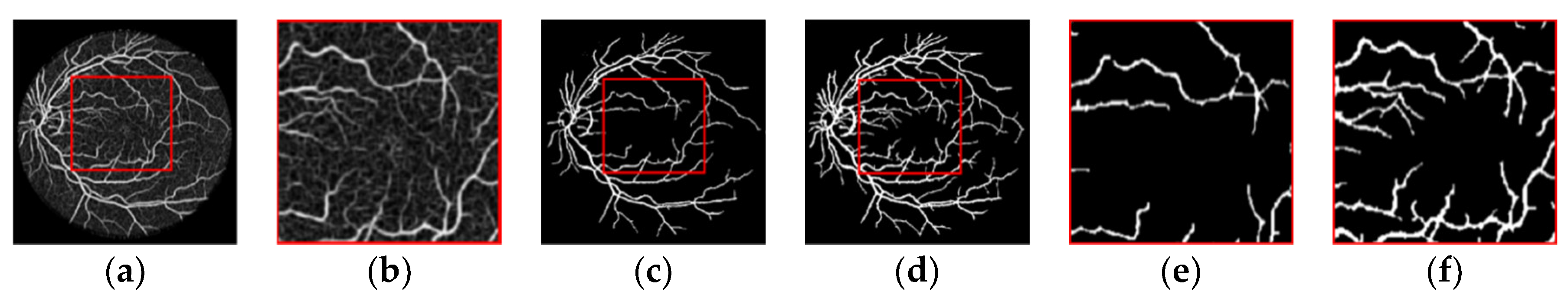

2.4. Image Segmentation

2.4.1. OTSU Algorithm

2.4.2. PSO Algorithm

2.4.3. OTSU Image Segmentation Based on PSO (OTSU-PSO Algorithm)

2.5. Image Post-Processing

- (1) The median filter is used to denoise the image and connect broken blood vessels.

- (2) Morphological processing is used to join connected regions and remove large noise.

- (3) The mask image is extracted from the source retinal image, and the difference image between the source retinal image and the mask image is obtained.

- (4) The difference image is binarized by the OTSU algorithm, and the binary image is then expanded (dilated) by morphological processing.

- (5) The expanded edge image is subtracted from the segmented vascular image to obtain the final output image.
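A minimal sketch of steps (1), (4) and (5) above, written with plain Python lists so that it runs without dependencies. The function names `median_filter3`, `dilate3` and `remove_edge`, the 3×3 window size, and the toy images are assumptions made for illustration; the kernel sizes used in the paper are not given in this excerpt, and steps (2) and (3) (region cleanup and mask extraction) are omitted.

```python
from statistics import median

def median_filter3(img):
    """Step (1): 3x3 median filter; border pixels are replicated."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            out[y][x] = int(median(window))
    return out

def dilate3(mask):
    """Step (4), second half: 3x3 binary dilation (the "expansion")."""
    h, w = len(mask), len(mask[0])
    return [
        [
            int(any(
                mask[yy][xx]
                for yy in range(max(y - 1, 0), min(y + 2, h))
                for xx in range(max(x - 1, 0), min(x + 2, w))
            ))
            for x in range(w)
        ]
        for y in range(h)
    ]

def remove_edge(vessels, edge):
    """Step (5): clear vessel pixels that lie on the expanded edge image."""
    return [
        [v if not e else 0 for v, e in zip(v_row, e_row)]
        for v_row, e_row in zip(vessels, edge)
    ]

# Toy binary segmentation: a 2-pixel-wide vessel plus one isolated noise pixel.
segmented = [[0, 0, 1, 1, 0, 0, 0] for _ in range(6)]
segmented[2][5] = 1

# Hypothetical binarized difference image: only the top border row is set.
edge_seed = [[1] * 7] + [[0] * 7 for _ in range(5)]

denoised = median_filter3(segmented)
final = remove_edge(denoised, dilate3(edge_seed))
```

In this toy case the median filter removes the isolated pixel, and the dilated border clears the top two rows of the vessel. A 3×3 median also erases straight one-pixel-wide lines, which is why the toy vessel is two pixels wide.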

3. Results and Discussion

3.1. Experimental Environment and Datasets

3.2. Segmentation Evaluation Index

3.3. Experimental Results and Analysis

3.4. Comparison with Other Methods

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kondermann, C.; Kondermann, D.; Yan, M. Blood vessel classification into arteries and veins in retinal images. Proc. SPIE 2007, 6512, 651247.

2. Wang, J.J.; Liew, G.; Klein, R.; Rochtchina, E.; Knudtson, M.D.; Klein, B.E.; Wong, T.Y.; Burlutsky, G.; Mitchell, P. Retinal vessel diameter and cardiovascular mortality: Pooled data analysis from two older populations. Eur. Heart J. 2007, 28, 1984–1992.

3. Qiu, X.-Q. Analysis of Current Research Status of Retinal Vessel Segmentation. Graph. Image 2019, 2019, 31–36.

4. Procházka, A. Registration and Analysis of Retinal Images for Diagnosis and Treatment Monitoring. In Proceedings of the 2014 International Workshop on Computational Intelligence for Multimedia Understanding (IWCIM), Paris, France, 1–2 November 2014; pp. T5/1–T5/4.

5. Gegundez-Arias, M.E.; Marin, D.; Ponte, B.; Alvarez, F.; Garrido, J.; Ortega, C.; Vasallo, M.J.; Bravo, J.M. A tool for automated diabetic retinopathy pre-screening based on retinal image computer analysis. Comput. Biol. Med. 2017, 88, 100–109.

6. Li, T.; Bo, W.; Hu, C.; Kang, H.; Liu, H.; Wang, K.; Fu, H. Applications of Deep Learning in Fundus Images: A Review. Med. Image Anal. 2021, 69, 101971.

7. Li, Q.; Feng, B.; Xie, L.; Liang, P.; Zhang, H.; Wang, T. A cross-modality learning approach for vessel segmentation in retinal images. IEEE Trans. Med. Imaging 2016, 35, 109–118.

8. Yan, Z.; Yang, X.; Cheng, K.-T.T. Joint Segment-Level and Pixel-Wise Losses for Deep Learning Based Retinal Vessel Segmentation. IEEE Trans. Biomed. Eng. 2018, 65, 1912–1923.

9. Khanal, A.; Estrada, R. Dynamic Deep Networks for Retinal Vessel Segmentation. Front. Comput. Sci. 2020, 2, 35.

10. Gegundez-Arias, M.E.; Marin-Santos, D.; Perez-Borrero, I.; Vasallo-Vazquez, M.J. A new deep learning method for blood vessel segmentation in retinal images based on convolutional kernels and modified U-Net model. Comput. Methods Programs Biomed. 2021, 205, 1060811.

11. Tolias, Y.; Panas, S. A fuzzy vessel tracking algorithm for retinal images based on fuzzy clustering. IEEE Trans. Med. Imaging 1998, 17, 263–273.

12. Chaudhuri, S.; Chatterjee, S.; Katz, N.; Nelson, M.; Goldbaum, M. Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans. Med. Imaging 1989, 8, 263–269.

13. Odstrcilik, J.; Kolar, R.; Budai, A.; Hornegger, J.; Jan, J.; Gazarek, J.; Kubena, T.; Cernosek, P.; Svoboda, O.; Angelopoulou, E. Retinal vessel segmentation by improved matched filtering: Evaluation on a new high-resolution fundus image database. IET Image Process. 2013, 7, 373–383.

14. Gabor, D. Theory of communication. J. Inst. Electr. Eng.-Part III Radio Commun. Eng. 1946, 93, 58.

15. Daugman, J.G. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. JOSA A 1985, 2, 1160–1169.

16. Duits, R. Perceptual Organization in Image Analysis: A Mathematical Approach Based on Scale, Orientation and Curvature. Ph.D. Thesis, Technische Universiteit Eindhoven, Eindhoven, The Netherlands, 2005; pp. 129–136.

17. Pan, Y.; Yi, C. Retinal Vessel Segmentation Based on Multi-scale Frangi Filter. Mod. Inform. Technol. 2020, 4, 116–122.

18. Wang, W.; Zhang, J.; Wu, W. New approach to segment retinal vessel using morphology and Otsu. Appl. Res. Comput. 2019, 36, 2228–2231.

19. Oliveira, W.S.; Teixeira, J.V.; Ren, T.I.; Cavalcanti, G.; Sijbers, J. Unsupervised Retinal Vessel Segmentation Using Combined Filters. PLoS ONE 2016, 11, e0149943.

20. Yavuz, Z.; Köse, C. Blood Vessel Extraction in Color Retinal Fundus Images with Enhancement Filtering and Unsupervised Classification. J. Healthc. Eng. 2017, 2017, 4897258.

21. Zhou, L.; Rzeszotarski, M.S.; Singerman, L.J.; Chokreff, J.M. The detection and quantification of retinopathy using digital angiograms. IEEE Trans. Med. Imaging 1994, 13, 619–626.

22. Fraz, M.; Barman, S.; Remagnino, P.; Hoppe, A.; Basit, A.; Uyyanonvara, B.; Rudnicka, A.; Owen, C. An approach to localize the retinal blood vessels using bit planes and centerline detection. Comput. Methods Programs Biomed. 2012, 108, 600–616.

23. Jiang, X.; Mojon, D. Adaptive local thresholding by verification-based multi threshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 131–137.

24. Brainard, D.H.; Wandell, B.A. Analysis of the retinex theory of color vision. J. Opt. Soc. Am. A 1986, 3, 1651–1661.

25. Jobson, D.; Rahman, Z.; Woodell, G. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

26. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

27. Fuqi, L.; Xiaomin, L. Multi-level threshold image segmentation algorithm based on particle swarm optimization and fuzzy entropy. Appl. Res. Comput. 2019, 36, 2856–2860.

28. Hoover, A.; Kouznetsova, V.; Goldbaum, M. Locating Blood Vessels in Retinal Images by Piecewise Threshold Probing of a Matched Filter Response. IEEE Trans. Med. Imaging 2000, 19, 203–210.

29. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

30. Fan, D.P.; Cheng, M.M.; Liu, Y.; Li, T.; Borji, A. Structure-Measure: A New Way to Evaluate Foreground Maps. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

31. Dasgupta, A.; Singh, S. A Fully Convolutional Neural Network Based Structured Prediction Approach towards the Retinal Vessel Segmentation. In Proceedings of the 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, Australia, 18–21 April 2017; pp. 248–251.

32. Yang, Y.; Shao, F.; Fu, Z.; Fu, R. Discriminative dictionary learning for retinal vessel segmentation using fusion of multiple features. Signal Image Video Process. 2019, 13, 1529–1537.

33. Adapa, D.; Raj, A.N.J.; Alisetti, S.N.; Zhuang, Z.; Naik, G. A supervised blood vessel segmentation technique for digital Fundus images using Zernike Moment based features. PLoS ONE 2020, 15, e0229831.

34. Biswal, B.; Pooja, T.; Subrahmanyam, N.B. Robust retinal blood vessel segmentation using line detectors with multiple masks. IET Image Process. 2018, 12, 389–399.

35. Ben Abdallah, M.; Azar, A.T.; Guedri, H.; Malek, J.; Belmabrouk, H. Noise-estimation-based anisotropic diffusion approach for retinal blood vessel segmentation. Neural Comput. Appl. 2018, 29, 159–180.

36. Roy, S.; Mitra, A.; Roy, S.; Setua, S.K. Blood vessel segmentation of retinal image using Clifford matched filter and Clifford convolution. Multimed. Tools Appl. 2019, 78, 34839–34865.

| Parameter Value | PSO |
|---|---|
| ) | 40 |
| ) | 0.5 |
| Learning constants | |
| ) | 20 |
| ) | X |

X: Not a parameter value.

Input: number of iterations M, population size N, dimension D.

Output: the optimal threshold combination gbest_position(i), where i is the threshold index.

- Step 1: Initialize the velocity and position of each particle, the individual extremum pbest_i, and the global extremum gbest.

- Step 2: Use Equation (14) to calculate the fitness value of each particle, and update the individual extremum pbest_i and the global extremum gbest.

- Step 3: Update the velocity and position of each particle according to Equations (15) and (16).

- Step 4: If the iteration stop condition is satisfied, the algorithm ends and gbest is taken as the optimal solution; otherwise, return to Step 2 and continue iterating.
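The four steps above can be sketched as follows. Equations (14)–(16) are not reproduced in this excerpt, so the sketch assumes that Equation (14) is Otsu's between-class variance and that Equations (15) and (16) are the standard PSO velocity and position updates. The function names and the default values of `n_particles`, `n_iter`, `w`, `c1` and `c2` are illustrative assumptions, not the paper's settings.

```python
import random

def between_class_variance(hist, thresholds):
    """Assumed fitness: Otsu between-class variance of a gray-level histogram."""
    total = sum(hist)
    mu_total = sum(i * h for i, h in enumerate(hist)) / total
    bounds = [0] + sorted(thresholds) + [len(hist)]
    var = 0.0
    for lo, hi in zip(bounds, bounds[1:]):
        weight = sum(hist[lo:hi])
        if weight == 0:
            continue  # an empty class contributes nothing
        mu = sum(i * hist[i] for i in range(lo, hi)) / weight
        var += (weight / total) * (mu - mu_total) ** 2
    return var

def pso_otsu(hist, dim=1, n_particles=20, n_iter=40,
             w=0.5, c1=2.0, c2=2.0, seed=0):
    """Search for `dim` thresholds that maximize the between-class variance."""
    rng = random.Random(seed)
    lo, hi = 1, len(hist) - 1

    def fitness(position):
        return between_class_variance(hist, [int(round(p)) for p in position])

    # Step 1: initialize positions, velocities, pbest and gbest.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    best = max(range(n_particles), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[best][:], pbest_fit[best]

    for _ in range(n_iter):  # Step 4: stop after a fixed number of iterations
        for i in range(n_particles):
            # Step 3: standard velocity and position update, clamped to range.
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            # Step 2: evaluate fitness and update pbest / gbest.
            fit = fitness(pos[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = pos[i][:], fit
    return sorted(int(round(p)) for p in gbest), gbest_fit

# Toy bimodal histogram: 100 pixels at gray level 50 and 100 at level 200.
hist = [0] * 256
hist[50] = 100
hist[200] = 100
thresholds, best_fit = pso_otsu(hist)
```

For a single threshold an exhaustive scan over 256 gray levels is cheap, so the swarm search mainly pays off when `dim` is greater than 1 and the number of threshold combinations grows quickly.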

| DRIVE image | Acc | Se | Sp | STARE image | Acc | Se | Sp |
|---|---|---|---|---|---|---|---|
| 01_test | 0.9404 | 0.8938 | 0.9450 | im0001 | 0.9424 | 0.6506 | 0.9656 |
| 02_test | 0.9567 | 0.7809 | 0.9768 | im0002 | 0.9387 | 0.5427 | 0.9650 |
| 03_test | 0.9401 | 0.7380 | 0.9625 | im0003 | 0.9572 | 0.6199 | 0.9829 |
| 04_test | 0.9535 | 0.7419 | 0.9749 | im0004 | 0.9426 | 0.8315 | 0.9455 |
| 05_test | 0.9545 | 0.7281 | 0.9779 | im0005 | 0.9475 | 0.7248 | 0.9680 |
| 06_test | 0.9445 | 0.7010 | 0.9707 | im0044 | 0.9681 | 0.7815 | 0.9681 |
| 07_test | 0.9512 | 0.7132 | 0.9751 | im0077 | 0.9596 | 0.7697 | 0.9748 |
| 08_test | 0.9500 | 0.7047 | 0.9730 | im0081 | 0.9549 | 0.6970 | 0.9757 |
| 09_test | 0.9559 | 0.7082 | 0.9777 | im0082 | 0.9696 | 0.8445 | 0.9790 |
| 10_test | 0.9560 | 0.7675 | 0.9729 | im0139 | 0.9541 | 0.7254 | 0.9731 |
| 11_test | 0.9487 | 0.7548 | 0.9678 | im0162 | 0.9669 | 0.7477 | 0.9852 |
| 12_test | 0.9553 | 0.7551 | 0.9742 | im0163 | 0.9745 | 0.8564 | 0.9838 |
| 13_test | 0.9537 | 0.6764 | 0.9837 | im0235 | 0.9660 | 0.8739 | 0.9733 |
| 14_test | 0.9527 | 0.7947 | 0.9666 | im0236 | 0.9677 | 0.8957 | 0.9734 |
| 15_test | 0.9067 | 0.8514 | 0.9110 | im0239 | 0.9571 | 0.9034 | 0.9601 |
| 16_test | 0.9617 | 0.7334 | 0.9844 | im0240 | 0.9323 | 0.9248 | 0.9326 |
| 17_test | 0.9597 | 0.6609 | 0.9872 | im0255 | 0.9675 | 0.8120 | 0.9832 |
| 18_test | 0.9655 | 0.7673 | 0.9826 | im0291 | 0.9706 | 0.7900 | 0.9775 |
| 19_test | 0.9580 | 0.8813 | 0.9650 | im0319 | 0.9707 | 0.7520 | 0.9769 |
| 20_test | 0.9628 | 0.8016 | 0.9756 | im0324 | 0.9496 | 0.7910 | 0.9542 |
| Mean | 0.9514 | 0.7577 | 0.9702 | Mean | 0.9579 | 0.7762 | 0.9699 |
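As a worked illustration of how the Acc, Se and Sp columns are typically obtained, the sketch below uses the standard confusion-matrix definitions of accuracy, sensitivity and specificity. The paper's own equations are not reproduced in this excerpt, so the formulas, the function names, and the optional field-of-view mask `fov` are assumptions.

```python
def confusion_counts(pred, truth, fov=None):
    """Count TP, FP, TN, FN between two binary images (lists of rows).

    `fov` is an optional binary mask; pixels where it is 0 are ignored.
    """
    tp = fp = tn = fn = 0
    for y, (p_row, t_row) in enumerate(zip(pred, truth)):
        for x, (p, t) in enumerate(zip(p_row, t_row)):
            if fov is not None and not fov[y][x]:
                continue
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn

def acc_se_sp(tp, fp, tn, fn):
    """Accuracy, sensitivity (vessel recall) and specificity (background recall)."""
    acc = (tp + tn) / (tp + fp + tn + fn)
    se = tp / (tp + fn)
    sp = tn / (tn + fp)
    return acc, se, sp

# Toy 2x4 example: 1 marks a vessel pixel, 0 marks background.
pred = [[1, 1, 0, 0],
        [0, 1, 0, 0]]
truth = [[1, 0, 0, 0],
         [1, 1, 0, 0]]
counts = confusion_counts(pred, truth)
acc, se, sp = acc_se_sp(*counts)
```

Here the counts are TP = 2, FP = 1, TN = 4 and FN = 1, giving Acc = 0.75, Se = 2/3 and Sp = 0.8. The Mean row of the table is then the average of the per-image values for each dataset.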

| Dataset | Comparison | SSIM | S-Measure |
|---|---|---|---|
| DRIVE | Proposed vs. Expert 1 | 0.7385 | 0.7982 |
| DRIVE | Proposed vs. Expert 2 | 0.6220 | 0.8069 |
| DRIVE | Expert 1 vs. Expert 2 | 0.5142 | 0.8366 |
| STARE | Proposed vs. Expert 1 | 0.7636 | 0.7986 |
| STARE | Proposed vs. Expert 2 | 0.7207 | 0.7708 |
| STARE | Expert 1 vs. Expert 2 | 0.7122 | 0.7793 |

| Type | Method | Year | DRIVE Acc | DRIVE Se | DRIVE Sp | STARE Acc | STARE Se | STARE Sp |
|---|---|---|---|---|---|---|---|---|
| Supervised | Li et al. [7] | 2016 | 0.9527 | 0.7569 | 0.9816 | 0.9628 | 0.7726 | 0.9844 |
| | Dasgupta et al. [31] | 2016 | 0.9533 | 0.7569 | 0.9792 | - | - | - |
| | Yan et al. [8] | 2018 | 0.9542 | 0.7653 | 0.9801 | 0.9612 | 0.7581 | 0.9846 |
| | Yang et al. [32] | 2019 | 0.9421 | 0.7560 | 0.9696 | 0.9477 | 0.7202 | 0.9733 |
| | Adapa et al. [33] | 2020 | 0.9450 | 0.6994 | 0.9811 | 0.9486 | 0.6298 | 0.9839 |
| Unsupervised | Biswal et al. [34] | 2018 | 0.9545 | 0.7100 | 0.9700 | 0.9495 | 0.7000 | 0.9700 |
| | Ben et al. [35] | 2018 | 0.9389 | 0.6887 | 0.9765 | 0.9388 | 0.6801 | 0.9711 |
| | Wang et al. [18] | 2019 | 0.9382 | 0.5686 | 0.9926 | 0.9460 | 0.6378 | 0.9815 |
| | Roy et al. [36] | 2019 | 0.9295 | 0.4392 | 0.9622 | 0.9488 | 0.4317 | 0.9718 |
| | YUAN et al. [17] | 2020 | 0.9500 | 0.7100 | 0.9700 | - | - | - |
| | Proposed | 2021 | 0.9572 | 0.7798 | 0.9758 | 0.9579 | 0.7762 | 0.9699 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Yan, M.; Zhou, J.; Luo, C.; Xu, T.; Xing, X. Multiscale Joint Optimization Strategy for Retinal Vascular Segmentation. Sensors 2022, 22, 1258. https://doi.org/10.3390/s22031258