1. Introduction

According to the “Global Tuberculosis Report 2020” issued by the World Health Organization (WHO), China had approximately 833,000 new tuberculosis (TB) patients in 2019, ranking third in the world [1]. Because China’s economically underdeveloped remote areas lack experienced physicians and diagnostic equipment, the prevention and treatment of tuberculosis in primary hospitals is difficult. “Internet +” technology can improve TB screening in primary hospitals, which is an important part of effective prevention and treatment. In underdeveloped areas, digital radiography (DR) is widely used for TB screening, and diagnosis relies on experienced physicians reading the chest radiographs. However, physicians in primary hospitals have less experience in reading such radiographs, and the imaging quality of the DR equipment is poor. Both factors can easily lead to misdiagnosis or missed diagnosis of TB. As a result, computer-aided systems were developed to assist doctors in identifying disease. With the widespread application of deep learning in medical image processing, the accuracy of convolutional neural networks (CNNs) in detecting tuberculosis has steadily improved.

In the field of deep learning, Lakhani et al. [2] first explored the ability of deep convolutional neural networks to detect pulmonary tuberculosis from chest X-ray images. Their experimental results showed that the best-performing model achieved an AUC (area under the curve) of 0.99 in detecting tuberculosis. The disadvantage of this model is that it has tens of millions of parameters and high computational complexity, which makes it difficult to deploy on cheaper, less powerful hardware.

The accuracy of CNNs is closely related to the quality of the image dataset, and a large number of high-quality training samples is more likely to produce an excellent model. In terms of image quality, Munadi et al. [3] showed experimentally that applying unsharp masking (UM) image enhancement or high-frequency emphasis filtering (HEF) to chest X-ray images of tuberculosis patients can effectively improve the discriminative ability of CNNs. In terms of image quantity, Liu et al. [4] further promoted the development of computer-aided tuberculosis diagnosis (CTD) by constructing a large-scale gold-standard tuberculosis dataset with dual labels for classification and localization, which can be used for TB research.

Because chest X-ray images are 2D, the overlap or occlusion of multiple tissues and organs seriously hinders recognition by neural networks. To increase CNNs’ attention to the lung area of chest radiographs, Rahman et al. [5] used an image segmentation network to segment the lung region, then fed the segmented image to the CNNs. Experiments with a variety of networks showed that image segmentation can significantly improve classification accuracy, but the two-stage detection algorithm has drawbacks such as heavy model weights and longer inference time.

In addition to improving image quality to enhance CNNs’ detection of tuberculosis, researchers have also explored the model structure extensively. Henghao et al. [6] used transfer learning, employing a deep pneumonia detection model to train the feature extraction subnetwork for chest radiographs, and proposed a deep learning detection algorithm based on focal loss, the Tuberculosis Neural Net (TBNN); the AUC of the model’s detection is 0.91. Rajaraman et al. [7] used different CNN structures to transfer knowledge learned from pneumonia images of the same modality, then used ensemble learning to further improve the model’s accuracy. Stacking ensemble learning achieved the best performance metrics (accuracy of 0.941, AUC of 0.995). Although transfer learning can solve the problem of training CNNs on small-scale datasets, researchers have designed increasingly complex and heavy neural network structures, which are a serious challenge for devices with limited computing power and storage; inference with these models suffers from longer execution time and difficult deployment.

Through the above research, analysis, and related experiments, it was found that, firstly, the effect of the lung segmentation algorithm on chest X-ray images is affected by the different imaging manifestations of tuberculosis.

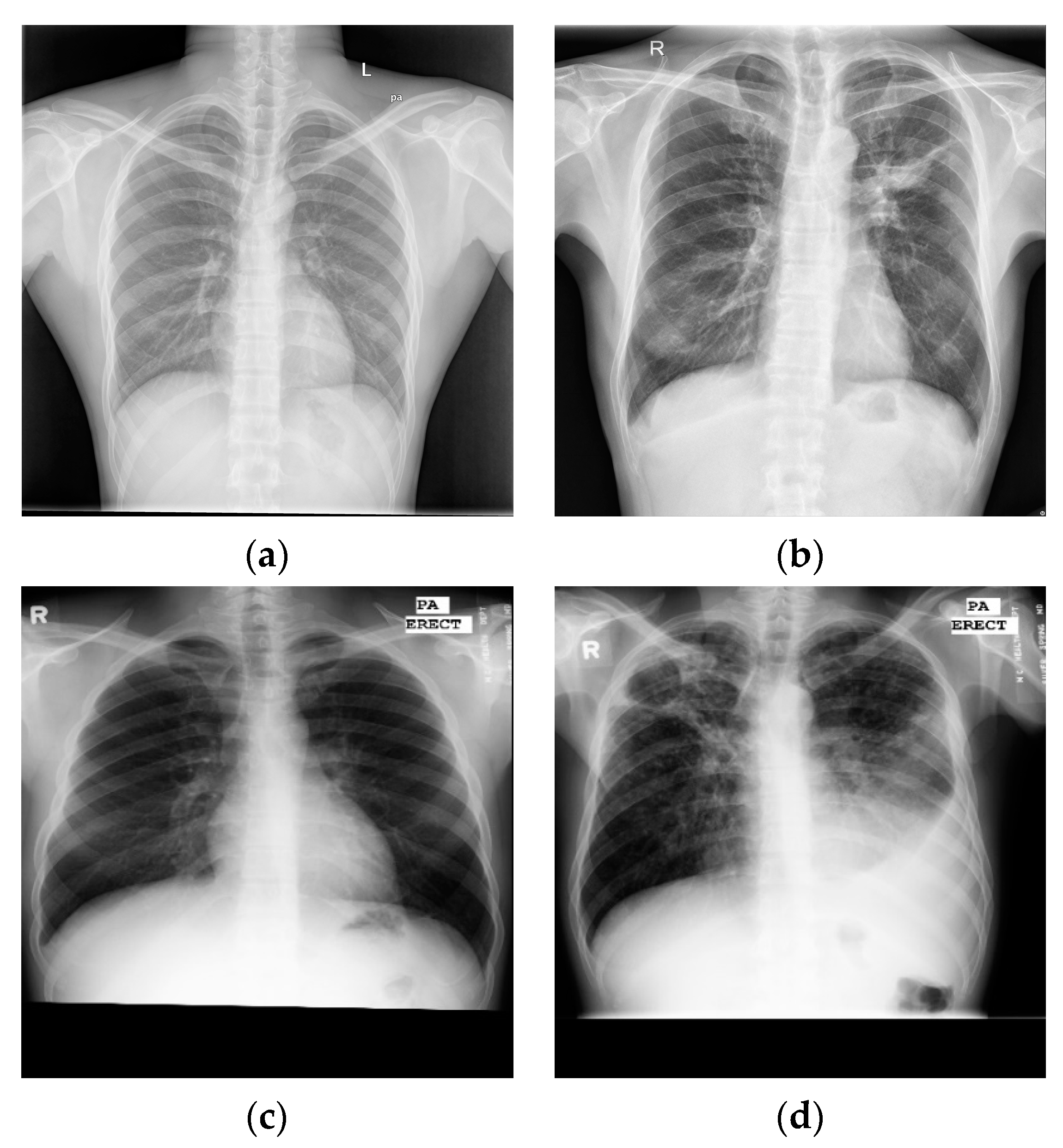

Figure 1 shows four X-ray images of TB patients; the marked areas in Figure 1a,b are the lesions. Segmenting the lung parenchyma causes some of these lesions to be omitted. In Figure 1c,d, the ribs and lesions in the marked area partially coincide; if rib suppression or lung parenchyma segmentation is performed, the lesion will be hidden. After the chest X-ray images of these four types of tuberculosis are segmented, some of the lesions are missing, resulting in poor CNN classification performance. Secondly, classic deep classification CNNs (VGGNet [8], DenseNet [9], etc.) and their variants perform excellently, but their parameter counts, computational complexity, and weight sizes make them difficult to deploy on devices with limited hardware.

Based on the above viewpoints, the specific work of this article is mainly carried out in the following three parts:

- (1) In the first part, we first analyzed the distribution characteristics of the two publicly available small-scale chest X-ray image datasets of TB, then designed dataset fusion rules and, finally, built the backbone of an efficient TB detection model specifically for PCs or embedded devices with less powerful hardware;

- (2) In the second part, we first introduced an efficient channel attention (ECA) mechanism and residual module, then improved them and added them to the network structure, and finally built the detailed network architecture through multiple experiments. This reduces the model’s computation and parameter count while keeping its accuracy at the expected level, giving it a clear advantage in clinical practice;

- (3) In the third part, the network proposed in this paper was compared to classical lightweight networks through quantitative indicators such as Sensitivity, Specificity, Accuracy, Precision, Times, etc., and then the model’s inference efficiency was evaluated in two different hardware environments.

2. Materials and Methods

2.1. Deep Residual Network

Simply stacking more layers in a deep neural network causes network degradation: a shallower network can train better than a deeper one, because the training loss of the deep network decreases and then saturates, and when more layers are added the loss increases. To solve this problem, the deep residual network (ResNet) [10] was proposed, which effectively alleviates the vanishing or exploding gradients caused by increasing the number of model layers.

Figure 2a illustrates the residual structure, which consists of two parts: the identity shortcut connection x and the residual mapping F(x). Here, x and F(x) + x are the input and output of the residual module, respectively, while channel denotes the number of output channels of the residual module. Each residual structure uses a stack of two layers. The residual module used in this article is shown in Figure 2b; a 1 × 1 convolution is added on the shortcut connection to control the dimensions of the output feature map. The spatial dimensions (w × h) of F(x) and of the shortcut output G(x) must be equal; if they are not, the stride of the 1 × 1 convolution layer can be changed to match them. We also use the ReLU6 activation function, which caps the ReLU output at 6, in an effort to prevent loss of accuracy when running on low-precision mobile devices (float16/int8). The details of the basic block are shown in Figure 3 (Basic Block).
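The two design points above can be sketched in a few lines of Python: a 1 × 1 shortcut convolution with the same stride as the residual branch produces the same spatial size, and ReLU6 clamps activations to the range [0, 6]. The helper names, the padding of 1 for the 3 × 3 convolutions, and the 224-pixel input width are assumptions made for this illustration and are not taken from the paper.

```python
def conv_out_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution along one axis (floor convention)."""
    return (n + 2 * padding - kernel) // stride + 1


def relu6(x: float) -> float:
    """ReLU capped at 6: min(max(x, 0), 6)."""
    return min(max(x, 0.0), 6.0)


# Hypothetical input width, chosen only for the example.
width = 224
for stride in (1, 2):
    # Residual branch: the first 3x3 conv carries the stride, the second keeps
    # the size (padding=1 is an assumption).
    branch = conv_out_size(conv_out_size(width, 3, stride, 1), 3, 1, 1)
    # Shortcut: a 1x1 conv with the same stride and no padding.
    shortcut = conv_out_size(width, 1, stride, 0)
    print(stride, branch, shortcut)  # the two sizes agree for each stride

print([relu6(v) for v in (-2.0, 3.5, 9.0)])  # [0.0, 3.5, 6.0]
```

Because both paths reduce to the same output size for a given stride under these assumptions, the element-wise sum of the two branches is well defined.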

2.2. Attention Mechanism

Today, channel attention mechanisms in computer vision offer great potential for improving the performance of CNNs. In 2017, squeeze-and-excitation networks (SENets) first proposed a flexible and efficient channel attention mechanism [11]. Its principle is that a global average pooling operation compresses each feature map into a single real number, and these numbers are then fed into a network composed of two fully connected (FC) layers; the network’s output is the weight of each feature map along the channel axis, and the two FC layers are designed to capture nonlinear cross-channel interaction. In 2020, researchers empirically showed that appropriate cross-channel interaction can preserve performance while significantly decreasing model complexity; they therefore proposed an efficient channel attention (ECA) module to address the model complexity and computational burden brought by SENet [12]. As illustrated in Figure 3 (ECA Block), ECA uses an adaptively adjustable one-dimensional convolution kernel (kernel size = 3) to replace the original fully connected layers, and it effectively fuses the information of adjacent channels. The calculation process of the self-adaptive k is shown in Equation (1).

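$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{\text{odd}} \tag{1}$$

where $k$ is the kernel size of the adaptive convolutional layer, $C$ is the number of channels of the input feature map, and $|t|_{\text{odd}}$ denotes the odd number nearest to $t$. In the original paper, $b$ and $\gamma$ were set to 1 and 2, respectively. In short, this paper adopts the ECA module from ECA-Net, which effectively improves performance while adding only a few parameters.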
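To make the adaptive kernel size concrete, the following Python sketch evaluates it for a few channel counts and applies a one-dimensional convolution across pooled channel descriptors. It is a minimal illustration under stated assumptions: the function names are hypothetical, the rule of truncating and then bumping an even value up to the next odd one is one common way to realize the "nearest odd" operation, and the kernel weights are fixed by hand here, whereas in a trained network they would be learned.

```python
import math
from typing import List


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1D kernel size: truncate (log2(C) + b) / gamma, then force it odd.

    Bumping an even value up to the next odd one is an assumed rounding rule.
    """
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_weights(descriptors: List[float], kernel: List[float]) -> List[float]:
    """Channel weights: zero-padded 1D convolution over the pooled channel
    descriptors, followed by a sigmoid."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + list(descriptors) + [0.0] * pad
    out = []
    for c in range(len(descriptors)):
        s = sum(kernel[j] * padded[c + j] for j in range(k))
        out.append(1.0 / (1.0 + math.exp(-s)))
    return out


for c in (16, 32, 48, 64, 128):
    print(c, eca_kernel_size(c))

# Hypothetical pooled descriptors for 4 channels and a made-up 3-tap kernel.
print(eca_weights([0.2, -1.0, 0.5, 3.0], [0.25, 0.5, 0.25]))
```

Each output weight depends only on a channel and its immediate neighbors, so the module needs just k weights rather than the two fully connected layers used by SENet.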

2.3. Network Structure

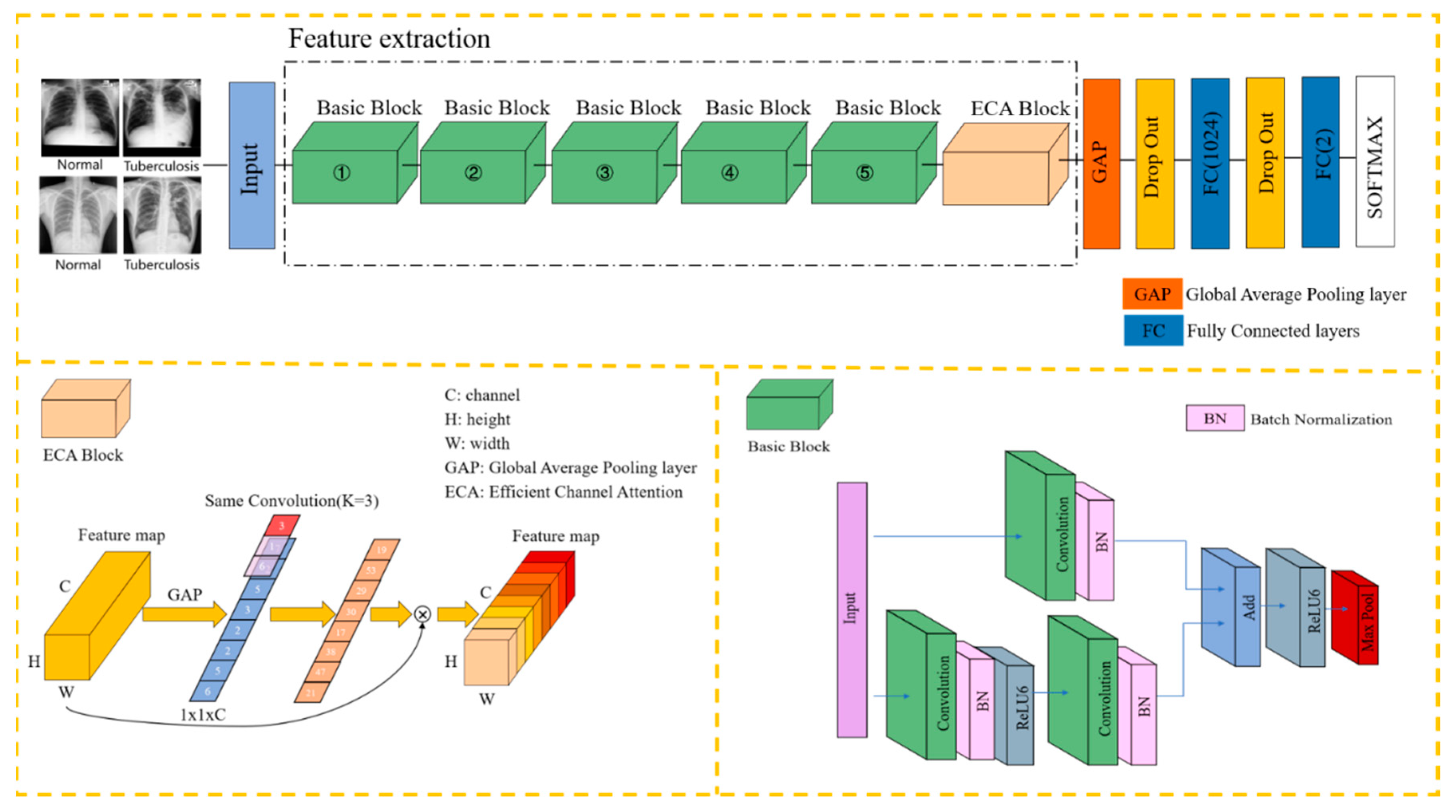

Building on the residual module and attention mechanism described in the previous sections, Figure 3 gives an overview of the E-TBNet classification network architecture proposed in this paper.

As shown in Figure 3, the feature extraction part of the network is composed of 5 groups of basic blocks with similar structures. Each basic block includes a residual connection and a direct connection. The residual connection is composed of two 3 × 3 convolutional layers that extract features from the input image; both layers use the same number of convolution kernels. The numbers of convolution kernels for the 5 groups of basic blocks are 16, 32, 48, 64, and 128, respectively.

The direct connection contains only one 1 × 1 convolutional layer; its role is to adjust the spatial size (w × h) and the number of channels of its input. When the residual connection changes the size of the input, the direct connection can match the size of the feature map by changing the stride of the 1 × 1 convolution layer.

After the convolution operation in the basic blocks, we apply the ReLU6 activation function to increase the nonlinearity of the neural network; the output value of ReLU6 is limited to 6 at most. Without this restriction, low-precision embedded devices cannot accurately represent large values, which leads to a decrease in model accuracy. The stride of the first basic block is set to 2, so the image size is reduced by downsampling. Because the first convolution layer extracts more basic information, such as the edges and textures of the target, this downsampling does not reduce the model accuracy and can reduce the computational complexity. The strides of the next four basic blocks are set to 1, so the size of the input remains the same. According to the receptive field calculation formula in Equation (2), two stacked 3 × 3 convolution kernels have the same receptive field as one 5 × 5 convolution kernel, and a larger receptive field captures semantic information in a larger neighborhood.

In Equation (2), $RF_i$ and $RF_{i+1}$ represent the receptive fields of the $i$th and $(i+1)$th convolutional layers, respectively, while $stride_i$ and $Ksize_i$ represent the stride and the convolution kernel size of the $i$th convolutional layer, respectively.
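The equivalence between two stacked 3 × 3 kernels and one 5 × 5 kernel can be checked with a short Python sketch. It uses a widely used receptive-field recurrence, which may be written differently from Equation (2); the function name and the (kernel, stride) tuple layout are assumptions made for the example.

```python
def receptive_field(layers):
    """Receptive field of a stack of conv layers given as (kernel, stride) pairs.

    Common recurrence: RF <- RF + (kernel - 1) * jump, then jump <- jump * stride,
    starting from RF = 1 and jump = 1.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


print(receptive_field([(3, 1), (3, 1)]))  # 5
print(receptive_field([(5, 1)]))          # 5
```

For the same receptive field, two 3 × 3 kernels use 18 weights per input-output channel pair, compared with 25 for a single 5 × 5 kernel.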

We perform a max pooling operation directly after each basic block, taking the maximum pixel value in each neighborhood to remove redundant information and retain decision-relevant information. Tuberculosis lesions appear irregular in images, mostly as dots, clouds, and bars. Repeated pooling operations retain the salient features extracted by the filters while reducing the size of the feature map and increasing the calculation speed. Each basic block is connected in series with a pooling layer to form the feature extraction part of the network in this paper.
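As a small illustration of how max pooling keeps the strongest response in each neighborhood while shrinking the feature map, consider the following Python sketch. The 2 × 2 window, the non-overlapping stride, and the toy feature map are assumptions chosen for the example; the pooling size used in the network is not stated here.

```python
def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling over a 2D list (stride equals window size).
    Trailing rows or columns that do not fill a whole window are dropped."""
    h, w = len(fmap), len(fmap[0])
    return [
        [
            max(fmap[r + dr][c + dc] for dr in range(size) for dc in range(size))
            for c in range(0, w - size + 1, size)
        ]
        for r in range(0, h - size + 1, size)
    ]


# Toy 4x4 feature map with made-up activations.
fmap = [
    [0.1, 0.9, 0.2, 0.0],
    [0.3, 0.4, 0.1, 0.7],
    [0.0, 0.2, 0.8, 0.6],
    [0.5, 0.1, 0.3, 0.2],
]
print(max_pool2d(fmap))  # [[0.9, 0.7], [0.5, 0.8]]
```

The 4 × 4 map becomes 2 × 2, so every later layer processes a quarter as many positions.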

The ECA block described in Section 2.2 adds only a few parameters and very little computation, which is extremely attractive in practice. In order to further improve the robustness of the model, the ECA block is inserted after the basic block. The feature information is compressed to one dimension through the global average pooling operation, so that each feature map is mapped onto a single value. After the global average pooling layer, two fully connected layers complete the classification. Because the training samples are few, a dropout layer with a rate of 0.5 is added after the global average pooling layer and after the first fully connected layer to alleviate overfitting. In each training batch, 1/2 of the neuron nodes are randomly inactivated, in an effort to prevent the model from relying too much on local features and to improve its generalization ability.

Table 1 shows the hyperparameter settings for model training.
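The classification head described above can be sketched in plain Python as global average pooling followed by dropout. This is an illustrative sketch rather than the training code: the function names are hypothetical, the feature maps are made-up numbers, and rescaling the surviving values by 1 / (1 − rate) ("inverted dropout") is an assumed convention that many frameworks follow.

```python
import random


def global_average_pool(fmaps):
    """Map each 2D feature map (a list of rows) to its mean value."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0])) for fm in fmaps]


def dropout(values, rate=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability `rate` during training
    and rescale survivors by 1 / (1 - rate); identity at inference time."""
    if not training or rate == 0.0:
        return list(values)
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


# Two made-up 2x2 feature maps.
fmaps = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [2.0, 2.0]]]
pooled = global_average_pool(fmaps)
print(pooled)  # [4.0, 1.0]
print(dropout(pooled, rate=0.5, rng=random.Random(0)))  # random zeros, survivors doubled
print(dropout(pooled, training=False))  # [4.0, 1.0]
```

With a rate of 0.5, about half of the pooled values are zeroed in each training step, while inference uses all of them unchanged.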

2.4. Performance Metrics

In tuberculosis detection tasks, we evaluate the different models through quantitative indicators such as accuracy, sensitivity/recall, specificity, precision/PPV (positive predictive value), NPV (negative predictive value), F1-score, −LR (negative likelihood ratio), and +LR (positive likelihood ratio).

(1) Accuracy: We use accuracy to evaluate the model’s ability to predict correctly over all samples, regardless of whether a sample is positive or negative; the formula is shown in (3):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

(2) Sensitivity/Recall: We use recall to analyze what proportion of the actually positive samples are predicted correctly; recall is also called sensitivity; the formula is shown in (4):

$$\text{Sensitivity} = \text{Recall} = \frac{TP}{TP + FN} \tag{4}$$

(3) Specificity: Specificity is the counterpart of sensitivity (recall) and represents the ability of the model to correctly identify negative examples among all negative samples; the formula is shown in (5):

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{5}$$

(4) Precision/PPV: In order to analyze what proportion of the samples predicted as positive are truly positive, we use precision/PPV; the formula is shown in (6):

$$\text{Precision} = \text{PPV} = \frac{TP}{TP + FP} \tag{6}$$

(5) NPV: We use NPV to analyze what proportion of the samples predicted as negative are truly negative; the formula is shown in (7):

$$\text{NPV} = \frac{TN}{TN + FN} \tag{7}$$

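(6) F1-score: The F1-score is also called the balanced F-score, and is defined as the harmonic mean of precision and recall. Using the F1-score, we can compare precision and recall together in a single value; the formula is shown in (8):

$$F1\text{-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{8}$$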

(7) −LR and +LR: The likelihood ratio is an indicator of diagnostic validity that combines sensitivity and specificity. −LR is the ratio of the false negative rate to the true negative rate of the screening results; the formula is shown in (9). +LR is the ratio of the true positive rate to the false positive rate of the screening results; the formula is shown in (10):

$$-LR = \frac{1 - \text{Sensitivity}}{\text{Specificity}} \tag{9}$$

$$+LR = \frac{\text{Sensitivity}}{1 - \text{Specificity}} \tag{10}$$

Here, true positive (TP), true negative (TN), false positive (FP), and false negative (FN) represent the number of tuberculosis images identified as tuberculosis, the number of normal images identified as normal, the number of normal images identified as tuberculosis, and the number of tuberculosis images identified as normal, respectively.
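The indicators listed above can all be computed from the four confusion-matrix counts, as in the following Python sketch. It uses the standard definitions, with +LR = sensitivity / (1 − specificity) and −LR = (1 − sensitivity) / specificity. The function name and dictionary keys are hypothetical, the counts are made up for illustration and are not results from the paper, and the sketch does not guard against zero denominators.

```python
def screening_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "npv": tn / (tn + fn),
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "lr_pos": sensitivity / (1 - specificity),
        "lr_neg": (1 - sensitivity) / specificity,
    }


# Made-up counts for illustration only (not results from the paper).
for name, value in screening_metrics(tp=90, tn=80, fp=20, fn=10).items():
    print(f"{name}: {value:.3f}")
```

A higher +LR and a lower −LR both indicate a more informative screening test, which is why the two ratios are reported alongside sensitivity and specificity.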

5. Conclusions

Classification neural networks have great application value in the early screening of TB in primary hospitals. This paper proposed a lightweight TB recognition network for PCs and Jetson AGX Xavier devices with lower hardware levels, then deployed it locally. To ensure that the network is fully trained, validated, and tested on data with different distributions, the two datasets were divided and fused, and the improved residual module and efficient channel attention were introduced to form the lightweight tuberculosis recognition model E-TBNet. The comparative experiment showed that, compared with the optimal MobileNetV3 network for the PC, the network proposed in this paper sacrifices 4.8% accuracy while reducing the number of parameters by 77.7% and the computational complexity by 58.1%, which effectively improves the calculation speed of the model. In deep neural networks, the sample quantity and quality of the dataset determine the accuracy of the network model. Because some primary hospitals lack high-quality datasets, a lightweight network that can be trained on a small number of samples will have greater clinical significance. However, the actual application environment is complex and changeable, and the generalization and robustness of the model need further research. In the future, based on the present work, the network could be designed to be more efficient and lightweight, reducing its dependence on the hardware level and further improving its recognition accuracy.