1. Introduction

Generating accurate weather forecasts from reliable localized data is a key feature of precision agriculture that enables farmers to use their resources more efficiently, productively, and sustainably. Moreover, it is a tool for countering weather uncertainty by reducing the risks posed by extreme weather that can impact the overall quality of production. Frost is one such threat: it kills plant tissue and prevents the normal development of crops, causing low production and economic losses. During some periods of the year, temperatures can drop considerably between day and night, reaching below freezing. A low temperature can cause the crop to flower early, and frost can cause a considerable reduction in production [1]. These negative consequences could be prevented or mitigated with a frost forecast model that informs the farmer of the probability of a frost event several hours in advance, so that the farmer can take action to protect the crops.

From a forecasting perspective, the prediction of frost events presents some challenges. Frost is a complex meteorological phenomenon influenced by a combination of environmental factors, including air temperature, humidity, radiation, and wind, and local factors, including topography and field orientation. Because local factors are involved, frost events can occur in small areas even within the same crop field [2]. Therefore, a method to collect high-resolution weather data is required.

Typical weather data sources, such as open weather, used for global weather forecasts, or national weather stations, are useful for understanding weather dynamics across large areas. Previous research built frost forecast models from these sources, but they are limited to forecasting in the vicinity of the weather station [3]. Therefore, these methods do not provide the resolution required to forecast the weather in a specific location or field. As a consequence, farmers use these forecasts as a reference, but most of their decisions to prevent frost damage are based on experience and intuition.

To collect data specifically from a field, we developed a low-cost and easy-to-install IoT platform with environmental sensors and cloud technologies for collecting and storing data. Specifically, we measure air temperature, air humidity, and relevant metadata. In addition, we use meteorological data collected from weather stations located near the field [4]. The purpose is to provide the farmer with relevant information so that they can make informed decisions. The frost forecasting model requires an intelligent component that combines field data, which capture the specific conditions of the field, with weather station data, which capture weather dynamics and possible future scenarios.

The majority of research relating to frost forecasting is based on simulating partial differential equations or on traditional statistical models to predict future weather conditions [5]. This approach is computationally expensive and requires recurrent theoretical updates to incorporate weather and atmospheric assumptions. In contrast, machine learning algorithms do not make any assumptions about weather behavior. Instead, they use historical weather data as input and train a model to predict future weather values [3].

There are some challenges related to machine learning models. Since frost can be highly variable across a small area, temperature data, usually collected from weather stations, are not available with sufficient frequency. In addition, the number of frost events during the year is relatively small, making it difficult to build an accurate prediction model due to limited available data [2]. Finally, when the model is viewed as a binary classification problem, i.e., Frost/No Frost, both types of error are costly. If no frost is predicted and frost occurs, the result may be partial or total loss of production. Conversely, if frost is predicted and does not occur, resources such as fuel and electricity used to mitigate the frost are wasted.

In light of the aforementioned constraints, especially the scarcity of data, a range of machine learning models were evaluated, including time series models as well as advanced deep learning architectures. In particular, graph neural networks (GNNs) and attention mechanisms were considered suitable for this problem, since they incorporate spatial knowledge that can model field and environmental interactions [6,7]. In addition, since the occurrence of frost is caused by prior changes in environmental factors, GNNs can be naturally extended to model this type of temporal interaction. Therefore, in this paper we address a time series forecasting problem using GNNs and attention.

In this study, we collected air temperature and humidity data from an experimental site and from 10 weather stations. We propose GRAST-Frost, a GNN architecture with spatio-temporal attention for frost forecasting. We map the weather stations’ locations to nodes on a graph and construct the edges based on geographical proximity. Furthermore, the adjacency matrix is optimized during the training phase, so other interactions can be learned. We use spatio-temporal attention to incorporate similarity across locations and time.

To the best of our knowledge, deep learning and graph neural networks have not so far been applied to the frost forecasting problem. Although considerable research has been devoted to this area, most of it has focused on developing IoT platforms for collecting in-place weather data. Consequently, little attention has been paid to developing a model that can take advantage of multiple data sources and spatio-temporal dynamics. However, GNN models have recently been adopted for traffic forecasting, epidemiology, and fraud detection. The implementation of GNN models in these scenarios showed their potential for predicting multivariate time series [8,9], and that of graph attention networks for capturing spatio-temporal dynamics [7,10,11].

Our contributions to this field are two-fold. First, we propose a full pipeline for the frost problem, including the development of an IoT platform, data collection, and forecasting model using two data sources. Second, we approach the frost problem by proposing a multivariate time series forecasting method, GRAST-Frost, that computes each time series at the same time. This method is based on a graph data structure, and it uses a spatio-temporal attention mechanism for weighted relevance according to time and/or space.

Moreover, this paper seeks to answer the following research questions:

Is a GNN capable of improving the time series forecasting of data sources from different locations in comparison to state-of-the-art frost forecasting methods?

Does the spatio-temporal attention mechanism improve forecasts?

Does the combined use of different data sources improve forecasts?

To answer the first question, we compare our approach, using classification and regression metrics, with the classical time series, machine learning, and deep learning methods that are currently the state of the art for frost forecasting. With regard to the second question, we compare our approach with GNN models proposed in previous studies that do not use spatio-temporal attention mechanisms. For the final question, we compare the forecasts obtained using separate data sources.

The proposed model outperforms current state-of-the-art methods for predicting temperature and classifying frost 6 h, 12 h, 24 h, and 48 h in advance. Furthermore, graph-based modeling and spatio-temporal attention mechanisms are key factors in obtaining more accurate predictions and minimizing classification errors.

The rest of the paper is organized as follows. Section 2 examines previous studies regarding machine learning for time series, weather, and frost forecasting. Section 3 describes the platform and our proposed method. Section 4 presents the experiments and results, and Section 5 offers a discussion of the results. Finally, Section 6 concludes the paper and presents possible directions for future work.

2. Related Work

Mort et al. [12] and Verdes et al. [13] are two research studies that address the frost phenomenon using machine learning. In these studies, the authors use artificial neural networks to create temperature prediction models based on weather time series data and apply them to agricultural applications. Their principal objective is to predict the next day’s minimum temperature using historical data. In recent years, there have been great advances in the field of internet of things (IoT) systems and deep learning. Thanks to these advances, it has become possible to install a wide variety of sensors and collect data from almost any place of interest with the purpose of building accurate prediction models. Regarding IoT systems designed for weather forecasting, Muck et al. [14] designed an IoT-based weather station using a Raspberry Pi, which provides short-term weather forecasting. Similarly, Levin et al. [15] present a weather forecasting system based on a Raspberry Pi 3 Model B+ with environmental sensors and a weather forecasting algorithm. Their systems monitor air temperature, humidity, pressure, and altitude at experimental locations. Their weather forecast algorithms are based on a linear regression model. Other studies, such as Diedrichs et al. [3] and Castaneda–Miranda et al. [16], also used IoT devices to extract weather data from selected locations, but instead of focusing on weather, they used classic applied machine learning techniques to predict frost events. Likewise, the research group of Guillén–Navarro et al. [1,17] developed over the years an IoT platform to predict frost events. This platform appears to be more robust than those of the previous studies in terms of engineering and technological components. Although these studies made interesting progress in terms of IoT and sensor data collection systems, the development of their machine learning models was limited. Therefore, the resulting predictions are unsatisfactory in terms of error rates and/or classification metrics. In addition, the data sources were constrained to the experimental field where the system is located.

Few studies have focused on the development of the frost forecast model itself. For example, Ding et al. [2] concentrated their efforts on the development of a cause-effect machine learning model that uses locally collected temperature, humidity, and radiation data to predict frost. They were able to describe causal relationships between variables and outputs; however, their model requires improvements to minimize false-positive predictions. Another example is the study by Cadenas et al. [18], which was based on a soft computing framework that collects and stores weather data. They propose a data preprocessing technique that builds fuzzy time series from raw data and serves them as input to classification and regression problems. In addition, Guillén–Navarro et al. [19] used a simple long short-term memory (LSTM) architecture to produce frost forecasts from data collected using their IoT system. Although these studies provide interesting methods to address the frost forecasting problem, there is still a wide range of solutions to investigate, for example, current developments in deep learning models that could improve forecasts and include different data sources.

In contrast to frost forecasting, weather-related forecasting has plenty of studies that use advanced deep learning techniques. For instance, Shi et al. [20] proposed a fully connected convolutional LSTM network to predict short-term future rainfall intensity in a local area and extract the spatio-temporal dynamics of the data. Likewise, Mehrkanoon et al. [21] proposed a model for predicting temperature and wind speed 1 to 10 days in advance using a convolutional neural network. They introduced an architecture based on 1D-CNNs to process 3D tensor data and to extract spatio-temporal relations. In addition, Hewage et al. [5] presented a weather forecasting model that uses an LSTM and a temporal convolution network. The results obtained are better than those of classical time series forecasting and classical machine learning. However, these models do not capture the complexities of our specific problem. For instance, we need to deal with multivariate forecasting of several time series at different locations. In the aforementioned approaches, there are no spatial relations between the entities (e.g., different cities); the interaction is determined by the entity order in the tensor. To capture the spatio-temporal dynamics of different entities, a promising approach is to use GNNs, which have the capacity to model the temporal dynamics of nodes and the spatial dynamics between them at the same time.

GNNs have shown recent progress in the area of time series forecasting and spatio-temporal relations. Moreover, a GNN can extract greater insights than networks that can only analyze data in isolation. This is achieved by obtaining structural relationships between the data [22]. There are a number of domains in which GNNs have been successfully applied in recent years, such as traffic flow forecasting, fraud detection, epidemiology, and the forecasting of weather-related events. Regarding the latter, Wilson et al. [23] addressed the spatio-temporal correlation in the data by proposing a deep learning model based on a weighted graph convolutional LSTM. The general goal was to capture temporal autocorrelation with the LSTM and spatial relationships with the graph convolution. Similarly, Khodayar et al. [24] presented a spatio-temporal graph convolutional network (GCN) for short-term wind speed forecasting. Another example is the study by Wang et al. [25], which proposed a graph-based model to predict PM2.5 particle concentration and capture the spatio-temporal dependencies.

The most recent advances in GNNs have been applied to other domains. For instance, Cheng et al. [7] presented a GNN model for fraud detection in credit card transactions. They implemented a spatio-temporal attention mechanism that produces the input for a 3D convolution network. Similarly, Gao et al. [26] proposed a GNN with a spatio-temporal attention mechanism and a GRU architecture to forecast the number of infected cases in a pandemic by considering local disease status and demographic and transmission dynamics. Several GNN models have been applied to traffic flow forecasting and urban planning. In particular, Song et al. [27] developed a spatio-temporal GCN with a synchronous temporal mechanism to predict the flow of a network. Likewise, the studies by Lu et al. [28], Kong et al. [10], and Li et al. [11] proposed different versions of a spatio-temporal GNN with attention mechanisms for urban sensor value forecasting, traffic flow forecasting, and segment-level traffic prediction, respectively.

The goal of this study is to predict frost events by using air temperature and humidity data obtained from an IoT system installed in an experimental field and from weather stations located around the field. We have two main sources of inspiration. First, we are inspired by the recent advancements in GNN models detailed previously, especially the spatio-temporal attention mechanism. Second, we are inspired by the study of Wu et al. [9], who proposed a model for multivariate time series forecasting using a GNN, and by the recent study of Shang et al. [8], who proposed a model for multivariate time series forecasting using a GNN in which they consider pairwise interactions between features in a node representation. Therefore, the contribution of this paper is a multivariate frost forecasting model based on a GNN with a spatio-temporal attention mechanism.

3. Proposal

In this paper, we propose a bivariate time series forecasting model to predict the occurrence of frost. The benefit of such a model is that it can be trained with historical time series data X, in which the value of the bivariate variable at time t is used to forecast the future values Y of the variable in a certain time window r. The goal is then to create a mapping function from X to Y that minimizes the loss, typically with a regularization term [9].

In particular, we are given a set of input data and a derived label that indicates the presence (1) or absence (0) of frost for each record. We aim to forecast the minimum temperature and the frost class for future time windows based on the historical time window.

In addition to bivariate time series forecasting, we model the spatial and temporal relationships of weather data from multiple locations. For that purpose, we use GNNs to describe and formalize those relationships. The following are important definitions for graph modeling [9].

Graph: a graph is represented as $G = (V, E)$, where V represents the set of nodes and E represents the set of edges. There are n nodes in a graph.

Node neighborhood: the set of nodes connected to a given node by an edge. A single node is denoted $v \in V$, and an edge $e = (v, u) \in E$ that maps from v to u describes the connection between nodes. The neighborhood of v is defined as $N(v) = \{u \in V \mid (v, u) \in E\}$.

Adjacency matrix: states the connections between nodes in a graph. It is denoted by a matrix $A \in \mathbb{R}^{n \times n}$ with $A_{ij} > 0$ if $(v_i, v_j) \in E$ and $A_{ij} = 0$ if $(v_i, v_j) \notin E$.

Then, the graph network is formally defined as $G = (V, E, A)$, which represents the relationships between nodes in the spatial dimension.
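As an illustration of these definitions, the following minimal Python sketch builds an adjacency matrix from an edge list and reads a node neighborhood from it. The toy edge list, the function names, and the choice of an undirected, unweighted graph are assumptions made for the example only.

```python
from typing import List, Set, Tuple


def build_adjacency(n: int, edges: List[Tuple[int, int]]) -> List[List[int]]:
    """Return an n x n adjacency matrix with A[i][j] = 1 when (i, j) is an edge."""
    adjacency = [[0] * n for _ in range(n)]
    for i, j in edges:
        adjacency[i][j] = 1
        adjacency[j][i] = 1  # assumption: the graph is undirected
    return adjacency


def neighborhood(adjacency: List[List[int]], v: int) -> Set[int]:
    """Return N(v): every node u joined to v by an edge."""
    return {u for u, connected in enumerate(adjacency[v]) if connected}


# Hypothetical toy graph: node 0 is the field, nodes 1-3 are weather stations.
edges = [(0, 1), (0, 2), (2, 3)]
A = build_adjacency(4, edges)
field_neighbors = neighborhood(A, 0)
```

Storing the graph as a dense matrix keeps the example close to the matrix notation used in the definitions; with only 11 nodes, as in this study, a dense representation is not a practical concern.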

3.1. Data Sources

The model was trained and tested using data collected from our IoT platform and 10 meteorological stations, which are all located in the central region of Chile. Appendix A lists the stations and their geographical locations.

The 10 meteorological stations, which are in close proximity to the orchard, provide structured temperature and humidity data in the form of a graph. Here, the data are collected every hour. The 10 meteorological stations considered for this study and their representation as a graph are shown in Figure 1.

The IoT platform was developed with both air temperature and air humidity sensors and consists of 12 low-power wireless sensor nodes (motes). These are divided into eight sensor data nodes and four repeaters, which are connected to a gateway through a SmartMesh IP manager. The wireless sensor network is exposed directly to environmental conditions (sun, dust, rain, and snow), and therefore all sensors are protected by an enclosure rated Ingress Protection 65 (IP65). The IoT platform was installed in a local orchard, where data were collected every 10 s at four different heights above ground (one, two, three, and four meters). An image of the IoT platform in situ in the orchard is shown in Figure 2.

Data were collected between 4 September 2020 and 5 April 2021. Training data were taken from the months of September to February, and the last two months of data were used for testing. It is assumed that environmental factors can have an accumulated impact on frost; therefore, the prediction was performed using time series data. For this reason, we need the model to learn time-related patterns, which is why we do not randomly split the training and testing data.
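A time-ordered split of this kind can be sketched as follows. The cutoff date of 1 March 2021 is an assumption chosen so that the months of September to February fall before it; the readings and the function name are hypothetical.

```python
from datetime import datetime
from typing import List, Tuple

Record = Tuple[datetime, float]  # (timestamp, air temperature in degrees Celsius)


def chronological_split(
    records: List[Record], cutoff: datetime
) -> Tuple[List[Record], List[Record]]:
    """Split records by time, without shuffling: earlier ones first, later ones second."""
    ordered = sorted(records, key=lambda record: record[0])
    earlier = [record for record in ordered if record[0] < cutoff]
    later = [record for record in ordered if record[0] >= cutoff]
    return earlier, later


# Hypothetical readings, deliberately out of order.
records = [
    (datetime(2021, 3, 10, 6), -0.5),
    (datetime(2020, 9, 4, 6), 3.2),
    (datetime(2021, 1, 15, 6), 12.1),
    (datetime(2021, 4, 5, 6), 1.4),
]
earlier, later = chronological_split(records, cutoff=datetime(2021, 3, 1))
```

Because every record in the first part precedes every record in the second, no information from the evaluation period can leak into model fitting, which a random split would not guarantee.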

All the data are preprocessed for missing values and outliers. Data from the IoT platform are downsampled using 1-min time windows. In addition, to match the frequency of both data sources, data from the weather stations are linearly interpolated. Finally, for the classification of frost, a label with two classes (Frost/No Frost) is created in the training data, and, given the imbalance between them, the synthetic minority oversampling technique (SMOTE) is applied.
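The frequency alignment and labeling steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the window aggregate is taken to be the mean, the frost threshold is taken to be 0 °C, all names and sample values are hypothetical, and the SMOTE oversampling step is omitted.

```python
from statistics import mean
from typing import Dict, List, Tuple


def downsample_mean(samples: List[Tuple[int, float]], window_s: int = 60) -> Dict[int, float]:
    """Average (second, value) samples inside consecutive windows of window_s seconds."""
    buckets: Dict[int, List[float]] = {}
    for second, value in samples:
        buckets.setdefault(second // window_s, []).append(value)
    return {index: mean(values) for index, values in sorted(buckets.items())}


def interpolate_linear(t0: float, v0: float, t1: float, v1: float, t: float) -> float:
    """Linearly interpolate between the readings (t0, v0) and (t1, v1) at time t."""
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def frost_label(temperature_c: float, threshold_c: float = 0.0) -> int:
    """Return 1 (Frost) when the temperature is at or below the threshold, else 0."""
    return 1 if temperature_c <= threshold_c else 0


# Hypothetical 10 s mote readings (seconds, deg C) covering two 1-min windows.
mote = [(0, 2.0), (10, 2.2), (20, 2.4), (60, 1.0), (70, 0.8)]
per_minute = downsample_mean(mote)

# Hypothetical hourly station readings: 4.0 deg C at minute 0, 1.0 deg C at minute 60.
station_at_30_min = interpolate_linear(0, 4.0, 60, 1.0, 30)
```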

3.2. Forecasting Model

In this study, we develop the GRAST-Frost model, the architecture of which is shown in Figure 3. First, we convert our input P into a 3D (spatial, temporal, and feature) high-order tensor representation X. The tensor is then fed into the graph neural network, which aims to correlate the IoT platform data with that of the meteorological stations. For each time step t, we create a graph whose nodes correspond to the stations (the meteorological stations and the IoT platform), together with their corresponding edges. Then, we apply a spatio-temporal attention mechanism and a 3D convolution layer to obtain a feature-learned tensor. With spatio-temporal attention and 3D convolution, the idea is to weigh the importance of different dimensions and find hidden patterns in the input data. Finally, the feature-learned tensor is fed through a recurrent neural network to produce a forecast, with a different loss function for each type of task. We present details of each part of this architecture in the following subsections.

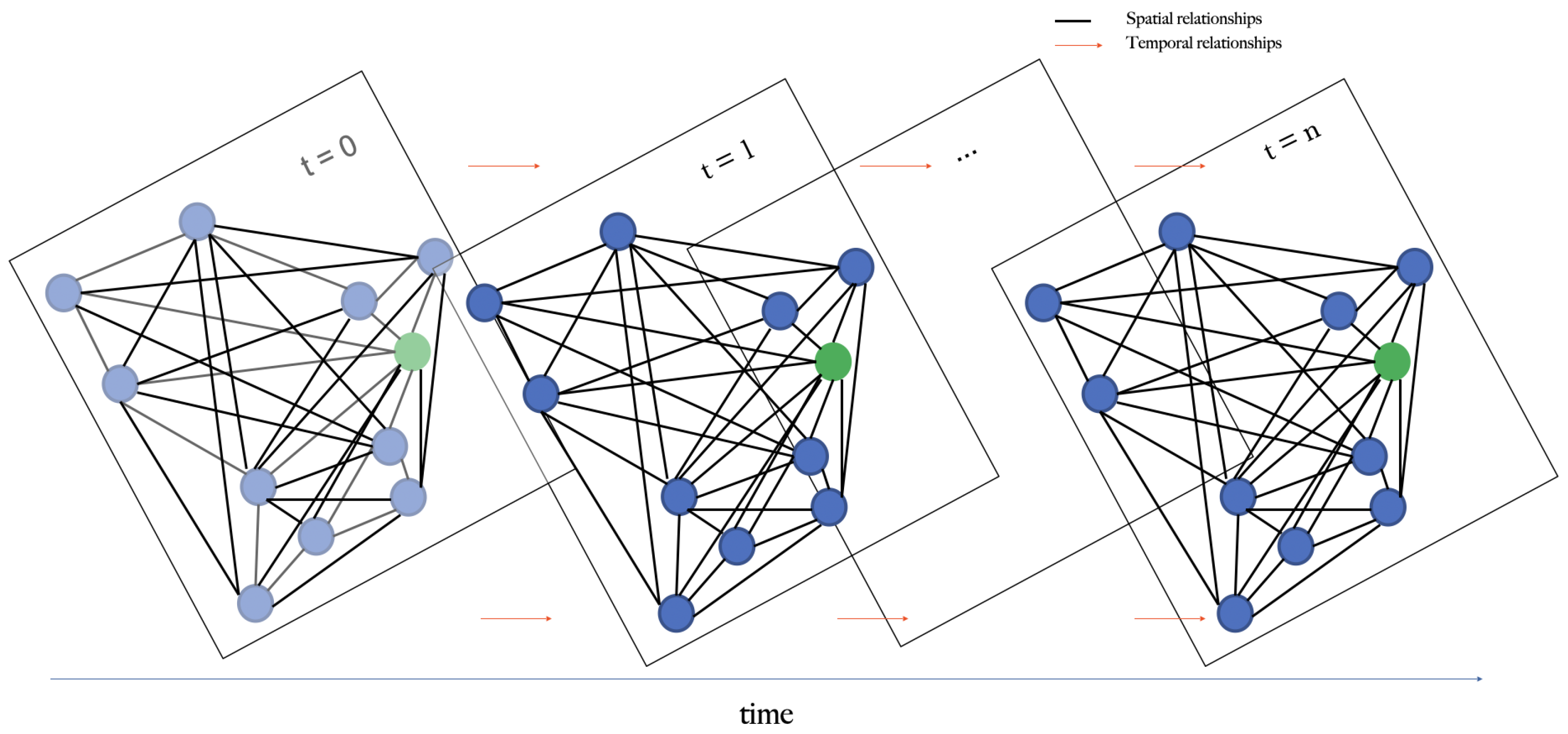

To forecast local weather conditions at the orchard using the aforementioned sensor data, we predict at different time intervals into the future (6, 12, 24, and 48 h). At a single time step, the geographical locations of the nodes are graphed as shown in Figure 1, where the blue dots represent the meteorological sites and the green dot depicts the experimental site. For multiple time steps, the graph is expanded into a spatial-temporal graph where the feature values for a given node are related to its previous and future values and to its spatial neighbors. A schematic view of this idea is shown in Figure 4.

3.2.1. Feature Engineering

We represent the input data as a tensor $X \in \mathbb{R}^{T \times S \times F}$, where T, S, and F denote the temporal, spatial, and feature dimensions. In particular, for each spatio-temporal pair composed of a certain time horizon and location, a feature vector is built based on the measurements collected in that pair. There are a total of $T \times S$ spatio-temporal pairs, each of which has a feature vector of F dimensions. Based on [7], the feature vector is composed of two parts: measurement features and graph features. For the measurements, we include the raw temperature and humidity data and their mean, standard deviation, median, maximum, and minimum values for a specific time horizon. For the graph-related features, we include several metrics obtained from the graph neural network processing described in the following section.
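The measurement part of the feature vector can be sketched as follows for one spatio-temporal pair. The ordering of the entries, the use of the latest reading as the raw value, and the population standard deviation are assumptions made for the example; the graph-related features are not included.

```python
from statistics import mean, median, pstdev
from typing import List


def window_features(values: List[float]) -> List[float]:
    """Summarize one variable over a time horizon: mean, std, median, max, min."""
    return [mean(values), pstdev(values), median(values), max(values), min(values)]


def measurement_vector(temperature: List[float], humidity: List[float]) -> List[float]:
    """Concatenate the latest raw readings with the summary statistics of both variables."""
    raw = [temperature[-1], humidity[-1]]
    return raw + window_features(temperature) + window_features(humidity)


# Hypothetical readings for one location over one time horizon.
features = measurement_vector([1.0, 2.0, 3.0], [80.0, 85.0, 90.0])
```

With this layout, each pair contributes 12 measurement entries: two raw readings plus five statistics for each of the two variables.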

3.2.2. Graph Construction at a Single Time Step

Given the tensor X, the geographical location (latitude, longitude) of the weather stations and experimental field is obtained. These data are used to calculate the geographical proximity, which in turn contributes to the construction of the adjacency matrix. Nodes are simply a subset of the tensor at time t. We use an aggregation function to reduce the edge updates to a single element. Therefore, for a single node, we summarize the interactions with other nodes.

The nodes in the graph are constructed to represent the input data by associating each node with its features and its location. In total, we have 11 nodes. Generally, we have two types of nodes: v is the node corresponding to the experimental field, and u is a node corresponding to a weather station. Each type of node has a corresponding feature vector.

The edges are constructed based on the geographical distance between nodes. We construct a weighted graph where the weight is inversely proportional to distance. Formally, in a weighted graph [6] that does not contain any cycle of negative weight, the distance between nodes u and v is defined as $d(u, v)$, which corresponds to the Euclidean distance between the locations of nodes u and v. Therefore, the weight of the edge between u and v is inversely proportional to $d(u, v)$.

In this study, all nodes are connected; thus, there are edges between all pairs of nodes. The feature vector of each edge is therefore a weighted measurement of the features of the nodes it connects, to be obtained by the graph neural network (GNN).
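A fully connected graph with inverse-distance weights can be sketched as follows. The proportionality constant of 1, the planar coordinates, and the function name are assumptions; the sketch also assumes that no two nodes share the same location, since the weight would otherwise be undefined.

```python
import math
from typing import List, Tuple


def inverse_distance_weights(locations: List[Tuple[float, float]]) -> List[List[float]]:
    """Fully connected weighted adjacency: w[u][v] = 1 / d(u, v), with 0 on the diagonal."""
    n = len(locations)
    weights = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            if u != v:
                # Euclidean distance between the two node locations.
                weights[u][v] = 1.0 / math.dist(locations[u], locations[v])
    return weights


# Hypothetical planar coordinates: the field at the origin and two stations.
locations = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
W = inverse_distance_weights(locations)
```

In this toy example the nearer station, at distance 5, receives twice the weight of the farther one, at distance 10.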

3.2.3. Spatial-Temporal Graph Construction

To represent the relationship between each node and its neighbors across time, inspired by [27], we connect all nodes with themselves sequentially for each time step. This allows us to create a spatial-temporal graph by sequentially connecting nodes from previous to current and future time steps, as shown in Figure 5. Therefore, it is possible to understand the relationships between nodes through time. In practice, we create a new adjacency matrix for the spatial-temporal graph. As illustrated in Figure 5, the diagonal of the new adjacency matrix represents the adjacency matrix for each single time step, together with the relation of the nodes through time.
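One possible construction of such a matrix is sketched below. It is an assumption, not the formulation used in this study: the single-step adjacency matrix is copied onto the diagonal blocks, and each node is linked to itself in the adjacent time step with a weight of 1, giving a matrix of size (n · steps) × (n · steps).

```python
from typing import List


def spatio_temporal_adjacency(adjacency: List[List[float]], steps: int) -> List[List[float]]:
    """Stack `steps` copies of an n x n adjacency into an (n*steps) x (n*steps) matrix."""
    n = len(adjacency)
    size = n * steps
    big = [[0.0] * size for _ in range(size)]
    for s in range(steps):
        offset = s * n
        # Diagonal block: the spatial graph at time step s.
        for i in range(n):
            for j in range(n):
                big[offset + i][offset + j] = adjacency[i][j]
        # Off-diagonal links: each node to itself in the next time step.
        if s + 1 < steps:
            for i in range(n):
                big[offset + i][offset + n + i] = 1.0
                big[offset + n + i][offset + i] = 1.0
    return big


# Hypothetical two-node graph unrolled over three time steps.
A_single = [[0.0, 1.0], [1.0, 0.0]]
A_st = spatio_temporal_adjacency(A_single, steps=3)
```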

For each time step, a GNN consumes the graph described in the previous section. The information about interactions between nodes is described in terms of the nodes involved, time t, location l, and measurement m. Based on this information, the edges are constructed. Features for nodes and edges are initialized accordingly. Then, we iteratively include the graphs constructed in subsequent time steps by updating the representation of nodes and edges based on the previous time step, weighted by the adjacency matrix. The edge feature between nodes u and v at time t is updated by a fully connected neural network with an activation function, which computes all the edge updates and takes into account the locations of nodes u and v on the graph. Then, for each time step, we update the node features of u and v. For each node, we sum all the edges that are connected to that node; this sum over the N nodes of the graph is combined with the node feature at time t and passed through a fully connected neural network.

Finally, we calculate the feature vector of the graph, which is the average of all the updates for every time step.

The output of the GNN consists of the updated feature values. We use these values to complete the graph part of the feature vector F in the tensor, as described in the previous section.
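The update scheme can be illustrated with a heavily simplified sketch in which features are scalars and each fully connected network is replaced by a fixed scaling followed by a tanh activation. Both simplifications, as well as the names and the toy values, are assumptions made for the example and do not reproduce the trained networks.

```python
import math
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def update_edges(
    edge_feat: Dict[Edge, float],
    node_feat: Dict[int, float],
    weights: List[List[float]],
    scale: float = 0.5,
) -> Dict[Edge, float]:
    """New edge feature from the old edge feature and its two endpoint nodes."""
    return {
        (u, v): math.tanh(scale * (e + weights[u][v] * (node_feat[u] + node_feat[v])))
        for (u, v), e in edge_feat.items()
    }


def update_nodes(
    edge_feat: Dict[Edge, float], node_feat: Dict[int, float], scale: float = 0.5
) -> Dict[int, float]:
    """New node feature from the old node feature plus the sum of its incident edges."""
    incident = {v: 0.0 for v in node_feat}
    for (u, v), e in edge_feat.items():
        incident[u] += e
        incident[v] += e
    return {v: math.tanh(scale * (h + incident[v])) for v, h in node_feat.items()}


# Hypothetical two-node graph with one edge.
node_feat = {0: 1.0, 1: -1.0}
edge_feat = {(0, 1): 0.0}
weights = [[0.0, 1.0], [1.0, 0.0]]

new_edges = update_edges(edge_feat, node_feat, weights)
new_nodes = update_nodes(new_edges, node_feat)
graph_feature = sum(new_nodes.values()) / len(new_nodes)  # average over node updates
```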

3.2.4. Spatio-Temporal Attention Mechanism

The idea of the attention network is to weigh the importance of spatial and temporal values from the current measurement for a specific node and time. We use the GAT model [6] to extract spatio-temporal similarity features. The idea is to update the embedding information of each node using the aggregated data from its neighbors. Therefore, the weather stations and the IoT platform receive prior temperature and humidity data from nearby areas. Our hypothesis is that a prediction benefits from giving higher importance to meteorological sites in close proximity to the orchard than to those located further away, and from giving recent measurements higher priority than older ones. Given the feature tensor, we can query the temporal values to extract the time horizon and the spatial values to extract the location coordinates.

Temporal Attention

In the temporal dimension, there exist correlations between temperature and humidity values in different time steps. Since weather is highly dynamic, temporal correlations are variable under different conditions. Therefore, to have a mechanism for capturing those correlations, we use attention to adaptively obtain the importance of previous data in relation to current data.

Based on [7], given the feature tensor, the temporal attention layer multiplies the temperature and humidity values for a specific time by a weighted sum of the matrix representation of all temporal values. The weight for each time step is computed by a fully connected layer with an activation function and a weight vector, and a temporal penalty factor controls the importance of temporal attention. The output of the temporal attention layer is again a tensor.
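The general idea of weighting time steps can be illustrated with a generic attention sketch. It swaps in dot-product scoring against a query vector and a softmax normalization in place of the fully connected scoring layer, and it treats the penalty factor as a multiplier on the scores; these choices, like all the names, are assumptions for the example.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(
    series: List[List[float]], query: List[float], penalty: float = 1.0
) -> List[float]:
    """Weight each time step by its similarity to `query` and return the weighted sum."""
    scores = [penalty * sum(q * x for q, x in zip(query, step)) for step in series]
    alphas = softmax(scores)
    width = len(series[0])
    return [sum(a * step[k] for a, step in zip(alphas, series)) for k in range(width)]


# Hypothetical (temperature, humidity) readings at two time steps.
series = [[1.0, 10.0], [3.0, 30.0]]
uniform = temporal_attention(series, query=[1.0, 1.0], penalty=0.0)
focused = temporal_attention(series, query=[1.0, 1.0], penalty=1.0)
```

Setting the penalty to zero removes the effect of attention and yields a plain average over time steps, whereas a larger penalty concentrates the weight on the time steps most similar to the query.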

Spatial Attention

In the spatial dimension, temperature and humidity values from different nodes have varying influences, and, due to the weather, the behavior of these influences is highly dynamic. In particular, we are interested in capturing correlations between the weather values of the nodes in the spatial dimension. Therefore, we want to explicitly capture the relationships between close and distant nodes.

Given the output of the temporal attention layer, the spatial attention mechanism is applied. A fully connected spatial network computes the weight for each spatial step, and a spatial penalty factor controls the importance of spatial attention. The result is the output of both self-attention layers, which is reshaped into a tensor with the same order as the input tensor.

3.2.5. Convolution Process

Several studies have stated the benefits of applying convolutional neural networks to feature tensors based on GNN modeling [29,30,31]. In our case, we used a 3D convolutional network with the output tensor of the attention mechanism as input. The idea is to extract hidden patterns from spatio-temporal features by stacking multiple layers in the architecture.

In each layer, the 3D convolution applies a 3D kernel to the features of the previous layer. The input to the first layer is the output of our attention mechanism, and the three dimensions of the kernel correspond to the temporal, spatial, and feature components.

Finally, the output feature of each layer is obtained by adding a bias parameter and applying the sigmoid function.
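A single-channel 3D convolution followed by a bias and a sigmoid can be sketched in plain Python as follows. The absence of padding, the stride of 1, the single kernel, and the toy tensor are assumptions; an actual implementation would use a framework layer with several kernels per layer.

```python
import math
from typing import List

Tensor3D = List[List[List[float]]]


def conv3d_sigmoid(x: Tensor3D, kernel: Tensor3D, bias: float = 0.0) -> Tensor3D:
    """Valid (no padding, stride 1) 3D cross-correlation followed by a sigmoid."""
    T, S, F = len(x), len(x[0]), len(x[0][0])
    kt, ks, kf = len(kernel), len(kernel[0]), len(kernel[0][0])
    out: Tensor3D = []
    for t in range(T - kt + 1):
        plane = []
        for s in range(S - ks + 1):
            row = []
            for f in range(F - kf + 1):
                acc = bias
                # Slide the kernel over the temporal, spatial, and feature axes.
                for a in range(kt):
                    for b in range(ks):
                        for c in range(kf):
                            acc += kernel[a][b][c] * x[t + a][s + b][f + c]
                row.append(1.0 / (1.0 + math.exp(-acc)))
            plane.append(row)
        out.append(plane)
    return out


# Hypothetical 3 x 2 x 2 tensor of ones and a 2 x 2 x 2 kernel of ones.
x = [[[1.0] * 2 for _ in range(2)] for _ in range(3)]
kernel = [[[1.0] * 2 for _ in range(2)] for _ in range(2)]
y = conv3d_sigmoid(x, kernel, bias=-8.0)
```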

3.2.6. GNN Forecasting

In the last part of the forecasting model, we apply a recurrent neural network to capture the sequential aspect of the problem and produce forecasts based on historical data. Given the feature-learned tensor, we use a sequence-to-sequence (seq2seq) model [32] over each node. Thus, we extract the transformed series from the experimental field and the weather stations. The reason for using seq2seq is that, in a graph structure, we can perform recurrent graph convolution to handle all series simultaneously [8]. In practice, for each series, we used a window of past time values to train the model and to forecast the weather a given number of hours into the future. Specifically, for each time t, the seq2seq model takes the values of all series and updates its internal hidden state. The encoder recurrently consumes the training data, producing its final hidden state as a summary. The decoder takes that summary as input and continues the recurrence to cover the testing data in the forecasting phase.

Finally, we use two loss functions, one for classification and one for regression. The classification loss is computed over the N samples in the series; each sample contributes its real label and the score produced by the forecast, weighted by a sample weight that reflects the distribution of Frost/No Frost.

The regression loss function uses the mean squared error, $\frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2$, where $y_i$ is the observed value and $\hat{y}_i$ is the forecast.
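The two losses can be sketched as follows. The mean squared error follows its standard definition; the classification loss is written here as a sample-weighted binary cross-entropy, which is an assumption about its exact form, as are the function names and the clipping constant.

```python
import math
from typing import List


def weighted_bce(
    y_true: List[int], y_score: List[float], weights: List[float], eps: float = 1e-12
) -> float:
    """Sample-weighted binary cross-entropy averaged over the N samples."""
    total = 0.0
    for y, p, w in zip(y_true, y_score, weights):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return -total / len(y_true)


def mse(y_true: List[float], y_pred: List[float]) -> float:
    """Mean squared error between observed and forecast values."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical usage: the rare Frost class (label 1) gets a larger weight.
classification_loss = weighted_bce([1, 0], [0.5, 0.5], [2.0, 1.0])
regression_loss = mse([1.0, 2.0], [1.0, 4.0])
```

Giving the Frost class a larger weight makes a missed frost cost more than a false alarm during training, which is one way to account for the class imbalance.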

4. Results

4.1. Baselines and Evaluation Metrics

We use our model to solve a regression problem, to predict the minimum temperature in all the nodes, and a classification problem, to predict two classes Frost and No Frost. In case of regression, we compare with the following forecasting methods:

Non-deep learning methods: historical average (HA), ARIMA with Kalman filter (ARIMA), vector auto-regression (VAR), and support vector regression (SVR). The historical average accounts for weekly seasonality and predicts for a day by using the weighted average of the same day in the past few weeks;

Deep learning methods that produce forecasting for each series separately (not graph-based) such as feed-forward neural network (FNN) and LSTM;

Autoencoder forecasting method with attention mechanism (AC-att);

Graph convolutional network applied to the given graph without spatio-temporal attention mechanism (GCN);

Variants of this architecture using convolutions [7] and GRU [26].

For classification, we do not use the non-deep learning methods described above; instead, we use support vector machines (SVM), naïve Bayes, and the tree-based classification algorithm XGBoost.

For regression, all methods are evaluated with three metrics: mean absolute error (MAE), root mean square error (RMSE), and mean absolute percentage error (MAPE). For classification, all methods are evaluated with precision, recall, and F1 metrics.
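For reference, the three regression metrics can be computed as in the sketch below. The function names and the sample values are illustrative. Skipping samples whose observed value is exactly zero in MAPE is an assumption made here, since the percentage error is undefined at zero and minimum temperatures near 0 °C are common in frost data.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error; samples with a true value of 0 are skipped (assumption)."""
    terms = [abs((t - p) / t) for t, p in zip(y_true, y_pred) if t != 0]
    if not terms:
        raise ValueError("MAPE is undefined when all observed values are zero")
    return 100.0 * sum(terms) / len(terms)


# Hypothetical observed and predicted minimum temperatures in °C.
observed = [2.0, -1.0, 4.0]
predicted = [1.0, -2.0, 6.0]
scores = {
    "MAE": mae(observed, predicted),
    "RMSE": rmse(observed, predicted),
    "MAPE": mape(observed, predicted),
}
```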

4.2. Hyperparameters

Several hyperparameters are tuned through grid search: the initial learning rate, the dropout rate, the embedding size of the LSTM layer, the k value in kNN, and the regularization weight. Among the other hyperparameters, the convolution kernel size in the feature extractor is 10, and the learning rate decays by a fixed ratio. The initial learning rate used for our dataset is the best value found by the search. The optimizer is Adam.
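The grid search procedure can be sketched generically as below. The search space and the toy objective are placeholders introduced only for illustration: they are not the ranges or the validation error used in this study. In a real run, the `evaluate` callback would train the model with the given configuration and return its validation error.

```python
from itertools import product


def grid_search(grid, evaluate):
    """Return (best_config, best_score), minimizing evaluate(config) over the grid."""
    names = list(grid)
    best_config, best_score = None, float("inf")
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Placeholder search space: these values are NOT the ones used in the paper.
example_grid = {
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "dropout": [0.0, 0.5],
    "knn_k": [3, 5],
}


def toy_validation_error(config):
    # Stand-in for "train the model with this configuration and return the validation error".
    return abs(config["learning_rate"] - 1e-3) + config["dropout"] + abs(config["knn_k"] - 5)


best_config, best_score = grid_search(example_grid, toy_validation_error)
```

The number of evaluations grows as the product of the list lengths (here 3 × 2 × 2 = 12), which is why grid search is usually restricted to a few values per hyperparameter.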

All models are implemented in PyTorch and run on the Google Colaboratory platform.

4.3. Results for Regression Problem

Table 1 and Figure 6 show the evaluation of the proposed GSTA-RCN model compared with that of the baselines. The tasks are to forecast the minimum temperature of the experimental field 6 h, 12 h, 24 h, and 48 h in advance.

The proposed model outperforms all the compared baselines for frost forecast in 6 h, 12 h, 24 h and 48 h tasks. A nongraph model such as an autoencoder with an attention mechanism outperforms GNN with spatio-temporal attention using convolution and GRU. To improve these results, it is necessary to collect more weather data and weather variables and to use more weather stations for modeling geographical and temporal interactions.

In general terms, the results fall into two groups: non-deep learning models and FNN on one side, and LSTM, autoencoder, and graph-based neural network architectures on the other. Non-deep learning models (HA, ARIMA) and the simple deep learning architecture FNN achieve error scores similar to those of the other architectures only on the 6 h task. For longer time windows, their performance degrades drastically. Next, we compare the results of LSTM with those of STA-C, the variant of the spatio-temporal GNN with attention mechanism that uses a convolution-based forecasting method. For this dataset, the complexity added by STA-C does not improve the performance of the model, and a simple LSTM is preferable, especially for 6 h, 12 h, and 24 h predictions. As mentioned previously, the autoencoder architecture with attention mechanism performs better than STA-C and similarly to STA-GRU for the 6 h, 12 h, and 24 h tasks. Finally, our model outperforms all the previous baselines. In our case, the added modeling complexity improves the prediction performance for each time-window task.

Figure 7 shows the models' results in terms of RMSE for the experimental field node and its three geographically closest neighbors. Focusing on the prediction for each node, rather than on the average over all nodes, leads to the same conclusions. In addition, the Pearson correlation statistic is calculated to compare the model predictions across time windows. The performance of all models decreases as the time window increases, but the size of the decrease varies between nodes. Therefore, the behavior of the models with respect to the time window differs from node to node, a result that merits further study.
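As a reminder of how the Pearson statistic is obtained, the sketch below computes the correlation coefficient between two series, for example the observed and predicted temperatures at one node for a given time window. The function name and the sample values are illustrative, and the exact pairing of series used in the study is an assumption.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical observed and predicted minimum temperatures in °C at one node.
observed = [1.0, -0.5, -2.0, 0.5, 3.0]
predicted = [1.5, 0.0, -1.5, 1.0, 3.5]  # observed shifted by +0.5 °C
r = pearson_r(observed, predicted)
```

A constant offset between prediction and observation leaves the coefficient at 1, so the statistic captures whether the model follows the temperature trend rather than the size of the error; it complements RMSE instead of replacing it.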

In addition, Figure 8 and Figure 9 show real and predicted data for a specific day. In Figure 9 we present the variation of our model's prediction across the 6 h, 12 h, 24 h, and 48 h tasks. Our model performs best in the 6 h task, and its performance then decreases gradually. Although the performance decreases for larger time windows, our model is still capable of detecting the temperature trend. The error interval widens when the prediction is made point by point, but when the observed values fall within that interval, the prediction can be considered reliable.

Figure 8 shows our model's prediction and the second-best prediction (STA-GRU) in the 6 h task. Both models detect the temperature trend, but the error range of our model is smaller.

4.4. Results for Classification Problem

Table 2 and Figure 10 show the evaluation of the proposed GSTA-RCN model compared with the baselines as a classification problem. In this problem, two classes are predicted: Frost (temperature below 0 °C) and No Frost (temperature above 0 °C).

In this case, our model also outperforms all the baselines for the 6 h, 12 h, 24 h, and 48 h tasks. In addition, as in regression, the performance of the models decreases as the time window increases.

For this dataset, the naïve Bayes and SVM models provide the worst predictions, especially in terms of recall, which implies a high number of false-negative predictions. In contrast with regression, in classification FNN and LSTM perform similarly. The autoencoder with attention mechanism is slightly better than these two models but performs worse than all the graph-based models and even the XGBoost model.
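The link between recall and false negatives can be made concrete with a short sketch that derives the Frost/No Frost labels from temperatures and computes precision, recall, and F1 for the Frost class. The function names and the sample temperatures are illustrative, and treating exactly 0 °C as No Frost is an assumption, since the class definition does not cover that boundary case.

```python
def frost_label(min_temp_c):
    """1 = Frost (below 0 °C), 0 = No Frost; exactly 0 °C counts as No Frost (assumption)."""
    return 1 if min_temp_c < 0 else 0


def precision_recall_f1(y_true, y_pred):
    """Precision, recall, and F1 for the positive (Frost) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical observed and predicted minimum temperatures in °C.
observed_temps = [-2.0, -0.5, 1.0, 3.0, -1.0]
predicted_temps = [-1.0, 0.5, 2.0, -0.2, 0.3]
y_true = [frost_label(t) for t in observed_temps]
y_pred = [frost_label(t) for t in predicted_temps]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

In this example two of the three real frost events are missed, so recall is low even though half of the frost alarms are correct. For a farmer, a missed frost (false negative) is typically the more costly error, which is why recall deserves particular attention.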

Figure 10 shows that, for classification in this dataset, the graph-based models are preferable. In this case, the spatio-temporal attention mechanism in the GNN, which captures the dynamics between the nodes, is a key factor in improving the predictions. In particular, the strategy used in our model, which combines the spatio-temporal vectors to perform the prediction, obtains the best results by minimizing classification errors, which could be valuable for real-world applications.

6. Conclusions

We presented our frost forecasting model that uses and optimizes a graph structure between multiple time series using a graph neural network (GNN) architecture with a recurrent graph convolution mechanism to process each series simultaneously. The model concludes with spatio-temporal attention to consider spatial relations and extract temporal dynamics.

Frost forecasting is an important area of climate research because of its economic impact on several industries. In this study, a GNN with spatio-temporal architecture was proposed to predict minimum temperatures at an experimental site. The model considers spatial and temporal relations and processes multiple time series simultaneously. For predictions 6, 12, 24, and 48 h ahead, this model outperforms statistical and nongraph deep learning models.

To further improve this model, we will continue our research by studying deep learning architectures that adapt specifically to different time-window forecasts. In addition, we aim to include domain knowledge from climate sciences that could help in the construction of the graph, so as to move from a statically defined graph to a dynamically defined one. Finally, by including domain knowledge or by applying new methods, we want to extract the influences of the nodes on each other in order to explain the dynamics of the graph and, as a consequence, to provide better practical insights to users of the system.