Development of an EEG Headband for Stress Measurement on Driving Simulators †

Abstract

1. Introduction

2. Materials and Methods

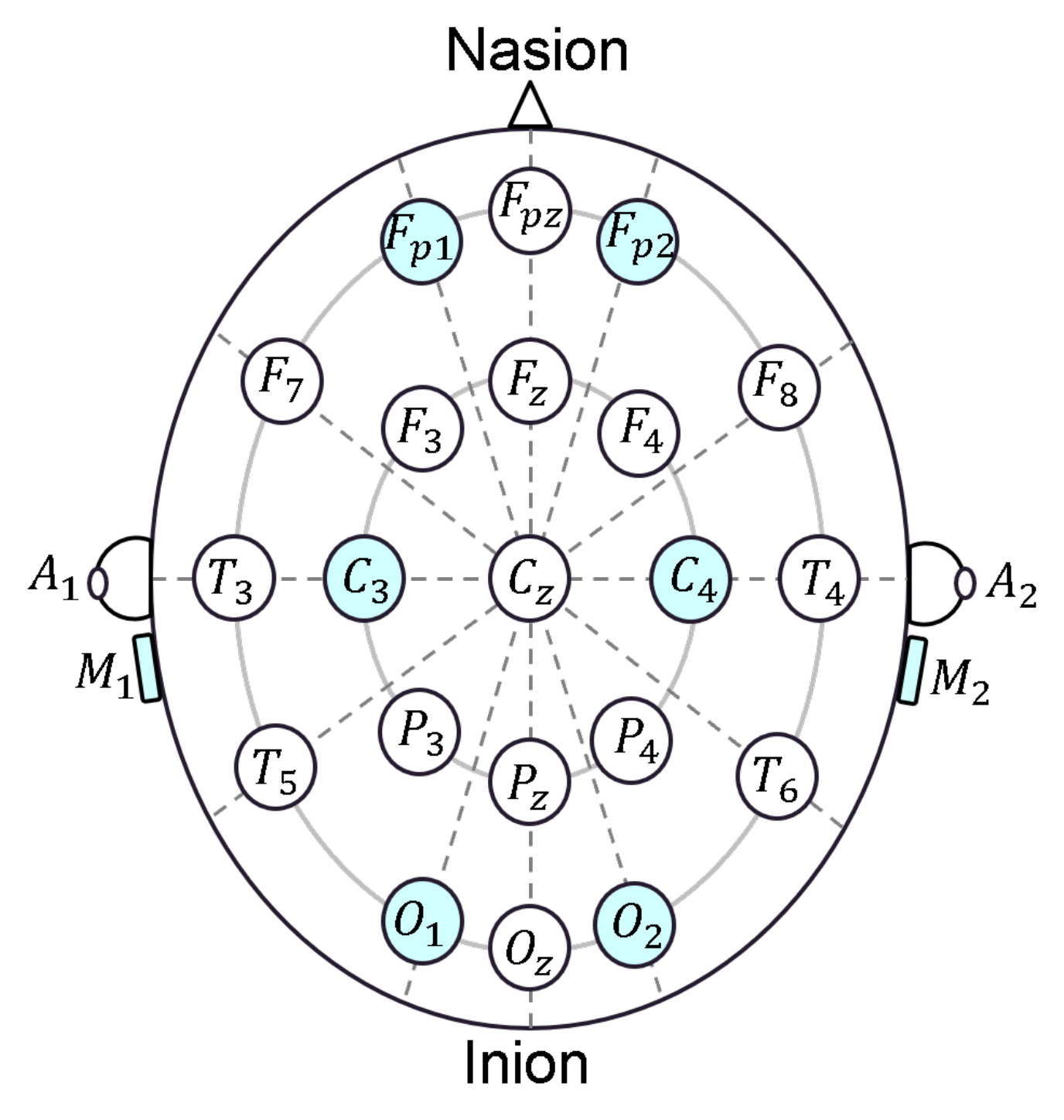

2.1. EEG Headband Design

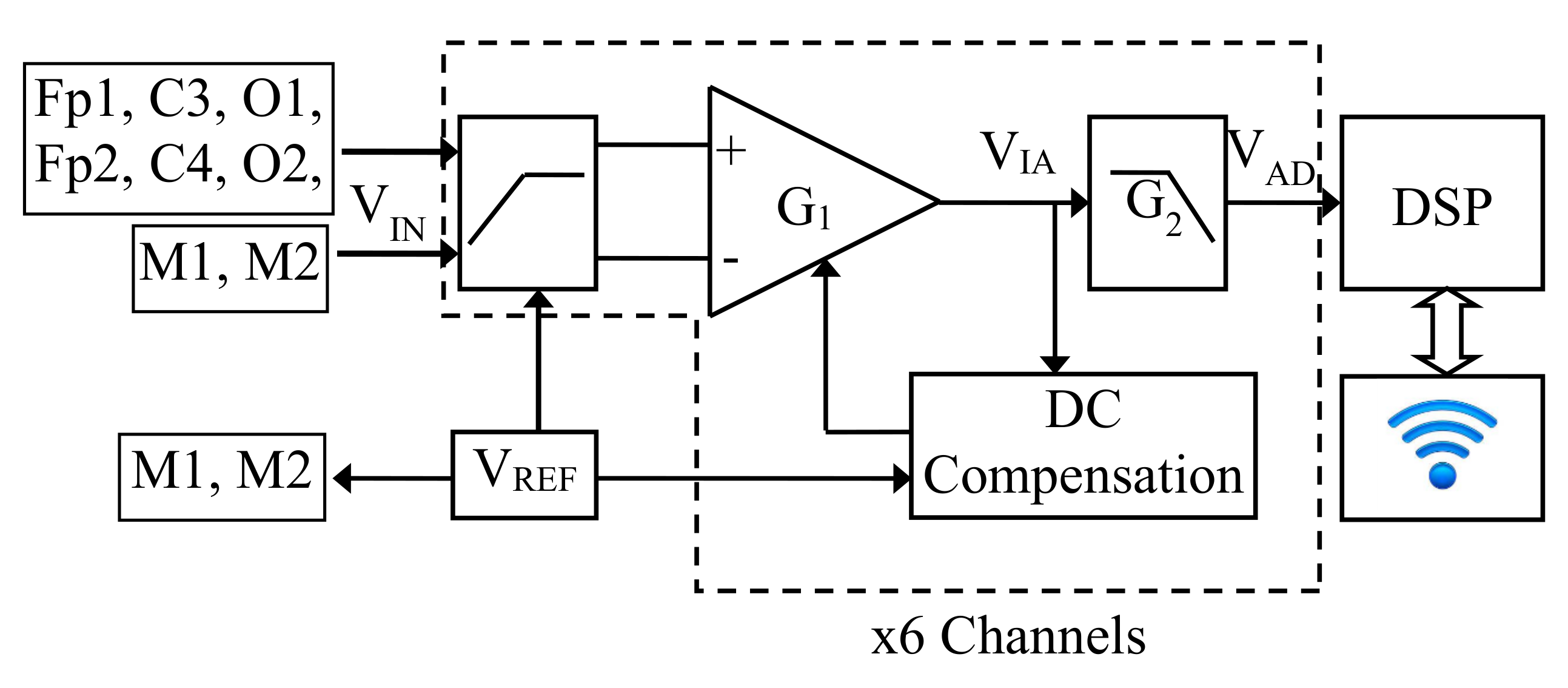

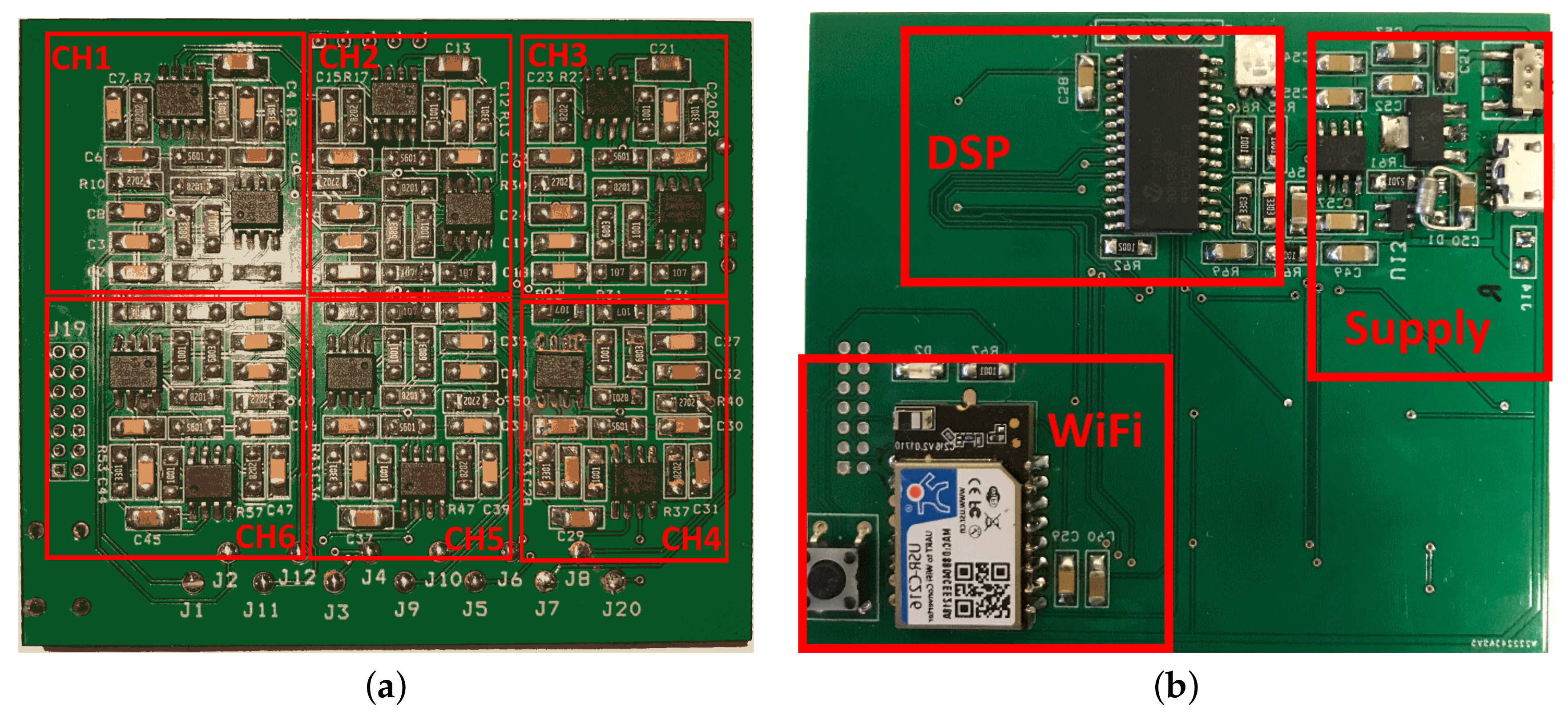

2.1.1. Analog Section

2.1.2. A/D Conversion, DSP, and Data Transmission

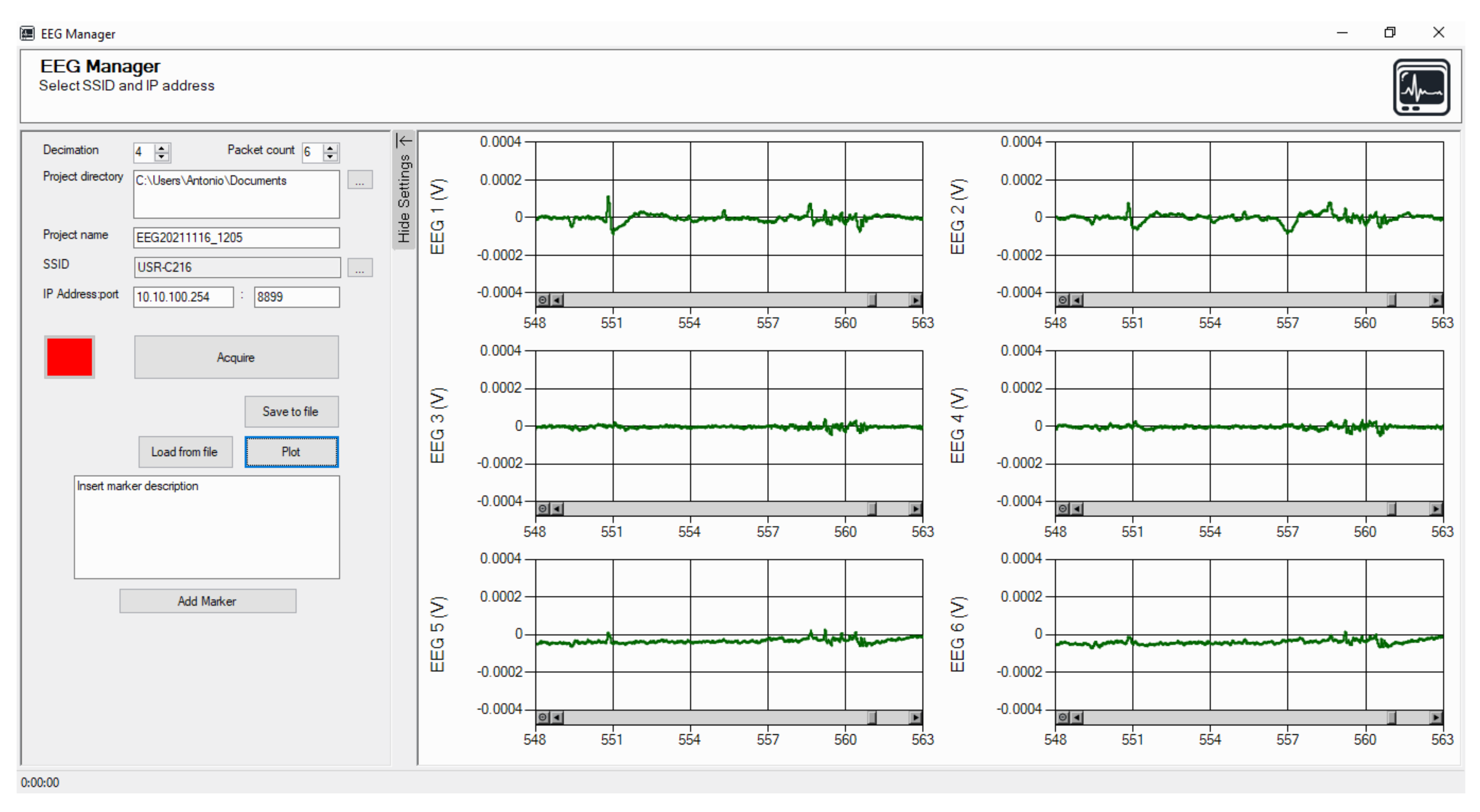

2.1.3. Software Description

2.2. Acquisitions of EEG Signals on a Driving Simulator

2.2.1. Data Acquisition

2.2.2. Data Pre-Processing

3. Experimental Results

3.1. Metrological Characterization

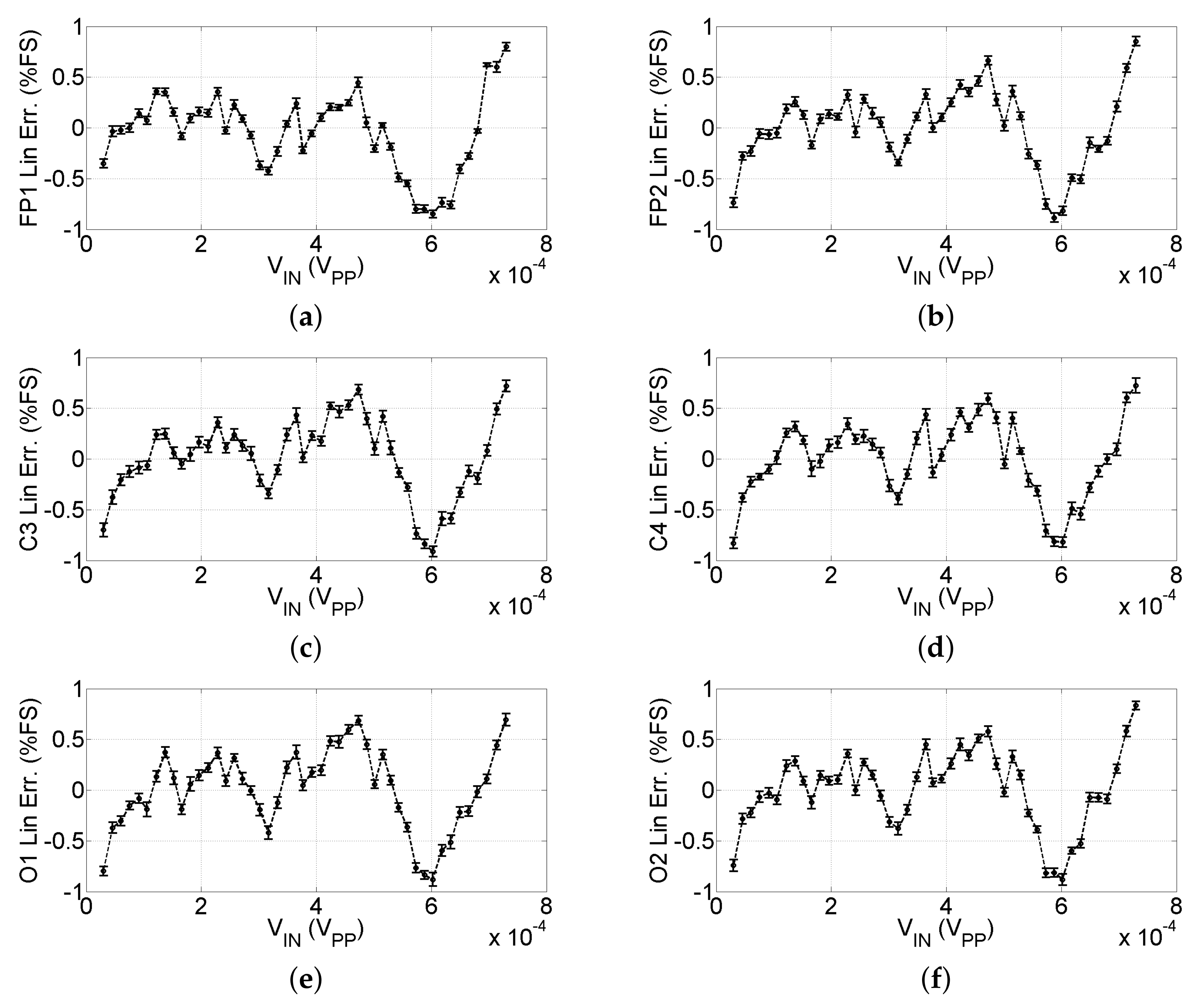

3.1.1. Linearity Characterization and Resolution

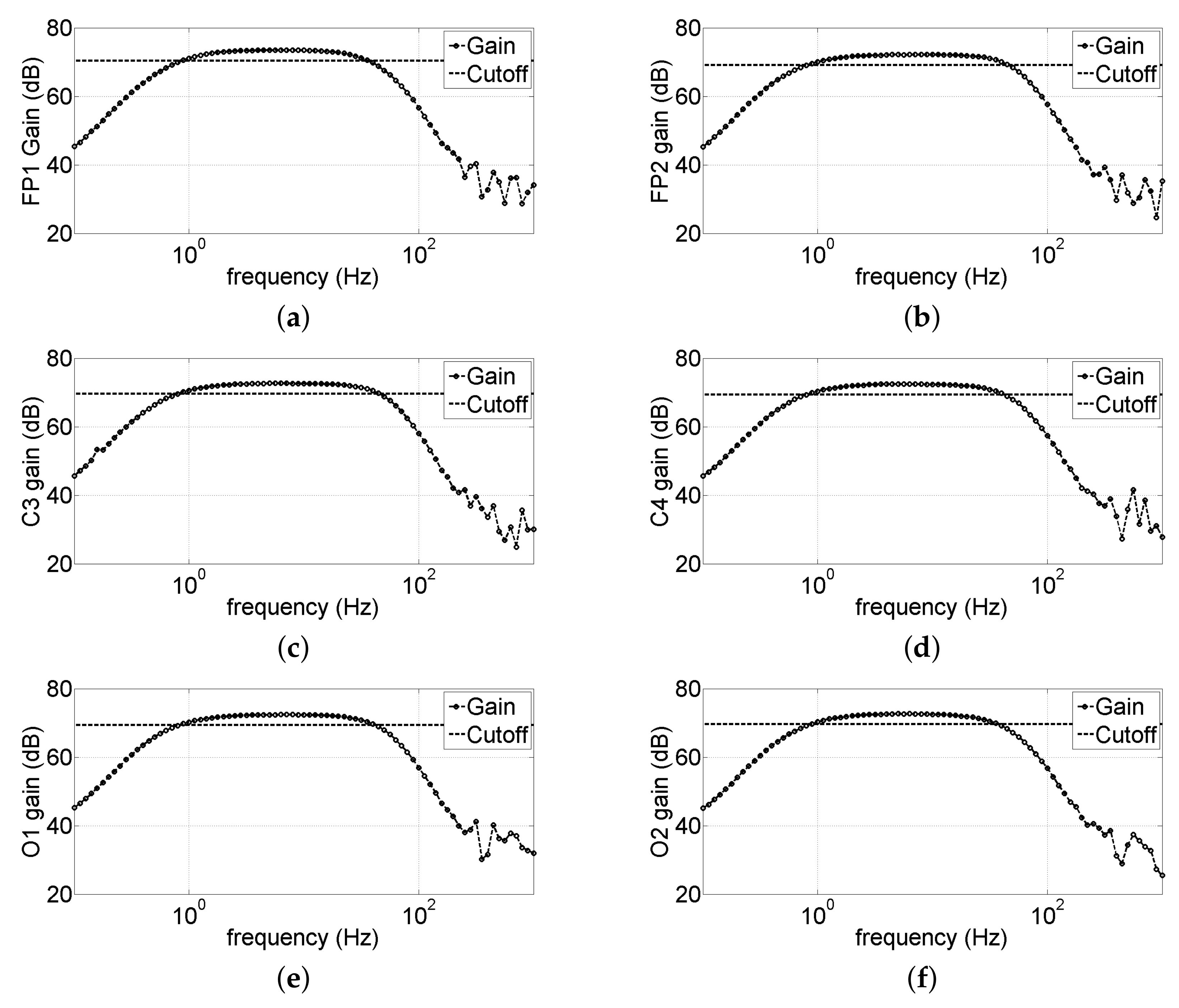

3.1.2. Analog Bandwidth Characterization

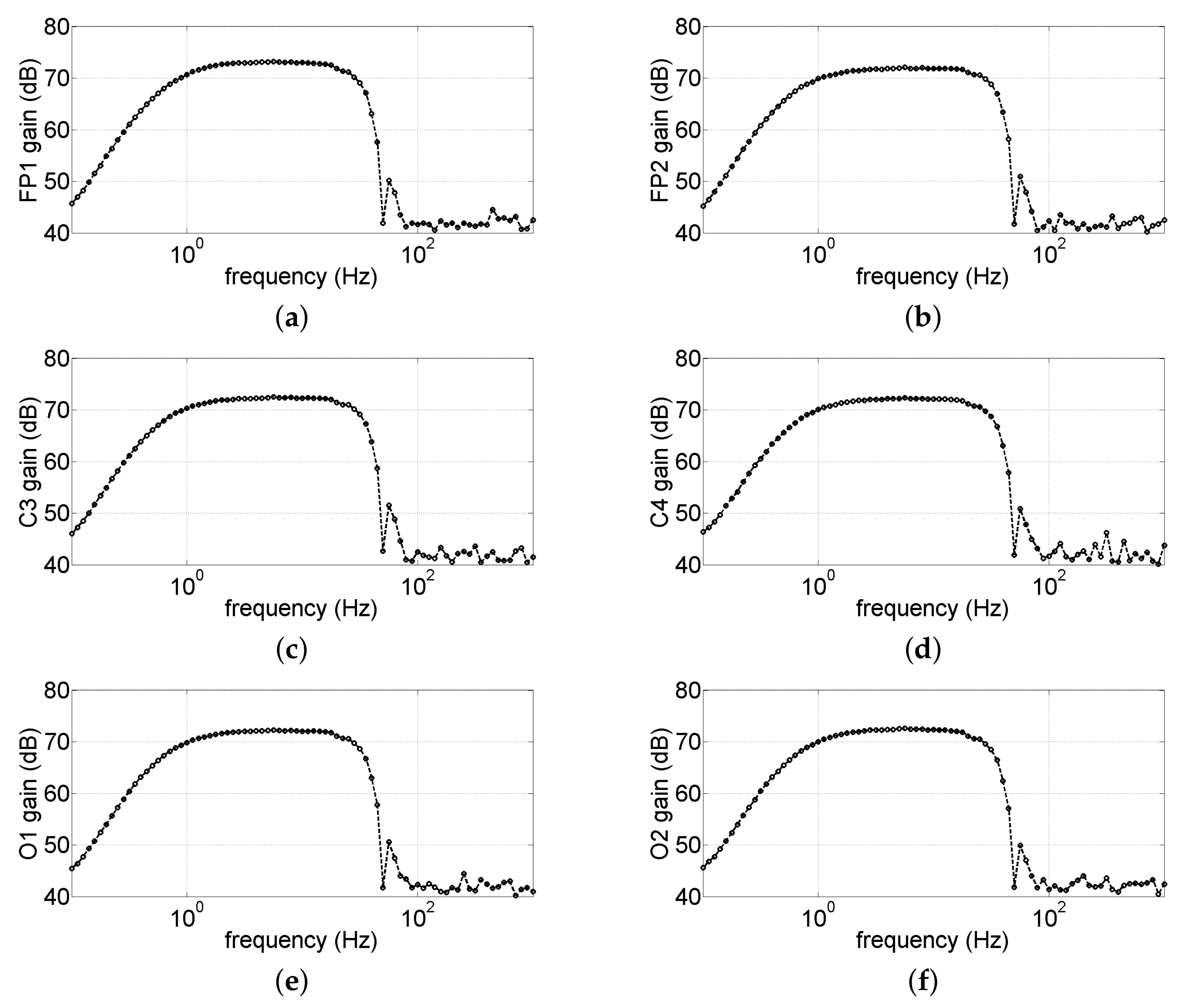

3.1.3. Overall Bandwidth Characterization

3.2. Results of EEG Signals on a Driving Simulator

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Wave Type | Frequency Range (Hz) | Description |
|---|---|---|
| Delta | 0.5–4 | Deep sleep |
| Theta | 4–8 | Sleep, meditation, concentration |
| Alpha | 8–12 | Relax, reflection. Decreasing amplitude with anxiety |
| Beta | 12–30 | Alert, focused. Increasing amplitude with stress, excitement, high mental activity |
| Gamma | >30 | Focus, sensory processing. Increasing amplitude with anxiety |
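As an illustration of how the band limits in the table above translate into a band-power figure, the following is a minimal sketch, not the authors' processing chain. It assumes a plain DFT periodogram, half-open band edges `[lo, hi)` so that shared limits such as 12 Hz are counted once, and a 200 Hz sample rate (the rate listed for the present device). The function name `band_power`, the constants, and the synthetic test signal are hypothetical.

```python
import cmath
import math

FS_HZ = 200.0  # assumed sample rate (value listed for the present device)

# Band limits taken from the table; gamma (>30 Hz) is omitted for brevity.
BANDS_HZ = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 12.0),
    "beta": (12.0, 30.0),
}


def band_power(samples, fs_hz, lo_hz, hi_hz):
    """Sum one-sided DFT power over bins with lo_hz <= f < hi_hz.

    If the samples are in microvolts, the result is in microvolts squared.
    """
    n = len(samples)
    power = 0.0
    for k in range(1, n // 2):  # skip DC, stay below Nyquist
        freq_hz = k * fs_hz / n
        if lo_hz <= freq_hz < hi_hz:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)
            )
            # A sinusoid of amplitude A centred on a bin contributes A^2 / 2.
            power += 2.0 * abs(coeff) ** 2 / n ** 2
    return power


# Hypothetical test signal: a 20 Hz (beta) tone of amplitude 2
# plus a 6 Hz (theta) tone of amplitude 1, two seconds long.
N_SAMPLES = 400
signal = [
    2.0 * math.sin(2 * math.pi * 20.0 * i / FS_HZ)
    + 1.0 * math.sin(2 * math.pi * 6.0 * i / FS_HZ)
    for i in range(N_SAMPLES)
]

powers = {
    name: band_power(signal, FS_HZ, lo, hi)
    for name, (lo, hi) in BANDS_HZ.items()
}
print(powers)
```

Both tones fall exactly on DFT bins here, so there is no spectral leakage. Real EEG would normally call for windowing and averaging (for example Welch's method), which this sketch leaves out.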

| Model | Channel # | Sample Rate (Hz) | Resolution (nV) | Linearity | Bit # | Battery (h) |
|---|---|---|---|---|---|---|
| Emotiv Epoc | 14 | 128 | 510 | N/A | 14 | 9 |
| Neurosky Mindwave | 1 | 512 | N/A | N/A | 12 | 8 |
| Interaxon Muse 2 | 4 | 220–500 | 488 | N/A | 12 | 5 |
| Present paper | 6 | 200 | 43 | | 14 | 10 |

Mean beta power for ADAS1 session ()

| Subject # | | | | | | |
|---|---|---|---|---|---|---|
| 1 | 2.33 | 1.99 | 3.27 | 3.97 | 3.34 | 4.68 |
| 2 | 4.63 | 5.99 | 6.69 | 7.12 | 7.66 | 6.90 |
| 3 | 2.39 | 1.68 | 3.54 | 2.90 | 4.37 | 5.05 |
| 4 | 2.91 | 3.60 | 4.83 | 3.58 | 3.53 | 4.25 |
| 5 | 2.39 | 1.95 | 5.34 | 5.35 | 7.40 | 6.46 |
| 6 | 1.66 | 1.51 | 4.18 | 2.72 | 3.10 | 4.63 |
| 7 | 2.12 | 1.53 | 6.42 | 8.48 | 3.98 | 5.24 |
| 8 | 2.33 | 2.08 | 3.92 | 3.26 | 3.14 | 4.10 |
| 9 | 2.04 | 1.27 | 3.39 | 3.17 | 7.11 | 9.03 |
| 10 | 1.20 | 4.37 | 5.59 | 3.92 | 3.99 | 4.08 |

Mean beta power for ADAS2 session ()

| Subject # | | | | | | |
|---|---|---|---|---|---|---|
| 1 | 2.56 | 2.73 | 4.67 | 3.91 | 9.06 | 6.63 |
| 2 | 4.86 | 5.81 | 5.40 | 6.22 | 7.67 | 9.77 |
| 3 | 3.21 | 3.05 | 5.71 | 4.85 | 3.91 | 4.86 |
| 4 | 4.90 | 4.24 | 8.55 | 4.73 | 5.19 | 5.55 |
| 5 | 2.45 | 2.05 | 6.30 | 5.42 | 8.70 | 6.31 |
| 6 | 1.93 | 1.61 | 4.17 | 3.67 | 4.37 | 7.10 |
| 7 | 7.14 | 5.65 | 10.68 | 15.81 | 6.45 | 6.05 |
| 8 | 3.75 | 3.86 | 6.24 | 3.62 | 3.64 | 17.19 |
| 9 | 2.54 | 1.58 | 4.17 | 3.28 | 10.83 | 12.94 |
| 10 | 1.93 | 5.94 | 5.34 | 5.95 | 7.07 | 6.37 |

Mean beta power for Manual session ()

| Subject # | | | | | | |
|---|---|---|---|---|---|---|
| 1 | 2.26 | 3.13 | 10.30 | 4.42 | 7.41 | 8.29 |
| 2 | 2.53 | 2.58 | 6.80 | 5.72 | 5.14 | 8.71 |
| 3 | 9.64 | 14.93 | 22.75 | 31.55 | 10.73 | 13.89 |
| 4 | 2.38 | 1.41 | 9.11 | 7.21 | 6.45 | 6.07 |
| 5 | 3.93 | 3.27 | 8.55 | 7.16 | 11.28 | 10.41 |
| 6 | 1.33 | 1.41 | 4.25 | 2.98 | 4.04 | 7.09 |
| 7 | 2.84 | 2.38 | 5.99 | 6.92 | 4.61 | 8.38 |
| 8 | 3.98 | 3.46 | 4.44 | 4.34 | 4.45 | 7.10 |
| 9 | 1.81 | 1.59 | 4.12 | 2.88 | 11.24 | 11.07 |
| 10 | 1.88 | 7.55 | 6.51 | 3.98 | 4.52 | 5.83 |

| Channel | Wilcoxon Test Probability: ADAS1 vs. ADAS2 | Wilcoxon Test Probability: ADAS1 vs. Manual | Wilcoxon Test Probability: ADAS2 vs. Manual |
|---|---|---|---|
| | 0.05 | 0.52 | 0.31 |
| | 0.16 | 0.47 | 0.57 |
| | 0.12 | 0.02 | 0.38 |
| | 0.21 | 0.18 | 0.68 |
| | 0.06 | 0.02 | 0.79 |
| | 0.02 | 0.003 | 0.34 |
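The probabilities above can be related to the beta-power tables with a short worked example. The source does not state which Wilcoxon variant was used, so the sketch below assumes the two-sided rank-sum (Mann-Whitney) form with a normal approximation and no continuity correction. It also assumes that the sixth data column of the ADAS1 and Manual tables corresponds to the sixth row of the Wilcoxon table. The function name `rank_sum_test` and the variable names are hypothetical.

```python
import math

# Sixth data column of the ADAS1 and Manual beta-power tables (subjects 1-10).
ADAS1_COL6 = [4.68, 6.90, 5.05, 4.25, 6.46, 4.63, 5.24, 4.10, 9.03, 4.08]
MANUAL_COL6 = [8.29, 8.71, 13.89, 6.07, 10.41, 7.09, 8.38, 7.10, 11.07, 5.83]


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test, normal approximation.

    Returns (U, p) where U counts the pairs with b > a (ties count 0.5).
    Assumption: no tie or continuity correction is applied.
    """
    u_stat = sum(
        1.0 if y > x else 0.5 if y == x else 0.0
        for x in a
        for y in b
    )
    n, m = len(a), len(b)
    mean_u = n * m / 2.0
    sd_u = math.sqrt(n * m * (n + m + 1) / 12.0)
    z = (u_stat - mean_u) / sd_u
    # erfc(|z| / sqrt(2)) equals the two-sided tail probability 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return u_stat, p_value


u_stat, p_value = rank_sum_test(ADAS1_COL6, MANUAL_COL6)
print(f"U = {u_stat:.1f}, p = {p_value:.4f}")
```

Under these assumptions the ADAS1 vs. Manual comparison for that column comes out well below 0.05, in line with the small probability in the last row of the table. An exact small-sample test or a paired signed-rank test would give a somewhat different figure.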


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Affanni, A.; Aminosharieh Najafi, T.; Guerci, S. Development of an EEG Headband for Stress Measurement on Driving Simulators. Sensors 2022, 22, 1785. https://doi.org/10.3390/s22051785
