A Novel Untethered Hand Wearable with Fine-Grained Cutaneous Haptic Feedback

Abstract

1. Introduction

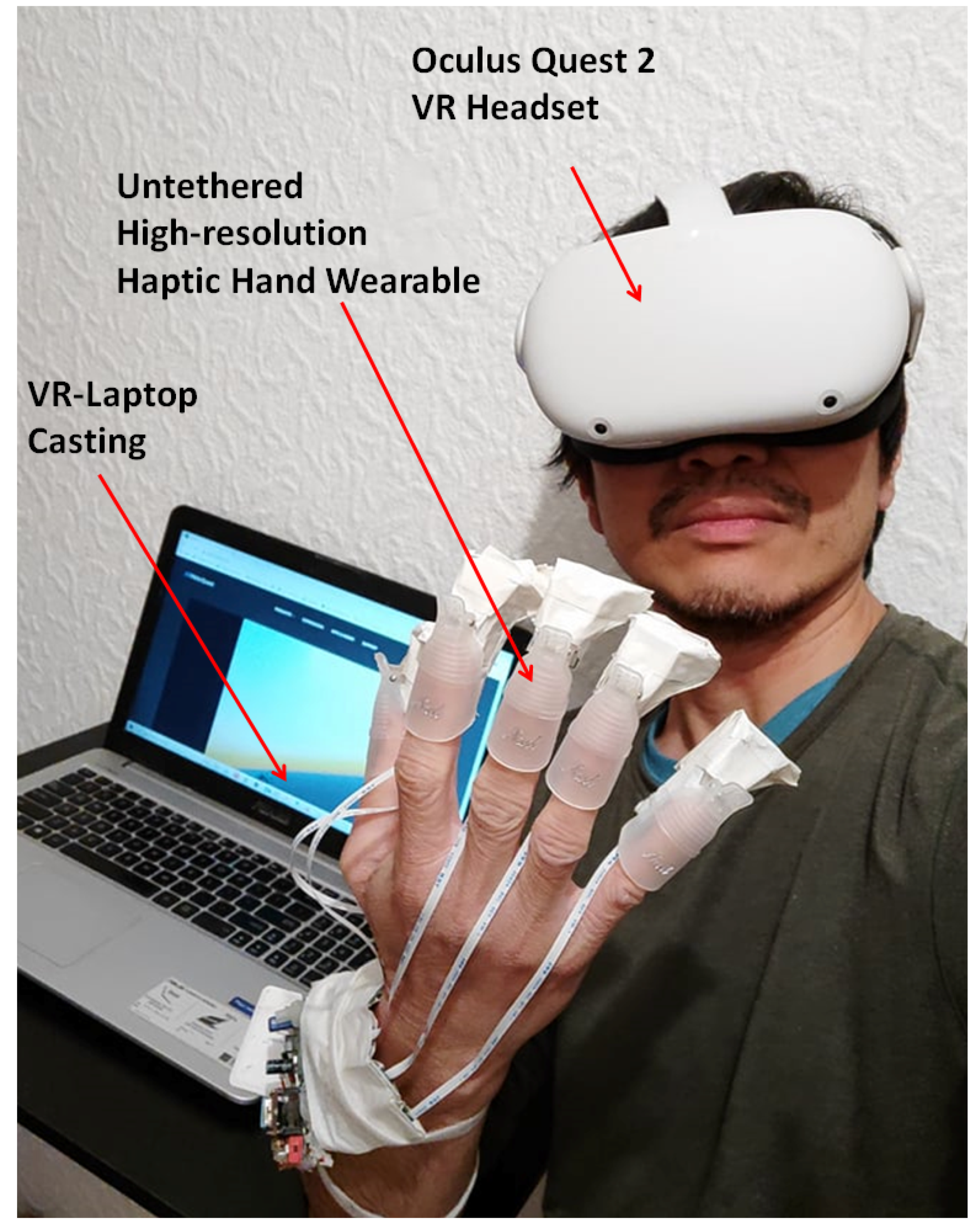

2. Overall Schematic Diagram

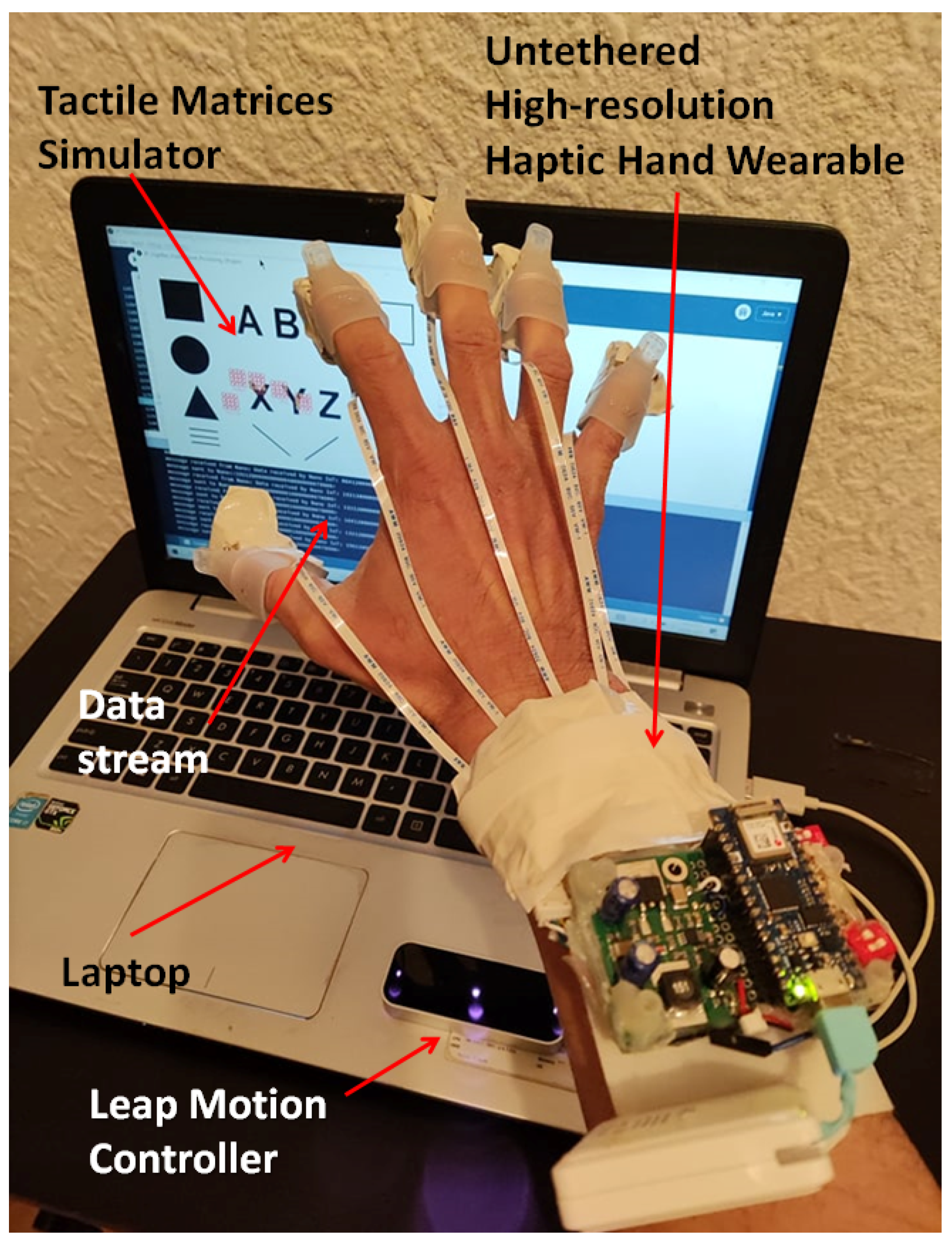

3. Construction of the Prototype

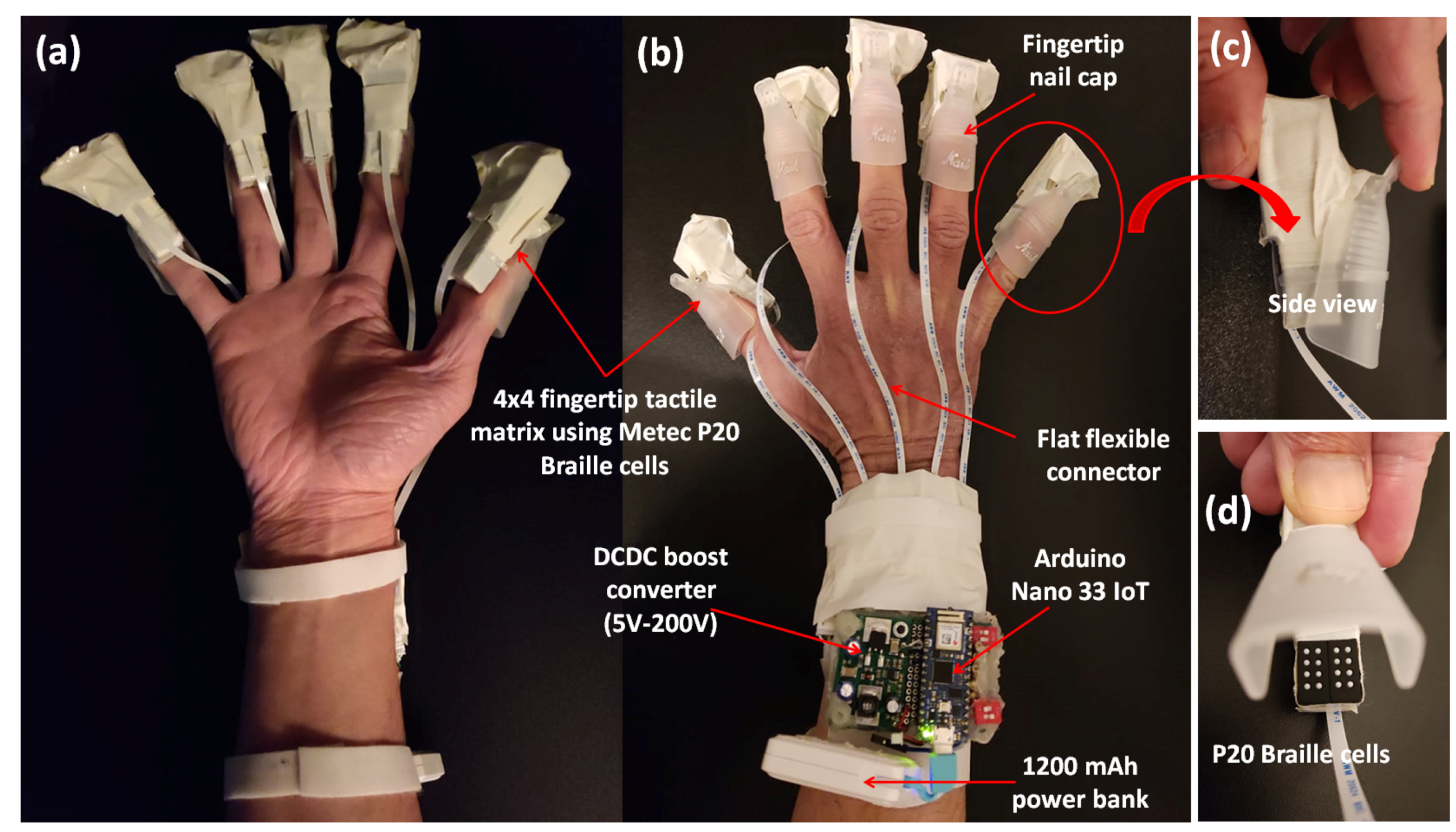

3.1. Hardware

3.1.1. Microcontroller

3.1.2. DC–DC Converter

3.1.3. Tactile Actuator

P20 Braille Cell

3.1.4. Shift Register

3.1.5. Leap Motion Controller for Hand Tracking

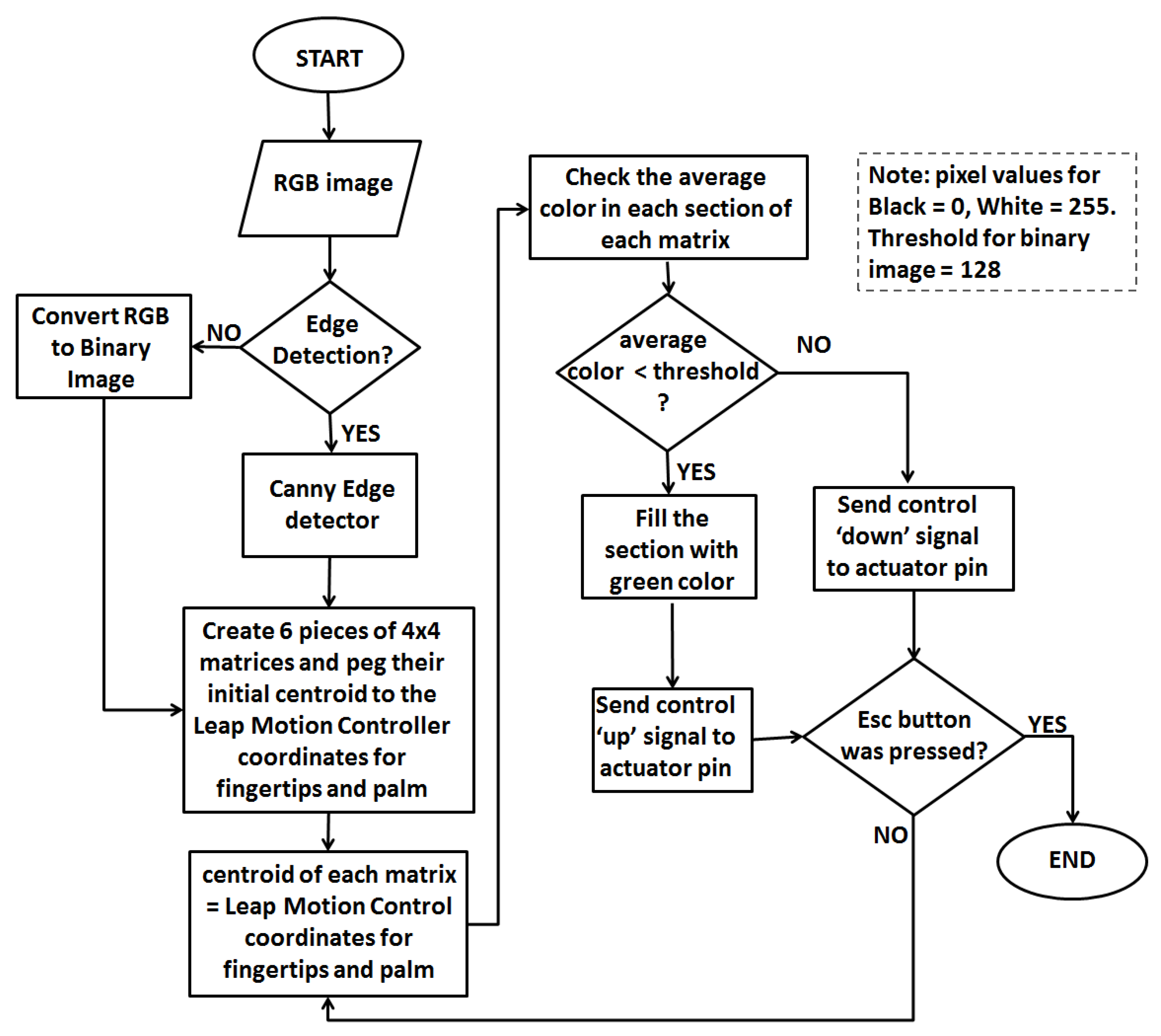

3.2. Software

3.2.1. Firmware

3.2.2. Tactile Matrices Simulator

4. Experimental Setup

Wireless Connection

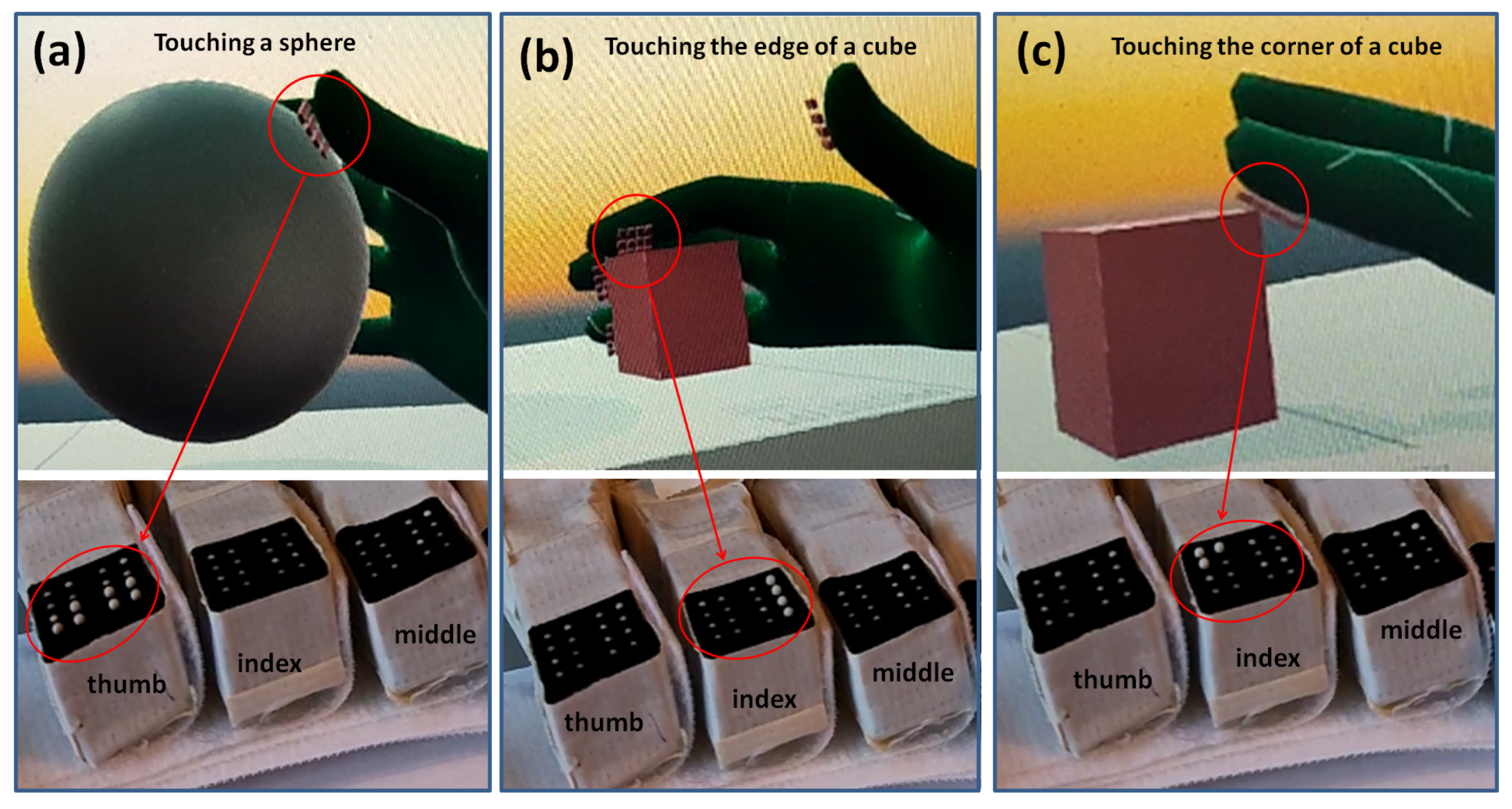

5. Application Example

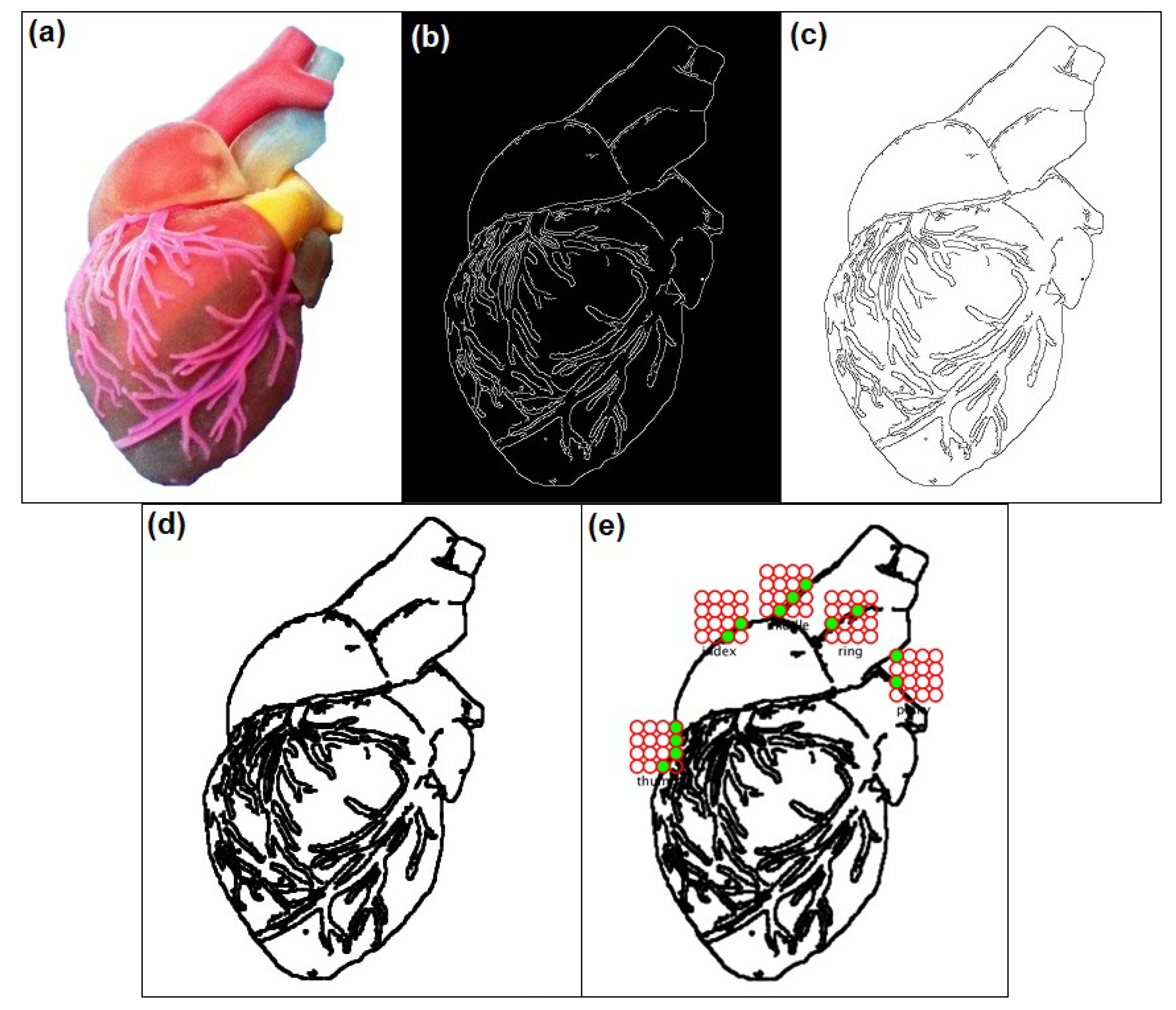

5.1. 2D Surface Scanning and Edge Detection

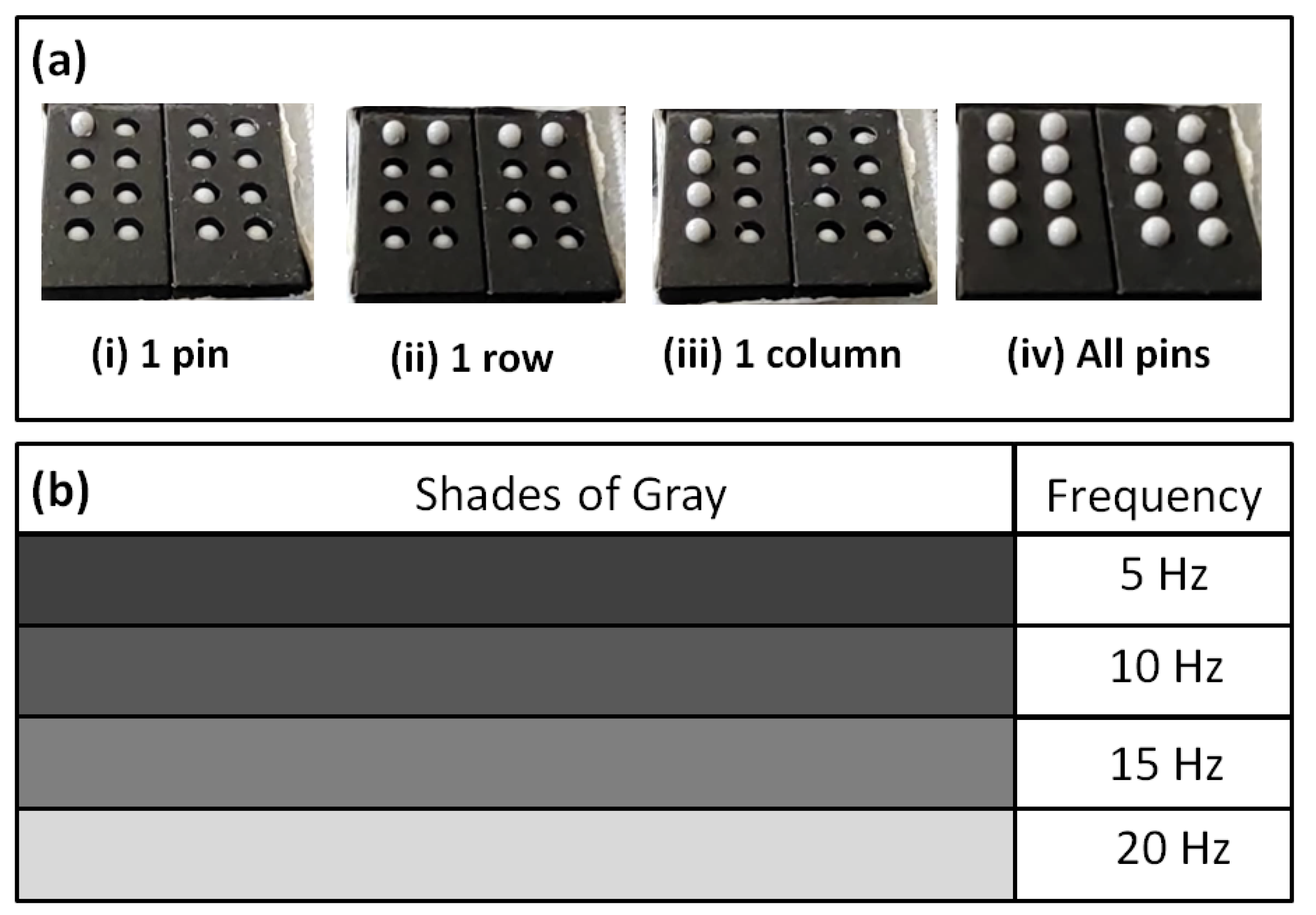

5.2. Tapping Vibration

5.3. 3D Surface Scanning and Edge Detection Using Oculus Quest2 VR Headset

5.4. Experiment Protocol

6. Experiments Involving Human Participants

6.1. Experiment Procedure

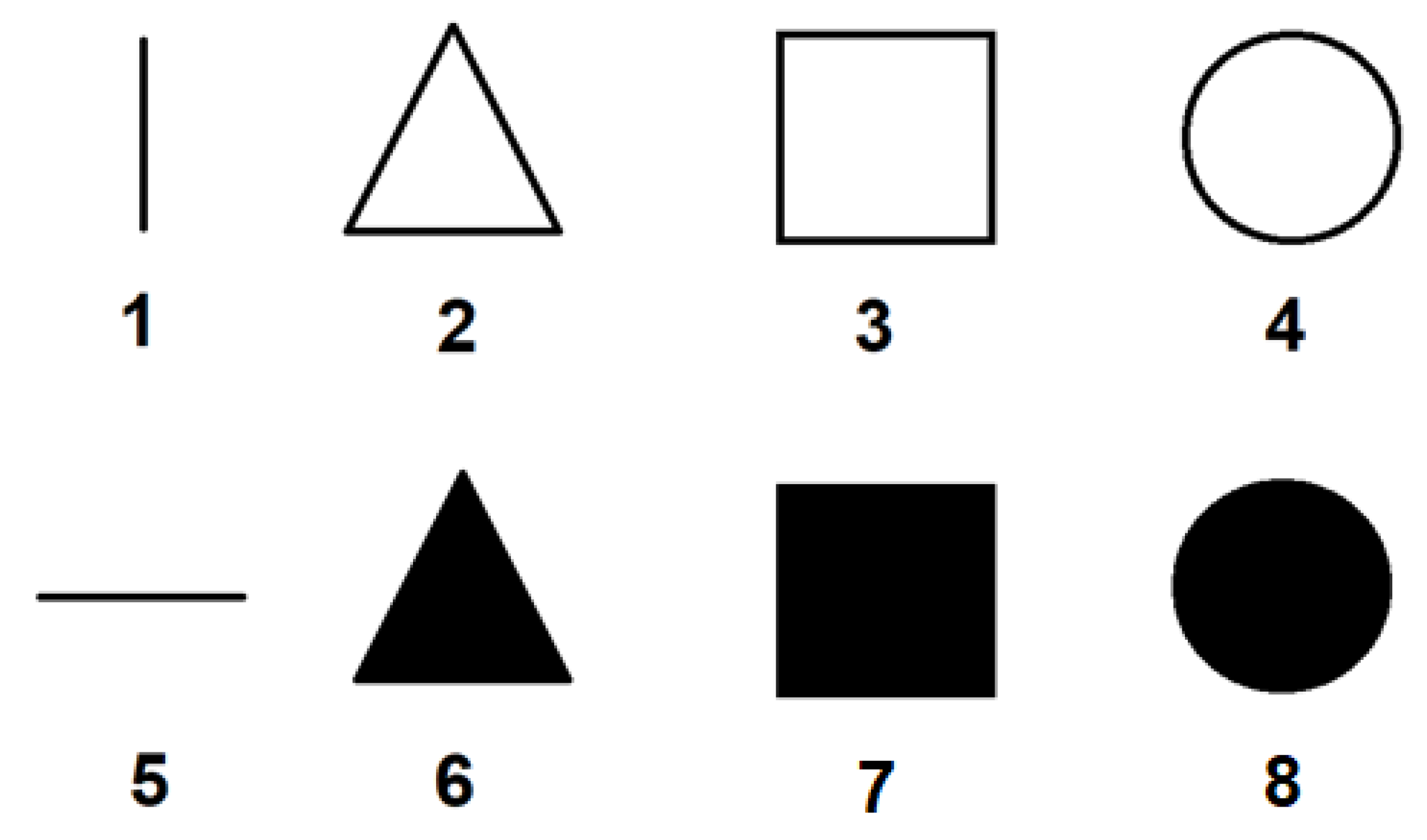

6.1.1. Experiment 1: Spatial Test

6.1.2. Experiment 2: Temporal Test

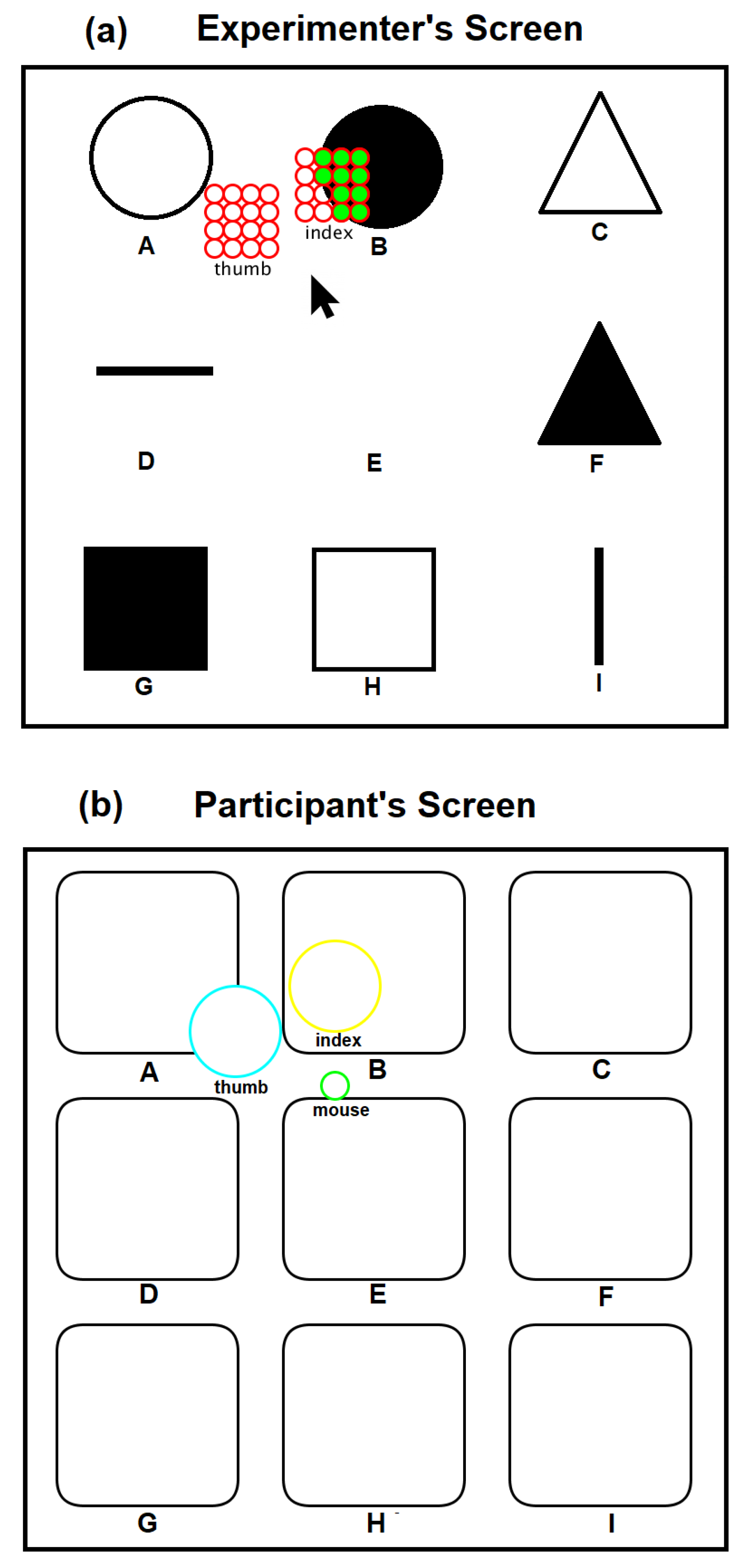

6.1.3. Experiment 3: 2D Scanning Test

6.2. Experiment Results and Discussion

6.2.1. Experiment 1: Spatial Training

6.2.2. Experiment 1: Spatial Test

6.2.3. Experiment 2: Temporal Training

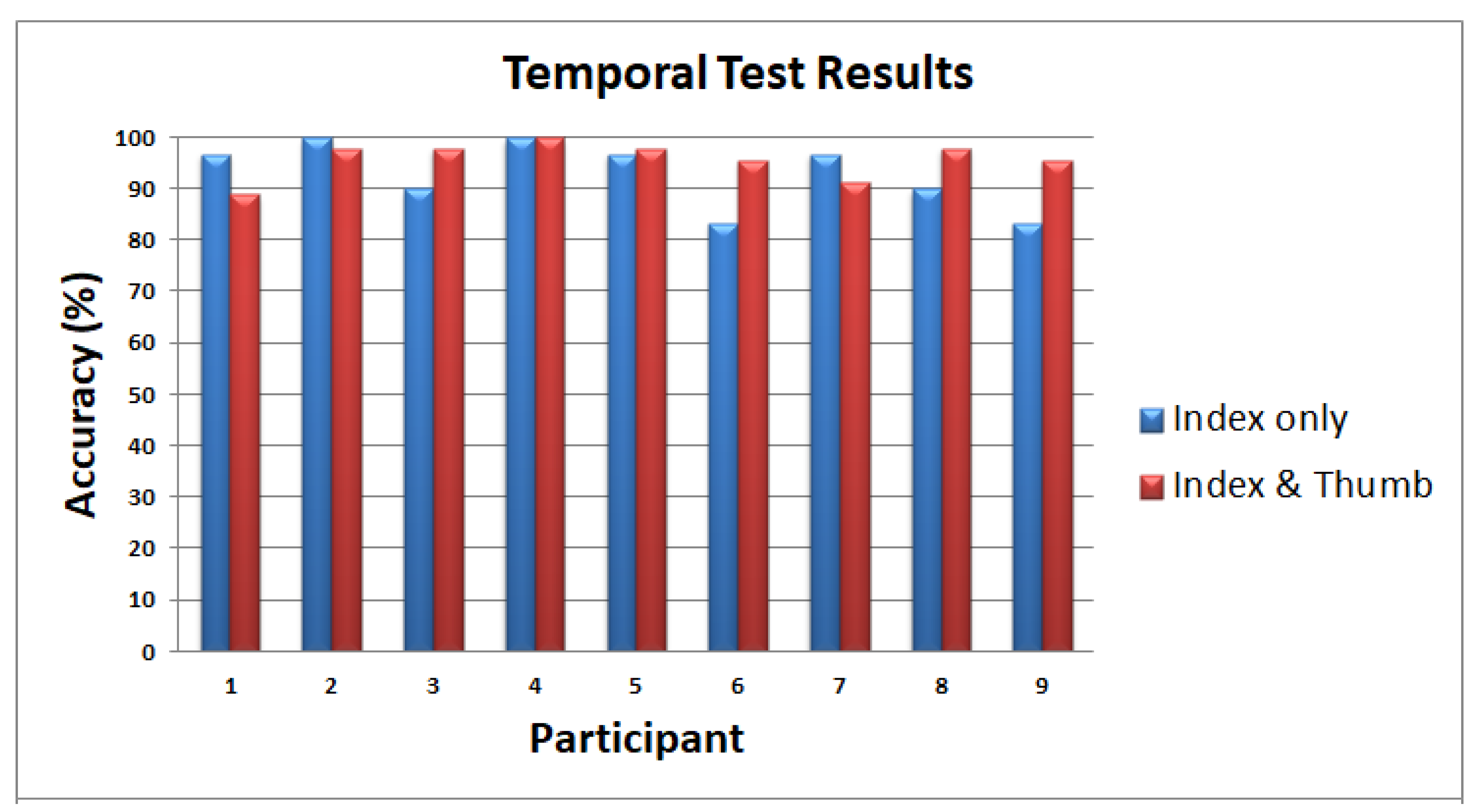

6.2.4. Experiment 2: Temporal Test

6.2.5. Experiment 3: 2D Scanning Training

6.2.6. Experiment 3: 2D Scanning Test

7. Limitations of the Current Design and Possible Future Improvements

8. Conclusions and Recommendation

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lederman, S.J.; Klatzky, R.L. Haptic perception: A tutorial. Atten. Percept. Psychophys. 2009, 71, 1439–1459.

- Sreelakshmi, M.; Subash, T.D. Haptic technology: A comprehensive review on its applications and future prospects. Mater. Today 2017, 4, 4182–4187.

- Giri, G.S.; Maddahi, Y.; Zareinia, K. An Application-Based Review of Haptics Technology. Robotics 2021, 10, 29.

- Pacchierotti, C.; Prattichizzo, D. Sensory subtraction via cutaneous feedback: A novel technique to improve the transparency of robotic surgery. In Proceedings of the 4th Joint Workshop on Computer/Robot Assisted Surgery, Genova, Italy, 14–16 October 2014.

- Pacchierotti, C.; Meli, L.; Chinello, F.; Malvezzi, M.; Prattichizzo, D. Cutaneous haptic feedback to ensure the stability of robotic teleoperation systems. Int. J. Robot. Res. 2015, 34, 1773–1787.

- Pacchierotti, C.; Sinclair, S.; Solazzi, M.; Frisoli, A.; Hayward, V.; Prattichizzo, D. Wearable haptic systems for the fingertip and the hand: Taxonomy review and perspectives. IEEE Trans. Haptics 2017, 10, 580–600.

- Wang, D.; Song, M.; Naqash, A.; Zheng, Y.; Xu, W.; Zhang, Y. Toward Whole-Hand Kinesthetic Feedback: A Survey of Force Feedback Gloves. IEEE Trans. Haptics 2019, 12, 189–204.

- Secco, E.L.; Maereg, A.T.; Reid, D.; Nagar, A.K. An integrated haptic system combining VR, a markerless motion capture system and tactile actuators. ICST Trans. Ambient. Syst. 2018, 5, 154375.

- Abad, A.C.; Ormazabal, M.; Reid, D.; Ranasinghe, A. An Untethered Multimodal Haptic Hand Wearable. In Proceedings of the 2021 IEEE Sensors, Sydney, Australia, 31 October–3 November 2021; pp. 1–4.

- Tavakoli, M.; Patel, R.V. Haptics in Telerobotic Systems for Minimally Invasive Surgery. In Telesurgery; Springer: Berlin/Heidelberg, Germany, 2008; pp. 117–128. ISBN 978-3-540-72998-3. Available online: http://www.ece.ualberta.ca/~tbs/pmwiki/pdf/Chapter-Tavakoli-2008.pdf (accessed on 6 February 2022).

- Mehrdad, S.; Liu, F.; Pham, M.T.; Lelevé, A.; Atashzar, S.F. Review of Advanced Medical Telerobots. Appl. Sci. 2021, 11, 209.

- Collins, C. Tactile Television-Mechanical and Electrical Image Projection. IEEE Trans. Man-Mach. Syst. 1970, 11, 65–71.

- Wu, J.; Zhang, J.; Yan, J.; Liu, W.; Song, G. Design of a Vibrotactile Vest for Contour Perception. Int. J. Adv. Robot. Syst. 2012, 9, 166.

- Ranasinghe, A.; Althoefer, K.; Dasgupta, P.; Nagar, A.; Nanayakkara, T. Wearable haptic based pattern feedback sleeve system. In Proceedings of the 6th International Conference on Soft Computing for Problem Solving, Patiala, India, 23–24 December 2016.

- Scalera, L.; Seriani, S.; Gallina, P.; Di Luca, M.; Gasparetto, A. An experimental setup to test dual-joystick directional responses to vibrotactile stimuli. In Proceedings of the 2017 IEEE World Haptics Conference (WHC), Munich, Germany, 6–9 June 2017; pp. 72–77.

- Yunus, R.; Ali, S.; Ayaz, Y.; Khan, M.; Kanwal, S.; Akhlaque, U.; Nawaz, R. Development and Testing of a Wearable Vibrotactile Haptic Feedback System for Proprioceptive Rehabilitation. IEEE Access 2020, 8, 35172–35184.

- van der Putten, E.P.W.; van den Dobbelsteen, J.J.; Goossens, R.H.M.; Jakimowicz, J.J.; Dankelman, J. The Effect of Augmented Feedback on Grasp Force in Laparoscopic Grasp Control. IEEE Trans. Haptics 2010, 3, 280–291.

- Benali-Khoudja, M.; Hafez, M.; Kheddar, A. VITAL: An electromagnetic integrated tactile display. Displays 2007, 28, 133–144.

- Visell, Y. Tactile sensory substitution: Models for enaction in HCI. Interact. Comput. 2009, 21, 38–53.

- Frisken-Gibson, S.F.; Bach-Y-Rita, P.; Tompkins, W.J.; Webster, J.G. A 64-Solenoid, Four-Level Fingertip Search Display for the Blind. IEEE Trans. Biomed. Eng. 1987, 34, 963–965.

- Salsedo, F.; Marcheschi, S.; Fontana, M.; Bergamasco, M. Tactile transducer based on electromechanical solenoids. In Proceedings of the 2011 IEEE World Haptics Conference, Istanbul, Turkey, 21–24 June 2011; pp. 581–586.

- Deng, K.; Enikov, E.T. Design and Development of a Pulsed Electromagnetic Micro-Actuator for 3D Virtual Tactile Displays. Mechatronics 2010, 20, 503–509.

- Fukuda, T.; Morita, H.; Arai, F.; Ishihara, H.; Matsuura, H. Micro Resonator Using Electromagnetic Actuator for Tactile Display. In Proceedings of the 1997 International Symposium on Micromechatronics and Human Science, Nagoya, Japan, 5–8 October 1997.

- Rotard, M.; Taras, C.; Ertl, T. Tactile web browsing for blind people. Multimed. Tools Appl. 2008, 37, 53–69.

- Bliss, J.C.; Katcher, M.H.; Rogers, C.H.; Shepard, R.P. Optical-to-tactile image conversion for the blind. IEEE Trans. Man-Mach. Syst. 1970, 11, 58–65.

- Wang, Q.; Hayward, V. Biomechanically optimized distributed tactile transducer based on lateral skin deformation. Int. J. Robot. Res. 2009, 29, 323–335.

- Summers, I.R.; Chanter, C.M. A broadband tactile array on the fingertip. J. Acoust. Soc. Am. 2002, 112, 2118–2126.

- Kyung, K.-U.; Lee, J.-Y. Ubi-Pen: A haptic interface with texture and vibrotactile display. IEEE Comput. Graph. Appl. 2009, 29, 24–32.

- Szabo, Z.; Enikov, E.T. Developement of Wearable Micro-Actuator Array for 3-D Virtual Tactile Display. J. Electromagn. Anal. Appl. 2012, 4, 219–229.

- Besse, N.; Rosset, S.; Zárate, J.J.; Ferrari, E.; Brayda, L.; Shea, H. Understanding Graphics on a Scalable Latching Assistive Haptic Display Using a Shape Memory Polymer Membrane. IEEE Trans. Haptics 2018, 11, 30–38.

- Sawada, H. Tactile Display Using the Micro-vibration of Shape-Memory Alloy Wires and Its Application to Tactile Interaction Systems. In Pervasive Haptics; Kajimoto, H., Saga, S., Konyo, M., Eds.; Springer: Tokyo, Japan, 2016.

- Kajimoto, H.; Jones, L. Wearable Tactile Display Based on Thermal Expansion of Nichrome Wire. IEEE Trans. Haptics 2019, 12, 257–268.

- Shimizu, Y. Tactile display terminal for visually handicapped. Displays 1986, 7, 116–120.

- Russomanno, A.; Xu, Z.; O’Modhrain, S.; Gillespie, B. A pneu shape display: Physical buttons with programmable touch response. In Proceedings of the 2017 IEEE World Haptics Conference (WHC), Munich, Germany, 6–9 June 2017; pp. 641–646.

- Stanley, A.A.; Hata, K.; Okamura, A.M. Closed-loop shape control of a Haptic Jamming deformable surface. In Proceedings of the Robotics and Automation (ICRA) 2016 IEEE International Conference, Stockholm, Sweden, 16–21 May 2016; pp. 2718–2724.

- Kwon, D.-S.; Yang, T.-H.; Cho, J.Y. Trend & prospects of haptic technology in mobile devices. In Symposium on Industrial Electronics (ISIE); IEEE: Bari, Italy, 2010; pp. 3778–3783.

- Kappassov, Z.; Corrales, J.-A.; Perdereau, V. Tactile sensing in dexterous robot hands—Review. In Robotics and Autonomous Systems; Elsevier: Amsterdam, The Netherlands, 2015; Volume 74, pp. 195–220.

- Shen, Y.; Liu, Y.; Kejie, L. Haptic Tactile Feedback in Teleoperation of a Multifingered Robot Hand. In Proceedings of the Third World Congress Intelligent Control and Automation, Dearborn, MI, USA, 1–3 October 2000; Volume 1, pp. 85–90.

- Xie, Y.; Chen, C.; Wu, D.; Xi, W.; Liu, H. Human-Touch-Inspired Material Recognition for Robotic Tactile Sensing. Appl. Sci. 2019, 9, 2537.

- Ozioko, O.; Dahiya, R. Smart Tactile Gloves for Haptic Interaction, Communication, and Rehabilitation. Adv. Intell. Syst. 2022, 4, 2100091.

- Lee, K.T.; Chee, P.S.; Lim, E.H.; Lim, C.C. Artificial intelligence (AI)-driven smart glove for object recognition application. Mater. Today Proc. 2022.

- King, C.H.; Higa, A.T.; Culjat, M.O.; Han, S.H.; Bisley, J.W. A Pneumatic Haptic Feedback Actuator Array for Robotic Surgery or Simulation. Stud. Health Technol. Inform. 2007, 125, 217–222.

- King, C.-H.; Culjat, M.O.; Franco, M.L.; Bisley, J.W.; Dutson, E.; Grundfest, W.S. Optimization of a Pneumatic Balloon Tactile Display for Robot-Assisted Surgery Based on Human Perception. IEEE Trans. Biomed. Eng. 2008, 55, 2593–2600.

- McMahan, W.; Gewirtz, J.; Standish, D.; Martin, P.; Kunkel, J.A.; Lilavois, M.; Wedmid, A.; Lee, D.I.; Kuchenbecker, K.J. Tool Contact Acceleration Feedback for Telerobotic Surgery. IEEE Trans. Haptics 2011, 4, 210–220.

- Meli, L.; Pacchierotti, C.; Prattichizzo, D. Sensory Subtraction in Robot-Assisted Surgery: Fingertip Skin Deformation Feedback to Ensure Safety and Improve Transparency in Bimanual Haptic Interaction. IEEE Trans. Biomed. Eng. 2014, 61, 1318–1327.

- Pacchierotti, C.; Prattichizzo, D.; Kuchenbecker, K.J. Cutaneous Feedback of Fingertip Deformation and Vibration for Palpation in Robotic Surgery. IEEE Trans. Biomed. Eng. 2016, 63, 278–287.

- Shao, Y.; Hu, H.; Visell, Y. A wearable tactile sensor array for large area remote vibration sensing in the hand. IEEE Sens. J. 2020, 20, 6612–6623.

- Abad, A.C.; Swarup, D.; Reid, D.; Ranasinghe, A. 4 × 4 Fingertip Tactile Matrix Actuator with Edge Detection Scanning ROI Simulator. In 2020 IEEE SENSORS; IEEE: Rotterdam, The Netherlands, 2020; pp. 1–4.

- Metec P20 Braille Cell Datasheet. Available online: https://www.metec-ag.de/downloads/p20.pdf (accessed on 6 February 2022).

- Malvezzi, M.; Chinello, F.; Prattichizzo, D.; Pacchierotti, C. Design of Personalized Wearable Haptic Interfaces to Account for Fingertip Size and shape. IEEE Trans. Haptics 2021, 14, 266–272.

- Weart TouchDIVER. Available online: https://www.weart.it/touchdiver/ (accessed on 6 February 2022).

- Hands-On: Dexmo Haptic Force-Feedback Gloves Are Compact and Wireless. Available online: https://www.roadtovr.com/dexta-dexmo-vr-gloves-force-feedback-haptic-hands-on/ (accessed on 6 February 2022).

- BeBop Forte Data Gloves. Available online: https://bebopsensors.com/arvr/ (accessed on 6 February 2022).

- HaptX Gloves DK2 Haptic VR Gloves with 133 Tactile Sensors per Hand. Available online: https://www.geeky-gadgets.com/haptic-vr-gloves-28-01-2021/ (accessed on 6 February 2022).

- Dot Incorporated, Korea. Available online: https://www.dotincorp.com/ (accessed on 6 February 2022).

- ARDUINO NANO 33 IOT. Available online: https://store.arduino.cc/arduino-nano-33-iot (accessed on 6 February 2022).

- Haptic Motor Driver Hook-Up Guide. Available online: https://learn.sparkfun.com/tutorials/haptic-motor-driver-hook-up-guide/all (accessed on 6 February 2022).

- Getting Started with the ARDUINO NANO 33 IoT. Available online: https://www.arduino.cc/en/Guide/NANO33IoT (accessed on 6 February 2022).

- Arduino Nano 33 BLE Same as ESP32? Available online: https://forum.arduino.cc/index.php?topic=659059.0 (accessed on 6 February 2022).

- Arduino NINA-W102 Firmware. Available online: https://github.com/arduino/nina-fw (accessed on 6 February 2022).

- Metec DC-DC 5–200 V Boost Converter. Available online: https://www.metec-ag.de/downloads/dcdc-converter-5to200v.pdf (accessed on 6 February 2022).

- Leap Motion Controller. Available online: https://www.ultraleap.com/datasheets/Leap_Motion_Controller_Datasheet.pdf (accessed on 6 February 2022).

- Watanabe, T.; Kume, Y.; Ifukube, T. Shape discrimination with a tactile mouse. J. Inst. Image Inf. Telev. Eng. 2000, 54, 840–847. (In Japanese)

- Hribar, V.E.; Deal, L.G.; Pawluk, D.T.V. Displaying Braille and Graphics with a “Tactile mouse”. In Proceedings of the 14th International ACM SIGACCESS Conference on Computers and Accessibility, Boulder, CO, USA, 22–24 October 2012; pp. 251–252.

- Owen, J.M.; Petro, J.A.; Souza, S.M.D.; Rastogi, R.; Pawluk, D.T.V. An Improved, Low-cost Tactile “Mouse” for Use by Individuals Who are Blind and Visually Impaired. In Proceedings of the 11th International ACM SIGACCESS Conference on Computers and Accessibility (Assets’09), Pittsburgh, PA, USA, 25–28 October 2009; pp. 223–224.

- Can the NANO IOT 33 Run Full Bluetooth (NOT BLE)? Available online: https://forum.arduino.cc/index.php?topic=654631.0 (accessed on 6 February 2022).

- Schubert, T.W.; D’Ausilio, A.; Canto, R. Using Arduino microcontroller boards to measure response latencies. Behav. Res. Methods 2013, 45, 1332–1346.

- High Resolution Haptic Glove Database. Available online: https://drive.google.com/drive/u/1/folders/1jBfT5-lH9-dx2a5O3dPrMOJ_ZvB8WX5z (accessed on 6 February 2022).

- Which Haptic Effects Should You Use? Available online: https://www.precisionmicrodrives.com/content/which-haptic-effects-should-you-use/ (accessed on 6 February 2022).

- Valecillos, D. Unity3d Oculus Quest Hand Tracking with Buttons and Physics. 2020. Available online: https://www.youtube.com/watch?v=vswQO-HGoKA&t=322s (accessed on 6 February 2022).

- Van Strien, J.W. Classificatie van links- en rechtshandige proefpersonen. Classification of left- and right-handed research participants. J. Psychol. 1992, 47, 88–92.

- Headley, P.; Pawluk, D. A Low-Cost, Variable Amplitude Haptic Distributed Display for Persons who are Blind and Visually Impaired. In Proceedings of the ACM Assets, Orlando, FL, USA, 25–27 October 2010.

| Feature | Initial 4 × 4 Fingertip Tactile Matrix [48] | Current Untethered High-Resolution Haptic Hand Wearable |
|---|---|---|
| Type of tactile actuator (tactor) | Dot Braille cell | P20 Braille cells |
| Technology used in the tactor | Electromagnet (solenoid) | Piezoelectric bimorph bender actuator |
| Number of tactors | 16 electromagnet-based pins | 80 piezo-based pins |
| Type of wearable | 1 fingertip | 5-finger exoskeleton haptic glove |
| Mode of data transfer | USB wire | USB wire or wireless (Bluetooth or WiFi) |
| Microcontroller | Arduino Mega 2560 | Arduino Nano 33 IoT |
| Tactor driver | MX1508 (H-bridge motor driver) | HV509 Shift Register for P20 Braille cells |
| Supply Voltage | 5 V DC | 5 V DC (DC–DC boost to 200 V for P20 Braille cells) |
| Application software | Python | Processing, Unity |
| Tactile simulator controller | Mouse | Mouse, Leap Motion Controller, Oculus Quest2 |
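
The pin counts in the table imply a simple per-frame data layout: 80 pins across 5 fingers is 16 pins per finger, which fits in 10 bytes if each pin is stored as one bit. The Python sketch below illustrates that arithmetic only. The 4 × 4 arrangement per finger, the finger-major and row-major bit order, and the names `pack_frame` and `unpack_frame` are assumptions made for illustration; they are not taken from the authors' firmware or from the HV509 documentation.

```python
from typing import List

FINGERS = 5                    # from the table: 5-finger glove
ROWS, COLS = 4, 4              # assumed layout: 80 pins / 5 fingers = 16 = 4 x 4
PINS = FINGERS * ROWS * COLS   # 80 pins in total
FRAME_BYTES = PINS // 8        # 10 bytes when each pin is one bit

Frame = List[List[List[int]]]  # indexed as frame[finger][row][col], values 0 or 1


def pack_frame(frame: Frame) -> bytes:
    """Pack pin states into FRAME_BYTES bytes, most significant bit first.

    The bit order (finger-major, then row, then column) is hypothetical.
    """
    if len(frame) != FINGERS:
        raise ValueError("expected one 4 x 4 grid per finger")
    bits = []
    for grid in frame:
        if len(grid) != ROWS or any(len(row) != COLS for row in grid):
            raise ValueError("each finger grid must be 4 x 4")
        for row in grid:
            bits.extend(1 if pin else 0 for pin in row)

    packed = bytearray()
    for start in range(0, PINS, 8):
        byte = 0
        for bit in bits[start:start + 8]:
            byte = (byte << 1) | bit
        packed.append(byte)
    return bytes(packed)


def unpack_frame(data: bytes) -> Frame:
    """Inverse of pack_frame: rebuild the [finger][row][col] grid."""
    if len(data) != FRAME_BYTES:
        raise ValueError("expected exactly 10 bytes")
    bits = iter((byte >> shift) & 1 for byte in data for shift in range(7, -1, -1))
    return [[[next(bits) for _ in range(COLS)] for _ in range(ROWS)]
            for _ in range(FINGERS)]
```

A host application could send such a 10-byte frame over the USB or wireless link listed in the table; the framing and bit order the actual device expects may differ.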

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abad, A.C.; Reid, D.; Ranasinghe, A. A Novel Untethered Hand Wearable with Fine-Grained Cutaneous Haptic Feedback. Sensors 2022, 22, 1924. https://doi.org/10.3390/s22051924
