Analyzing Classification Performance of fNIRS-BCI for Gait Rehabilitation Using Deep Neural Networks

Abstract

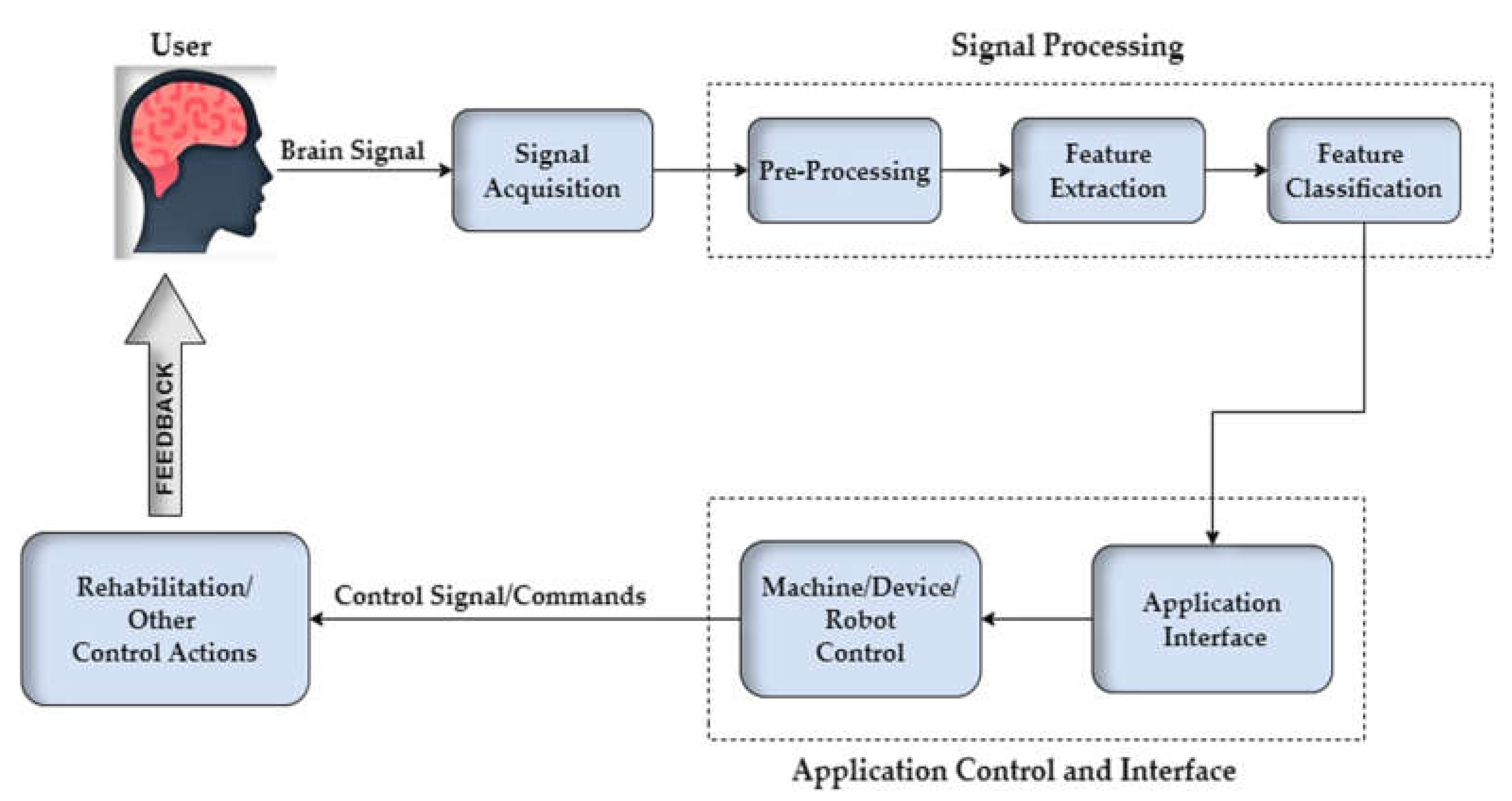

1. Introduction

2. Materials and Methods

2.1. Experimental Paradigm

2.2. Experimental Configuration

2.3. Signal Acquisition

2.4. Signal Processing

2.5. Feature Extraction

3. Classification Using Machine-Learning Algorithms

3.1. Support Vector Machine (SVM)

3.2. k-Nearest Neighbor (k-NN)

3.3. Linear Discriminant Analysis (LDA)

4. Classification Using Deep-Learning Algorithms

4.1. Convolutional Neural Networks (CNNs)

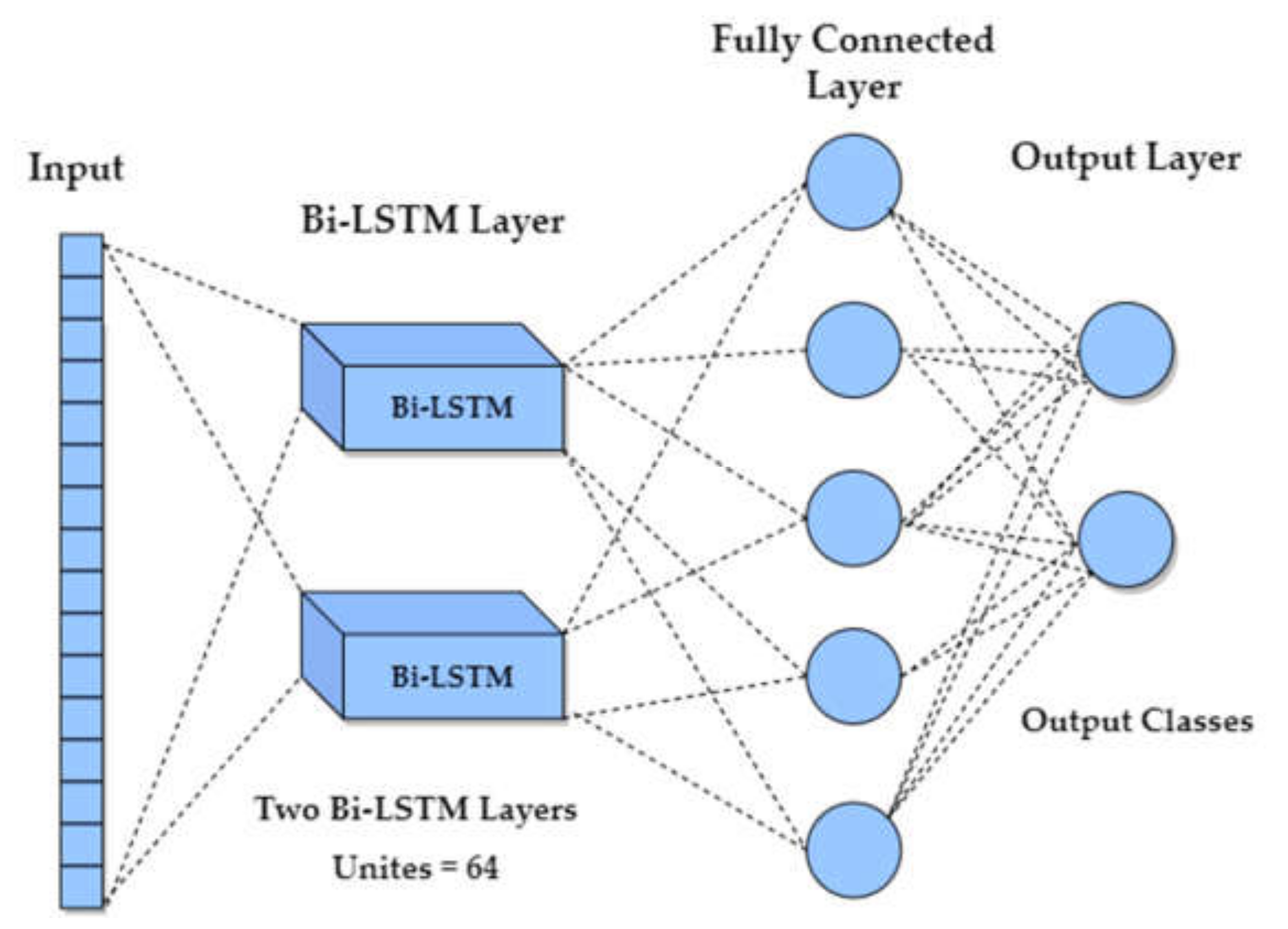

4.2. Long Short-Term Memory (LSTM) and Bi-LSTM

5. Results

5.1. Classification Accuracy of Machine-Learning Algorithms

5.2. Classification Accuracy of Deep Learning Algorithms

5.3. Validation

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Classifier | Sub1 | Sub2 | Sub3 | Sub4 | Sub5 | Sub6 | Sub7 | Sub8 | Sub9 |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 78.90% | 76.70% | 66.70% | 71.50% | 72.00% | 72.80% | 73.50% | 75.70% | 77.40% |
| k-NN | 77.01% | 74.40% | 68.30% | 70.60% | 73.50% | 74.10% | 72.02% | 73.50% | 84.80% |
| LDA | 64.30% | 66.30% | 63.70% | 66.30% | 66.70% | 65.20% | 65.60% | 67% | 67.60% |

| CNN | Sub1 | Sub2 | Sub3 | Sub4 | Sub5 | Sub6 | Sub7 | Sub8 | Sub9 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 95.47% | 88.10% | 85.71% | 87.72% | 95.29% | 85.63% | 85.70% | 87.37% | 85.52% |
| Precision | 90.78% | 86.65% | 88.28% | 82.94% | 93.72% | 86.18% | 79.32% | 85.23% | 83.79% |
| Recall | 87.88% | 80.74% | 84.37% | 85.63% | 90.49% | 82.87% | 82.60% | 88.06% | 81.63% |

| LSTM | Sub1 | Sub2 | Sub3 | Sub4 | Sub5 | Sub6 | Sub7 | Sub8 | Sub9 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 83.81% | 82.84% | 82.72% | 81.83% | 95.35% | 83.04% | 81.72% | 82.00% | 84.81% |
| Precision | 78.24% | 83.36% | 80.92% | 80.83% | 90.76% | 85.49% | 80.29% | 81.43% | 82.45% |
| Recall | 80.04% | 82.32% | 81.75% | 81.25% | 93.45% | 84.35% | 81.82% | 83.63% | 79.83% |

| Bi-LSTM | Sub1 | Sub2 | Sub3 | Sub4 | Sub5 | Sub6 | Sub7 | Sub8 | Sub9 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | 95.54% | 83.55% | 81.81% | 82.42% | 93.28% | 81.67% | 81.85% | 82.62% | 83.42% |
| Precision | 90.74% | 80.23% | 82.45% | 81.72% | 95.56% | 80.48% | 84.90% | 80.53% | 85.37% |
| Recall | 92.38% | 82.08% | 80.76% | 83.62% | 91.49% | 82.43% | 83.73% | 84.29% | 80.97% |
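The per-subject accuracy rows in the tables above can be summarized as one mean per classifier. The following is a minimal sketch of that arithmetic, not the authors' analysis code; the dictionary name `accuracy` and the variable `mean_accuracy` are illustrative, and the values are transcribed directly from the tables (Sub1 to Sub9).

```python
from statistics import mean

# Per-subject accuracies (%) transcribed from the tables above, Sub1..Sub9.
accuracy = {
    "SVM":     [78.90, 76.70, 66.70, 71.50, 72.00, 72.80, 73.50, 75.70, 77.40],
    "k-NN":    [77.01, 74.40, 68.30, 70.60, 73.50, 74.10, 72.02, 73.50, 84.80],
    "LDA":     [64.30, 66.30, 63.70, 66.30, 66.70, 65.20, 65.60, 67.00, 67.60],
    "CNN":     [95.47, 88.10, 85.71, 87.72, 95.29, 85.63, 85.70, 87.37, 85.52],
    "LSTM":    [83.81, 82.84, 82.72, 81.83, 95.35, 83.04, 81.72, 82.00, 84.81],
    "Bi-LSTM": [95.54, 83.55, 81.81, 82.42, 93.28, 81.67, 81.85, 82.62, 83.42],
}

# Mean accuracy per classifier across the nine subjects.
mean_accuracy = {name: mean(values) for name, values in accuracy.items()}

# Print classifiers from highest to lowest mean accuracy.
for name, value in sorted(mean_accuracy.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:8s} {value:6.2f}%")
```

Averaged this way, the three deep-learning classifiers rank above the three conventional ones, with CNN highest and LDA lowest.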

| Classifier Pair | p-Value (Bonferroni-corrected threshold: p < 0.008) |
|---|---|
| CNN vs. SVM | 1.42 × 10⁻⁵ |
| CNN vs. k-NN | 8.63 × 10⁻⁵ |
| CNN vs. LDA | 4.01 × 10⁻¹² |
| CNN vs. LSTM | 5.35 × 10⁻⁹ |
| CNN vs. Bi-LSTM | 2.19 × 10⁻⁸ |
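As a quick check of the table above, each reported p-value can be compared against the stated Bonferroni-corrected threshold. This is a small sketch only: the threshold of 0.008 and the p-values come from the table, while the derivation shown at the end (a family-wise level of 0.05 divided over six comparisons) is an assumption that happens to round to 0.008; the table itself does not state how the threshold was obtained.

```python
# Corrected significance threshold as stated in the table header.
ALPHA_CORRECTED = 0.008

# p-values transcribed from the table above.
p_values = {
    "CNN vs. SVM": 1.42e-5,
    "CNN vs. k-NN": 8.63e-5,
    "CNN vs. LDA": 4.01e-12,
    "CNN vs. LSTM": 5.35e-9,
    "CNN vs. Bi-LSTM": 2.19e-8,
}

# A comparison counts as significant when its p-value is below the threshold.
significant = {pair: p < ALPHA_CORRECTED for pair, p in p_values.items()}

for pair, p in p_values.items():
    verdict = "significant" if significant[pair] else "not significant"
    print(f"{pair:16s} p = {p:.2e} -> {verdict}")

# ASSUMPTION (not stated in the source): alpha = 0.05 split over m = 6 comparisons.
assumed_alpha, assumed_m = 0.05, 6
assumed_threshold = assumed_alpha / assumed_m
print(f"assumed Bonferroni threshold: {assumed_threshold:.4f}")
```

All five comparisons fall well below the threshold, so the conclusion does not depend on the exact number of comparisons assumed.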

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hamid, H.; Naseer, N.; Nazeer, H.; Khan, M.J.; Khan, R.A.; Shahbaz Khan, U. Analyzing Classification Performance of fNIRS-BCI for Gait Rehabilitation Using Deep Neural Networks. Sensors 2022, 22, 1932. https://doi.org/10.3390/s22051932
