Markerless vs. Marker-Based Gait Analysis: A Proof of Concept Study

Abstract

1. Introduction

1. Keypoint detection in the image planes with a state-of-the-art Convolutional Neural Network (CNN): Pose ResNet-152 [14].

2.

3. Temporal filtering of the keypoint trajectories to increase their spatio-temporal consistency.

4. 3D reconstruction: combining the detected keypoints from the different viewpoints, we reconstructed the 3D position of each keypoint following a geometric approach [16].
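This section does not specify which temporal filter is applied to the keypoint trajectories (item 3 above). As a minimal sketch, assuming a centered moving average as a stand-in for the actual filter, the smoothing can be applied independently to every coordinate of every keypoint. The function name and the default window length are illustrative, not values taken from the study.

```python
import numpy as np


def smooth_trajectories(traj: np.ndarray, window: int = 5) -> np.ndarray:
    """Centered moving-average smoothing of keypoint trajectories.

    traj: array of shape (T, K, D): T frames, K keypoints, D coordinates
          (D = 2 for image-plane keypoints, D = 3 for reconstructed ones).
    window: odd number of frames averaged around each sample (assumed value).
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Repeat the first and last frames so the output keeps T samples.
    padded = np.pad(traj, ((half, half), (0, 0), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    out = np.empty(traj.shape, dtype=float)
    _, n_kp, n_dim = traj.shape
    for k in range(n_kp):
        for d in range(n_dim):
            # 'valid' convolution of the padded signal returns exactly T samples.
            out[:, k, d] = np.convolve(padded[:, k, d], kernel, mode="valid")
    return out
```

A moving average is the simplest zero-lag smoother. When a specific cut-off frequency is required, a zero-phase low-pass filter (for example, a Butterworth filter applied forward and backward) is a common alternative in gait analysis.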

- Implementation of a video-based markerless pipeline for gait analysis. The pipeline takes as input RGB videos (multiple viewpoints of the same scene) and the camera calibration parameters, computes the 3D keypoints following the algorithm summarized above, and returns the kinematic parameters usually computed in gait analysis.

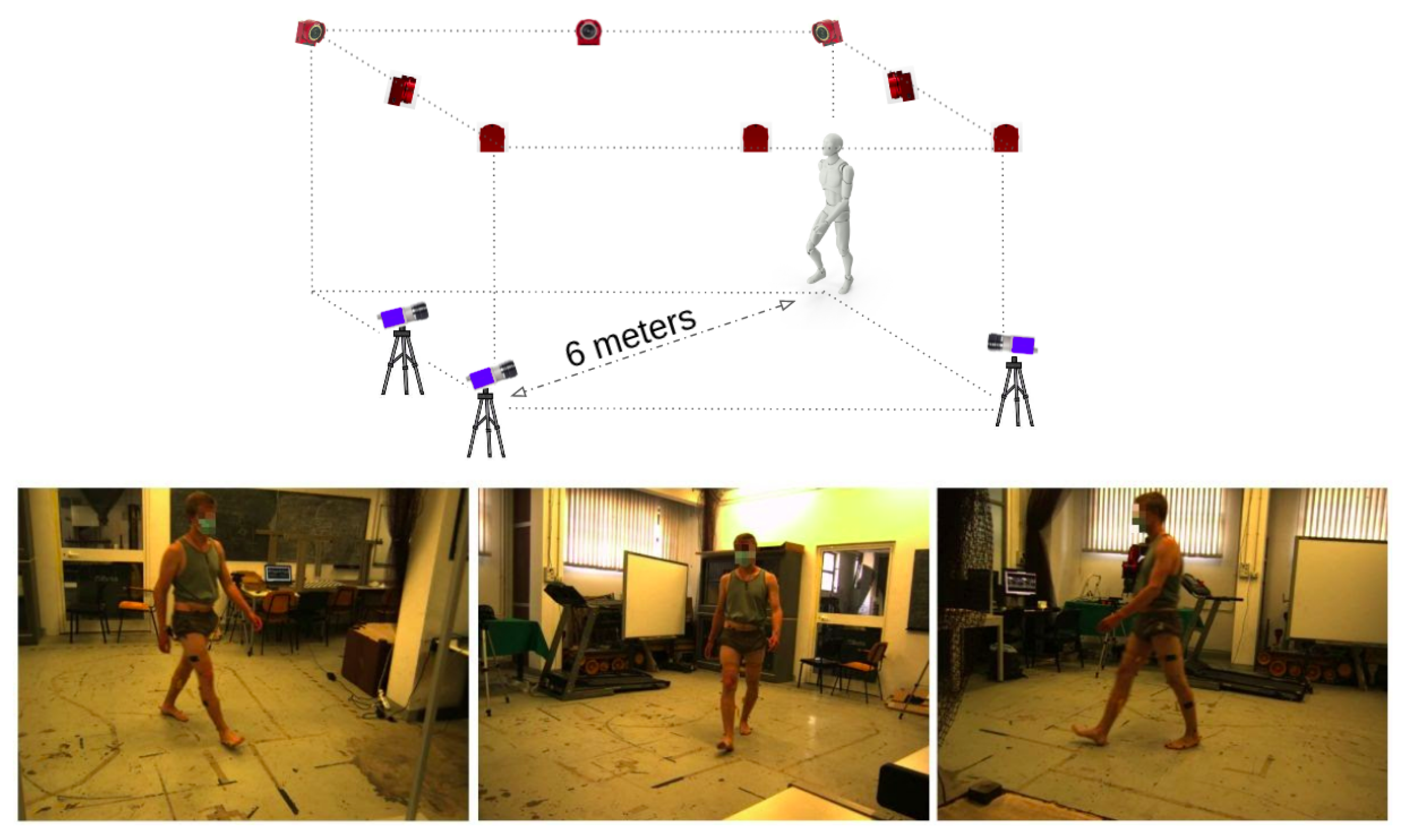

- Comparison between marker-based and markerless systems. We tested the reliability and stability of the implemented pipeline. To do so, we recorded the gait of 16 healthy subjects with both a marker-based system (Optitrack) and a multi-view RGB camera system. Then, using a biomechanical model (OpenSim software [18]), we computed the spatio-temporal and kinematic gait parameters [4] from the data of both the gold-standard motion capture system and our markerless pipeline, and compared the results of the two systems. Experimental results obtained in a preliminary study focusing on 2D data (single viewpoint) [19] provide initial evidence of the comparability of the two approaches.
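To illustrate the kind of kinematic output mentioned above, the sketch below computes a joint angle directly from three 3D keypoints. It is a simplified geometric stand-in, not the OpenSim-based biomechanical model used in the study. The function names, the flexion convention (0° when the three points are collinear), and the use of a foot keypoint in place of an ankle are all assumptions.

```python
import numpy as np


def joint_angle_deg(proximal, joint, distal) -> float:
    """Angle in degrees at `joint` between segments joint->proximal and joint->distal."""
    u = np.asarray(proximal, dtype=float) - np.asarray(joint, dtype=float)
    v = np.asarray(distal, dtype=float) - np.asarray(joint, dtype=float)
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clipping guards against round-off pushing the cosine slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


def knee_flexion_deg(hip, knee, foot) -> float:
    """Hypothetical convention: 0 deg when hip, knee and foot are aligned (full extension)."""
    return 180.0 - joint_angle_deg(hip, knee, foot)
```

Applied frame by frame to the reconstructed trajectories, such a function yields an angle time series per gait cycle; a full biomechanical model additionally resolves the angles into anatomical planes.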

2. Related Works

3. Dataset

4. 3D Keypoints

4.1. Marker Data

4.2. Video Data

- A 2D pose estimator backbone (Pose ResNet [14]).

- A fusing deep learning architecture that refines the probability maps of each view generated in the first step. To accomplish this, the algorithm takes into account the information from neighboring views and leverages epipolar geometry [16]. In this way, the probability map of each view can be enriched at any point x with the information from the probability maps of its neighboring viewpoints.

- A geometric 3D reconstruction part that leverages the intrinsic and extrinsic camera parameters obtained during calibration.
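The role of epipolar geometry in the fusion step can be illustrated with a short sketch: given the calibration parameters of two cameras, the fundamental matrix maps a point in one view to the line in the neighboring view on which its correspondence must lie [16]. This is the generic textbook construction, not AdaFuse's code; the extrinsic convention x_cam = R·X + t and all function names are assumptions.

```python
import numpy as np


def skew(v):
    """Cross-product matrix [v]_x, such that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def fundamental_matrix(K1, R1, t1, K2, R2, t2):
    """F such that x2^T F x1 = 0 for corresponding pixels (homogeneous) x1, x2.

    Extrinsics are assumed to follow x_cam = R @ X_world + t.
    """
    R = R2 @ R1.T            # rotation from camera 1 to camera 2
    t = t2 - R @ t1          # translation from camera 1 to camera 2
    E = skew(t) @ R          # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)


def epipolar_line(F, x1):
    """Line (a, b, c), with a*u + b*v + c = 0 in view 2, scaled so a^2 + b^2 = 1."""
    line = F @ np.array([x1[0], x1[1], 1.0])
    return line / np.hypot(line[0], line[1])


def project(K, R, t, X):
    """Pinhole projection of a world point X to pixel coordinates (u, v)."""
    x = K @ (R @ X + t)
    return x[:2] / x[2]
```

Because the line is normalized, `line @ [u, v, 1]` is the signed pixel distance of (u, v) from it. In a fusion scheme of this kind, the probability map of the neighboring view would be inspected along this line to collect evidence for the keypoint; how AdaFuse weights that evidence is described in [15] and not reproduced here.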

4.2.1. AdaFuse Training

1. 2D backbone. We first focused on the 2D backbone network, which creates independent probability maps of the keypoints in Figure 3B for each input image, and we fine-tuned the Pose ResNet-152 [14] pretrained on the COCO dataset [34]. We did not train the network from scratch, in order to reduce training time and the computational resources needed. We fine-tuned the network on a subset of the Human3.6M training images, keeping one image every 20 frames (for a total of 180,000 training images). This gave us a training set with a reasonable number of frames that are sufficiently different from one another.

2. Full architecture. We then focused on the fusing network, which refines the maps with the help of the neighboring views. As noted in [15], this second part of the AdaFuse architecture should not be trained separately, but jointly with the 2D backbone. Thus, we initialized the first part (2D backbone) with the weights obtained from the fine-tuning described above, and the fusion network with random weights. In this case, the inputs of the process are not single images (as in the previous step), but groups of images captured at the same time instant from different viewpoints. Additionally, we provided the calibration information (intrinsic and extrinsic parameters) for each group of images. These parameters are not used by the neural network itself, but by a subsequent post-processing step that computes the 3D poses at the end. The target and output of the neural network are a group of probability maps corresponding to the input images. Note that the outputs of the AdaFuse network are probability maps, not 3D points; however, they are more precise than those of the 2D backbone alone, because information from the neighboring views is fused with the backbone prediction. The 3D pose is then computed via triangulation.
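A minimal sketch of this final step, assuming the 2D location of each keypoint is taken as the peak of its fused probability map and the 3D point is recovered with linear (DLT) triangulation [16]. AdaFuse may localize the peaks differently (for example, with sub-pixel refinement), and the helper names are hypothetical.

```python
import numpy as np


def heatmap_peak(prob_map):
    """Pixel coordinates (u, v) of the maximum of a 2D probability map.

    Assumes the map has the same resolution as the image; if it is
    downsampled, the coordinates must be rescaled before triangulation.
    """
    v, u = np.unravel_index(np.argmax(prob_map), prob_map.shape)  # (row, col)
    return float(u), float(v)


def triangulate_dlt(projections, points_2d):
    """Linear (DLT) triangulation of one keypoint seen in two or more views.

    projections: list of 3x4 matrices P = K @ [R | t], one per camera.
    points_2d: list of matching (u, v) pixel coordinates.
    Returns the 3D point in world coordinates.
    """
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        # Each view contributes two linear equations in the homogeneous point X.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # Least-squares solution of A @ X = 0 with ||X|| = 1: last right singular vector.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

With noise-free projections from calibrated cameras, the DLT recovers the original point exactly; with real detections, it returns the algebraic least-squares estimate over all views.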

4.2.2. Inference

4.3. Keypoints Detection Evaluation Metrics

5. 3D Keypoints Trajectories Processing

5.1. Gait Cycle Detection

5.2. Spatio-Temporal Parameters and Joint Angles

6. Statistical Analysis

7. Results

7.1. Architecture Evaluation

7.2. Joint Angles and Spatio-Temporal Parameters

8. Discussion and Conclusions

1. It requires less expertise and is not affected by operator-induced bias. While the operator of a marker-based acquisition must place markers carefully on the subject's skin to avoid biased results, our pipeline works fully automatically and does not depend on the operator's performance;

2. It does not affect the naturalness of gait in any way, since it does not require cumbersome markers or sensors. Furthermore, it makes data acquisition easier and faster, because no markers need to be placed on the skin;

3. It is less expensive, has a simpler setup, and is easier to use outside laboratory environments, since it requires only RGB cameras.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fritz, N.E.; Marasigan, R.E.R.; Calabresi, P.A.; Newsome, S.D.; Zackowski, K.M. The impact of dynamic balance measures on walking performance in multiple sclerosis. Neurorehabilit. Neural Repair 2015, 29, 62–69.

- di Biase, L.; Di Santo, A.; Caminiti, M.L.; De Liso, A.; Shah, S.A.; Ricci, L.; Di Lazzaro, V. Gait analysis in Parkinson’s disease: An overview of the most accurate markers for diagnosis and symptoms monitoring. Sensors 2020, 20, 3529.

- Wren, T.A.; Tucker, C.A.; Rethlefsen, S.A.; Gorton, G.E., III; Õunpuu, S. Clinical efficacy of instrumented gait analysis: Systematic review 2020 update. Gait Posture 2020, 80, 274–279.

- Whittle, M.W. Gait Analysis: An Introduction; Butterworth-Heinemann: Oxford, UK, 2014.

- Cloete, T.; Scheffer, C. Benchmarking of a full-body inertial motion capture system for clinical gait analysis. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 4579–4582.

- Colyer, S.L.; Evans, M.; Cosker, D.P.; Salo, A.I. A review of the evolution of vision-based motion analysis and the integration of advanced computer vision methods towards developing a markerless system. Sport. Med.-Open 2018, 4, 1–15.

- Carse, B.; Meadows, B.; Bowers, R.; Rowe, P. Affordable clinical gait analysis: An assessment of the marker tracking accuracy of a new low-cost optical 3D motion analysis system. Physiotherapy 2013, 99, 347–351.

- Desmarais, Y.; Mottet, D.; Slangen, P.; Montesinos, P. A review of 3D human pose estimation algorithms for markerless motion capture. Comput. Vis. Image Underst. 2021, 212, 103275.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018.

- Zheng, C.; Wu, W.; Yang, T.; Zhu, S.; Chen, C.; Liu, R.; Shen, J.; Kehtarnavaz, N.; Shah, M. Deep learning-based human pose estimation: A survey. arXiv 2020, arXiv:2012.13392.

- Kwolek, B.; Michalczuk, A.; Krzeszowski, T.; Switonski, A.; Josinski, H.; Wojciechowski, K. Calibrated and synchronized multi-view video and motion capture dataset for evaluation of gait recognition. Multimed. Tools Appl. 2019, 78, 32437–32465.

- Moro, M.; Casadio, M.; Mrotek, L.A.; Ranganathan, R.; Scheidt, R.; Odone, F. On The Precision Of Markerless 3d Semantic Features: An Experimental Study On Violin Playing. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 2733–2737.

- Needham, L.; Evans, M.; Cosker, D.P.; Wade, L.; McGuigan, P.M.; Bilzon, J.L.; Colyer, S.L. The accuracy of several pose estimation methods for 3D joint centre localisation. Sci. Rep. 2021, 11, 20673.

- Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 466–481.

- Zhang, Z.; Wang, C.; Qiu, W.; Qin, W.; Zeng, W. AdaFuse: Adaptive Multiview Fusion for Accurate Human Pose Estimation in the Wild. Int. J. Comput. Vis. 2021, 129, 703–718.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003.

- Ionescu, C.; Papava, D.; Olaru, V.; Sminchisescu, C. Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1325–1339.

- Delp, S.L.; Anderson, F.C.; Arnold, A.S.; Loan, P.; Habib, A.; John, C.T.; Guendelman, E.; Thelen, D.G. OpenSim: Open-source software to create and analyze dynamic simulations of movement. IEEE Trans. Biomed. Eng. 2007, 54, 1940–1950.

- Moro, M.; Marchesi, G.; Odone, F.; Casadio, M. Markerless gait analysis in stroke survivors based on computer vision and deep learning: A pilot study. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic, 30 March–3 April 2020; pp. 2097–2104.

- Rodrigues, T.B.; Salgado, D.P.; Catháin, C.Ó.; O’Connor, N.; Murray, N. Human gait assessment using a 3D marker-less multimodal motion capture system. Multimed. Tools Appl. 2020, 79, 2629–2651.

- Corazza, S.; Muendermann, L.; Chaudhari, A.; Demattio, T.; Cobelli, C.; Andriacchi, T.P. A markerless motion capture system to study musculoskeletal biomechanics: Visual hull and simulated annealing approach. Ann. Biomed. Eng. 2006, 34, 1019–1029.

- Castelli, A.; Paolini, G.; Cereatti, A.; Della Croce, U. A 2D markerless gait analysis methodology: Validation on healthy subjects. Comput. Math. Methods Med. 2015, 2015.

- Clark, R.A.; Bower, K.J.; Mentiplay, B.F.; Paterson, K.; Pua, Y.H. Concurrent validity of the Microsoft Kinect for assessment of spatiotemporal gait variables. J. Biomech. 2013, 46, 2722–2725.

- Gabel, M.; Gilad-Bachrach, R.; Renshaw, E.; Schuster, A. Full body gait analysis with Kinect. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 1964–1967.

- Saboune, J.; Charpillet, F. Markerless human motion tracking from a single camera using interval particle filtering. Int. J. Artif. Intell. Tools 2007, 16, 593–609.

- Kidziński, Ł.; Yang, B.; Hicks, J.L.; Rajagopal, A.; Delp, S.L.; Schwartz, M.H. Deep neural networks enable quantitative movement analysis using single-camera videos. Nat. Commun. 2020, 11, 4054.

- Borghese, N.A.; Bianchi, L.; Lacquaniti, F. Kinematic determinants of human locomotion. J. Physiol. 1996, 494, 863–879.

- Vafadar, S.; Skalli, W.; Bonnet-Lebrun, A.; Khalifé, M.; Renaudin, M.; Hamza, A.; Gajny, L. A novel dataset and deep learning-based approach for marker-less motion capture during gait. Gait Posture 2021, 86, 70–76.

- Iskakov, K.; Burkov, E.; Lempitsky, V.; Malkov, Y. Learnable triangulation of human pose. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 7718–7727.

- Davis III, R.B.; Ounpuu, S.; Tyburski, D.; Gage, J.R. A gait analysis data collection and reduction technique. Hum. Mov. Sci. 1991, 10, 575–587.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Motive: Optical Motion Capture Software. Available online: https://optitrack.com/software/motive/ (accessed on 1 November 2021).

- Vicon. Available online: https://www.vicon.com/ (accessed on 1 November 2021).

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850.

- Yang, Y.; Ramanan, D. Articulated human detection with flexible mixtures of parts. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 2878–2890.

- O’Connor, C.M.; Thorpe, S.K.; O’Malley, M.J.; Vaughan, C.L. Automatic detection of gait events using kinematic data. Gait Posture 2007, 25, 469–474.

- Rajagopal, A.; Dembia, C.L.; DeMers, M.S.; Delp, D.D.; Hicks, J.L.; Delp, S.L. Full-body musculoskeletal model for muscle-driven simulation of human gait. IEEE Trans. Biomed. Eng. 2016, 63, 2068–2079.

- Pataky, T.C.; Vanrenterghem, J.; Robinson, M.A. Zero-vs. one-dimensional, parametric vs. non-parametric, and confidence interval vs. hypothesis testing procedures in one-dimensional biomechanical trajectory analysis. J. Biomech. 2015, 48, 1277–1285.

- Reddy, N.D.; Guigues, L.; Pishchulin, L.; Eledath, J.; Narasimhan, S.G. TesseTrack: End-to-End Learnable Multi-Person Articulated 3D Pose Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15190–15200.

- He, Y.; Yan, R.; Fragkiadaki, K.; Yu, S.I. Epipolar transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 7779–7788.

- Li, W.; Liu, H.; Ding, R.; Liu, M.; Wang, P.; Yang, W. Exploiting Temporal Contexts with Strided Transformer for 3D Human Pose Estimation. arXiv 2021, arXiv:2103.14304.

- Shan, W.; Lu, H.; Wang, S.; Zhang, X.; Gao, W. Improving Robustness and Accuracy via Relative Information Encoding in 3D Human Pose Estimation. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20 October 2021; pp. 3446–3454.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2019; pp. 5693–5703.

| Keypoints | PCKh@1 | PCKh@0.75 | PCKh@0.5 |
|---|---|---|---|
| head | 96.3 | 95.8 | 95.2 |
| root | 96.6 | 95.6 | 94.8 |
| nose | 96.1 | 94.3 | 87.2 |
| neck | 96.1 | 89.3 | 77.2 |
| right shoulder | 93.4 | 87.4 | 66.7 |
| right elbow | 89.1 | 79.8 | 70.7 |
| right wrist | 85.5 | 78.6 | 67.8 |
| left shoulder | 95.2 | 88.9 | 72.7 |
| left elbow | 90.6 | 82.2 | 77.1 |
| left wrist | 85.0 | 78.7 | 70.0 |
| belly | 94.2 | 80.7 | 72.0 |
| right hip | 96.0 | 87.6 | 73.2 |
| right knee | 93.4 | 85.5 | 76.2 |
| right foot1 | 91.6 | 79.7 | 61.4 |
| right foot2 | 92.3 | 84.5 | 68.6 |
| right foot3 | 89.2 | 77.3 | 63.0 |
| left hip | 95.8 | 85.1 | 72.1 |
| left knee | 92.4 | 79.9 | 66.7 |
| left foot1 | 90.3 | 75.9 | 52.8 |
| left foot2 | 91.7 | 83.4 | 67.7 |
| left foot3 | 88.7 | 78.4 | 64.4 |
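The PCKh values in the table above follow the usual definition of the metric: a predicted keypoint counts as correct when its distance from the ground truth is at most α times the head-segment length, so PCKh@1 is the most lenient threshold and PCKh@0.5 the strictest. A minimal sketch of that computation, assuming the per-sample head lengths are already available (the function name is illustrative, and the exact head-segment definition used in the evaluation is not restated here):

```python
import numpy as np


def pckh(pred: np.ndarray, gt: np.ndarray, head_len: np.ndarray, alpha: float) -> np.ndarray:
    """Per-keypoint PCKh@alpha, in percent.

    pred, gt: arrays of shape (N, K, D) with predicted and ground-truth keypoints.
    head_len: array of shape (N,) with the head-segment length of each sample.
    Returns an array of shape (K,).
    """
    dist = np.linalg.norm(pred - gt, axis=-1)        # (N, K) Euclidean errors
    correct = dist <= alpha * head_len[:, None]      # threshold scales with head size
    return 100.0 * correct.mean(axis=0)              # fraction correct over samples
```

Since the threshold grows with α, PCKh can only increase from @0.5 to @1 for the same predictions, which matches the ordering of every row in the table.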

| | Stance Phase (%) | Swing Phase (%) | Stride Length (m) | Step Width (m) | Stride Time (s) | Speed (m/s) |
|---|---|---|---|---|---|---|
| Marker | 59.2 ± 2.6 | 40.8 ± 2.6 | 1.35 ± 0.11 | 0.10 ± 0.02 | 1.13 ± 0.02 | 1.31 ± 0.10 |
| Markerless | 59.6 ± 3.1 | 40.4 ± 3.1 | 1.40 ± 0.21 | 0.12 ± 0.02 | 1.11 ± 0.04 | 1.35 ± 0.16 |
| p-values | 0.644 | 0.644 | 0.474 | 0.132 | 0.291 | 0.341 |
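Several of the spatio-temporal parameters in the table above follow from standard definitions once the gait events are known. A minimal sketch for one stride of one foot, assuming heel-strike and toe-off times have already been detected (the gait-cycle detection step is not reproduced here) and assuming x is the walking direction and z the vertical axis; the names are hypothetical:

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class StrideParams:
    stance_pct: float
    swing_pct: float
    stride_length_m: float
    stride_time_s: float
    speed_m_s: float


def stride_parameters(hs_start, toe_off, hs_end, heel_start, heel_end) -> StrideParams:
    """Spatio-temporal parameters of one stride of one foot.

    hs_start, hs_end: consecutive heel-strike times (s) of the same foot.
    toe_off: toe-off time (s) of that foot, between the two heel strikes.
    heel_start, heel_end: 3D heel positions (m) at the two heel strikes,
    with axes assumed to be x = walking direction, y = mediolateral, z = vertical.
    """
    if not (hs_start < toe_off < hs_end):
        raise ValueError("toe-off must fall between the two heel strikes")
    stride_time = hs_end - hs_start
    # Stance lasts from heel strike to toe-off; swing covers the rest of the stride.
    stance = 100.0 * (toe_off - hs_start) / stride_time
    # Stride length measured in the horizontal (x, y) plane, ignoring height.
    d = np.asarray(heel_end, dtype=float)[:2] - np.asarray(heel_start, dtype=float)[:2]
    stride_length = float(np.linalg.norm(d))
    return StrideParams(stance, 100.0 - stance, stride_length, stride_time,
                        stride_length / stride_time)
```

By construction, stance and swing percentages sum to 100%, as in both the marker and markerless rows of the table.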

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moro, M.; Marchesi, G.; Hesse, F.; Odone, F.; Casadio, M. Markerless vs. Marker-Based Gait Analysis: A Proof of Concept Study. Sensors 2022, 22, 2011. https://doi.org/10.3390/s22052011
