Automatic Extrinsic Calibration of 3D LIDAR and Multi-Cameras Based on Graph Optimization

Abstract

1. Introduction

2. The Proposed Method

2.1. Automatic Registration of LIDAR Point Cloud and Image Feature Points

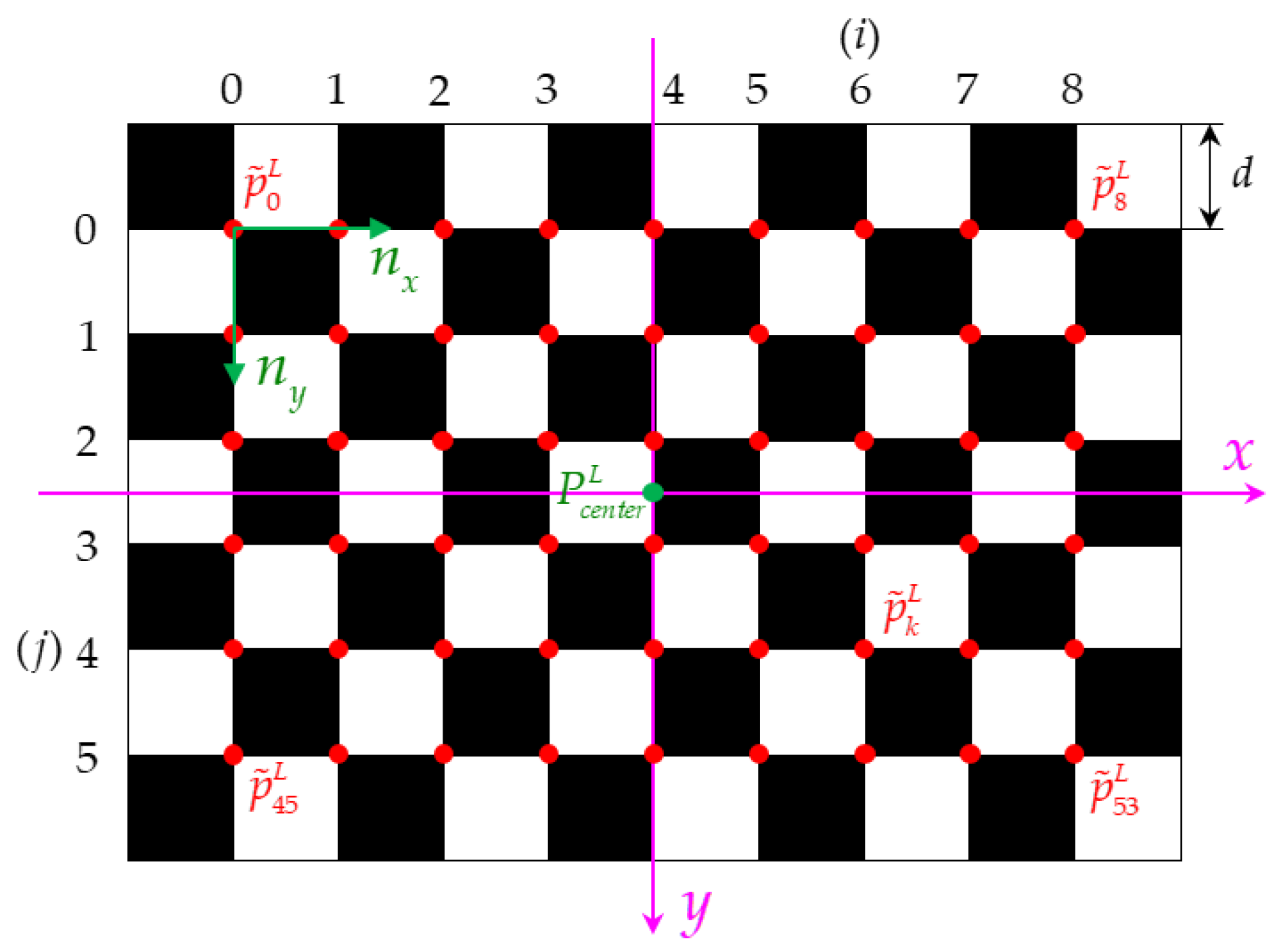

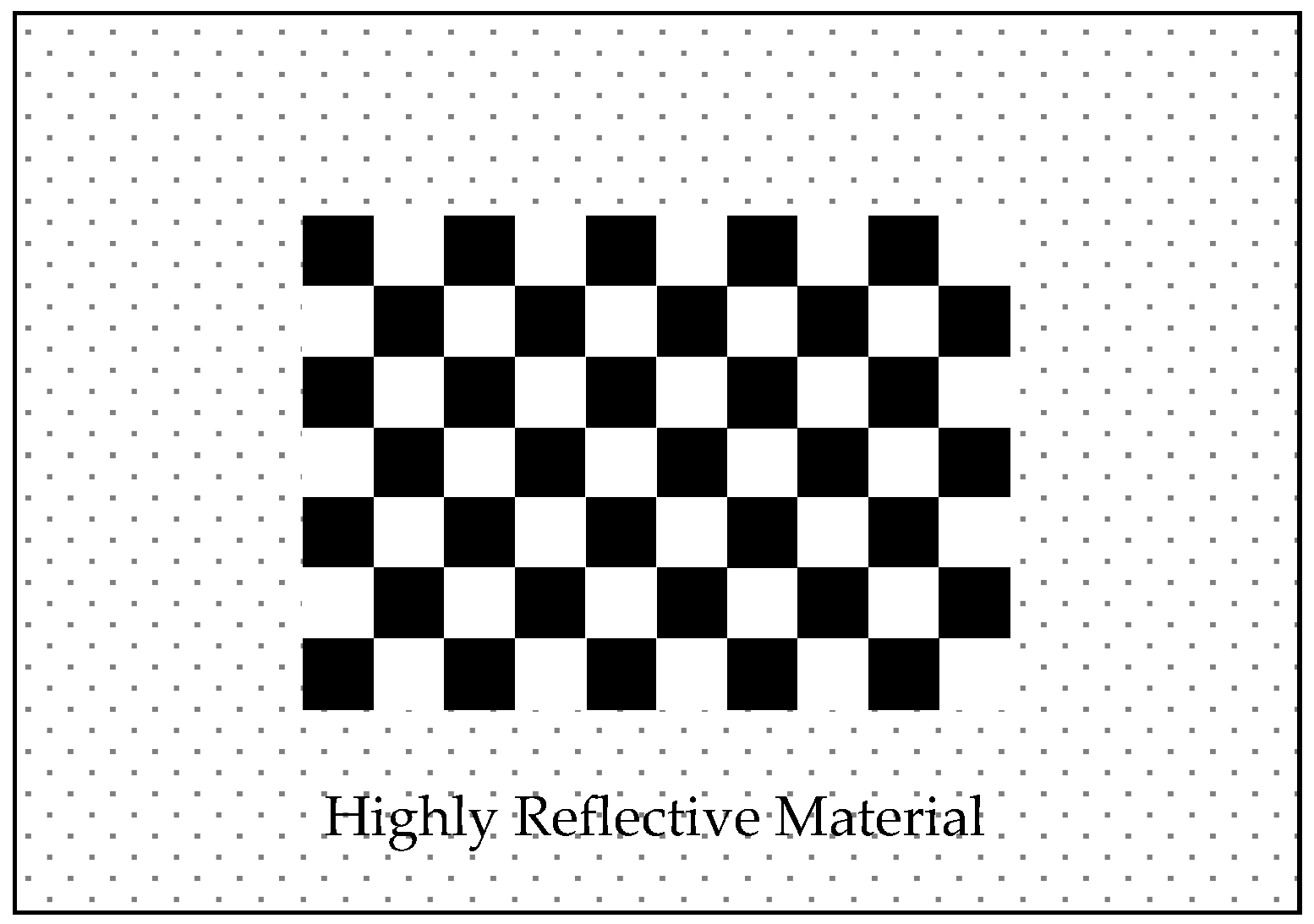

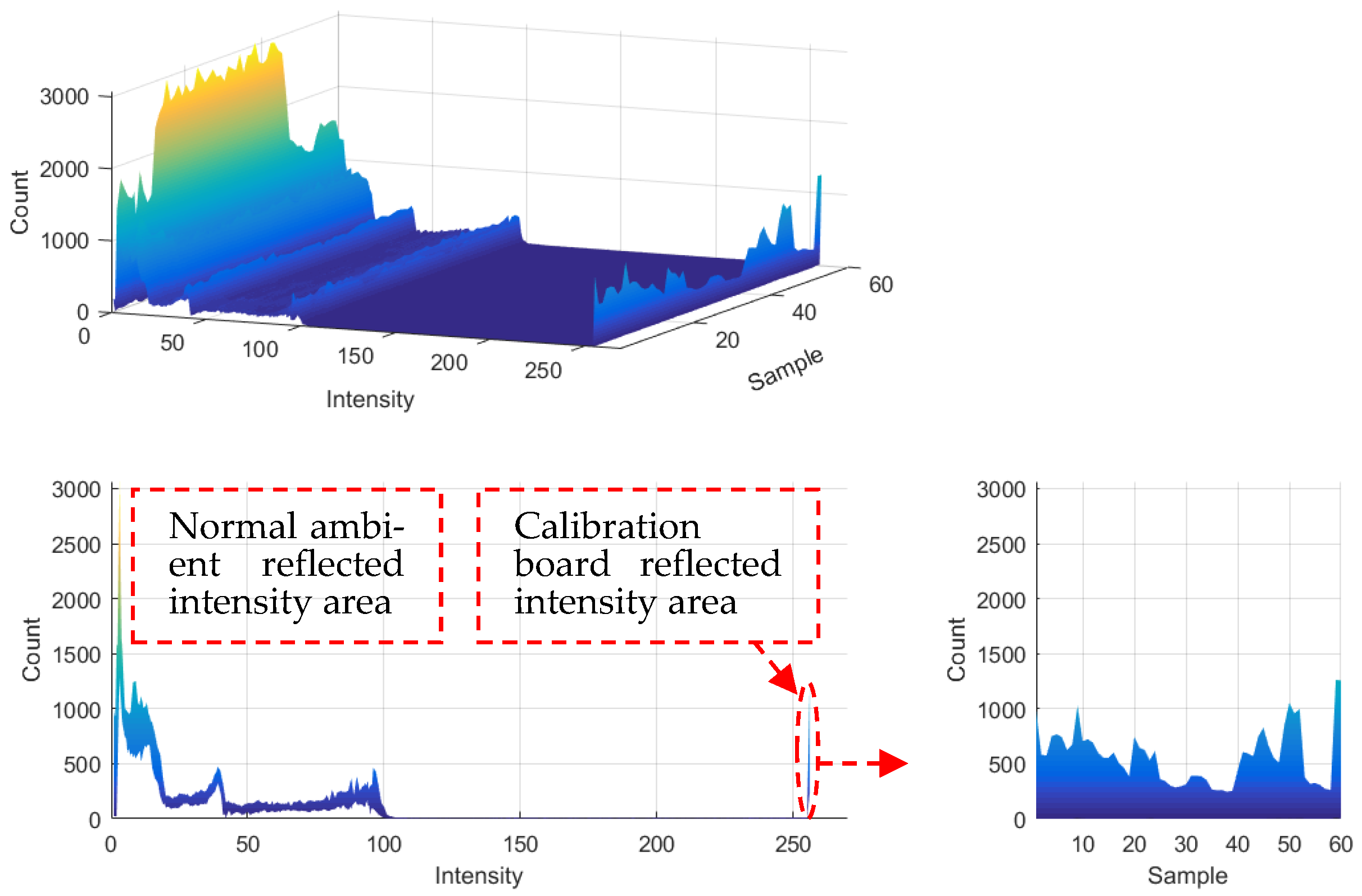

2.1.1. Autonomous Identification Calibration Board

2.1.2. Automatic Registration of Point Cloud and Image

1. Point Cloud Processing
2. Image Feature Point Extraction

2.2. Extrinsic Calibration Algorithm Based on Graph Optimization

2.2.1. Constructing the Least Squares Problem

2.2.2. Graph Optimization Model and Solution

| Algorithm 1 Graph Optimization Calibration of LIDAR-Cameras | |
|---|---|
| Input: source point clouds; source images; camera internal parameters and distortion coefficients. | |
| 1: | // Extract the point cloud of the calibration board: |
| 2: | for each point cloud in the source point clouds do |
| 3: | for each point in the point cloud do |
| 4: | if the point belongs to the calibration board then |
| 5: | add the point to the calibration board point cloud; |
| 6: | end if |
| 7: | end for |
| 8: | end for |
| 9: | // Virtual feature point fitting: |
| 10: | for each calibration board point cloud do |
| 11: | Plane fitting; |
| 12: | Filter left and right edge points; |
| 13: | Project edge points to the fitted plane; |
| 14: | Fit four edge lines; |
| 15: | Calculate the center point and coordinate axes of the calibration board; |
| 16: | Calculate virtual feature corners (Formula (1)); |
| 17: | end for |
| 18: | // Extract image feature points: |
| 19: | for each image in the source images do |
| 20: | Subpixel corner point extraction; |
| 21: | end for |
| 22: | 3D-2D feature point matching; |
| 23: | Use the EPnP algorithm to solve the initial external parameters; |
| 24: | // Graph optimization to solve external parameters: |
| 25: | Set the initial value; |
| 26: | while True do |
| 27: | Calculate the Jacobian matrix and the error (Formulas (18) and (9)); |
| 28: | Calculate the increment equation (Formulas (22) and (23)); |
| 29: | if the increment has not converged then |
| 30: | update the estimate with the increment; |
| 31: | else |
| 32: | return & break; |
| 33: | end if |
| 34: | end while |
| Output: LIDAR and cameras external parameters; optimized feature points. | |
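The iterative part of Algorithm 1 (steps 25 to 34) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: it refines a single camera pose only (the paper also optimizes the feature points), it parameterizes rotation as a rotation vector with an additive update instead of a Lie-algebra perturbation, and it uses a finite-difference Jacobian in place of the analytic one. The function names (`rodrigues`, `residuals`, `numeric_jacobian`, `gauss_newton`) and the synthetic data are hypothetical.

```python
import numpy as np

def rodrigues(rvec):
    """Convert a rotation vector (axis * angle) to a 3x3 rotation matrix."""
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def residuals(pose, pts_lidar, pts_norm):
    """Re-projection error on the normalized image plane, flattened to 1D."""
    R, t = rodrigues(pose[:3]), pose[3:]
    pc = pts_lidar @ R.T + t                      # LIDAR frame -> camera frame
    return (pc[:, :2] / pc[:, 2:3] - pts_norm).ravel()

def numeric_jacobian(pose, pts_lidar, pts_norm, eps=1e-6):
    """Central-difference Jacobian of the residuals w.r.t. the 6 pose parameters."""
    J = np.zeros((2 * len(pts_lidar), 6))
    for i in range(6):
        d = np.zeros(6)
        d[i] = eps
        J[:, i] = (residuals(pose + d, pts_lidar, pts_norm)
                   - residuals(pose - d, pts_lidar, pts_norm)) / (2.0 * eps)
    return J

def gauss_newton(pose0, pts_lidar, pts_norm, tol=1e-10, max_iter=50):
    """Gauss-Newton loop: solve H dx = g, update until the increment is tiny."""
    pose = np.asarray(pose0, dtype=float).copy()
    for _ in range(max_iter):
        e = residuals(pose, pts_lidar, pts_norm)
        J = numeric_jacobian(pose, pts_lidar, pts_norm)
        dx = np.linalg.solve(J.T @ J, -J.T @ e)   # increment equation
        if np.linalg.norm(dx) < tol:              # converged: stop iterating
            break
        pose += dx
    return pose

# Synthetic check: noise-free observations generated from a known pose.
rng = np.random.default_rng(0)
pts_lidar = rng.uniform(-1.0, 1.0, size=(20, 3))
true_pose = np.array([0.10, -0.20, 0.05, 0.10, -0.05, 4.00])
pts_norm = residuals(true_pose, pts_lidar, np.zeros((20, 2))).reshape(-1, 2)
init_pose = true_pose + np.array([0.05, -0.05, 0.05, 0.10, -0.10, 0.20])
est_pose = gauss_newton(init_pose, pts_lidar, pts_norm)
```

In a real pipeline the perturbed `init_pose` would be replaced by the EPnP estimate from step 23, and a bounded `max_iter` guards against the open-ended `while True` loop.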

3. Experiment

3.1. Experimental System Construction

3.2. Data Collection and Processing

4. Results and Discussion

5. Conclusions

(1) The two sensor types produce different kinds of data, which makes it difficult to match corresponding feature points. We use the reflectivity information of the LIDAR to locate the calibration board and build a virtual calibration board, which in turn provides the initial value of the optimization problem. The validity of the initial value is verified by comparison with the true size of the calibration board.

(2) Apart from manually moving the calibration board during data collection, all steps run autonomously, which makes the method considerably easier to use. The joint calibration method based on graph optimization simultaneously optimizes the spatial coordinates of the point cloud and the external parameters between multiple sensors, so it is convenient for calibrating a LIDAR with multiple cameras.

(3) All feature points take part in the optimization, so the method places relatively low accuracy requirements on the initial measured values of the feature points and on the initial values of the external parameters. A quantitative comparison of different calibration methods shows that, because the original point cloud is also optimized, the average re-projection error of our calibration result on the normalized plane was 0.161 mm, lower than that of the calibration methods without this optimization.

(4) Qualitatively, the calibration results still achieve an accurate fusion of data in complex scenarios. The results show that this method achieves more reliable calibration between LIDAR and multiple cameras.
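The board-extraction idea in conclusion (1) can be illustrated with a short Python sketch. It assumes that each LIDAR point is stored as `(x, y, z, reflectivity)`, that the board is more reflective than the background, and that a simple threshold (`min_reflectivity`, a hypothetical parameter) is enough to isolate it. The plane is fitted by ordinary least squares via SVD, which is a stand-in and not necessarily the fitting method used in the paper.

```python
import numpy as np

def extract_board_points(cloud, min_reflectivity):
    """Keep the xyz of points whose reflectivity reaches the threshold."""
    mask = cloud[:, 3] >= min_reflectivity
    return cloud[mask, :3]

def fit_plane(points):
    """Least-squares plane: centroid plus the direction of least variance."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid)
    return centroid, vt[-1]                # vt[-1] is a unit normal vector

def project_to_plane(points, centroid, normal):
    """Move each point along the normal until it lies on the plane."""
    dist = (points - centroid) @ normal
    return points - np.outer(dist, normal)

# Synthetic scene: a reflective 1 m x 1 m board at z = 2 m plus dim clutter.
rng = np.random.default_rng(1)
board = np.column_stack([rng.uniform(-0.5, 0.5, 200),
                         rng.uniform(-0.5, 0.5, 200),
                         2.0 + rng.normal(0.0, 0.01, 200),
                         np.full(200, 200.0)])
background = np.column_stack([rng.uniform(-5.0, 5.0, (300, 3)),
                              np.full(300, 20.0)])
cloud = np.vstack([board, background])

board_xyz = extract_board_points(cloud, min_reflectivity=100.0)
centroid, normal = fit_plane(board_xyz)
flattened = project_to_plane(board_xyz, centroid, normal)
```

A plain least-squares fit is sensitive to outliers, so real data with stray reflective points would likely need a robust estimator on top of this.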

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. DeSouza, G.N.; Kak, A.C. Vision for mobile robot navigation: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 237–267.
2. Basaca-Preciado, L.C.; Sergiyenko, O.Y.; Rodriguez-Quinonez, J.C.; Garcia, X.; Tyrsa, V.V.; Rivas-Lopez, M.; Hernandez-Balbuena, D.; Mercorelli, P.; Podrygalo, M.; Gurko, A.; et al. Optical 3D laser measurement system for navigation of autonomous mobile robot. Opt. Lasers Eng. 2014, 54, 159–169.
3. De Silva, V.; Roche, J.; Kondoz, A. Robust Fusion of LiDAR and Wide-Angle Camera Data for Autonomous Mobile Robots. Sensors 2018, 18, 2730.
4. Manish, R.; Hasheminasab, S.M.; Liu, J.; Koshan, Y.; Mahlberg, J.A.; Lin, Y.-C.; Ravi, R.; Zhou, T.; McGuffey, J.; Wells, T.; et al. Image-Aided LiDAR Mapping Platform and Data Processing Strategy for Stockpile Volume Estimation. Remote Sens. 2022, 14, 231.
5. Tonina, D.; McKean, J.A.; Benjankar, R.M.; Wright, C.W.; Goode, J.R.; Chen, Q.W.; Reeder, W.J.; Carmichael, R.A.; Edmondson, M.R. Mapping river bathymetries: Evaluating topobathymetric LiDAR survey. Earth Surf. Processes Landf. 2019, 44, 507–520.
6. Tuell, G.; Barbor, K.; Wozencraft, J. Overview of the Coastal Zone Mapping and Imaging Lidar (CZMIL): A New Multisensor Airborne Mapping System for the U.S. Army Corps of Engineers; SPIE: Bellingham, WA, USA, 2010; Volume 7695.
7. Bao, Y.; Lin, P.; Li, Y.; Qi, Y.; Wang, Z.; Du, W.; Fan, Q. Parallel Structure from Motion for Sparse Point Cloud Generation in Large-Scale Scenes. Sensors 2021, 21, 3939.
8. Jeong, J.; Yoon, T.S.; Park, J.B. Towards a Meaningful 3D Map Using a 3D Lidar and a Camera. Sensors 2018, 18, 2571.
9. Wang, R. 3D building modeling using images and LiDAR: A review. Int. J. Image Data Fusion 2013, 4, 273–292.
10. Vlaminck, M.; Luong, H.; Goeman, W.; Philips, W. 3D Scene Reconstruction Using Omnidirectional Vision and LiDAR: A Hybrid Approach. Sensors 2016, 16, 1923.
11. Cheng, L.; Tong, L.H.; Chen, Y.M.; Zhang, W.; Shan, J.; Liu, Y.X.; Li, M.C. Integration of LiDAR data and optical multi-view images for 3D reconstruction of building roofs. Opt. Lasers Eng. 2013, 51, 493–502.
12. Moreno, H.; Valero, C.; Bengochea-Guevara, J.M.; Ribeiro, A.; Garrido-Izard, M.; Andujar, D. On-Ground Vineyard Reconstruction Using a LiDAR-Based Automated System. Sensors 2020, 20, 1102.
13. Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.
14. Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.Z.; Langer, D.; Pink, O.; Pratt, V.; et al. Towards fully autonomous driving: Systems and algorithms. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 163–168.
15. Dehzangi, O.; Taherisadr, M.; ChangalVala, R. IMU-Based Gait Recognition Using Convolutional Neural Networks and Multi-Sensor Fusion. Sensors 2017, 17, 2735.
16. Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-Task Multi-Sensor Fusion for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7337–7345.
17. Wan, G.; Yang, X.; Cai, R.; Li, H.; Zhou, Y.; Wang, H.; Song, S. Robust and Precise Vehicle Localization Based on Multi-Sensor Fusion in Diverse City Scenes. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4670–4677.
18. Dimitrievski, M.; Veelaert, P.; Philips, W. Behavioral Pedestrian Tracking Using a Camera and LiDAR Sensors on a Moving Vehicle. Sensors 2019, 19, 391.
19. Zhen, W.K.; Hu, Y.Y.; Liu, J.F.; Scherer, S. A Joint Optimization Approach of LiDAR-Camera Fusion for Accurate Dense 3-D Reconstructions. IEEE Robot. Autom. Lett. 2019, 4, 3585–3592.
20. Debeunne, C.; Vivet, D. A Review of Visual-LiDAR Fusion based Simultaneous Localization and Mapping. Sensors 2020, 20, 2068.
21. Shaukat, A.; Blacker, P.C.; Spiteri, C.; Gao, Y. Towards Camera-LIDAR Fusion-Based Terrain Modelling for Planetary Surfaces: Review and Analysis. Sensors 2016, 16, 1952.
22. Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer Vision for Autonomous Vehicles: Problems, Datasets and State-of-the-Art. Found. Trends® Comput. Graph. Vis. 2017, 12, 1–308.
23. Banerjee, K.; Notz, D.; Windelen, J.; Gavarraju, S.; He, M. Online Camera LiDAR Fusion and Object Detection on Hybrid Data for Autonomous Driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1632–1638.
24. Vivet, D.; Debord, A.; Pagès, G. PAVO: A Parallax based Bi-Monocular VO Approach for Autonomous Navigation in Various Environments. In Proceedings of the DISP Conference, St Hugh College, Oxford, UK, 29–30 April 2019.
25. Kim, S.; Kim, H.; Yoo, W.; Huh, K. Sensor Fusion Algorithm Design in Detecting Vehicles Using Laser Scanner and Stereo Vision. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1072–1084.
26. Huang, P.D.; Cheng, M.; Chen, Y.P.; Luo, H.; Wang, C.; Li, J. Traffic Sign Occlusion Detection Using Mobile Laser Scanning Point Clouds. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2364–2376.
27. Costa, F.A.L.; Mitishita, E.A.; Martins, M. The Influence of Sub-Block Position on Performing Integrated Sensor Orientation Using In Situ Camera Calibration and Lidar Control Points. Remote Sens. 2018, 10, 260.
28. Heikkila, J.; Silvén, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision & Pattern Recognition, San Juan, PR, USA, 17–19 June 1997.
29. Bouguet, J.Y. Camera Calibration Toolbox for Matlab, 2013. Available online: www.vision.caltech.edu/bouguetj (accessed on 5 January 2022).
30. Qilong, Z.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), Sendai, Japan, 28 September–2 October 2004; Volume 2303, pp. 2301–2306.
31. Unnikrishnan, R.; Hebert, M. Fast Extrinsic Calibration of a Laser Rangefinder to a Camera; Tech. Rep. CMU-RI-TR-05-09; Robotics Institute: Pittsburgh, PA, USA, 2005.
32. Kassir, A.; Peynot, T. Reliable automatic camera-laser calibration. In Proceedings of the 2010 Australasian Conference on Robotics & Automation; Australian Robotics and Automation Association (ARAA): Melbourne, Australia, 2010.
33. Geiger, A.; Moosmann, F.; Car, O.; Schuster, B. Automatic Camera and Range Sensor Calibration Using a Single Shot. In Proceedings of the IEEE International Conference on Robotics & Automation, Saint Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.
34. Kim, E.S.; Park, S.Y. Extrinsic Calibration between Camera and LiDAR Sensors by Matching Multiple 3D Planes. Sensors 2019, 20, 52.
35. Heng, L.; Furgale, P.; Pollefeys, M. Leveraging Image-based Localization for Infrastructure-based Calibration of a Multi-camera Rig. J. Field Robot. 2015, 32, 775–802.
36. Bai, Z.; Jiang, G.; Xu, A. LiDAR-Camera Calibration Using Line Correspondences. Sensors 2020, 20, 6319.
37. Schneider, N.; Piewak, F.; Stiller, C.; Franke, U. RegNet: Multimodal Sensor Registration Using Deep Neural Networks. IEEE Int. Veh. Sym. 2017, 1803–1810.
38. Iyer, G.; Ram, R.K.; Murthy, J.K.; Krishna, K.M. CalibNet: Geometrically Supervised Extrinsic Calibration using 3D Spatial Transformer Networks. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019.
39. Lv, X.; Wang, S.; Ye, D. CFNet: LiDAR-Camera Registration Using Calibration Flow Network. Sensors 2021, 21, 8112.
40. Lai, J.C.; Wang, C.Y.; Yan, W.; Li, Z.H. Research on the ranging statistical distribution of laser radar with a constant fraction discriminator. IET Optoelectron. 2018, 12, 114–117.
41. Dhall, A.; Chelani, K.; Radhakrishnan, V.; Krishna, K.M. LiDAR-Camera Calibration using 3D-3D Point correspondences. arXiv 2017, arXiv:1705.09785.
42. Zhang, Z.Y. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
43. Fischler, M.A.; Bolles, R.C. Random Sample Consensus—A Paradigm for Model-Fitting with Applications to Image-Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.
44. Lucchese, L.; Mitra, S.K. Using saddle points for subpixel feature detection in camera calibration targets. In Proceedings of the Conference on Circuits & Systems, Chengdu, China, 29 June–1 July 2002.
45. Bradski, G. The OpenCV library. Dr. Dobb J. Softw. Tools Prof. Program. 2000, 25, 120–123.
46. Bayro-Corrochano, E.; Ortegon-Aguilar, J. Lie algebra approach for tracking and 3D motion estimation using monocular vision. Image Vis. Comput. 2007, 25, 907–921.
47. Bradski, G.; Kaehler, A. Learning OpenCV: Computer vision with the OpenCV library, 1st ed.; Loukides, M., Ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008; p. 376.
48. Carlone, L. A convergence analysis for pose graph optimization via Gauss-Newton methods. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 965–972.
49. Ranganathan, A. The Levenberg-Marquardt Algorithm. Tutorial LM Algorithm 2004, 11, 101–110.
50. Xiang, G.; Tao, Z.; Yi, L. Fourteen Lectures on Visual SLAM: From Theory to Practice; Publishing House of Electronics Industry: Beijing, China, 2017; p. 112.
51. Kummerle, R.; Grisetti, G.; Strasdat, H.; Konolige, K.; Burgard, W. G2O: A general framework for graph optimization. In Proceedings of the IEEE International Conference on Robotics & Automation, Shanghai, China, 9–13 May 2011.
52. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment—A Modern Synthesis. In Proceedings of the Vision Algorithms: Theory and Practice, Corfu, Greece, 20 September 1999; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372.
53. Lourakis, M.I.A.; Argyros, A.A. SBA: A Software Package for Generic Sparse Bundle Adjustment. ACM Trans. Math. Softw. (TOMS) 2009, 36, 2.
54. Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An Accurate O(n) Solution to the PnP Problem. Int. J. Comput. Vis. 2009, 81, 155–166.
55. Garrido-Jurado, S.; Munoz-Salinas, R.; Madrid-Cuevas, F.J.; Medina-Carnicer, R. Generation of fiducial marker dictionaries using Mixed Integer Linear Programming. Pattern Recognit. 2016, 51, 481–491.
56. Romero-Ramirez, F.J.; Munoz-Salinas, R.; Medina-Carnicer, R. Speeded up detection of squared fiducial markers. Image Vis. Comput. 2018, 76, 38–47.

| Parameter | Value |
|---|---|
| Number of channels | 16 |
| Effective measuring distance | 40 cm to 200 m |
| Precision | ±3 cm |
| Field of view | Horizontal 360°, vertical −25° to +15° |
| Angular resolution | Horizontal 0.1° (5 Hz) to 0.4° (20 Hz), vertical 0.33° |
| Rotating speed | 300/600/1200 rpm (5/10/20 Hz) |
| Wavelength | 905 nm |
| Laser emission angle (full angle) | Horizontal 7.4 mrad, vertical 0.7 mrad |
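The horizontal angular resolution and the rotation rate in the table are linked: a faster spin gives coarser horizontal sampling. A quick Python check, assuming every channel fires once per horizontal step (an assumption not stated in the table), compares the two listed operating points. The function name `points_per_second` is illustrative only.

```python
CHANNELS = 16

# (rotation rate in Hz, horizontal angular resolution in degrees), from the table
operating_points = [(5, 0.1), (20, 0.4)]

def points_per_second(rate_hz, h_res_deg, channels=CHANNELS):
    """Points per second if each channel fires once per horizontal step."""
    steps_per_rev = round(360.0 / h_res_deg)   # horizontal steps in one turn
    return steps_per_rev * channels * rate_hz

rates = {hz: points_per_second(hz, res) for hz, res in operating_points}
for hz, pps in rates.items():
    print(f"{hz} Hz: {pps} points/s")
```

Under this assumption both operating points give 288,000 points per second, so the rotation rate trades horizontal density per sweep against update frequency while leaving the total point rate unchanged.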

| Parameter | MER-132-43GC-P | ZED 2-L |
|---|---|---|
| Resolution | 1292 × 964 | 1920 × 1080 |
| Pixel size | 3.75 μm × 3.75 μm | 2 μm × 2 μm |
| Focal length, x (pixels) | 1097.9 | 1057.47 |
| Focal length, y (pixels) | 1095.4 | 1056.79 |
| Principal point, x (pixels) | 652.383 | 958.87 |
| Principal point, y (pixels) | 497.9676 | 545.144 |
| Distortion coefficient | (−0.0975, 0.0879) | (−0.0437, 0.0122) |
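To show how these internal parameters are used, the following Python sketch maps a 3D point in the camera frame to pixel coordinates with the MER-132-43GC-P values from the table. It interprets the four intrinsic values as focal lengths and principal point in pixels, and the two distortion coefficients as the first two radial terms of the common polynomial model. Both readings are assumptions, and the function names are hypothetical.

```python
# MER-132-43GC-P values from the table. Interpreting the four intrinsic values
# as fx, fy, cx, cy (pixels) and the distortion pair as radial k1, k2 is an
# assumption made for this sketch.
FX, FY, CX, CY = 1097.9, 1095.4, 652.383, 497.9676
K1, K2 = -0.0975, 0.0879

def normalized_to_pixel(x, y):
    """Apply two-term radial distortion, then the pinhole intrinsics."""
    r2 = x * x + y * y
    scale = 1.0 + K1 * r2 + K2 * r2 * r2
    return FX * x * scale + CX, FY * y * scale + CY

def camera_point_to_pixel(p_cam):
    """Project a 3D point (camera frame, z > 0) to pixel coordinates."""
    x, y, z = p_cam
    return normalized_to_pixel(x / z, y / z)   # divide by depth first

# A point on the optical axis lands on the principal point.
u0, v0 = camera_point_to_pixel((0.0, 0.0, 2.0))
# An off-axis point lands to the right of and below the principal point.
u1, v1 = camera_point_to_pixel((0.2, 0.1, 2.0))
```

The intermediate `(x / z, y / z)` coordinates are the normalized plane on which the paper reports its re-projection error.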

| Methods | Translation | Rotation (rad) | Re-Projection Error (mm) |
|---|---|---|---|
| CAD | (−3, −93, −95) | (1.5708, 0, 0) | 8.874 |
| EPnP [54] | (−0.674, −93.451, −90.725) | (1.57666, 0.00989, −0.01549) | 1.748 |
| 3D-3D [41] | (−2.112, −92.827, −93.998) | (1.57673, −0.00349, −0.01745) | 1.044 |
| Proposed method | (−2.092, −93.018, −94.249) | (1.57666, −0.00343, −0.01650) | 0.161 |

| Methods | Translation | Rotation (rad) | Re-Projection Error (mm) |
|---|---|---|---|
| CAD | (59.5, 79.5, −5) | (1.5708, 0, 0) | 27.68 |
| EPnP [54] | (59.433, 79.623, −2.779) | (1.59471, −0.0154, 0.00543) | 1.572 |
| 3D-3D [41] | (58.801, 79.402, −5.330) | (1.59470, −0.00601, 0.01286) | 1.227 |
| Proposed method | (57.799, 79.398, −6.198) | (1.59471, −0.0061, 0.01283) | 0.293 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Ou, J.; Huang, P.; Zhou, J.; Zhao, Y.; Lin, L. Automatic Extrinsic Calibration of 3D LIDAR and Multi-Cameras Based on Graph Optimization. Sensors 2022, 22, 2221. https://doi.org/10.3390/s22062221
