DF-SSmVEP: Dual Frequency Aggregated Steady-State Motion Visual Evoked Potential Design with Bifold Canonical Correlation Analysis

Abstract

:1. Introduction

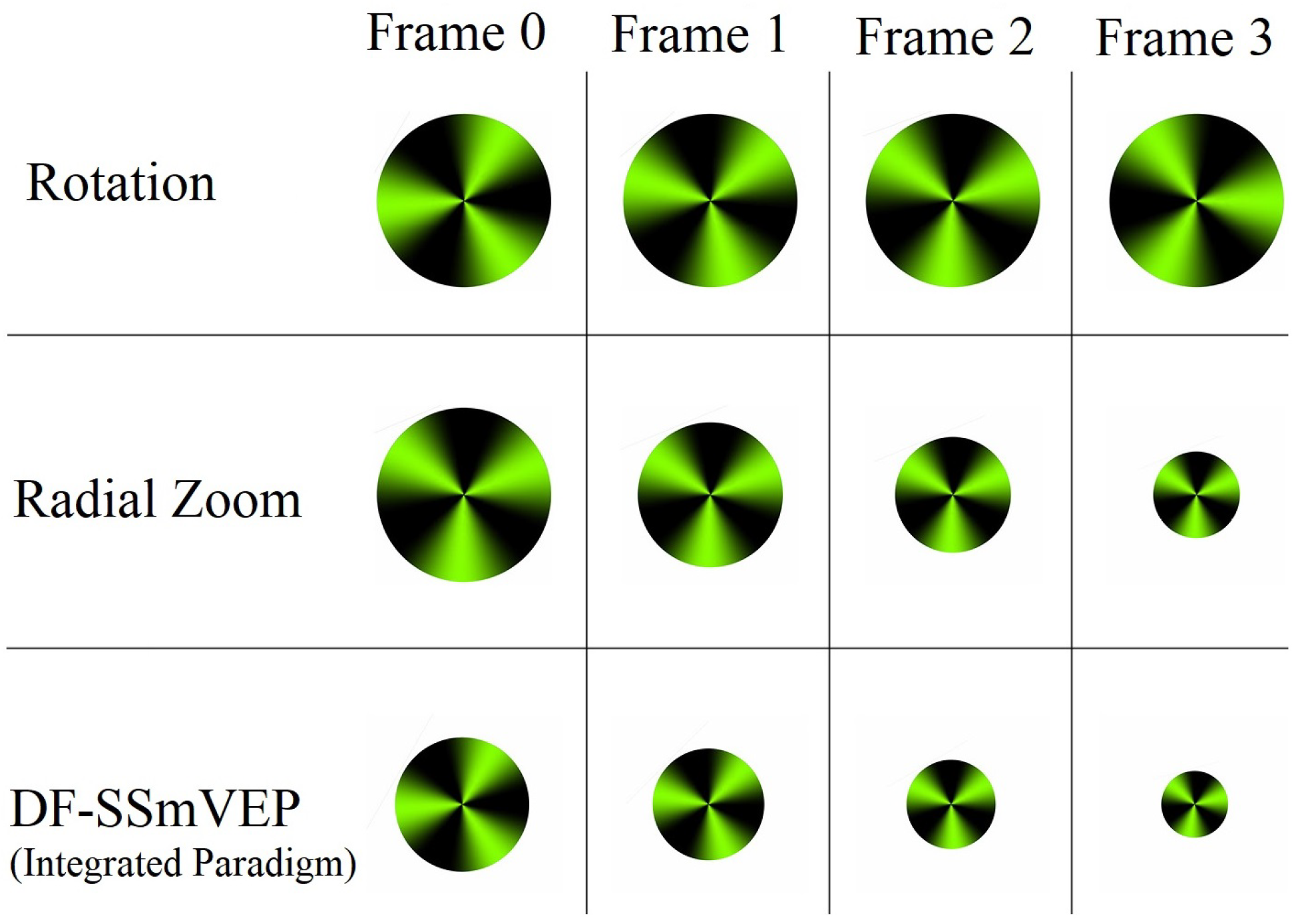

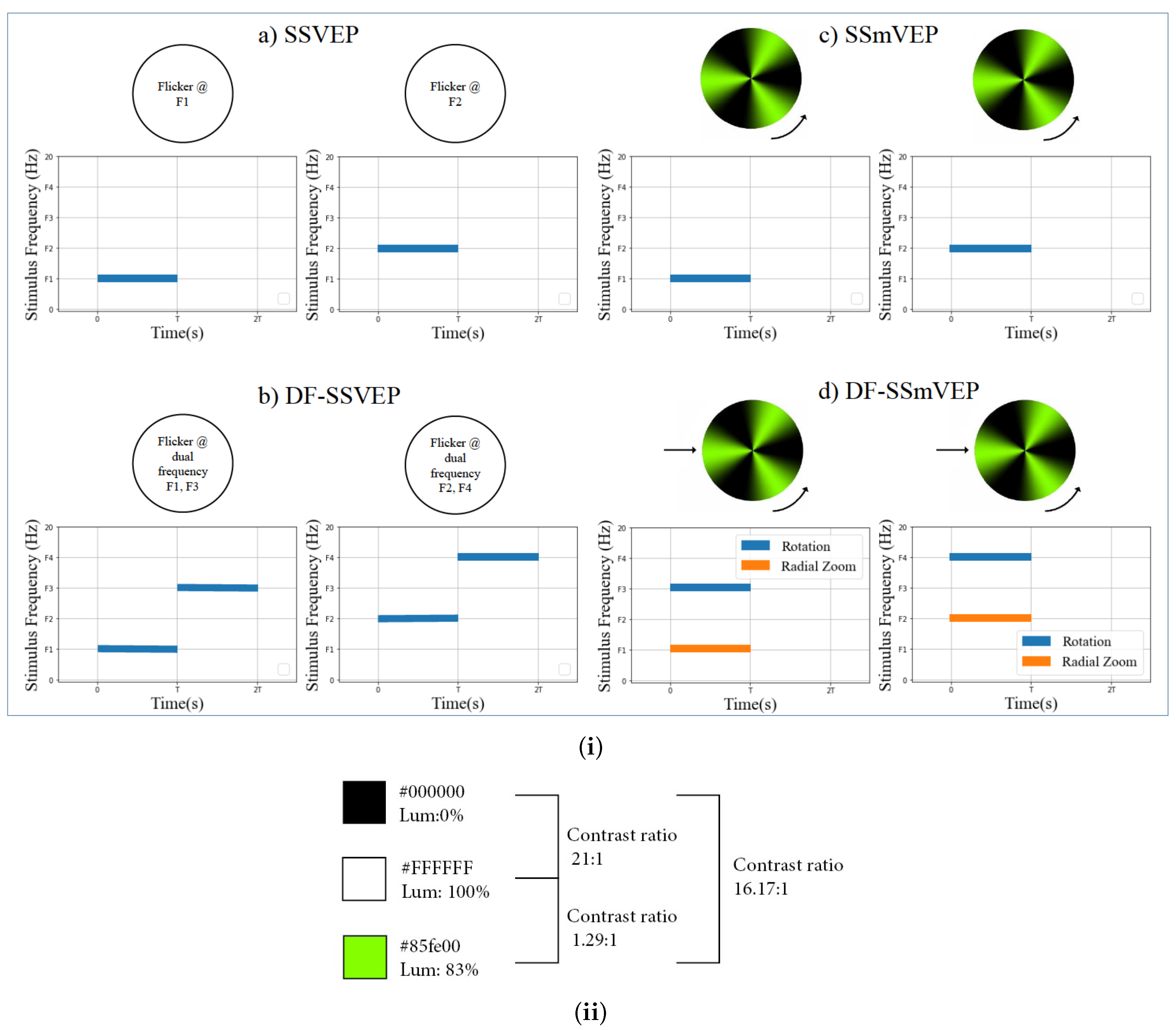

2. The Proposed DF-SSmVEP

Proposed BCCA Paradigm

3. Experimental Results

3.1. Experimental Setup

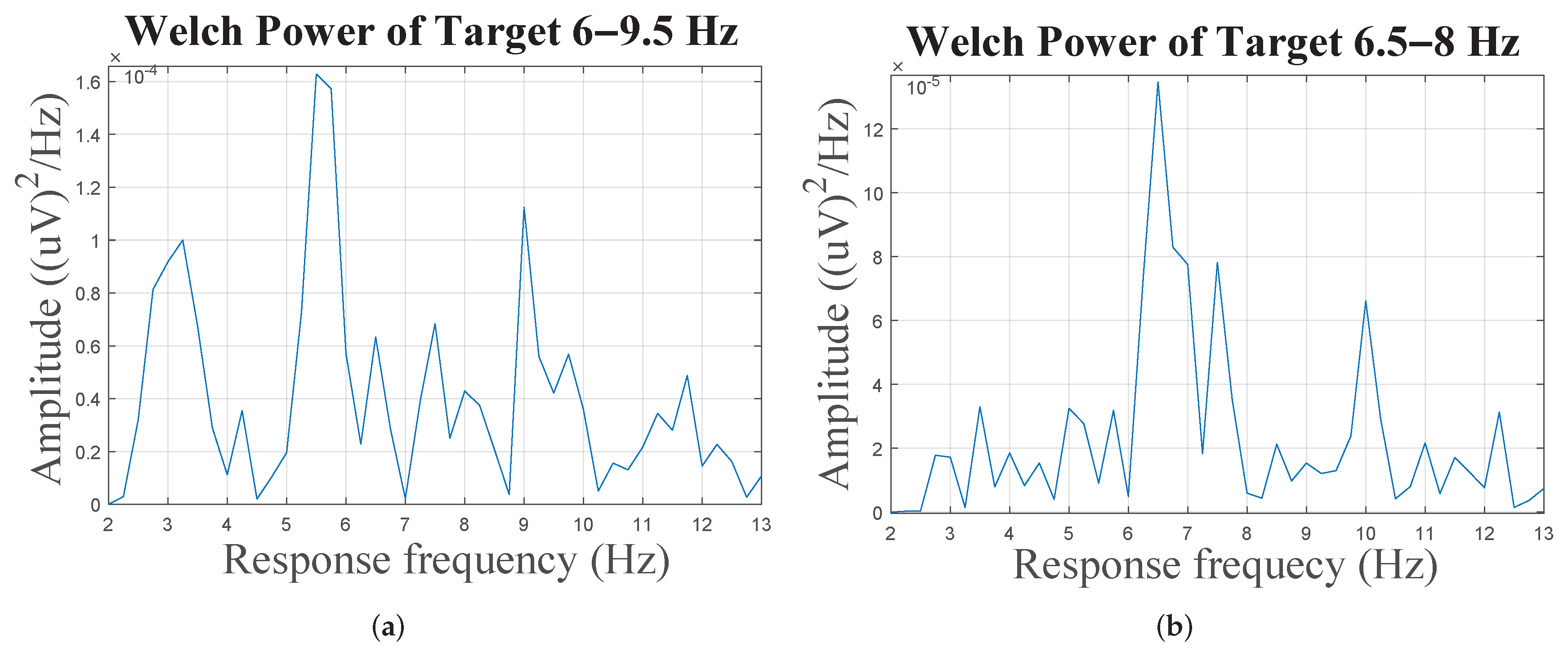

3.2. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, X.; Xu, X.; Liu, A.; Lee, S.; Chen, X.; Zhang, X.; McKeown, M.J.; Wang, Z.J. Removal of Muscle Artifacts From the EEG: A Review and Recommendations. IEEE Sens. J. 2019, 19, 5353–5368. [Google Scholar]

- Shahtalebi, S.; Mohammadi, A. Bayesian Optimized Spectral Filters Coupled with Ternary ECOC for Single-Trial EEG Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2249–2259. [Google Scholar]

- Samanta, K.; Chatterjee, S.; Bose, R. Cross-Subject Motor Imagery Tasks EEG Signal Classification Employing Multiplex Weighted Visibility Graph and Deep Feature Extraction. IEEE Sens. Lett. 2020, 4, 1–4. [Google Scholar]

- Dagois, E.; Khalaf, A.; Sejdic, E.; Akcakaya, M. Transfer Learning for a Multimodal Hybrid EEG-fTCD Brain–Computer Interface. IEEE Sens. Lett. 2019, 3, 1–4. [Google Scholar]

- Zhang, Y.; Yin, E.; Li, F.; Zhang, Y.; Guo, D.; Yao, D.; Xu, P. Hierarchical Feature Fusion Framework for Frequency Recognition in SSVEP-based BCIs. Neural Netw. 2019, 119, 1–9. [Google Scholar]

- Kubacki, A. Use of Force Feedback Device in a Hybrid Brain-Computer Interface Based on SSVEP, EOG and Eye Tracking for Sorting Items. Sensors 2021, 21, 7244. [Google Scholar]

- Ikeda, A.; Washizawa, Y. Steady-State Visual Evoked Potential Classification Using Complex Valued Convolutional Neural Networks. Sensors 2021, 21, 5309. [Google Scholar]

- De la Cruz-Guevara, D.R.; Alfonso-Morales, W. Caicedo-Bravo, E. Solving the SSVEP Paradigm Using the Nonlinear Canonical Correlation Analysis Approach. Sensors 2021, 21, 5308. [Google Scholar]

- Chen, Y.-J.; Chen, P.-C.; Chen, S.-C.; Wu, C.-M. Denoising Autoencoder-Based Feature Extraction to Robust SSVEP-Based BCIs. Sensors 2021, 21, 5019. [Google Scholar]

- Zhao, X.; Zhao, D.; Wang, X.; Hou, X. A SSVEP Stimuli Encoding Method Using Trinary Frequency-shift Keying Encoded SSVEP (TFSK-SSVEP). Front. Hum. Neurosci. 2017, 11, 278. [Google Scholar]

- Wei, Q.; Feng, S.; Lu, Z. Stimulus Specificity of Brain-Computer Interfaces based on Code Modulation Visual Evoked Potentials. PLoS ONE 2016, 11, e0156416. [Google Scholar]

- Nakanishi, M.; Wang, Y.; Wang, Y.T.; Mitsukura, Y.; Jung, T.-P. A High-Speed Brain Speller using Steady-State Visual Evoked Potentials. Int. J. Neural Syst. 2014, 24, 1450019. [Google Scholar]

- Zhang, Y.; Xu, P.; Liu, T.; Hu, J.; Zhang, R.; Yao, D. Multiple Frequencies Sequential Coding for SSVEP-based Brain-Computer Interface. PLoS ONE 2012, 7, e29519. [Google Scholar]

- Kadioglu, B.; Yildiz, I.; Closas, P.; Fried-Oken, M.B.; Erdogmus, D. Robust Fusion of c-VEP and Gaze. IEEE Sens. Lett. 2019, 3, 1–4. [Google Scholar]

- Zhang, X.; Hou, W.; Wu, W.; Chen, L.; Jiang, N. Enhancing Detection of SSMVEP Induced by Action Observation Stimuli Based on Task-Related Component Analysis. Sensors 2021, 21, 5269. [Google Scholar]

- Beveridge, R.; Wilson, S.; Callaghan, M.; Coyle, D. Neurogaming with Motion-onset Visual Evoked Potentials (MVEPs): Adults versus Teenagers. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 572–581. [Google Scholar]

- Yan, W.; Xu, G.; Chen, L.; Zheng, X. Steady-state Motion Visual Evoked Potential (SSmVEP) Enhancement Method based on Time-Frequency Image Fusion. Comput. Intell. Neurosci. 2019, 2019, 9439407. [Google Scholar]

- Han, C.; Xu, G.; Xie, J.; Chen, C.; Zhang, S. Highly Interactive Brain-Computer Interface based on Flicker-Free Steady-State Motion Visual Evoked Potential. Sci. Rep. 2018, 8, 5835. [Google Scholar]

- Chai, X.; Zhang, Z.; Guan, K.; Liu, G.; Niu, H. A Radial Zoom Motion-based Paradigm for Steady State Motion Visual Evoked Potentials. Front. Hum. Neurosci. 2019, 13, 127. [Google Scholar]

- Yan, W.; Xu, G.; Xie, J.; Li, M.; Dan, Z. Four Novel Motion Paradigms based on Steady-state Motion Visual Evoked Potential. IEEE Trans. Biomed. Eng. 2018, 65, 1696–1704. [Google Scholar]

- Nakanishi, M.; Wang, Y.; Mitsukura, Y.; Jung, T.P. An Approximation Approach for Rendering Visual Flickers in SSVEP-based BCI using Monitor Refresh Rate. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 2176–2179. [Google Scholar]

- Wang, Y.; Jung, T. Visual stimulus design for high-rate SSVEP BCI. Electron. Lett. 2010, 46, 1057–1058. [Google Scholar]

- Available online: https://github.com/raykakarimi/DF-SSmVEP-videos (accessed on 20 December 2021).

- Xie, J.; Xu, G.; Wang, J.; Zhang, F.; Zhang, Y. Steady-state Motion Visual Evoked Potentials Produced by Oscillating Newton’s Rings: Implications for Brain-computer Interfaces. PLoS ONE 2012, 7, e39707. [Google Scholar]

- Zhang, Y.; Zhou, G.; Jin, J.; Wang, X.; Cichocki, A. Frequency recognition in SSVEP-based BCI using multiset canonical correlation analysis. Int. J. Neural Syst. 2014, 24, 1450013. [Google Scholar]

- Carvalho, S.N.; Costa, T.B.S.; Uribe, L.F.S.; Soriano, D.C.; Yared, G.F.G.; Coradine, L.C.; Attux, R. Comparative analysis of strategies for feature extraction and classification in SSVEP BCIs. Biomed. Signal Process. Control 2015, 21, 34–42. [Google Scholar]

- Yan, W.; Xu, G.; Li, M.; Xie, J.; Han, C.; Zhang, S.; Luo, A.; Chen, C. Steady-state motion visual evoked potential (SSMVEP) based on equal luminance colored enhancement. PLoS ONE 2017, 12, e0169642. [Google Scholar]

- Karimi, R.; Mohammadi, A.; Rosero, L.; Asif, A. Deep Video Canonical Correlation Analysis for Steady State motion Visual Evoked Potential Feature Extraction. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–21 January 2021; pp. 1130–1134. [Google Scholar]

| Paradigms | Radial Zoom | Rotation | DF-SSmVEP | ||||

|---|---|---|---|---|---|---|---|

| Filters | |||||||

| ACC | ITR | ACC | ITR | ACC | ITR | ||

| MCF + CCA | 68.12 | 18.35 | 77.5 | 20.52 | 81.88 | 21.89 | |

| T-F Image Fusion + CCA | 59.3 | 13.39 | 68.75 | 13.73 | 63.25 | 13.93 | |

| CCA Fusion | 63.5 | 17.24 | 76.17 | 18.05 | 84.38 | 23.73 | |

| BCCA Fusion | - | - | - | - | 92.5 | 30.7 | |

| PI | Specificity | Sensitivity | Precision | Accuracy | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Classes | |||||||||||||

| R | RZ | DF | R | RZ | DF | R | RZ | DF | R | RZ | DF | ||

| 9 Hz or | 0.912 | 0.934 | 0.993 | 0.900 | 0.600 | 0.875 | 0.735 | 0.710 | 0.977 | 0.910 | 0.867 | 0.970 | |

| (9 Hz, 7.5 Hz) | ±0.052 | ±0.037 | ±0.013 | ±0.098 | ±0.226 | ±0.083 | ±0.137 | ±0.061 | ±0.047 | ±0.054 | ±0.020 | ±0.01 | |

| 6 Hz or | 0.940 | 0.893 | 0.984 | 0.725 | 0.875 | 0.962 | 0.757 | 0.702 | 0.947 | 0.897 | 0.890 | 0.980 | |

| (6 Hz, 9.5 Hz) | ±0.023 | ±0.062 | ±0.027 | ±0.098 | ±0 | ±0.060 | ±0.091 | ±0.167 | ±0.870 | ±0.027 | ±0.050 | ±0.023 | |

| 5 Hz or | 0.946 | 0.875 | 0.940 | 0.625 | 0.937 | 0.975 | 0.737 | 0.663 | 0.829 | 0.882 | 0.8875 | 0.952 | |

| (5 Hz, 8.5 Hz) | ±0.025 | ±0.044 | ±0.033 | ±0.220 | ±0.106 | ±0.053 | ±0.118 | ±0.068 | ±0.100 | ±0.054 | ±0.017 | ±0.029 | |

| 7 Hz or | 0.981 | 0.993 | 0.993 | 0.787 | 0.312 | 1 | 0.937 | 0.933 | 0.977 | 0.942 | 0.857 | 0.995 | |

| (7 Hz, 5.5 Hz) | ±0.030 | ±0.013 | ±0.013 | ±0.177 | ±0.135 | ±0 | ±0.100 | ±0.140 | ±0.047 | ±0.026 | ±0.031 | ±0.011 | |

| 8 Hz or | 0.940 | 0.906 | 0.987 | 0.850 | 0.687 | 0.812 | 0.809 | 0.649 | 0.939 | 0.922 | 0.862 | 0.952 | |

| (8 Hz, 6.5 Hz) | ±0.047 | ±0.062 | ±0.016 | ±0.098 | ±0.244 | ±0.135 | ±0.131 | ±0.221 | ±0.079 | ±0.027 | ±0.095 | ±0.036 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Karimi, R.; Mohammadi, A.; Asif, A.; Benali, H. DF-SSmVEP: Dual Frequency Aggregated Steady-State Motion Visual Evoked Potential Design with Bifold Canonical Correlation Analysis. Sensors 2022, 22, 2568. https://doi.org/10.3390/s22072568

Karimi R, Mohammadi A, Asif A, Benali H. DF-SSmVEP: Dual Frequency Aggregated Steady-State Motion Visual Evoked Potential Design with Bifold Canonical Correlation Analysis. Sensors. 2022; 22(7):2568. https://doi.org/10.3390/s22072568

Chicago/Turabian StyleKarimi, Raika, Arash Mohammadi, Amir Asif, and Habib Benali. 2022. "DF-SSmVEP: Dual Frequency Aggregated Steady-State Motion Visual Evoked Potential Design with Bifold Canonical Correlation Analysis" Sensors 22, no. 7: 2568. https://doi.org/10.3390/s22072568