Robust and High-Performance Machine Vision System for Automatic Quality Inspection in Assembly Processes

Abstract

1. Introduction

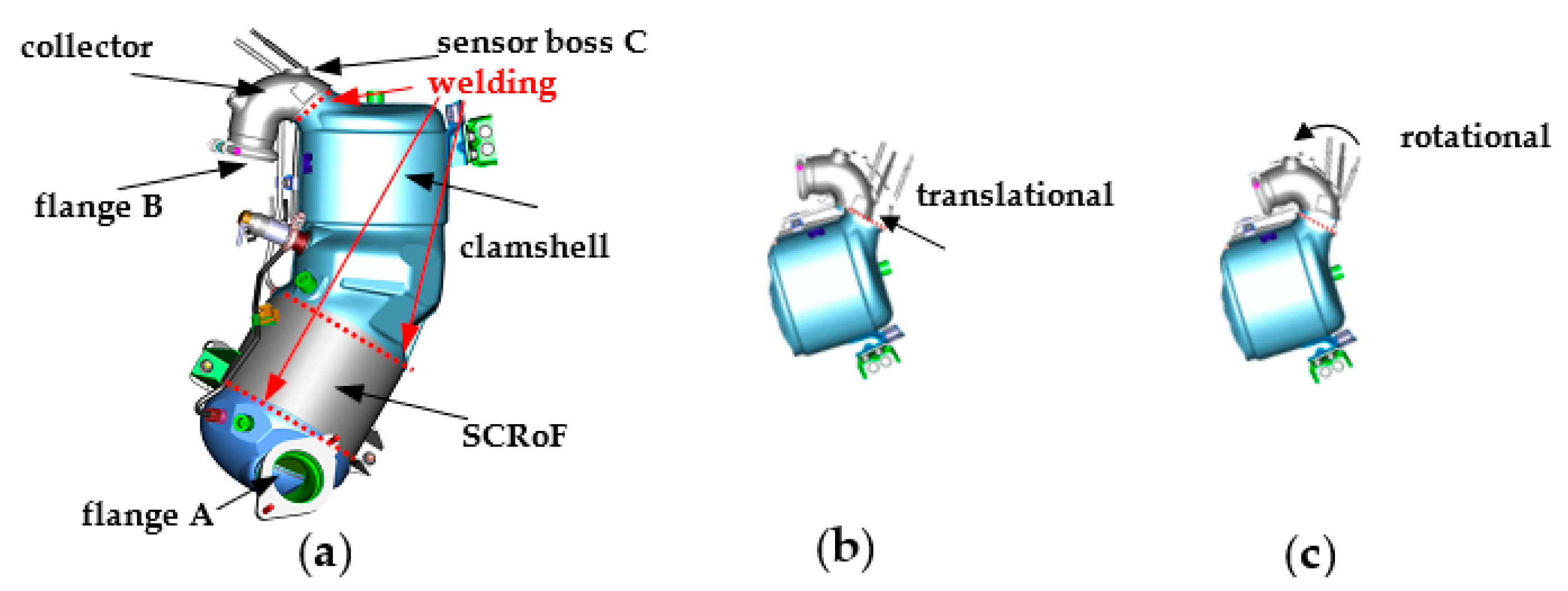

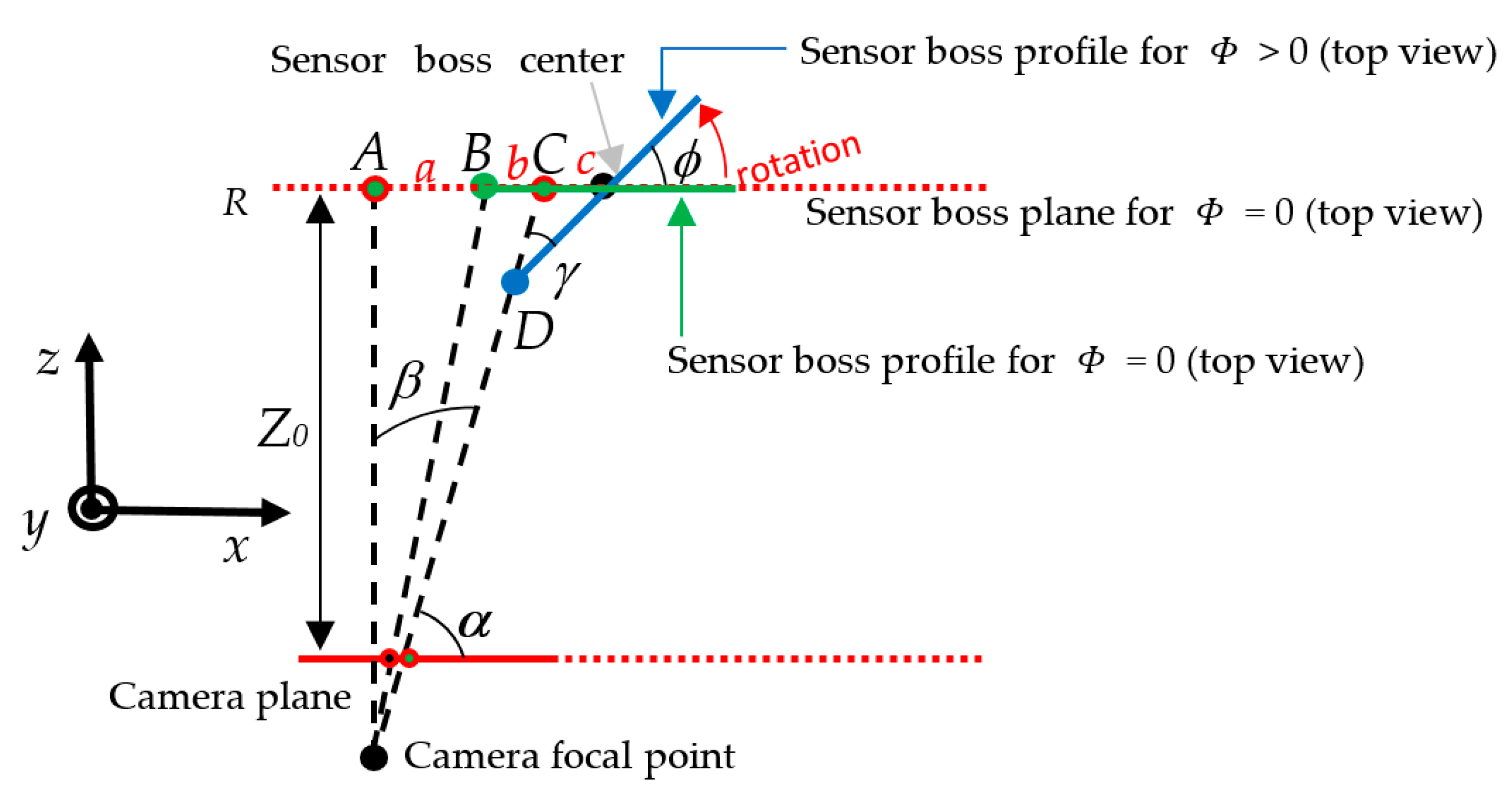

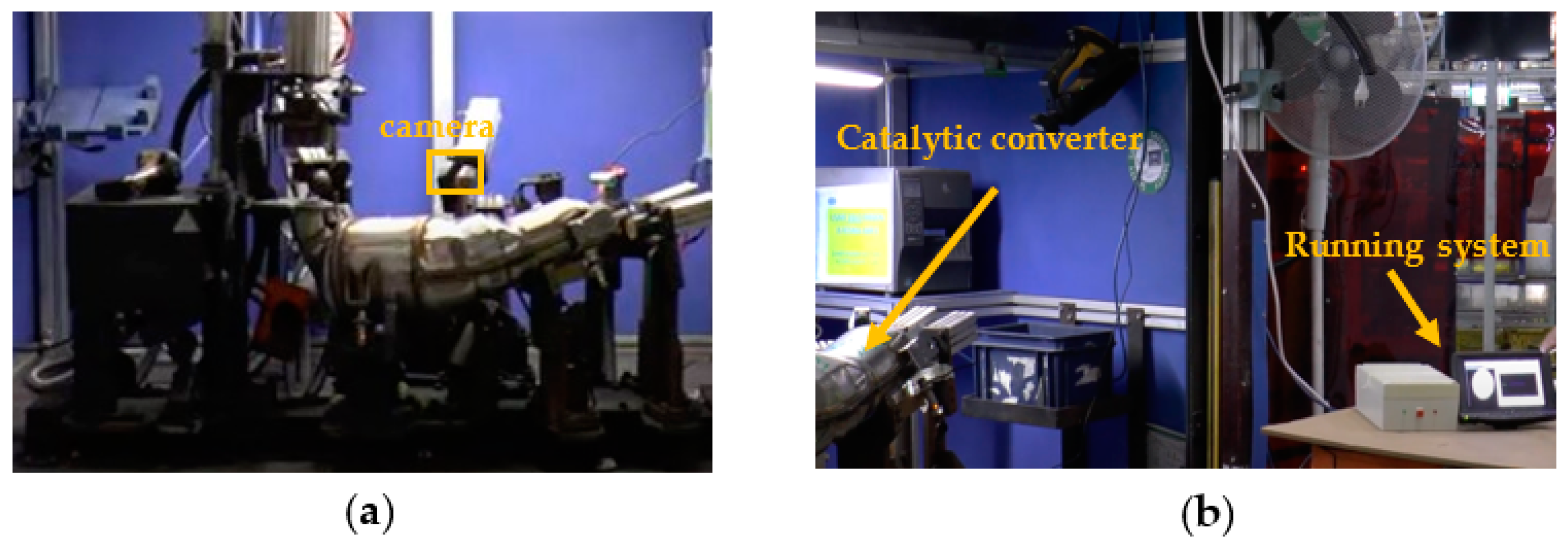

2. The Catalytic Converter Assembly Process

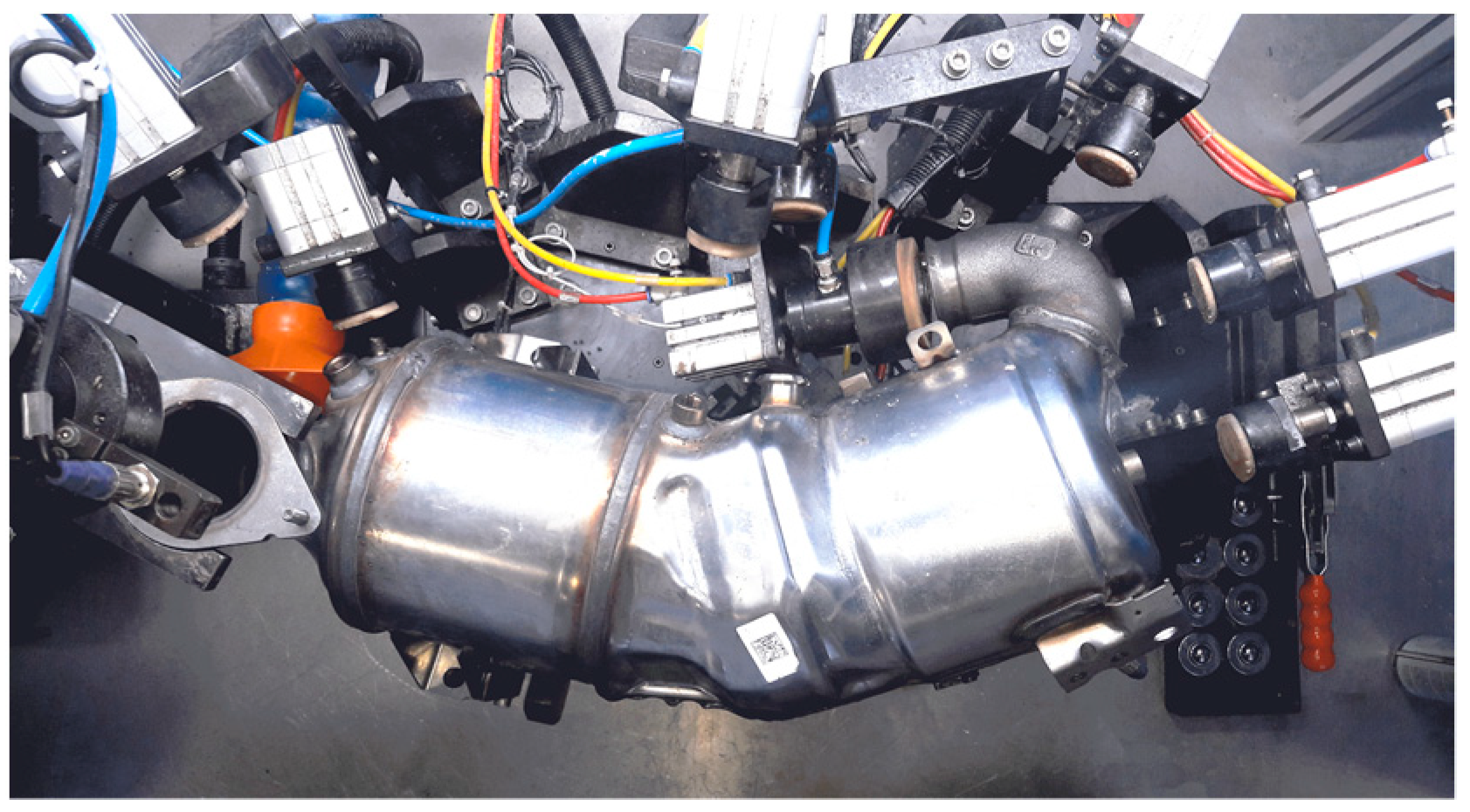

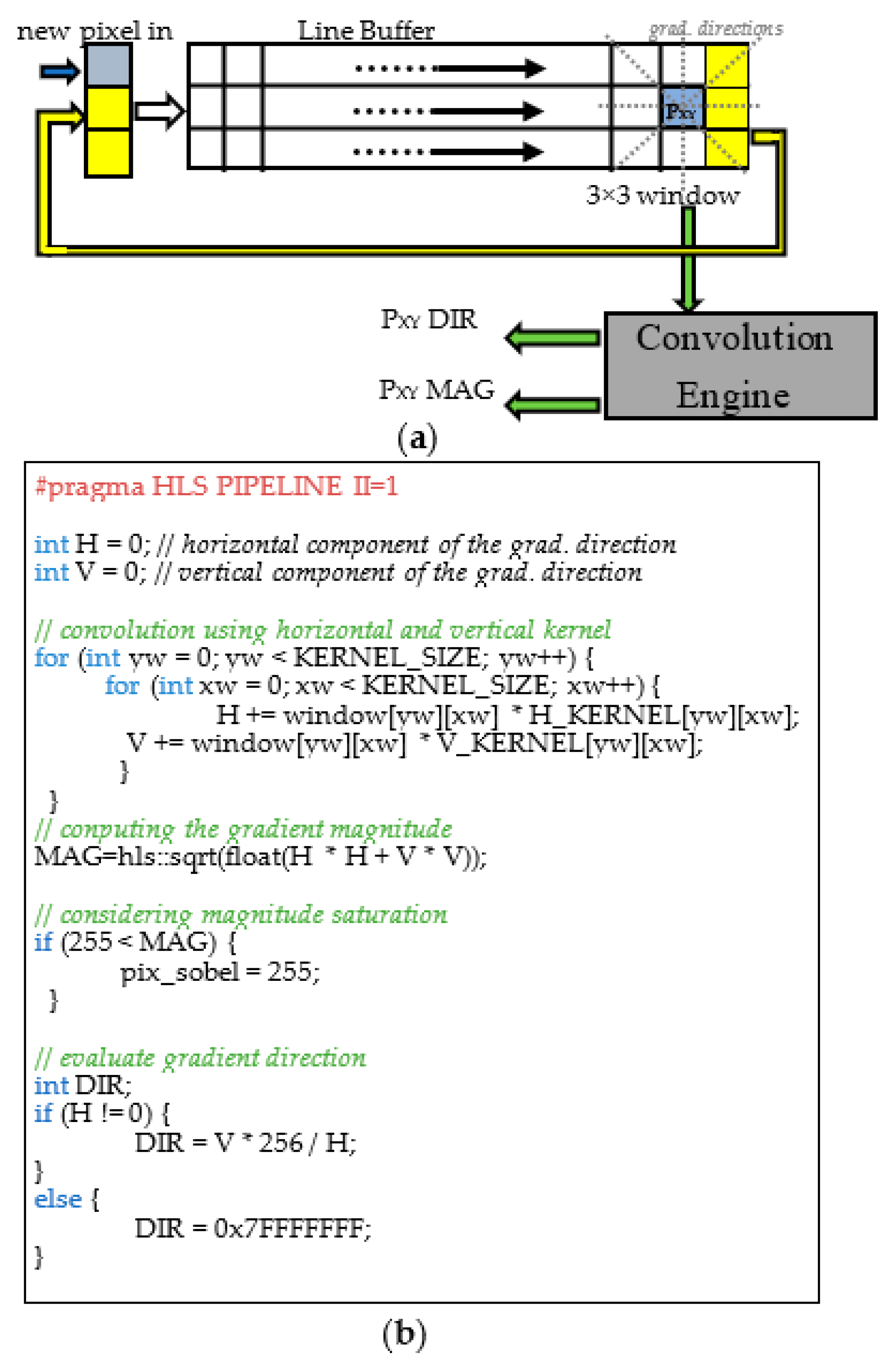

3. The Image Processing and the Geometrical Model

3.1. Image Segmentation

- Color image acquisition from the video camera (GeTCameras Inc., Eindhoven, The Netherlands); a 640 × 480 image resolution was used in our experiments;

- Image conversion and cropping. The input image is converted from the RGB color space to the 8-bit grayscale domain. Moreover, a 220 × 220 region of the original image, which contains the profile of the sensor boss C, is cropped to reduce the computational complexity;

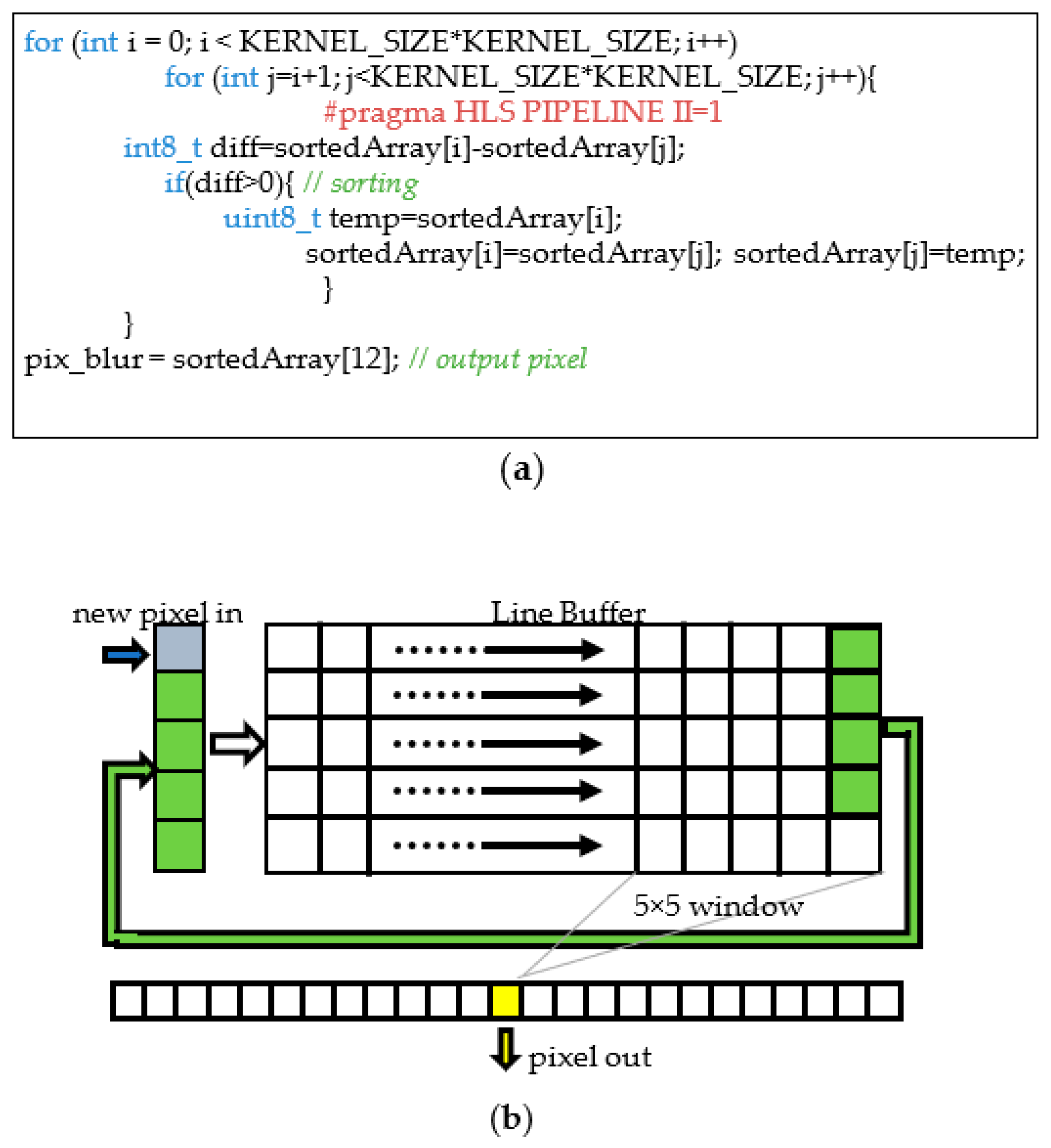

- ROI filtering removes noise and detects the edges of the sensor boss. First, a 5 × 5 median filter removes the noise from the image. Then, a Canny filter with lower and upper thresholds of 10 and 50, respectively, detects the edges [23]. The filter size and the thresholds were set empirically. The Canny filter was chosen because it is widely regarded as one of the most robust edge detection methods [24,25,26,27];

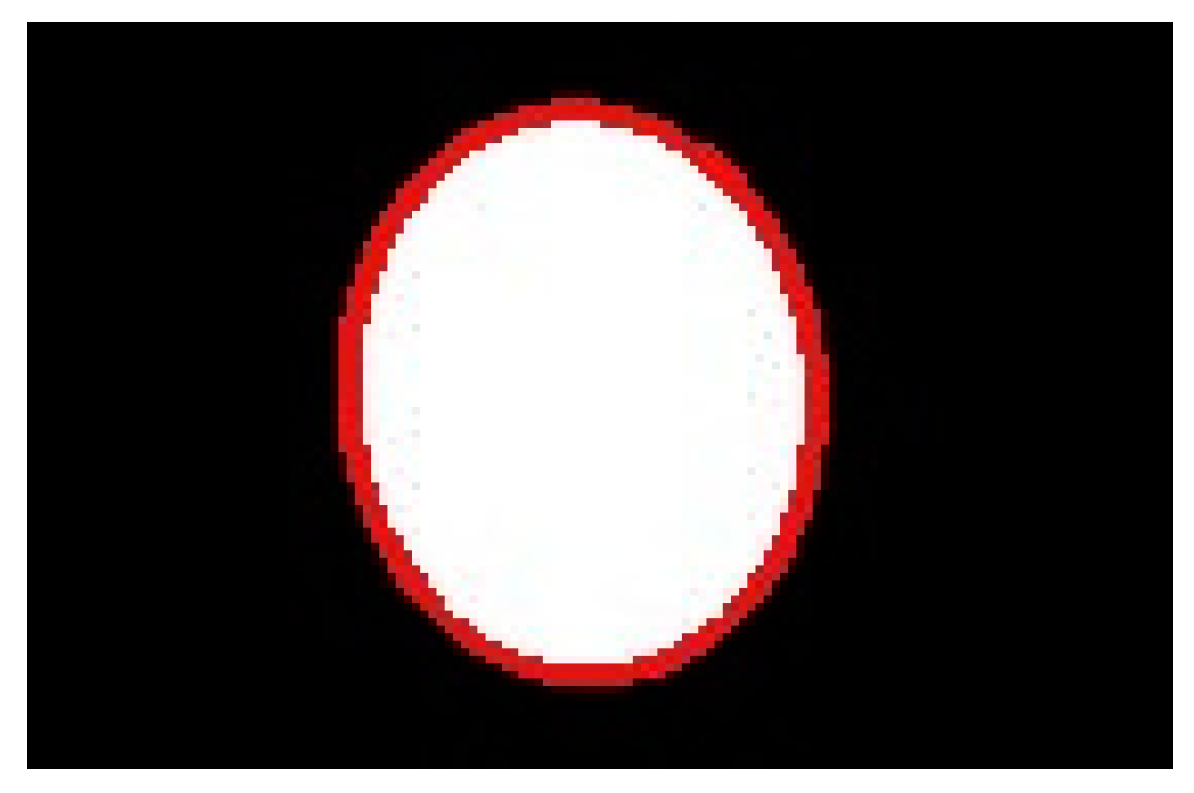

- Contour selection keeps only the edge related to the external profile of the sensor boss. The unavoidable irregularities on the surface of the collector, as well as variations in the illumination conditions over time, cause the detection of several edges that are not of interest. The goal is therefore a reasonable procedure that selects only the contour corresponding to the profile of the sensor boss. To this end, all the contours in the filtered image produced by the previous step are stored in a data structure. The longest contour is then selected as the one most likely to correspond to the boundary of the sensor boss;

- Morphological filtering of the selected contour. The selected contour must delimit a closed area so that the sensor boss can be robustly distinguished from the other, non-relevant regions of the image. Because of noise, this condition may not be satisfied. Moreover, the selected contour may still contain irregularities that can affect the precision of the sensor boss detection procedure. To remove such non-idealities, a 3 × 3 morphological dilation thickens the selected contour so that any gaps are filled and the contour most likely delimits a closed area. Then, the pixels inside the identified area are set to the same value (i.e., 255, corresponding to white in an 8-bit grayscale image). A 3 × 3 morphological erosion restores the original size of the detected enclosed area. Finally, an erosion followed by a dilation, both with a larger 21 × 21 kernel, eliminates any other fringes outside the sensor boss area from the segmented image. Figure 3 illustrates the intermediate outputs of the steps discussed above.
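The front end of the segmentation pipeline (grayscale conversion, cropping, median filtering and the double-threshold stage of the Canny filter) can be sketched in plain Python without an image library. This is a minimal illustration under stated assumptions, not the authors' implementation: the ITU-R BT.601 luma weights, the crop offsets, the replicated-border handling and all function names are ours. The `hysteresis` function covers only Canny's double thresholding with the thresholds 10 and 50 given above; it assumes that gradient computation and non-maximum suppression have already produced a magnitude map, and it does not try to match any particular library bit-exactly.

```python
from typing import List, Tuple

Image = List[List[int]]  # row-major 8-bit grayscale image (values 0..255)


def rgb_to_gray(rgb: List[List[Tuple[int, int, int]]]) -> Image:
    """RGB -> 8-bit gray using BT.601 luma weights (assumed, not stated in the paper)."""
    return [[min(255, round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb]


def crop(img: Image, top: int, left: int, size: int = 220) -> Image:
    """Cut a size x size ROI; top/left are hypothetical offsets of the sensor boss region."""
    return [row[left:left + size] for row in img[top:top + size]]


def median_filter(img: Image, k: int = 5) -> Image:
    """k x k median filter; out-of-image neighbors are replaced by the nearest border pixel."""
    h, w, r = len(img), len(img[0]), k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = sorted(img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                            for dy in range(-r, r + 1) for dx in range(-r, r + 1))
            out[y][x] = window[len(window) // 2]
    return out


def hysteresis(mag: Image, low: int = 10, high: int = 50) -> Image:
    """Double thresholding on a precomputed gradient-magnitude map: pixels >= high are
    edges; pixels >= low are kept only if 8-connected (possibly via other kept pixels) to an edge."""
    h, w = len(mag), len(mag[0])
    out = [[0] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in range(w) if mag[y][x] >= high]
    for y, x in stack:
        out[y][x] = 255
    while stack:
        y, x = stack.pop()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and not out[ny][nx] and mag[ny][nx] >= low:
                    out[ny][nx] = 255
                    stack.append((ny, nx))
    return out


if __name__ == "__main__":
    noisy = [[0] * 5 for _ in range(5)]
    noisy[2][2] = 255                     # single impulse ("salt") pixel
    print(median_filter(noisy))           # all zeros: the impulse is removed
    print(hysteresis([[60, 20, 0, 20]]))  # [[255, 255, 0, 0]]
```

In the usage example, the weak pixel with magnitude 20 next to the strong pixel survives, while the isolated weak pixel at the right end is discarded, which is the behavior that lets Canny suppress spurious edges caused by surface irregularities.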

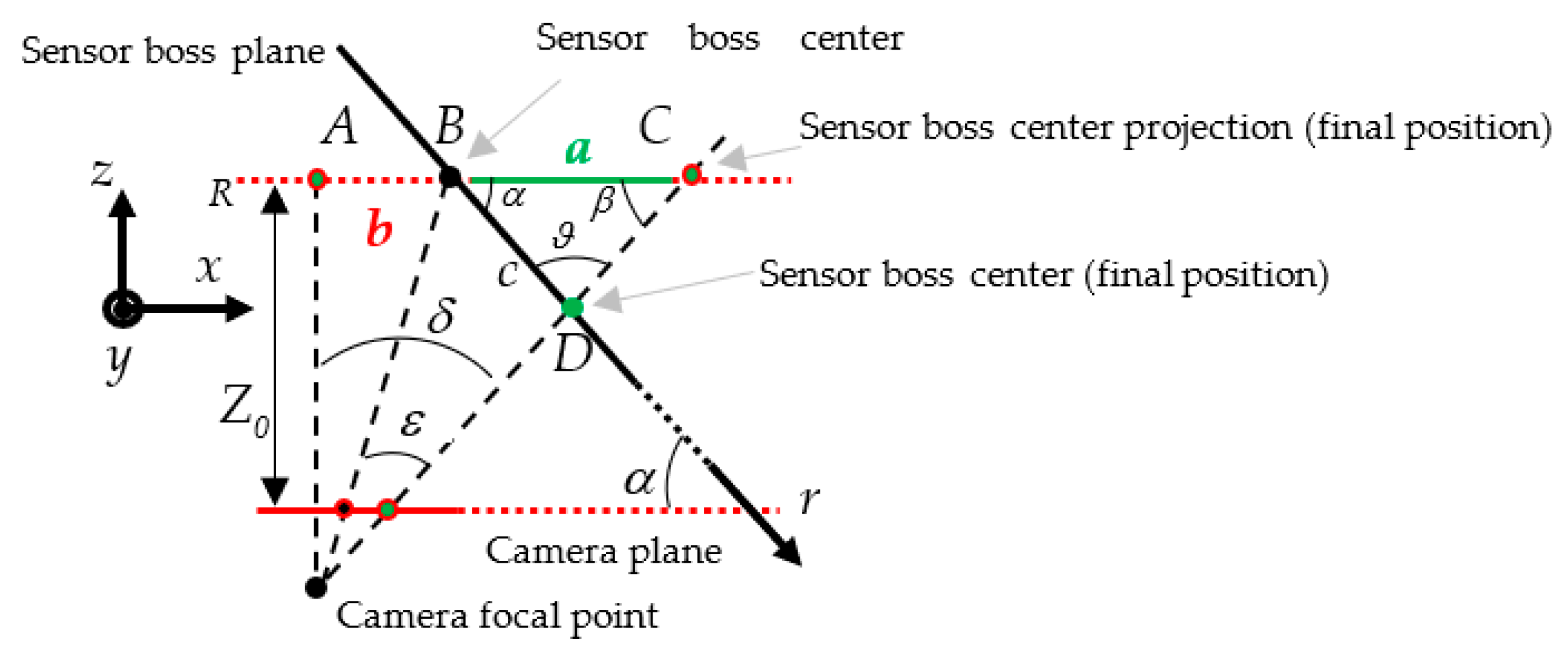

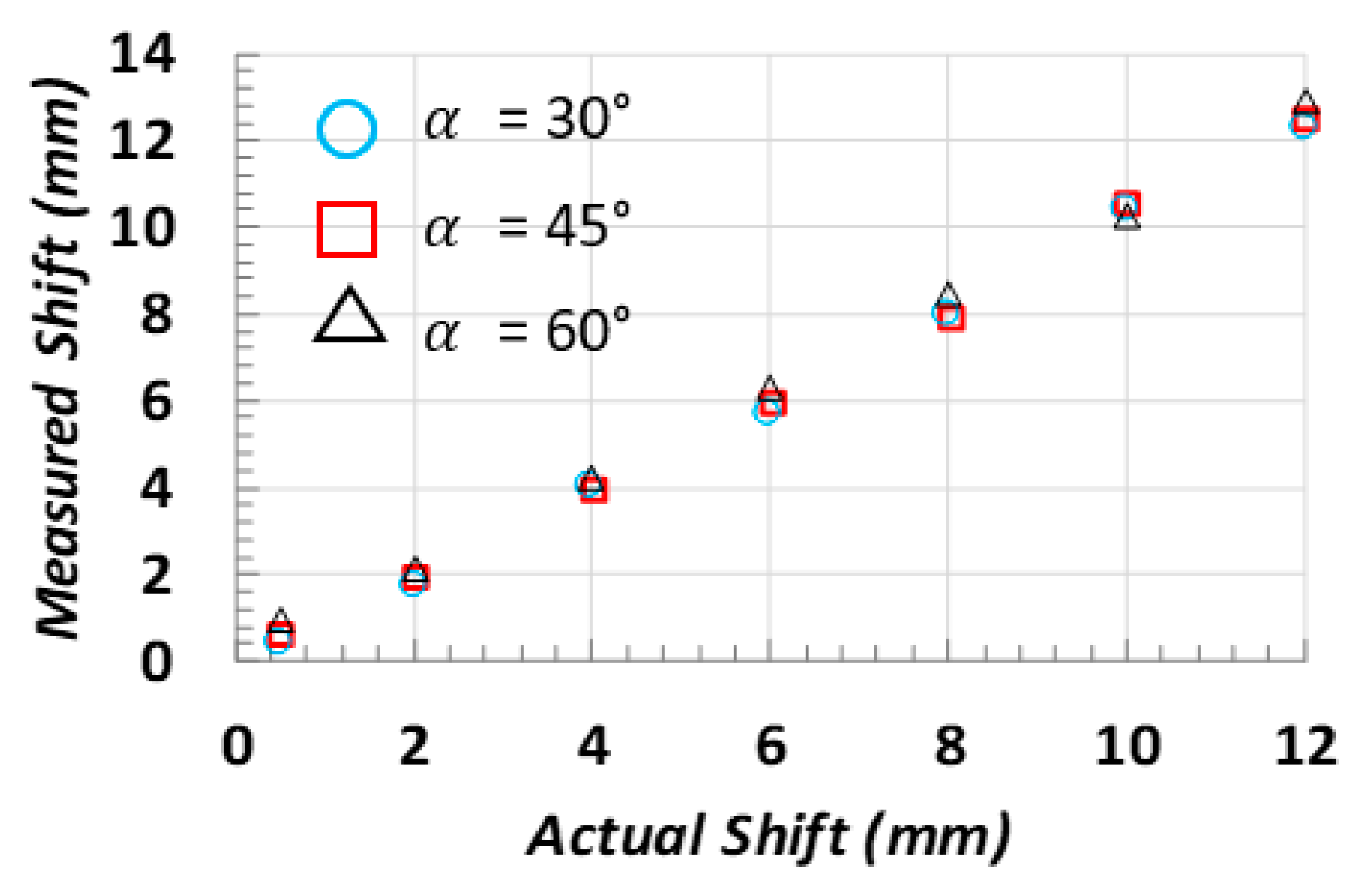

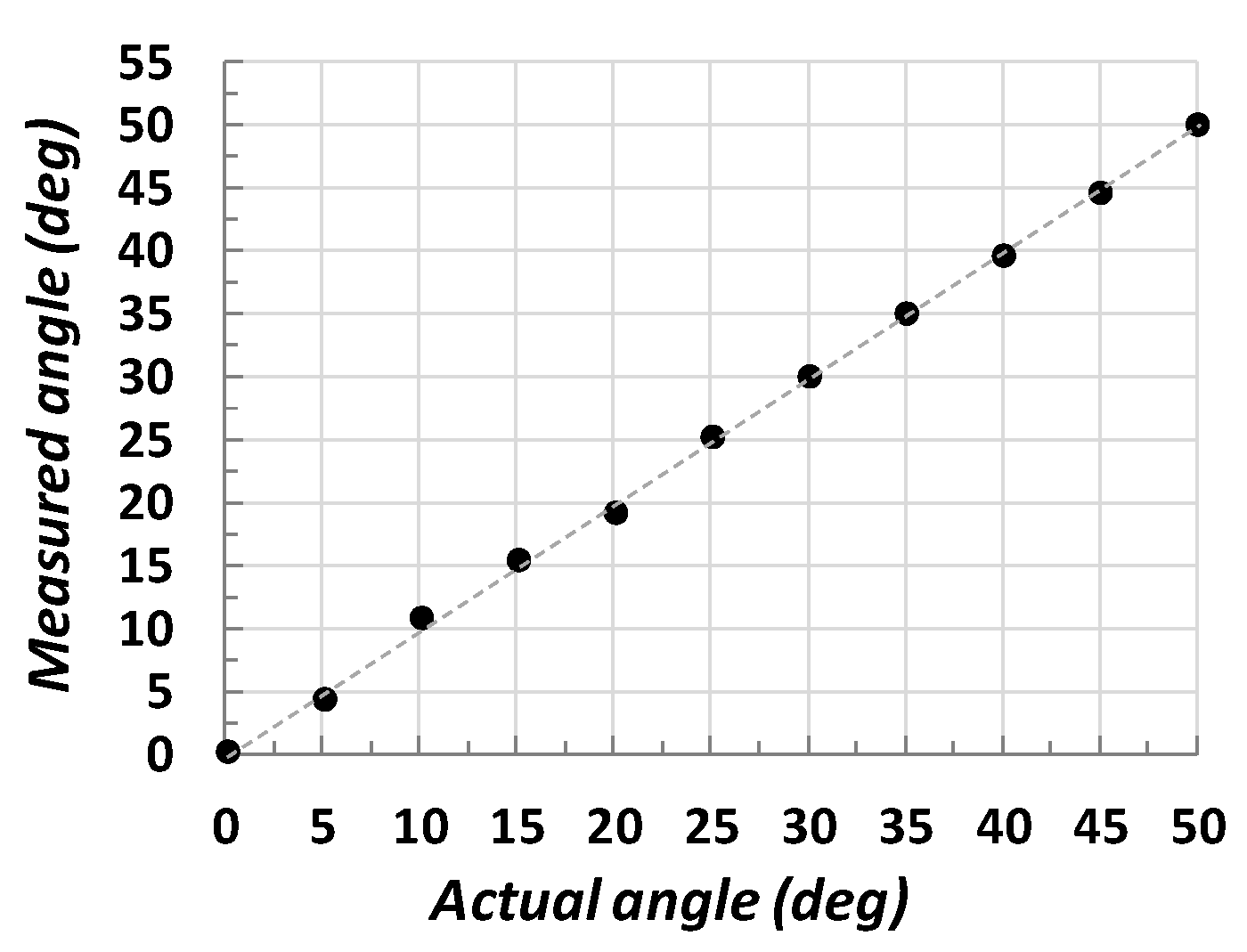
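The contour selection and morphological filtering steps of Section 3.1 can be sketched in the same style. This is a hedged illustration, not the authors' method: it approximates "the longest contour" by the largest 8-connected group of edge pixels, fills the enclosed area with a flood fill started from the image border, and treats pixels outside the image as neutral during erosion and dilation. All function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]  # binary image: 0 (background) or 255 (foreground)


def largest_component(edges: Image) -> Image:
    """Keep the largest 8-connected group of edge pixels.
    Pixel count stands in for contour length (a simplifying assumption)."""
    h, w = len(edges), len(edges[0])
    seen = [[False] * w for _ in range(h)]
    best: List[Tuple[int, int]] = []
    for sy in range(h):
        for sx in range(w):
            if not edges[sy][sx] or seen[sy][sx]:
                continue
            comp, stack = [], [(sy, sx)]
            seen[sy][sx] = True
            while stack:
                y, x = stack.pop()
                comp.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and edges[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            stack.append((ny, nx))
            if len(comp) > len(best):
                best = comp
    out = [[0] * w for _ in range(h)]
    for y, x in best:
        out[y][x] = 255
    return out


def morph(img: Image, k: int, dilate: bool) -> Image:
    """k x k square dilation (max) or erosion (min); pixels outside the image are ignored."""
    h, w, r = len(img), len(img[0]), k // 2
    pick = max if dilate else min
    return [[pick(img[ny][nx]
                  for ny in range(max(0, y - r), min(h, y + r + 1))
                  for nx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)] for y in range(h)]


def fill_holes(img: Image) -> Image:
    """Set to 255 every pixel not reachable from the border through 4-connected background."""
    h, w = len(img), len(img[0])
    outside = [[False] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in (0, w - 1)]
    stack += [(y, x) for y in (0, h - 1) for x in range(w)]
    while stack:
        y, x = stack.pop()
        if 0 <= y < h and 0 <= x < w and not outside[y][x] and img[y][x] == 0:
            outside[y][x] = True
            stack += [(y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)]
    return [[0 if outside[y][x] else 255 for x in range(w)] for y in range(h)]


def segment(edges: Image, open_k: int = 21) -> Image:
    contour = largest_component(edges)              # contour selection
    thick = morph(contour, 3, dilate=True)          # 3x3 dilation bridges small gaps
    filled = fill_holes(thick)                      # enclosed area set to 255
    restored = morph(filled, 3, dilate=False)       # 3x3 erosion restores the size
    eroded = morph(restored, open_k, dilate=False)  # large erosion, then dilation (opening):
    return morph(eroded, open_k, dilate=True)       # drops parts that cannot hold the kernel
```

The default `open_k = 21` matches the 21 × 21 kernel described above. On very small test images a smaller value should be passed, since a 21 × 21 erosion would remove the whole object. The 3 × 3 dilation, fill and erosion sequence mirrors the closing-like step in the text, while the final erosion and dilation form a morphological opening that removes thin fringes attached to the sensor boss region.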

3.2. Features Extraction

4. The Hardware System

5. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Tambare, P.; Meshram, C.; Lee, C.-C.; Ramteke, R.J.; Imoize, A.L. Performance Measurement System and Quality Management in Data-Driven Industry 4.0: A Review. Sensors 2022, 22, 224.

2. Silva, R.L.; Rudek, M.; Szejka, A.L.; Junior, O.C. Machine Vision Systems for Industrial Quality Control Inspections. In Product Lifecycle Management to Support Industry 4.0, Proceedings of the IFIP Advances in Information and Communication Technology, Turin, Italy, 2–4 July 2018; Chiabert, P., Bouras, A., Noël, F., Ríos, J., Eds.; Springer: Cham, Switzerland, 2018; Volume 540, pp. 631–641.

3. Golnabi, H.; Asadpour, A. Design and application of industrial machine vision systems. In Robotics and Computer-Integrated Manufacturing; Springer: Berlin/Heidelberg, Germany, 2007; Volume 23.

4. Vergara-Villegas, O.O.; Cruz-Sánchez, V.G.; Ochoa-Domínguez, H.J.; Nandayapa-Alfaro, M.J.; Flores-Abad, A. Automatic Product Quality Inspection Using Computer Vision Systems. In Lean Manufacturing in the Developing World; García-Alcaraz, J., Maldonado-Macías, A., Cortes-Robles, G., Eds.; Springer: Cham, Switzerland, 2014; pp. 135–156.

5. Chen, C.; Zhang, C.; Wang, T.; Li, D.; Guo, Y.; Zhao, Z.; Hong, J. Monitoring of Assembly Process Using Deep Learning Technology. Sensors 2020, 20, 4208.

6. Uhlemann, T.H.-J.; Lehmann, C.; Steinhilper, R. The Digital Twin: Realizing the Cyber-Physical Production System for Industry 4.0. Procedia CIRP 2017, 61, 335–340.

7. Leng, J.; Wang, D.; Shen, W.; Li, X.; Liu, Q.; Chen, X. Digital twins-based smart manufacturing system design in Industry 4.0: A review. J. Manuf. Syst. 2021, 60, 119–137.

8. Gaoliang, P.; Zhujun, Z.; Weiquan, L. Computer vision algorithm for measurement and inspection of O-rings. Measurement 2016, 94, 828–836.

9. Ngo, N.-V.; Hsu, Q.-C.; Hsiao, W.-L.; Yang, C.-J. Development of a simple three-dimensional machine-vision measurement system for in-process mechanical parts. Adv. Mech. Eng. 2017, 9, 1–11.

10. Petrišič, J.; Suhadolnik, A.; Kosel, F. Object Length and Area Calculations on the Digital Images. In Proceedings of the 12th International Conference Trends in the Development of Machinery and Associated Technology (TMT 2008), Istanbul, Turkey, 26–30 August 2008; pp. 713–716.

11. Palousek, D.; Omasta, K.M.; Koutny, D.; Bednar, J.; Koutecky, T.; Dokoupil, F. Effect of matte coating on 3D optical measurement accuracy. Opt. Mater. 2015, 40, 1–9.

12. Lai, S.H.; Fang, M. A Hybrid Image Alignment System for Fast and Precise Pattern Localization. Real-Time Imaging 2002, 8, 23–33.

13. Mahapatra, P.K.; Thareja, R.; Kaur, M.; Kumar, A. A Machine Vision System for Tool Positioning and Its Verification. Meas. Control. 2015, 48, 249–260.

14. Anwar, A.; Lin, W.; Deng, X.; Qiu, J.; Gao, H. Quality Inspection of Remote Radio Units Using Depth-Free Image-Based Visual Servo with Acceleration Command. IEEE Trans. Ind. Electron. 2019, 66, 8214–8223.

15. Sassi, P.; Tripicchio, P.; Avizzano, C.A. A Smart Monitoring System for Automatic Welding Defect Detection. IEEE Trans. Ind. Electron. 2019, 66, 9641–9650.

16. Boaretto, N.; Centeno, T.M. Automated detection of welding defects in pipelines from radiographic images DWDI. NDT&E Int. 2017, 86, 7–13.

17. Block, S.B.; Silva, R.D.; Dorini, L.B.; Minetto, R. Inspection of Imprint Defects in Stamped Metal Surfaces Using Deep Learning and Tracking. IEEE Trans. Ind. Electron. 2019, 66, 4498–4507.

18. Prabhu, V.A.; Tiwari, A.; Hutabarat, W.; Thrower, J.; Turner, C. Dynamic Alignment Control Using Depth Imagery for Automated Wheel Assembly. Procedia CIRP 2014, 25, 161–168.

19. Fernández, A.; Acevedo, R.G.; Alvarez, E.A.; Lopez, A.C.; Garcia, D.F.; Fernandez, R.U.; Meana, M.J.; Sanchez, J.M.G. Low-Cost System for Weld Tracking Based on Artificial Vision. In Proceedings of the 2009 IEEE Industry Applications Society Annual Meeting, Houston, TX, USA, 4–8 October 2009; Volume 47, pp. 1159–1166.

20. Chauhan, V.; Surgenor, B. A Comparative Study of Machine Vision Based Methods for Fault Detection in an Automated Assembly Machine. Procedia Manuf. 2015, 1, 416–428.

21. Usamentiaga, R.; Molleda, J.; Garcia, D.F.; Bulnes, F.G.; Perez, J.M. Jam Detector for Steel Pickling Lines Using Machine Vision. IEEE Trans. Ind. Appl. 2013, 49, 1954–1961.

22. Frustaci, F.; Perri, S.; Cocorullo, G.; Corsonello, P. An embedded machine vision system for an in-line quality check of assembly processes. Procedia Manuf. 2020, 42, 211–218.

23. Canny, J.F. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

24. Choi, K.-H.; Ha, J.-E. An Adaptive Threshold for the Canny Algorithm with Deep Reinforcement Learning. IEEE Access 2021, 9, 156846–156856.

25. Kalbasi, M.; Nikmehr, H. Noise-Robust, Reconfigurable Canny Edge Detection and its Hardware Realization. IEEE Access 2020, 8, 39934–39945.

26. Zhou, Y.; Li, H.; Kneip, L. Canny-VO: Visual Odometry with RGB-D Cameras Based on Geometric 3-D–2-D Edge Alignment. IEEE Trans. Robot. 2019, 35, 184–199.

27. Lee, J.; Tang, H.; Park, J. Energy Efficient Canny Edge Detector for Advanced Mobile Vision Applications. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1037–1046.

28. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: New York, NY, USA, 2003.

29. AMBA 4 AXI4, AXI4-Lite, and AXI4-Stream Protocol Assertions User Guide. Available online: http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ihi0022d/index.html (accessed on 26 February 2022).

30. Vivado Design Suite User Guide-High-Level Synthesis, UG902 (v2020.1), May 2021. Available online: https://www.xilinx.com/support/documentation/sw_manuals/xilinx2020_2/ug902-vivado-high-level-synthesis.pdf (accessed on 26 February 2022).

31. Oh, S.; You, J.-H.; Kim, Y.-K. FPGA Acceleration of Bolt Inspection Algorithm for a High-Speed Embedded Machine Vision System. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS 2019), Jeju, Korea, 15–18 October 2019; pp. 1070–1073.

32. Zhong, Z.; Ma, Z. A novel defect detection algorithm for flexible integrated circuit package substrates. IEEE Trans. Ind. Electron. 2022, 69, 2117–2126.

33. Le, M.-T.; Tu, C.-T.; Guo, S.-M.; Lien, J.-J.J. A PCB Alignment System Using RST Template Matching with CUDA on Embedded GPU Board. Sensors 2020, 20, 2736.

34. Lü, X.; Gu, D.; Wang, Y.; Qu, Y.; Qin, C.; Huang, F. Feature Extraction of Welding Seam Image Based on Laser Vision. IEEE Sens. J. 2018, 18, 4715–4724.

35. Wang, C.; Wang, N.; Ho, S.C.; Chen, X.; Song, G. Design of a New Vision-Based Method for the Bolts Looseness Detection in Flange Connections. IEEE Trans. Ind. Electron. 2020, 67, 1366–1375.

36. Lies, B.; Cai, Y.; Spahr, E.; Lin, K.; Qin, H. Machine vision assisted micro-filament detection for real-time monitoring of electrohydrodynamic inkjet printing. Procedia Manuf. 2018, 26, 29–39.

| Hardware Component | LUTs | FFs | DSPs | BRAMs (36 Kb) |
|---|---|---|---|---|
| DMA0 | 1671 | 2322 | 0 | 3 |
| DMA1 | 1487 | 1948 | 0 | 3 |
| RGB2Gray | 119 | 169 | 3 | 0 |
| MedianBlur | 4795 | 3383 | 0 | 1 |
| Canny | 2347 | 2455 | 2 | 3 |
| Dilate_Erode | 844 | 904 | 0 | 3 |
| Erode_Dilate | 844 | 904 | 0 | 3 |
| AXIS width converters | 154 | 366 | 0 | 0 |
| Systems Interrupt | 168 | 159 | 0 | 0 |
| PS7 AXI Peripheral | 727 | 1284 | 0 | 0 |
| AXI SMC | 3495 | 4316 | 0 | 0 |
| TOTAL | 16,651 | 18,210 | 5 | 16 |
| % of available | 31.3% | 17.1% | 2.2% | 11.4% |
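The TOTAL and percentage rows of the resource table can be re-derived with a few lines of Python. The per-component counts below are copied from the table; the device capacities (53,200 LUTs, 106,400 FFs, 220 DSP slices and 140 36 Kb BRAMs for the XC7Z020) are taken from the Xilinx Zynq-7000 product documentation and are an assumption here, since this excerpt does not list them. With these values, the DSP share works out to about 2.27%, which the table reports as 2.2%.

```python
from typing import Dict, Tuple

# (LUTs, FFs, DSPs, BRAM36) per component, copied from the resource table
USAGE: Dict[str, Tuple[int, int, int, int]] = {
    "DMA0": (1671, 2322, 0, 3),
    "DMA1": (1487, 1948, 0, 3),
    "RGB2Gray": (119, 169, 3, 0),
    "MedianBlur": (4795, 3383, 0, 1),
    "Canny": (2347, 2455, 2, 3),
    "Dilate_Erode": (844, 904, 0, 3),
    "Erode_Dilate": (844, 904, 0, 3),
    "AXIS width converters": (154, 366, 0, 0),
    "Systems Interrupt": (168, 159, 0, 0),
    "PS7 AXI Peripheral": (727, 1284, 0, 0),
    "AXI SMC": (3495, 4316, 0, 0),
}
# Assumed XC7Z020 programmable-logic capacity: LUTs, FFs, DSP slices, 36 Kb BRAMs
CAPACITY = (53_200, 106_400, 220, 140)


def totals(usage: Dict[str, Tuple[int, int, int, int]]) -> Tuple[int, ...]:
    """Column-wise sum over all hardware components."""
    return tuple(sum(row[i] for row in usage.values()) for i in range(4))


def utilization(total: Tuple[int, ...], capacity: Tuple[int, ...]) -> Tuple[float, ...]:
    """Percentage of each resource type used on the device."""
    return tuple(100.0 * t / c for t, c in zip(total, capacity))


if __name__ == "__main__":
    tot = totals(USAGE)
    print(tot)  # (16651, 18210, 5, 16)
    print([f"{u:.2f}%" for u in utilization(tot, CAPACITY)])  # ['31.30%', '17.11%', '2.27%', '11.43%']
```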

| | [31] | This Work |
|---|---|---|
| CV operations | Threshold, Morphological Filtering, Find Centers | Median Filter, Canny Filter, Contour Selection, Morphological Filtering, Connected Component Analysis |
| Device | XCZU7EV | XC7Z020 |
| LUTs | 50,607 | 16,651 |
| FFs | 43,012 | 18,210 |
| DSPs | 0 | 5 |
| BRAMs (36 Kb) | 72 | 16 |
| Throughput (Mpixels/s) | 15.7 | 11.3 |

| Work | CV Operations | Time (ms) | Platform | Implementation |
|---|---|---|---|---|
| [8] | Canny, Morphological Filtering | - | PC | SW |
| [13] | Sobel Edge Detection, Otsu Thresholding | - | PC | SW |
| [14] | Contour Selection, Method of Moments, Template Matching | ~65 | - | SW |
| [15] | Histogram Equalization, Gaussian Filtering, Canny, Circle Detection, Inference on a DNN | 33 | GPU | SW |
| [19] | Pixel Thresholding, Median Filter | 250 | PC | SW |
| [21] | Canny, Hough Transform, Background Subtraction | 400 | PC | SW |
| [32] | Contrast Enhancement, Gamma Transformation, Custom 3 × 3 Convolution | 250 | - | SW |
| [33] | Template Matching | 99 | PC | SW |
| [34] | Median Filter, Otsu Thresholding | 22.5 | PC | SW |
| [35] | Adaptive Image Thresholding, Find Contours, Template Matching, Hough Transform | - | PC | SW |
| [36] | Otsu Thresholding, Blob Analysis, Morphological Filtering, 3D Rendering | - | - | SW |
| This work | Median Filter, Canny Filter, Contour Selection, Morphological Filtering, Connected Component Analysis | 26 | Xilinx Zynq XC7Z020 | HW/SW codesign |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Frustaci, F.; Spagnolo, F.; Perri, S.; Cocorullo, G.; Corsonello, P. Robust and High-Performance Machine Vision System for Automatic Quality Inspection in Assembly Processes. Sensors 2022, 22, 2839. https://doi.org/10.3390/s22082839
