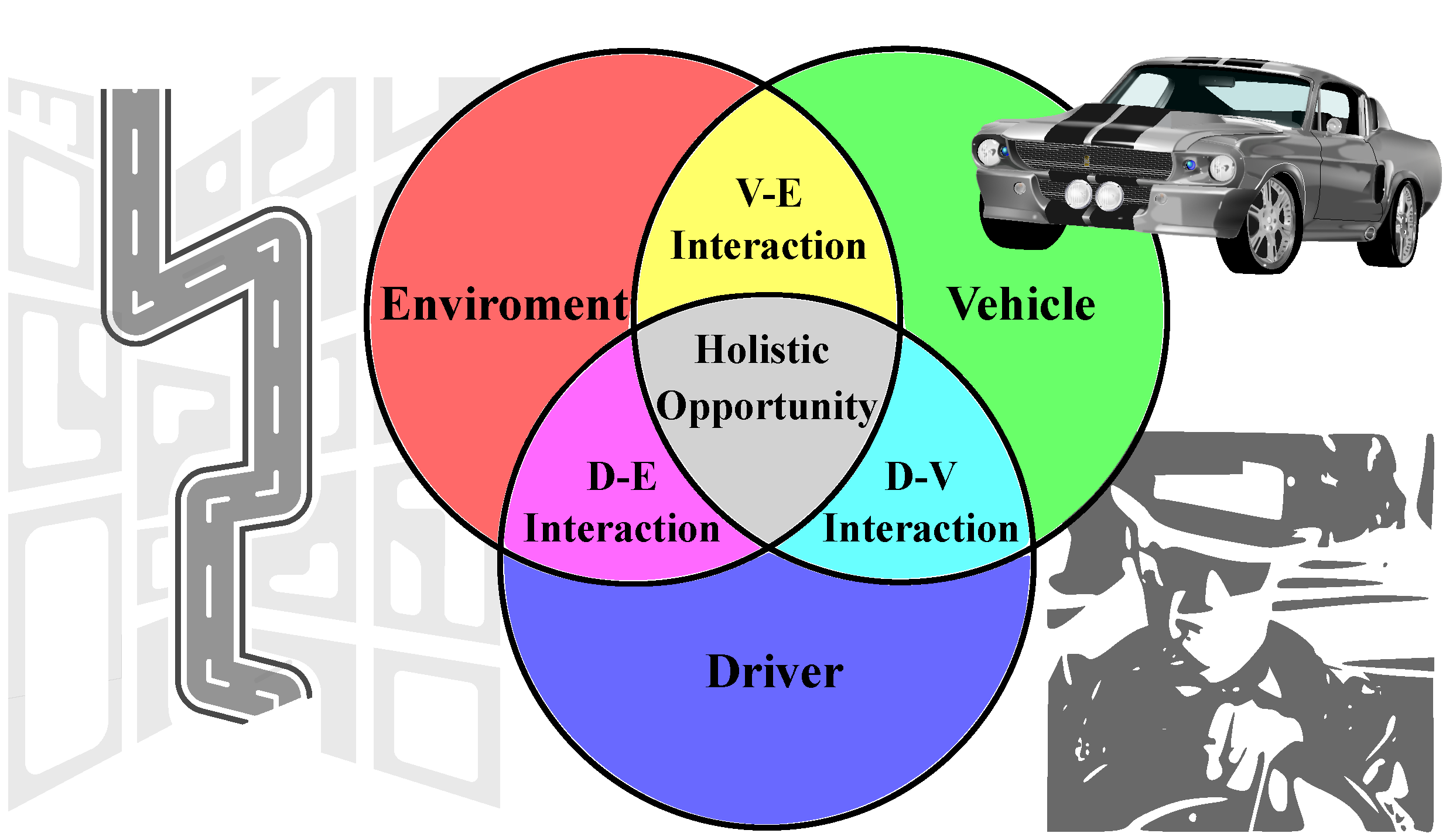

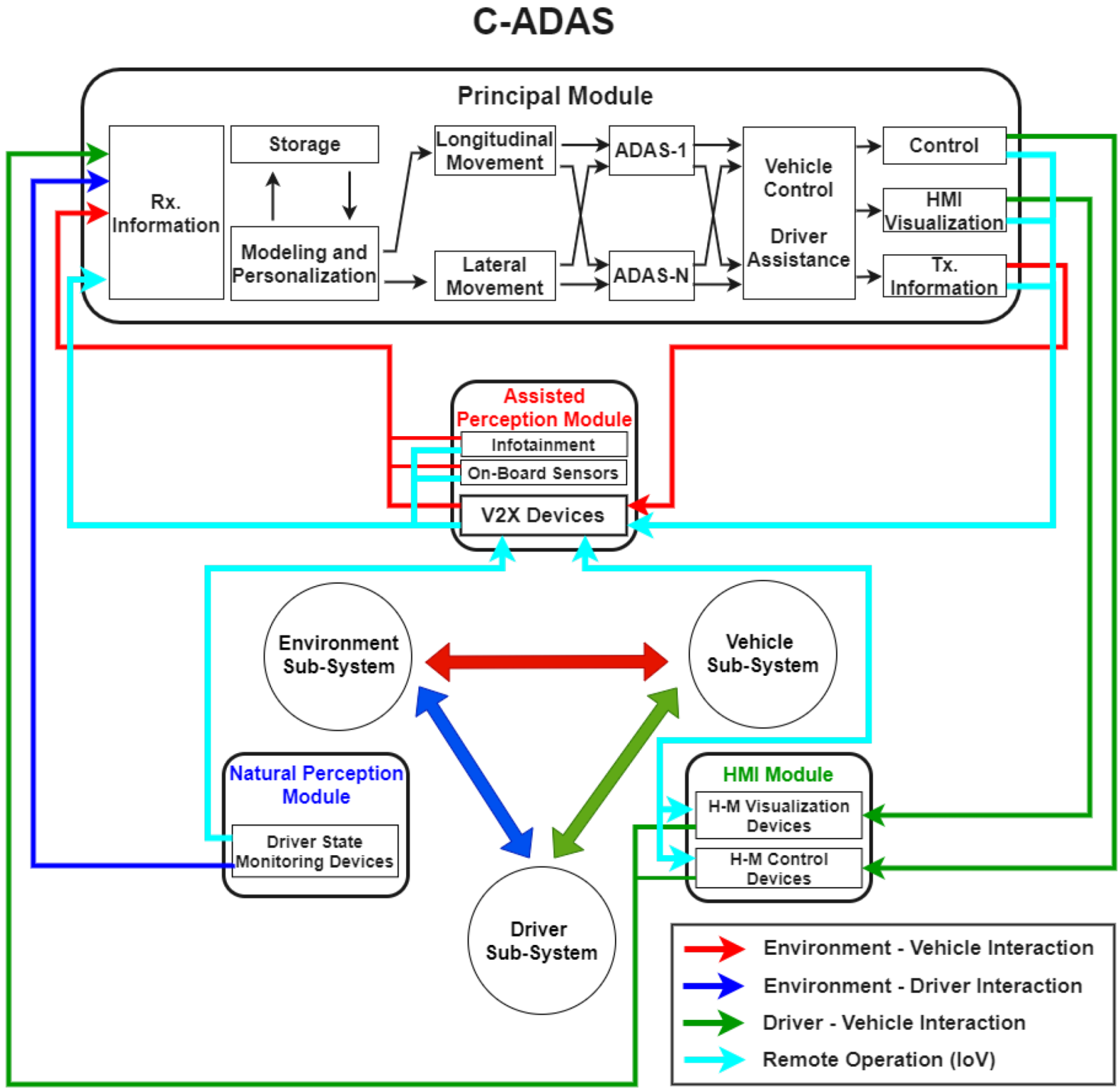

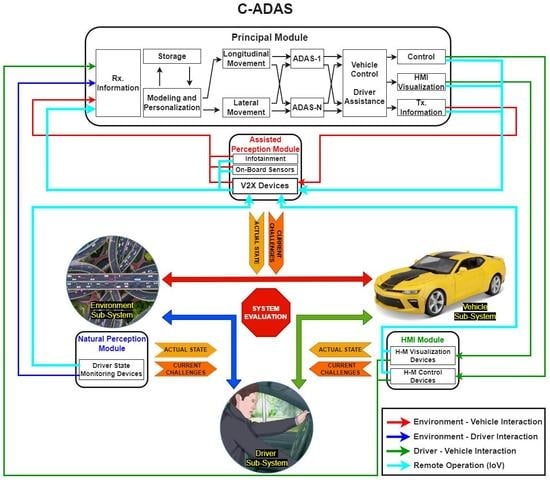

The analysis of bidirectionality in the interaction between the vehicle and the environment (V–E) is relevant to all ADAS. Consider, for example, the information coming from the environment. The C-ADAS can receive it along two paths: (i) directly, through the information captured by the on-board sensors and communication devices (E -> V); or (ii) indirectly, first through the information the driver captures with his or her senses, and then through the information partially captured by on-board devices that monitor the driver's state and behavior in reaction to the stimuli perceived from the environment (E -> D -> V). This alternative, redundant path is worth considering because sensors and communication devices have limitations that prevent them from always operating optimally. This degree of redundancy can only be achieved if the C-ADAS is designed from a holistic, systemic perspective that takes the directionality of the interactions into account. The state-of-the-art works described in this section contribute mainly to the first of these two paths (the direct one).

This section describes the main elements of the assisted perception module for the surrounding environment, together with the signaling devices, sensors, and communication technologies involved in its operation. On the one hand, this perception “assists” the driver in acquiring information from the environment in which he/she operates. On the other hand, the vehicle uses its signaling and communication devices to inform the surrounding environment of its state of motion and of the driver's intentions and actions. In general, sensors and communication technologies extend the range and improve the precision of the information the driver can perceive, especially for variables that are difficult to estimate, such as distances, speeds, and relative accelerations between vehicles and other actors in the environment.

Communications make it possible to incorporate high-level information with strong predictive value. This allows changes in the dynamics of vehicle motion to be anticipated before they materialize and can be perceived by drivers. Likewise, perception coverage can be extended beyond the local environment of the vehicle, which is especially valuable in scenarios without a direct line of sight.

4.1. Current State

The discussion in this section begins with the works that use vision and infrared cameras to capture information on the vehicle–environment interaction. This approach is widely used in the automotive industry today, but it is limited by adverse weather, poor lighting, and the high computational cost of image processing algorithms, among other factors. Xin et al. [55] propose an intention-aware model that predicts trajectories from the estimated lane change intention of neighboring vehicles, using an architecture with two long short-term memory (LSTM) networks. The first network receives sequential data describing the lateral movement of the vehicle and infers whether the driver intends to keep the lane, change to the left lane, or change to the right lane. Once the target lane is inferred, this indicator is passed to the second LSTM network, which also receives sequential data on the longitudinal movement of the vehicle, to predict its future position. From the viewpoint of the ego vehicle, only features that can feasibly be measured with on-board sensors, such as LIDAR and radar, are used as input. The data used in this paper come from the Next Generation Simulation (NGSIM) dataset [56].

Deo et al. [57] design an LSTM encoder–decoder model that uses convolutional social pooling layers, an enhancement of social pooling layers, to robustly learn the interdependencies in vehicle motion. Social pooling relies on a structure called the social tensor, which pools the LSTM states of all the agents around the predicted agent: a spatial grid is defined around the agent being predicted and filled with LSTM states according to the spatial configuration of the agents in the scene. The encoder is an LSTM network with shared weights that learns vehicle dynamics from trajectory histories. The LSTM decoder outputs a probability distribution over future motion for six maneuver classes and assigns a probability to each class. Visual and map-based cues could capture much complementary information. The experiments use the publicly available NGSIM dataset. Deo et al. [58] propose a variation of the architecture of [57] that uses an LSTM network as an intermediate layer to classify and assign probabilities to maneuvers, instead of the convolutional social pooling layer. These analyses highlighted the importance of modeling the motion of adjacent vehicles to predict the future motion of a given vehicle, as well as the importance of detecting and exploiting common vehicle maneuvers. These experiments also use the publicly available NGSIM dataset.
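The social tensor of [57] can be pictured as a grid of hidden-state slots centered on the target vehicle. The sketch below is a minimal illustration under our own assumptions, not the authors' implementation. The grid size (13 × 3), the cell dimensions, the coordinate convention (x lateral, y longitudinal), and the function name `build_social_tensor` are all illustrative, and plain Python lists stand in for LSTM hidden states. In the actual model, this tensor is further processed by convolutional and pooling layers, which are omitted here.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def build_social_tensor(
    target_xy: Tuple[float, float],
    neighbors: Sequence[Tuple[float, float, Vector]],
    hidden_dim: int,
    n_long: int = 13,           # longitudinal cells (illustrative choice)
    n_lat: int = 3,             # lateral cells, one per lane (illustrative choice)
    cell_length: float = 15.0,  # longitudinal cell size, same units as positions
    lane_width: float = 12.0,   # lateral cell size, same units as positions
) -> List[List[Vector]]:
    """Scatter each neighbor's hidden state into the grid cell it occupies
    relative to the target vehicle; cells without a neighbor stay zero."""
    tensor = [[[0.0] * hidden_dim for _ in range(n_lat)] for _ in range(n_long)]
    tx, ty = target_xy  # x: lateral position, y: longitudinal position
    for nx, ny, hidden in neighbors:
        if len(hidden) != hidden_dim:
            raise ValueError("hidden state has the wrong size")
        # Shift by half the grid so that the target vehicle falls in the center cell.
        col = math.floor((nx - tx) / lane_width + n_lat / 2)
        row = math.floor((ny - ty) / cell_length + n_long / 2)
        if 0 <= row < n_long and 0 <= col < n_lat:
            tensor[row][col] = list(hidden)  # a later neighbor in the same cell overwrites
    return tensor


# Usage: one neighbor in the adjacent lane two cells ahead, one far out of range.
tensor = build_social_tensor(
    target_xy=(0.0, 100.0),
    neighbors=[(12.0, 130.0, [0.3, -0.1]), (0.0, 400.0, [9.9, 9.9])],
    hidden_dim=2,
)
```

The target vehicle sits in row 6, column 1; the first neighbor lands in row 8, column 2, and the second is ignored because it falls outside the grid.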

Kim et al. [59] present an algorithm that quantitatively assesses the collision risk of a set of local trajectories through lane-based probabilistic motion prediction of surrounding vehicles. First, target lane probabilities, i.e., the probability that a driver will keep to or move into each lane, are computed from the lateral position and lateral velocity in curvilinear coordinates. The lateral offset of vehicles with respect to the road centerline is assumed to be measured by a suitable sensor suite, such as a camera, radar, or LIDAR. To estimate the collision probability, collision risk is used as the metric and modeled as an exponential distribution that depends on the time to collision (TTC). The prediction performance of the lane-based probabilistic model is first validated by comparing the probabilities of the target lane detection algorithm with maneuver probabilities obtained from real-world traffic data in the NGSIM dataset.
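The following is a minimal sketch of the two ingredients described for [59]. It assumes a Gaussian spread around the laterally extrapolated position for the target lane probabilities and a risk of the form exp(−TTC/τ) for the exponential dependence on TTC. The horizon, σ, τ, lane layout, and all function names are hypothetical choices for illustration; the exact formulation in [59] may differ.

```python
import math
from typing import List, Sequence


def target_lane_probabilities(
    lateral_pos: float,
    lateral_vel: float,
    lane_centers: Sequence[float],
    horizon: float = 2.0,  # look-ahead time [s] (assumed)
    sigma: float = 0.8,    # spread of the lateral prediction [m] (assumed)
) -> List[float]:
    """Probability of ending up in each lane, from a Gaussian centered on the
    lateral position extrapolated over `horizon` (curvilinear coordinates)."""
    predicted = lateral_pos + lateral_vel * horizon
    log_w = [-0.5 * ((predicted - c) / sigma) ** 2 for c in lane_centers]
    m = max(log_w)  # subtract the maximum to avoid underflow
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return [wi / total for wi in w]


def time_to_collision(gap: float, v_rear: float, v_front: float) -> float:
    """TTC [s]; infinite when the vehicles are not closing in."""
    closing_speed = v_rear - v_front
    return gap / closing_speed if closing_speed > 0 else math.inf


def collision_risk(ttc: float, tau: float = 3.0) -> float:
    """Exponential risk metric in [0, 1]; tau [s] is a hypothetical scale."""
    return math.exp(-ttc / tau)


def expected_risk(lane_probs: Sequence[float], lane_risks: Sequence[float]) -> float:
    """Risk of an ego trajectory, weighted by where the other vehicle is likely to go."""
    return sum(p * r for p, r in zip(lane_probs, lane_risks))


lanes = [-3.5, 0.0, 3.5]                       # lane centers [m]
probs = target_lane_probabilities(0.5, 1.2, lanes)  # neighbor drifting toward +3.5 m
ttc = time_to_collision(gap=20.0, v_rear=25.0, v_front=20.0)
risk_if_cut_in = collision_risk(ttc)
total = expected_risk(probs, [0.0, 0.0, risk_if_cut_in])
```

Here the ego vehicle is assumed to drive in the lane centered at +3.5 m, so only the outcome in which the neighbor moves into that lane carries risk.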

Hou et al. [60] propose a model of mandatory lane changes (merging into the traffic flow of a highway). Its input variables are the distances and relative speeds between the merging vehicle and the lead and lag vehicles in the target lane, together with the distance the vehicle has traveled along the merging lane. Bayesian classifiers and decision trees are used to predict whether the driver will perform the maneuver. The most relevant variable is the relative speed between the merging vehicle and the lead vehicle in the target lane, and, in general, relative speeds matter more than relative distances. Detailed vehicle trajectory data from the NGSIM dataset were used for model development (U.S. Highway 101) and testing (Interstate 80). Liu et al. [61] develop a deep learning model to evaluate discretionary lane change decisions. In addition to the instantaneous states of the subject and surrounding vehicles, the model takes into account the drivers' historical experience and the vehicle-to-vehicle memory effect, treating time series of trajectory data as the historical behavior of drivers. The classifier is a gated recurrent unit (GRU) neural network, a type of recurrent neural network (RNN). NGSIM traffic data are used to train and test the model.
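As an illustration of a Bayesian classifier for the merge decision studied in [60], the sketch below implements a Gaussian naive Bayes model from scratch. This is one possible Bayesian classifier, not necessarily the one used by Hou et al. The feature set mirrors the variables listed above, but the training samples are invented for illustration and are not NGSIM data.

```python
import math
from statistics import mean, pvariance
from typing import Dict, List, Sequence, Tuple


class GaussianNaiveBayes:
    """Tiny Gaussian naive Bayes classifier (features independent given the class)."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "GaussianNaiveBayes":
        self.classes = sorted(set(y))
        self.priors: Dict[int, float] = {}
        self.stats: Dict[int, List[Tuple[float, float]]] = {}
        for c in self.classes:
            rows = [x for x, label in zip(X, y) if label == c]
            self.priors[c] = len(rows) / len(X)
            # Per-feature mean and variance; a small floor keeps variances positive.
            self.stats[c] = [(mean(col), pvariance(col) + 1e-6) for col in zip(*rows)]
        return self

    def log_posterior(self, x: Sequence[float], c: int) -> float:
        lp = math.log(self.priors[c])
        for value, (mu, var) in zip(x, self.stats[c]):
            lp += -0.5 * math.log(2 * math.pi * var) - (value - mu) ** 2 / (2 * var)
        return lp  # unnormalized log posterior

    def predict(self, x: Sequence[float]) -> int:
        return max(self.classes, key=lambda c: self.log_posterior(x, c))


# Features: [relative speed to target-lane leader (m/s), gap to leader (m),
#            gap to follower (m), distance traveled on the merge lane (m)].
# Invented illustrative values, not NGSIM data.
X = [[2.0, 25.0, 20.0, 80.0], [1.5, 30.0, 18.0, 60.0], [2.5, 22.0, 25.0, 100.0],
     [-3.0, 8.0, 6.0, 40.0], [-2.5, 10.0, 5.0, 30.0], [-4.0, 6.0, 8.0, 50.0]]
y = [1, 1, 1, 0, 0, 0]  # 1 = merge now, 0 = wait
clf = GaussianNaiveBayes().fit(X, y)
```

In this toy setup the relative speed to the target-lane leader separates the classes most sharply, which echoes, but does not reproduce, the finding reported in [60].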

Benterki et al. [62] present a system for predicting lane change maneuvers on motorways. Maneuvers are classified as left lane change, right lane change, or lane keeping using two machine learning techniques: a support vector machine (SVM) and neural networks. The system also estimates, ahead of time, when the lane change maneuver will take place. The lane change process is divided into three stages: preparation, active execution, and completion. The system therefore exploits the changes that occur during the preparation stage to detect maneuvers early. Real driving data from the NGSIM dataset are used for training and testing. Ding et al. [63] propose a method that combines high-level policy anticipation with low-level context reasoning. An LSTM network anticipates the vehicle's driving policy (go ahead, yield, turn left, or turn right) from its sequential historical observations. This policy guides a low-level, optimization-based reasoning process in which cost maps represent the context information, namely road characteristics such as lane geometry, static objects, moving objects, the drivable area, and speed limits. The open-source urban autonomous driving simulator CAR Learning to Act (CARLA) [64] is used to collect the driving data with a Logitech G29 racing wheel.

Mahjoub et al. [65] propose a stochastic hybrid system with a cumulative relevant history based on GPs. Within a model-based communication context, this design jointly models driver/vehicle behavior as a stochastic object to obtain accurate short- and long-term predictions (within 0–3 s) of the critical dynamic states of the vehicle, such as position, speed, and acceleration. These predictions are made within the discrete modes of the system, which correspond to the different long-term behaviors (maneuvers) of the driver. The lane change is selected as a specific long-term behavior, and the lateral position of the vehicle is modeled from a set of previously observed instances. To do so, a cumulative training history of maneuver-specific data is built on the fly from identical or related maneuvers observed in the driver's recent history, and these data are fed to the model inference block as its initial training set. The method is evaluated on real trajectory data from 40 lane change maneuvers in the NGSIM dataset. For heavily congested networks, where messages are received infrequently, the authors recommend combining a constant speed model with the proposed Gaussian process regression model, so that prediction is ensured both for the near future (less than one second) and for the far future (between one and three seconds).
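The sketch below illustrates the idea behind [65] under simplifying assumptions. A GP with an RBF kernel maps the time elapsed since the start of a lane change to the lateral offset, trained on a synthetic logistic profile that stands in for the cumulative history of previously observed maneuvers. It also shows the recommended hybrid, with constant velocity extrapolation below 1 s and the GP beyond. Kernel hyperparameters, the data, and all function names are hypothetical, and hyperparameter learning is omitted.

```python
import math
from typing import Callable, List, Sequence


def rbf(a: float, b: float, length: float = 1.0, variance: float = 1.0) -> float:
    return variance * math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(A: List[List[float]]) -> List[List[float]]:
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(A[i][i] - s) if i == j else (A[i][j] - s) / L[j][j]
    return L


def cholesky_solve(L: List[List[float]], b: Sequence[float]) -> List[float]:
    n = len(L)
    z = [0.0] * n
    for i in range(n):  # forward substitution: L z = b
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution: L^T x = z
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_fit(t_train, y_train, length=1.0, variance=1.0, noise=1e-2) -> Callable[[float], float]:
    """Return the GP posterior mean as a function of time (prior mean = sample mean)."""
    n = len(t_train)
    K = [[rbf(t_train[i], t_train[j], length, variance) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    offset = sum(y_train) / n
    alpha = cholesky_solve(cholesky(K), [y - offset for y in y_train])
    return lambda t: offset + sum(rbf(t, ti, length, variance) * a
                                  for ti, a in zip(t_train, alpha))


def hybrid_predict(y_now, vy_now, t_now, horizon, gp_mean) -> float:
    """Constant velocity extrapolation below 1 s, GP prediction beyond."""
    if horizon < 1.0:
        return y_now + vy_now * horizon
    return gp_mean(t_now + horizon)


# Lateral offset [m] vs. time since maneuver start [s] (synthetic stand-in history).
t_hist = [0.5 * k for k in range(7)]
y_hist = [3.5 / (1.0 + math.exp(-2.0 * (t - 1.5))) for t in t_hist]
gp_mean = gp_fit(t_hist, y_hist)
```

Because an RBF-kernel GP reverts to its prior mean far from the data, the sketch predicts within the time span covered by past maneuvers rather than extrapolating beyond it.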

The following works use more specialized and more expensive sensors, such as radar, LIDAR, GPS, and IMU, to capture information on the vehicle–environment interaction. These devices, however, suffer from line-of-sight obstruction in the case of LIDAR and radar and, more generally, from unfavorable environmental conditions. Batsch et al. [66] propose a Gaussian process (GP) classification model to detect whether there is a risk of collision between a vehicle and the slower vehicle ahead of it in a traffic congestion scenario involving several vehicles. The system is trained on data produced by the CarMaker simulation software [67]. Tests are conducted in an automated vehicle equipped with a radar sensor, neglecting the sensor uncertainty in velocity and aperture angle measurements. Zyner et al. [68] present a recurrent neural network method that predicts driver intention by predicting multimodal trajectories with an associated level of uncertainty. To handle data sequences of different lengths, they introduce sequence-padding techniques that take the last known position of the vehicle as reference. The data contain the lateral and longitudinal position track history, as well as heading and velocity. Park et al. [69] use LSTM networks to predict the future trajectory of surrounding vehicles from the history of their past trajectory, formulating trajectory prediction as a multi-class sequential classification problem. The system is evaluated with real vehicle trajectory data from a highway environment, collected with a test vehicle equipped with radar and IMU sensors.
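One plausible reading of the sequence-padding technique of [68] is to bring every track to a common length by repeating its last known position, with a mask that marks the padded steps. The sketch below implements that reading. The function name and the choice to keep the most recent samples when a track is too long are our own assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (lateral, longitudinal) position


def pad_with_last_position(
    tracks: Sequence[Sequence[Point]], length: Optional[int] = None
) -> Tuple[List[List[Point]], List[List[int]]]:
    """Bring every track to the same length by repeating its last known position.

    Also returns a mask (1 = observed, 0 = padded) so a model can ignore the fill."""
    if any(len(t) == 0 for t in tracks):
        raise ValueError("every track needs at least one observed position")
    target = length if length is not None else max(len(t) for t in tracks)
    if target < 1:
        raise ValueError("length must be at least 1")
    padded, mask = [], []
    for track in tracks:
        kept = list(track[-target:])  # keep the most recent samples if too long
        n_obs = len(kept)
        padded.append(kept + [kept[-1]] * (target - n_obs))
        mask.append([1] * n_obs + [0] * (target - n_obs))
    return padded, mask


tracks = [[(0.0, 0.0), (0.1, 1.0), (0.2, 2.1)], [(3.5, 5.0)]]
padded, mask = pad_with_last_position(tracks)
```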

Next, we group the works that combine cameras with sensors such as radar, LIDAR, GPS, and IMU to capture information on the interactions with the vehicle environment. Using more sensors based on different technologies makes the system more robust thanks to redundancy in the received information, but processing data from diverse, heterogeneous sources also raises the computational cost. Liu et al. [70] establish a decision-making model for autonomous discretionary lane changes based on benefit, safety, and tolerance functions. The model considers not only the lane change factors associated with route planning and tracking in autonomous vehicles but also the lane change decision-making process itself. The benefit function considers the relative speeds and distances between the vehicle and the preceding vehicles in the current lane and in the target lane. The safety function considers a minimum safe distance between the vehicle and the following vehicle in the target lane, together with the relative distance and speed between the two. Finally, the tolerance function considers the relative distance and speed between the vehicle and the preceding vehicle in the current lane, avoiding unnecessary lane changes when the gap between them is large. To verify the effectiveness of the model in real scenarios, the authors tested it on a vehicle equipped with Mobileye, millimeter-wave radar, mobile station GPS, a dSPACE AutoBox, an IMU, and other devices.
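The benefit, safety, and tolerance functions of [70] can be sketched as three simple checks combined into one decision. The functional forms, weights, and thresholds below (`w_gap`, `d_min`, `t_react`, `time_gap`) are hypothetical placeholders, chosen only to show how the three functions interact; they are not the formulas of Liu et al.

```python
from dataclasses import dataclass


@dataclass
class Neighbor:
    gap: float    # bumper-to-bumper distance to the ego vehicle [m]
    speed: float  # [m/s]


def benefit(lead_current: Neighbor, lead_target: Neighbor, w_gap: float = 0.1) -> float:
    """Positive when the target lane offers a faster and/or roomier leader."""
    return (lead_target.speed - lead_current.speed) + w_gap * (lead_target.gap - lead_current.gap)


def is_safe(ego_speed: float, follower_target: Neighbor,
            d_min: float = 5.0, t_react: float = 1.0) -> bool:
    """The gap to the target-lane follower must cover a minimum distance plus its closing speed."""
    closing = max(0.0, follower_target.speed - ego_speed)
    return follower_target.gap >= d_min + closing * t_react


def tolerance_exceeded(ego_speed: float, lead_current: Neighbor, time_gap: float = 2.0) -> bool:
    """The driver only considers changing lanes when a slower leader is close."""
    return lead_current.speed < ego_speed and lead_current.gap < time_gap * ego_speed


def decide_lane_change(ego_speed: float, lead_current: Neighbor,
                       lead_target: Neighbor, follower_target: Neighbor) -> bool:
    return (tolerance_exceeded(ego_speed, lead_current)
            and benefit(lead_current, lead_target) > 0.0
            and is_safe(ego_speed, follower_target))


# Ego at 25 m/s behind a slow leader; faster, roomier target lane; follower far enough back.
change = decide_lane_change(25.0, Neighbor(20.0, 18.0), Neighbor(60.0, 27.0), Neighbor(30.0, 24.0))
stay_far_leader = decide_lane_change(25.0, Neighbor(100.0, 18.0), Neighbor(60.0, 27.0), Neighbor(30.0, 24.0))
stay_unsafe = decide_lane_change(25.0, Neighbor(20.0, 18.0), Neighbor(60.0, 27.0), Neighbor(3.0, 30.0))
```

The tolerance check plays the role described above: with the current leader 100 m ahead, no lane change is considered even though the target lane is faster.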

This group comprises the works that use vehicular communications (DSRC) exclusively to capture information on the interactions with the vehicle environment. Communications largely compensate for the range limitations of sensors and cameras and offer greater flexibility in the road safety information that can be exchanged. However, all wireless communication technologies have limitations, such as packet loss, transmission errors, and communication delay. Fallah et al. [71] apply the model-based communication scheme to a cooperative forward collision warning (FCW) system, using the example of CAMPLinear and, specifically, the collision detection algorithm proposed in [72] and later refined in [73]. This algorithm takes as input the speed and acceleration of both the host vehicle and the remote vehicle, i.e., the vehicle ahead in the same lane. The model used to estimate the mobility data of the remote vehicle belongs to the family of car-following models; specifically, the model introduced in [74] is used. The system is described with a hybrid automaton [75], a well-known method for modeling systems with mixed discrete and continuous states. To evaluate the model-based communication (MBC) approach, two configurations (MBC1 and MBC2) are compared with traditional schemes that directly transmit speed and acceleration data. In both MBC1 and MBC2, each vehicle sends its movement models once at the start of the test and then periodically transmits updates of the model inputs (speed and acceleration). MBC2 also transmits occasional additional messages when the movement pattern changes, and this second configuration tracks the movement of a vehicle most accurately.

Huang et al. propose [76] and develop [77] a mechanism based on real-time estimation of the position-tracking errors of neighboring vehicles to manage cooperative information exchange in vehicular communication environments. They first evaluate tracking-error-dependent, decentralized information dissemination policies for multiple dynamic systems and then use a collision-error-dependent policy to improve tracking performance. The transmission probability of each vehicle is computed every 50 ms from the expected tracking errors. On receiving information from the channel, each vehicle updates its estimates of the neighboring vehicles' states with a first-order kinematic model, i.e., a constant speed predictor. The key idea of these algorithms is that message generation and communication timing must be chosen so that the position-tracking error remains reasonably bounded. Each vehicle runs a local copy of the estimator used by its neighbors: the sender compares the output of this simulated estimator with its actual state to estimate the position-tracking error and decides whether remote vehicles need an update. The decision compares the position-tracking error with a configurable threshold, usually set according to the requirements of the cooperative road safety application (RSA).
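The sender-side logic described for [76,77] can be sketched as follows. The sender keeps a shadow copy of the constant speed predictor that its neighbors run and broadcasts only when the shadow's position error exceeds a threshold. This simplified sketch replaces the probabilistic transmission computed every 50 ms in the original work with a deterministic threshold test. The 0.5 m threshold, the driving profile, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SharedEstimate:
    t: float  # time of the last broadcast [s]
    x: float  # position broadcast at that time [m]
    v: float  # speed broadcast at that time [m/s]

    def predict(self, t: float) -> float:
        # First-order kinematic (constant speed) predictor run by the receivers.
        return self.x + self.v * (t - self.t)


class ErrorTriggeredSender:
    def __init__(self, threshold: float):
        self.threshold = threshold  # tolerated position-tracking error [m]
        self.shadow: Optional[SharedEstimate] = None  # local copy of the neighbors' estimator

    def step(self, t: float, x: float, v: float) -> bool:
        """Return True when a message must be sent at time t."""
        if self.shadow is None or abs(self.shadow.predict(t) - x) > self.threshold:
            self.shadow = SharedEstimate(t, x, v)  # receivers will restart from this state
            return True
        return False


def true_state(t: float) -> Tuple[float, float]:
    """Hypothetical drive: 20 m/s cruise for 2 s, then braking at 6 m/s^2."""
    if t <= 2.0:
        return 20.0 * t, 20.0
    tb = t - 2.0
    return 40.0 + 20.0 * tb - 3.0 * tb * tb, 20.0 - 6.0 * tb


sender = ErrorTriggeredSender(threshold=0.5)
sent: List[float] = []
for k in range(81):  # 4 s, checked every 50 ms
    t = k * 0.05
    x, v = true_state(t)
    if sender.step(t, x, v):
        sent.append(t)
```

During cruising, the receivers' prediction is exact, so nothing is sent after the first message; once braking starts, the error grows quadratically and messages resume roughly every 0.45 s.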

Mahjoub et al. [78] propose a technology-independent hybrid model selection policy, based on the MBC scheme, for vehicle-to-everything (V2X) communication. The core idea is a hybrid modeling architecture that switches between modeling subsystems to adapt to the dynamic state of the vehicle. Two modeling states are used: one governed by a GP with two kernels (a linear kernel and a radial basis function kernel), and another defined by the constant velocity kinematic model. A tracking error threshold determines which model is used. The results show that the proposed architecture both reduces the required message exchange rate and improves the accuracy of remote vehicle tracking. The higher tracking accuracy of the MBC scheme can be attributed to its ability to capture higher-order vehicle dynamics, such as those arising from harsh braking and lane change maneuvers.

Mahjoub et al. [79] explore the modeling capabilities of a non-parametric Bayesian inference method, the GP, integrated into the MBC design scheme, and show that it accurately represents different driving behavior patterns using a bank of GP kernels of limited size. To do this, a group of representative trajectories was selected from the SPMD data [80], and the properties of the kernel bank required for the GP-MBC scheme were explored. The two fundamental metrics used to evaluate the system are the length of the transmitted message (related to the size of the kernel bank) and the message transmission rate (related to the persistence of the model). With such a kernel bank, transmitting entities can send only the kernel ID instead of the kernel itself, which reduces the packet length. Model persistence is the time during which a model remains valid for prediction, i.e., during which the prediction error stays below the threshold set by the RSA requirements. The results showed that a finite-size bank of GP kernels can predict the future position of the vehicle with the precision required by the RSA through an indirect method, i.e., by predicting the time series of the future speed and heading of the vehicle. Indirect position estimation (through speed, heading, or acceleration) achieves better results than direct position estimation.
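Model persistence, as defined for [79], can be measured by running a candidate model forward and recording how long its prediction stays within the RSA error threshold. In the sketch below, a small bank of models shared in advance lets the sender transmit only an ID and an initial speed. The three speed-profile models are hypothetical stand-ins for GP kernels, chosen to keep the example short, and all names are illustrative.

```python
from typing import Callable, Dict, Sequence, Tuple

Sample = Tuple[float, float]       # (time [s], speed [m/s])
Model = Callable[[float], float]   # speed as a function of time since the message


def persistence(model: Model, samples: Sequence[Sample], threshold: float) -> float:
    """Time from the first sample during which |prediction - observation| <= threshold."""
    t0 = samples[0][0]
    valid_until = t0
    for t, observed in samples:
        if abs(model(t - t0) - observed) > threshold:
            break
        valid_until = t
    return valid_until - t0


def model_bank(v0: float) -> Dict[int, Model]:
    """Hypothetical bank shared in advance by all vehicles; only the ID travels on air."""
    return {
        0: lambda tau: v0,                        # cruising
        1: lambda tau: max(0.0, v0 - 2.0 * tau),  # mild braking
        2: lambda tau: max(0.0, v0 - 6.0 * tau),  # harsh braking
    }


def choose_model_id(samples: Sequence[Sample], threshold: float) -> Tuple[int, float]:
    """Pick the bank entry that stays valid longest; return (ID, persistence)."""
    bank = model_bank(samples[0][1])
    best = max(bank, key=lambda k: persistence(bank[k], samples, threshold))
    return best, persistence(bank[best], samples, threshold)


# Observed speed profile of a harsh braking event, sampled every 0.1 s.
samples = [(0.1 * k, 20.0 - 6.0 * (0.1 * k)) for k in range(21)]
model_id, valid_for = choose_model_id(samples, threshold=0.5)
```

With these numbers, the harsh braking entry remains valid for the full 2 s window, whereas the cruising model already exceeds the threshold at the first sample after the message.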

Vinel et al. [81] design an analytical framework that relates the behavior of cooperative road safety applications to the performance of V2V communications. They analyze the relationship between V2V communication characteristics, namely packet loss probability and packet transmission delay, and the physical mobility characteristics of vehicles, such as the inter-vehicle safety distance. The case study is the cooperative road safety application of emergency electronic brake lights defined by ETSI.
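The kind of relationship analyzed in [81] can be illustrated with a simple worked model. Assume that a warning is repeated every T seconds, that each repetition is lost independently with probability p, and that each delivered packet incurs a fixed delay d. The number of lost repetitions is then geometric, so the expected latency is T·p/(1 − p) + d, and a vehicle at speed v covers v times that latency before the follower is informed. These assumptions, the function names, and the numbers below are ours, not the framework of Vinel et al.

```python
def expected_latency(beacon_period: float, loss_prob: float, tx_delay: float) -> float:
    """Mean time until the first successful reception of a repeated warning.

    Each repetition is lost independently with probability loss_prob, so the
    number of lost repetitions is geometric with mean p / (1 - p)."""
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    return beacon_period * loss_prob / (1.0 - loss_prob) + tx_delay


def prob_received_within(deadline: float, beacon_period: float,
                         loss_prob: float, tx_delay: float) -> float:
    """Probability that at least one repetition arrives by `deadline` seconds."""
    if deadline < tx_delay:
        return 0.0
    attempts = int((deadline - tx_delay) // beacon_period) + 1
    return 1.0 - loss_prob ** attempts


def extra_gap(speed: float, latency: float) -> float:
    """Distance covered before the follower even learns about the hard braking."""
    return speed * latency


# Hypothetical numbers: 10 Hz repetitions, 50 % loss, 10 ms delay, 30 m/s.
lat = expected_latency(0.1, 0.5, 0.01)
gap = extra_gap(30.0, lat)
p_250ms = prob_received_within(0.25, 0.1, 0.5, 0.01)
```

Under these assumptions, the expected latency is 0.11 s, which adds about 3.3 m to the safety distance needed at 30 m/s, and the warning arrives within 250 ms with probability 0.875.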

Finally, we group the works that combine vehicular communications (DSRC) with cameras and sensors such as radar, LIDAR, GPS, and IMU to capture information on the interaction with the vehicle environment. This combination lets communication and sensor technologies complement each other, drawing on the strengths of both, which yields more complete performance and better prospects for meeting road safety challenges. Mahjoub et al. [82] design a system that predicts the lateral and longitudinal motion of the vehicle. Longitudinal trajectories are predicted with neural-network-based nonlinear autoregressive exogenous models, and lateral trajectories with recurrent neural networks (RNN). The system uses two main sources of information: (i) cameras and on-board detection devices such as radar and LIDAR, assumed to be the primary information providers for CAV applications; and (ii) V2V communication through dedicated short-range communication (DSRC) devices, regarded as an important supplementary source whenever it is available. The system is evaluated not only under ideal communication conditions but also in scenarios with up to 40% packet loss. For these cases, a zero-order hold estimation method is included to counter packet loss or sensor failure and to reconstruct the time series of vehicle parameters at a predetermined frequency. The evaluation is simulated with real communication scenarios using data from the Safety Pilot Model Deployment (SPMD) [80]. Du et al. [83] develop a network architecture called vehicular fog computing to implement cooperative data sensing among multiple adjacent vehicles traveling in a platoon. Based on this architecture, a greedy algorithm maximizes sensing coverage (associated with the area ratio and total ratio parameters) and minimizes overlapping coverage (associated with the efficiency parameter), improving parallel computation through distributed management of the computing resources of the platoon members. An SVM algorithm fuses the sensing data of multiple vehicles to obtain precise information on vehicle states. Through occupancy grid filtering (OGF) of the on-board sensors (LIDAR, cameras), the environment is mapped into occupancy states. These OGF maps are integrated in the lead vehicle, where an SVM classifies whether a grid cell is occupied by a vehicle. To train the SVM classifier, GPS position data from the NGSIM dataset are used to locate vehicles on the OGF map. Finally, the fused sensing data of multiple vehicles (vehicle locations on the OGF map) are fed to a lightweight GRU neural network that predicts discretionary lane change maneuvers, classifying them as lane keeping or lane change.
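The zero-order hold used in [82] to cope with lost packets can be sketched as a resampler that repeats the last received value at each grid instant. The function name, the tolerance `eps`, and the example data are illustrative assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def zero_order_hold(
    samples: Sequence[Tuple[float, float]],
    t_start: float,
    t_end: float,
    period: float,
    eps: float = 1e-9,
) -> List[Tuple[float, Optional[float]]]:
    """Resample irregular (time, value) pairs onto a fixed grid, holding the
    last received value when a packet is missing (None before the first one)."""
    ordered = sorted(samples)
    n_steps = int(round((t_end - t_start) / period))
    out: List[Tuple[float, Optional[float]]] = []
    idx, last = 0, None
    for k in range(n_steps + 1):
        t = t_start + k * period
        # Consume every sample received up to (and including) this grid instant.
        while idx < len(ordered) and ordered[idx][0] <= t + eps:
            last = ordered[idx][1]
            idx += 1
        out.append((t, last))
    return out


# Speed reports at 10 Hz; the packet for t = 0.2 s was lost.
received = [(0.0, 10.0), (0.1, 11.0), (0.3, 13.0)]
grid = zero_order_hold(received, 0.0, 0.3, 0.1)
```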

Moradi-Pari et al. [84] use the model-based communication scheme to design a small-scale and large-scale modeling strategy for vehicle motion dynamics. Vehicle behavior is represented as a stochastic hybrid system in which: (i) small-scale evolution, i.e., braking and acceleration actions, is represented by autoregressive exogenous models; and (ii) large-scale evolution, which includes lane change maneuvers and free-flow driving, is represented by coupling these models within a Markov chain. At each model computation step, all states available in the latest version of the model are explored, and the best-fit parameter values of each state are found given the new observation. If at least one state satisfies the error threshold specified by the application with its new parameter values, the current model is considered fully descriptive of the entire observation sequence received. If, however, the minimum error achieved by the current model exceeds the required threshold, a new state is introduced to represent the new observation segment and describe the driver's latest maneuver. To evaluate the proposed models and adaptive cruise control methods, various scenarios were simulated with different realistic datasets, including SPMD data [80] and driving cycles from the Environmental Protection Agency testing standards [85].
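The state-introduction rule described for [84] can be sketched with first-order ARX models fitted by least squares. Each discrete state keeps its own data, a new segment is tested against every state, and a new state is created when none stays under the error threshold. The model order, the threshold, the synthetic data, and all names are hypothetical, and the Markov chain transition probabilities between states are not modeled.

```python
import math
from typing import List, Sequence, Tuple

Segment = Tuple[Sequence[float], Sequence[float]]  # (states x[0..n], inputs u[0..n-1])


def fit_arx(segments: Sequence[Segment]) -> Tuple[float, float, float]:
    """Least-squares fit of x[k+1] = a*x[k] + b*u[k]; returns (a, b, RMSE)."""
    rows = [(x[k], u[k], x[k + 1]) for x, u in segments for k in range(len(x) - 1)]
    sxx = sum(xk * xk for xk, _, _ in rows)
    sxu = sum(xk * uk for xk, uk, _ in rows)
    suu = sum(uk * uk for _, uk, _ in rows)
    sxy = sum(xk * y for xk, _, y in rows)
    suy = sum(uk * y for _, uk, y in rows)
    det = sxx * suu - sxu * sxu  # determinant of the 2x2 normal equations
    if abs(det) < 1e-12:
        raise ValueError("data do not excite both parameters")
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    rmse = math.sqrt(sum((a * xk + b * uk - y) ** 2 for xk, uk, y in rows) / len(rows))
    return a, b, rmse


class AdaptiveHybridModel:
    """Discrete states = maneuver-specific ARX models; grows when none fits."""

    def __init__(self, threshold: float):
        self.threshold = threshold  # application-specific error bound
        self.states: List[List[Segment]] = []

    def observe(self, segment: Segment) -> int:
        best_state, best_err = -1, math.inf
        for i, data in enumerate(self.states):
            _, _, err = fit_arx(data + [segment])  # refit each state with the new data
            if err < best_err:
                best_state, best_err = i, err
        if best_state >= 0 and best_err <= self.threshold:
            self.states[best_state].append(segment)
            return best_state
        self.states.append([segment])  # no state explains it: introduce a new one
        return len(self.states) - 1


def simulate(a: float, b: float, x0: float, inputs: Sequence[float]) -> Segment:
    x = [x0]
    for u in inputs:
        x.append(a * x[-1] + b * u)
    return x, list(inputs)


model = AdaptiveHybridModel(threshold=0.05)
ids = [
    model.observe(simulate(0.9, 0.5, 10.0, [1.0, 0.0, 2.0, 1.0, 0.0])),
    model.observe(simulate(0.9, 0.5, 5.0, [2.0, 2.0, 0.0, 1.0, 3.0])),  # same dynamics
    model.observe(simulate(0.5, 2.0, 8.0, [0.0, 1.0, 1.0, 2.0, 0.0])),  # new maneuver
]
```

The second segment is generated by the same dynamics and is absorbed by the existing state; the third cannot be explained within the threshold and opens a new state.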

We want to emphasize that cooperative driving can contribute significantly to the development of C-ADAS, since it is the most important outcome of sensing, communication, and automation technologies and, in turn, significantly influences driver behavior. Z. Wang et al. [37] review the literature on cooperative longitudinal motion control of multiple CAVs, with an emphasis on the architecture of several cooperative CAV systems. They discuss the control aspects of CAV systems in depth, highlighting the main challenges posed by different information flow topologies, which concern mainly string stability, communication issues, and dynamics heterogeneity. Zhou et al. [86] review learning-based longitudinal motion planning models for autonomous vehicles, focusing on their impact on traffic congestion. They survey non-imitation learning and imitation learning methods and describe the emerging technologies used by the main automakers to implement cooperative driving.

Multiple works have investigated, in the context of cooperative driving, the design of control systems that support traffic management and/or intersection crossing. Zu et al. [87] propose a cooperative method for connected automated vehicles that controls traffic light timing and manages the optimal speed of the vehicles. Optimizing the traffic light timing and computing vehicle arrival times at the intersection minimizes the total travel time of all vehicles as well as the fuel consumption of individual vehicles. Zheng et al. [88] establish analytical results on the stability, controllability, and reachability of a mixed-traffic system composed of autonomous and human-driven vehicles. The proposed system allows traffic to flow at a higher speed and shows that autonomous vehicles, together with cooperative driving, can save time and energy, smooth traffic flow, and dampen traffic oscillations. Wang et al. [89] propose a cooperative platoon system for CAVs based on model predictive control with real-time capability to efficiently manage the car-following behavior of all CAVs in a platoon. A constant time gap policy is used to set the equilibrium gap between successive vehicles. Zhou et al. [90] introduce a smooth-switching-control-based cooperative adaptive cruise control scheme with information flow topology optimization to improve ride comfort while maintaining string stability. A Kalman filter-based predictor estimates the state of the preceding vehicle, suppressing measurement noise and estimating its acceleration in the event of communication failures. Zhou et al. [91] propose a hybrid cooperative intersection control framework to manage the entry and exit of a group of vehicles at an intersection. A virtual platoon groups these vehicles according to their proximity to the entrance of the intersection conflict zone, and a vehicle's position within the virtual platoon may differ from its actual relative position. The virtual platoon is obtained by linearly projecting the distances of the vehicles from the center of the intersection, after which platoon control rules are applied to manage the movement of the approaching vehicles.
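To illustrate the Kalman filter predictor mentioned for [90], the sketch below implements a textbook linear Kalman filter with a constant acceleration model, written with small plain-Python matrix helpers. Only the position and speed of the preceding vehicle are measured (e.g., by radar), and its acceleration, normally received over V2V, is inferred. The noise levels, sampling time, and class name are hypothetical, and the filter is not claimed to match the design of Zhou et al.

```python
from typing import List

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A: Matrix) -> Matrix:
    return [list(row) for row in zip(*A)]


def add(A: Matrix, B: Matrix) -> Matrix:
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A: Matrix, B: Matrix) -> Matrix:
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inv2(M: Matrix) -> Matrix:
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det], [-M[1][0] / det, M[0][0] / det]]


class PrecedingVehicleKF:
    """Constant acceleration Kalman filter; state = [position, speed, acceleration]."""

    def __init__(self, dt: float, accel_noise=0.5, pos_noise=0.25, speed_noise=0.04):
        self.x = [[0.0], [0.0], [0.0]]
        self.P = [[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]]
        self.F = [[1.0, dt, 0.5 * dt * dt], [0.0, 1.0, dt], [0.0, 0.0, 1.0]]
        # Simplified process noise: only the acceleration is allowed to drift.
        self.Q = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, accel_noise * dt]]
        self.H = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # position and speed are measured
        self.R = [[pos_noise, 0.0], [0.0, speed_noise]]

    def predict(self) -> None:
        self.x = matmul(self.F, self.x)
        self.P = add(matmul(matmul(self.F, self.P), transpose(self.F)), self.Q)

    def update(self, position: float, speed: float) -> None:
        innovation = sub([[position], [speed]], matmul(self.H, self.x))
        S = add(matmul(matmul(self.H, self.P), transpose(self.H)), self.R)
        K = matmul(matmul(self.P, transpose(self.H)), inv2(S))  # Kalman gain (3x2)
        self.x = add(self.x, matmul(K, innovation))
        I = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
        self.P = matmul(sub(I, matmul(K, self.H)), self.P)


# Leader starts at 10 m/s and accelerates at 1 m/s^2; noiseless measurements every 0.1 s.
dt, true_accel = 0.1, 1.0
kf = PrecedingVehicleKF(dt)
for k in range(1, 201):
    t = k * dt
    kf.predict()
    kf.update(10.0 * t + 0.5 * true_accel * t * t, 10.0 + true_accel * t)
estimated_accel = kf.x[2][0]
```

Because the acceleration is inferred from position and speed measurements alone, the controller can fall back on this estimate when V2V acceleration messages are lost.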

The management of the information exchanged in a V2V communication environment has been addressed in [92], where Wang et al. develop a queue-based mathematical model to manage the transmission of multiple classes of information with different delay levels according to the cooperative road safety applications. In [93], the same authors addressed traffic management in mixed environments with human-driven vehicles and connected and autonomous vehicles, analyzing the differences in route choice principles and traffic patterns between the two. In [94], they also considered dedicated lanes with free access for connected and autonomous vehicles.

Table 3 summarizes the main works reviewed that address elements of the vehicle–environment interaction. For each work, it indicates the directionality with which the interaction is approached and how it is implemented.

4.2. Current Challenges

The challenges addressed in this section focus on sensor and communication technologies. The main challenges regarding sensor technologies are:

(i) Sensor occlusion with respect to the line of sight to objects and other road actors. The performance of these technologies degrades under various weather and environmental conditions, such as roads with snow-covered markings, heavy rain, or dense fog. Objects, people, and animals close to the vehicle, or occluding each other, pose serious safety problems for detection by these devices. The problem is less serious when objects are farther away, where processing algorithms can help the sensors improve detection. These drawbacks can be mitigated through sensor redundancy, as in [95], where a 360-degree vision system is used for parking assistance.

(ii) The high computational resource consumption of the image processing algorithms used in camera-based sensors. Detection at distances greater than 200 m requires ultra-high-resolution cameras to capture small details in the target image. Powerful image processing algorithms are therefore needed to analyze the large volume of image data and extract useful information from the associated noise [96], which remains a limitation for the real-time processing that road safety demands.

(iii) The high cost of specialized hardware. Technologies that are well established in the market, such as cameras and radars, have reached large-scale production, which has lowered their cost. The situation differs for more specialized automotive technologies such as LIDAR, whose inclusion as standard equipment considerably increases vehicle prices; the extent of the increase depends on factors such as the intended use of the vehicle and whether the device provides 2D or 3D sensing.

The main challenges related to communication technologies are:

(i) The scarce deployment of network infrastructure along roads. Large-scale deployment of V2I technologies is expensive and time-consuming. Various costs must be borne depending on the location, such as the installation of nodes, bandwidth, and power supply, as well as the subsequent maintenance of the installed equipment. Another important aspect is the share of connected vehicles in traffic, given that cooperative road safety applications require a high penetration rate of vehicles with connection capabilities; e.g., for the cooperative collision warning application, a penetration rate greater than 60% of connected vehicles is desirable [97].
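One way to see why V2V applications such as cooperative collision warning need a high penetration rate is that a direct warning between two vehicles is only possible when both are equipped. Under the simplifying assumption that equipped vehicles are spread independently and uniformly through traffic, the share of vehicle pairs that can cooperate is the square of the penetration rate. The sketch below illustrates this; the function name is hypothetical and the model is not the one used in [97].

```python
def pairwise_connected_share(penetration_rate: float) -> float:
    """Share of vehicle pairs in which both vehicles are connected.

    Hypothetical model: connectivity is assumed to be distributed
    independently and uniformly across the vehicle fleet.
    """
    if not 0.0 <= penetration_rate <= 1.0:
        raise ValueError("penetration rate must lie in [0, 1]")
    # Both vehicles in a pair must be equipped for direct V2V cooperation.
    return penetration_rate ** 2


for p in (0.2, 0.4, 0.6, 0.8):
    share = pairwise_connected_share(p)
    print(f"penetration {p:.0%}: {share:.0%} of vehicle pairs can cooperate")
```

Under this assumption, even at the 60% threshold cited above only about a third of vehicle pairs could exchange warnings directly.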

(ii) The lack of robustness of current communication networks when operating in a vehicular environment, as evidenced by packet loss and other problems such as channel congestion, transmission delay, and fading and shadowing in signal propagation. The reliability and accuracy of the exchanged data are vital for cooperative applications to function properly, since inaccurate data can lead to wrong decisions regarding road safety. These networks must also be robust against duplicate messages and false-positive alerts. Moreover, accuracy alone is not sufficient: the information must also be fresh, because road safety data are highly time-sensitive. It is not enough to detect a risk situation on the road and communicate it accurately; the information must also arrive in time for an appropriate reaction to the danger, which is what ensures the correct overall operation of cooperative road safety applications. In this respect, emerging technologies such as 5G can provide greater capacity and lower latency for exchanging this sensitive information [98]. As the volume of data grows, tools and algorithms capable of processing it efficiently become necessary. Handling raw data is becoming less feasible and more expensive, and dimensionality reduction techniques are required to identify patterns in the data and achieve more scalable developments [99].
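The duplicate-suppression and freshness requirements can be made concrete with a small receive-side filter. The sketch below is hypothetical: the message fields, the 100 ms age limit, and the class names are illustrative assumptions; it assumes that sender and receiver clocks are synchronized; and it does not reproduce any standardized V2X message format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WarningMessage:
    # Hypothetical fields; standardized V2X messages are structured differently.
    sender_id: str
    sequence_no: int
    generated_at_s: float  # sender timestamp; assumes synchronized clocks
    payload: str


class ReceiveFilter:
    """Drops stale and duplicate warnings before they reach the driver."""

    def __init__(self, max_age_s: float):
        self.max_age_s = max_age_s
        # Unbounded here for brevity; a real receiver would prune old entries.
        self._seen: set[tuple[str, int]] = set()

    def accept(self, msg: WarningMessage, now_s: float) -> bool:
        key = (msg.sender_id, msg.sequence_no)
        if key in self._seen:
            return False  # duplicate: this warning was already processed
        if now_s - msg.generated_at_s > self.max_age_s:
            return False  # stale: too old to act on safely
        self._seen.add(key)
        return True


f = ReceiveFilter(max_age_s=0.1)
m = WarningMessage("veh-42", 7, generated_at_s=10.00, payload="hard braking ahead")
print(f.accept(m, now_s=10.05))  # True: fresh, first copy
print(f.accept(m, now_s=10.06))  # False: same sender and sequence number
late = WarningMessage("veh-42", 8, generated_at_s=10.00, payload="hard braking ahead")
print(f.accept(late, now_s=10.20))  # False: older than the 0.1 s limit
```

Rejecting a warning for being stale is itself a safety trade-off: the age limit has to be short enough that accepted information is still actionable, yet long enough that normal network delay does not discard valid alerts.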

(iii) The security and privacy of the exchanged information are an important challenge to consider when designing C-ADAS. As in any communication network where private information is exchanged, data security and integrity are vital, and in these networks they are even more important, since people’s lives and overall road safety are at stake. In insufficiently protected networks, attackers may take control of a vehicle’s safety systems, causing traffic diversions or triggering the emergency braking system, steal users’ personal data, or, in the worst case, create the conditions for traffic accidents. These challenges affect both OEM and aftermarket connectivity systems [100], and they are especially critical in automated vehicles whose actuators are electronically controlled. A further problem is that network security mechanisms can occasionally compromise vehicle safety themselves: the overhead of the security information exchanged for authentication, validation, and security certificates can cause excessive communication delays.
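Standardized vehicular security (e.g., IEEE 1609.2) relies on digital signatures and certificates rather than shared keys. The sketch below swaps in a simpler HMAC-SHA256 tag from Python's standard library only to illustrate both sides of the trade-off described above: an integrity check lets the receiver reject tampered messages, but every message carries extra bytes and requires extra computation. The function names and the key are hypothetical.

```python
import hashlib
import hmac
from typing import Optional

# Bytes of overhead added to every message (32 for SHA-256).
TAG_LEN = hashlib.sha256().digest_size


def protect(key: bytes, message: bytes) -> bytes:
    """Append an HMAC-SHA256 tag so the receiver can check integrity."""
    return message + hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, packet: bytes) -> Optional[bytes]:
    """Return the message if its tag is valid, otherwise None."""
    if len(packet) < TAG_LEN:
        return None
    message, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return message if hmac.compare_digest(tag, expected) else None


key = b"hypothetical-shared-key"
packet = protect(key, b"EMERGENCY_BRAKE")
print(verify(key, packet))                     # b'EMERGENCY_BRAKE'
tampered = b"X" + packet[1:]
print(verify(key, tampered))                   # None
print(len(packet) - len(b"EMERGENCY_BRAKE"))   # 32
```

A shared-key scheme like this does not scale to open vehicular networks, where any vehicle may need to authenticate any other; this is one reason standardized schemes use certificate-based signatures, which add further per-message overhead.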

Regarding the studies and achievements related to the V–E interaction, the shortcomings that persist are the inability to operate bidirectionally, the lack of holistic integration of detection, communication, and processing technologies, and the lack of joint operation with the driver subsystem that would allow these redundant pathways for obtaining information from the environment to be exploited. In addition, there are the challenges associated with the performance of sensing technologies (e.g., resolution and range) and communication technologies (e.g., reliability and delay), which undoubtedly limit the effectiveness of the C-ADAS proposed to date.