Time-of-Flight Imaging in Fog Using Polarization Phasor Imaging

Abstract

1. Introduction

- First, we introduce optical polarimetric defogging to ToF phasor imaging, extending the range of applications of polarization defogging.

- Second, we define the degree of polarization phasor to describe the scattering effect in ToF imaging.

- Finally, we establish a polarization phasor imaging model that recovers amplitude and depth images in foggy scenes by estimating the scattering component.
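The idea behind these contributions can be illustrated with a minimal sketch. It is not the authors' model: it only combines the standard continuous-wave ToF phasor (amplitude times exp(j·phase), with phase = 4πfd/c) with a classical polarization-difference estimate of the scattering component. The function names, the 20 MHz modulation frequency, the fully depolarized target, and the known, spatially constant degree of polarization `scatter_dop` are all assumptions made for illustration.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def depth_to_phasor(amplitude, depth_m, mod_freq_hz):
    """Phasor of a CW-ToF return: amplitude * exp(j * phase), phase = 4*pi*f*d/c."""
    phase = 4.0 * np.pi * mod_freq_hz * depth_m / C
    return amplitude * np.exp(1j * phase)


def phasor_to_depth(phasor, mod_freq_hz):
    """Invert the phase-to-depth relation (valid within the unambiguous range)."""
    phase = np.mod(np.angle(phasor), 2.0 * np.pi)
    return C * phase / (4.0 * np.pi * mod_freq_hz)


def descatter(p_co, p_cross, scatter_dop):
    """Estimate the target phasor from co- and cross-polarized phasor images.

    Assumption (hypothetical, for illustration): the target return is fully
    depolarized, so it contributes equally to both channels, and the
    scattering component has a known degree of polarization `scatter_dop`.
    """
    scatter = (p_co - p_cross) / scatter_dop  # estimated scattering phasor
    target = (p_co + p_cross) - scatter       # total phasor minus scattering
    return target, scatter


# Synthetic single-pixel example with made-up values.
freq = 20e6                                   # assumed modulation frequency
target_true = depth_to_phasor(1.0, 2.0, freq)
scatter_true = depth_to_phasor(0.8, 0.5, freq)
dop = 0.6
p_co = 0.5 * target_true + 0.5 * (1 + dop) * scatter_true
p_cross = 0.5 * target_true + 0.5 * (1 - dop) * scatter_true

target_est, _ = descatter(p_co, p_cross, dop)
print("depth from fogged phasor:", phasor_to_depth(p_co + p_cross, freq))
print("depth after descattering:", phasor_to_depth(target_est, freq))
```

In this toy setting the scattering phasor pulls the measured phase toward the shorter fog path, so the fogged depth is underestimated, and subtracting the estimated scattering phasor restores the target depth. A partially polarized target would violate the assumption and bias the estimate.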

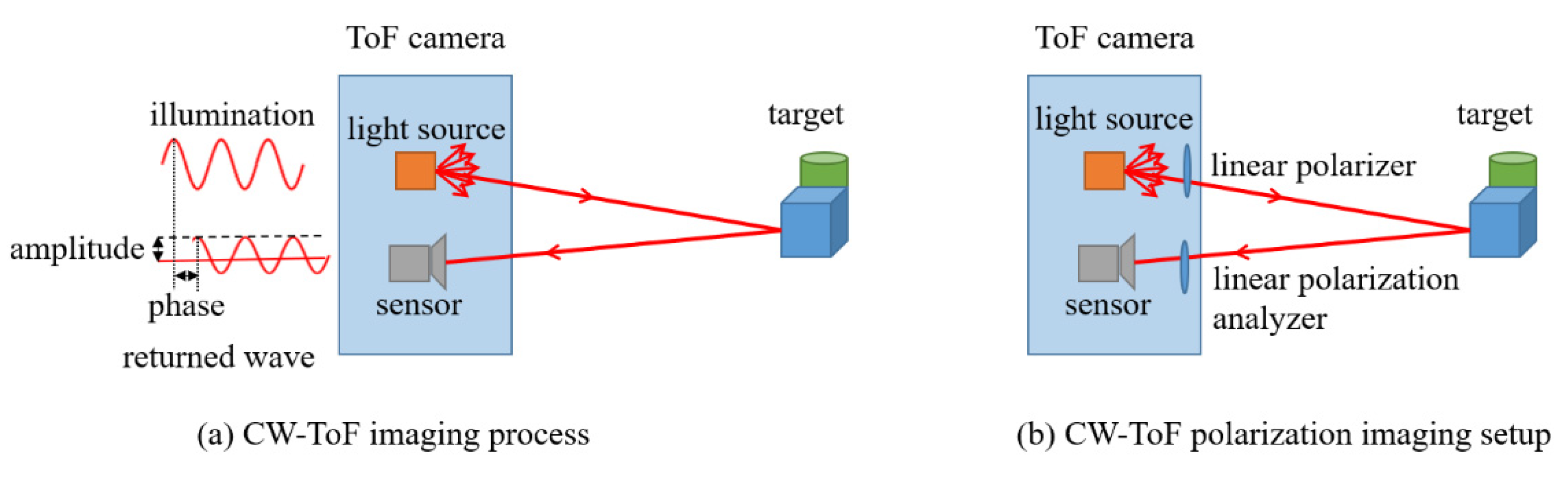

2. The Polarization Phasor Imaging Method

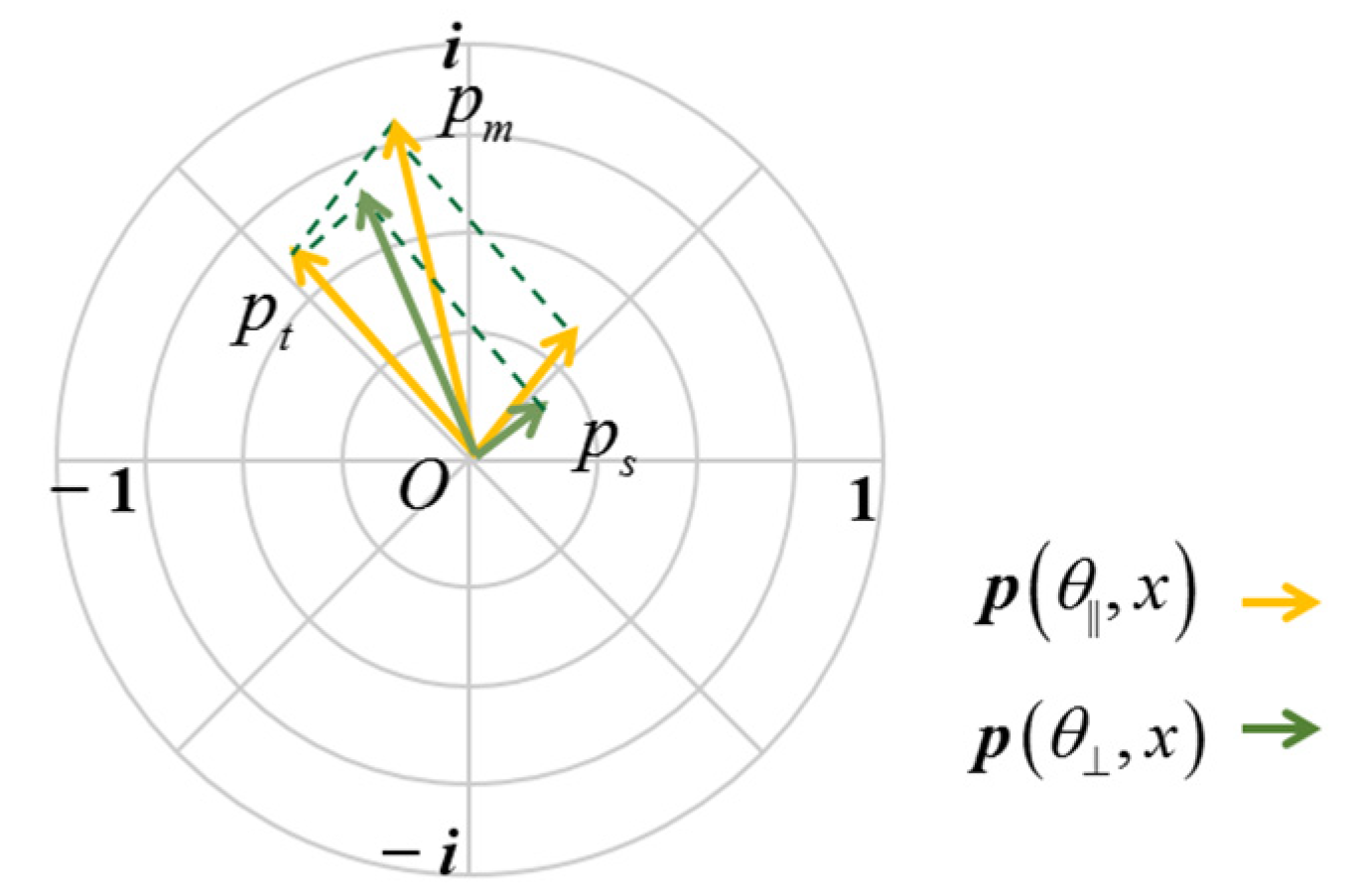

2.1. Polarization Phasor Representation

2.2. Polarization Phasor Imaging Model

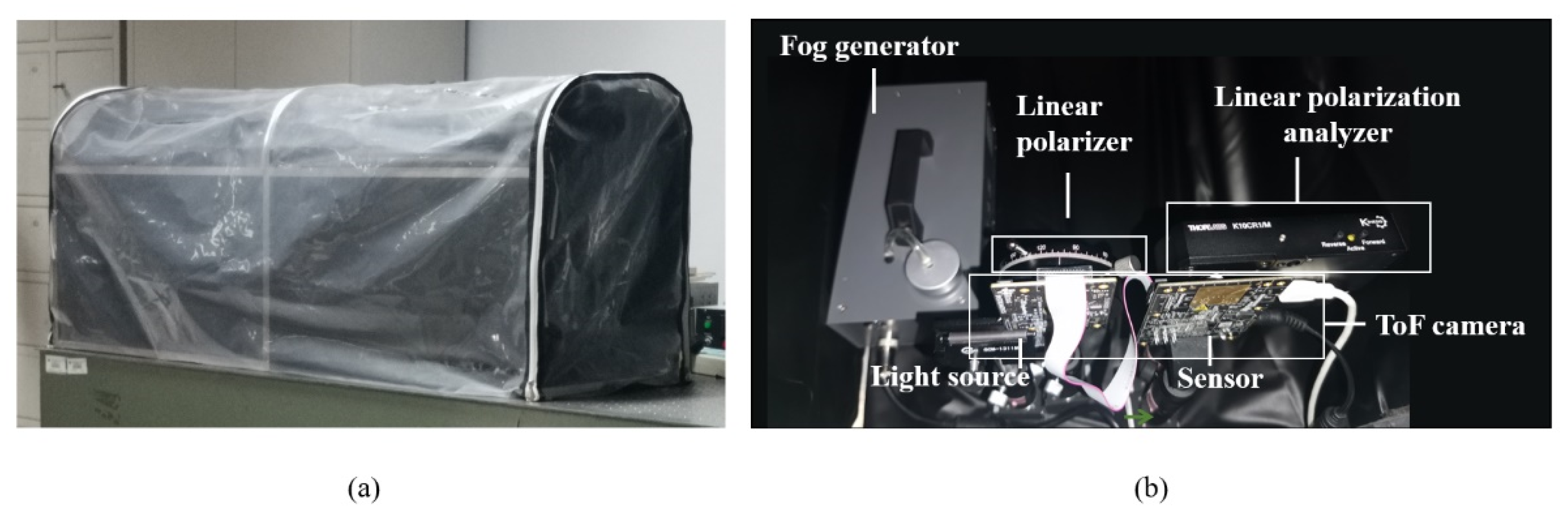

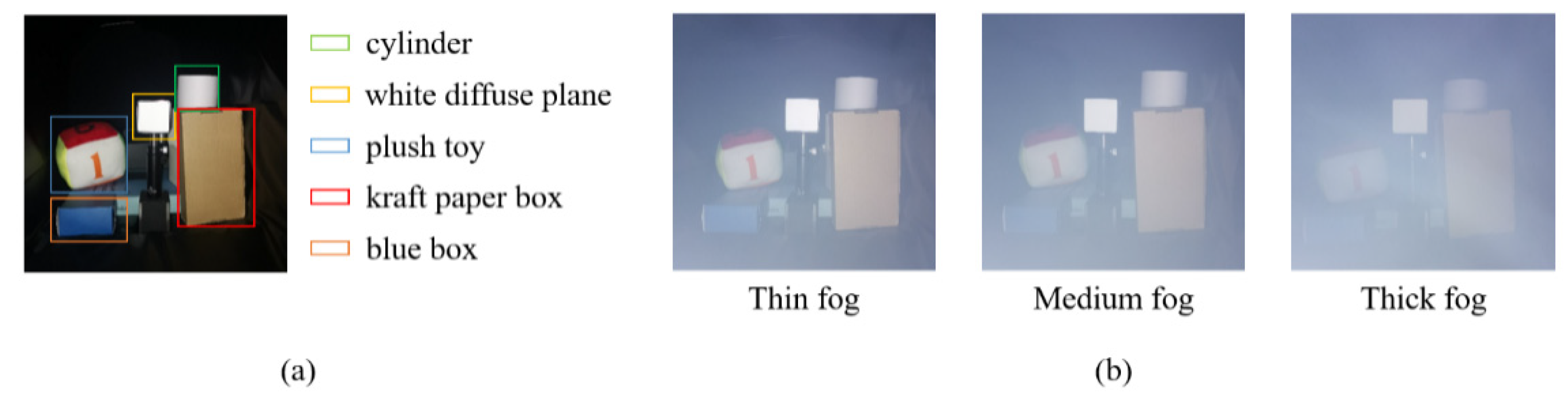

3. Experiments and Results

4. Discussion

4.1. The Depolarization Degree of Targets

4.2. The Attenuation Factor of the Amplitude

4.3. The Homogeneity of Scattering Media

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Thickness of Fog | Amplitude | PSNR (dB) | SSIM |
|---|---|---|---|
| Thin | | 59.48 | 0.338 |
| Thin | | 57.92 | 0.262 |
| Thin | Ours | 63.75 | 0.684 |
| Medium | | 59.47 | 0.328 |
| Medium | | 57.87 | 0.249 |
| Medium | Ours | 61.40 | 0.583 |
| Thick | | 59.21 | 0.294 |
| Thick | | 57.84 | 0.239 |
| Thick | Ours | 60.28 | 0.504 |
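For readers who want to reproduce a PSNR figure of the kind listed in the amplitude table, the standard definition can be sketched as follows. The source does not state which reference image or peak value was used, so the fog-free reference and the `peak` parameter below are assumptions, and the function name is illustrative.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


# Toy example: a constant error of 0.1 on an image with peak value 1.0.
reference = np.zeros((4, 4))
estimate = np.full((4, 4), 0.1)
print(psnr(reference, estimate))
```

Because PSNR depends on the chosen peak value, numbers computed with a different normalization are not directly comparable with those in the table.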

| Thickness of Fog | Depth | Cylinder | White Diffuse Plane | Plush Toy | Kraft Paper Box | Blue Box |
|---|---|---|---|---|---|---|
| Thin | | 0.28/3.03 | 0.18/1.74 | 0.43/6.35 | 0.21/2.80 | 0.34/3.06 |
| Thin | | 0.11/1.27 | 0.05/0.45 | 0.22/3.23 | 0.09/1.21 | 0.21/2.30 |
| Thin | Ours | 0.02/0.18 | 0.01/0.14 | 0.03/0.57 | 0.03/0.38 | 0.04/0.49 |
| Medium | | 0.28/2.52 | 0.19/1.50 | 0.44/6.58 | 0.23/3.75 | 0.35/5.32 |
| Medium | | 0.14/1.30 | 0.07/0.55 | 0.29/4.55 | 0.12/2.05 | 0.27/3.29 |
| Medium | Ours | 0.02/0.20 | 0.02/0.17 | 0.04/0.60 | 0.03/0.53 | 0.05/0.66 |
| Thick | | 0.37/3.29 | 0.28/2.80 | 0.52/6.94 | 0.30/4.84 | 0.40/5.38 |
| Thick | | 0.19/1.68 | 0.10/1.04 | 0.35/4.73 | 0.16/2.66 | 0.30/4.07 |
| Thick | Ours | 0.03/0.23 | 0.03/0.28 | 0.06/0.67 | 0.06/0.98 | 0.05/0.70 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Wang, X.; Zhao, Y.; Fang, Y. Time-of-Flight Imaging in Fog Using Polarization Phasor Imaging. Sensors 2022, 22, 3159. https://doi.org/10.3390/s22093159