Figure 1.

Depth estimation results with and without the active pattern. The active pattern increases the depth estimation accuracy by providing additional information for calculating corresponding points in textureless regions. (a) Non-active image. (b) Active image. (c) Non-active depth result. (d) Active depth result.

Figure 2.

Sample sets of left images with a synthetically projected pattern. The last image is the ground-truth (GT) disparity map of the first image.

Figure 3.

Left synthetic images according to our experimental parameters affecting the attributes of IR images. The figures show changes in the pattern intensity, pattern contrast, number of pattern dots, and global gain from top to bottom.

Figure 4.

The figures show the filtered images used in the combinations for the experiment. From left to right, the figures show images with no filter, the mean filter, the LoG filter, the BilSub filter, and the rank filter applied. For visualization, the contrast of all images has been enhanced through histogram equalization.
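As a rough illustration of two of the image filters named above, the following minimal sketch assumes the common definitions: the mean filter subtracts the local window mean from each pixel, and the rank filter replaces each pixel with the number of darker neighbours in its window. The function names, the window radius, and the edge padding are hypothetical choices for illustration and are not taken from the experiments.

```python
import numpy as np

def _windows(img, radius):
    """Stack the (2r+1) x (2r+1) neighbourhood of every pixel along a last axis (edge-padded)."""
    k = 2 * radius + 1
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    h, w = img.shape
    shifts = [padded[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)]
    return np.stack(shifts, axis=-1)

def mean_filter(img, radius=1):
    """Subtract the local window mean from each pixel (assumed definition)."""
    return img.astype(np.float64) - _windows(img, radius).mean(axis=-1)

def rank_filter(img, radius=1):
    """Count, for each pixel, the window neighbours that are strictly darker than it."""
    centre = img.astype(np.float64)[..., None]
    return (_windows(img, radius) < centre).sum(axis=-1)

if __name__ == "__main__":
    img = np.arange(1, 10).reshape(3, 3)
    print(mean_filter(img))
    print(rank_filter(img))
```

Under these assumed definitions, the rank filter depends only on the intensity ordering within the window, so it is unchanged by a positive gain or a constant offset, whereas the mean filter only removes a constant offset.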

Figure 5.

Mean errors of the combinations of the cost and image filters, according to each stereo matching method over the synthetic active dataset. The attributes of the active IR images were set to default values. Each color represents a different image filter across the methods. (a) Window-based. (b) SGM. (c) GC.

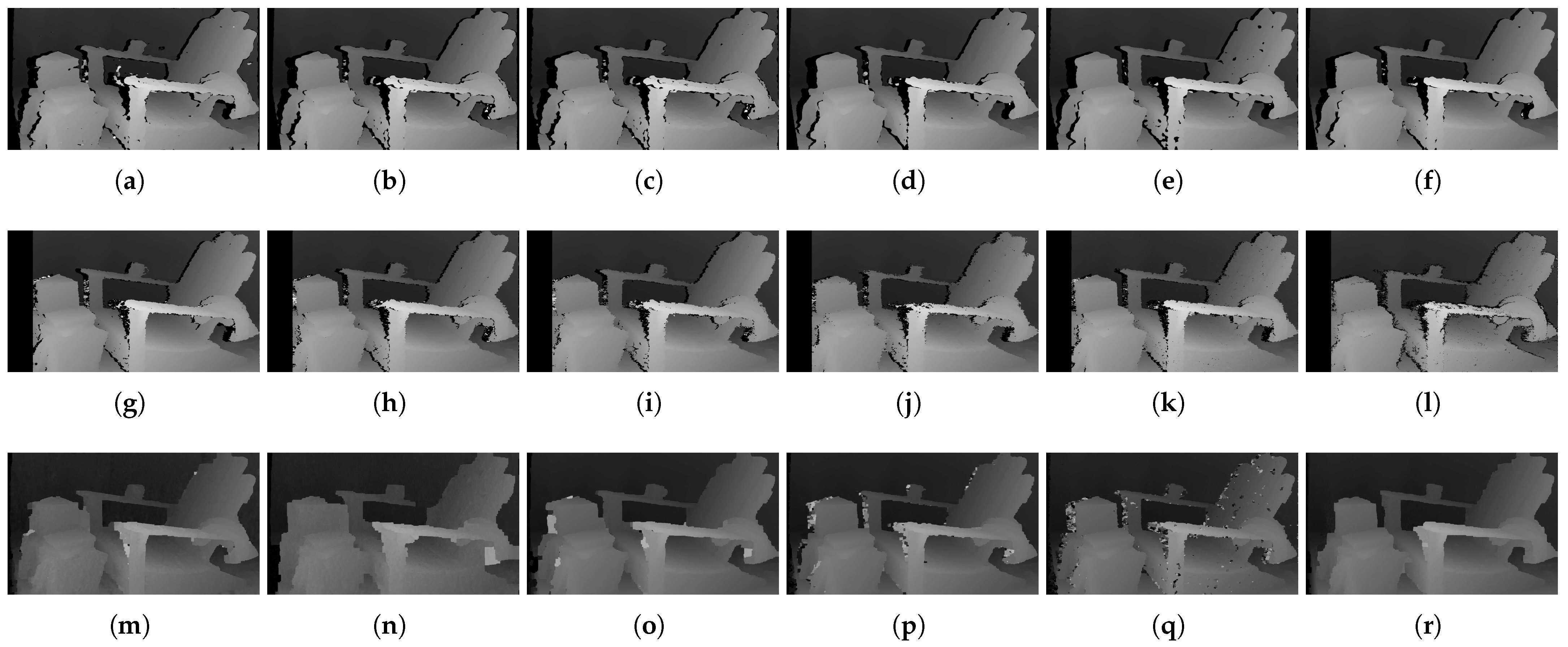

Figure 6.

Estimated disparity images of the combinations of the cost and the image filter, according to each stereo matching method over the synthetic active dataset. All attributes of the active IR images were set to default values. In SGM, the columns equal to the max disparity value are not used in the left image because the corresponding pixels in the right image cannot be used for comparison. Therefore, missing pixels appear at the left edge of the image [33]. (a) Window, SAD. (b) Window, Mean/SAD. (c) Window, LoG/SAD. (d) Window, BilSub/SAD. (e) Window, Rank/SAD. (f) Window, Census. (g) SGM, AD. (h) SGM, Mean/AD. (i) SGM, LoG/AD. (j) SGM, BilSub/AD. (k) SGM, Rank/AD. (l) SGM, Census. (m) GC, AD. (n) GC, Mean/AD. (o) GC, LoG/AD. (p) GC, BilSub/AD. (q) GC, Rank/AD. (r) GC, Census.
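The missing left-edge columns in the SGM results can be illustrated with a small sketch. It assumes a rectified pair in which a left pixel at column x is compared with the right pixel at column x - d, and that disparities from 0 up to and including the maximum disparity are searched; the function name and this inclusive range are assumptions made for illustration only.

```python
import numpy as np

def valid_left_columns(width, max_disparity):
    """Mask of left-image columns whose whole disparity search range lies inside the right image."""
    x = np.arange(width)
    # Farthest candidate in the right image is column x - max_disparity (assumed inclusive range);
    # it exists only when that index is non-negative.
    return x - max_disparity >= 0

if __name__ == "__main__":
    mask = valid_left_columns(width=10, max_disparity=3)
    print(mask.astype(int))
```

With this convention, exactly `max_disparity` columns at the left edge have no complete set of candidates, which matches the band of missing pixels described in the caption.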

Figure 7.

Errors of combinations of the cost and image filter using the window-based stereo method, according to the variation of the pattern intensity parameter. (a) Non-filter. (b) Mean. (c) LoG. (d) BilSub. (e) Rank. (f) Best of Window.

Figure 8.

Errors of combinations of cost and image filter using the SGM and GC stereo methods, according to the variation of the pattern intensity parameter. (a) SGM/AD. (b) GC/AD. (c) GC/BT.

Figure 9.

Estimated disparity images of the combinations of cost and image filter using the window-based stereo method, according to the low and high pattern intensity parameters. The images in the top row show disparities at the low intensity parameter, and the images in the bottom row show those at the high intensity parameter, respectively. (a) Window, SAD. (b) Window, Mean/SAD. (c) Window, LoG/SAD. (d) Window, BilSub/SAD. (e) Window, Rank/SAD. (f) Window, Census. (g) Window, SAD. (h) Window, Mean/SAD. (i) Window, LoG/SAD. (j) Window, BilSub/SAD. (k) Window, Rank/SAD. (l) Window, Census.

Figure 10.

Estimated disparity images of the combinations of cost and image filter using the SGM stereo method according to the low and high pattern intensity parameters. The images in the top row show disparities at the low intensity parameter, and the images in the bottom row show those at the high intensity parameter, respectively. (a) SGM, AD. (b) SGM, Mean/AD. (c) SGM, LoG/AD. (d) SGM, BilSub/AD. (e) SGM, Rank/AD. (f) SGM, Census. (g) SGM, AD. (h) SGM, Mean/AD. (i) SGM, LoG/AD. (j) SGM, BilSub/AD. (k) SGM, Rank/AD. (l) SGM, Census.

Figure 11.

Estimated disparity images of the combinations of cost and image filter using the GC stereo method, according to the low and high pattern intensity parameters. The images in the top row show disparities at the low intensity parameter, and the images in the bottom row show those at the high intensity parameter, respectively. (a) GC, AD. (b) GC, Mean/AD. (c) GC, LoG/AD. (d) GC, BilSub/AD. (e) GC, Rank/AD. (f) GC, Census. (g) GC, AD. (h) GC, Mean/AD. (i) GC, LoG/AD. (j) GC, BilSub/AD. (k) GC, Rank/AD. (l) GC, Census.

Figure 12.

Errors of combinations of cost and image filter using the window-based stereo method, according to the variation of the pattern contrast parameter. (a) Non-filter. (b) Mean. (c) LoG. (d) BilSub. (e) Rank. (f) Best of Window.

Figure 13.

Errors of combinations of cost and image filter using the SGM and GC stereo methods, according to the variation of the pattern contrast parameter. (a) SGM/AD. (b) GC/AD. (c) GC/BT.

Figure 14.

Estimated disparity images of the combinations of cost and image filter using the window-based stereo method, according to the low and high pattern contrast parameters. The images in the top row show disparities at low contrast, and the images in the bottom row show those at high contrast, respectively. (a) Window, SAD. (b) Window, Mean/SAD. (c) Window, LoG/SAD. (d) Window, BilSub/SAD. (e) Window, Rank/SAD. (f) Window, Census. (g) Window, SAD. (h) Window, Mean/SAD. (i) Window, LoG/SAD. (j) Window, BilSub/SAD. (k) Window, Rank/SAD. (l) Window, Census.

Figure 15.

Estimated disparity images of the combinations of cost and image filter using the SGM stereo method, according to the low and high pattern contrast parameters. The images in the top row show disparities at low contrast, and the images in the bottom row show those at high contrast, respectively. (a) SGM, AD. (b) SGM, Mean/AD. (c) SGM, LoG/AD. (d) SGM, BilSub/AD. (e) SGM, Rank/AD. (f) SGM, Census. (g) SGM, AD. (h) SGM, Mean/AD. (i) SGM, LoG/AD. (j) SGM, BilSub/AD. (k) SGM, Rank/AD. (l) SGM, Census.

Figure 16.

Estimated disparity images of the combinations of cost and image filter using the GC stereo method, according to the low and high pattern contrast parameters. The images in the top row show disparities at low contrast, and the images in the bottom row show those at high contrast, respectively. (a) GC, AD. (b) GC, Mean/AD. (c) GC, LoG/AD. (d) GC, BilSub/AD. (e) GC, Rank/AD. (f) GC, Census. (g) GC, AD. (h) GC, Mean/AD. (i) GC, LoG/AD. (j) GC, BilSub/AD. (k) GC, Rank/AD. (l) GC, Census.

Figure 17.

Errors of combinations of cost and image filter using the window-based stereo method, according to variations of the number of pattern dots parameter. (a) Non-filter. (b) Mean. (c) LoG. (d) BilSub. (e) Rank. (f) Best of Window.

Figure 18.

Errors of combinations of cost and image filter using the SGM and GC stereo methods, according to variations of the number of pattern dots parameter. (a) SGM/AD. (b) GC/AD. (c) GC/BT.

Figure 19.

Estimated disparity images of the combinations of cost and image filter using the window-based stereo method, according to low and high parameters for the number of pattern dots. The images in the top row show disparities with the small number of pattern dots, and the images in the bottom row show those with the large number of pattern dots, respectively. (a) Window, SAD. (b) Window, Mean/SAD. (c) Window, LoG/SAD. (d) Window, BilSub/SAD. (e) Window, Rank/SAD. (f) Window, Census. (g) Window, SAD. (h) Window, Mean/SAD. (i) Window, LoG/SAD. (j) Window, BilSub/SAD. (k) Window, Rank/SAD. (l) Window, Census.

Figure 20.

Estimated disparity images of the combinations of cost and image filter using the SGM stereo method, according to low and high parameters for the number of pattern dots. The images in the top row show disparities with the small number of pattern dots, and the images in the bottom row show those with the large number of pattern dots, respectively. (a) SGM, AD. (b) SGM, Mean/AD. (c) SGM, LoG/AD. (d) SGM, BilSub/AD. (e) SGM, Rank/AD. (f) SGM, Census. (g) SGM, AD. (h) SGM, Mean/AD. (i) SGM, LoG/AD. (j) SGM, BilSub/AD. (k) SGM, Rank/AD. (l) SGM, Census.

Figure 21.

Estimated disparity images of the combinations of cost and image filter using the GC stereo method, according to low and high parameters for the number of pattern dots. The images in the top row show disparities with the small number of pattern dots, and the images in the bottom row show those with the large number of pattern dots, respectively. (a) GC, AD. (b) GC, Mean/AD. (c) GC, LoG/AD. (d) GC, BilSub/AD. (e) GC, Rank/AD. (f) GC, Census. (g) GC, AD. (h) GC, Mean/AD. (i) GC, LoG/AD. (j) GC, BilSub/AD. (k) GC, Rank/AD. (l) GC, Census.

Figure 22.

Errors of combinations of cost and image filter using the window-based stereo method, according to the variation of the image’s gain parameter. (a) Non-filter. (b) Mean. (c) LoG. (d) BilSub. (e) Rank.

Figure 23.

Errors of combinations of cost and image filter using the SGM and GC stereo methods, according to the variation of the image’s gain parameter. (a) SGM/AD. (b) GC/AD. (c) GC/BT.

Figure 24.

Estimated disparity images of the combinations of cost and image filter using the window-based stereo method, according to low and high gain parameters. The images in the top row show disparities at the low gain parameter, and the images in the bottom row show those at the high gain parameter, respectively. (a) Window, SAD. (b) Window, Mean/SAD. (c) Window, LoG/SAD. (d) Window, BilSub/SAD. (e) Window, Rank/SAD. (f) Window, Census. (g) Window, SAD. (h) Window, Mean/SAD. (i) Window, LoG/SAD. (j) Window, BilSub/SAD. (k) Window, Rank/SAD. (l) Window, Census.

Figure 25.

Estimated disparity images of the combinations of cost and image filter using the SGM stereo method, according to low and high gain parameters. The images in the top row show disparities at the low gain parameter, and the images in the bottom row show those at the high gain parameter, respectively. (a) SGM, AD. (b) SGM, Mean/AD. (c) SGM, LoG/AD. (d) SGM, BilSub/AD. (e) SGM, Rank/AD. (f) SGM, Census. (g) SGM, AD. (h) SGM, Mean/AD. (i) SGM, LoG/AD. (j) SGM, BilSub/AD. (k) SGM, Rank/AD. (l) SGM, Census.

Figure 26.

Estimated disparity images of the combinations of cost and image filter using the GC stereo method, according to low and high gain parameters. The images in the top row show disparities at the low gain parameter, and the images in the bottom row show those at the high gain parameter, respectively. (a) GC, AD. (b) GC, Mean/AD. (c) GC, LoG/AD. (d) GC, BilSub/AD. (e) GC, Rank/AD. (f) GC, Census. (g) GC, AD. (h) GC, Mean/AD. (i) GC, LoG/AD. (j) GC, BilSub/AD. (k) GC, Rank/AD. (l) GC, Census.

Figure 27.

Left real active images, according to environments that match four attributes of active IR images. The figures show changes in the pattern intensity, pattern contrast, number of pattern dots, and global gain of IR images from top to bottom.

Figure 28.

Estimated real disparity images of the combinations of cost and image filter using each stereo matching method. All attributes of active IR images were set to default values.

Figure 29.

Estimated real disparity images of the combinations of cost and image filter using each stereo matching method, according to the pattern intensity parameter. For each stereo matching method, the upper row shows the low parameter and the lower row shows the high parameter.

Figure 30.

Estimated real disparity images of the combinations of cost and image filter using each stereo matching method, according to the pattern contrast parameter. For each stereo matching method, the upper row shows the low parameter and the lower row shows the high parameter.

Figure 31.

Estimated real disparity images of the combinations of cost and image filter using each stereo matching method, according to the parameter for the number of pattern dots. For each stereo matching method, the upper row shows the low parameter and the lower row shows the high parameter.

Figure 32.

Estimated real disparity images of the combinations of cost and image filter using each stereo matching method, according to the gain parameter. For each stereo matching method, the upper row shows the low parameter and the lower row shows the high parameter.

Table 1.

Specifications of commercial RGB-D cameras.

| D400 Series Depth Cameras | D415 | D435 | D455 |
|---|---|---|---|
| Depth module | D415 | D430 | D450 |
| Baseline | 55 mm | 50 mm | 95 mm |
| Left/Right Imagers Type | Standard | Wide | Wide |
| IR Projector | Standard | Wide | Wide |
| IR Projector FOV (°) | H:67/V:41/D:75 | H:90/V:63/D:99 | H:90/V:63/D:99 |

Table 2.

Runtime (s) of stereo matching techniques using a GPU for the synthetic Adirondack images.

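| Method | Cost | Time (s) |
|---|---|---|
| Window-Based | SAD | 0.0214 |
| Window-Based | BT | 0.0237 |
| Window-Based | ZSAD | 0.0317 |
| Window-Based | NCC | 0.0261 |
| Window-Based | ZNCC | 0.0329 |
| Window-Based | Census | 0.0316 |
| SGM | AD | 0.453 |
| SGM | BT | 0.465 |
| SGM | Census | 0.505 |
| GC | AD | 10.321 |
| GC | BT | 10.326 |
| GC | Census | 10.438 |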

Table 3.

RMS errors of real active images (%).

| Type | Laser Power | | | | | Illumination Ratio | | | | | The Number of Projectors | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Attribute Value | 30 | 90 | 150 | 210 | 270 | 0.1 | 0.3 | 0.5 | 0.7 | 0.9 | 1 | 3 | 5 | 7 | 9 |
| (W) Non/ZNCC | 2.81 | 2.23 | 1.96 | 1.61 | 1.32 | 1.92 | 1.67 | 1.32 | 1.25 | 1.49 | 3.21 | 2.84 | 2.31 | 1.83 | 1.56 |
| (W) Mean/ZNCC | 3.27 | 2.98 | 2.42 | 1.96 | 1.41 | 2.14 | 1.63 | 1.58 | 1.71 | 1.83 | 2.98 | 2.66 | 2.20 | 1.84 | 1.45 |
| (W) LoG/ZNCC | 3.11 | 2.84 | 2.34 | 1.75 | 1.29 | 2.88 | 2.50 | 1.83 | 1.94 | 2.01 | 3.29 | 2.91 | 2.44 | 1.98 | 1.62 |
| (W) BilSub/ZNCC | 2.74 | 2.42 | 1.98 | 1.68 | 1.35 | 1.61 | 1.47 | 1.53 | 1.57 | 1.49 | 2.59 | 2.04 | 1.79 | 1.55 | 1.44 |
| (W) Rank/SAD | 2.94 | 2.71 | 2.12 | 1.56 | 1.33 | 2.09 | 1.84 | 1.56 | 1.61 | 1.66 | 3.75 | 2.91 | 2.28 | 1.87 | 1.67 |
| (W) Census | 1.71 | 1.58 | 1.41 | 1.29 | 1.31 | 1.99 | 1.71 | 1.57 | 1.45 | 1.61 | 2.71 | 2.47 | 1.89 | 1.58 | 1.41 |
| (SGM) Census | 0.96 | 0.94 | 1.08 | 1.03 | 0.99 | 1.06 | 0.95 | 1.10 | 0.99 | 1.11 | 1.07 | 1.03 | 0.98 | 0.95 | 1.01 |
| (GC) Census | 1.08 | 1.02 | 0.98 | 0.92 | 0.94 | 1.10 | 1.05 | 0.97 | 1.01 | 0.99 | 1.13 | 1.07 | 1.02 | 0.96 | 0.94 |