1. Introduction

Localization plays an important role in many applications such as robotics, unmanned driving, and unmanned aerial vehicles (UAVs). The fusion of information from multiple sensors for localization is the current mainstream method. The sensor fusion approaches have been widely studied for decades and can be divided into two streams: loosely coupled and tightly coupled approaches. Loosely coupled approaches [

1,

2] process the measurements of each sensor individually and then fuse all the results to obtain the final results. Tightly coupled approaches [

3,

4] combine measurements from all sensors and then use an estimator to process these measurements to obtain the final results. In general, the accuracy of tightly coupled approaches will be higher than that of loosely coupled approaches.

Visual odometry (VO) has received more attention due to the low price of cameras. Davison et al. [

5] introduced MonoSLAM, which is the first monocular visual SLAM system that extracts the Shi-Tomasi corner [

6]. MonoSLAM actively searches for matching point features in projected ellipses. However, because of the high computational complexity of MonoSLAM, it can only handle some small scenes. Klein et al. [

7] proposed PTAM, which is the first optimization-based monocular visual SLAM system that executes feature tracking and mapping as two independent tasks in parallel in two threads.

Because PTAM is designed for small-scale AR scenes, it also has some disadvantages. For example, it can only handle small-scale scenes, and the tracked features are easy to lose. A milestone work after PTAM is ORB-SLAM [

8] and ORB-SLAM2 [

9]. ORB-SLAM improves PTAM by detecting and tracking ORB features and adding loop closure. These improvements greatly improve the positioning precision of VO. However, ORB-SLAM can only build sparse point cloud maps and cannot track keyframes during pure rotation, which also limits its application.

In addition, when encountering environments with repeated textures, point features cannot express environmental structure information well. On the contrary, line features contain more information related to the environmental structure and can overcome the interference caused by repeated textures. Therefore, point-line feature fusion can ensure that the VO system is more robust. He Yijia et al. [

10] proposed PL-VIO, which is a VIO system based on point-line features. It uses the LSD line segment extraction algorithm [

11] from OpenCV [

12] to detect line features. However, LSD becomes the bottleneck for real-time performance due to its high computational cost [

13], which causes the performance of PL-VIO to be seriously affected. To improve the speed and positioning precision of PL-VIO, Fu Qiang et al. [

14] proposed PL-VINS, which is also a VIO framework based on point and line features. PL-VINS designs a modified LSD algorithm by studying hidden parameter tuning and length rejection strategy. The modified LSD runs at least three times faster than the LSD. PLF-VINS [

15] is another VIO system based on point-line features, which introduces two methods for fusing point and line features. The first method is to use the positional similarity of point-line features to search for the relationship between point and line features. The second method is to fuse 3D parallel lines. The residuals formed by the two methods are then added to the VIO system. The results of PLF-VINS show that its positioning precision is greatly improved compared to some classic SLAM systems, such as OKVIS [

4] and VINS-Mono [

16].

Line features can improve the positioning precision of the VO system. However, in weak textures or dark scenes, the system still cannot extract a sufficient number of point and line features, which can cause large positioning errors. To overcome the shortcoming, fusing IMU measurements with images is a very effective method. MSCKF [

17,

18] is a VIO system based on EKF, which adds camera poses to the states of the system. When cameras observe landmarks, constraints can be formed between camera poses. The system is then updated by an observation model derived from geometric constraints. Since the number of camera poses is much smaller than the number of landmarks, this greatly reduces the time complexity of the system. VINS-Mono [

16] is a tightly coupled, nonlinear optimization-based method that can obtain high-precision positioning results. Due to the existence of the loop closure and 4-DoF pose graph optimization, even if the system runs in large-scale scenes, it can still obtain accurate positioning results. In addition, to improve the performance of ORB-SLAM and eliminate the errors of ORB-SLAM, ORB-SLAM3 [

19] adds IMU measurements based on ORB-SLAM. ORB-SLAM3 is more robust and has higher positioning precision compared with ORB-SLAM.

The VIO system is more robust than the VO system. Nevertheless, the VIO system has four unobservable directions, namely

x,

y,

z, and

yaw, which lead to the accumulated drift of VIO. To eliminate the accumulated drift, an effective approach is to combine VIO with GNSS measurements. Lee et al. [

20] demonstrated that the GPS-aided VIO system is fully observable in the ENU frame. GVINS [

21] is a tightly coupled GNSS-VIO state estimator that combines VIO with GNSS pseudorange and Doppler frequency shift measurements, which achieves high positioning accuracy and is a state-of-the-art method. Li Xingxing et al. [

22] introduced a semi-tightly coupled framework based on GNSS Precise Point Positioning (PPP) and stereo VINS. The system can use S-VINS to return accurately predicted positions in GNSS-unfriendly areas. Liu Jinxu et al. [

23] also proposed a tightly coupled GNSS-VIO state estimator that fuses GNSS raw measurements with VIO. It drops all GNSS measurements in GNSS-degradation scenes, which limits its positioning precision.

However, there are many challenges in fusing the information from multiple sensors. First, the VIO system cannot extract enough point features in areas with repeated textures. Second, GNSS Single Point Positioning (SPP) uses pseudorange measurement; it can only achieve meter-level positioning accuracy. Therefore, if the pseudorange measurement and VIO are directly fused, the positioning accuracy is not greatly improved. Finally, since the GNSS receiver and IMU are fixed in different spatial positions, it is necessary to estimate the extrinsic parameter between them.

In response to the above problems, this paper proposes a new tightly coupled GNSS-VIO system. Our proposed system is drift-free and can provide global coordinates. The main contributions of the text are as follows:

To obtain environmental structure information and deal with environments with repeated textures, we extracted line features based on point features.

To combine the merits of the pseudorange and carrier phase measurements, we used the carrier phase smoothed pseudorange instead of the pseudorange measurement, which can make the GNSS-VIO system run in real-time and improve the positioning accuracy.

We demonstrate that the states represented in the ECEF frame are fully observable, and the tightly coupled GNSS-VIO state estimator is consistent.

The rest of this paper is organized as follows:

Section 2 introduces the implementation method of GNSS-VIO in detail, including line features, GNSS raw measurements processing, and observability analysis.

Section 3 summarizes the detailed structure of our system.

Section 4 conducts experiments on public datasets. Finally, conclusions are given in

Section 5.

2. Methods

2.1. Frames and Notations

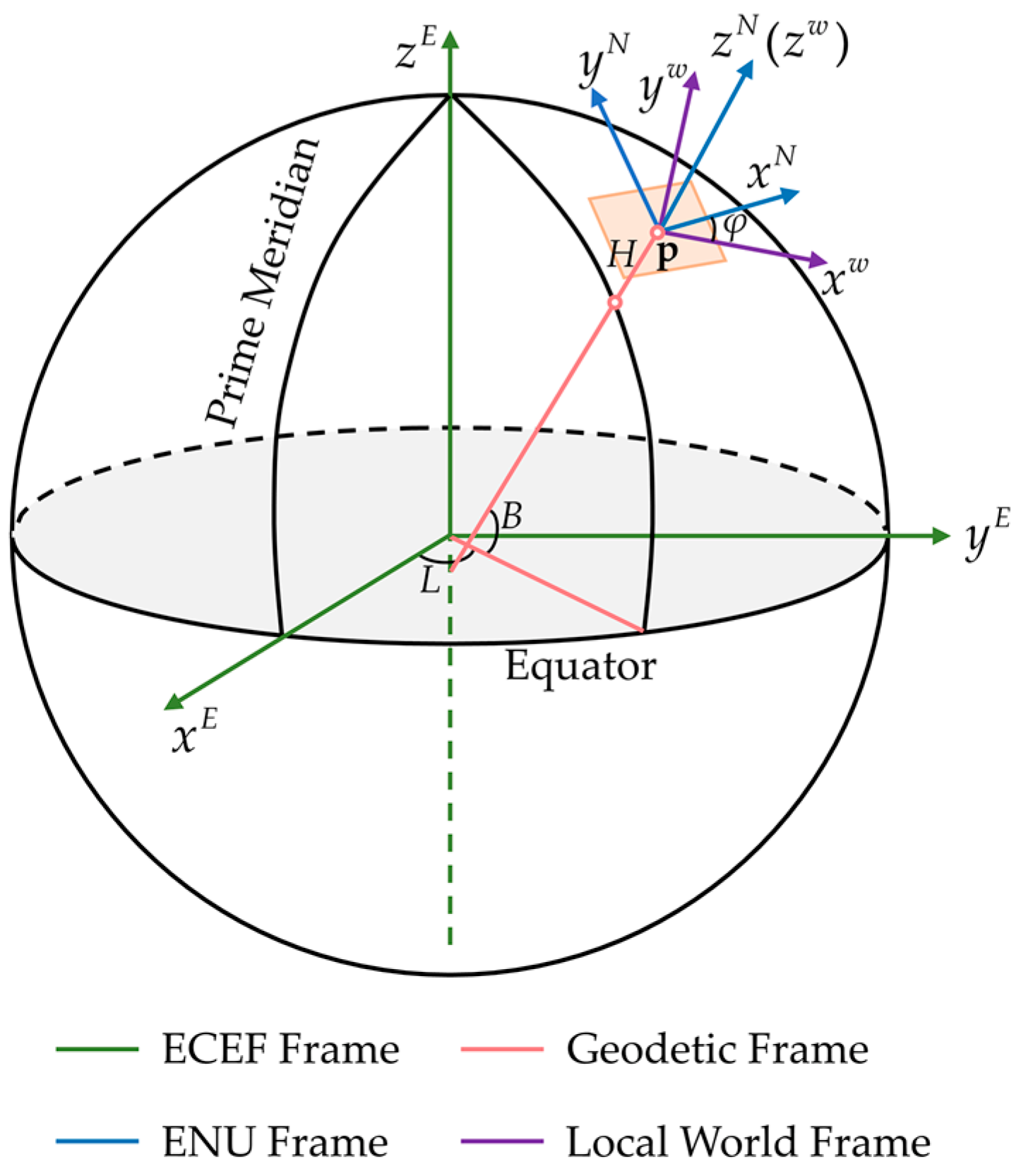

The frames involved in our system consist of:

Sensor Frame: In our system, , , and denote the camera frame, the body frame, and the GNSS receiver frame respectively.

Local World Frame: The origin of the local world frame

is the position where the VIO system starts running, and the

z-axis is gravity aligned as illustrated in

Figure 1.

ENU Frame: The ENU frame is also called the East–North–Up frame. The

x-,

y-, and

z-axis of the ENU frame point to the east, north, and up directions, respectively. In our system, the ENU frame is at the same origin as the local world, and the

z-axis of the two frames is aligned (

Figure 1). We use

to represent the ENU frame.

Geodetic Frame: As shown in

Figure 1, geodetic coordinate

of a point

p is represented by geodetic longitude

L, geodetic latitude

B, and geodetic height

H. The geodetic longitude

L of

p is the angle between the reference meridian and another meridian that passes through

p. The geodetic latitude

B of

p is the angle between the ellipsoid normal vector that passes through

p and the projection of the ellipsoid normal vector into the equatorial plane. The geodetic height

H of

p is the minimum distance between

p and the reference ellipsoid.

ECEF Frame: The Earth-Centered, Earth-Fixed (ECEF) frame

is fixed to Earth. As depicted in

Figure 1, the origin of the ECEF frame is at the center of mass of Earth. The

x-axis points to the intersection of the Equator and the Prime Meridian. The

z-axis is perpendicular to the equatorial plane in the direction of the North Pole. The

y-axis is chosen to form a right-handed coordinate system with the

x- and

z-axis.

In this paper, and represent the rotation and translation of the body frame with respect to the local world frame, and is the corresponding quaternion form of the rotation . , , and denote the velocity of the origin of the body frame measured in the local world frame, accelerometer bias, and gyroscope bias, respectively. and stand for the extrinsic parameters between the camera and IMU. and represent the receiver clock error and receiver clock drifting rate, respectively. is the yaw between the local world frame and the ENU frame. is the rotation matrix of the local world frame with respect to the ENU frame. denotes the translation of the ENU frame with respect to the ECEF frame. is the extrinsic translation parameter between the GNSS receiver and IMU. represents the skew-symmetric matrix of a 3D vector.

2.2. Line Feature

2.2.1. Plücker Coordinates

In our system, we described a spatial line with the Plücker Coordinates. Given a spatial line

, its Plücker Coordinates are represented by

, where

is the normal vector of the plane determined by

, and the origin of

and

is the line direction vector. We can transform

in the local world frame to

in the camera frame by:

2.2.2. Line Feature Triangulation

Assuming that

is observed by camera

and camera

in the normalized image plane as

and

, the two line segments can be denoted by two endpoints as shown in

Figure 2. The two endpoints of

are

and

, and the two endpoints of

are

and

.

A 3D plane

can be modeled as:

where

is the normal vector of

. For a point

on the plane, we can obtain:

According to Equations (2) and (3), we can obtain the plane

and

. As shown in

Figure 2, the normal vector of

is

, and

can be computed by the optical center

as

. To obtain

, we need to transform the two endpoints

and

to the camera frame

. Therefore, the corresponding reprojected endpoints are

and

, where

and

can be obtained by visual-inertial alignment. Similarly, the normal vector of

is

, and

can be calculated by the translation

from the camera frame

to the camera frame

as

.

After

and

are computed, we can obtain the Plücker Coordinates of

according to the dual Plücker matrix

:

2.2.3. Orthonormal Representation

Since spatial lines only have 4-DoF, there is a problem of overparameterization using the Plücker Coordinates to represent spatial lines. In contrast, the orthonormal representation

is more suitable for nonlinear optimization. Let

and

denote 3D and 2D rotation matrix, respectively, then we have:

where

and

are the 3D rotation angles around the

x-,

y-, and

z-axis of the camera frame and the 2D rotation angle.

Therefore, we can define the orthonormal representation of a spatial line by a four-dimensional vector:

In addition, given an orthonormal representation

, the corresponding Plücker Coordinates can be obtained by:

where

,

, and

is the

ith column of

.

and

have a scale factor, but they denote the same spatial line.

2.2.4. Line Feature Reprojection Residual

The line feature reprojection residual is defined in terms of point-to-line distance. Given a spatial line

, it can be projected to the image plane by [

24]:

where

is the line projection matrix.

Finally, assume that

represents the

jth spatial line

, which is observed by the

ith camera frame

. Then the spatial line reprojection residual can be modeled as:

where

and

denote the two endpoints of the line segment projected on the normalized image plane.

is the point-to-line distance:

For the corresponding Jacobian matrix, we followed the routine of [

10].

2.3. GNSS Measurements

GNSS measurements include pseudorange, carrier phase, and Doppler frequency shift.

2.3.1. Pseudorange Measurement

The pseudorange is defined as the measured distance obtained by multiplying the travel time of the satellite signal by the speed of light. Due to the influence of satellite clock error, receiver clock error, and ionospheric and tropospheric delays, the pseudorange is prefixed with “pseudo” to distinguish it from the true distance from the satellite to the GNSS receiver. Generally, although the pseudorange measurement only has meter-level positioning precision (the positioning precision of P code is about 10 m, and the positioning precision of C/A code is 20 m to 30 m), it is real-time and has no ambiguity. Therefore, the pseudorange measurement is still very important for GNSS positioning technology. For a certain satellite

and a GNSS receiver

at time

k, the pseudorange

can be modeled as:

where

and

represent the position of satellite

and the GNSS receiver

at time

k in the ECEF frame, respectively.

is the speed of light.

,

, and

are the satellite clock error and tropospheric and ionospheric delays, which can be computed according to the satellite ephemeris.

denotes the multipath and random error of the pseudorange measurement, which is subject to the Zero-mean Gaussian distribution.

2.3.2. Carrier Phase Measurement

Although pseudorange positioning is an important method for GNSS, its error is too large for some applications that require high-precision positioning. In contrast, due to the short wavelength of the carrier phase (

and

), if the carrier phase measurement is used for positioning, it can achieve centimeter-level positioning accuracy. However, since the carrier phase is a periodic sinusoidal signal, and the GNSS receiver can only measure the part of less than one wavelength, there is the problem of the whole-cycle ambiguity, which makes the positioning process time-consuming. The carrier phase is defined as the phase difference between the phase transmitted by the satellite and the reference phase generated by the GNSS receiver. Similar to the pseudorange measurement, the carrier phase measurement is also related to the position of satellite and GNSS receiver. The carrier phase measurement can be modeled as:

where

is the carrier wavelength, and

is the whole-cycle ambiguity.

represents the multipath and random error of the carrier phase measurement, which is subject to the Zero-mean Gaussian distribution.

2.3.3. Doppler Frequency Shift Measurement

The Doppler effect reveals a phenomenon in the spread of waves, that is, the wavelength of the radiation emitted by objects changes accordingly due to the relative motion of the wave source and the observer. As the wave source moves toward the observer, the wavelength becomes shorter and the frequency becomes higher due to the compression of the wave. On the contrary, as the wave source moves away from the observer, the wavelength becomes longer and the frequency becomes lower. Similarly, the Doppler effect can also occur between the satellite and the GNSS receiver. When a satellite orbits Earth in its elliptical orbit, due to the relative motion between the satellite and the GNSS receiver, the frequency of the satellite signal received by the GNSS receiver changes accordingly. This frequency change is called Doppler frequency shift, which can be modeled as:

where

is the unit vector pointing along the line of sight from the GNSS receiver

to the satellite

.

and

denote the velocity of the

jth satellite and the receiver at time

k in the ECEF frame, respectively.

is the satellite clock drifting rate, which can be calculated according to the satellite ephemeris.

represents the multipath and random error of the Doppler frequency shift measurement, which is subject to the Zero-mean Gaussian distribution.

2.4. Carrier Phase Smoothed Pseudorange

As mentioned above, the pseudorange measurement proves fast and efficient, but can only achieve meter-level positioning precision. In contrast, the carrier phase measurement can achieve centimeter-level positioning precision but is required to solve the whole-cycle ambiguity, which is complex and time-consuming. Therefore, to combine the merits of the two measurements, we can use carrier phase smoothed pseudorange (short for smoothed pseudorange) to improve the positioning precision. In general, the positioning precision of the smoothed pseudorange measurement is several times higher than that of the pseudorange measurement. For a single-frequency receiver, when the whole-cycle ambiguity and ionospheric delay are nearly constant within a period of time, the pseudorange can be smoothed by using the carrier phase. The Hatch filter is the most widely used for carrier phase smoothed pseudorange, which assumes that the ionospheric delay is nearly constant among GNSS epochs and then averages the multiepoch whole-cycle ambiguity and ionospheric delay to improve the positioning accuracy of the pseudorange measurement. The carrier phase smoothed pseudorange based on the Hatch filter can be modeled as:

where

m is the smoothed interval of the Hatch filter, usually 20 to 200 epochs.

denotes the multipath and random error of the carrier phase smoothed pseudorange measurement, which is also subject to the Zero-mean Gaussian distribution. According to Equation (15), the variance of the smoothed pseudorange is related to the variance of the pseudorange and carrier phase measurements. Assuming that the variance at different times is independent, then the variance of the smoothed pseudorange can be modeled as:

2.5. Factor Graph Representation

The factor graph of our system is shown in

Figure 3, which shows the factors in a sliding window, including point feature factors, line feature factors, IMU factors, carrier phase smoothed pseudorange factors, and Doppler frequency shift factors. Visual observation consists of the point and line features detected by our system. In nonlinear optimization, the states are optimized according to the residuals of these factors. The states of our system include:

where

n is the sliding window size,

i is the number of point features, and

j is the number of line features.

is the inverse depth of the

ith point feature in the sliding window.

The IMU preintegration and point feature residuals can be obtained according to [

16], and the line feature residual can be obtained from Equation (10). In the following, we compute the smoothed pseudorange and Doppler frequency shift residuals in detail. The position of the receiver at time

k in the ECEF frame is:

where

is the position of the receiver in the local world frame, and it can be modeled as:

is the rotation matrix of the local world frame with respect to the ENU frame. Since the two frames are gravity-aligned, the 1-DoF

is only related to the yaw offset

and can be modeled as:

represents the rotation matrix of the ENU frame with respect to the ECEF frame, which is determined by the longitude

L and latitude

B of

. Given a position

in the ECEF frame, it can be denoted in the geodetic frame as:

where

a and

b denote the semimajor axis and the semiminor axis of the elliptical orbit, respectively.

is the radius of curvature in prime vertical, and

e is the eccentricity that is related to

a and

b.

After the longitude

L and latitude

B of

are computed,

can be obtained by:

According to Equation (15), the smoothed pseudorange residual can be represented as:

In nonlinear optimization, it is necessary to calculate the Jacobian matrix of the smoothed pseudorange residual with respect to the states. Therefore, from Equation (23), the Jacobian matrix of the smoothed pseudorange can be obtained as:

with

where

The derivation details are provided in

Appendix A.

In Equation (14), the velocity of the receiver in the ECEF frame is transformed from that in the local world frame:

Therefore, the Doppler frequency shift residual can be obtained by:

Similar to the smoothed pseudorange, the Jacobian matrix of the Doppler frequency shift is:

with

The corresponding derivation rule is similar to the Jacobian matrix of the smoothed pseudorange and will not be repeated here.

After the residuals of all factors are obtained, then the system can optimize the states by Ceres solver [

25]. The cost function of our system is:

where

is the prior residual for marginalization.

is the set of IMU preintegration measurements in the sliding window.

and

are the set of point and line features in the sliding window, respectively.

is the Cauchy robust function used to suppress outliers.

2.6. GNSS-IMU Calibration

is the extrinsic translation parameter between the GNSS receiver and IMU. After our system performs ENU Origin Estimation and Yaw Estimation, then we can calibrate

. We estimate

as the initial value of nonlinear optimization through the following optimization problem:

After the GNSS-IMU calibration is successfully initialized, our system performs the nonlinear optimization.

2.7. Observability Analysis of Tightly Coupled GNSS-VIO System

A SLAM system can be described using a state equation and an output equation, where the input and output are the external variables of the system, and the state is the internal variables of the system. If the states of the system can be completely represented by the output, the system is fully observable; otherwise, the system is not fully observable. Observability plays a very important role in the state estimation problem. If some states of the system are not observable, the positioning precision of the system will be affected when running in long trajectories. The observability of the system can be represented by the observability matrix. If the dimension of the null space of the observability matrix is equal to 0, then the system is fully observable. To facilitate the observability analysis of our proposed system, some simplifications were required. First, the accelerometer and gyroscope biases were not included in the states, because the biases were observable and they did not change the results of the observability analysis [

26]. Second, we considered a single point and line feature [

27]. Third, the translation parameter

was successfully calibrated. Then the discrete-time linear error state model and residual of the system are:

where

is the error state, and

is the residual.

and

are the error-state transition matrix and the measurement Jacobian matrix, respectively.

and

represent the system noise process and the measurement noise process, respectively. The noise process is modeled as a Zero-mean white Gaussian process.

According to [

28], the observability matrix can be obtained as:

In Equation (34), the observability matrix is defined as a function of the error-state transition matrix and the measurement Jacobian matrix .

Therefore, given the linearized system in Equation (33), its observability can be demonstrated according to Equation (34). The proof is as follows:

Theorem 1. The states represented in the ECEF frame are fully observable.

Proof of Theorem1. The simplified states include:

Generally, the raw accelerometer and gyroscope measurements from IMU can be obtained by:

where

and

are the accelerometer and gyroscope measurements. The measurements are represented in the local world frame.

Given two time instants, position, velocity, and orientation states can be propagated by the IMU measurements:

where

denotes the quaternion multiplication operation.

Equation (37) is the continuous state propagation model. To analyze the observability of the system, it was necessary to perform the discretization of the continuous-time system model. Therefore, we used the Euler method to compute the discrete-time model of Equation (37):

According to Equation (38), the error propagation equation of position, velocity, and orientation can be obtained by:

The details of Equation (39) are provided in

Appendix B.

Similarly, when the states are represented in the ECEF frame, Equation (39) is still held, namely:

Therefore, the discrete-time error state model of Equation (35) can be obtained:

where

Since

is an upper triangular matrix, the error-state transition matrix

from time step

k to

k +

t is also an upper triangular matrix, namely:

where

,

, and

are nonzero entries.

For the measurement Jacobian matrix, our system consists of visual observations and GNSS measurements. GNSS measurements include pseudorange, carrier phase, and Doppler frequency shift, and any of them yields the same results for observability analysis. Therefore, in the following, we used the smoothed pseudorange measurement for observability analysis.

The Jacobian matrix of the smoothed pseudorange measurement with respect to the states in Equation (35) can be obtained by:

The derivation details are similar to the Jacobian matrix of the smoothed pseudorange, which has been computed in

Appendix A. Since the smoothed pseudorange measurement does not include velocity, orientation, the inverse depth of point feature, and the orthonormal representation of line feature, the corresponding entry of the Jacobian matrix is equal to zero.

In addition, we can obtain the Jacobian matrix of the visual observations by:

where

and

can be obtained from [

10].

Therefore, we can obtain the entry of the observability matrix:

where

According to Equation (46), we can clearly observe that the dimension of the null space of is equal to zero, which means the states represented in the ECEF frame are fully observable and the tightly coupled GNSS-VIO state estimator is consistent. □

The fact that the tightly coupled GNSS-VIO system is fully observable means that even if the system runs in long trajectories, the accumulated error can be eliminated. By leveraging the global measurements from GNSS, our system can achieve high-precision and drift-free positioning compared with the VIO system, which has four unobservable directions.

3. System Overview

The architecture of our proposed system is shown in

Figure 4.

The proposed system implements four threads including data input, preprocessing, initialization, and nonlinear optimization. As shown in

Figure 4, the white block diagrams represent the work that has been implemented by VINS-Mono [

16] and GVINS [

21], and the green block diagrams represent improvements we made. The inputs of our system are image, IMU, and GNSS measurements. In the preprocessing step, point and line feature detection and tracking were performed, IMU measurements were preintegrated, and the pseudorange measurements were smoothed by the carrier phase. In the initialization step, we followed the routine of VINS-Mono [

16] for VI-Alignment. After VI-Alignment was completed, we performed GNSS initialization, which was divided into four stages: ENU Origin Estimation (Coarse), Yaw Estimation, ENU Origin Estimation (Fine), and GNSS-IMU Calibration. The first three stages were implemented in GVINS [

21]. Finally, nonlinear optimization was performed. Nonlinear optimization will optimize the states in Equation (17) by leveraging the residuals and Jacobian matrices of different factors, which were computed in

Section 2.5.

5. Conclusions

In this paper, we propose a tightly coupled GNSS-VIO system based on point-line features. First, since line features contain more environmental structure information, we added line features to the system. Second, the pseudorange measurement can only achieve meter-level positioning precision but is fast and unambiguous. On the contrary, the carrier phase measurement can achieve the centimeter-level positioning precision but is required to solve the whole-cycle ambiguity, which is time-consuming. Therefore, we propose to combine the advantages of the two measurements and replace the pseudorange with the carrier phase smoothed pseudorange, which can greatly improve the performance of our system. Third, we considered the extrinsic translation parameter between the GNSS receiver and IMU, and our system can perform real-time parameter calibration. Finally, we demonstrated that if the states are represented in the ECEF frame, the tightly coupled GNSS-VIO system is fully observable. We conducted experiments on public datasets, which show our system achieves high-precision, robust, and real-time localization. In the future, we will further focus on improved methods for tightly coupled GNSS-VIO systems.