An Efficient Compression Method of Underwater Acoustic Sensor Signals for Underwater Surveillance

Abstract

1. Introduction

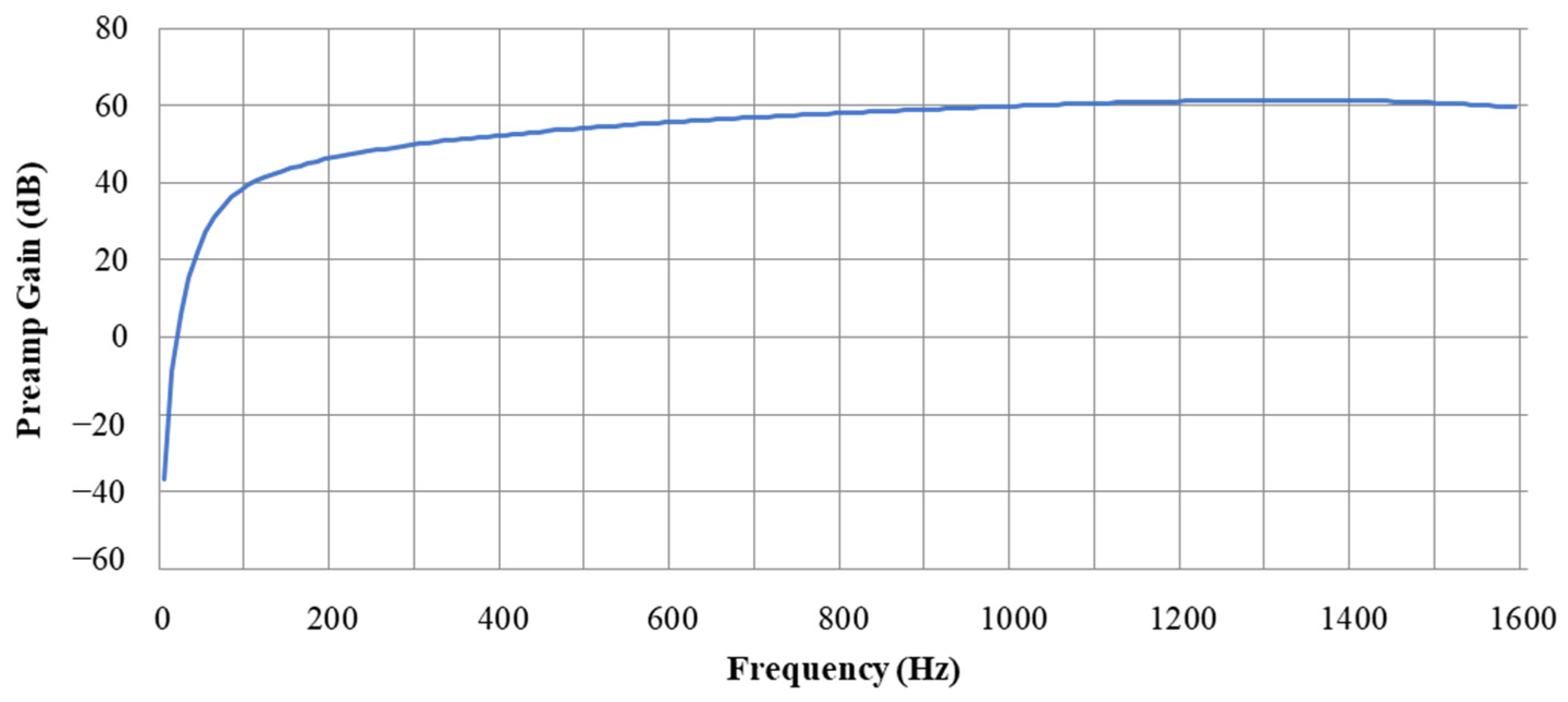

2. Underwater Sound

3. Proposed Method

3.1. Encoder

3.2. Decoder

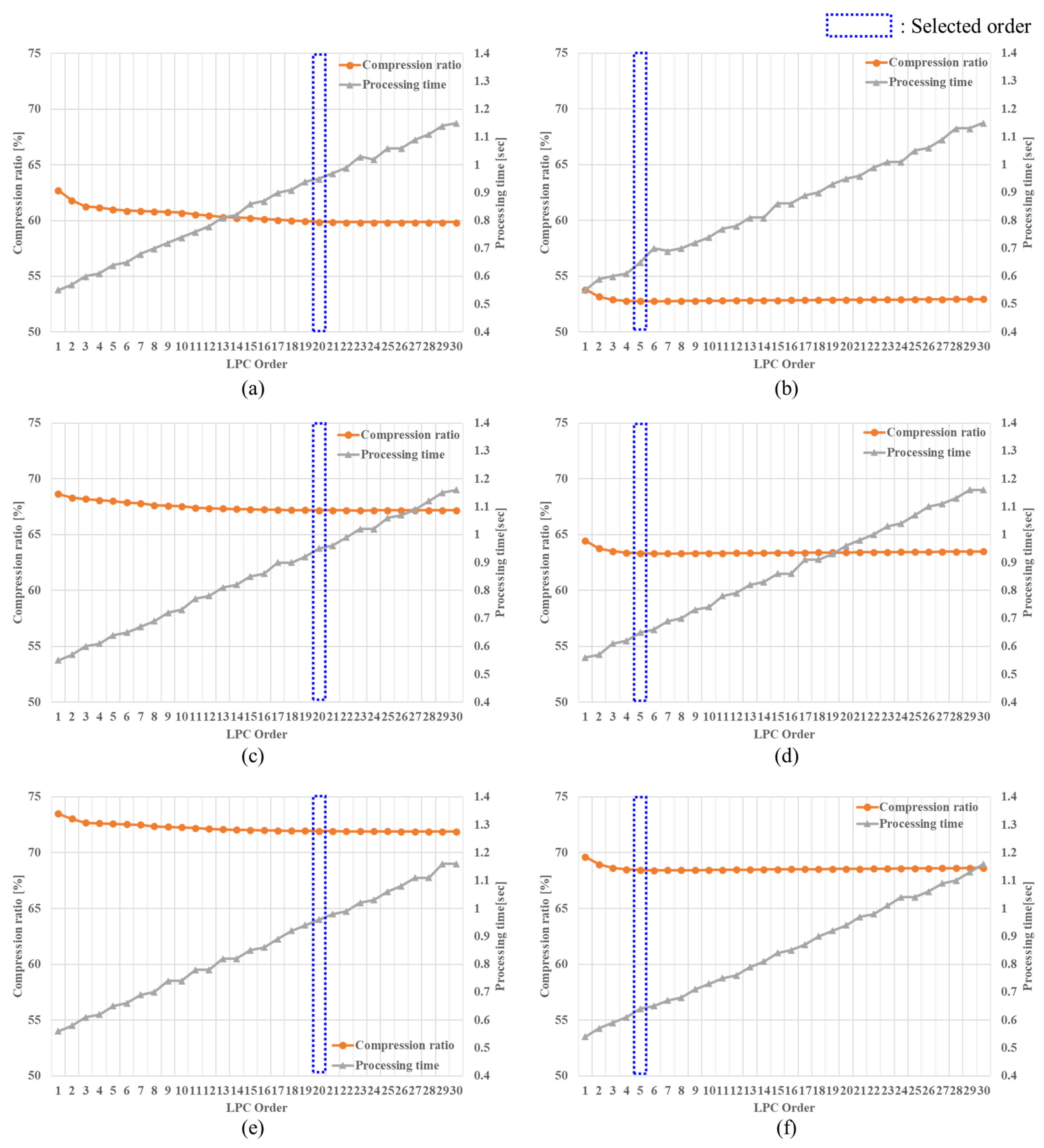

3.3. Linear Prediction

3.4. Entropy Coding

4. Performance Evaluation

4.1. Compression Efficiency

4.2. Processing Time

4.3. Analysis of Distortion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


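| Acquisition Sensor | Data Length | Sampling Rate | Resolution | Data Size |
|---|---|---|---|---|
| Sensor #4 | 1 h (3600 frames) | 4096 Hz | 16 bits | 28.1 MB |
| Sensor #5 | 1 h (3600 frames) | 4096 Hz | 16 bits | 28.1 MB |
| Sensor #6 | 1 h (3600 frames) | 4096 Hz | 16 bits | 28.1 MB |
| Sensor #7 | 1 h (3600 frames) | 4096 Hz | 16 bits | 28.1 MB |
| Sensor #8 | 1 h (3600 frames) | 4096 Hz | 16 bits | 28.1 MB |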
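The data size in the table above follows from the other three columns. The sketch below reproduces the arithmetic under assumptions the table does not state explicitly: each sensor is a single channel, each of the 3600 frames is one second long, and "MB" means 2^20 bytes. The function name `raw_size_bytes` is illustrative only.

```python
def raw_size_bytes(duration_s: int, sampling_rate_hz: int, bits_per_sample: int) -> int:
    """Size of uncompressed single-channel PCM data in bytes."""
    return duration_s * sampling_rate_hz * bits_per_sample // 8


# 1 h of audio (assumed 3600 one-second frames) at 4096 Hz and 16 bits per sample.
size = raw_size_bytes(duration_s=3600, sampling_rate_hz=4096, bits_per_sample=16)
size_mb = round(size / 2**20, 1)  # assumes "MB" is 2**20 bytes

print(size)     # 29491200
print(size_mb)  # 28.1
```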

| Notation | Codec | Compression Mode |
|---|---|---|
| A-1 | MPEG-4 ALS (Fixed) | LPC order: 20 (fixed), Rice encoding |
| A-2 | MPEG-4 ALS (Adaptive) | LPC order: adaptive, Rice encoding |
| A-3 | MPEG-4 ALS (BGMC) | LPC order: 20 (fixed), BGMC encoding |
| B-1 | FLAC (Fast) | Fast mode (fixed LPC order) |
| B-2 | FLAC (Best) | High-quality mode (maximum compression) |
| C-1 | WavPack (Fast) | Fast mode |
| C-2 | WavPack (HQ) | High-quality mode |
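Several of the modes above code the linear-prediction residual with Rice encoding. As a rough illustration of the general technique, the sketch below encodes a signed residual with Rice parameter `k` and decodes it again. It is a textbook-style version, not the bitstream of MPEG-4 ALS or any other codec listed here: the sign mapping (`zigzag`), the unary polarity, and the use of a character string for bits are all assumptions made for readability.

```python
def zigzag(value: int) -> int:
    """Map a signed residual to a non-negative integer: 0, -1, 1, -2, ... -> 0, 1, 2, 3, ..."""
    return 2 * value if value >= 0 else -2 * value - 1


def rice_encode(value: int, k: int) -> str:
    """Rice code: quotient in unary ('1' * q followed by '0'), then k remainder bits."""
    u = zigzag(value)
    quotient, remainder = u >> k, u & ((1 << k) - 1)
    binary = format(remainder, f"0{k}b") if k > 0 else ""
    return "1" * quotient + "0" + binary


def rice_decode(bits: str, k: int) -> int:
    """Inverse of rice_encode for a string holding exactly one codeword."""
    quotient = bits.index("0")  # number of leading '1' bits
    remainder = int(bits[quotient + 1 : quotient + 1 + k], 2) if k > 0 else 0
    u = (quotient << k) | remainder
    return u >> 1 if u % 2 == 0 else -((u + 1) >> 1)


print(rice_encode(5, 2))   # 11010
print(rice_encode(-3, 2))  # 1001
```

Small residuals get short codewords, which is why the choice of `k` relative to the typical residual magnitude matters for the compressed size.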

| Sensor | Proposed | MPEG-4 ALS (Fixed) | MPEG-4 ALS (Adaptive) | MPEG-4 ALS (BGMC) | FLAC (Fast) | FLAC (Best) | WavPack (Fast) | WavPack (HQ) |
|---|---|---|---|---|---|---|---|---|
| #4 | 57.0 | 60.4 | 60.5 | 59.8 | 63.9 | 61.0 | 61.6 | 60.7 |
| #5 | 59.7 | 63.1 | 63.1 | 62.5 | 66.5 | 63.6 | 64.4 | 63.3 |
| #6 | 66.1 | 69.5 | 69.6 | 68.9 | 72.6 | 70.1 | 70.9 | 69.8 |
| #7 | 68.4 | 71.7 | 71.8 | 71.2 | 75.3 | 72.3 | 73.4 | 72.0 |
| #8 | 63.4 | 66.5 | 66.6 | 65.9 | 71.3 | 67.1 | 68.0 | 66.7 |
| Avg. | 62.9 | 66.2 | 66.3 | 65.7 | 69.9 | 66.8 | 67.7 | 66.5 |
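The values above appear to be compression ratios in percent, read as compressed size divided by original size, so lower is better. That reading is an inference, not something the table header states: the Proposed column matches the "Compression Ratio (%)" column reported for the proposed method elsewhere in the article, and reducing 16-bit samples to 8 bits is listed there as exactly 50.00%. Under that assumption, the sketch below recomputes two of the averages and converts a ratio into an approximate compressed size, taking the 28.1 MB per-sensor data size as the original.

```python
# Per-sensor values for sensors #4 to #8, copied from the table above.
proposed = [57.0, 59.7, 66.1, 68.4, 63.4]
als_fixed = [60.4, 63.1, 69.5, 71.7, 66.5]


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def compressed_mb(original_mb: float, ratio_percent: float) -> float:
    """Compressed size, assuming ratio = compressed / original * 100."""
    return original_mb * ratio_percent / 100


avg_proposed = round(mean(proposed), 1)
avg_als_fixed = round(mean(als_fixed), 1)
gap_points = round(mean(als_fixed) - mean(proposed), 1)

print(avg_proposed, avg_als_fixed)            # 62.9 66.2
print(gap_points)                             # 3.3 (percentage points)
print(round(compressed_mb(28.1, 57.0), 1))    # 16.0 (MB, sensor #4, proposed)
```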

| Sensor | Proposed | MPEG-4 ALS (Fixed) | MPEG-4 ALS (Adaptive) | MPEG-4 ALS (BGMC) | FLAC (Fast) | FLAC (Best) | WavPack (Fast) | WavPack (HQ) |
|---|---|---|---|---|---|---|---|---|
| #4 | 3.80 | 1.92 | 1.15 | 2.61 | 0.66 | 0.75 | 0.70 | 1.00 |
| #5 | 3.68 | 1.92 | 1.17 | 2.61 | 0.55 | 0.66 | 0.74 | 1.05 |
| #6 | 3.80 | 1.91 | 1.14 | 2.62 | 0.57 | 0.67 | 0.74 | 1.02 |
| #7 | 3.70 | 1.92 | 1.15 | 2.64 | 0.54 | 0.66 | 0.73 | 1.04 |
| #8 | 3.26 | 1.93 | 1.16 | 2.64 | 0.56 | 0.68 | 0.78 | 1.01 |
| Avg. | 3.65 | 1.92 | 1.15 | 2.62 | 0.58 | 0.68 | 0.74 | 1.02 |

| Sensor | QMF Analysis | Low Sub-Band: LPC Analysis | Low Sub-Band: Entropy Coding | Low Sub-Band: Etc. | High Sub-Band: LPC Analysis | High Sub-Band: Entropy Coding | High Sub-Band: Etc. | Sum |
|---|---|---|---|---|---|---|---|---|
| #4 | 2.20 | 0.66 | 0.17 | 0.11 | 0.38 | 0.17 | 0.11 | 3.80 |
| #5 | 2.00 | 0.69 | 0.18 | 0.14 | 0.33 | 0.22 | 0.12 | 3.68 |
| #6 | 2.17 | 0.67 | 0.17 | 0.13 | 0.33 | 0.20 | 0.13 | 3.80 |
| #7 | 2.06 | 0.66 | 0.17 | 0.15 | 0.34 | 0.20 | 0.12 | 3.70 |
| #8 | 1.91 | 0.36 | 0.19 | 0.13 | 0.35 | 0.17 | 0.15 | 3.26 |
| Avg. | 2.07 | 0.61 | 0.18 | 0.13 | 0.35 | 0.19 | 0.13 | 3.65 |
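The last column of the breakdown above is the sum of the seven stage timings in each row. The sketch below checks that for sensors #4 to #8 and computes how much of each total is spent in QMF analysis. The table does not state a unit, so the values are treated here as relative timings; the variable names are illustrative.

```python
# Stage timings per sensor, copied from the table above, in column order:
# QMF analysis, low-band (LPC, entropy, etc.), high-band (LPC, entropy, etc.).
rows = {
    "#4": ([2.20, 0.66, 0.17, 0.11, 0.38, 0.17, 0.11], 3.80),
    "#5": ([2.00, 0.69, 0.18, 0.14, 0.33, 0.22, 0.12], 3.68),
    "#6": ([2.17, 0.67, 0.17, 0.13, 0.33, 0.20, 0.13], 3.80),
    "#7": ([2.06, 0.66, 0.17, 0.15, 0.34, 0.20, 0.12], 3.70),
    "#8": ([1.91, 0.36, 0.19, 0.13, 0.35, 0.17, 0.15], 3.26),
}

qmf_share = {}
for sensor, (stages, total) in rows.items():
    # The stage timings should add up to the reported total (within rounding).
    assert abs(sum(stages) - total) < 0.005, sensor
    qmf_share[sensor] = stages[0] / total

for sensor, share in qmf_share.items():
    print(f"{sensor}: QMF analysis takes {share:.0%} of the total")
```

In every row QMF analysis accounts for more than half of the total, which suggests the filter bank is the first place to look when reducing the processing time of the proposed method.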

| Sensor | Proposed Method: Compression Ratio (%) | Proposed Method: PSNR (dB) | Proposed Method: SSIM | Bit-Depth Reduction (16 to 8 bits): Compression Ratio (%) | Bit-Depth Reduction (16 to 8 bits): PSNR (dB) | Bit-Depth Reduction (16 to 8 bits): SSIM |
|---|---|---|---|---|---|---|
| #4 | 57.01 | 43.81 | 0.9995 | 50.00 | 20.33 | 0.0663 |
| #5 | 59.74 | 49.73 | 0.9994 | 50.00 | 26.04 | 0.6909 |
| #6 | 66.13 | 50.69 | 0.9998 | 50.00 | 20.60 | 0.8520 |
| #7 | 68.39 | 69.27 | 0.9998 | 50.00 | 30.60 | 0.8950 |
| #8 | 63.44 | 50.58 | 0.9996 | 50.00 | 24.11 | 0.7283 |
| Avg. | 62.94 | 58.17 | 0.9996 | 50.00 | 25.21 | 0.6465 |
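To make the bit-depth reduction baseline concrete, the sketch below drops the 8 least significant bits of 16-bit samples and measures the resulting PSNR on a synthetic tone. Everything specific here is an assumption: the test signal, the truncation rule, and the PSNR definition (peak value 32767 over the mean squared error). The article's figures were likely computed on different data and possibly on a different representation, so this will not reproduce the numbers in the table.

```python
import math


def reduce_bit_depth(sample: int, dropped_bits: int = 8) -> int:
    """Keep only the top bits of a 16-bit sample by zeroing the lowest ones."""
    return (sample >> dropped_bits) << dropped_bits


def psnr_db(reference: list[int], degraded: list[int], peak: float = 32767.0) -> float:
    """PSNR = 10 * log10(peak^2 / MSE); returns infinity for identical signals."""
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak**2 / mse)


# Hypothetical low-amplitude 50 Hz tone, one second at 4096 Hz.
signal = [round(1000 * math.sin(2 * math.pi * 50 * n / 4096)) for n in range(4096)]
reduced = [reduce_bit_depth(s) for s in signal]

print(f"PSNR after 16-to-8-bit reduction: {psnr_db(signal, reduced):.2f} dB")
```

Storing 8 of every 16 bits always yields a 50% compression ratio regardless of the signal, which is consistent with the constant 50.00 column in the table, while the distortion depends on how much of the signal lives in the discarded low-order bits.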

| Sensor | SHORTEN (Lossy, Max. 9 Bits per Sample): Compression Ratio (%) | SHORTEN (Lossy, Max. 9 Bits per Sample): PSNR (dB) | SHORTEN (Lossy, Max. 9 Bits per Sample): SSIM | SHORTEN (Lossy, Max. 10 Bits per Sample): Compression Ratio (%) | SHORTEN (Lossy, Max. 10 Bits per Sample): PSNR (dB) | SHORTEN (Lossy, Max. 10 Bits per Sample): SSIM |
|---|---|---|---|---|---|---|
| #4 | 56.46 | 58.81 | 0.9987 | 61.66 | 67.33 | 0.9997 |
| #5 | 56.74 | 45.88 | 0.9987 | 62.92 | 49.84 | 0.9994 |
| #6 | 56.67 | 49.51 | 0.9989 | 62.92 | 48.48 | 0.9993 |
| #7 | 56.74 | 54.58 | 0.9988 | 62.99 | 58.17 | 0.9995 |
| #8 | 56.70 | 52.92 | 0.9985 | 61.64 | 61.72 | 0.9994 |
| Avg. | 56.66 | 53.43 | 0.9987 | 62.42 | 59.88 | 0.9995 |

| Sensor | Proposed | Bit-Depth Reduction (from 16 to 8 bits) | SHORTEN (Lossy Mode: Max. 9 Bits per Sample) | SHORTEN (Lossy Mode: Max. 10 Bits per Sample) |
|---|---|---|---|---|
| #4 | 0.0064 | 0.0963 | 0.0011 | 0.0004 |
| #5 | 0.0033 | 0.0499 | 0.0051 | 0.0032 |
| #6 | 0.0029 | 0.0934 | 0.0033 | 0.0038 |
| #7 | 0.0003 | 0.0295 | 0.0019 | 0.0012 |
| #8 | 0.0030 | 0.0623 | 0.0023 | 0.0008 |
| Avg. | 0.0032 | 0.0663 | 0.0027 | 0.0019 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, Y.G.; Kim, D.G.; Kim, K.; Choi, C.-H.; Park, N.I.; Kim, H.K. An Efficient Compression Method of Underwater Acoustic Sensor Signals for Underwater Surveillance. Sensors 2022, 22, 3415. https://doi.org/10.3390/s22093415
